The proliferation of artificial intelligence applications across diverse industries has necessitated the development of powerful edge computing solutions capable of executing complex machine learning models with minimal latency and optimal energy efficiency. Two dominant platforms have emerged as leaders in this space: NVIDIA’s Jetson series and Google’s Coral ecosystem. These sophisticated edge AI platforms represent fundamentally different approaches to solving the challenge of bringing high-performance AI inference capabilities to resource-constrained environments where cloud connectivity may be limited or entirely unavailable.

The choice between NVIDIA Jetson and Google Coral platforms involves careful consideration of multiple factors including computational requirements, power constraints, development ecosystem preferences, and specific application use cases that demand different levels of processing capability and energy efficiency.

Understanding Edge AI Inference Requirements

Edge AI inference represents a paradigm shift from traditional cloud-based machine learning deployments, bringing computational intelligence directly to the point of data collection and decision-making. This approach eliminates the latency associated with network communication, reduces bandwidth requirements, enhances privacy protection, and enables AI applications to function reliably in environments with limited or intermittent connectivity. The fundamental challenge lies in balancing computational performance with power consumption while maintaining the accuracy and reliability required for production applications.

Modern edge AI applications span an enormous range of use cases, from autonomous vehicles requiring real-time object detection and path planning to industrial IoT systems performing predictive maintenance on critical equipment. Smart city infrastructure relies on edge AI for traffic optimization, security monitoring, and environmental sensing, while healthcare applications utilize edge inference for medical imaging analysis and patient monitoring systems. Each of these applications presents unique requirements in terms of processing power, energy consumption, form factor constraints, and integration capabilities that influence platform selection decisions.

The complexity of modern machine learning models, particularly deep neural networks used for computer vision and natural language processing tasks, demands specialized hardware acceleration to achieve acceptable performance levels within edge computing constraints. Traditional general-purpose processors lack the parallel processing capabilities and energy efficiency required for intensive matrix operations that form the core of deep learning inference. This has led to the development of specialized AI accelerators, neuromorphic processors, and optimized software stacks designed specifically for edge deployment scenarios.

NVIDIA Jetson Platform Architecture and Capabilities

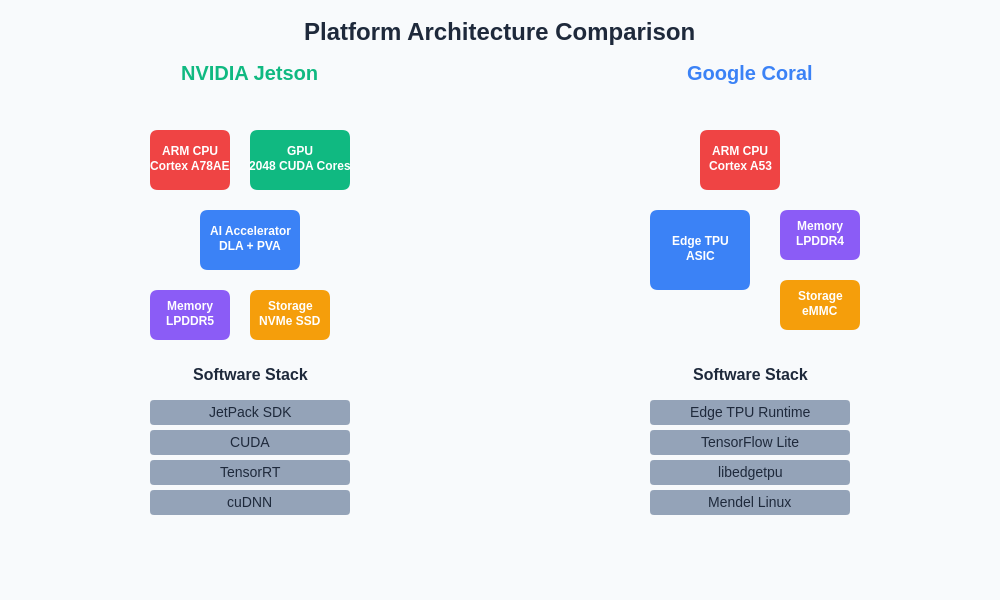

NVIDIA’s Jetson platform represents a comprehensive ecosystem built around the company’s extensive GPU architecture expertise and CUDA parallel computing platform. The Jetson series encompasses multiple form factors and performance levels, ranging from the compact Jetson Nano designed for entry-level applications to the powerful Jetson AGX Orin capable of delivering server-class AI performance in an embedded form factor. This architectural approach leverages NVIDIA’s proven GPU computing capabilities while incorporating ARM-based CPU cores, dedicated AI acceleration units, and comprehensive software development tools.

The Jetson platform’s strength lies in its unified architecture that seamlessly scales from development prototyping to production deployment across diverse application domains. Each Jetson module incorporates NVIDIA’s GPU architecture with hundreds or thousands of CUDA cores optimized for parallel processing operations fundamental to machine learning workloads. The integration of specialized Tensor cores in higher-end models provides additional acceleration specifically designed for mixed-precision training and inference operations commonly used in modern deep learning frameworks.

The Jetson ecosystem extends beyond hardware to include JetPack SDK, a comprehensive software development kit that provides optimized libraries, runtime environments, and development tools specifically tailored for AI application development. This includes cuDNN for deep neural network acceleration, TensorRT for inference optimization, VisionWorks for computer vision applications in older JetPack releases (newer releases provide the Vision Programming Interface, VPI, instead), and support for popular machine learning frameworks including TensorFlow and PyTorch as well as the ONNX model format.

The versatility of the Jetson platform enables deployment across numerous application scenarios, from autonomous mobile robots requiring real-time perception and navigation capabilities to industrial automation systems performing quality control inspection and predictive maintenance analysis. The platform’s ability to handle multiple concurrent AI workloads while maintaining real-time performance characteristics makes it particularly suitable for complex applications requiring simultaneous processing of multiple data streams and decision-making processes.

The architectural differences between NVIDIA Jetson and Google Coral platforms reflect their distinct design philosophies, with Jetson emphasizing computational flexibility through GPU-based parallel processing while Coral prioritizes energy efficiency through specialized ASIC-based AI acceleration.

Google Coral Ecosystem Design Philosophy

Google’s Coral platform represents a fundamentally different approach to edge AI acceleration, built around the company’s custom Edge TPU (Tensor Processing Unit) technology specifically designed for TensorFlow Lite model inference. The Coral ecosystem emphasizes energy efficiency, cost-effectiveness, and seamless integration with Google’s broader AI and cloud services infrastructure. This approach prioritizes optimized inference performance for pre-trained models over the flexibility and programmability offered by more general-purpose platforms.

The Edge TPU architecture represents Google’s specialized approach to AI acceleration, featuring a dedicated ASIC (Application-Specific Integrated Circuit) designed exclusively for neural network inference operations. This specialization enables exceptional energy efficiency for supported operations while maintaining compact form factors suitable for integration into space-constrained applications. The Coral ecosystem includes various hardware form factors, from USB-connected Coral accelerators that can augment existing systems to integrated system-on-module solutions for embedded applications.

The Coral platform’s integration with TensorFlow and TensorFlow Lite provides a streamlined development experience for applications built using Google’s machine learning frameworks. The ecosystem includes pre-optimized models for common computer vision tasks, natural language processing applications, and audio processing workflows that can be immediately deployed without extensive optimization or modification. This approach significantly reduces development time and expertise requirements for organizations seeking to implement proven AI solutions rather than developing custom machine learning models from scratch.

The emphasis on energy efficiency makes Coral particularly attractive for battery-powered applications, IoT deployments, and scenarios where thermal constraints limit the use of higher-power processing solutions. The platform’s ability to deliver consistent inference performance while consuming minimal power enables deployment in applications such as smart cameras, environmental monitoring sensors, and portable devices where power consumption directly impacts operational lifetime and deployment feasibility.

Performance Analysis and Benchmarking

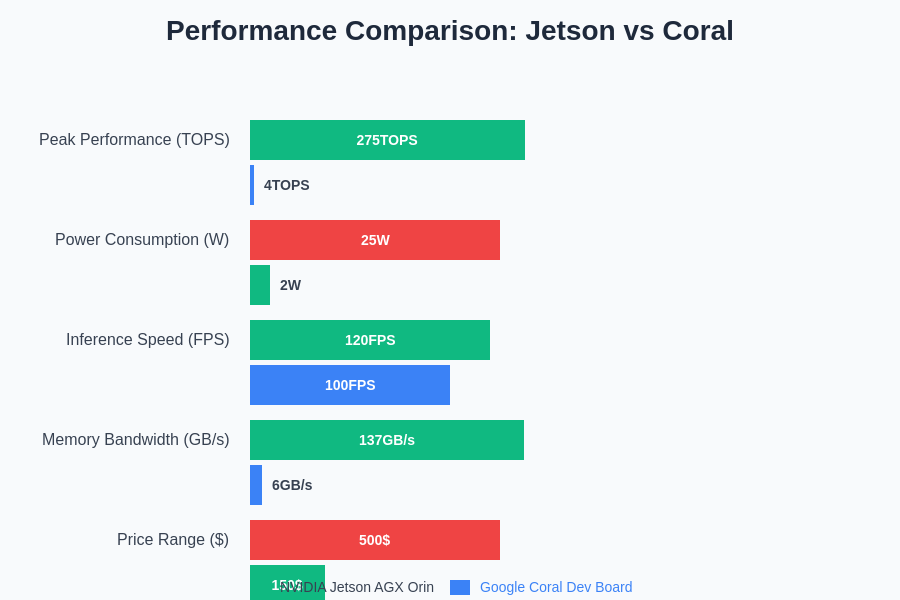

Comprehensive performance evaluation of edge AI platforms requires analysis across multiple dimensions including raw computational throughput, energy efficiency, model compatibility, and real-world application performance. NVIDIA Jetson platforms generally demonstrate superior raw computing power, particularly for applications requiring high-resolution image processing, complex neural network architectures, or simultaneous execution of multiple AI models. The GPU-based architecture excels at parallel processing operations and can efficiently handle variable input sizes and dynamic computational graphs commonly found in research and development environments.

Google Coral platforms optimize for specific inference scenarios, delivering exceptional performance per watt for supported TensorFlow Lite models while maintaining consistent low-latency response characteristics. The Edge TPU’s specialized architecture achieves remarkable energy efficiency for 8-bit quantized neural networks, making it ideal for deployment scenarios where power consumption, thermal management, and cost considerations outweigh requirements for maximum computational flexibility. Coral’s advantages are most visible in standard computer vision tasks such as object detection and classification, using model architectures optimized for the Edge TPU.
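Performance per watt can be made concrete with a small calculation. The sketch below divides measured throughput by average power draw to get inferences per joule. The `Measurement` class, the device names, and all numbers are hypothetical placeholders chosen to show the arithmetic; they are not measurements of any Jetson or Coral product.

```python
from dataclasses import dataclass


@dataclass
class Measurement:
    """One benchmark run. All values here are hypothetical placeholders."""
    name: str
    inferences_per_second: float
    average_power_watts: float


def inferences_per_joule(m: Measurement) -> float:
    """Throughput divided by power. Since 1 W = 1 J/s, the seconds cancel."""
    if m.average_power_watts <= 0:
        raise ValueError("average power must be positive")
    return m.inferences_per_second / m.average_power_watts


# Illustrative inputs only; substitute your own measured values.
runs = [
    Measurement("accelerator_a", inferences_per_second=100.0, average_power_watts=2.5),
    Measurement("accelerator_b", inferences_per_second=300.0, average_power_watts=15.0),
]

for run in runs:
    print(f"{run.name}: {inferences_per_joule(run):.1f} inferences per joule")
```

In this made-up example the second device is three times faster but does half as much work per joule, which is the kind of trade-off the comparison above describes.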

The performance characteristics of both platforms vary significantly depending on the specific models being deployed, input data characteristics, and optimization strategies employed during development. Applications utilizing custom neural network architectures or requiring extensive preprocessing and postprocessing operations may favor the flexibility offered by Jetson platforms, while standardized computer vision applications may achieve optimal results with Coral’s specialized acceleration.
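Because results depend so heavily on the model and the input data, timing your own workload on the target device is usually more informative than published figures. The following is a minimal, platform-neutral timing harness using only the Python standard library. The `benchmark` function and the `fake_inference` stand-in are assumptions made for illustration; in practice you would pass a callable that invokes your real model.

```python
import statistics
import time
from typing import Callable, Dict


def benchmark(run_inference: Callable[[], object],
              warmup: int = 5,
              iterations: int = 50) -> Dict[str, float]:
    """Time a callable and return latency statistics in milliseconds."""
    if iterations < 1:
        raise ValueError("iterations must be at least 1")

    # Warm-up runs are discarded so one-time setup costs do not skew results.
    for _ in range(warmup):
        run_inference()

    latencies_ms = []
    for _ in range(iterations):
        start = time.perf_counter()
        run_inference()
        latencies_ms.append((time.perf_counter() - start) * 1000.0)

    latencies_ms.sort()
    # Simple index-based approximation of the 95th percentile.
    p95_index = min(len(latencies_ms) - 1, int(0.95 * len(latencies_ms)))
    return {
        "mean_ms": statistics.fmean(latencies_ms),
        "median_ms": statistics.median(latencies_ms),
        "p95_ms": latencies_ms[p95_index],
        "max_ms": latencies_ms[-1],
    }


def fake_inference() -> int:
    """Hypothetical stand-in workload; replace with a real model call."""
    return sum(i * i for i in range(10_000))


stats = benchmark(fake_inference)
for key, value in stats.items():
    print(f"{key}: {value:.3f}")
```

Reporting the median and a high percentile alongside the mean helps expose latency spikes that an average alone would hide.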

Real-world performance evaluation must also consider factors beyond raw inference speed, including system integration complexity, development time requirements, and long-term maintenance considerations. The comprehensive software ecosystem surrounding NVIDIA Jetson platforms provides extensive optimization tools and debugging capabilities that can be crucial for complex applications, while Coral’s streamlined approach may offer advantages for teams seeking rapid deployment of proven AI solutions with minimal customization requirements.

Development Ecosystem and Software Support

The software development experience represents a critical factor in platform selection, particularly for organizations with varying levels of AI expertise and different development timeline constraints. NVIDIA’s Jetson ecosystem provides a comprehensive development environment built around the CUDA programming model, JetPack SDK, and extensive documentation covering everything from basic setup to advanced optimization techniques. This ecosystem supports multiple programming languages, development frameworks, and deployment strategies, enabling teams to leverage existing expertise while accessing cutting-edge AI capabilities.

The JetPack software development kit includes optimized libraries for computer vision, deep learning, and parallel computing operations, along with comprehensive debugging and profiling tools essential for performance optimization. The platform’s compatibility with popular development environments including Docker containers, Python development frameworks, and C++ applications provides flexibility for teams with diverse technical backgrounds and existing codebases. The extensive community support and documentation ecosystem surrounding NVIDIA’s platforms facilitates knowledge sharing and problem resolution throughout the development lifecycle.

Google’s Coral development ecosystem emphasizes simplicity and rapid deployment through its integration with TensorFlow and Google Cloud services. The platform provides pre-built Docker containers, Python libraries, and example applications that enable rapid prototyping and deployment of common AI applications. The Coral ecosystem includes model zoo resources offering pre-trained, optimized models for various computer vision and machine learning tasks, significantly reducing development time for applications utilizing standard AI capabilities.

The development workflow for Coral applications typically involves training a model in TensorFlow, converting it to a TensorFlow Lite model with full-integer (8-bit) quantization, and then compiling it for the Edge TPU with the Edge TPU Compiler. Google provides comprehensive documentation, tutorials, and community support resources that guide developers through this process while highlighting best practices for achieving optimal performance. The ecosystem’s integration with Google Cloud services facilitates hybrid edge-cloud deployments where edge devices handle real-time inference while leveraging cloud resources for model training, data analysis, and system management.
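To give a sense of what quantization does numerically, here is a sketch of generic affine 8-bit quantization in plain Python. It is not the TensorFlow Lite converter's implementation, and the function names are hypothetical; the real toolchain chooses scales and zero points for you from calibration data. The example range of 0.0 to 6.0 is an assumption picked to resemble a bounded activation.

```python
from typing import List, Tuple

QMIN, QMAX = -128, 127  # signed 8-bit integer range


def quantization_params(min_val: float, max_val: float) -> Tuple[float, int]:
    """Return (scale, zero_point) mapping [min_val, max_val] onto int8."""
    # Widen the range to include 0.0 so that zero is exactly representable.
    min_val = min(min_val, 0.0)
    max_val = max(max_val, 0.0)
    scale = (max_val - min_val) / (QMAX - QMIN)
    if scale == 0.0:
        return 1.0, 0
    zero_point = round(QMIN - min_val / scale)
    return scale, max(QMIN, min(QMAX, zero_point))


def quantize(values: List[float], scale: float, zero_point: int) -> List[int]:
    """Map real values to int8, clamping anything outside the range."""
    return [max(QMIN, min(QMAX, round(v / scale) + zero_point)) for v in values]


def dequantize(quantized: List[int], scale: float, zero_point: int) -> List[float]:
    """Recover approximate real values from int8 codes."""
    return [(q - zero_point) * scale for q in quantized]


activations = [0.0, 0.1, 2.5, 6.0]  # illustrative values only
scale, zero_point = quantization_params(min(activations), max(activations))
quantized = quantize(activations, scale, zero_point)
restored = dequantize(quantized, scale, zero_point)

for original, q, approx in zip(activations, quantized, restored):
    print(f"{original:.3f} -> {q:4d} -> {approx:.3f}")
```

The round trip loses at most half a quantization step per value inside the calibrated range, which is why models with well-bounded activations tend to tolerate 8-bit quantization well.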

Hardware Specifications and Form Factor Considerations

Physical implementation requirements play a crucial role in edge AI platform selection, particularly for applications with strict size, weight, power, or environmental constraints. NVIDIA Jetson modules span a broad range of form factors from the compact Jetson Nano measuring just 69.6 mm x 45 mm to larger development kits designed for prototyping and evaluation purposes. The modular approach enables integration into custom carrier boards tailored for specific application requirements while maintaining access to the full Jetson software ecosystem and development tools.

Power consumption characteristics vary significantly across the Jetson product line, with entry-level modules drawing roughly 5-10 watts during typical operation while high-performance variants may require 15-60 watts depending on computational load and the configured power mode. Thermal management considerations become increasingly important for higher-performance modules, requiring appropriate heat dissipation solutions that may impact overall system design and deployment constraints. The platform’s GPU-based architecture provides excellent performance scalability but may require careful thermal and power management in space-constrained applications.

Google Coral hardware offerings prioritize compact form factors and minimal power consumption, with USB accelerators adding Edge TPU capabilities to existing systems while consuming only 2-3 watts during operation. The system-on-module offerings provide integrated solutions suitable for custom hardware designs while maintaining the platform’s energy efficiency characteristics. The compact size and low power consumption make Coral particularly suitable for applications where space and power constraints are paramount, such as battery-powered devices, embedded sensors, and mobile applications.
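The effect of power draw on battery-powered deployments is simple arithmetic. The sketch below estimates runtime from battery capacity and average load. The 50 Wh battery and the 80% usable fraction are assumptions for illustration; the 2.5 W and 7.5 W loads are midpoints of the 2-3 W and 5-10 W ranges quoted in this section. A USB accelerator's figure excludes the host computer it is plugged into, so a real system would draw more.

```python
def runtime_hours(battery_wh: float, load_watts: float,
                  usable_fraction: float = 0.8) -> float:
    """Estimate runtime as usable energy (Wh) divided by average load (W)."""
    if load_watts <= 0:
        raise ValueError("load must be positive")
    if not 0 < usable_fraction <= 1:
        raise ValueError("usable_fraction must be in (0, 1]")
    return battery_wh * usable_fraction / load_watts


BATTERY_WH = 50.0  # hypothetical battery capacity

# Midpoints of the ranges quoted in the text; accelerator or module power only.
loads = {
    "usb_accelerator_only": 2.5,
    "entry_level_module": 7.5,
}

for name, watts in loads.items():
    hours = runtime_hours(BATTERY_WH, watts)
    print(f"{name}: about {hours:.1f} hours on a {BATTERY_WH:.0f} Wh battery")
```

A full estimate would also account for duty cycling, since a device that runs inference only a few times per minute can spend most of its time in a low-power state.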

Environmental considerations including operating temperature ranges, humidity tolerance, and mechanical robustness requirements may influence platform selection for challenging deployment scenarios. Industrial applications, outdoor installations, and mobile platforms often require additional environmental protection and may benefit from the extensive ecosystem of ruggedized carrier boards and enclosures available for both platforms. The selection process must balance performance requirements with physical constraints while considering long-term availability and support considerations for production deployments.

Use Case Applications and Industry Deployments

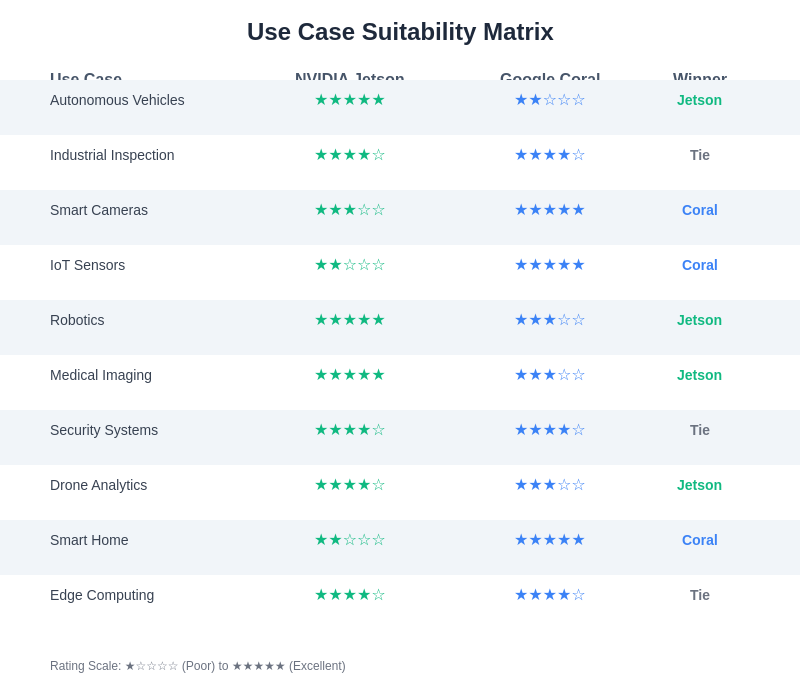

The practical application of edge AI platforms spans numerous industries and use cases, each presenting unique requirements that influence platform selection decisions. Autonomous vehicle applications represent one of the most demanding edge AI scenarios, requiring real-time processing of multiple high-resolution camera feeds, LIDAR data, and sensor inputs to enable safe navigation and obstacle avoidance. NVIDIA Jetson platforms have gained significant traction in this domain due to their ability to handle multiple concurrent AI workloads while maintaining the low-latency response characteristics essential for safety-critical applications.

Industrial automation and quality control applications leverage edge AI for real-time inspection, defect detection, and process optimization tasks that require immediate response without reliance on network connectivity. These applications often benefit from the flexibility of Jetson platforms when dealing with variable lighting conditions, diverse product geometries, and custom inspection requirements that may require specialized neural network architectures. The ability to implement custom preprocessing and postprocessing operations directly on the edge device provides significant advantages for industrial applications with unique requirements.

Smart city infrastructure deployments utilize edge AI for traffic management, security monitoring, and environmental sensing applications where power efficiency and cost-effectiveness are primary concerns. Google Coral platforms excel in these scenarios where standardized computer vision tasks such as object detection, people counting, and license plate recognition can be efficiently implemented using optimized TensorFlow Lite models. The platform’s energy efficiency and compact form factor enable deployment in solar-powered installations and distributed sensor networks where minimal power consumption is essential for operational viability.

The application-specific suitability analysis demonstrates how different use cases align with the architectural strengths of each platform, providing guidance for optimal platform selection based on specific deployment requirements and performance expectations.

Healthcare applications represent a rapidly growing segment for edge AI deployment, encompassing medical imaging analysis, patient monitoring, and diagnostic assistance tools that require reliable performance while maintaining strict privacy and security requirements. The ability to process sensitive medical data locally without transmitting to cloud services provides significant advantages for privacy protection and regulatory compliance. Both platforms offer capabilities suitable for healthcare applications, with selection typically based on specific computational requirements, integration constraints, and regulatory approval considerations.

Cost Analysis and Total Cost of Ownership

Economic considerations encompass not only initial hardware costs but also development effort, deployment complexity, ongoing maintenance requirements, and long-term platform support availability. NVIDIA Jetson platforms typically command higher initial hardware costs, particularly for high-performance variants, but may provide superior value for applications requiring maximum computational flexibility or custom optimization capabilities. The comprehensive development ecosystem and extensive community support can reduce development time and expertise requirements, potentially offsetting higher hardware costs through reduced engineering effort.

The quantitative performance differences between NVIDIA Jetson and Google Coral platforms reveal distinct optimization strategies, with Jetson platforms delivering superior raw computational throughput while Coral excels in energy efficiency and cost-effectiveness for specific deployment scenarios.

Google Coral platforms emphasize cost-effectiveness and rapid deployment, with lower initial hardware costs and streamlined development workflows that can significantly reduce time-to-market for applications utilizing standard AI capabilities. The platform’s optimization for specific use cases may provide superior price-performance characteristics for applications that align well with Coral’s architectural strengths, while potentially requiring additional engineering effort for applications with unique requirements not addressed by the standard ecosystem.

Total cost of ownership analysis must consider factors beyond initial hardware and development costs, including power consumption impact on operational expenses, maintenance requirements, update and security patch management, and long-term platform availability. Edge AI deployments often involve hundreds or thousands of devices requiring consistent management and support over extended operational lifetimes. The selection of platforms with robust ecosystem support and clear long-term roadmaps becomes crucial for minimizing operational risks and ensuring sustainable deployment strategies.

Scaling considerations become particularly important for organizations planning large-scale deployments where even small per-unit cost differences can have significant impact on overall project economics. The availability of volume pricing, development support programs, and ecosystem partnerships may influence the economic viability of different platforms for specific deployment scenarios. Organizations must carefully evaluate both immediate and long-term cost implications while considering the strategic value of platform capabilities and ecosystem alignment with organizational goals.
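The cost factors discussed in this section can be combined into a rough fleet-level estimate. The sketch below is one possible way to structure that calculation; the `FleetScenario` fields, the scenario names, and every price are hypothetical placeholders, not quotes for any real product.

```python
from dataclasses import dataclass

HOURS_PER_YEAR = 24 * 365


@dataclass
class FleetScenario:
    """Cost inputs for one candidate platform. All values are placeholders."""
    name: str
    unit_hardware_cost: float
    average_power_watts: float
    development_cost: float
    annual_maintenance_per_unit: float


def total_cost_of_ownership(scenario: FleetScenario, units: int, years: float,
                            electricity_per_kwh: float) -> float:
    """Sum hardware, energy, maintenance, and one-time development costs."""
    hardware = scenario.unit_hardware_cost * units
    energy_kwh = scenario.average_power_watts / 1000.0 * HOURS_PER_YEAR * years * units
    energy = energy_kwh * electricity_per_kwh
    maintenance = scenario.annual_maintenance_per_unit * units * years
    return hardware + energy + maintenance + scenario.development_cost


# Illustrative numbers only; replace with quotes and measurements of your own.
scenarios = [
    FleetScenario("platform_a", unit_hardware_cost=100.0, average_power_watts=3.0,
                  development_cost=40_000.0, annual_maintenance_per_unit=5.0),
    FleetScenario("platform_b", unit_hardware_cost=400.0, average_power_watts=15.0,
                  development_cost=25_000.0, annual_maintenance_per_unit=5.0),
]

for s in scenarios:
    cost = total_cost_of_ownership(s, units=1000, years=5, electricity_per_kwh=0.15)
    print(f"{s.name}: {cost:,.0f} total over 5 years for 1000 units")
```

Varying `units` in a model like this shows how one-time development costs dominate small deployments while per-unit hardware and energy costs dominate large ones.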

Security and Privacy Considerations

Edge AI deployments often process sensitive data including personal information, proprietary business data, and security-critical information that requires robust protection throughout the processing pipeline. Both NVIDIA Jetson and Google Coral platforms provide security features designed to protect against various threat vectors, but the implementation and effectiveness of these protections vary based on specific deployment configurations and security requirements. The ability to process data locally without cloud transmission provides inherent privacy advantages for applications handling sensitive information.

NVIDIA Jetson platforms include hardware-based security features such as secure boot capabilities, cryptographic acceleration, and trusted execution environments that can be leveraged to implement comprehensive security architectures. The platform’s flexibility enables implementation of custom security measures tailored to specific application requirements, while the extensive software ecosystem provides access to security-focused development tools and libraries. The ability to implement end-to-end encryption, secure model loading, and tamper detection capabilities makes Jetson platforms suitable for security-critical applications.
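One simple building block for secure model loading is an integrity check before the model is handed to the runtime. The sketch below uses only the Python standard library and is not a Jetson or JetPack API; the function name and the demo file are hypothetical. It detects modification only if the expected digest is stored somewhere an attacker cannot change, and it complements rather than replaces secure boot or cryptographic signatures.

```python
import hashlib
import hmac
import tempfile
from pathlib import Path


def load_verified_model(path: Path, expected_sha256: str) -> bytes:
    """Read a model file and return its bytes only if the SHA-256 matches."""
    # Read once and hash the same bytes that are returned, so the file
    # cannot be swapped between the check and the use.
    data = path.read_bytes()
    actual = hashlib.sha256(data).hexdigest()
    # compare_digest performs a constant-time comparison.
    if not hmac.compare_digest(actual, expected_sha256.lower()):
        raise ValueError(f"model integrity check failed for {path.name}")
    return data


# Demonstration with a temporary file standing in for a real model.
with tempfile.TemporaryDirectory() as tmp:
    model_path = Path(tmp) / "model.bin"  # hypothetical file name
    model_path.write_bytes(b"example model weights")
    trusted_digest = hashlib.sha256(b"example model weights").hexdigest()

    model_bytes = load_verified_model(model_path, trusted_digest)
    print(f"loaded {len(model_bytes)} verified bytes")

    # Simulate tampering: the check now rejects the file.
    model_path.write_bytes(b"tampered model weights")
    try:
        load_verified_model(model_path, trusted_digest)
    except ValueError as error:
        print(f"rejected: {error}")
```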

Google Coral platforms integrate with Google’s security infrastructure and provide features designed to ensure model integrity and secure operation within the TensorFlow ecosystem. The platform’s integration with Google Cloud services enables implementation of hybrid security architectures where edge devices handle local processing while leveraging cloud-based security management and monitoring capabilities. The Edge TPU’s specialized architecture provides certain protections against side-channel attacks and unauthorized access to model parameters.

The selection of appropriate security measures depends heavily on specific threat models, regulatory requirements, and deployment environments that may present different risk profiles. Organizations deploying edge AI systems must carefully evaluate available security capabilities against their specific requirements while considering the trade-offs between security features and system performance, development complexity, and operational management requirements.

Future Roadmap and Platform Evolution

The rapidly evolving landscape of edge AI technology continues to drive innovation in both hardware capabilities and software optimization techniques. NVIDIA’s roadmap includes continued advancement of GPU architectures with enhanced AI acceleration capabilities, improved energy efficiency, and expanded software ecosystem support for emerging machine learning paradigms. The company’s significant investment in AI research and development positions the Jetson platform to benefit from ongoing innovations in neural network architectures, optimization techniques, and application-specific acceleration capabilities.

Google’s continued development of TPU technology and TensorFlow optimization represents a commitment to advancing the Coral ecosystem’s capabilities while maintaining its focus on energy efficiency and ease of deployment. The integration with Google’s broader AI research efforts and cloud services ecosystem positions Coral to benefit from advances in model compression, quantization techniques, and automated optimization tools that can enhance edge deployment capabilities without requiring extensive specialized expertise.

The broader edge AI market continues to evolve with new entrants, alternative architectures, and innovative approaches to addressing the fundamental challenges of bringing AI capabilities to resource-constrained environments. Organizations selecting edge AI platforms must consider not only current capabilities but also the long-term viability and evolution potential of their chosen solutions. The ability to migrate applications between platform generations and leverage ongoing ecosystem improvements becomes crucial for protecting long-term investment in edge AI capabilities.

Industry trends including the growing adoption of federated learning, edge-cloud hybrid architectures, and specialized AI accelerators for emerging workloads will continue to influence platform development priorities and capabilities. The most successful edge AI deployments will likely be those that can adapt to these evolving requirements while maintaining operational stability and cost-effectiveness throughout extended deployment lifecycles.

The choice between NVIDIA Jetson and Google Coral platforms ultimately depends on careful analysis of specific application requirements, organizational capabilities, and long-term strategic objectives. Both platforms offer compelling advantages for different use cases and deployment scenarios, representing mature solutions backed by significant ongoing investment and development effort. Success in edge AI deployment requires not only selecting appropriate hardware platforms but also developing comprehensive strategies for model optimization, system integration, security implementation, and operational management that leverage the unique strengths of chosen technologies while addressing the inherent challenges of distributed AI systems.

Disclaimer

This article is for informational purposes only and does not constitute professional advice. The views expressed are based on current understanding of edge AI technologies and platform capabilities. Readers should conduct thorough evaluation of their specific requirements and consult with platform vendors and technical experts before making deployment decisions. Performance characteristics, pricing, and feature availability may vary and should be verified through direct evaluation and testing for specific use cases.