Edge AI moves machine learning inference from cloud servers onto smartphones, tablets, and other mobile devices. Running models on the device rather than in a remote data center changes what AI-powered applications can offer: lower latency, stronger privacy, and availability that does not depend on a network connection.

Edge AI represents the strategic deployment of machine learning models directly onto mobile hardware, eliminating the traditional dependency on cloud-based processing and internet connectivity for AI functionality. This architectural transformation has profound implications for application responsiveness, user privacy, and the overall mobile computing experience, creating new possibilities for intelligent applications that can operate seamlessly regardless of network conditions or connectivity constraints.

The intersection of edge computing and artificial intelligence continues to evolve rapidly, with new breakthroughs emerging regularly that push the boundaries of what’s possible on mobile devices.

The Evolution of Mobile AI Architecture

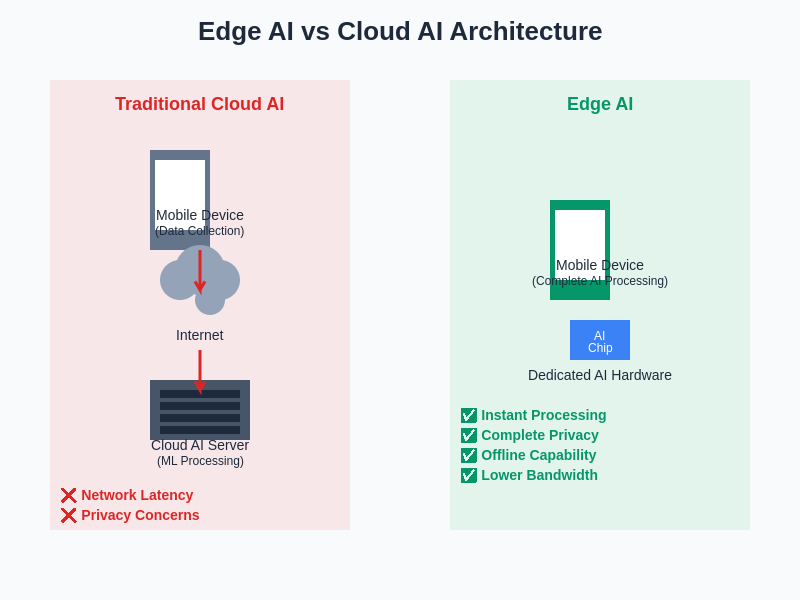

The journey toward Edge AI on mobile devices represents a fundamental shift in how artificial intelligence capabilities are architected and deployed in mobile applications. Traditional mobile AI implementations relied heavily on cloud-based processing, where mobile devices served primarily as data collection and display interfaces, transmitting user inputs to remote servers for AI processing and receiving results back over network connections. This approach, while effective for many applications, introduced significant limitations including latency issues, bandwidth requirements, privacy concerns, and dependency on stable internet connectivity.

The transition to Edge AI architecture addresses these limitations by bringing the computational intelligence directly to the mobile device itself. Modern smartphones and tablets now possess sufficient processing power, memory capacity, and specialized hardware accelerators to execute sophisticated machine learning models locally. This architectural evolution has been enabled by advances in mobile processor design, the development of efficient neural network architectures, and the creation of optimized machine learning frameworks specifically designed for resource-constrained mobile environments.

The fundamental architectural difference between traditional cloud-based AI and Edge AI represents a paradigm shift in how intelligent systems are designed and deployed. Edge AI eliminates the need for constant network connectivity and centralized processing, enabling immediate responses and enhanced privacy through local computation.

The implications of this architectural shift extend beyond technical improvements. Edge AI lets mobile applications respond to user interactions without a network round trip, keep working offline, and process sensitive user data without transmitting it to external servers. This has opened new possibilities for mobile application development and has changed what users expect from AI-powered mobile experiences.

Hardware Enablers and Mobile Processing Power

The successful implementation of Edge AI on mobile devices has been made possible through significant advances in mobile hardware architecture and processing capabilities. Modern smartphones incorporate specialized processors designed specifically for machine learning workloads, including neural processing units, dedicated AI accelerators, and optimized graphics processing units that can efficiently execute neural network computations. These hardware innovations have dramatically increased the computational capacity available for on-device AI processing while maintaining reasonable power consumption levels.

Contemporary mobile processors feature heterogeneous computing architectures that can intelligently distribute AI workloads across different processing units based on the specific requirements of each task. Central processing units handle general computation and orchestration, graphics processors excel at parallel matrix operations common in neural networks, and specialized AI chips provide optimized execution for specific machine learning operations. This multi-processor approach maximizes computational efficiency while minimizing energy consumption, enabling sustained AI processing on battery-powered devices.

The memory hierarchy in modern mobile devices has also been optimized to support Edge AI workloads effectively. High-speed memory interfaces, intelligent caching mechanisms, and efficient memory management systems ensure that machine learning models can access required data quickly while minimizing power consumption. These hardware optimizations are crucial for enabling complex AI applications to run smoothly on mobile devices without compromising battery life or overall system performance.

Framework Ecosystem and Development Tools

The mobile Edge AI ecosystem has been enriched by the development of sophisticated frameworks and tools specifically designed to facilitate machine learning deployment on mobile devices. TensorFlow Lite represents one of the most significant contributions to this ecosystem, providing a lightweight runtime environment optimized for mobile and embedded devices. This framework enables developers to convert full TensorFlow models into efficient mobile-optimized versions that can execute with minimal resource overhead while maintaining acceptable accuracy levels.

Apple’s Core ML framework has similarly revolutionized AI development for iOS devices by providing seamless integration between machine learning models and iOS applications. Core ML automatically optimizes models for Apple hardware and provides easy-to-use APIs that enable developers to integrate sophisticated AI capabilities into their applications with minimal complexity. The framework supports a wide range of model types and provides tools for converting models from popular machine learning frameworks into Core ML format.

Android’s ML Kit and Neural Networks API provide comprehensive support for Edge AI development on Android devices, offering both high-level APIs for common AI tasks and low-level access to hardware acceleration capabilities. These frameworks enable developers to choose the appropriate level of abstraction based on their specific requirements, from simple pre-built models for common tasks to custom neural network architectures that leverage device-specific hardware optimizations.

The development toolchain for mobile Edge AI has also evolved to include sophisticated model optimization techniques, profiling tools, and deployment utilities that streamline the process of bringing machine learning capabilities to mobile applications. These tools address the unique challenges of mobile deployment, including model size constraints, performance optimization, and cross-platform compatibility considerations.

Model Optimization and Efficiency Strategies

Deploying machine learning models on mobile devices requires careful attention to model optimization and efficiency considerations that differ significantly from cloud-based AI deployments. Mobile devices operate under strict constraints regarding computational resources, memory capacity, battery life, and thermal management, necessitating specialized optimization techniques that balance model accuracy with practical deployment requirements.

Quantization is one of the most effective optimization strategies for mobile Edge AI. It reduces the numerical precision of model parameters and computations. Converting 32-bit floating-point weights to 8-bit integers cuts weight storage by roughly a factor of four and lets inference use cheaper integer arithmetic, usually with only a small loss in accuracy. The technique is particularly effective for inference workloads and can substantially improve both speed and energy efficiency.
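
To make the float-to-integer mapping concrete, here is a minimal sketch of affine (scale and zero-point) quantization to 8-bit integers in plain Python. The function names are illustrative and not taken from any framework; production toolchains also calibrate activations and often quantize per channel, which this sketch leaves out.

```python
def quantize_int8(values):
    """Map a list of floats to int8 codes; returns (codes, scale, zero_point)."""
    # Widen the range to include 0.0 so that zero is exactly representable.
    lo = min(min(values), 0.0)
    hi = max(max(values), 0.0)
    # One int8 step covers `scale` units of the float range (256 levels).
    scale = (hi - lo) / 255.0 or 1.0  # fall back to 1.0 for all-zero input
    zero_point = round(-128 - lo / scale)
    codes = [max(-128, min(127, round(v / scale) + zero_point)) for v in values]
    return codes, scale, zero_point


def dequantize(codes, scale, zero_point):
    """Recover approximate floats from int8 codes."""
    return [(c - zero_point) * scale for c in codes]


weights = [-1.0, -0.5, 0.0, 0.25, 1.0]
codes, scale, zero_point = quantize_int8(weights)
restored = dequantize(codes, scale, zero_point)
```

Each restored value differs from the original by at most one quantization step (`scale`), which is the source of the small accuracy loss that quantized models accept in exchange for size and speed.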

Model pruning techniques selectively remove unnecessary connections and parameters from neural networks, resulting in smaller, faster models that require less memory and computational resources. Structured pruning approaches can eliminate entire neurons or layers, while unstructured pruning removes individual connections based on their contribution to model performance. These techniques can achieve significant model compression ratios while preserving most of the original model’s accuracy.
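
The difference between the two pruning styles can be sketched on a small weight matrix. This example assumes weight magnitude as the measure of a connection's contribution, which is a common proxy but only one of several possible criteria; the function names are illustrative.

```python
def prune_unstructured(weights, sparsity):
    """Zero the given fraction of individual weights with the smallest magnitude."""
    flat = sorted(abs(w) for row in weights for w in row)
    k = int(len(flat) * sparsity)
    if k == 0:
        return [row[:] for row in weights]
    threshold = flat[k - 1]  # ties at the threshold are pruned as well
    return [[0.0 if abs(w) <= threshold else w for w in row] for row in weights]


def prune_structured(weights, keep):
    """Keep only the `keep` rows (neurons) with the largest L1 norm, in original order."""
    ranked = sorted(
        range(len(weights)),
        key=lambda i: sum(abs(w) for w in weights[i]),
        reverse=True,
    )
    kept = sorted(ranked[:keep])
    return [weights[i][:] for i in kept]


layer = [
    [0.9, -0.05, 0.4],
    [0.01, 0.02, -0.03],
    [-0.7, 0.6, 0.1],
]
sparse_layer = prune_unstructured(layer, sparsity=0.5)  # same shape, more zeros
smaller_layer = prune_structured(layer, keep=2)         # fewer rows
```

Unstructured pruning keeps the matrix shape and only pays off when the runtime can exploit sparsity, while structured pruning yields a genuinely smaller dense matrix that any runtime can execute faster.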

Knowledge distillation provides another powerful approach to model optimization for mobile deployment. This technique involves training smaller, more efficient models to mimic the behavior of larger, more complex models, effectively transferring knowledge from complex teacher models to simpler student models that are more suitable for mobile deployment. The resulting distilled models can achieve performance levels comparable to their larger counterparts while requiring significantly fewer computational resources.
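
A sketch of the soft-target part of a distillation loss may help here. It assumes the widely used formulation in which teacher and student logits are softened with a temperature and compared with KL divergence; the temperature value is an arbitrary illustration, and the usual hard-label term is omitted for brevity.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax over temperature-scaled logits."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by temperature squared."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


teacher = [4.0, 1.0, 0.5]
loss_close = distillation_loss([3.5, 1.2, 0.4], teacher)  # student agrees with teacher
loss_far = distillation_loss([0.0, 0.0, 3.0], teacher)    # student disagrees
```

The loss shrinks as the student's output distribution approaches the teacher's, so minimizing it during training pushes the smaller model toward the larger model's behavior.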

Real-Time Processing and User Experience

The implementation of Edge AI on mobile devices has revolutionized real-time processing capabilities and user experience in mobile applications. Unlike cloud-based AI systems that introduce network latency and connectivity dependencies, Edge AI enables instantaneous responses to user inputs and real-time processing of sensor data, creating more responsive and engaging user interfaces that feel natural and intuitive.

Real-time computer vision applications exemplify the transformative impact of Edge AI on user experience. Mobile cameras can now perform sophisticated image analysis, object recognition, and augmented reality processing in real-time without any network dependency. This capability has enabled new categories of applications including real-time translation, interactive shopping experiences, and immersive gaming applications that seamlessly blend digital content with the physical world.

Voice processing and natural language understanding have similarly been transformed by Edge AI capabilities. Mobile devices can now perform speech recognition, language translation, and voice command processing entirely on-device, eliminating the delays associated with cloud-based processing while ensuring that sensitive voice data never leaves the device. This advancement has improved both the responsiveness and privacy of voice-controlled applications.

The continuous processing capabilities enabled by Edge AI have also created new possibilities for proactive and contextual mobile applications. Devices can continuously analyze sensor data, user behavior patterns, and environmental conditions to provide intelligent suggestions and automated responses without requiring explicit user requests. This shift from reactive to proactive AI creates more intuitive and helpful mobile experiences that anticipate user needs and provide relevant assistance automatically.

Privacy and Security Advantages

One of the most compelling advantages of Edge AI on mobile devices is the stronger privacy and security it offers for user data. Traditional cloud-based AI systems transmit user data to remote servers for processing, which creates potential privacy vulnerabilities and requires users to trust external organizations with sensitive personal information. When inference runs entirely on the user’s device, that data does not need to leave the device, so these concerns are greatly reduced.

The privacy benefits of Edge AI extend beyond simple data locality considerations. On-device processing enables applications to analyze sensitive information such as personal photos, voice recordings, location data, and behavioral patterns without exposing this information to external parties. This approach aligns with growing privacy expectations and regulatory requirements while enabling sophisticated AI functionality that would otherwise require compromising user privacy.

Security advantages of Edge AI include a smaller attack surface, less exposure to network-based attacks, and improved resilience against data breaches. When AI processing occurs entirely on-device, inference inputs are not sent over the network where they could be intercepted, and there is no central store of user inputs for attackers to target. The device itself and the model files stored on it still need protection, but an incident on one device does not expose data from every user.

The implementation of Edge AI also enables more granular privacy controls, allowing users to benefit from AI capabilities while maintaining complete control over their data. Applications can provide sophisticated AI features without requiring users to agree to broad data sharing agreements, creating a more transparent and user-controlled privacy model that builds trust and encourages adoption of AI-powered features.

Performance Optimization and Battery Management

The successful deployment of Edge AI on mobile devices requires careful attention to performance optimization and battery management strategies that balance AI capabilities with the practical constraints of mobile hardware. Mobile devices operate under strict power budgets and thermal limitations that must be considered when implementing computationally intensive AI workloads, necessitating sophisticated optimization approaches that maintain functionality while preserving battery life and device performance.

Dynamic frequency scaling and adaptive processing techniques enable mobile devices to adjust computational intensity based on current requirements and available resources. AI applications can dynamically scale model complexity, reduce processing frequency, or defer non-critical computations based on battery level, thermal conditions, and user activity patterns. These adaptive approaches ensure that Edge AI functionality remains available when needed while minimizing impact on overall device performance and battery life.
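
The adaptive behavior described above can be sketched as a small policy function. Everything here is hypothetical: the thresholds, the variant names, and the `DeviceState` fields are illustrative assumptions, and a real application would read these signals from platform battery and thermal APIs.

```python
from dataclasses import dataclass


@dataclass
class DeviceState:
    battery_pct: int         # 0-100
    thermal_throttled: bool  # True when the OS reports thermal pressure
    user_active: bool        # True when the app is in active use


@dataclass
class InferencePlan:
    model_variant: str      # hypothetical variants: "large", "medium", "small"
    interval_s: float       # seconds between inference runs
    defer_background: bool  # postpone non-critical background work


def choose_plan(state: DeviceState) -> InferencePlan:
    """Pick model complexity and processing frequency from current device conditions."""
    if state.thermal_throttled or state.battery_pct < 20:
        # Constrained: smallest model, infrequent runs, background work deferred.
        return InferencePlan("small", 2.0, True)
    if state.battery_pct < 50 or not state.user_active:
        # Moderate: mid-size model; defer background work when the user is idle.
        return InferencePlan("medium", 1.0, not state.user_active)
    # Plenty of headroom: full model at a high refresh rate.
    return InferencePlan("large", 0.25, False)


plan = choose_plan(DeviceState(battery_pct=35, thermal_throttled=False, user_active=True))
```

Keeping the policy in one pure function makes it easy to unit test and to tune per device class without touching the inference code.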

Hardware acceleration utilization represents a critical optimization strategy for mobile Edge AI implementations. Modern mobile processors include specialized AI acceleration units that can execute neural network operations with significantly higher efficiency than general-purpose processors. Effective utilization of these specialized processing units can reduce both computation time and energy consumption, enabling more sophisticated AI capabilities while maintaining acceptable battery performance.

Intelligent caching and model management strategies further optimize mobile Edge AI performance by preloading frequently used models, caching intermediate results, and efficiently managing memory allocation for AI workloads. These techniques minimize the overhead associated with model initialization and data movement, creating more responsive AI applications that operate efficiently within mobile device constraints.
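
One possible shape for such model management is a least-recently-used cache with a memory budget, sketched below. The `loader` callable and the size accounting are assumptions for illustration; a real implementation would load actual model files and measure their real memory footprint.

```python
from collections import OrderedDict


class ModelCache:
    """Keeps recently used models in memory, evicting the least recently used first."""

    def __init__(self, loader, budget_bytes):
        self._loader = loader          # callable: name -> (model, size_bytes)
        self._budget = budget_bytes
        self._entries = OrderedDict()  # name -> (model, size_bytes), oldest first
        self._used = 0

    def get(self, name):
        if name in self._entries:
            self._entries.move_to_end(name)  # mark as most recently used
            return self._entries[name][0]
        model, size = self._loader(name)
        # Evict oldest entries until the new model fits within the budget.
        while self._entries and self._used + size > self._budget:
            _, (_, evicted_size) = self._entries.popitem(last=False)
            self._used -= evicted_size
        self._entries[name] = (model, size)
        self._used += size
        return model

    def preload(self, names):
        """Warm the cache with models expected to be needed soon."""
        for name in names:
            self.get(name)

    def loaded(self):
        return list(self._entries)
```

Calling `preload` at application start for the models a screen is likely to need moves initialization cost out of the first user interaction.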

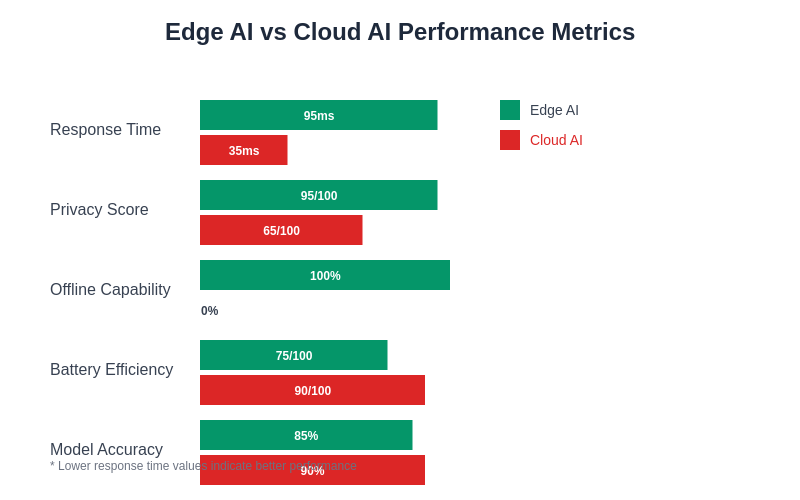

The performance advantages of Edge AI become evident when examining key metrics across multiple dimensions. While cloud-based AI may offer slightly higher model accuracy due to larger computational resources, Edge AI excels in response time, privacy protection, offline capability, and user experience consistency.

Application Domains and Use Cases

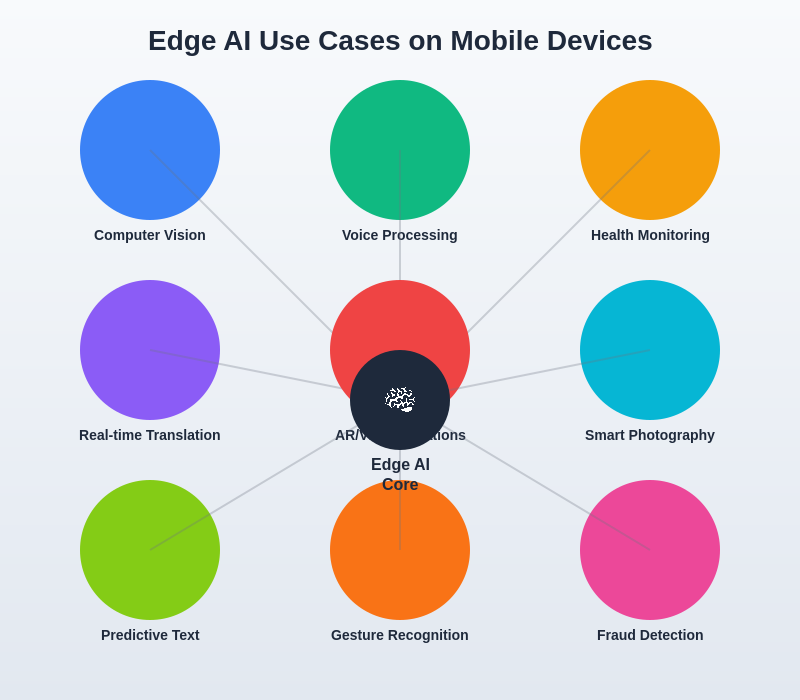

Edge AI on mobile devices has enabled transformative applications across numerous domains, creating new categories of mobile experiences that were previously impossible or impractical with cloud-based AI architectures. Computer vision applications represent one of the most visible and impactful domains, with mobile cameras now capable of real-time object recognition, scene understanding, and augmented reality processing that enhances both productivity and entertainment applications.

Healthcare applications have particularly benefited from mobile Edge AI capabilities, enabling sophisticated medical imaging analysis, vital sign monitoring, and health assessment tools that can operate privately and independently of network connectivity. Mobile devices can now perform preliminary medical screenings, analyze symptoms, and provide health insights without transmitting sensitive medical data to external servers, creating more accessible and private healthcare tools.

Educational applications leverage Edge AI to provide personalized learning experiences, real-time language translation, and intelligent tutoring systems that adapt to individual learning styles and progress. These applications can operate effectively in environments with limited connectivity while providing sophisticated AI-powered educational support that enhances learning outcomes and accessibility.

Creative applications have been revolutionized by Edge AI capabilities, enabling real-time photo and video enhancement, music creation tools, and artistic style transfer applications that provide professional-quality results directly on mobile devices. These applications democratize access to sophisticated creative tools while enabling new forms of artistic expression and content creation.

The diverse ecosystem of Edge AI applications demonstrates the technology’s versatility and broad applicability across multiple domains. From computer vision and voice processing to health monitoring and augmented reality, Edge AI has transformed how mobile devices understand and respond to user needs and environmental conditions.

Development Challenges and Solutions

Implementing Edge AI on mobile devices presents unique development challenges that require specialized approaches and solutions different from traditional mobile application development or cloud-based AI development. Resource constraints represent the most fundamental challenge, requiring developers to carefully balance model complexity, accuracy requirements, and available computational resources while maintaining acceptable application performance and battery life.

Model deployment and versioning present additional complexity in mobile Edge AI development. Unlike cloud-based systems where models can be updated centrally, mobile Edge AI requires careful consideration of model distribution, version management, and backward compatibility across diverse device capabilities and operating system versions. Developers must create robust deployment strategies that can handle the fragmentation and diversity of the mobile device ecosystem.
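
As a rough illustration of version management across a fragmented device fleet, the sketch below picks the newest model artifact that a given device can actually run. The `ModelArtifact` fields and the selection criteria are hypothetical; real deployments typically also consider ABI, framework version, and staged rollout rules.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelArtifact:
    name: str
    version: tuple           # e.g. (2, 1); tuples compare element by element
    min_os: int              # minimum OS API level required
    needs_accelerator: bool  # requires a hardware AI accelerator
    size_mb: int


def select_artifact(artifacts, os_level, has_accelerator, free_mb):
    """Return the newest artifact compatible with the device, or None if none fits."""
    compatible = [
        a for a in artifacts
        if a.min_os <= os_level
        and (has_accelerator or not a.needs_accelerator)
        and a.size_mb <= free_mb
    ]
    return max(compatible, key=lambda a: a.version, default=None)


catalog = [
    ModelArtifact("classifier", (1, 0), 26, False, 8),
    ModelArtifact("classifier", (2, 0), 29, False, 12),
    ModelArtifact("classifier", (2, 1), 31, True, 20),
]
chosen = select_artifact(catalog, os_level=30, has_accelerator=False, free_mb=64)
```

Older devices keep receiving the last version they support, which is one way to preserve backward compatibility while newer hardware moves ahead.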

Cross-platform compatibility adds another layer of complexity to mobile Edge AI development. Different mobile platforms provide different AI frameworks, hardware acceleration capabilities, and optimization tools, requiring developers to either choose platform-specific approaches or invest in cross-platform solutions that may compromise optimal performance on individual platforms.

Testing and validation of Edge AI applications require specialized approaches that account for the diversity of mobile hardware configurations, operating conditions, and use cases. Traditional software testing methodologies must be extended to include model performance validation, resource utilization analysis, and edge case handling across a wide range of device capabilities and environmental conditions.
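
Model performance validation often starts with a latency benchmark that is run on each target device. The following is a minimal sketch of such a harness; the run counts are arbitrary, and `fn` stands in for whatever inference call the application makes.

```python
import math
import time


def measure_latency_ms(fn, runs=50, warmup=5):
    """Time repeated calls to `fn` and return per-call latencies in milliseconds."""
    for _ in range(warmup):
        fn()  # warm-up runs are discarded (caches, lazy initialization)
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        samples.append((time.perf_counter() - start) * 1000.0)
    return samples


def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list of samples."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def meets_budget(samples, p95_budget_ms):
    """Check the tail latency, not just the average, against a budget."""
    return percentile(samples, 95) <= p95_budget_ms
```

Checking a high percentile rather than the mean surfaces the occasional slow run caused by thermal throttling or background load, which is what users notice on real devices.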

Industry Adoption and Market Impact

The adoption of Edge AI technologies across mobile applications and industries has accelerated rapidly as organizations recognize the significant advantages of on-device AI processing for user experience, privacy, and operational efficiency. Major technology companies have invested heavily in Edge AI capabilities, developing specialized hardware, optimized software frameworks, and comprehensive developer tools that facilitate widespread adoption of mobile AI technologies.

Consumer applications have embraced Edge AI for features ranging from photography enhancement and voice assistants to fitness tracking and personal productivity tools. The ability to provide sophisticated AI functionality without network dependency has enabled new application categories and improved existing applications by making them more responsive, reliable, and private.

Enterprise adoption of mobile Edge AI has focused on applications requiring high privacy, real-time processing, or operation in connectivity-challenged environments. Industries such as healthcare, manufacturing, retail, and field services have leveraged Edge AI to create mobile solutions that provide sophisticated analysis and decision support capabilities while maintaining data privacy and operational independence.

The market impact of Edge AI extends beyond individual applications to influence mobile hardware design, software platform development, and the broader technology ecosystem. Hardware manufacturers are incorporating more sophisticated AI acceleration capabilities, software platforms are evolving to better support Edge AI development, and new business models are emerging that leverage the unique capabilities of on-device AI processing.

Future Technological Developments

The future of Edge AI on mobile devices promises continued advancement across multiple technological dimensions, driven by ongoing improvements in mobile hardware capabilities, machine learning algorithms, and software optimization techniques. Next-generation mobile processors will incorporate even more sophisticated AI acceleration capabilities, enabling more complex models and new categories of AI applications that are currently impractical on mobile devices.

Federated learning is an emerging paradigm that combines the privacy benefits of Edge AI with the collaborative advantages of distributed learning. Each device trains on its own data and shares only model updates, which a coordinating server aggregates into an improved shared model. Raw user data stays on the device, so systems can become more capable over time without centralized data collection.
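
One common way for devices to contribute to a shared model is federated averaging, where a server combines locally trained weights in proportion to how much data each device used. The sketch below shows only that aggregation step, with model weights represented as flat lists of floats; it is an assumption that the system in question uses this particular scheme, and the function name is illustrative.

```python
def federated_average(client_updates):
    """Average client weight vectors, weighted by each client's example count.

    client_updates: list of (weights, num_examples) pairs, where every
    `weights` list has the same length.
    """
    total_examples = sum(n for _, n in client_updates)
    dim = len(client_updates[0][0])
    return [
        sum(weights[i] * n for weights, n in client_updates) / total_examples
        for i in range(dim)
    ]


# Two devices report locally trained weights; the second saw three times more data.
updates = [
    ([1.0, 2.0], 1),
    ([3.0, 4.0], 3),
]
global_weights = federated_average(updates)  # [2.5, 3.5]
```

Only the weight vectors and example counts reach the server in this scheme; the training examples themselves never leave the devices.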

Neuromorphic computing approaches promise to revolutionize mobile AI efficiency by implementing brain-inspired computing architectures that can process information with dramatically reduced energy requirements. These technologies could enable always-on AI capabilities that continuously analyze environmental conditions and user behavior without significant battery impact, creating more proactive and intelligent mobile experiences.

The integration of Edge AI with emerging technologies such as 5G networks, Internet of Things devices, and augmented reality systems will create new possibilities for distributed intelligence that spans multiple devices and environments. Mobile devices will serve as intelligent coordinators in broader AI ecosystems that include smart home devices, wearable technology, and environmental sensors, creating more comprehensive and contextual AI experiences.

Disclaimer

This article is for informational purposes only and does not constitute professional advice. The views expressed are based on current understanding of Edge AI technologies and their applications in mobile development. Readers should conduct their own research and consider their specific requirements when implementing Edge AI solutions. The effectiveness and suitability of Edge AI approaches may vary depending on specific use cases, device capabilities, and application requirements.