Artificial intelligence has made substantial progress in recognizing human emotions from vocal patterns, changing how machines interpret and respond to human sentiment. Speech emotion recognition is one of the more sophisticated applications of the technology: it combines deep learning with acoustic analysis to infer the emotional state carried by a speaker's voice. This capability is reshaping human-computer interaction, opening the way to more empathetic and responsive systems that can take into account not just what we say, but how we feel when we say it.

The convergence of speech processing, machine learning, and psychological research has created a new frontier in computational understanding, one that aims to bridge the gap between human emotional expression and machine comprehension.

The Science Behind Vocal Emotion Detection

The human voice carries a wealth of emotional information that extends far beyond the semantic content of spoken words. Every utterance contains subtle acoustic features, including pitch variations, fluctuations in speaking rate, changes in voice quality, and rhythmic patterns, that together form a distinctive emotional signature. Modern AI systems use signal processing techniques to extract these paralinguistic features and pass them to statistical models that estimate emotional states, with accuracy that is useful in many settings but still far from perfect.

The technological foundation of emotion recognition relies on advanced machine learning architectures, particularly deep neural networks that can process multiple layers of acoustic information simultaneously. These systems examine spectral characteristics, prosodic features, and temporal dynamics of speech signals to construct comprehensive emotional profiles. The integration of convolutional neural networks for feature extraction and recurrent neural networks for temporal modeling has enabled AI systems to capture both instantaneous emotional expressions and longer-term emotional trajectories within conversations.

Recent developments in transformer architectures have further enhanced emotion recognition systems by modeling contextual relationships across longer stretches of speech. These models aim to distinguish subtle variations such as mild frustration versus intense anger, or genuine happiness versus polite enthusiasm, and in some controlled scenarios their discrimination begins to approach human performance.

Acoustic Features and Emotional Indicators

The acoustic landscape of human speech contains numerous indicators that reveal emotional states through measurable parameters. Variations in fundamental frequency (F0), which listeners perceive as pitch, are among the most informative cues, particularly for emotional arousal and, less reliably, for valence. Higher pitch often correlates with excitement, anxiety, or happiness, while a lower pitch range may indicate sadness, calmness, or authority. However, the relationship between acoustic features and emotions is far more complex than simple correlations, so interpreting these cues accurately requires pattern recognition over many features at once.
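To make the link between F0 and pitch concrete, the sketch below estimates F0 for a single voiced frame by finding the lag at which the frame best correlates with a shifted copy of itself. It is a minimal illustration, not a production pitch tracker: the function name `estimate_f0` and the 75 to 400 Hz search range are assumptions chosen for adult speech, and real trackers (for example Praat's autocorrelation method or the YIN algorithm) add windowing, normalization, and voicing decisions.

```python
import math


def estimate_f0(frame, sample_rate, f0_min=75.0, f0_max=400.0):
    """Estimate the fundamental frequency (Hz) of one voiced frame.

    Returns None when no positive autocorrelation peak is found, which this
    sketch treats as "unvoiced". The search range is an assumption for adult speech.
    """
    n = len(frame)
    mean = sum(frame) / n
    x = [s - mean for s in frame]  # remove any DC offset first

    # Candidate lags (in samples) corresponding to f0_max .. f0_min.
    lag_min = max(1, int(sample_rate / f0_max))
    lag_max = min(int(sample_rate / f0_min), n - 1)

    best_lag, best_r = None, 0.0
    for lag in range(lag_min, lag_max + 1):
        # Autocorrelation at this lag: similarity of the frame to a shifted copy.
        r = sum(x[i] * x[i + lag] for i in range(n - lag))
        if r > best_r:
            best_lag, best_r = lag, r

    return None if best_lag is None else sample_rate / best_lag


# Usage: a synthetic 200 Hz tone, 40 ms long, sampled at 16 kHz.
sr = 16000
tone = [math.sin(2 * math.pi * 200 * i / sr) for i in range(640)]
print(estimate_f0(tone, sr))          # 200.0
print(estimate_f0([0.0] * 640, sr))   # None
```

Picking the largest raw autocorrelation works here because longer lags sum over fewer samples; real speech, with its harmonics and noise, is exactly where the extra refinements of dedicated pitch trackers matter.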

Voice quality parameters such as jitter (cycle-to-cycle variation in the length of the vocal fold vibration period), shimmer (cycle-to-cycle variation in its amplitude), and harmonics-to-noise ratio provide additional layers of emotional information. These measures reflect physiological changes in vocal fold vibration and laryngeal tension that occur during different emotional states. For instance, stress or anxiety often manifests as increased vocal tension, which alters the harmonic structure of the voice in ways that AI algorithms can detect and quantify.
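The perturbation measures named above have simple definitions once the individual glottal cycles have been located. The sketch below computes local jitter and local shimmer in their commonly used form (mean absolute difference between consecutive cycles, divided by the mean), assuming the cycle periods and peak amplitudes have already been extracted by a pitch-marking step that is not shown. Function names and the sample measurements are illustrative.

```python
def local_perturbation(values):
    """Mean absolute difference between consecutive values, divided by their mean."""
    if len(values) < 2:
        raise ValueError("need at least two consecutive cycles")
    diffs = [abs(b - a) for a, b in zip(values, values[1:])]
    return (sum(diffs) / len(diffs)) / (sum(values) / len(values))


def jitter_local(periods_s):
    """Local jitter from a sequence of glottal cycle periods (seconds)."""
    return local_perturbation(periods_s)


def shimmer_local(peak_amplitudes):
    """Local shimmer from a sequence of per-cycle peak amplitudes."""
    return local_perturbation(peak_amplitudes)


# Usage with hypothetical cycle measurements:
steady = [0.005, 0.005, 0.005, 0.005]        # perfectly regular 200 Hz voice
irregular = [0.0050, 0.0052, 0.0049, 0.0053]  # cycle lengths that wobble
print(jitter_local(steady))                   # 0.0
print(f"{jitter_local(irregular) * 100:.2f} %")
```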

The temporal dynamics of speech, including variations in speaking rate, pause patterns, and rhythm, offer further insight into emotional states. Rapid speech may indicate excitement or nervousness, while slower delivery might suggest thoughtfulness, sadness, or emphasis. Combined with spectral analysis, these temporal features form richer emotional signatures that make emotion classification more reliable.
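Pause patterns are among the easier temporal features to compute. The sketch below marks 20 ms frames as silent when their RMS energy falls below a fixed threshold and reports pauses lasting at least 200 ms. The threshold, frame size, and minimum pause length are hypothetical values; practical systems typically use an adaptive threshold or a dedicated voice activity detector, and estimating speaking rate properly also requires syllable or word timing, which this sketch does not attempt.

```python
import math


def frame_rms(samples, frame_len):
    """RMS energy of consecutive, non-overlapping frames."""
    return [math.sqrt(sum(s * s for s in samples[i:i + frame_len]) / frame_len)
            for i in range(0, len(samples) - frame_len + 1, frame_len)]


def pause_stats(samples, sample_rate, frame_ms=20, threshold=0.02, min_pause_ms=200):
    """Count pauses (runs of low-energy frames) and summarize their duration."""
    frame_len = int(sample_rate * frame_ms / 1000)
    rms = frame_rms(samples, frame_len)
    silent = [r < threshold for r in rms]
    min_frames = max(1, math.ceil(min_pause_ms / frame_ms))

    pauses, run = [], 0
    for is_silent in silent + [False]:  # trailing False flushes a final silent run
        if is_silent:
            run += 1
        else:
            if run >= min_frames:
                pauses.append(run * frame_ms / 1000)
            run = 0

    total_s = len(rms) * frame_ms / 1000
    return {
        "pause_count": len(pauses),
        "mean_pause_s": sum(pauses) / len(pauses) if pauses else 0.0,
        "pause_ratio": sum(pauses) / total_s if total_s else 0.0,
    }


# Usage: tone, 0.4 s gap, tone, then a 0.1 s gap too short to count as a pause.
sr = 16000
speech = [0.5 * math.sin(2 * math.pi * 200 * i / sr) for i in range(sr // 2)]
signal = speech + [0.0] * (sr * 4 // 10) + speech + [0.0] * (sr // 10)
print(pause_stats(signal, sr))
```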

Machine Learning Approaches in Speech Emotion Recognition

The evolution of machine learning methodologies has dramatically improved the accuracy and reliability of speech emotion recognition systems. Traditional approaches relied heavily on handcrafted feature engineering, where researchers manually selected acoustic parameters believed to be relevant for emotion detection. While these methods achieved reasonable success, they were limited by human understanding of which features were most important and how they should be combined.

Contemporary deep learning approaches have transformed this field by learning feature representations directly from raw waveforms or spectrograms instead of relying on hand-picked parameters. Convolutional neural networks excel at identifying local patterns in spectrograms, while recurrent architectures capture the temporal dependencies that are crucial for understanding emotional context. Hybrid architectures that combine the two have yielded significant improvements in recognition accuracy across diverse emotional categories.
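For readers unfamiliar with the input these networks consume, the sketch below turns a waveform into a magnitude spectrogram: the signal is cut into overlapping frames, each frame is tapered with a Hann window, and the magnitude of its discrete Fourier transform is taken. It uses a naive O(N²) DFT purely for readability; real pipelines use an FFT and usually convert to a log-mel scale before feeding a CNN. The frame length and hop size are illustrative assumptions.

```python
import cmath
import math


def hann_window(n):
    """Symmetric Hann window of length n, tapering frame edges to reduce leakage."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / (n - 1)) for i in range(n)]


def magnitude_spectrogram(samples, frame_len=256, hop=128):
    """Return a list of frames, each a list of |DFT| values for bins 0..frame_len//2."""
    window = hann_window(frame_len)
    frames = []
    for start in range(0, len(samples) - frame_len + 1, hop):
        seg = [samples[start + i] * window[i] for i in range(frame_len)]
        spectrum = []
        for k in range(frame_len // 2 + 1):  # non-negative frequencies only
            acc = sum(seg[n] * cmath.exp(-2j * math.pi * k * n / frame_len)
                      for n in range(frame_len))
            spectrum.append(abs(acc))
        frames.append(spectrum)
    return frames


# Usage: a 1 kHz tone at 8 kHz sampling lands in bin 1000 * 256 / 8000 = 32.
sr = 8000
tone = [math.sin(2 * math.pi * 1000 * i / sr) for i in range(1024)]
spec = magnitude_spectrogram(tone)
peak_bins = [max(range(len(f)), key=f.__getitem__) for f in spec]
print(len(spec), peak_bins)  # 7 [32, 32, 32, 32, 32, 32, 32]
```

Stacking these frames side by side gives the time-frequency image on which a CNN's local filters operate.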

Attention mechanisms have emerged as particularly powerful tools for emotion recognition, allowing models to weight the most emotionally relevant portions of a speech signal more heavily while down-weighting irrelevant stretches such as silence or background noise. These mechanisms help systems locate key emotional moments within longer utterances, improving both the accuracy and the interpretability of their predictions.
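One common way attention is used here is attention pooling: each frame-level feature vector receives a score, the scores are turned into weights with a softmax, and the utterance is summarized as the weighted average of its frames. In a trained model the query vector is learned; in the sketch below it is a hand-picked, hypothetical vector so the arithmetic is easy to follow. The returned weights are what give the mechanism its interpretability, since they show which frames the summary relied on.

```python
import math


def softmax(xs):
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(frames, query):
    """Dot-product attention pooling over time.

    frames: list of per-frame feature vectors; query: vector (learned in a real model).
    Returns (pooled utterance vector, per-frame attention weights).
    """
    scores = [sum(f_d * q_d for f_d, q_d in zip(f, query)) for f in frames]
    weights = softmax(scores)
    dim = len(frames[0])
    pooled = [sum(w * f[d] for w, f in zip(weights, frames)) for d in range(dim)]
    return pooled, weights


# Usage: frame 2 carries a strong cue along the dimension the query looks for.
frames = [[0.1, 0.0], [0.2, 0.1], [3.0, 0.5], [0.1, 0.0]]
pooled, weights = attention_pool(frames, query=[1.0, 0.0])
print([round(w, 3) for w in weights])
```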

Real-World Applications and Industry Impact

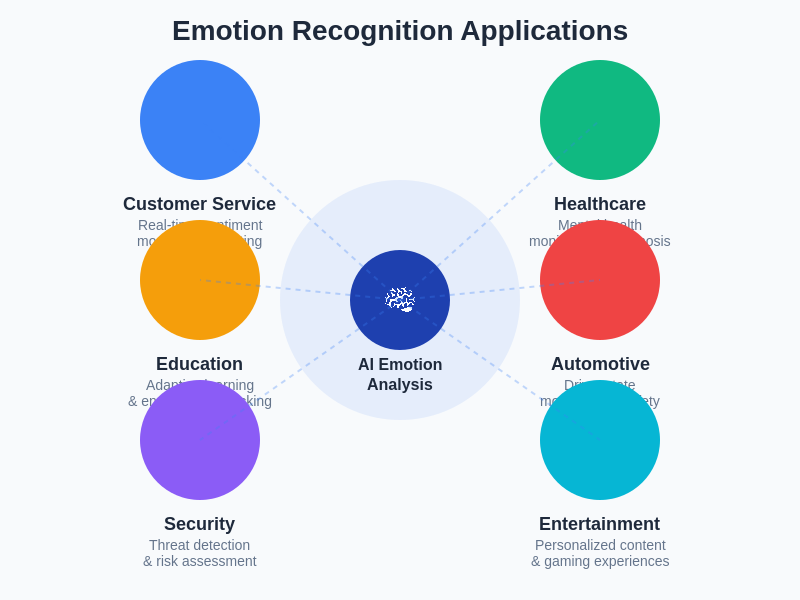

The practical applications of speech emotion recognition span numerous industries, changing how organizations interact with customers and understand human needs. Customer service is one of the most visible applications: real-time emotion detection lets support agents adjust their communication style to the customer's emotional state. The technology can automatically escalate calls when frustration is detected, route customers to specialized agents according to their emotional needs, or provide agents with real-time coaching to improve service quality.
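Escalation logic of this kind can be a thin rule layered on top of a classifier's output. The sketch below assumes a hypothetical model that emits a frustration probability for each few-second window of a call, and escalates only when the probability stays above a threshold for several consecutive windows, so that a single noisy prediction does not trigger a transfer. The function name, threshold, and window count are illustrative, not recommended values.

```python
from typing import Optional, Sequence


def escalation_window(frustration_probs: Sequence[float],
                      threshold: float = 0.7,
                      consecutive: int = 3) -> Optional[int]:
    """Return the index of the window where escalation triggers, or None."""
    run = 0
    for i, p in enumerate(frustration_probs):
        run = run + 1 if p >= threshold else 0  # length of the current high-frustration streak
        if run >= consecutive:
            return i
    return None


# Usage with hypothetical per-window scores from one call:
call = [0.2, 0.8, 0.9, 0.3, 0.75, 0.8, 0.95, 0.1]
print(escalation_window(call))  # 6: windows 4, 5 and 6 all reach the threshold
```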

Healthcare applications show considerable potential for early detection of mental health conditions and for monitoring patient well-being. Research suggests that speech patterns can carry early indicators of depression, anxiety, cognitive decline, and other conditions that may not be immediately apparent through traditional assessment methods. Continuous monitoring through voice-based applications could help healthcare providers track patient progress and intervene when concerning emotional patterns emerge.

Educational technology has embraced emotion recognition to create more responsive and personalized learning experiences. AI tutoring systems can adapt their teaching strategies to a student's emotional state, providing additional support when frustration is detected or raising the level of challenge when engagement appears high. This emotional awareness can support better learning outcomes by helping keep students in an emotional state conducive to learning.

The automotive industry is incorporating emotion and driver-state recognition into advanced driver assistance systems, monitoring drivers for signs of fatigue, stress, or distraction. Such systems can recommend breaks, adjust vehicle settings, or even intervene in potentially dangerous situations when these indicators suggest compromised driving ability. Across these sectors, the common thread is using emotional cues to make technology more responsive to the people who use it.

Challenges in Cross-Cultural Emotion Recognition

One of the most significant challenges in developing robust emotion recognition systems lies in addressing cultural variations in emotional expression. Different cultures exhibit distinct patterns of emotional vocalization, with varying degrees of expressiveness, different baseline vocal characteristics, and culture-specific emotional norms. AI systems trained primarily on data from one cultural context may perform poorly when applied to speakers from different cultural backgrounds.

Language-specific characteristics further complicate cross-cultural emotion recognition. Tonal languages such as Mandarin Chinese use pitch to distinguish word meanings, which can interfere with emotion detection algorithms designed for non-tonal languages. Similarly, languages with different stress patterns, rhythmic structures, or phonetic inventories require specialized adaptation to achieve accurate emotion recognition.

Researchers are developing culturally adaptive models that adjust their emotional interpretation according to the detected linguistic and cultural context, with the goal of improving accuracy across diverse populations.

Privacy and Ethical Considerations

The deployment of emotion recognition technology raises important privacy and ethical concerns that must be carefully addressed to ensure responsible implementation. Voice data contains highly personal information about individuals’ emotional states, mental health conditions, and private feelings that require stringent protection measures. Organizations implementing these systems must establish robust data governance frameworks that protect user privacy while enabling beneficial applications.

Consent and transparency represent fundamental requirements for ethical emotion recognition deployment. Users must understand how their emotional data is being collected, processed, and utilized, with clear options for opting out or controlling data usage. The potential for emotional manipulation through AI systems that understand user emotional states requires careful consideration of appropriate use cases and limitations.

Bias in emotion recognition systems poses another significant ethical challenge. Training data that lacks diversity in terms of demographics, cultural backgrounds, or emotional expression styles can result in systems that perform poorly for underrepresented groups. This bias can lead to unfair treatment in applications such as hiring, education, or healthcare, where emotional assessment influences important decisions.
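A basic safeguard against this kind of bias is disaggregated evaluation: reporting performance separately for each demographic or language group rather than as a single average. The sketch below computes per-group accuracy and the largest gap between groups. The group labels and toy predictions are invented for illustration; a real audit would use held-out data, richer metrics than accuracy, and enough samples per group to make the comparison meaningful.

```python
from collections import defaultdict


def per_group_accuracy(y_true, y_pred, groups):
    """Accuracy computed separately for each group label."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for t, p, g in zip(y_true, y_pred, groups):
        total[g] += 1
        correct[g] += int(t == p)
    return {g: correct[g] / total[g] for g in total}


def max_accuracy_gap(y_true, y_pred, groups):
    """Difference between the best- and worst-served groups, plus the per-group scores."""
    acc = per_group_accuracy(y_true, y_pred, groups)
    return max(acc.values()) - min(acc.values()), acc


# Usage with toy data: the model does noticeably worse on group "B".
y_true = ["angry", "happy", "sad", "neutral"] * 2
y_pred = ["angry", "happy", "sad", "neutral", "neutral", "happy", "neutral", "neutral"]
groups = ["A"] * 4 + ["B"] * 4
gap, acc = max_accuracy_gap(y_true, y_pred, groups)
print(gap, acc)  # 0.5 {'A': 1.0, 'B': 0.5}
```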

Technical Architecture and Implementation

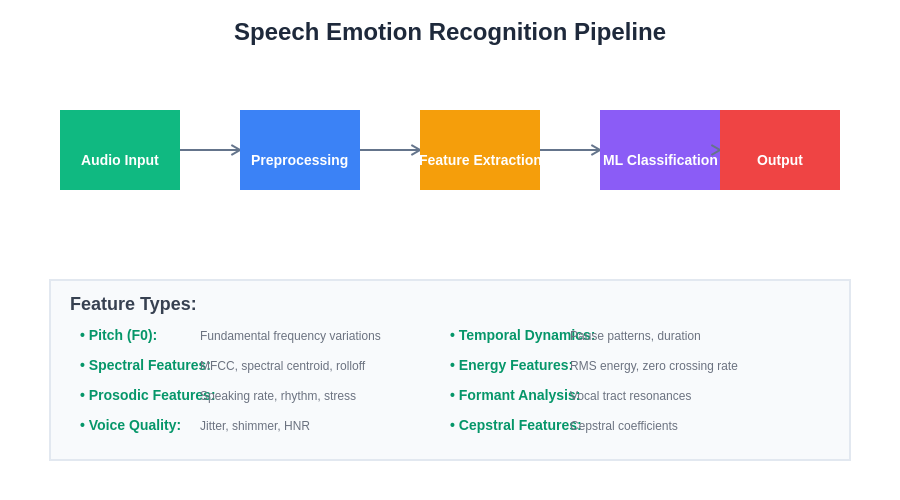

Modern emotion recognition systems employ sophisticated multi-stage architectures that process speech signals through several layers of analysis and interpretation. The initial preprocessing stage involves signal enhancement, noise reduction, and normalization to ensure consistent input quality regardless of recording conditions or equipment variations. This stage is crucial for maintaining system performance across diverse real-world deployment scenarios.
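The sketch below covers the simpler parts of this stage: removing DC offset, applying a first-order pre-emphasis filter (y[n] = x[n] - a*x[n-1], with a = 0.97 a common choice) to boost high frequencies, and scaling the result to a fixed peak level so that recordings made at different gains look alike to later stages. Noise reduction is omitted because it needs a much larger example. The function name and parameter values are assumptions.

```python
import math


def preprocess(samples, pre_emphasis=0.97, target_peak=0.9, eps=1e-9):
    """DC removal, pre-emphasis, and peak normalization for one recording."""
    if not samples:
        return []
    mean = sum(samples) / len(samples)
    x = [s - mean for s in samples]  # remove DC offset

    # First-order pre-emphasis: y[n] = x[n] - a * x[n-1]
    y = [x[0]] + [x[n] - pre_emphasis * x[n - 1] for n in range(1, len(x))]

    peak = max(abs(v) for v in y)
    if peak < eps:  # effectively silent: leave it alone rather than amplify rounding noise
        return y
    scale = target_peak / peak
    return [v * scale for v in y]


# Usage: a quiet tone with a DC offset, as a cheap microphone might record it.
sr = 16000
raw = [0.2 + 0.05 * math.sin(2 * math.pi * 300 * i / sr) for i in range(1600)]
clean = preprocess(raw)
print(round(max(abs(v) for v in clean), 6))  # 0.9
```

The `eps` guard matters because subtracting a floating-point mean from a constant signal leaves tiny nonzero residues that peak normalization would otherwise blow up to full scale.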

Feature extraction represents the core technical challenge in emotion recognition, involving the conversion of raw audio signals into meaningful numerical representations that capture emotional content. Mel-frequency cepstral coefficients, spectral features, prosodic parameters, and voice quality measures are typically extracted and combined into comprehensive feature vectors that represent the acoustic properties relevant to emotional state detection.
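MFCCs start from the mel scale, which spaces frequencies roughly the way human hearing resolves them. The sketch below uses the widely used HTK-style conversion mel = 2595 * log10(1 + f/700) to place the edges of a bank of triangular filters evenly on the mel axis, and shows the weight a single triangular filter gives to a frequency. A full MFCC pipeline would then sum spectrogram power inside each filter, take logarithms, and apply a discrete cosine transform; those steps are omitted here, and the 26-filter, 0 to 8000 Hz configuration is only an example.

```python
import math


def hz_to_mel(f):
    """HTK-style Hz-to-mel conversion."""
    return 2595.0 * math.log10(1.0 + f / 700.0)


def mel_to_hz(m):
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)


def mel_band_edges(n_filters, f_min, f_max):
    """n_filters triangular filters need n_filters + 2 edge points, evenly spaced in mel."""
    m_min, m_max = hz_to_mel(f_min), hz_to_mel(f_max)
    step = (m_max - m_min) / (n_filters + 1)
    return [mel_to_hz(m_min + i * step) for i in range(n_filters + 2)]


def triangular_weight(f, left, center, right):
    """Weight of frequency f in the triangular filter (left, center, right)."""
    if left < f <= center:
        return (f - left) / (center - left)
    if center < f < right:
        return (right - f) / (right - center)
    return 0.0


# Usage: low-frequency filters are narrow, high-frequency filters are wide.
edges = mel_band_edges(26, 0.0, 8000.0)
low_width = edges[2] - edges[0]     # span of the first filter
high_width = edges[-1] - edges[-3]  # span of the last filter
print(f"first filter spans {low_width:.0f} Hz, last spans {high_width:.0f} Hz")
```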

The classification stage employs machine learning models trained on large datasets of emotionally labeled speech samples. These models learn to map acoustic features to emotional categories through complex mathematical transformations that capture the subtle relationships between vocal characteristics and emotional states. Advanced architectures incorporate attention mechanisms, residual connections, and regularization techniques to improve generalization and prevent overfitting.

Post-processing and temporal smoothing improve the stability of emotion predictions by considering emotional context over time. Because genuine emotional shifts rarely happen from one short analysis frame to the next, systems often apply temporal filtering so that predicted emotions follow realistic patterns of change rather than flickering between labels. Together, these stages form a pipeline designed to keep emotion detection robust across diverse speech inputs and recording conditions.
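Exponential moving averaging is one simple form of this temporal filtering. The sketch below smooths a sequence of per-frame class-probability vectors so that a single-frame spike does not flip the predicted label, while a sustained change still comes through after a short delay. The smoothing factor `alpha` and the two-label setup are assumptions; because the filter is causal, it trades a little latency for stability, which may or may not be acceptable depending on the application.

```python
def smooth_probabilities(prob_frames, alpha=0.3):
    """Exponential moving average over per-frame class-probability vectors.

    Smaller alpha means heavier smoothing (and more lag).
    """
    smoothed = []
    state = None
    for probs in prob_frames:
        if state is None:
            state = list(probs)
        else:
            state = [alpha * p + (1 - alpha) * s for p, s in zip(probs, state)]
        smoothed.append(state)
    return smoothed


def argmax(xs):
    return max(range(len(xs)), key=xs.__getitem__)


# Usage: a one-frame "angry" spike inside otherwise neutral speech.
LABELS = ("neutral", "angry")
frames = [[0.9, 0.1]] * 5 + [[0.2, 0.8]] + [[0.9, 0.1]] * 4
print([LABELS[argmax(p)] for p in frames])                        # 'angry' at frame 5
print([LABELS[argmax(p)] for p in smooth_probabilities(frames)])  # all 'neutral'
```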

Integration with Natural Language Processing

The combination of speech emotion recognition with natural language processing creates powerful multimodal systems that understand both the semantic content and emotional context of spoken communication. This integration enables more sophisticated interpretation of human intent and feeling, as emotional tone often modifies or contradicts the literal meaning of spoken words.

Sentiment analysis of transcribed speech provides additional context that can improve emotion recognition accuracy. When vocal indicators are ambiguous, the sentiment of the words can help disambiguate the speaker's emotional state. Conversely, vocal emotion can help flag cases where speakers are being sarcastic, more speculatively deceptive, or expressing emotions that contradict their word choice.
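One straightforward way to combine the two signals is late fusion: each modality produces its own probability distribution over the same emotion labels, and the system takes a weighted average. The sketch below assumes hypothetical outputs from an acoustic model and a text sentiment model, and an acoustic weight of 0.6 chosen for illustration; in practice the weight would be tuned on validation data or replaced by a learned fusion layer.

```python
EMOTIONS = ("angry", "happy", "neutral", "sad")


def late_fusion(acoustic_probs, text_probs, acoustic_weight=0.6):
    """Weighted average of two probability distributions over the same labels."""
    w = acoustic_weight
    fused = {e: w * acoustic_probs[e] + (1 - w) * text_probs[e] for e in EMOTIONS}
    return max(fused, key=fused.get), fused


# Usage: the voice alone is ambiguous between high-arousal emotions,
# but the words tip the decision.
acoustic = {"angry": 0.4, "happy": 0.4, "neutral": 0.15, "sad": 0.05}
text = {"angry": 0.7, "happy": 0.05, "neutral": 0.2, "sad": 0.05}
label, fused = late_fusion(acoustic, text)
print(label)  # angry
```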

Some advanced systems now incorporate large language models that relate spoken content to emotional expression, enabling more nuanced interpretation of complex emotional communication. Such models may pick up subtle cues such as passive aggression, hidden frustration, or suppressed excitement that purely acoustic analysis can miss.

Performance Metrics and Evaluation Methods

Evaluating the performance of emotion recognition systems requires sophisticated metrics that capture both accuracy and practical utility. Traditional classification metrics such as precision, recall, and F1-score provide basic performance indicators, but emotion recognition presents unique challenges that require specialized evaluation approaches. The subjective nature of emotional perception means that even human annotators often disagree on emotional labels, making perfect accuracy an unrealistic goal.
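Because annotators disagree, it is common to measure how much they agree beyond chance before treating their labels as ground truth. Cohen's kappa does this for two annotators: kappa = (p_o - p_e) / (1 - p_e), where p_o is the observed agreement and p_e the agreement expected if each annotator labelled at random with their own label frequencies. The sketch below is a plain implementation with invented labels; for more than two annotators, measures such as Fleiss' kappa or Krippendorff's alpha are used instead.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Chance-corrected agreement between two annotators' label sequences."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two equal-length, non-empty label lists")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    count_a, count_b = Counter(labels_a), Counter(labels_b)
    expected = sum(count_a[c] * count_b[c] for c in count_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one identical label throughout
    return (observed - expected) / (1 - expected)


# Usage: two hypothetical annotators labelling the same eight clips.
a = ["angry", "angry", "happy", "happy", "sad", "sad", "neutral", "neutral"]
b = ["angry", "happy", "happy", "happy", "sad", "neutral", "neutral", "neutral"]
print(round(cohens_kappa(a, b), 3))  # 0.667
```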

Confusion matrices reveal patterns of misclassification that can inform system improvements and identify which emotions are most easily confused. Understanding these patterns helps developers focus optimization efforts on the most problematic emotion pairs and guides the selection of appropriate emotional categories for specific applications.
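The sketch below builds a confusion matrix (rows are true labels, columns are predictions), derives per-class F1 scores from it, and reports the most frequently confused pair of emotions. The labels and predictions are toy data invented for illustration; with real data, the same computation points to the emotion pairs that most need attention.

```python
def confusion_matrix(y_true, y_pred, labels):
    """Counts with rows = true class and columns = predicted class."""
    index = {label: i for i, label in enumerate(labels)}
    m = [[0] * len(labels) for _ in labels]
    for t, p in zip(y_true, y_pred):
        m[index[t]][index[p]] += 1
    return m


def per_class_f1(matrix):
    """F1 score for each class, read off the confusion matrix."""
    k = len(matrix)
    scores = []
    for c in range(k):
        tp = matrix[c][c]
        fp = sum(matrix[r][c] for r in range(k)) - tp  # predicted c, actually something else
        fn = sum(matrix[c]) - tp                       # actually c, predicted something else
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        scores.append(f1)
    return scores


def most_confused_pair(matrix, labels):
    """(count, true label, predicted label) for the largest off-diagonal cell."""
    k = len(matrix)
    return max((matrix[i][j], labels[i], labels[j])
               for i in range(k) for j in range(k) if i != j)


# Usage with toy data: "sad" is often mistaken for "neutral".
labels = ["angry", "happy", "neutral", "sad"]
y_true = ["angry"] * 4 + ["happy"] * 4 + ["neutral"] * 4 + ["sad"] * 4
y_pred = ["angry", "angry", "angry", "happy", "happy", "happy", "happy", "happy",
          "neutral", "neutral", "sad", "neutral", "sad", "neutral", "neutral", "sad"]
cm = confusion_matrix(y_true, y_pred, labels)
f1 = per_class_f1(cm)
print(most_confused_pair(cm, labels))  # (2, 'sad', 'neutral')
print(round(sum(f1) / len(f1), 3))     # macro-F1: 0.746
```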

Real-world evaluation involves testing systems in naturalistic conditions with spontaneous emotional expressions rather than acted emotions typically found in laboratory datasets. This evaluation approach better reflects actual deployment performance and reveals challenges that may not be apparent in controlled experimental conditions.

Future Directions and Emerging Technologies

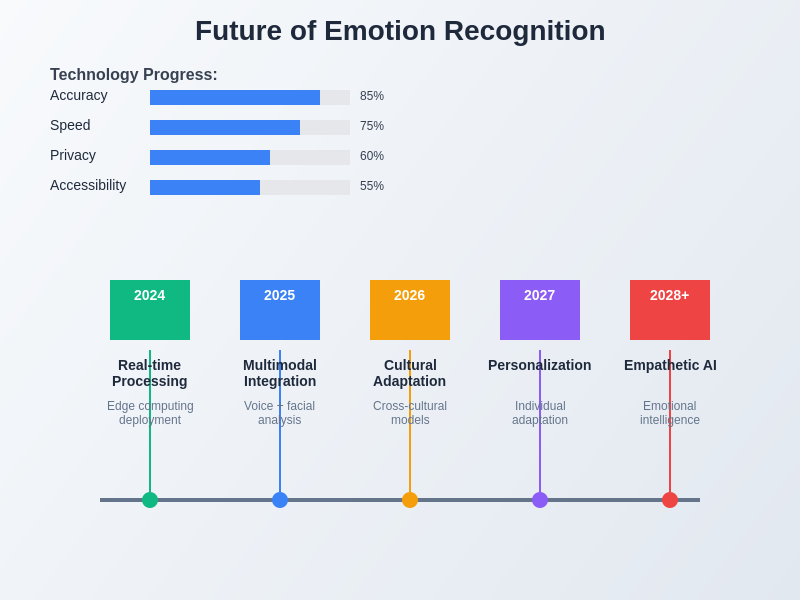

The future of speech emotion recognition promises exciting developments in several key areas that will significantly expand capabilities and applications. Real-time processing improvements will enable more responsive systems that can provide immediate feedback and adaptation based on detected emotional states. Edge computing implementations will allow emotion recognition to operate on mobile devices and embedded systems without requiring cloud connectivity.

Multimodal emotion recognition represents a particularly promising direction, combining speech analysis with facial expression detection, physiological monitoring, and contextual information to create more robust and accurate emotional assessment. These comprehensive systems will provide richer understanding of human emotional states by leveraging multiple sources of emotional information.

Personalized emotion recognition models that adapt to individual emotional expression patterns will improve accuracy and user experience. These systems will learn user-specific emotional signatures and adjust their interpretation accordingly, accounting for individual differences in vocal emotional expression.
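A simple, commonly used form of this personalization is per-speaker feature normalization: each speaker's features are expressed relative to that speaker's own baseline, so that a naturally high-pitched voice is not mistaken for an excited one. The sketch below keeps a hypothetical enrollment history per speaker and z-normalizes new feature vectors against it. The class name and the single-feature (mean F0) example are assumptions; learned personalization, such as fine-tuning on a user's own data, goes further than this.

```python
import statistics


class SpeakerNormalizer:
    """Z-normalizes feature vectors against each speaker's own enrollment data."""

    def __init__(self):
        self._history = {}  # speaker_id -> list of feature vectors

    def enroll(self, speaker_id, feature_rows):
        self._history.setdefault(speaker_id, []).extend(feature_rows)

    def normalize(self, speaker_id, row):
        rows = self._history[speaker_id]
        normalized = []
        for d, value in enumerate(row):
            column = [r[d] for r in rows]
            mean = statistics.mean(column)
            std = statistics.pstdev(column)
            # A feature that never varies for this speaker carries no relative information.
            normalized.append((value - mean) / std if std > 0 else 0.0)
        return normalized


# Usage: mean F0 (Hz) per utterance for two hypothetical speakers.
norm = SpeakerNormalizer()
norm.enroll("speaker_low", [[100.0], [110.0], [120.0]])
norm.enroll("speaker_high", [[200.0], [220.0], [240.0]])
print(norm.normalize("speaker_low", [120.0]))   # same relative elevation...
print(norm.normalize("speaker_high", [240.0]))  # ...despite a 120 Hz absolute difference
```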

Advances in few-shot learning and transfer learning will enable rapid adaptation to new languages, cultures, and emotional categories without requiring extensive retraining. This capability will accelerate the global deployment of emotion recognition technology and improve accessibility across diverse populations. The convergence of these technological advances promises a future where emotional intelligence becomes a fundamental capability of artificial intelligence systems.

Industry Standards and Best Practices

The development of industry standards for emotion recognition technology has become increasingly important as deployment scales and applications diversify. Standardized evaluation protocols ensure consistent performance measurement across different systems and enable meaningful comparisons between competing approaches. These standards address dataset requirements, annotation guidelines, performance metrics, and testing procedures.

Best practices for data collection and annotation emphasize the importance of diverse, representative datasets that capture natural emotional expressions across different demographics, languages, and cultural contexts. Proper annotation requires trained human evaluators who understand emotional nuances and can provide consistent, reliable labels for training and evaluation.

Deployment guidelines address technical requirements for real-world implementation, including computational requirements, latency constraints, privacy protections, and integration considerations. These guidelines help organizations successfully implement emotion recognition technology while avoiding common pitfalls and ensuring optimal performance.

Economic Impact and Market Opportunities

The economic implications of speech emotion recognition technology extend across numerous sectors, creating new market opportunities and transforming existing business models. The global emotion recognition market has experienced rapid growth, driven by increasing demand for personalized customer experiences, mental health monitoring solutions, and intelligent human-computer interfaces.

Cost savings from improved customer service efficiency represent a significant economic benefit for organizations implementing emotion recognition technology. Automated emotional assessment can reduce call handling times, improve first-call resolution rates, and decrease customer churn through more responsive service delivery.

New business models emerge from the capability to understand customer emotions at scale, enabling personalized product recommendations, dynamic pricing strategies, and targeted marketing approaches based on emotional preferences and responses. These applications create competitive advantages for early adopters while establishing new revenue streams.

Research Frontiers and Academic Developments

Academic research in speech emotion recognition continues to push the boundaries of what is possible through innovative algorithmic approaches and novel application areas. Researchers are exploring the intersection of emotion recognition with other AI capabilities such as dialogue systems, recommendation engines, and autonomous agents to create more emotionally intelligent artificial systems.

Cross-disciplinary collaboration between computer scientists, psychologists, linguists, and neuroscientists has enriched understanding of the relationship between vocal patterns and emotional states. This collaboration has led to more sophisticated models that incorporate psychological theories of emotion and linguistic insights into emotional expression.

Open-source research initiatives and shared datasets have accelerated progress by enabling broader participation in emotion recognition research and facilitating reproducible studies. These collaborative efforts help establish common benchmarks and drive collective advancement in the field.

The integration of emotion recognition technology into artificial intelligence systems represents a fundamental step toward creating more empathetic and responsive machines that can better understand and serve human needs. As this technology continues to evolve and mature, we can expect to see increasingly sophisticated applications that enhance human-computer interaction while respecting privacy and ethical considerations. The future promises AI systems that not only understand what we say but truly comprehend how we feel, opening new possibilities for technology that serves humanity with genuine emotional intelligence.

Disclaimer

This article is for informational purposes only and does not constitute professional advice. The views expressed are based on current understanding of emotion recognition technologies and their applications. Readers should conduct their own research and consider their specific requirements when implementing emotion recognition systems. The effectiveness and accuracy of emotion recognition technology may vary depending on specific use cases, cultural contexts, and individual differences in emotional expression.