Artificial intelligence and embedded systems now meet on the ESP32, where machine learning applications bring AI inference directly to the edge of the network. Pairing a WiFi-enabled microcontroller with compact machine learning models changes how intelligent IoT applications are built, enabling real-time decision-making in devices that were previously limited to simple sensor data collection and basic automation tasks.

The ESP32 ecosystem has evolved far beyond its original conception as a simple WiFi and Bluetooth module, transforming into a powerful platform capable of executing complex neural networks while maintaining the low power consumption and cost-effectiveness that make it ideal for widespread IoT deployment.

The ESP32 Revolution in Edge Computing

The ESP32 microcontroller family has changed edge computing by putting capable processing into a compact, affordable package. Unlike traditional embedded designs that required separate components for connectivity, processing, and storage, the original ESP32 integrates two processor cores, WiFi and Bluetooth connectivity, and about 520 KB of on-chip SRAM, which is enough to run small machine learning models directly on the device. This integration removes the need for constant cloud connectivity while reducing latency, improving privacy, and enabling autonomous operation in environments where network connectivity may be unreliable or unavailable.

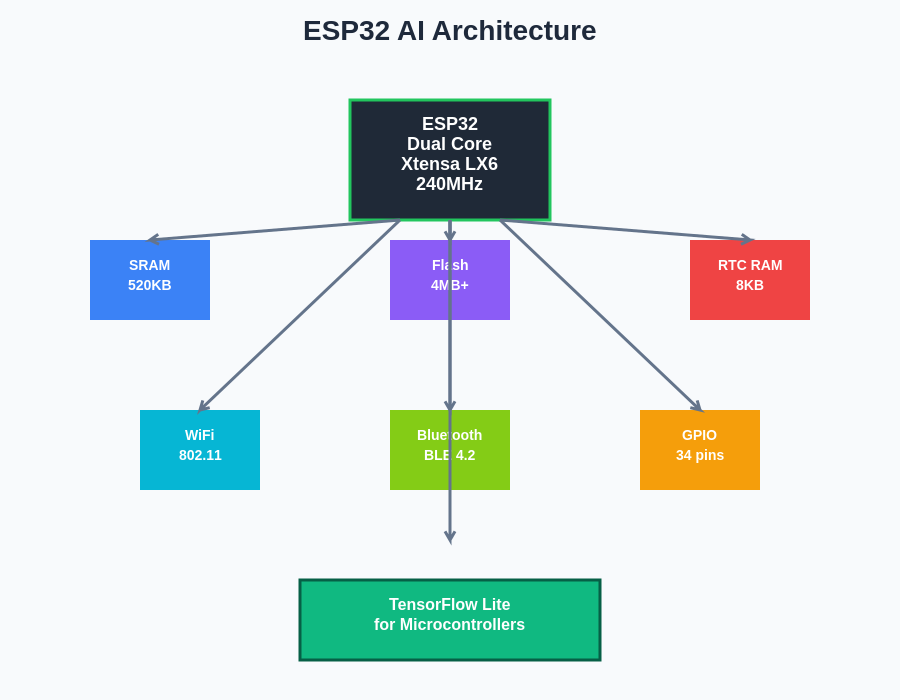

The original ESP32 suits AI applications through its two Xtensa LX6 cores, which can handle different aspects of a machine learning workload at the same time. One core can manage network communications and system maintenance tasks while the other is dedicated to neural network inference, a division of labor that keeps inference timing predictable while the network stack stays responsive. This parallelism, combined with hardware support for certain mathematical operations such as single-precision floating point, enables the execution of surprisingly capable machine learning models despite the constraints of embedded hardware.

The comprehensive architecture of the ESP32 demonstrates how memory management, processing cores, and connectivity modules work together to create a powerful platform for edge AI applications. The strategic allocation of resources across different system components ensures optimal performance for machine learning workloads while maintaining efficient power consumption.

Machine Learning Frameworks for ESP32

The development of machine learning applications for ESP32 has been greatly facilitated by the adaptation of popular frameworks specifically optimized for microcontroller environments. TensorFlow Lite for Microcontrollers stands as the most prominent solution, providing developers with the ability to deploy models trained using full TensorFlow on desktop or cloud environments directly to ESP32 devices. This framework handles the complex optimization required to compress neural networks into formats suitable for execution on devices with limited memory and processing power, while maintaining acceptable inference accuracy for most practical applications.
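TensorFlow Lite for Microcontrollers projects commonly compile the converted model into the firmware as a C byte array. The following is a minimal host-side sketch of that packaging step, not an official tool: the function name `to_c_array`, the variable name `g_model`, and the placeholder bytes are assumptions made for illustration.

```python
def to_c_array(data: bytes, var_name: str = "g_model", per_line: int = 12) -> str:
    """Render raw bytes as a C array definition, similar to `xxd -i` output."""
    lines = []
    for start in range(0, len(data), per_line):
        chunk = data[start:start + per_line]
        lines.append("  " + ", ".join(f"0x{b:02x}" for b in chunk) + ",")
    body = "\n".join(lines)
    return (
        f"const unsigned char {var_name}[] = {{\n{body}\n}};\n"
        f"const unsigned int {var_name}_len = {len(data)};\n"
    )


# Placeholder bytes stand in for a real converted model file (hypothetical).
fake_model = bytes(range(16))
print(to_c_array(fake_model))
```

In a real project the input would be the bytes of the converted model file, and the generated source would be compiled into the firmware image. Alignment attributes and build integration are left out of this sketch.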

The process of adapting machine learning models for ESP32 deployment involves careful consideration of model architecture, quantization techniques, and memory management strategies that balance computational efficiency with inference accuracy.

Edge Impulse has emerged as another significant platform in the ESP32 machine learning ecosystem, offering an end-to-end solution for developing, training, and deploying machine learning models specifically designed for edge devices. This platform simplifies the complex process of data collection, feature engineering, model training, and deployment optimization, making machine learning accessible to developers who may not have extensive expertise in neural network design. The platform’s integration with ESP32 development environments streamlines the workflow from initial concept to production deployment, reducing development time and improving the reliability of deployed solutions.

WiFi Connectivity and Distributed Intelligence

The WiFi capabilities of the ESP32 enable sophisticated distributed intelligence architectures where multiple devices can collaborate to solve complex problems while maintaining individual autonomy. This connectivity allows ESP32 devices to participate in mesh networks, share computational loads, and coordinate responses to environmental changes across multiple sensors and actuators. The combination of local machine learning capabilities with selective cloud connectivity creates hybrid systems that leverage the best aspects of both edge and cloud computing paradigms.

The implementation of WiFi-enabled machine learning on ESP32 devices opens up possibilities for dynamic model updates, collaborative learning scenarios, and real-time performance monitoring that were previously impossible in standalone embedded systems. Devices can download updated models when connectivity is available, share learned insights with other devices in the network, and adapt their behavior based on collective intelligence gathered from the entire system. This capability is particularly valuable in applications such as smart agriculture, environmental monitoring, and industrial automation where multiple sensors must work together to optimize system performance.

Real-World Applications and Use Cases

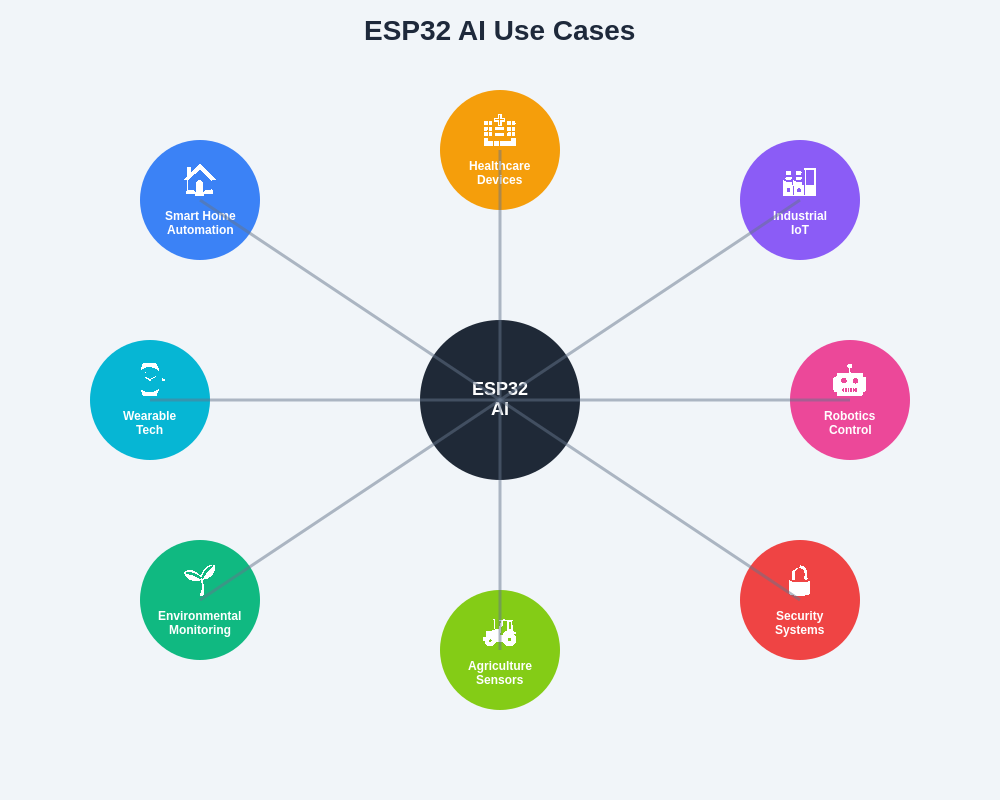

The practical applications of ESP32-based machine learning span numerous industries and use cases, demonstrating the versatility and power of this technology combination. In smart home applications, ESP32 devices equipped with machine learning capabilities can perform sophisticated analysis of audio patterns for voice recognition, image classification for security systems, and behavioral pattern recognition for energy optimization. These applications benefit from the ability to process sensitive data locally, reducing privacy concerns while providing immediate response to detected events.

Industrial IoT applications have embraced ESP32 machine learning for predictive maintenance, quality control, and process optimization scenarios where real-time decision-making is critical for operational efficiency. Manufacturing environments deploy ESP32 devices to monitor equipment vibrations, analyze acoustic signatures of machinery, and detect anomalies in production processes before they result in costly failures or quality issues. The combination of machine learning inference with WiFi connectivity enables these devices to coordinate maintenance schedules, share diagnostic information, and continuously improve their detection capabilities through collaborative learning.
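To make the vibration-monitoring idea concrete, here is a deliberately simple sketch. It swaps the neural network for a statistical baseline (a z-score on the RMS amplitude of each sample window), so it illustrates the detection flow rather than any particular deployed model. The class name, the threshold of 3.0, and the synthetic data are all assumptions.

```python
import math
import statistics


def rms(window):
    """Root-mean-square amplitude of one window of accelerometer samples."""
    return math.sqrt(sum(x * x for x in window) / len(window))


class VibrationMonitor:
    """Flags windows whose RMS deviates strongly from a healthy baseline."""

    def __init__(self, baseline_windows, threshold=3.0):
        values = [rms(w) for w in baseline_windows]
        self.mean = statistics.mean(values)
        self.std = statistics.stdev(values)
        self.threshold = threshold  # z-score cutoff (assumed value)

    def is_anomalous(self, window):
        z = abs(rms(window) - self.mean) / self.std
        return z > self.threshold


# Synthetic data: healthy windows vary slightly in amplitude, the fault is far larger.
healthy = [[math.sin(i * 0.3) * (1.0 + 0.01 * k) for i in range(64)] for k in range(10)]
monitor = VibrationMonitor(healthy)
faulty = [5.0 * math.sin(i * 0.3) for i in range(64)]
print(monitor.is_anomalous(healthy[0]), monitor.is_anomalous(faulty))
```

A learned model would replace the z-score test with an inference call, but the surrounding structure (collect a window, extract a feature, compare against a healthy baseline) stays much the same.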

Environmental monitoring represents another significant application area where ESP32 machine learning devices excel at processing complex sensor data to identify patterns, predict weather changes, and detect environmental hazards. These systems can analyze air quality data, monitor wildlife behavior through acoustic analysis, and track environmental changes over time while operating autonomously in remote locations with minimal power consumption.

The diverse application ecosystem surrounding ESP32 AI demonstrates the versatility of edge machine learning across multiple industries and use cases. From smart home automation to industrial IoT, each application leverages the unique combination of local processing power, WiFi connectivity, and energy efficiency that makes ESP32 devices ideal for intelligent edge computing solutions.

Development Tools and Programming Environments

The development ecosystem for ESP32 machine learning has matured significantly, offering multiple programming environments and toolchains that cater to different developer preferences and project requirements. The Arduino IDE remains popular for its simplicity and extensive library support, while ESP-IDF provides more advanced developers with direct access to hardware features and optimization opportunities. PlatformIO has emerged as a powerful alternative that combines the best aspects of both approaches, offering professional development features while maintaining compatibility with Arduino libraries and ESP-IDF components.

The integration of these development environments with machine learning frameworks has been streamlined through specialized plugins and extensions that automate many of the complex configuration steps required for successful deployment.

The debugging and optimization of machine learning applications on ESP32 requires specialized tools and techniques that account for the unique constraints of embedded systems. Memory profiling tools help developers optimize model size and memory usage, while performance monitoring utilities provide insights into inference timing and power consumption. These tools are essential for creating production-ready applications that meet the strict requirements of embedded deployment environments.

Model Optimization and Deployment Strategies

Successful deployment of machine learning models on ESP32 devices requires careful attention to optimization strategies that balance model accuracy with resource constraints. Quantization techniques play a crucial role in reducing model size and improving inference speed by converting floating-point weights and activations to lower-precision integer representations. This process can achieve significant reductions in memory usage and computational requirements while maintaining acceptable accuracy for most practical applications.
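The float-to-integer conversion described above can be sketched with generic affine (scale and zero-point) quantization to 8-bit integers. This illustrates the arithmetic only and is not the exact procedure of any particular framework; the function names and sample weights are assumptions.

```python
def quantize_params(min_val, max_val, qmin=-128, qmax=127):
    """Compute scale and zero point so that [min_val, max_val] maps onto int8."""
    # The representable range must include zero so that 0.0 maps exactly.
    min_val = min(min_val, 0.0)
    max_val = max(max_val, 0.0)
    scale = (max_val - min_val) / (qmax - qmin)
    if scale == 0.0:
        scale = 1.0  # all-zero tensor: any scale works
    zero_point = round(qmin - min_val / scale)
    zero_point = max(qmin, min(qmax, zero_point))
    return scale, zero_point


def quantize(values, scale, zero_point, qmin=-128, qmax=127):
    """Map floats to clamped int8 values."""
    return [max(qmin, min(qmax, round(v / scale) + zero_point)) for v in values]


def dequantize(qvalues, scale, zero_point):
    """Recover approximate floats from int8 values."""
    return [(q - zero_point) * scale for q in qvalues]


weights = [-0.8, -0.1, 0.0, 0.3, 1.2]
scale, zero_point = quantize_params(min(weights), max(weights))
q = quantize(weights, scale, zero_point)
restored = dequantize(q, scale, zero_point)
print("int8 values:", q)
print("max round-trip error:", max(abs(a - b) for a, b in zip(weights, restored)))
print("bytes as float32:", len(weights) * 4, "bytes as int8:", len(weights))
```

Each weight shrinks from four bytes to one, and the round-trip error stays on the order of one quantization step, which is why accuracy often degrades only slightly.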

Pruning is another important optimization technique: it removes unnecessary connections and neurons from a neural network, further reducing model size and computational complexity. Quantizing 32-bit floating-point weights to 8-bit integers alone cuts weight storage by about 75%, and adding pruning can often bring the total reduction to 80-90% relative to the original floating-point model, provided the pruned weights are stored in a compressed or sparse form. Reductions of this size make it possible to deploy sophisticated neural networks on devices with severe memory constraints.
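As a rough illustration of pruning and of how the savings add up, the sketch below applies magnitude pruning (zeroing the smallest weights) and then computes a best-case size reduction. The helper names are hypothetical, and the size estimate assumes pruned weights cost nothing to store, which ignores sparse-index and metadata overhead.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the given fraction of weights with the smallest magnitudes."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be in [0, 1]")
    n_prune = int(len(weights) * sparsity)
    # Indices ordered by absolute value, smallest first.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


def size_reduction(n_weights, sparsity, bytes_before=4, bytes_after=1):
    """Best-case fractional size reduction after quantization plus pruning."""
    before = n_weights * bytes_before
    after = n_weights * (1.0 - sparsity) * bytes_after
    return 1.0 - after / before


print(magnitude_prune([0.9, -0.05, 0.4, 0.01], 0.5))
print(size_reduction(1000, 0.5))
```

Under these assumptions, moving from 4-byte floats to 1-byte integers and pruning half the weights gives a reduction of 87.5%. Real savings depend on how the sparse weights are actually encoded.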

The deployment process itself involves careful consideration of memory management strategies, including the use of external flash storage for model weights and dynamic loading of model components based on current application requirements. Advanced deployment strategies may involve model partitioning across multiple devices or dynamic model selection based on current operating conditions and available resources.

Power Management and Battery Life Optimization

Power consumption represents a critical consideration for ESP32 machine learning applications, particularly in battery-powered IoT devices where extended operation without maintenance is essential. The ESP32’s built-in power management features, including deep sleep modes and dynamic frequency scaling, can be leveraged to minimize energy consumption during periods of inactivity while maintaining rapid response to important events.

Machine learning inference timing must be carefully coordinated with power management strategies to achieve optimal battery life. Techniques such as duty cycling, where devices alternate between active inference periods and low-power sleep modes, can extend battery life significantly while maintaining adequate responsiveness for most applications. The scheduling of inference operations can be optimized based on predicted event patterns, environmental conditions, and battery status to maximize operational lifetime.
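The effect of duty cycling on battery life can be estimated with simple arithmetic. The sketch below averages current draw over one wake/sleep period; the current figures and battery capacity are illustrative placeholders rather than measured or datasheet values, and the estimate ignores self-discharge and regulator losses.

```python
def average_current_ma(active_ma, sleep_ma, active_s, period_s):
    """Time-weighted average current over one wake/sleep cycle."""
    if not 0 < active_s <= period_s:
        raise ValueError("active_s must be positive and no longer than period_s")
    sleep_s = period_s - active_s
    return (active_ma * active_s + sleep_ma * sleep_s) / period_s


def battery_life_hours(capacity_mah, avg_ma):
    """Idealized runtime: capacity divided by average draw."""
    return capacity_mah / avg_ma


# Assumed numbers: 1 s of inference at 100 mA every 60 s, 0.1 mA while asleep.
avg = average_current_ma(active_ma=100.0, sleep_ma=0.1, active_s=1.0, period_s=60.0)
hours = battery_life_hours(capacity_mah=2000.0, avg_ma=avg)
print(f"average current: {avg:.3f} mA, estimated life: {hours / 24:.1f} days")
```

Because the active current dominates the average, shortening the inference window or lengthening the period usually matters more than shaving the sleep current.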

Advanced power management strategies may involve adaptive model complexity, where devices automatically switch between different model variants based on current battery levels and performance requirements. This approach ensures that critical functionality remains available even as battery power decreases, while less essential features are gradually disabled to extend operational lifetime.
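One way to express adaptive model complexity is a small lookup that picks the most capable model the current battery level allows. The variant names, thresholds, and relative costs below are hypothetical values chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelVariant:
    name: str
    min_battery_pct: float  # lowest battery level at which this variant may run
    relative_cost: float    # energy per inference relative to the full model


# Hypothetical variants, from most to least capable.
VARIANTS = [
    ModelVariant("full", 60.0, 1.0),
    ModelVariant("reduced", 25.0, 0.4),
    ModelVariant("minimal", 0.0, 0.1),
]


def select_variant(battery_pct, variants=VARIANTS):
    """Pick the most capable variant permitted at the current battery level."""
    eligible = [v for v in variants if battery_pct >= v.min_battery_pct]
    if not eligible:
        raise ValueError("no variant is allowed at this battery level")
    return max(eligible, key=lambda v: v.min_battery_pct)


for level in (80.0, 30.0, 5.0):
    print(level, select_variant(level).name)
```

A practical implementation would likely add hysteresis around each threshold so the device does not flip between variants when the battery reading fluctuates.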

Security and Privacy Considerations

The implementation of machine learning on ESP32 devices introduces important security and privacy considerations that must be addressed to ensure safe and reliable operation. Local processing of sensitive data provides inherent privacy benefits by eliminating the need to transmit raw sensor data to cloud services, but it also requires careful implementation of security measures to protect against unauthorized access and tampering.

Secure boot mechanisms and encrypted firmware storage help protect against malicious modifications to deployed devices, while secure communication protocols ensure that any necessary data transmission occurs safely. The implementation of over-the-air update mechanisms requires particular attention to security, as these systems provide potential attack vectors that could compromise device integrity.
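A core part of a safe over-the-air update is refusing any payload whose authenticity cannot be verified. The sketch below shows that check with an HMAC-SHA256 tag and a shared secret. This is a simplifying assumption: production systems more often use asymmetric signatures so that devices hold no signing key. The function names and the key are hypothetical.

```python
import hashlib
import hmac


def sign_update(payload: bytes, key: bytes) -> bytes:
    """Server side: compute an authentication tag for a model or firmware blob."""
    return hmac.new(key, payload, hashlib.sha256).digest()


def verify_update(payload: bytes, tag: bytes, key: bytes) -> bool:
    """Device side: accept the blob only if the tag matches (constant-time compare)."""
    expected = hmac.new(key, payload, hashlib.sha256).digest()
    return hmac.compare_digest(expected, tag)


key = b"example-shared-secret"          # placeholder, never hard-code real keys
update = b"new model bytes"             # stands in for a downloaded model
tag = sign_update(update, key)
print(verify_update(update, tag, key))             # untampered payload
print(verify_update(update + b"\x00", tag, key))   # modified payload
```

The device should write the update to its active slot only after verification succeeds, and keep the previous version available for rollback.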

Privacy-preserving machine learning techniques, such as federated learning and differential privacy, can be adapted for ESP32 environments to enable collaborative learning while protecting individual data privacy. These approaches allow devices to contribute to collective intelligence without exposing sensitive local information, creating systems that benefit from shared knowledge while maintaining strict privacy protections.
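The aggregation step at the heart of federated learning can be sketched in a few lines. Each device reports locally updated weights together with the number of samples it trained on, and a coordinator computes the sample-weighted mean. This sketch covers only the averaging, which would typically run on a gateway or server; it does not implement on-device training or differential privacy, and the function name is an assumption.

```python
def federated_average(updates):
    """Combine (weights, n_samples) pairs into a sample-weighted mean weight vector."""
    total = sum(n for _, n in updates)
    if total <= 0:
        raise ValueError("at least one sample is required")
    length = len(updates[0][0])
    if any(len(w) != length for w, _ in updates):
        raise ValueError("all weight vectors must have the same length")
    return [sum(w[i] * n for w, n in updates) / total for i in range(length)]


# Two hypothetical devices: the second saw three times as much data.
device_updates = [([1.0, 2.0], 1), ([3.0, 4.0], 3)]
print(federated_average(device_updates))
```

Only weight vectors leave each device, never the raw sensor data, which is the property that makes the approach attractive for privacy-sensitive deployments.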

Integration with Cloud Services and Edge Computing

While ESP32 devices excel at local machine learning inference, their integration with cloud services and edge computing infrastructure creates powerful hybrid systems that combine the benefits of local processing with the scalability and advanced capabilities of cloud-based AI services. This integration enables sophisticated applications where ESP32 devices handle real-time decision-making while cloud services provide model training, performance analytics, and long-term data storage.

The selective use of cloud connectivity allows ESP32 devices to operate autonomously during network outages while benefiting from cloud-based intelligence when connectivity is available. This approach is particularly valuable in applications such as smart agriculture, where devices must continue operating during network disruptions while taking advantage of weather prediction services and agricultural expertise available through cloud platforms.
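Operating through network outages usually comes down to a store-and-forward buffer: results are queued locally and uploaded once a connection returns. The following is a minimal sketch of that pattern; the class name and the `send` and `is_connected` callbacks are hypothetical stand-ins for a real uplink.

```python
from collections import deque


class StoreAndForward:
    """Bounded buffer that keeps the newest results while the device is offline."""

    def __init__(self, capacity):
        self._queue = deque(maxlen=capacity)  # oldest entries are dropped when full

    def record(self, result):
        self._queue.append(result)

    def flush(self, send, is_connected):
        """Upload buffered results in order while connected; return the count sent."""
        sent = 0
        while self._queue and is_connected():
            if not send(self._queue[0]):
                break  # keep the item for the next attempt
            self._queue.popleft()
            sent += 1
        return sent

    def __len__(self):
        return len(self._queue)


buffer = StoreAndForward(capacity=3)
for reading in range(5):          # five results arrive while offline
    buffer.record(reading)
uploaded = []
count = buffer.flush(send=lambda r: uploaded.append(r) or True, is_connected=lambda: True)
print(count, uploaded)
```

The bounded capacity reflects the limited memory on the device: when an outage outlasts the buffer, the oldest results are discarded in favor of the newest.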

Edge computing architectures can leverage ESP32 devices as distributed sensors and actuators within larger intelligent systems, where local machine learning capabilities reduce bandwidth requirements and improve system responsiveness. These architectures enable sophisticated applications such as smart city systems, industrial automation networks, and environmental monitoring systems that operate across multiple scales and time horizons.

Performance Benchmarking and Optimization

The evaluation of machine learning performance on ESP32 devices requires specialized benchmarking approaches that account for the unique characteristics of embedded systems. Traditional machine learning metrics such as accuracy and F1 scores remain important, but they must be considered alongside embedded-specific metrics such as inference latency, memory usage, and power consumption.
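Latency is best reported as a distribution rather than a single number. The sketch below shows one way to structure such a measurement on a development machine: warm-up runs, repeated timing, then median and tail statistics. The `benchmark` helper and the dummy workload are assumptions, and on the device itself the same structure would use a hardware or RTOS timer instead of `time.perf_counter`.

```python
import statistics
import time


def benchmark(fn, runs=50, warmup=5):
    """Time repeated calls to fn and summarize latency in milliseconds."""
    for _ in range(warmup):
        fn()  # discard warm-up runs so caches and lazy setup do not skew results
    samples_ms = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        samples_ms.append((time.perf_counter() - start) * 1000.0)
    samples_ms.sort()
    p95_index = min(len(samples_ms) - 1, int(0.95 * len(samples_ms)))
    return {
        "median_ms": statistics.median(samples_ms),
        "p95_ms": samples_ms[p95_index],
        "max_ms": samples_ms[-1],
    }


def dummy_inference():
    """Stand-in workload: a dot product in place of a real model call."""
    a = [0.5] * 256
    b = [0.25] * 256
    return sum(x * y for x, y in zip(a, b))


print(benchmark(dummy_inference))
```

Recording the tail latency alongside the median matters for real-time applications, where an occasional slow inference can be more damaging than a slightly higher average.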

Benchmarking frameworks specifically designed for embedded machine learning provide standardized methodologies for comparing different models, optimization techniques, and deployment strategies. These frameworks help developers make informed decisions about trade-offs between accuracy, performance, and resource usage while ensuring that deployed systems meet their operational requirements.

Performance optimization often involves iterative refinement of model architecture, quantization parameters, and deployment configurations based on empirical testing with representative data sets and operating conditions. This process requires careful documentation and version control to ensure that optimization efforts result in consistent improvements and that successful configurations can be reliably reproduced.

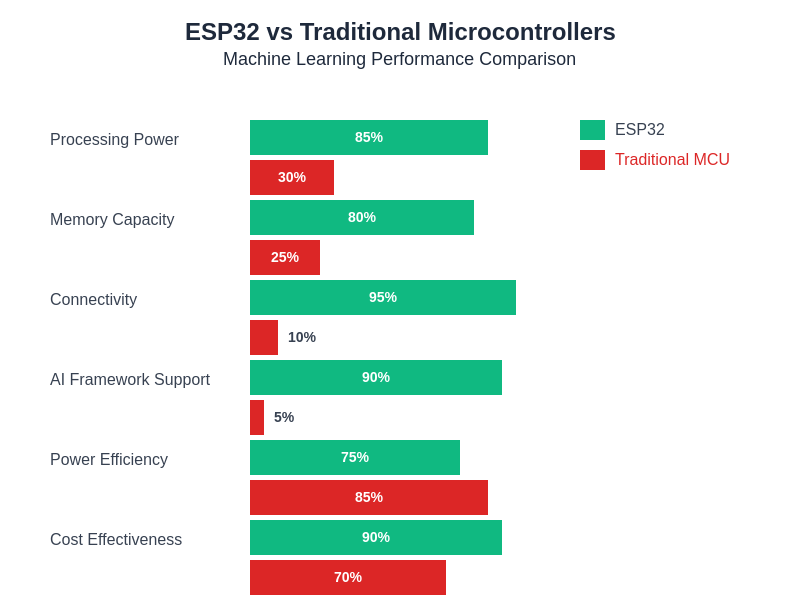

The advantages of the ESP32 over traditional microcontrollers become evident when comparing the metrics that matter for machine learning applications. Traditional microcontrollers may retain a slight edge in power efficiency, but the ESP32’s processing power, memory capacity, connectivity options, and AI framework support make it a strong choice for edge AI applications with heavier computational demands.

Future Developments and Emerging Trends

The future of ESP32 machine learning is being shaped by several emerging trends that promise to further expand the capabilities and applications of edge AI systems. Newer ESP32 variants add hardware aimed at these workloads, such as the vector instructions in the ESP32-S3 that accelerate neural network computation, along with improved memory architectures. These features will enable more sophisticated machine learning applications while maintaining the cost-effectiveness and power efficiency that make ESP32 devices attractive for IoT applications.

The development of more efficient machine learning algorithms specifically designed for embedded deployment continues to push the boundaries of what is possible on resource-constrained devices. Techniques such as neural architecture search and automated model optimization are being adapted for embedded environments, enabling the creation of models that are specifically optimized for ESP32 hardware capabilities.

Pairing ESP32 devices with external modems for other communication technologies, such as 5G for high-speed, low-latency links and LoRaWAN for long-range, low-power links, will create new opportunities for distributed intelligence applications that combine local processing with connectivity to cloud services and other intelligent systems. These developments will enable applications that were previously impractical due to connectivity limitations or bandwidth constraints.

The continued evolution of machine learning frameworks and development tools specifically designed for embedded systems will lower barriers to entry for developers and enable more sophisticated applications across a broader range of industries and use cases. This democratization of edge AI technology will accelerate innovation and lead to the development of intelligent systems that can operate effectively in environments and applications that were previously beyond the reach of artificial intelligence.

The ESP32 ecosystem represents a remarkable achievement in making artificial intelligence accessible and practical for a vast range of applications, from simple sensor monitoring to sophisticated autonomous systems. As the technology continues to evolve, we can expect to see even more impressive applications that push the boundaries of what is possible with edge AI while maintaining the simplicity, cost-effectiveness, and reliability that make ESP32 devices ideal for real-world deployment.

Disclaimer

This article is for informational purposes only and does not constitute professional advice. The information presented is based on current understanding of ESP32 capabilities and machine learning technologies. Readers should conduct their own research and testing when implementing ESP32-based machine learning solutions. Performance characteristics, compatibility, and functionality may vary depending on specific hardware configurations, software versions, and implementation details. Always consult official documentation and conduct thorough testing before deploying systems in production environments.