The modern landscape of machine learning deployment has evolved dramatically, with API services serving as the bridge between trained models and the applications that consume them. An early decision in that work is whether to build ML API services with FastAPI or Flask. The choice extends beyond framework preference: it affects performance, development velocity, scalability, and long-term maintenance, all of which shape how well an ML project holds up in production.

The selection between FastAPI and Flask represents more than a technical decision; it embodies a strategic choice that influences development workflows, team productivity, and the overall architecture of ML-powered applications that serve millions of users worldwide.

The Evolution of ML API Development

The journey from traditional web applications to machine learning API services has called for frameworks that can handle the particular demands of ML workloads, including heavy computation, complex data processing pipelines, and real-time inference. Flask, with its minimalist philosophy and extensive ecosystem, established itself as a go-to choice for Python web development, while FastAPI emerged as a modern, general-purpose API framework built around type hints and asynchronous I/O, addressing performance and developer-experience gaps that became apparent as API workloads, ML services among them, scaled to production.

The fundamental differences between these frameworks reflect broader shifts in software development methodologies, with FastAPI embracing modern Python features such as type hints, asynchronous programming, and automatic documentation generation, while Flask maintains its philosophy of simplicity and flexibility that has made it a beloved choice for countless web applications. Understanding these philosophical differences is crucial for making informed decisions about which framework best aligns with specific ML project requirements and organizational constraints.

Performance Architecture and Scalability

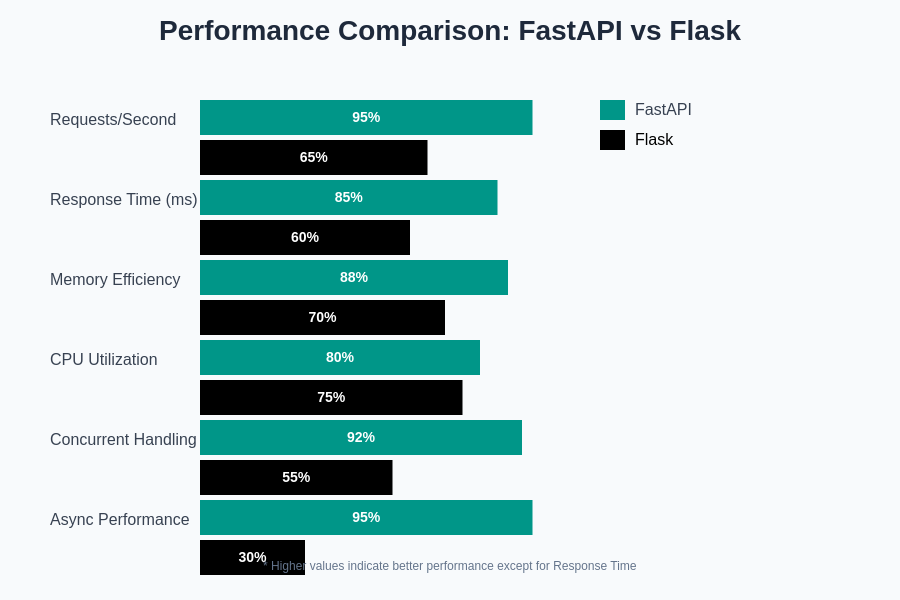

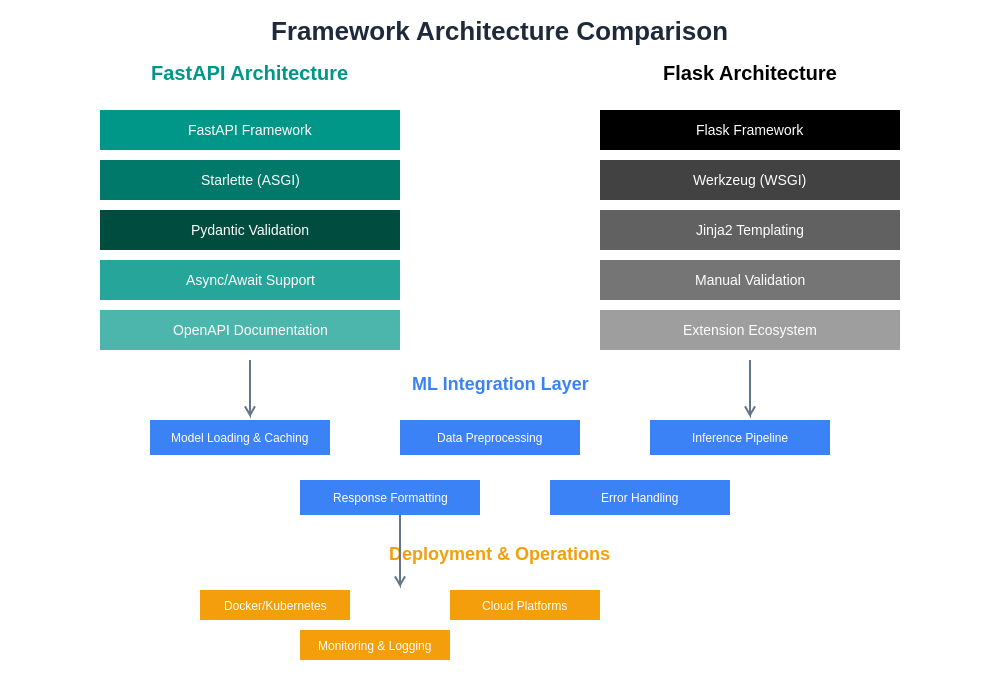

Performance considerations in ML API services extend beyond traditional web application metrics, encompassing model inference times, memory management, concurrent request handling, and the ability to efficiently process large datasets or complex model outputs. FastAPI’s foundation on Starlette and Pydantic provides inherent performance advantages through asynchronous request handling and efficient data validation, making it particularly well-suited for high-throughput ML applications that require rapid response times and concurrent processing capabilities.

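Flask’s synchronous, WSGI-based request model, in which each request occupies a worker thread or process until it finishes, is simpler to understand and debug, but it can become a bottleneck under high concurrency or when requests spend much of their time waiting on I/O. Flask 2.0 added support for async view functions, but each request still ties up its worker, so this does not deliver the concurrency of an ASGI framework. Flask’s maturity and ecosystem do offer ways to address these concerns, such as running multiple worker processes under a WSGI server like Gunicorn, though these often require additional configuration and architectural work that can increase system complexity.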
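To make the concurrency point concrete, the following stdlib-only sketch simulates three I/O-bound calls (for example, lookups in a remote feature store) with asyncio.sleep. Awaited together on an event loop, the waits overlap; awaited one after another, mimicking a worker that handles them in sequence, they add up. The function names and delays are illustrative assumptions, not measurements of either framework.

```python
import asyncio
import time


async def fetch_features(delay: float) -> float:
    # Stand-in for non-blocking I/O, e.g. a call to a feature store.
    await asyncio.sleep(delay)
    return delay


async def sequential(n: int, delay: float) -> float:
    start = time.perf_counter()
    for _ in range(n):
        await fetch_features(delay)  # each call waits for the previous one
    return time.perf_counter() - start


async def concurrent(n: int, delay: float) -> float:
    start = time.perf_counter()
    # gather schedules all calls at once, so their waits overlap on the event loop
    await asyncio.gather(*(fetch_features(delay) for _ in range(n)))
    return time.perf_counter() - start


if __name__ == "__main__":
    seq = asyncio.run(sequential(3, 0.2))
    conc = asyncio.run(concurrent(3, 0.2))
    print(f"sequential: {seq:.2f}s, concurrent: {conc:.2f}s")
```

The benefit only appears when the work is waiting rather than computing; a CPU-bound loop in place of asyncio.sleep would not overlap this way.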

The choice between these frameworks often hinges on specific performance requirements: FastAPI offers stronger out-of-the-box performance for concurrent, I/O-heavy workloads, such as real-time inference services that call out to feature stores or other models, while Flask’s simpler execution model gives more predictable behavior and easier debugging, which may be preferable during the development and testing phases of ML projects.

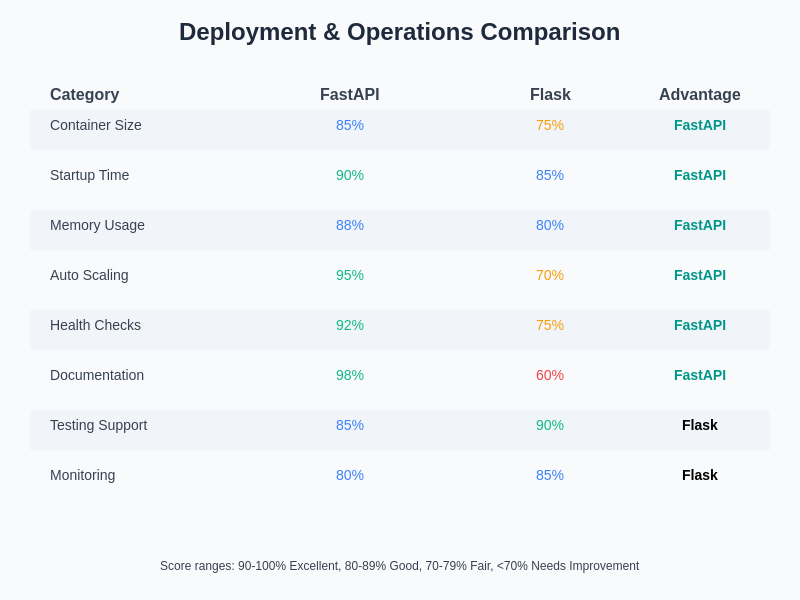

The quantitative differences in performance metrics between FastAPI and Flask become particularly pronounced under high-load conditions typical of production ML API services. These differences extend beyond simple request throughput to encompass memory efficiency, CPU utilization, and response time consistency under varying load patterns.
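Response time consistency is usually judged by tail percentiles rather than averages. A minimal sketch for summarizing latency samples collected from a load test, assuming the nearest-rank percentile definition (other definitions interpolate and give slightly different values); the function names are hypothetical:

```python
import math


def percentile(samples_ms: list[float], p: float) -> float:
    """Nearest-rank percentile: smallest sample with at least p% of values at or below it."""
    if not samples_ms:
        raise ValueError("no samples")
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(samples_ms)
    # Multiply before dividing so integer inputs avoid float rounding surprises.
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[rank - 1]


def latency_summary(samples_ms: list[float]) -> dict[str, float]:
    # p50 describes the typical request; p95/p99 expose the slow tail under load.
    return {f"p{p}": percentile(samples_ms, p) for p in (50, 95, 99)}
```

Comparing these summaries for the same model served by each framework, at several concurrency levels, shows whether differences grow under load.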

Development Experience and Productivity

The developer experience provided by each framework significantly affects development velocity, code maintainability, and team onboarding in ML projects. FastAPI’s automatic OpenAPI documentation, built-in request validation, and use of type hints to drive both runtime validation and editor support create an environment that encourages rapid prototyping while maintaining the code quality production ML systems require.


Flask’s minimalist approach requires developers to implement many features manually or through third-party extensions, which can slow initial development but provides greater control over system architecture and dependencies. This trade-off between convenience and control becomes particularly important in ML contexts where specific performance optimizations or custom data processing pipelines may require fine-grained control over framework behavior.

The learning curve associated with each framework also differs significantly, with Flask’s simplicity making it more accessible to developers new to web development, while FastAPI’s modern Python features and asynchronous programming paradigms may require additional learning investment but offer long-term productivity benefits for experienced developers working on complex ML systems.

Data Validation and Type Safety

Machine learning APIs typically handle complex data structures, ranging from simple feature vectors to sophisticated nested objects containing images, text, numerical data, and metadata. The approach each framework takes to data validation and type safety significantly impacts both development efficiency and runtime reliability of ML API services.

FastAPI’s integration with Pydantic provides automatic request and response validation, eliminating many common sources of runtime errors while providing clear, actionable error messages when validation fails. This built-in validation system is particularly valuable in ML contexts where data quality and consistency are crucial for model performance and where invalid inputs can lead to unpredictable model behavior or system failures.
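A minimal sketch of the error reporting Pydantic provides, assuming Pydantic v2; the ImageRequest schema and its limits are hypothetical. In FastAPI the same error list is returned to the client automatically as an HTTP 422 response.

```python
# Requires: pip install "pydantic>=2"
from pydantic import BaseModel, Field, ValidationError


class ImageRequest(BaseModel):
    # Hypothetical schema for an image-classification request.
    image_url: str
    top_k: int = Field(default=5, ge=1, le=20)
    threshold: float = Field(default=0.5, ge=0.0, le=1.0)


def describe_errors(raw: dict) -> list[tuple[tuple, str]]:
    """Return (location, message) pairs for every validation failure, or [] if valid."""
    try:
        ImageRequest.model_validate(raw)
        return []
    except ValidationError as exc:
        # Each error records where it occurred ("loc") and a readable message ("msg").
        return [(err["loc"], err["msg"]) for err in exc.errors()]
```

Because each error names the offending field, a client sending top_k=50 learns exactly which parameter to fix instead of receiving an opaque model failure.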

Flask requires manual implementation of data validation logic or integration with third-party libraries, which increases development overhead but provides flexibility in handling edge cases or implementing custom validation rules specific to particular ML models or business requirements. This manual approach can be advantageous in scenarios where standard validation patterns are insufficient or where legacy systems require specific data handling approaches.
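As a sketch of a custom rule that generic schemas may not express by default, the stdlib-only validator below also rejects NaN and infinity (Python’s json module accepts NaN and Infinity by default, and such values can silently corrupt model output). The names PayloadError and validate_features are hypothetical; a Flask view would call it on request.get_json(silent=True) and turn a PayloadError into a 400 response.

```python
import math
from numbers import Real


class PayloadError(ValueError):
    """Raised when a request body does not match what the model expects."""


def validate_features(payload: object, n_features: int = 4) -> list[float]:
    if not isinstance(payload, dict):
        raise PayloadError("body must be a JSON object")
    features = payload.get("features")
    if not isinstance(features, list) or len(features) != n_features:
        raise PayloadError(f"'features' must be a list of {n_features} numbers")
    cleaned = []
    for i, value in enumerate(features):
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(value, bool) or not isinstance(value, Real):
            raise PayloadError(f"features[{i}] is not a number")
        # ML-specific rule: NaN/inf would propagate through most models unnoticed.
        if not math.isfinite(value):
            raise PayloadError(f"features[{i}] must be finite")
        cleaned.append(float(value))
    return cleaned
```

Keeping the validator framework-agnostic also makes it easy to unit-test and to reuse if the service later moves to another framework.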

Asynchronous Processing and Real-Time Inference

The ability to handle asynchronous operations efficiently matters for ML API services that wait on slow operations, such as loading data, calling external services, or querying other models, without blocking other requests. FastAPI’s native asynchronous support lets an API keep serving requests while such I/O is pending. Model inference itself is usually CPU- or GPU-bound, however, so async alone does not make it faster: blocking inference code should run in a thread or process pool (FastAPI does this automatically for endpoints declared with a plain def) or in a dedicated model server, so that it does not stall the event loop.
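A stdlib-only sketch of offloading blocking inference from an event loop with asyncio.to_thread (Python 3.9+). blocking_predict is a hypothetical stand-in; because time.sleep releases the GIL, the overlap here is more generous than pure-Python CPU work would see, although many numerical libraries also release the GIL during heavy computation.

```python
import asyncio
import time


def blocking_predict(x: float) -> float:
    # Stand-in for synchronous inference that never yields to the event loop.
    time.sleep(0.1)
    return x * 2


async def predict_offloaded(x: float) -> float:
    # Run the blocking call in a worker thread so the event loop stays responsive.
    return await asyncio.to_thread(blocking_predict, x)


async def main() -> list[float]:
    # Three requests in flight at once; results come back in submission order.
    return await asyncio.gather(*(predict_offloaded(v) for v in (1.0, 2.0, 3.0)))
```

Calling blocking_predict directly inside an async def endpoint would freeze every other request for the duration of each call, which is the failure mode this pattern avoids.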

Flask’s synchronous model can be limiting for real-time inference or batch processing, though task queues such as Celery, which is commonly paired with Flask, can move long-running work to background workers at the cost of additional infrastructure (a message broker and worker processes) and operational overhead. The choice between synchronous and asynchronous architectures often depends on specific use case requirements and the team’s experience with asynchronous programming.
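The submit-then-poll contract that a task queue gives a web API can be sketched in a single process with concurrent.futures. This is not Celery’s API: Celery needs a broker such as Redis or RabbitMQ and separate worker processes, and the JobQueue class here is hypothetical, with state names that merely echo Celery’s PENDING, SUCCESS, and FAILURE.

```python
import uuid
from concurrent.futures import Future, ThreadPoolExecutor
from typing import Any, Callable


class JobQueue:
    """In-process stand-in for a task queue: submit work, get an id, poll for the result."""

    def __init__(self, max_workers: int = 2) -> None:
        self._executor = ThreadPoolExecutor(max_workers=max_workers)
        self._jobs: dict[str, Future] = {}

    def submit(self, fn: Callable[..., Any], *args: Any) -> str:
        job_id = uuid.uuid4().hex
        self._jobs[job_id] = self._executor.submit(fn, *args)
        return job_id  # returned to the client immediately, e.g. with HTTP 202

    def status(self, job_id: str) -> dict:
        future = self._jobs[job_id]
        if not future.done():
            return {"state": "PENDING"}
        if future.exception() is not None:
            return {"state": "FAILURE", "error": str(future.exception())}
        return {"state": "SUCCESS", "result": future.result()}

    def shutdown(self) -> None:
        self._executor.shutdown(wait=True)
```

In a Flask app, a POST route would call submit and return the job id, and a GET route would return status(job_id). Jobs held this way are lost on restart and confined to one process, which is why production setups use a real broker.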

The implications of this architectural difference extend beyond simple performance considerations to encompass system scalability, resource utilization efficiency, and the ability to implement sophisticated ML pipeline architectures that may involve multiple models, data preprocessing steps, and external API integrations.

The architectural differences between FastAPI and Flask fundamentally influence how ML systems scale and evolve, with implications for long-term maintenance, feature development, and operational complexity that extend well beyond initial implementation considerations.

Ecosystem and Community Support

The ecosystem surrounding each framework significantly impacts development efficiency, available resources, and long-term project viability. Flask’s maturity and extensive ecosystem provide access to a vast array of extensions, tutorials, and community resources that can accelerate development and provide solutions for common challenges encountered in ML API development.

FastAPI’s newer but rapidly growing ecosystem focuses specifically on modern Python development practices and API-first approaches that align well with ML deployment strategies. The framework’s emphasis on standards compliance and automatic documentation generation has attracted a community of developers working on sophisticated ML and data science applications.


The availability of ML-specific extensions and integrations varies between frameworks, with Flask offering mature integrations with traditional ML libraries and deployment platforms, while FastAPI provides modern approaches to ML model serving and cloud-native deployment strategies that may be more suitable for contemporary ML operations workflows.

Security Considerations in ML APIs

Security in ML API services encompasses traditional web security concerns as well as ML-specific vulnerabilities such as adversarial attacks, model extraction attempts, and data privacy issues. Both frameworks provide mechanisms for implementing security measures, though their approaches and default security postures differ significantly.

FastAPI’s automatic request validation and security utilities provide a strong foundation for secure ML APIs: the fastapi.security module includes helpers for OAuth2, HTTP Basic and Bearer, and API-key schemes, and middleware for CORS handling and HTTPS redirection can each be enabled with a single line, although none of these is active by default. These standardized building blocks reduce the likelihood of common vulnerabilities while giving developers consistent patterns for authentication and authorization.

Flask’s security approach relies more heavily on developer implementation and third-party extensions, which can provide greater flexibility but also increases the risk of security misconfigurations or overlooked vulnerabilities. The manual nature of Flask security implementation requires developers to have comprehensive understanding of web security principles and ML-specific threat models.

Model Integration and Deployment Strategies

The approach each framework takes to model integration and deployment significantly impacts development workflows and operational complexity. FastAPI’s modern architecture and built-in support for dependency injection make it easier to implement sophisticated model loading strategies, caching mechanisms, and A/B testing frameworks that are essential for production ML systems.

Flask’s flexibility allows for custom model integration approaches that may be necessary for specific ML frameworks or deployment requirements, though this flexibility comes at the cost of additional implementation overhead and the need for developers to solve common problems that may have standardized solutions in more opinionated frameworks.

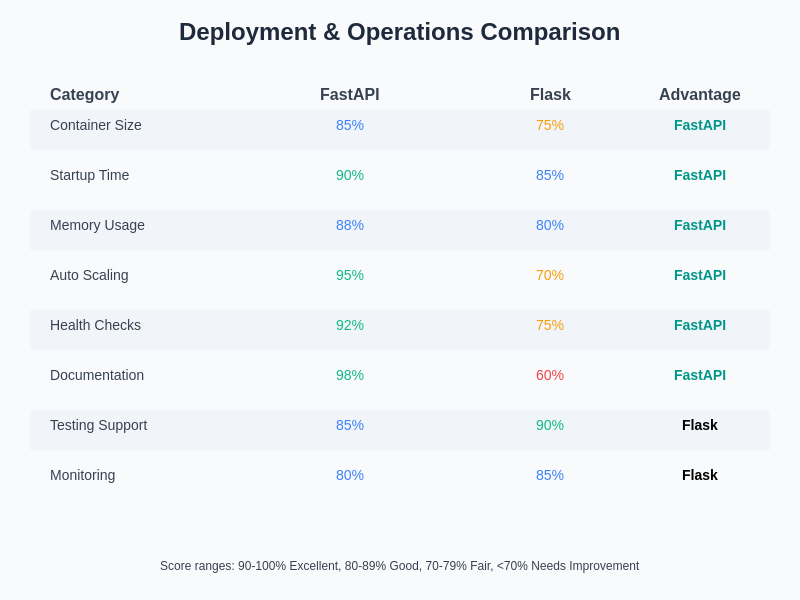

The choice between frameworks often depends on specific deployment requirements, including container orchestration needs, cloud platform integrations, and monitoring and observability requirements that may favor one approach over another based on organizational infrastructure and operational capabilities.

Testing and Quality Assurance

Testing ML APIs presents unique challenges that extend beyond traditional web application testing to encompass model behavior validation, performance testing under various load conditions, and ensuring consistent behavior across different input data distributions. The testing capabilities and patterns supported by each framework significantly impact development workflows and system reliability.

FastAPI’s built-in testing utilities and integration with modern Python testing frameworks provide comprehensive testing capabilities that can handle both traditional API testing and ML-specific testing requirements. The framework’s automatic documentation generation also facilitates testing by providing clear specifications of expected API behavior.

Flask ships with a built-in test client (app.test_client()) and integrates well with pytest, following established patterns that many developers already know; more specialized needs, such as reusable fixtures, are covered by third-party plugins like pytest-flask. The maturity of Flask’s testing ecosystem can be advantageous for teams with existing testing infrastructure and processes.

Monitoring and Observability

Production ML API services require sophisticated monitoring and observability capabilities to track not only traditional web metrics but also model performance, data drift, inference latency, and resource utilization patterns. The approach each framework takes to monitoring integration and instrumentation can significantly impact operational visibility and incident response capabilities.

FastAPI’s ASGI architecture and standards compliance make it easier to integrate with contemporary observability platforms and monitoring tools, while its structured request/response handling and middleware provide clear points for instrumentation and metrics collection. Its generated OpenAPI schema also documents each endpoint’s expected inputs and outputs, which helps when defining per-endpoint metrics and alerts.

Flask’s monitoring capabilities depend largely on third-party integrations and manual instrumentation, which can provide fine-grained control over monitoring implementation but requires additional development effort and expertise in monitoring best practices. The flexibility of Flask’s approach can be advantageous for organizations with specific monitoring requirements or existing observability infrastructure.

The operational characteristics of each framework influence not only day-to-day system management but also long-term scalability planning and incident response capabilities that are crucial for maintaining reliable ML API services in production environments.

Cost Considerations and Resource Efficiency

The resource efficiency and operational costs associated with each framework can significantly affect the total cost of ownership for ML API services, particularly for high-volume applications or resource-constrained environments. FastAPI’s performance advantages, especially for I/O-bound and high-concurrency workloads, can translate into cost savings through fewer servers and better resource utilization.
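The link between throughput and server count is simple arithmetic. The sketch below assumes a fixed spare-capacity fraction (headroom) per instance; the function name and every number in the usage note are hypothetical placeholders, not benchmark results, and should be replaced with figures from your own load tests.

```python
import math


def instances_needed(peak_rps: float, rps_per_instance: float, headroom: float = 0.3) -> int:
    """Instances required to serve peak_rps while keeping `headroom` of each instance spare."""
    if rps_per_instance <= 0 or not 0 <= headroom < 1:
        raise ValueError("invalid capacity inputs")
    usable = rps_per_instance * (1 - headroom)  # sustainable load per instance
    return math.ceil(peak_rps / usable)
```

With a hypothetical peak of 1,000 requests per second, an instance sustaining 200 requests per second needs instances_needed(1000, 200), i.e. 8 instances, while one sustaining 400 needs 4. Whether either framework reaches a given per-instance figure depends on the workload, so measured numbers are what make this estimate meaningful.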

Flask’s resource utilization characteristics may require additional infrastructure investment to achieve similar performance levels, though its simplicity and predictable behavior can reduce operational overhead and simplify capacity planning. The trade-offs between infrastructure costs and operational complexity vary depending on specific deployment requirements and organizational capabilities.

Understanding these cost implications is crucial for making informed architectural decisions, particularly for organizations deploying multiple ML services or operating under strict budget constraints where framework efficiency can have substantial financial impact.


Migration Strategies and Long-Term Maintenance

The decision between FastAPI and Flask often involves considerations of long-term maintainability, migration strategies, and evolving requirements that may favor one framework over another as projects mature and scale. The effort required to migrate between frameworks or upgrade to newer versions can significantly impact development velocity and system reliability.

FastAPI’s modern architecture and adherence to current Python standards position it well for future evolution and integration with emerging technologies, while Flask’s stability and backward compatibility provide predictable upgrade paths and minimal maintenance overhead for established systems.

Planning for long-term maintenance and potential migration needs requires understanding not only current framework capabilities but also development trajectories, community roadmaps, and emerging trends in ML deployment that may influence future architectural decisions.

Making the Strategic Choice

The decision between FastAPI and Flask for ML API development ultimately depends on a complex interplay of technical requirements, team capabilities, organizational constraints, and long-term strategic objectives. FastAPI offers compelling advantages for new ML projects that prioritize performance, modern development practices, and rapid iteration, while Flask provides stability, simplicity, and extensive ecosystem support that may be preferable for certain use cases or organizational contexts.

Organizations building high-performance ML APIs with modern development teams and aggressive scalability requirements may find FastAPI’s advantages compelling, while those prioritizing stability, simplicity, or integration with existing Flask-based systems may benefit from continuing with Flask’s proven approach to web application development.

The choice between these frameworks represents more than a technical decision; it embodies a strategic choice about development philosophy, team capabilities, and long-term architectural vision that will influence ML project success and organizational capability development for years to come.

Future Trends and Technological Evolution

The landscape of ML API development continues to evolve rapidly, with emerging technologies, changing performance requirements, and evolving best practices influencing framework selection and implementation strategies. Understanding current trends and anticipated developments can inform framework choices and architectural decisions that position ML projects for long-term success.

The continued growth of edge computing, serverless architectures, and cloud-native deployment strategies may favor frameworks that provide better performance characteristics and modern deployment capabilities, while the increasing complexity of ML systems may require more sophisticated tooling and development approaches that align with contemporary software engineering practices.

Staying informed about technological evolution and emerging best practices enables organizations to make framework choices that not only meet current requirements but also position ML systems for future growth and adaptation as the field continues to advance and mature.

Disclaimer

This article provides general guidance on framework selection for ML API development and does not constitute professional advice for specific technical implementations. The choice between FastAPI and Flask should be based on careful evaluation of specific project requirements, team capabilities, and organizational constraints. Performance characteristics, security considerations, and operational requirements may vary significantly depending on specific use cases and deployment environments. Readers should conduct thorough testing and evaluation before making framework decisions for production ML systems.