Feature stores have emerged as a critical component in modern machine learning infrastructure, serving as centralized repositories for storing, managing, and serving features across training and inference pipelines. The proliferation of machine learning applications in production environments has created an urgent need for robust feature management solutions that can handle the complexities of real-time and batch feature computation, consistent data transformation, and reliable feature serving at scale.

The selection of an appropriate feature store solution significantly impacts the efficiency, reliability, and scalability of machine learning operations, making it essential for organizations to understand the strengths and limitations of leading platforms in this space.

Understanding Feature Store Architecture

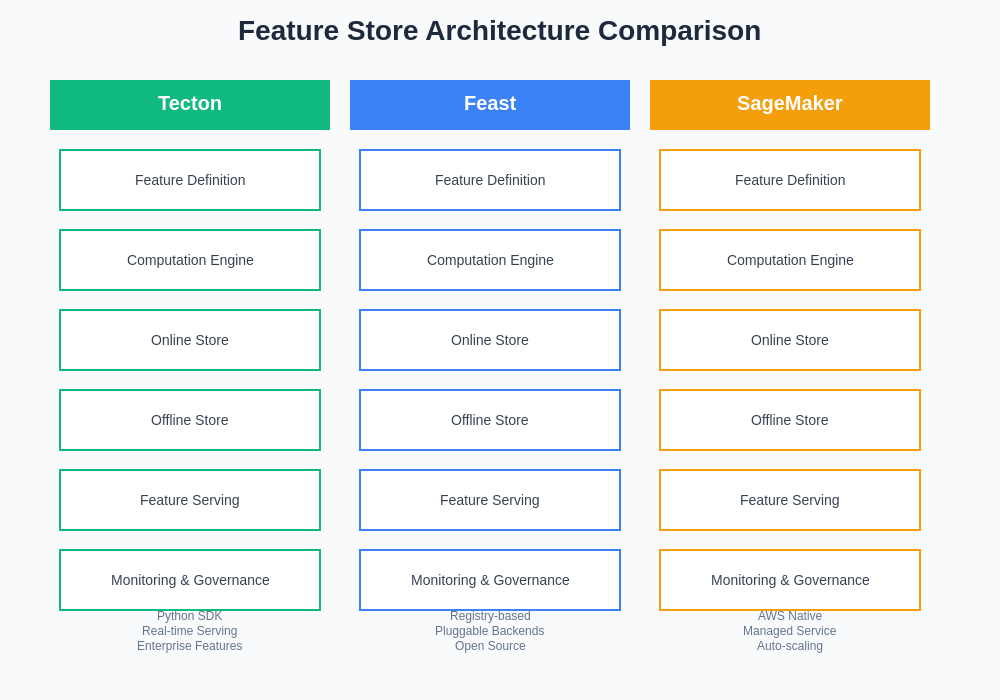

Feature stores address fundamental challenges in machine learning operations by providing a unified platform for feature engineering, storage, and serving. The architecture typically encompasses feature definition frameworks, computation engines for both batch and real-time processing, storage layers optimized for different access patterns, and serving infrastructure that can handle both training and inference workloads with consistent performance characteristics.

The evolution of feature store technology has been driven by the need to eliminate feature engineering debt, reduce training-serving skew, enable feature reusability across teams and projects, and provide comprehensive feature lineage and governance capabilities. Modern feature stores integrate seamlessly with existing data infrastructure while providing abstractions that simplify the complex orchestration of feature pipelines and ensure data consistency across different stages of the machine learning lifecycle.
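One way to picture how a feature store reduces training-serving skew is a single transformation function that both the batch (training) path and the online (inference) path call. The sketch below is only illustrative: the feature names `log_amount` and `amount_per_item` and the helper functions are hypothetical and do not come from any of the platforms discussed here.

```python
import math
from typing import Dict, List


def transform(raw: Dict[str, float]) -> Dict[str, float]:
    """Single source of truth for the feature logic (hypothetical features)."""
    return {
        "log_amount": math.log1p(raw["amount"]),
        # Guard against division by zero when an order has no items.
        "amount_per_item": raw["amount"] / max(raw["item_count"], 1),
    }


def build_training_rows(history: List[Dict[str, float]]) -> List[Dict[str, float]]:
    # Batch path: apply the shared transform to every historical record.
    return [transform(record) for record in history]


def serve_features(request: Dict[str, float]) -> Dict[str, float]:
    # Online path: apply the same transform to one live request.
    return transform(request)


history = [
    {"amount": 120.0, "item_count": 3},
    {"amount": 15.5, "item_count": 0},
]
training_rows = build_training_rows(history)
online_row = serve_features(history[0])
```

Because both paths share one definition, the same raw input yields the same feature values at training time and at serving time, which is the property a feature store is meant to guarantee at scale.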

Tecton: Enterprise-Grade Feature Platform

Tecton represents a comprehensive enterprise solution that emphasizes real-time feature serving and sophisticated feature engineering capabilities. The platform provides a declarative approach to feature definition through its Python SDK, enabling data scientists and engineers to define features using familiar programming paradigms while leveraging Tecton’s underlying infrastructure for computation and serving optimization.

The architecture of Tecton is built around the concept of feature services that can dynamically compute and serve features at low latency, typically on the order of milliseconds. The platform integrates deeply with streaming data infrastructure, supporting real-time feature computation from Apache Kafka, Amazon Kinesis, and other streaming platforms while maintaining consistency with batch-computed features through its reconciliation mechanisms.

Tecton’s approach to feature versioning and experimentation provides robust support for A/B testing and gradual rollouts of new feature definitions. The platform maintains comprehensive feature lineage tracking, enabling teams to understand feature dependencies and impact analysis when making changes to upstream data sources or feature definitions. The enterprise focus is evident in Tecton’s extensive security and compliance features, including role-based access control, audit logging, and integration with enterprise identity management systems.
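A gradual rollout of a new feature definition is often implemented with deterministic, hash-based bucketing, so that a given entity always sees the same version. The following is a generic sketch of that idea, not Tecton's actual mechanism; the function names and the use of the feature name as a salt are assumptions made for illustration.

```python
import hashlib


def rollout_bucket(entity_id: str, salt: str) -> int:
    """Map an entity to a stable bucket in the range 0..99."""
    digest = hashlib.sha256(f"{salt}:{entity_id}".encode("utf-8")).hexdigest()
    return int(digest, 16) % 100


def use_new_version(entity_id: str, feature_name: str, rollout_percent: int) -> bool:
    """Return True if this entity should read the new feature definition."""
    # Salting with the feature name keeps rollouts of different features independent.
    return rollout_bucket(entity_id, feature_name) < rollout_percent


# Route each entity to one of two (hypothetical) versions at a 20% rollout.
assignments = {
    user: ("v2" if use_new_version(user, "txn_count_7d", 20) else "v1")
    for user in ("user_1", "user_2", "user_3")
}
```

Raising `rollout_percent` only ever adds entities to the new version, so a rollout can be widened step by step without flipping entities back and forth.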

Feast: Open-Source Feature Store Foundation

Feast has established itself as the leading open-source feature store solution, providing organizations with the flexibility to build customized feature management systems while leveraging proven architectural patterns and community contributions. The project’s commitment to vendor neutrality and extensible architecture has made it a popular choice for organizations seeking to avoid vendor lock-in while building sophisticated feature management capabilities.

The core strength of Feast lies in its modular architecture that supports pluggable components for different aspects of feature store functionality. Organizations can choose from multiple storage backends including Redis, DynamoDB, and Google Cloud Datastore for online serving, while supporting various offline stores such as BigQuery, Snowflake, and Parquet files for training data generation. This flexibility enables organizations to optimize their feature store deployment based on existing infrastructure investments and specific performance requirements.

Feast’s feature definition framework uses a registry-based approach where features are defined through Python objects and registered with a central feature registry. The platform supports both batch and stream processing for feature computation, with integrations for popular data processing frameworks including Apache Spark, Apache Beam, and various cloud-native data processing services. The open-source nature of Feast has fostered a vibrant ecosystem of extensions and integrations, providing organizations with access to community-developed solutions for specialized use cases.
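The registry-based pattern can be sketched with plain Python dataclasses. This is deliberately not Feast's API: the classes `FeatureDefinition`, `FeatureGroupDefinition`, and `FeatureRegistry`, and the example feature names, are hypothetical stand-ins that only illustrate the idea of declaring features as objects and registering them centrally.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class FeatureDefinition:
    name: str
    dtype: str
    description: str = ""


@dataclass(frozen=True)
class FeatureGroupDefinition:
    name: str
    entity_key: str
    features: Tuple[FeatureDefinition, ...]


class FeatureRegistry:
    """In-memory stand-in for a central feature registry."""

    def __init__(self) -> None:
        self._groups: Dict[str, FeatureGroupDefinition] = {}

    def register(self, group: FeatureGroupDefinition) -> None:
        # Reject duplicates so two teams cannot silently overwrite each other.
        if group.name in self._groups:
            raise ValueError(f"feature group already registered: {group.name}")
        self._groups[group.name] = group

    def list_features(self) -> List[str]:
        # Fully qualified names make features discoverable across teams.
        return sorted(
            f"{group.name}:{feature.name}"
            for group in self._groups.values()
            for feature in group.features
        )


registry = FeatureRegistry()
registry.register(
    FeatureGroupDefinition(
        name="driver_stats",
        entity_key="driver_id",
        features=(
            FeatureDefinition("conv_rate", "float32", "Trip acceptance rate"),
            FeatureDefinition("avg_daily_trips", "int64"),
        ),
    )
)
feature_names = registry.list_features()
```

Keeping definitions as immutable objects in one registry is what makes it possible to list, review, and reuse features without reading each team's pipeline code.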

AWS SageMaker Feature Store: Cloud-Native Integration

AWS SageMaker Feature Store represents Amazon’s cloud-native approach to feature management, providing deep integration with the broader AWS ecosystem while offering managed infrastructure that reduces operational overhead for organizations already invested in AWS services. The platform leverages AWS’s extensive data and analytics services to provide a comprehensive feature management solution that scales automatically based on demand.

The architecture of SageMaker Feature Store is built around feature groups that serve as logical containers for related features, with schema validation on ingestion and support for adding features to an existing group, which together help ensure data quality and consistency. The platform provides dual storage modes with an online store optimized for real-time inference and an offline store designed for training data generation and historical analysis. The integration with AWS services extends to comprehensive monitoring and alerting through CloudWatch, security management through IAM, and data governance through AWS Lake Formation.
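The dual storage idea can be illustrated with a small in-memory model: every write is appended to an offline history, while the online view keeps only the most recent record per key. The `DualStore` class below is a hypothetical sketch of that pattern and does not use or mirror the SageMaker API.

```python
from typing import Any, Dict, List, Optional, Tuple


class DualStore:
    """Toy model of an online store (latest value) plus an offline store (history)."""

    def __init__(self) -> None:
        self.online: Dict[str, Tuple[float, Dict[str, Any]]] = {}
        self.offline: List[Tuple[str, float, Dict[str, Any]]] = []

    def put_record(self, key: str, event_time: float, values: Dict[str, Any]) -> None:
        # Offline store: append-only, keeps every version for training and analysis.
        self.offline.append((key, event_time, dict(values)))
        # Online store: overwrite only if this record is at least as recent.
        current = self.online.get(key)
        if current is None or event_time >= current[0]:
            self.online[key] = (event_time, dict(values))

    def get_online(self, key: str) -> Optional[Dict[str, Any]]:
        entry = self.online.get(key)
        return None if entry is None else entry[1]

    def get_history(self, key: str) -> List[Tuple[float, Dict[str, Any]]]:
        return [(t, v) for k, t, v in self.offline if k == key]


store = DualStore()
store.put_record("cust_1", 100.0, {"orders_30d": 2})
store.put_record("cust_1", 300.0, {"orders_30d": 5})
store.put_record("cust_1", 200.0, {"orders_30d": 3})  # late-arriving record
latest = store.get_online("cust_1")
history = store.get_history("cust_1")
```

The late-arriving record is retained in the history but does not overwrite the newer online value, which is the behavior inference workloads generally need.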

SageMaker Feature Store’s approach to feature computation emphasizes integration with existing AWS data processing services, supporting feature engineering workflows through SageMaker Processing jobs, AWS Glue ETL operations, and Amazon EMR clusters. The platform provides automatic feature discovery and cataloging capabilities that integrate with AWS Glue Data Catalog, enabling comprehensive metadata management and feature lineage tracking across complex data pipelines.

The architectural differences between these platforms reflect different philosophies regarding feature store design and deployment strategies. Understanding these architectural distinctions is crucial for selecting the most appropriate solution based on organizational requirements and existing infrastructure constraints.

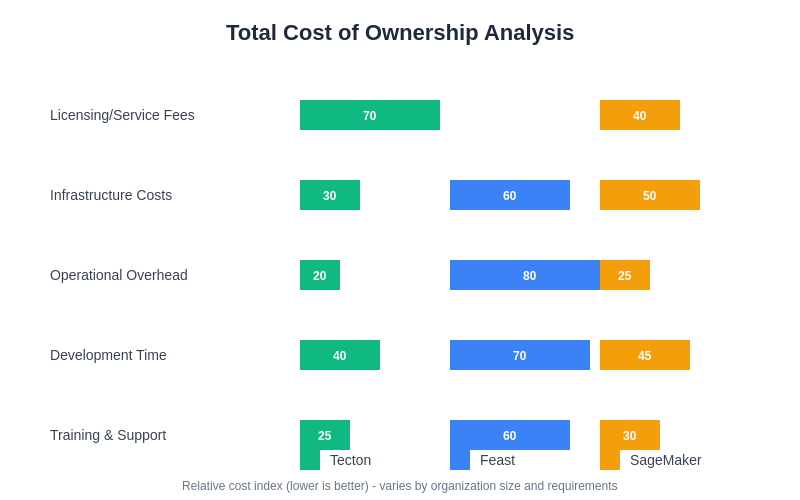

The total cost of ownership varies significantly across platforms, with each solution presenting different cost profiles based on licensing models, infrastructure requirements, and operational overhead considerations.

Performance and Scalability Analysis

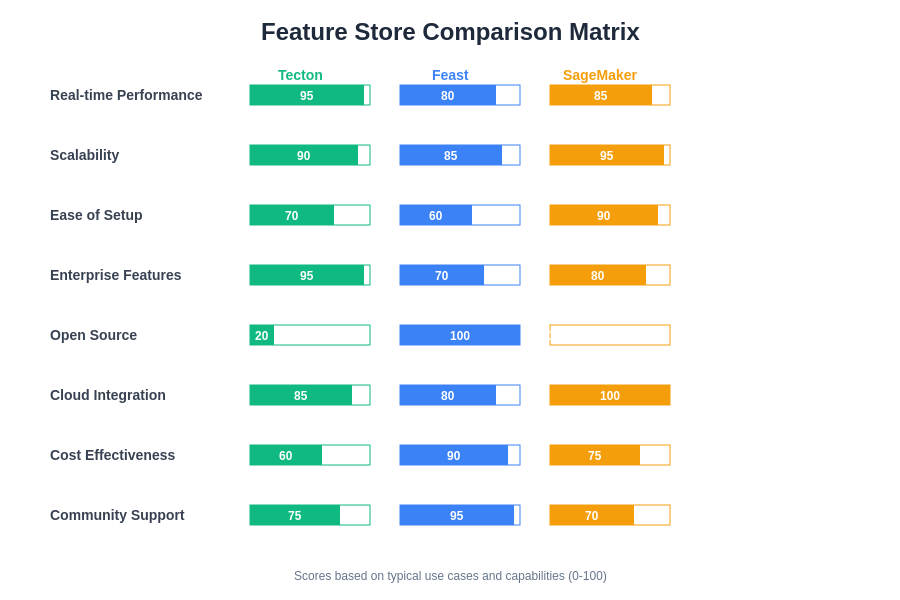

Performance characteristics vary significantly across feature store platforms, with each solution optimized for different access patterns and scalability requirements. Tecton’s focus on real-time serving results in strong low-latency performance for inference workloads, with the platform capable of serving features with millisecond-scale response times through its optimized caching and computation infrastructure. The platform’s ability to handle high-throughput scenarios makes it particularly suitable for applications with strict latency requirements such as fraud detection and real-time personalization.

Feast’s performance profile depends heavily on the chosen storage backends and deployment configuration, providing organizations with the flexibility to optimize for their specific use cases. The platform’s support for multiple storage systems enables performance tuning based on access patterns, with Redis providing excellent performance for low-latency serving while cloud-based storage systems like BigQuery optimize for analytical workloads and complex feature transformations.
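Because serving latency depends on the backend and deployment, it is usually worth measuring it directly rather than relying on published figures. The sketch below shows one simple way to collect per-lookup latencies and summarize them with nearest-rank percentiles; the dictionary `fake_store` is a hypothetical stand-in for a real online store client.

```python
import math
import time
from typing import Callable, Iterable, List


def percentile(samples: List[float], q: float) -> float:
    """Nearest-rank percentile for q in [0, 100]."""
    if not samples:
        raise ValueError("samples must not be empty")
    if not 0 <= q <= 100:
        raise ValueError("q must be between 0 and 100")
    ordered = sorted(samples)
    rank = max(1, math.ceil(q * len(ordered) / 100))
    return ordered[rank - 1]


def measure_latencies_ms(lookup: Callable[[str], object], keys: Iterable[str]) -> List[float]:
    """Time each lookup call and return the latencies in milliseconds."""
    latencies = []
    for key in keys:
        start = time.perf_counter()
        lookup(key)
        latencies.append((time.perf_counter() - start) * 1000.0)
    return latencies


# Stand-in for an online store; replace `fake_store.get` with a real client call.
fake_store = {f"user_{i}": {"clicks_7d": i} for i in range(1000)}
latencies = measure_latencies_ms(fake_store.get, list(fake_store))
p50, p99 = percentile(latencies, 50), percentile(latencies, 99)
```

Tail percentiles such as p99 tend to matter more than averages for online inference, since a single slow feature lookup delays the whole prediction.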

SageMaker Feature Store leverages AWS’s managed infrastructure to provide automatic scaling capabilities that adapt to varying demand patterns without requiring manual intervention. The platform’s performance is optimized for integration with other AWS services, providing excellent throughput for batch processing operations while maintaining reasonable response times for online serving through its managed online store.

Cost Considerations and Total Ownership

The total cost of ownership for feature store solutions encompasses multiple dimensions including licensing fees, infrastructure costs, operational overhead, and development productivity impacts. Tecton’s enterprise pricing model reflects its comprehensive feature set and managed service approach, with costs typically scaling based on feature volume, computation requirements, and support levels. Organizations must evaluate the premium pricing against the reduced operational complexity and advanced enterprise features that Tecton provides.

Feast’s open-source nature eliminates licensing costs but transfers operational responsibility to the implementing organization. The total cost includes infrastructure expenses for chosen storage and computation backends, engineering time for deployment and maintenance, and potential consulting costs for specialized implementations. Organizations with strong engineering capabilities may find Feast’s total cost of ownership favorable, particularly when leveraging existing infrastructure investments.

SageMaker Feature Store follows AWS’s pay-as-you-use pricing model, with costs based on data storage, request volume, and associated AWS services utilization. The managed nature of the service reduces operational overhead while providing predictable scaling costs. Organizations must consider the cumulative impact of related AWS services costs when evaluating the total cost of ownership for SageMaker Feature Store deployments.
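A usage-based bill of this kind can be approximated as storage plus read volume plus write volume, each multiplied by a unit rate. The sketch below shows the arithmetic only; every rate in `EXAMPLE_RATES` is a made-up placeholder, not an actual AWS price, and should be replaced with figures from the current pricing page.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UsageRates:
    storage_per_gb_month: float
    per_million_reads: float
    per_million_writes: float


def estimate_monthly_cost(
    storage_gb: float,
    reads_millions: float,
    writes_millions: float,
    rates: UsageRates,
) -> float:
    """Sum the three usage-based components of a monthly bill."""
    storage_cost = storage_gb * rates.storage_per_gb_month
    read_cost = reads_millions * rates.per_million_reads
    write_cost = writes_millions * rates.per_million_writes
    return storage_cost + read_cost + write_cost


# Placeholder rates for illustration only; NOT real prices.
EXAMPLE_RATES = UsageRates(
    storage_per_gb_month=0.5,
    per_million_reads=0.25,
    per_million_writes=1.25,
)

monthly_cost = estimate_monthly_cost(
    storage_gb=100, reads_millions=300, writes_millions=40, rates=EXAMPLE_RATES
)
```

A fuller estimate would add the related services the paragraph mentions, such as the data processing jobs that compute the features and the storage behind the offline store.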

Integration Ecosystem and Compatibility

The integration capabilities of feature store platforms significantly impact their practical utility within existing machine learning infrastructure. Tecton provides extensive integration with popular machine learning frameworks including TensorFlow, PyTorch, and Scikit-learn, while supporting deployment patterns for major cloud platforms and on-premises infrastructure. The platform’s enterprise focus includes comprehensive APIs and SDKs that facilitate integration with custom applications and existing data workflows.

Feast’s open-source architecture enables extensive customization and integration possibilities, with community-developed connectors for numerous data sources, processing frameworks, and serving infrastructure. The platform’s vendor-neutral approach ensures compatibility with multi-cloud deployments and hybrid infrastructure scenarios, making it suitable for organizations with diverse technology stacks and complex integration requirements.

SageMaker Feature Store’s integration strengths lie in its seamless connectivity with the AWS ecosystem, providing native integration with services such as Amazon S3, AWS Glue, Amazon EMR, and various analytics services. The platform supports popular machine learning frameworks through SageMaker’s broader ecosystem while providing APIs that enable integration with non-AWS services and applications.

Security and Governance Frameworks

Security and governance capabilities represent critical evaluation criteria for feature store selection, particularly for organizations operating in regulated industries or handling sensitive data. Tecton’s enterprise orientation includes comprehensive security features such as encryption at rest and in transit, role-based access control, audit logging, and integration with enterprise identity management systems. The platform provides data lineage tracking and feature governance capabilities that support compliance requirements and change management processes.

Feast’s security model relies on the underlying infrastructure and storage systems chosen for deployment, requiring organizations to implement appropriate security controls based on their specific requirements. The open-source nature enables customization of security features while placing responsibility for security implementation on the deploying organization. Community contributions have enhanced Feast’s security capabilities over time, but enterprise-grade security features may require additional development effort.

SageMaker Feature Store leverages AWS’s comprehensive security infrastructure, providing encryption, access control through IAM, and integration with AWS security services such as AWS CloudTrail for audit logging and AWS Config for compliance monitoring. The platform’s integration with AWS Lake Formation enables sophisticated data governance capabilities including fine-grained access control and comprehensive data cataloging.

The comparative analysis reveals distinct strengths and trade-offs among the three platforms, highlighting the importance of aligning feature store selection with organizational priorities and technical requirements. Each platform excels in specific areas while presenting limitations in others, requiring careful evaluation of organizational needs against platform capabilities.

Development Experience and Productivity

The developer experience significantly impacts the adoption and productivity outcomes of feature store implementations. Tecton emphasizes a Python-first approach with comprehensive SDKs that abstract complex infrastructure management while providing sophisticated feature engineering capabilities. The platform’s declarative feature definition framework enables data scientists to focus on business logic while leveraging Tecton’s optimization and serving infrastructure automatically.

Feast provides a flexible development experience that accommodates various programming paradigms and integration patterns. The platform’s registry-based approach requires more explicit configuration compared to managed solutions, but offers greater control over implementation details. The open-source community contributes extensive documentation, examples, and best practices that facilitate learning and implementation.

SageMaker Feature Store integrates with SageMaker’s broader development environment, providing Jupyter notebook integration, automated feature discovery, and seamless connectivity with other SageMaker services. The platform’s managed nature reduces infrastructure concerns while providing comprehensive monitoring and debugging capabilities through AWS’s operational tools.

Use Case Scenarios and Recommendations

Different feature store solutions excel in specific use case scenarios based on their architectural strengths and operational characteristics. Tecton’s real-time capabilities and enterprise features make it particularly suitable for organizations requiring low-latency feature serving, complex feature engineering workflows, and comprehensive enterprise integration. Financial services, e-commerce, and advertising technology companies often find Tecton’s capabilities align well with their performance and compliance requirements.

Feast’s flexibility and open-source nature make it an excellent choice for organizations with strong engineering capabilities, diverse infrastructure requirements, or specific customization needs. Technology companies with existing investment in open-source tooling and organizations requiring multi-cloud or hybrid deployments often benefit from Feast’s architectural flexibility and community ecosystem.

SageMaker Feature Store provides optimal value for organizations already committed to the AWS ecosystem, particularly those seeking to minimize operational overhead while leveraging comprehensive cloud-native integration. Startups, enterprises standardizing on AWS, and organizations prioritizing managed infrastructure often find SageMaker Feature Store’s integration and automation capabilities compelling.

Implementation Strategies and Best Practices

Successful feature store implementation requires careful planning and adherence to established best practices regardless of the chosen platform. Organizations should begin with a comprehensive assessment of existing data infrastructure, feature engineering workflows, and performance requirements to inform platform selection and deployment strategies. The implementation approach should emphasize gradual migration and validation to minimize disruption to existing machine learning workflows.

Feature schema design and versioning strategies represent critical success factors that impact long-term maintainability and evolution capabilities. Organizations should establish consistent naming conventions, data type standards, and documentation practices that facilitate feature discovery and reuse across teams. The implementation should include comprehensive monitoring and alerting for feature quality, serving performance, and data freshness to ensure reliable operation in production environments.
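Data freshness monitoring, for instance, can start as a simple comparison between each feature's last update time and an agreed maximum age. The function and feature names below are hypothetical, and a production setup would feed the result into whatever alerting system the organization already uses.

```python
from datetime import datetime, timedelta, timezone
from typing import Dict, List


def find_stale_features(
    last_updated: Dict[str, datetime],
    max_age: Dict[str, timedelta],
    now: datetime,
) -> List[str]:
    """Return the names of features whose age exceeds their freshness limit."""
    stale = []
    for name, updated_at in last_updated.items():
        if now - updated_at > max_age[name]:
            stale.append(name)
    return sorted(stale)


now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
last_updated = {
    "txn_count_1h": now - timedelta(minutes=90),    # streaming feature, overdue
    "avg_basket_30d": now - timedelta(hours=20),    # daily batch feature, on time
}
max_age = {
    "txn_count_1h": timedelta(minutes=15),
    "avg_basket_30d": timedelta(hours=26),
}
stale = find_stale_features(last_updated, max_age, now)
```

Setting the limit per feature, rather than globally, reflects that streaming and batch features have very different expected update cadences.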

Change management and team training represent often-overlooked aspects of feature store implementation that significantly impact adoption success. Organizations should invest in comprehensive training programs, establish clear governance processes, and create incentive structures that encourage feature reuse and standardization across machine learning projects.

Future Evolution and Technology Trends

The feature store landscape continues to evolve rapidly with emerging technologies and changing requirements driving innovation across all major platforms. Real-time machine learning applications are driving demand for more sophisticated streaming feature computation capabilities, while the growth of edge computing scenarios creates requirements for distributed feature serving and synchronization capabilities.

The integration of artificial intelligence into feature store operations represents an emerging trend with significant potential impact on feature engineering productivity and data quality. Automated feature discovery, intelligent feature selection, and AI-powered feature transformation suggestions are becoming increasingly sophisticated, potentially transforming how organizations approach feature engineering workflows.

Standardization efforts within the machine learning operations community are working toward improved interoperability between feature store platforms and other MLOps tools. These initiatives aim to reduce vendor lock-in concerns while enabling more seamless integration across diverse technology stacks and deployment scenarios.

The continued evolution of cloud-native architectures and serverless computing paradigms influences feature store design and deployment patterns. Organizations increasingly expect feature store solutions to provide automatic scaling, minimal operational overhead, and seamless integration with modern application development workflows.

As machine learning applications become more sophisticated and prevalent, feature stores will likely evolve to support more complex feature types, advanced privacy-preserving techniques, and enhanced collaboration capabilities that enable more effective cross-team feature sharing and governance.

Disclaimer

This article is for informational purposes only and does not constitute professional advice. The analysis presented is based on publicly available information and general industry knowledge as of the publication date. Organizations should conduct thorough evaluations based on their specific requirements, constraints, and use cases when selecting feature store solutions. The capabilities, pricing, and features of the discussed platforms may change over time, and readers should verify current information directly with vendors before making implementation decisions.