The evolution of artificial intelligence has reached a critical juncture where the need for sophisticated machine learning models collides with increasingly stringent data privacy requirements and security concerns. Federated learning has emerged as a revolutionary paradigm that addresses these challenges by enabling collaborative AI model training across distributed networks without requiring centralized data sharing. This innovative approach represents a fundamental shift in how organizations can harness the power of collective intelligence while maintaining strict data sovereignty and implementing comprehensive security measures that protect sensitive information throughout the learning process.

The intersection of advanced AI capabilities with robust security frameworks has created unprecedented opportunities for organizations to participate in collaborative learning initiatives while maintaining complete control over their proprietary data assets and ensuring compliance with evolving regulatory requirements.

Understanding Federated Learning Architecture

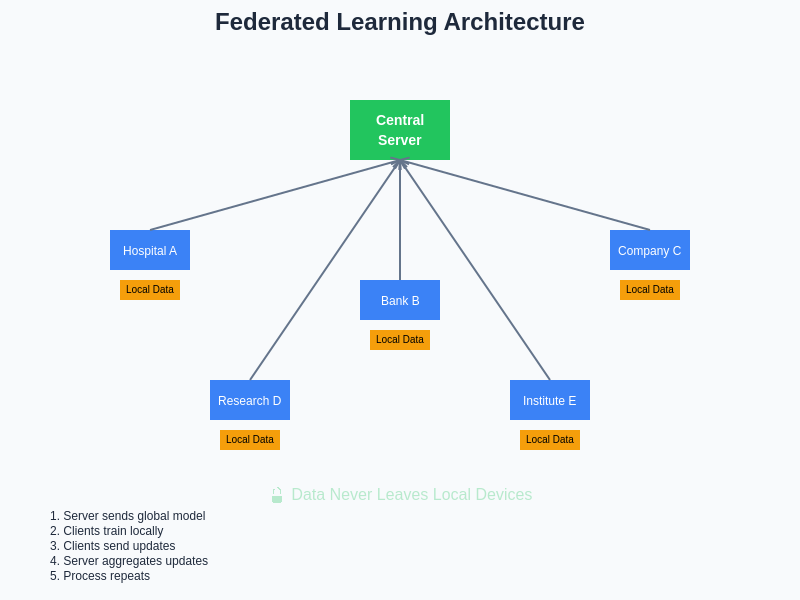

Federated learning fundamentally reimagines the traditional centralized machine learning paradigm by distributing the training process across multiple participating nodes while keeping raw data localized at each participant’s infrastructure. This architectural approach eliminates the need for data centralization, which has historically been a major barrier to collaborative AI development due to privacy concerns, regulatory constraints, and competitive considerations. The federated learning framework operates through a coordinated process where a central server orchestrates the training procedure by distributing model parameters to participating clients, who then perform local training on their private datasets and return only the updated model weights rather than raw data.
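The round described above can be sketched in a few lines. This is a minimal illustration, assuming a toy linear model and size-weighted averaging in the style of federated averaging; the names `local_update`, `federated_average`, and `run_round`, the learning rate, and the toy data are all hypothetical and are not taken from any specific framework.

```python
from typing import List, Tuple

Weights = List[float]
Dataset = List[Tuple[float, float]]

def local_update(global_weights: Weights, data: Dataset,
                 lr: float = 0.1, epochs: int = 5) -> Weights:
    """Hypothetical client step: fit y = w*x + b by gradient descent on local data only."""
    w, b = global_weights
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / len(data)
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / len(data)
        w -= lr * grad_w
        b -= lr * grad_b
    return [w, b]

def federated_average(updates: List[Weights], sizes: List[int]) -> Weights:
    """Server step: average client weights, weighted by local dataset size."""
    total = sum(sizes)
    dim = len(updates[0])
    return [sum(u[i] * n for u, n in zip(updates, sizes)) / total for i in range(dim)]

def run_round(global_weights: Weights, client_datasets: List[Dataset]) -> Weights:
    """One round: send weights out, train locally, return only weights to the server."""
    updates = [local_update(list(global_weights), d) for d in client_datasets]
    sizes = [len(d) for d in client_datasets]
    return federated_average(updates, sizes)

# Toy private datasets drawn from y = 2x + 1; the server never sees these points.
client_datasets = [
    [(0.0, 1.0), (1.0, 3.0)],
    [(2.0, 5.0), (0.5, 2.0), (1.5, 4.0)],
]
weights = [0.0, 0.0]
for _ in range(200):
    weights = run_round(weights, client_datasets)
print(weights)
```

Only the weight vectors cross the client boundary in this sketch. A production system would add the authentication, encryption, and aggregation safeguards discussed in the rest of this article.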

The strength of federated learning architecture lies in its ability to aggregate knowledge from diverse data sources while layering multiple security protections. Each participating node retains control over its data while contributing to a collectively trained model that benefits from the combined insights of all participants. This distributed approach reduces the exposure of raw data and can also improve the robustness and generalizability of the resulting AI models by incorporating diverse data characteristics that no single participant holds, and that could not otherwise be pooled when privacy, regulatory, or competitive constraints rule out centralization.

The technical implementation of federated learning involves algorithms for model aggregation, communication optimization, and convergence across heterogeneous computing environments. The system must handle varying data distributions, network conditions, and computational capabilities while ensuring that the global model still converges to a useful solution, which is not guaranteed when local datasets differ sharply from one another. This complexity requires careful consideration of security measures at every stage of the process to prevent potential vulnerabilities and ensure the integrity of the collaborative learning process.

The architectural framework of federated learning demonstrates how multiple organizations can participate in collaborative AI development while maintaining complete control over their sensitive data. This distributed approach eliminates traditional centralization bottlenecks while implementing comprehensive security measures that protect all participants throughout the learning process.

Core Security Principles in Federated Learning

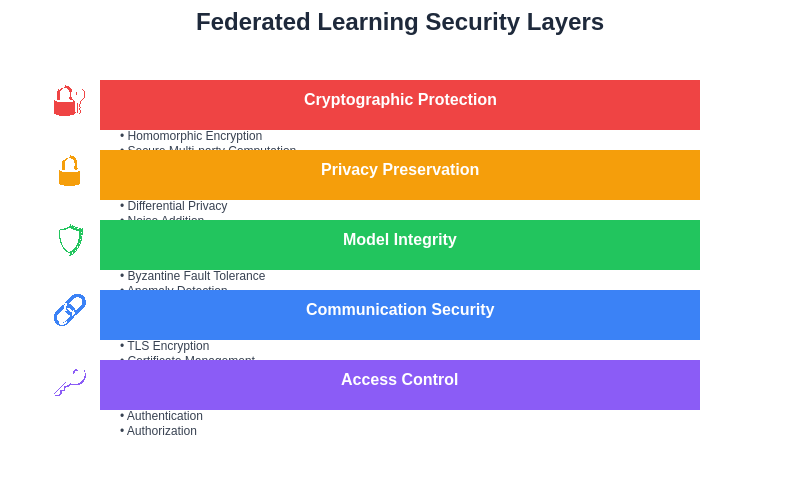

The security framework underlying federated learning is built upon several fundamental principles that work together to protect data privacy, model integrity, and system availability throughout the distributed training process. Privacy preservation serves as the cornerstone principle, ensuring that sensitive information remains within the boundaries of participating organizations while still enabling meaningful collaborative learning. This is achieved through cryptographic techniques, differential privacy mechanisms, and secure aggregation protocols that provide formal, quantifiable privacy protections under stated assumptions, even when some participants are adversarial or communication channels are compromised.

Model integrity represents another critical security principle that ensures the global model remains uncompromised despite the distributed nature of the training process. This involves implementing robust mechanisms for detecting and mitigating various forms of attacks, including model poisoning attempts, backdoor insertions, and adversarial manipulations that could compromise the reliability and trustworthiness of the resulting AI system. The federated learning framework must incorporate sophisticated anomaly detection algorithms, byzantine fault tolerance mechanisms, and consensus protocols that can identify and isolate malicious participants while maintaining the overall learning process.

System availability and resilience constitute essential security considerations that ensure the federated learning network can operate effectively despite network failures, participant dropouts, or targeted attacks designed to disrupt the collaborative learning process. This requires implementing redundant communication pathways, fault-tolerant aggregation algorithms, and dynamic participant management systems that can adapt to changing network conditions while maintaining the security and effectiveness of the overall learning process.

The comprehensive security architecture of federated learning implements multiple defensive layers that work together to protect against various threats while maintaining system functionality. Each layer provides specific protections that complement the others, creating a robust defense-in-depth security posture that can withstand sophisticated attacks and preserve the integrity of the collaborative learning process.

The integration of comprehensive security measures with federated learning protocols creates a robust foundation for organizations to participate in distributed AI initiatives with confidence in their data protection and system security.

Privacy-Preserving Techniques and Cryptographic Protocols

The implementation of privacy-preserving techniques in federated learning relies on statistical and cryptographic methods that provide formal guarantees of data protection while enabling meaningful collaborative learning. Differential privacy is a fundamental statistical technique that adds carefully calibrated noise to model updates, bounding how much any individual data point can influence the aggregated model parameters and therefore how much can be inferred about it. This approach provides quantifiable privacy guarantees, expressed as a privacy budget, at the cost of some statistical utility in the overall learning process.
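The noise-adding step can be illustrated with a common pattern: clip each update to a maximum L2 norm, then add Gaussian noise scaled to that bound. This is a minimal sketch under stated assumptions. The function names, the `clip_norm` and `noise_multiplier` parameters, and their values are hypothetical, and the accounting that turns a noise level into a concrete privacy budget is not shown.

```python
import math
import random
from typing import List

def clip_update(update: List[float], clip_norm: float) -> List[float]:
    """Scale the update down so its L2 norm is at most clip_norm."""
    norm = math.sqrt(sum(v * v for v in update))
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [v * scale for v in update]

def privatize_update(update: List[float], clip_norm: float,
                     noise_multiplier: float, rng: random.Random) -> List[float]:
    """Clip, then add Gaussian noise with std = noise_multiplier * clip_norm.

    Assumption: the pair (clip_norm, noise_multiplier) is chosen by a separate
    privacy analysis; this sketch does not compute epsilon or delta.
    """
    clipped = clip_update(update, clip_norm)
    sigma = noise_multiplier * clip_norm
    return [v + rng.gauss(0.0, sigma) for v in clipped]

rng = random.Random(42)  # fixed seed only to make the example repeatable
raw_update = [3.0, 4.0]  # L2 norm is 5.0
noisy_update = privatize_update(raw_update, clip_norm=1.0, noise_multiplier=1.1, rng=rng)
print(noisy_update)
```

Clipping matters because it bounds the sensitivity of the aggregate to any one contribution; without that bound, no fixed noise level yields a meaningful guarantee.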

Homomorphic encryption is another powerful cryptographic tool that enables computations to be performed on encrypted data without requiring decryption, allowing participants to contribute to the learning process while keeping their model updates encrypted throughout aggregation. Provided the central server does not hold the decryption key, it cannot read the raw model parameters from individual participants, which adds a layer of privacy protection that is particularly valuable in scenarios involving sensitive or regulated data.
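To make the idea of computing on ciphertexts concrete, the sketch below uses a toy version of the Paillier cryptosystem, which is additively homomorphic: multiplying two ciphertexts yields an encryption of the sum of their plaintexts. It is an illustration only. The primes are far too small to be secure, the updates are assumed to be already quantized to non-negative integers, and the key holder is assumed to be a party other than the aggregating server. The article does not name a specific scheme; Paillier is used here as one well-known example.

```python
import math
import random
from typing import Tuple

def keygen(p: int, q: int) -> Tuple[int, Tuple[int, int]]:
    """Return (public n, private (lam, mu)) using generator g = n + 1."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p-1, q-1)
    mu = pow(lam, -1, n)  # modular inverse, Python 3.8+
    return n, (lam, mu)

def encrypt(n: int, m: int, rng: random.Random) -> int:
    """Encrypt integer m (0 <= m < n) as (n+1)^m * r^n mod n^2."""
    n_sq = n * n
    while True:
        r = rng.randrange(1, n)
        if math.gcd(r, n) == 1:
            break
    return (pow(n + 1, m, n_sq) * pow(r, n, n_sq)) % n_sq

def decrypt(n: int, priv: Tuple[int, int], c: int) -> int:
    lam, mu = priv
    x = pow(c, lam, n * n)
    return ((x - 1) // n * mu) % n

def add_encrypted(n: int, c1: int, c2: int) -> int:
    """Homomorphic addition: the product of ciphertexts encrypts the sum."""
    return (c1 * c2) % (n * n)

# Toy primes for illustration only; real deployments use much larger keys.
n, priv = keygen(104729, 1299709)
rng = random.Random(7)

client_values = [120, 345, 27]  # hypothetical quantized update entries
ciphertexts = [encrypt(n, v, rng) for v in client_values]

# The aggregator combines ciphertexts without decrypting any of them.
encrypted_sum = ciphertexts[0]
for c in ciphertexts[1:]:
    encrypted_sum = add_encrypted(n, encrypted_sum, c)

decrypted_sum = decrypt(n, priv, encrypted_sum)
print(decrypted_sum)
```

The cost is visible even in this toy: each scalar becomes a large integer and each addition a modular multiplication, which is the computational and communication overhead that practical deployments must budget for.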

Secure multi-party computation protocols enable multiple parties to jointly compute functions over their private inputs without revealing those inputs to each other or to any external observer. In the context of federated learning, these protocols can be used to perform secure aggregation of model parameters, ensuring that the central server can compute the global model update without gaining access to individual participant contributions. This approach provides strong privacy guarantees while maintaining the mathematical properties necessary for effective model training and convergence.
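One way secure aggregation can work is with pairwise masks: each pair of clients shares a random mask that one adds and the other subtracts, so the masks cancel in the sum while each individual masked update looks random. The sketch below simulates this in a single process. It assumes integer-quantized updates, derives pair masks from a shared string seed purely for illustration (a real protocol would use a key agreement between each pair), and omits dropout recovery. All names are hypothetical.

```python
import random
from typing import Dict, List

MODULUS = 2 ** 32  # all arithmetic is done modulo this value

def pairwise_masks(client_ids: List[str], dim: int, seed: str) -> Dict[str, List[int]]:
    """For each pair (a, b), a adds a shared random vector and b subtracts it."""
    masks = {cid: [0] * dim for cid in client_ids}
    for idx, a in enumerate(client_ids):
        for b in client_ids[idx + 1:]:
            # Stand-in for a secret shared only between a and b.
            pair_rng = random.Random(f"{seed}:{a}:{b}")
            shared = [pair_rng.randrange(MODULUS) for _ in range(dim)]
            for k in range(dim):
                masks[a][k] = (masks[a][k] + shared[k]) % MODULUS
                masks[b][k] = (masks[b][k] - shared[k]) % MODULUS
    return masks

def mask_update(update: List[int], mask: List[int]) -> List[int]:
    return [(u + m) % MODULUS for u, m in zip(update, mask)]

def aggregate(masked_updates: List[List[int]]) -> List[int]:
    """Server-side sum; pairwise masks cancel, leaving only the true total."""
    dim = len(masked_updates[0])
    return [sum(u[k] for u in masked_updates) % MODULUS for k in range(dim)]

updates = {"a": [1, 2], "b": [10, 20], "c": [100, 200]}
masks = pairwise_masks(list(updates), dim=2, seed="round-1")
masked = [mask_update(updates[cid], masks[cid]) for cid in updates]
total = aggregate(masked)
print(total)
```

The server learns the sum and nothing else from the masked vectors, but the sum itself is still revealed, which is why secure aggregation is often paired with differential privacy.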

The integration of these cryptographic techniques requires careful consideration of computational overhead, communication costs, and security trade-offs to ensure that the federated learning system remains practical and efficient while providing robust privacy protection. Advanced implementations often combine multiple techniques in layered security architectures that provide defense-in-depth protection against various types of attacks and privacy breaches.

Defending Against Adversarial Attacks

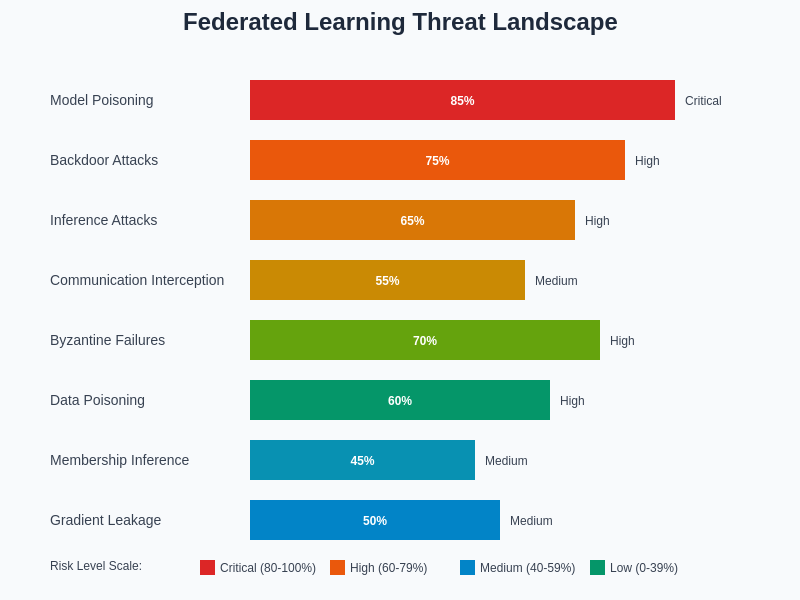

Federated learning systems face unique security challenges due to their distributed nature and the potential for malicious participants to attempt various forms of adversarial attacks. Model poisoning attacks represent a significant threat where malicious participants intentionally submit corrupted model updates designed to degrade the performance of the global model or introduce specific biases that benefit the attacker. Defending against these attacks requires sophisticated anomaly detection mechanisms that can identify unusual patterns in model updates while distinguishing between legitimate variations in local data distributions and malicious manipulations.
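Two simple building blocks for this kind of defense are robust aggregation and norm-based outlier flagging. The sketch below shows a coordinate-wise median, which a single extreme update cannot drag arbitrarily far, and a check that flags updates whose L2 norm is far from the median norm. The threshold and function names are hypothetical, and as the paragraph above notes, an honest client with unusual data may also be flagged, so a flag is a signal for review rather than proof of malice.

```python
import math
import statistics
from typing import List

def coordinate_median(updates: List[List[float]]) -> List[float]:
    """Aggregate by taking the median of each coordinate across clients."""
    return [statistics.median(column) for column in zip(*updates)]

def flag_outliers(updates: List[List[float]], threshold: float = 3.0) -> List[bool]:
    """Flag updates whose norm deviates from the median norm by more than
    threshold times the median absolute deviation (MAD)."""
    norms = [math.sqrt(sum(v * v for v in u)) for u in updates]
    med = statistics.median(norms)
    mad = statistics.median([abs(n - med) for n in norms])
    scale = mad if mad > 0 else 1e-12  # avoid a zero tolerance band
    return [abs(n - med) > threshold * scale for n in norms]

updates = [
    [1.0, 1.0],
    [1.1, 0.9],
    [0.9, 1.1],
    [50.0, -50.0],  # hypothetical poisoned update
]
robust = coordinate_median(updates)
flags = flag_outliers(updates)
print(robust, flags)
```

A plain mean of these four updates would be pulled to roughly 13 and -12 by the last client, while the median stays close to the honest values.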

Backdoor attacks pose another serious security concern where adversarial participants attempt to insert hidden triggers into the global model that can be activated later to cause specific misclassifications or unauthorized behaviors. These attacks are particularly challenging to detect because the backdoor functionality may remain dormant during normal operation while being triggered only by specific input patterns known to the attacker. Effective defense mechanisms must incorporate comprehensive model validation, adversarial testing, and behavioral analysis techniques that can identify and neutralize potential backdoors before they compromise the system.

Inference attacks represent a more subtle but equally important security consideration where adversarial participants or external observers attempt to extract sensitive information about other participants’ data through careful analysis of model updates, communication patterns, or behavioral characteristics. Protecting against these attacks requires implementing comprehensive privacy protection measures, including differential privacy, secure aggregation, and communication obfuscation techniques that limit the amount of information that can be inferred from observable system behaviors.

The development of robust defense mechanisms requires a comprehensive understanding of the threat landscape, including both existing attack vectors and emerging threats that may evolve as federated learning systems become more widespread. This necessitates continuous research and development of new security techniques, regular security audits and assessments, and the implementation of adaptive security measures that can evolve to address new threats as they emerge.

The threat landscape facing federated learning systems encompasses a wide range of attack vectors with varying risk levels and potential impacts. Understanding these threats and their relative severity is essential for developing effective security strategies and implementing appropriate defensive measures that can protect against the most significant risks while maintaining system usability and performance.

Data Governance and Compliance Frameworks

The implementation of federated learning in enterprise environments requires careful consideration of data governance frameworks and regulatory compliance requirements that vary significantly across industries, jurisdictions, and types of data being processed. Organizations must establish comprehensive governance structures that define clear responsibilities for data protection, model security, and compliance monitoring throughout the federated learning lifecycle. This includes developing detailed policies for participant selection, data quality assurance, model validation, and incident response procedures that ensure consistent application of security and privacy protections across all aspects of the federated learning system.

Regulatory compliance represents a critical consideration that must be addressed through careful analysis of applicable laws and regulations, including data protection regulations such as GDPR, CCPA, and industry-specific requirements for healthcare, financial services, and other regulated sectors. The distributed nature of federated learning introduces additional complexity in determining jurisdiction, data residency requirements, and cross-border data transfer restrictions that must be carefully navigated to ensure full compliance with all applicable regulations.

The establishment of comprehensive audit trails and monitoring capabilities is essential for demonstrating compliance with regulatory requirements and providing transparency into the federated learning process. This includes detailed logging of all system activities, model updates, participant interactions, and security events that can be used to verify compliance with established policies and regulations. Advanced monitoring systems must also provide real-time alerting capabilities that can detect potential compliance violations or security incidents as they occur, enabling rapid response and remediation.

The dynamic nature of regulatory environments requires continuous monitoring and adaptation of governance frameworks to ensure ongoing compliance and effective risk management.

Implementation Challenges and Solutions

The practical implementation of secure federated learning systems presents numerous technical and organizational challenges that must be carefully addressed to ensure successful deployment and operation. Communication efficiency represents a fundamental challenge, as the iterative nature of federated learning can generate substantial network traffic that may become prohibitive in bandwidth-constrained environments or when dealing with large numbers of participants. Advanced compression techniques, selective participation strategies, and optimized communication protocols are essential for minimizing bandwidth requirements while maintaining the effectiveness of the learning process.
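As one example of the compression techniques mentioned above, top-k sparsification sends only the largest-magnitude entries of an update along with their indices. The sketch below is a minimal illustration; the function names are hypothetical, and practical schemes usually also accumulate the dropped residual locally so it is not lost, which is omitted here.

```python
from typing import Dict, List

def top_k_sparsify(update: List[float], k: int) -> Dict[int, float]:
    """Keep the k entries with the largest absolute value, keyed by index."""
    ranked = sorted(range(len(update)), key=lambda i: abs(update[i]), reverse=True)
    return {i: update[i] for i in sorted(ranked[:k])}

def densify(sparse: Dict[int, float], dim: int) -> List[float]:
    """Rebuild a full-length vector on the server, filling gaps with zeros."""
    dense = [0.0] * dim
    for i, v in sparse.items():
        dense[i] = v
    return dense

update = [0.01, -2.0, 0.3, 1.5, -0.02]
sparse = top_k_sparsify(update, k=2)
restored = densify(sparse, dim=len(update))
print(sparse, restored)
```

Here the client transmits two index-value pairs instead of five values; on models with millions of parameters the same idea can cut traffic substantially, at the cost of a less precise update per round.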

Heterogeneity across participating nodes presents another significant implementation challenge, as different organizations may operate diverse computing infrastructures, data formats, and security requirements that must be accommodated within a unified federated learning framework. This requires developing flexible system architectures that can adapt to varying computational capabilities, storage constraints, and network conditions while maintaining consistent security and privacy protections across all participants.

The coordination and orchestration of large-scale federated learning deployments requires sophisticated management systems that can handle dynamic participant enrollment, load balancing, fault tolerance, and performance optimization across distributed environments. These systems must provide comprehensive monitoring and management capabilities while maintaining the security and privacy principles that are fundamental to federated learning success.

Quality assurance and model validation in federated learning environments require specialized techniques that can assess model performance and reliability without accessing the underlying training data. This includes developing novel evaluation methodologies, benchmark datasets, and validation frameworks that can provide meaningful insights into model quality while respecting the privacy constraints inherent in federated learning systems.
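One simple way to evaluate without centralizing data is for each participant to score the global model on its own held-out examples and report only summary counts. The sketch below assumes a classification task and a hypothetical `predict` callable standing in for the global model. Note that even counts can leak information when a local test set is very small, so this is a starting point rather than a complete validation framework.

```python
from typing import Callable, List, Tuple

def local_evaluate(predict: Callable[[float], bool],
                   examples: List[Tuple[float, bool]]) -> Tuple[int, int]:
    """Client side: return (number correct, number evaluated), never the examples."""
    correct = sum(1 for x, y in examples if predict(x) == y)
    return correct, len(examples)

def aggregate_accuracy(reports: List[Tuple[int, int]]) -> float:
    """Server side: combine per-client counts into one global accuracy."""
    correct = sum(c for c, _ in reports)
    total = sum(n for _, n in reports)
    return correct / total if total else 0.0

def predict(x: float) -> bool:
    """Hypothetical global model: a fixed threshold classifier."""
    return x >= 0.5

client_a = [(0.9, True), (0.1, False), (0.6, False)]
client_b = [(0.2, False), (0.7, True)]
reports = [local_evaluate(predict, client_a), local_evaluate(predict, client_b)]
accuracy = aggregate_accuracy(reports)
print(reports, accuracy)
```

Weighting by example count, rather than averaging per-client accuracies, keeps a client with very few examples from dominating the global metric.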

Industry Applications and Use Cases

The application of secure federated learning spans numerous industries and use cases where collaborative AI development can provide significant benefits while addressing critical privacy and security requirements. Healthcare represents one of the most promising application domains, where federated learning enables medical institutions to collaboratively develop diagnostic AI models, drug discovery algorithms, and treatment optimization systems without sharing sensitive patient data. This approach allows healthcare organizations to benefit from collective medical knowledge while maintaining strict compliance with healthcare privacy regulations and protecting patient confidentiality.

Financial services organizations have embraced federated learning for fraud detection, risk assessment, and regulatory compliance applications where the ability to leverage collective intelligence without sharing sensitive financial data provides significant competitive advantages. Banks and financial institutions can collaborate to develop more sophisticated fraud detection models that benefit from diverse transaction patterns and attack vectors while maintaining the confidentiality of customer information and proprietary business data.

The telecommunications industry has implemented federated learning for network optimization, predictive maintenance, and customer experience enhancement applications where multiple operators can collaborate to improve service quality while protecting competitive information. This collaborative approach enables the development of more robust network management algorithms that can adapt to diverse network conditions and usage patterns across different operators and geographic regions.

Manufacturing organizations utilize federated learning for predictive maintenance, quality control, and supply chain optimization applications where collaborative learning can improve operational efficiency while protecting proprietary manufacturing processes and competitive intelligence. This approach enables manufacturers to benefit from collective operational knowledge while maintaining the security of sensitive production data and trade secrets.

Technical Infrastructure and Architecture Considerations

The design and implementation of federated learning infrastructure requires careful consideration of numerous technical factors that impact security, performance, and scalability. The central server architecture must be designed to handle large numbers of concurrent participants while providing robust security protections, fault tolerance, and efficient model aggregation capabilities. This includes implementing sophisticated load balancing mechanisms, redundant processing capabilities, and secure communication protocols that can maintain system performance even under adverse conditions.

Client-side infrastructure considerations include the development of lightweight federated learning frameworks that can operate effectively on diverse computing platforms, from high-performance data centers to resource-constrained edge devices. These frameworks must provide consistent security protections while adapting to varying computational capabilities, storage limitations, and network connectivity constraints that characterize different deployment environments.

The network infrastructure supporting federated learning must provide reliable, secure, and efficient communication channels that can handle the iterative nature of the learning process while protecting against various forms of network-based attacks. This includes implementing advanced encryption protocols, intrusion detection systems, and traffic analysis protection mechanisms that ensure the security and privacy of all communications between participants and the central coordination server.

Storage and data management systems must be designed to support the unique requirements of federated learning, including secure model parameter storage, efficient data processing capabilities, and comprehensive audit logging that enables security monitoring and compliance verification. These systems must also provide robust backup and recovery capabilities that can ensure system continuity even in the event of hardware failures or security incidents.

Future Directions and Emerging Trends

The evolution of federated learning security continues to advance through ongoing research and development efforts that address emerging threats, improve efficiency, and expand the applicability of privacy-preserving collaborative learning. Advanced cryptographic and hardware-based techniques, including zero-knowledge proofs, secure enclaves (trusted execution environments), and quantum-resistant algorithms, are being integrated into federated learning frameworks to provide stronger privacy guarantees and protection against evolving attack vectors.

The integration of artificial intelligence and machine learning techniques into federated learning security systems is enabling more sophisticated threat detection, automated response capabilities, and adaptive security measures that can evolve to address new challenges as they emerge. These intelligent security systems can analyze patterns in system behavior, participant interactions, and model performance to identify potential security issues before they impact the overall system.

Edge computing and Internet of Things applications are driving the development of more efficient and lightweight federated learning protocols that can operate effectively in resource-constrained environments while maintaining robust security protections. This includes the development of specialized hardware accelerators, optimized communication protocols, and energy-efficient algorithms that can support federated learning deployments across diverse edge computing environments.

The standardization of federated learning protocols, security frameworks, and interoperability standards is facilitating broader adoption and enabling more effective collaboration between organizations and technology providers. These standardization efforts are creating common frameworks that can ensure consistent security protections while enabling seamless integration between different federated learning implementations and platforms.

Conclusion and Strategic Implications

Federated learning represents a transformative approach to artificial intelligence development that addresses critical privacy and security challenges while enabling unprecedented levels of collaborative innovation. The security frameworks and techniques that underpin federated learning systems provide robust protection for sensitive data while facilitating the development of more sophisticated and effective AI models that benefit from diverse data sources and perspectives.

The strategic implications of secure federated learning extend far beyond technical considerations to encompass fundamental changes in how organizations approach data sharing, competitive collaboration, and regulatory compliance in an increasingly interconnected digital economy. Organizations that successfully implement federated learning capabilities can gain significant competitive advantages while contributing to collective innovation efforts that benefit entire industries and society as a whole.

The continued evolution of federated learning security will require ongoing investment in research and development, collaboration between industry and academia, and the establishment of comprehensive governance frameworks that can ensure the responsible and effective deployment of these powerful technologies. As federated learning becomes more widespread and sophisticated, its impact on artificial intelligence development and data privacy protection will continue to grow, creating new opportunities for innovation while addressing some of the most pressing challenges facing the digital economy.

Disclaimer

This article is for informational purposes only and does not constitute professional security, legal, or technical advice. The views expressed are based on current understanding of federated learning technologies and security practices. Readers should conduct their own research and consult with qualified professionals when implementing federated learning systems. Security requirements and regulatory compliance obligations may vary significantly based on specific use cases, industries, and jurisdictions. The effectiveness of security measures may vary depending on implementation details, threat environments, and evolving attack vectors.