Conventional wisdom in machine learning has long held that models need thousands or millions of labeled examples to perform well. Few-shot learning challenges that assumption: a model that has already acquired broad prior knowledge, through pre-training or meta-training, can adapt to a new task from just a handful of carefully selected examples. The approach mirrors human learning, where we generalize quickly from minimal exposure to a new concept, and it is changing how practitioners approach machine learning problems across diverse domains.

Few-shot learning represents a paradigm shift from data-hungry models to intelligent systems capable of rapid adaptation and generalization, opening unprecedented possibilities for AI applications in resource-constrained environments and specialized domains where extensive training data remains scarce or expensive to obtain.

Understanding the Foundation of Few-Shot Learning

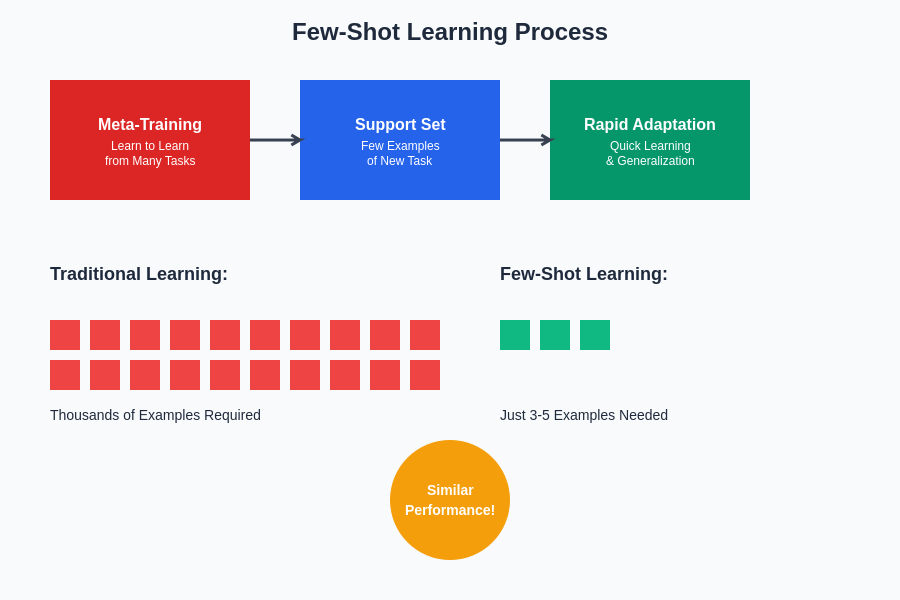

Few-shot learning fundamentally reimagines the traditional machine learning paradigm by focusing on the development of models that can rapidly adapt to new tasks using minimal training examples. Unlike conventional supervised learning approaches that require extensive datasets to achieve optimal performance, few-shot learning systems are designed to leverage prior knowledge and meta-learning strategies to generalize effectively from limited data. This approach draws inspiration from human cognitive abilities, where we can quickly learn new concepts by building upon existing knowledge structures and making intelligent inferences from sparse information.

The theoretical foundation of few-shot learning rests on several key principles that distinguish it from traditional machine learning methodologies. Meta-learning, often referred to as “learning to learn,” forms the cornerstone of few-shot approaches by training models to acquire general learning strategies that can be rapidly applied to new tasks. This meta-knowledge enables systems to identify relevant patterns and relationships within minimal examples, facilitating quick adaptation without extensive retraining. The integration of transfer learning principles further enhances few-shot capabilities by leveraging knowledge gained from related domains to inform decision-making in new contexts with limited data availability.

The Mechanics of Rapid Adaptation

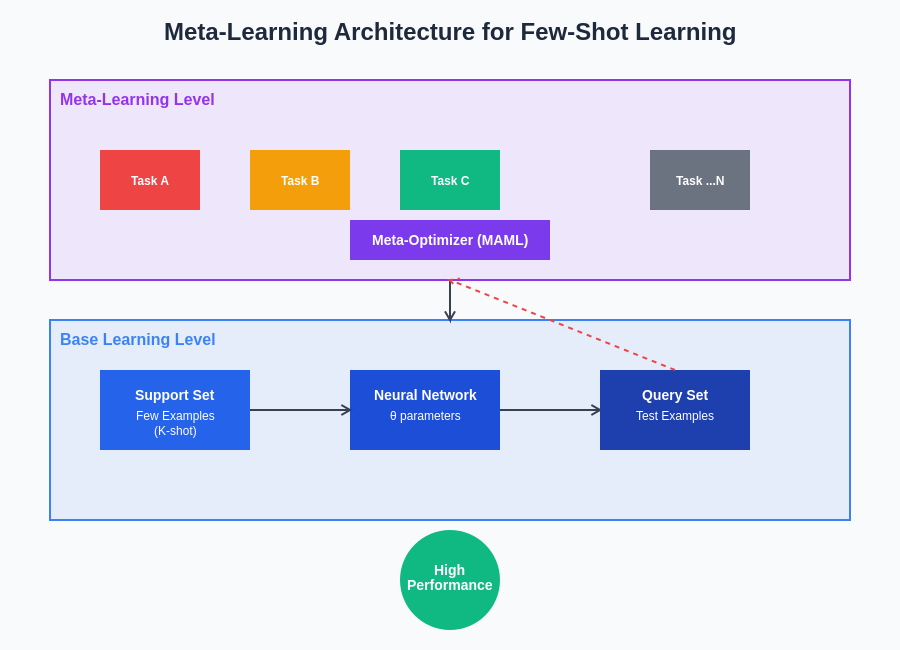

The efficiency of few-shot learning systems stems from architectures and training procedures designed for rapid adaptation. Model-Agnostic Meta-Learning (MAML) is a representative example: it trains a neural network's initial parameters so that a few gradient descent steps on a handful of examples from a new task produce good performance on that task. The learned initialization is not a solution to any single task but a favorable starting point for rapid specialization across diverse problem domains.
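To make the inner and outer loops of MAML concrete, here is a minimal sketch on a toy problem. Everything about the setup is an assumption chosen for illustration: the tasks are linear regressions whose true weights cluster around a hypothetical `TASK_CENTER`, the model is a plain weight vector rather than a neural network, and the second-order term is computed analytically because the loss is quadratic. It is not a reference implementation of the algorithm.

```python
import numpy as np

# Hypothetical task family: linear regression tasks whose true weights
# cluster around a shared centre (an assumption made for this toy example).
TASK_CENTER = np.array([2.0, -1.0, 0.5])


def mse_loss(w, X, y):
    return float(np.mean((X @ w - y) ** 2))


def mse_grad(w, X, y):
    return 2.0 * X.T @ (X @ w - y) / len(y)


def sample_task(rng, n_support=5, n_query=20):
    """Return ((X_support, y_support), (X_query, y_query)) for one task."""
    w_true = TASK_CENTER + 0.3 * rng.normal(size=TASK_CENTER.size)
    X = rng.normal(size=(n_support + n_query, TASK_CENTER.size))
    y = X @ w_true
    return (X[:n_support], y[:n_support]), (X[n_support:], y[n_support:])


def adapt(w, support, inner_lr):
    """Inner loop: one gradient step on the support set."""
    Xs, ys = support
    return w - inner_lr * mse_grad(w, Xs, ys)


def maml_meta_gradient(w, support, query, inner_lr):
    """Gradient of the post-adaptation query loss w.r.t. the initialization."""
    Xs, ys = support
    Xq, yq = query
    w_adapted = adapt(w, support, inner_lr)
    # For a quadratic loss the Hessian is constant, so the Jacobian of the
    # adapted weights with respect to w is I - inner_lr * H.
    hessian = 2.0 * Xs.T @ Xs / len(ys)
    jacobian = np.eye(w.size) - inner_lr * hessian
    return jacobian.T @ mse_grad(w_adapted, Xq, yq)


def meta_train(steps=500, tasks_per_step=8, inner_lr=0.05, outer_lr=0.05, seed=0):
    """Outer loop: average meta-gradients over a batch of sampled tasks."""
    rng = np.random.default_rng(seed)
    w = np.zeros(TASK_CENTER.size)
    for _ in range(steps):
        grads = [maml_meta_gradient(w, *sample_task(rng), inner_lr)
                 for _ in range(tasks_per_step)]
        w = w - outer_lr * np.mean(grads, axis=0)
    return w


def post_adaptation_loss(w_init, inner_lr=0.05, n_tasks=200, seed=1):
    """Average query loss after one adaptation step from w_init."""
    rng = np.random.default_rng(seed)
    losses = []
    for _ in range(n_tasks):
        support, query = sample_task(rng)
        losses.append(mse_loss(adapt(w_init, support, inner_lr), *query))
    return float(np.mean(losses))


w_meta = meta_train()
```

Comparing `post_adaptation_loss(w_meta)` with `post_adaptation_loss(np.zeros(3))` shows the point of the method in this toy setting: the same single gradient step on five examples goes much further from the meta-learned starting point than from an uninformed one.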

Memory-augmented networks represent another crucial advancement in few-shot learning, incorporating external memory mechanisms that allow models to store and retrieve relevant information across different learning episodes. These systems can maintain persistent knowledge about task structures and solution patterns, enabling more effective generalization when encountering new but related challenges with limited training examples.
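The store-and-retrieve idea behind an external memory can be sketched in a few lines. The `KeyValueMemory` class below is a hypothetical illustration, not the design of any particular published architecture: keys and values are plain vectors, and a read returns a softmax-weighted blend of stored values based on cosine similarity to the query. In a real memory-augmented network the keys, values, and read weights would be produced by trained components.

```python
import numpy as np


class KeyValueMemory:
    """Toy external memory with content-based (cosine similarity) reads."""

    def __init__(self):
        self.keys = []
        self.values = []

    def write(self, key, value):
        key = np.asarray(key, dtype=float)
        self.keys.append(key / np.linalg.norm(key))  # store unit-length keys
        self.values.append(np.asarray(value, dtype=float))

    def read(self, query, temperature=0.1):
        query = np.asarray(query, dtype=float)
        query = query / np.linalg.norm(query)
        sims = np.stack(self.keys) @ query           # cosine similarities
        logits = sims / temperature
        weights = np.exp(logits - logits.max())      # numerically stable softmax
        weights /= weights.sum()
        return weights @ np.stack(self.values)       # blended stored values


memory = KeyValueMemory()
memory.write([1.0, 0.0], [1.0, 0.0, 0.0])  # e.g. a one-hot label for class 0
memory.write([0.0, 1.0], [0.0, 1.0, 0.0])  # e.g. a one-hot label for class 1
readout = memory.read([0.9, 0.1])          # query close to the first key
```

A lower `temperature` makes the read behave more like a hard nearest-neighbor lookup, while a higher one blends more of the stored entries.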

Applications in Computer Vision and Image Recognition

Computer vision represents one of the most successful application domains for few-shot learning techniques, where the ability to recognize and classify new object categories from minimal examples has profound practical implications. Traditional image classification systems typically require hundreds or thousands of labeled examples per category to achieve acceptable performance, making them impractical for specialized applications where extensive data collection is challenging or expensive. Few-shot learning approaches have demonstrated remarkable success in scenarios such as medical image analysis, where rare conditions may have limited available imagery, and wildlife conservation efforts that require identification of endangered species from sparse photographic evidence.

The implementation of few-shot learning in computer vision often relies on embedding techniques that map images into a learned feature space where semantic similarity can be measured as distance. Prototypical networks exemplify this approach: each class is represented by a prototype, computed as the mean of the embeddings of its available examples, and a new instance is assigned to the class whose prototype is nearest in the learned space. Siamese networks and relation networks take a related route by learning to compare image pairs and score their similarity, which supports robust classification even when training data is severely limited.
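The prototype-and-distance step can be sketched as follows. This assumes the embeddings have already been produced by some trained encoder, which is not shown; the function names `class_prototypes` and `classify` and the tiny 2-D embeddings are made up for illustration.

```python
import numpy as np


def class_prototypes(support_emb, support_labels):
    """Prototype of each class = mean of its support embeddings."""
    classes = np.unique(support_labels)
    protos = np.stack([support_emb[support_labels == c].mean(axis=0)
                       for c in classes])
    return classes, protos


def classify(query_emb, classes, protos):
    """Assign each query to the class with the nearest prototype."""
    # Squared Euclidean distance between every query and every prototype.
    dists = ((query_emb[:, None, :] - protos[None, :, :]) ** 2).sum(axis=-1)
    return classes[dists.argmin(axis=1)]


# A 2-way, 2-shot episode with hand-written 2-D "embeddings".
support_emb = np.array([[0.0, 0.0], [0.0, 2.0], [4.0, 4.0], [6.0, 4.0]])
support_labels = np.array([0, 0, 1, 1])
query_emb = np.array([[1.0, 1.0], [4.0, 3.0]])

classes, protos = class_prototypes(support_emb, support_labels)
predictions = classify(query_emb, classes, protos)  # -> array([0, 1])
```

Because the classifier is just a mean and a distance, adding a new class at test time requires only a few embedded examples and no retraining of the encoder.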

The visual representation of few-shot learning demonstrates how models can rapidly adapt their understanding based on minimal examples, contrasting sharply with traditional learning approaches that require extensive datasets. This efficient learning process enables practical deployment in scenarios where data collection is challenging or expensive, revolutionizing applications across diverse domains from medical diagnostics to autonomous systems.

Natural Language Processing and Text Understanding

Natural language processing has seen major advances through few-shot learning, particularly in large language models that adapt to new tasks with minimal task-specific examples. With prompt-based (in-context) learning, models like GPT-3 and its successors pick up a task from a few worked examples placed directly in the input prompt, with no gradient updates to the model's weights and no fine-tuning on a task-specific dataset. This enables rapid adaptation to diverse language tasks including translation, summarization, question answering, and creative writing with just a few demonstrative examples.
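A few-shot prompt is ordinary text assembled from an instruction, some worked examples, and the new input. The helper below is a minimal sketch: the `Input:` / `Output:` layout and the function name `build_few_shot_prompt` are assumptions for illustration, not a format required by any particular model, and no model API is called.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Assemble an instruction, worked examples, and a new input into one prompt."""
    lines = [instruction, ""]
    for text, label in examples:
        lines += [f"Input: {text}", f"Output: {label}", ""]
    # The prompt ends with an open "Output:" for the model to complete.
    lines += [f"Input: {query}", "Output:"]
    return "\n".join(lines)


prompt = build_few_shot_prompt(
    "Classify the sentiment of each review as positive or negative.",
    [("The battery lasts all day.", "positive"),
     ("It broke after a week.", "negative")],
    "Setup was quick and painless.",
)
```

The choice and ordering of the examples are the main levers available here, since the model itself is left unchanged.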

The success of few-shot learning in natural language processing stems from the rich semantic representations learned during pre-training on massive text corpora, which provide models with comprehensive understanding of language structures, relationships, and contextual patterns. When presented with new tasks, these models can leverage their extensive background knowledge to interpret instructions and examples, generating appropriate responses without explicit training on task-specific data. This capability has revolutionized how we approach natural language applications, enabling rapid deployment of language models for specialized domains and tasks without the traditional requirement for large annotated datasets.

Meta-Learning Strategies and Algorithmic Innovations

The theoretical foundation of few-shot learning relies heavily on sophisticated meta-learning algorithms that enable models to acquire general learning strategies applicable across diverse task domains. Gradient-based meta-learning approaches, exemplified by algorithms like MAML and Reptile, focus on finding optimal parameter initializations that facilitate rapid adaptation through a small number of gradient steps when encountering new tasks. These methods train models to develop sensitivity to task-specific adjustments, creating neural networks that can quickly specialize their behavior based on minimal examples while maintaining their general learning capabilities.
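Reptile's update rule is simple enough to sketch directly: adapt a copy of the weights to one sampled task with a few gradient steps, then move the shared initialization a fraction of the way toward the adapted weights. The toy linear-regression task family, the hypothetical `TASK_CENTER`, and all hyperparameters below are assumptions for illustration only.

```python
import numpy as np

# Hypothetical task family: regression tasks with weights near a shared centre.
TASK_CENTER = np.array([2.0, -1.0, 0.5])


def sample_task(rng, n_points=10):
    w_true = TASK_CENTER + 0.3 * rng.normal(size=TASK_CENTER.size)
    X = rng.normal(size=(n_points, TASK_CENTER.size))
    return X, X @ w_true


def inner_steps(w, X, y, lr=0.05, steps=5):
    """A few full-batch gradient steps on one task's mean squared error."""
    for _ in range(steps):
        w = w - lr * 2.0 * X.T @ (X @ w - y) / len(y)
    return w


def reptile(meta_steps=300, meta_lr=0.1, seed=0):
    rng = np.random.default_rng(seed)
    w = np.zeros(TASK_CENTER.size)
    for _ in range(meta_steps):
        X, y = sample_task(rng)
        w_task = inner_steps(w, X, y)
        # Reptile update: interpolate toward the task-adapted weights.
        w = w + meta_lr * (w_task - w)
    return w


w_init = reptile()
```

Unlike MAML, this update never differentiates through the inner loop, which is why Reptile is often described as a first-order method.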

Learned-optimizer approaches form another branch of optimization-based meta-learning: instead of learning only an initialization and relying on a fixed algorithm such as gradient descent, the model learns the update rule itself. This allows adaptation strategies that account for task-specific characteristics, which can improve performance when learning from limited examples. Memory-augmented approaches extend meta-learning in a different direction by incorporating external memory systems that store and retrieve relevant information across learning episodes, creating persistent knowledge structures that inform future learning.

The integration of attention mechanisms and transformer architectures has further revolutionized meta-learning approaches, enabling models to selectively focus on relevant information within support sets and query examples, improving their ability to identify crucial patterns and relationships that facilitate effective generalization from minimal training data.

Challenges and Limitations in Implementation

Despite the remarkable successes of few-shot learning, several significant challenges and limitations continue to impact the practical implementation and performance of these systems. The fundamental trade-off between generalization capability and task-specific performance remains a central concern, as few-shot models must balance their ability to adapt quickly to new tasks with the need to achieve competitive performance compared to models trained with extensive task-specific data. This balance is particularly challenging in domains where high accuracy is critical, such as medical diagnosis or safety-critical autonomous systems, where the consequences of suboptimal performance can be severe.

The quality and representativeness of the few available training examples significantly impact the effectiveness of few-shot learning systems, creating vulnerability to selection bias and domain shift. When the limited training examples do not adequately represent the full complexity and variability of the target task, few-shot models may fail to generalize effectively to real-world scenarios. Additionally, the meta-training phase required for few-shot learning systems often demands extensive computational resources and carefully curated task distributions, potentially limiting the accessibility and practical deployment of these approaches in resource-constrained environments.

Evaluation Metrics and Performance Assessment

The evaluation of few-shot learning systems requires metrics and protocols that account for the constraints of learning from minimal examples. A single train/test split, as used in conventional evaluation, does not capture how a model behaves when it must adapt repeatedly to new tasks, so few-shot benchmarks evaluate over many small tasks, or episodes. Results are commonly reported in N-way K-shot terms, where N is the number of classes in an episode and K is the number of labeled support examples per class. Measuring accuracy across several values of K shows how performance scales with the amount of available data and helps identify suitable operating points for different applications.
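Few-shot benchmarks are usually organized into episodes, often described as N-way K-shot: N classes, with K labeled support examples per class and a separate set of query examples to score. The sampler below is a minimal sketch of that setup over a labeled dataset; the function name `sample_episode` and its parameters are assumptions for illustration.

```python
import numpy as np


def sample_episode(labels, n_way, k_shot, n_query, rng):
    """Return index arrays (support, query) for one N-way K-shot episode."""
    labels = np.asarray(labels)
    classes = rng.choice(np.unique(labels), size=n_way, replace=False)
    support, query = [], []
    for c in classes:
        idx = rng.permutation(np.flatnonzero(labels == c))
        support.extend(idx[:k_shot])                  # K labeled examples
        query.extend(idx[k_shot:k_shot + n_query])    # held out for scoring
    return np.array(support), np.array(query)


# Toy dataset: 10 classes with 20 examples each.
labels = np.repeat(np.arange(10), 20)
rng = np.random.default_rng(0)
support_idx, query_idx = sample_episode(labels, n_way=5, k_shot=1, n_query=15, rng=rng)
```

Repeating this sampling with different values of `k_shot` gives the accuracy-versus-shots curve that few-shot papers typically report.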

Cross-task generalization represents another crucial evaluation dimension for few-shot learning systems, assessing how well models trained on one set of tasks can adapt to completely novel task domains. This evaluation approach provides insights into the transferability and robustness of learned meta-knowledge, helping researchers understand the boundaries and limitations of few-shot learning capabilities. Statistical significance testing becomes particularly important in few-shot evaluation due to the high variance that can result from limited training examples, requiring careful experimental design and multiple evaluation runs to establish reliable performance assessments.
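Because single-episode accuracy is noisy, results are commonly summarized as a mean over many episodes together with a confidence interval. The sketch below uses a normal approximation (mean plus or minus 1.96 standard errors) for a 95% interval; the function name `summarize_episodes` is an assumption, and the approximation is only reasonable when the number of episodes is large.

```python
import numpy as np


def summarize_episodes(accuracies):
    """Mean accuracy and 95% confidence half-width (normal approximation)."""
    acc = np.asarray(accuracies, dtype=float)
    mean = acc.mean()
    sem = acc.std(ddof=1) / np.sqrt(len(acc))  # standard error of the mean
    return float(mean), float(1.96 * sem)


# Simulated per-episode accuracies standing in for real evaluation runs.
rng = np.random.default_rng(0)
episode_acc = np.clip(rng.normal(loc=0.62, scale=0.10, size=600), 0.0, 1.0)
mean_acc, half_width = summarize_episodes(episode_acc)
print(f"accuracy = {mean_acc:.3f} +/- {half_width:.3f}")
```

Two methods whose intervals overlap heavily should not be ranked on the basis of their mean accuracy alone.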

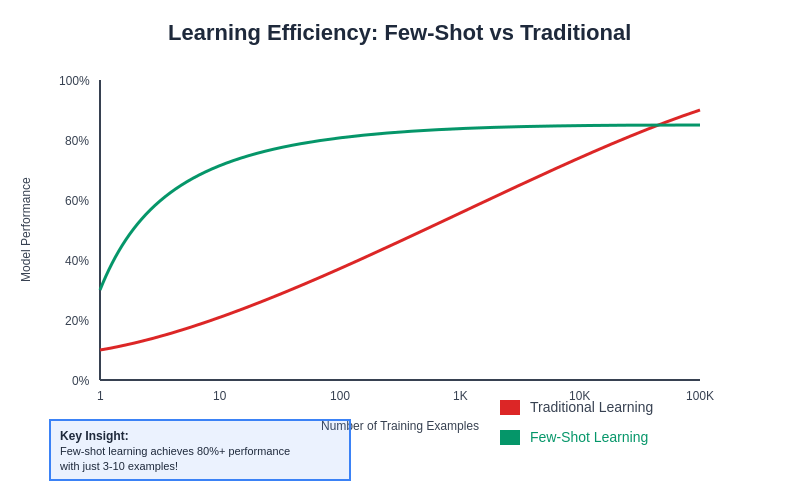

The comparative analysis demonstrates the remarkable efficiency advantages of few-shot learning approaches over traditional machine learning methods, particularly in scenarios where training data is limited or expensive to obtain. This efficiency enables practical deployment of AI systems in previously inaccessible domains and applications, expanding the reach and impact of artificial intelligence technologies across diverse industries and research areas.

Emerging Applications and Future Directions

The practical applications of few-shot learning continue to expand across diverse domains, driven by the increasing recognition of its potential to address real-world challenges where extensive training data is unavailable or impractical to collect. Personalized medicine represents a particularly promising application area, where few-shot learning systems can adapt to individual patient characteristics and rare medical conditions using limited clinical data. These systems can leverage existing medical knowledge while adapting to patient-specific factors, potentially improving diagnostic accuracy and treatment recommendations in cases where traditional approaches fail due to data scarcity.

Robotics and autonomous systems present another frontier for few-shot learning applications, where robots must quickly adapt to new environments, tasks, and operational requirements without extensive retraining. Few-shot learning enables robots to generalize from limited demonstrations or examples, facilitating rapid adaptation to new manipulation tasks, navigation challenges, or human-robot interaction scenarios. This capability is crucial for developing practical robotic systems that can operate effectively in dynamic, real-world environments where comprehensive pre-programming is impossible.

Theoretical Foundations and Mathematical Frameworks

The mathematical foundations underlying few-shot learning draw from diverse areas including information theory, Bayesian inference, and optimization theory to create robust frameworks for learning from limited data. Information-theoretic approaches focus on maximizing the information extracted from each training example, so that models make efficient use of the available data through their encoding and representation strategies. Under explicit assumptions about the task distribution, such frameworks can yield bounds on learning performance, and they help guide the development of more effective few-shot algorithms.

Bayesian approaches to few-shot learning leverage probabilistic modeling to incorporate uncertainty and prior knowledge into the learning process, enabling more robust generalization from limited examples. These methods treat model parameters as random variables with associated uncertainty distributions, allowing systems to make informed predictions while quantifying confidence levels. The integration of hierarchical Bayesian models further enhances few-shot learning by enabling the sharing of statistical strength across related tasks and domains, improving overall learning efficiency and performance.
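The simplest worked case of combining a prior with a few observations is the conjugate Gaussian model: an unknown mean with a Gaussian prior, observed through Gaussian noise of known variance. The sketch below computes the posterior in closed form; the function name `gaussian_posterior` and the example numbers are made up for illustration, and real few-shot models place priors over far richer parameter spaces.

```python
def gaussian_posterior(prior_mean, prior_var, noise_var, observations):
    """Posterior mean and variance of an unknown mean under a Gaussian prior."""
    n = len(observations)
    precision = 1.0 / prior_var + n / noise_var       # precisions add
    mean = (prior_mean / prior_var + sum(observations) / noise_var) / precision
    return mean, 1.0 / precision


# Prior belief: mean 0 with variance 1. Two noisy observations of 2.0.
post_mean, post_var = gaussian_posterior(0.0, 1.0, 1.0, [2.0, 2.0])
# post_mean = 4/3: pulled toward the data but still shrunk toward the prior.
# post_var  = 1/3: uncertainty has dropped from the prior variance of 1.
```

With only two observations the prior still carries real weight, which is exactly the regime few-shot learning operates in; as more data arrives, the posterior mean approaches the sample mean and the variance shrinks.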

Integration with Transfer Learning and Domain Adaptation

The synergy between few-shot learning and transfer learning techniques creates powerful combinations that leverage pre-trained knowledge while enabling rapid adaptation to new domains and tasks. Pre-trained foundation models, such as large language models and vision transformers, provide rich feature representations that serve as excellent starting points for few-shot learning applications. These models encapsulate extensive knowledge about language, visual patterns, or other domain-specific information that can be rapidly specialized for new tasks using minimal examples.

Domain adaptation techniques complement few-shot learning by addressing distributional differences between training and target domains, ensuring robust performance when applying few-shot models to new environments or populations. Advanced domain adaptation methods can identify and compensate for systematic differences between domains while preserving the few-shot learning capabilities of the underlying models. This integration enables practical deployment of few-shot systems across diverse real-world scenarios where perfect domain matching is rarely achievable.

The architectural innovations in meta-learning demonstrate how sophisticated neural network designs enable efficient learning from minimal examples through specialized attention mechanisms, memory systems, and adaptation strategies. These architectures represent the culmination of theoretical advances and practical engineering innovations that make few-shot learning viable for real-world applications across diverse domains and problem types.

Practical Implementation Considerations

Successfully implementing few-shot learning systems requires careful consideration of numerous practical factors that can significantly impact performance and deployment success. Data selection strategies play a crucial role in maximizing the effectiveness of limited training examples, with techniques such as active learning and uncertainty sampling helping identify the most informative examples for training. The quality and diversity of support examples often matter more than quantity, making careful curation and preprocessing essential for optimal performance.
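Uncertainty sampling can be sketched as ranking unlabeled examples by the entropy of the model's predicted class probabilities and sending the most uncertain ones for labeling. The function name `select_most_uncertain` and the probability values below are assumptions for illustration; in practice the probabilities would come from the current model.

```python
import numpy as np


def select_most_uncertain(probs, budget):
    """Indices of the `budget` examples with the highest predictive entropy."""
    probs = np.asarray(probs, dtype=float)
    # Small constant avoids log(0) for fully confident predictions.
    entropy = -(probs * np.log(probs + 1e-12)).sum(axis=1)
    return np.argsort(-entropy)[:budget]


# Predicted class probabilities for three unlabeled examples.
probs = [[0.98, 0.02],   # confident
         [0.50, 0.50],   # maximally uncertain
         [0.70, 0.30]]   # somewhat uncertain
chosen = select_most_uncertain(probs, budget=2)  # -> array([1, 2])
```

Entropy alone tends to pick near-duplicate borderline cases, so it is often combined with a diversity criterion when the labeling budget is very small.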

Computational efficiency represents another critical implementation consideration, as few-shot learning systems must balance model complexity with inference speed requirements. While meta-learning often requires extensive computational resources during the training phase, the resulting models should be capable of rapid adaptation and inference to realize the practical benefits of few-shot learning. Model compression techniques and efficient architectural designs help address these requirements while maintaining learning capabilities.

Future Research Directions and Open Questions

The field of few-shot learning continues to evolve rapidly, with numerous open research questions and emerging directions that promise to further advance the capabilities and applications of these systems. Continual few-shot learning represents a particularly important research frontier, focusing on developing systems that can continuously acquire new tasks and knowledge while retaining previously learned capabilities without catastrophic forgetting. This capability would enable the development of truly adaptive AI systems that can evolve and improve throughout their operational lifetime.

The integration of few-shot learning with emerging AI paradigms such as neural-symbolic reasoning and causal inference presents exciting opportunities for developing more robust and interpretable learning systems. These approaches could address current limitations in few-shot learning while opening new application domains that require sophisticated reasoning capabilities combined with efficient learning from limited data. The continued advancement of few-shot learning promises to democratize AI capabilities by reducing data requirements and enabling practical deployment across diverse domains and applications.

Disclaimer

This article is for informational purposes only and does not constitute professional advice. The views expressed are based on current understanding of few-shot learning technologies and their applications in artificial intelligence. Readers should conduct their own research and consider their specific requirements when implementing few-shot learning systems. The effectiveness and performance of few-shot learning approaches may vary significantly depending on specific use cases, domain characteristics, and implementation details.