The convergence of Flutter’s cross-platform capabilities with TensorFlow Lite’s on-device machine learning has created new opportunities for developers to build AI-powered mobile applications that run on both iOS and Android from a single codebase. Because models execute directly on the user’s device, these applications can deliver real-time inference without a constant internet connection and without sending user data to cloud servers for processing, which helps keep that data private.

The integration of TensorFlow Lite with Flutter represents a significant advancement in democratizing AI development, making it accessible to mobile developers who want to incorporate machine learning capabilities without requiring deep expertise in AI model development or deployment strategies.

Understanding the Flutter TensorFlow Lite Ecosystem

Flutter TensorFlow Lite integration gives developers a framework for implementing on-device machine learning in cross-platform mobile applications. It combines Flutter’s declarative UI framework with TensorFlow Lite’s optimized inference engine, so AI-powered features can be prototyped once and deployed to iOS and Android from the same codebase.

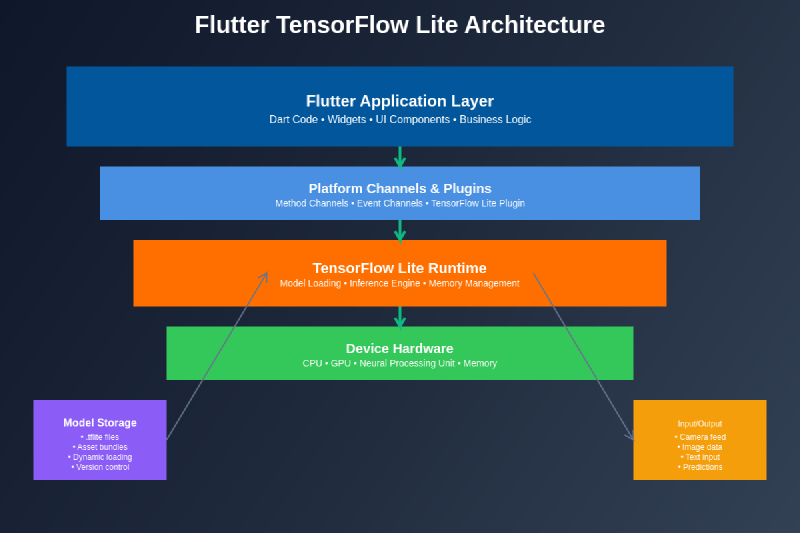

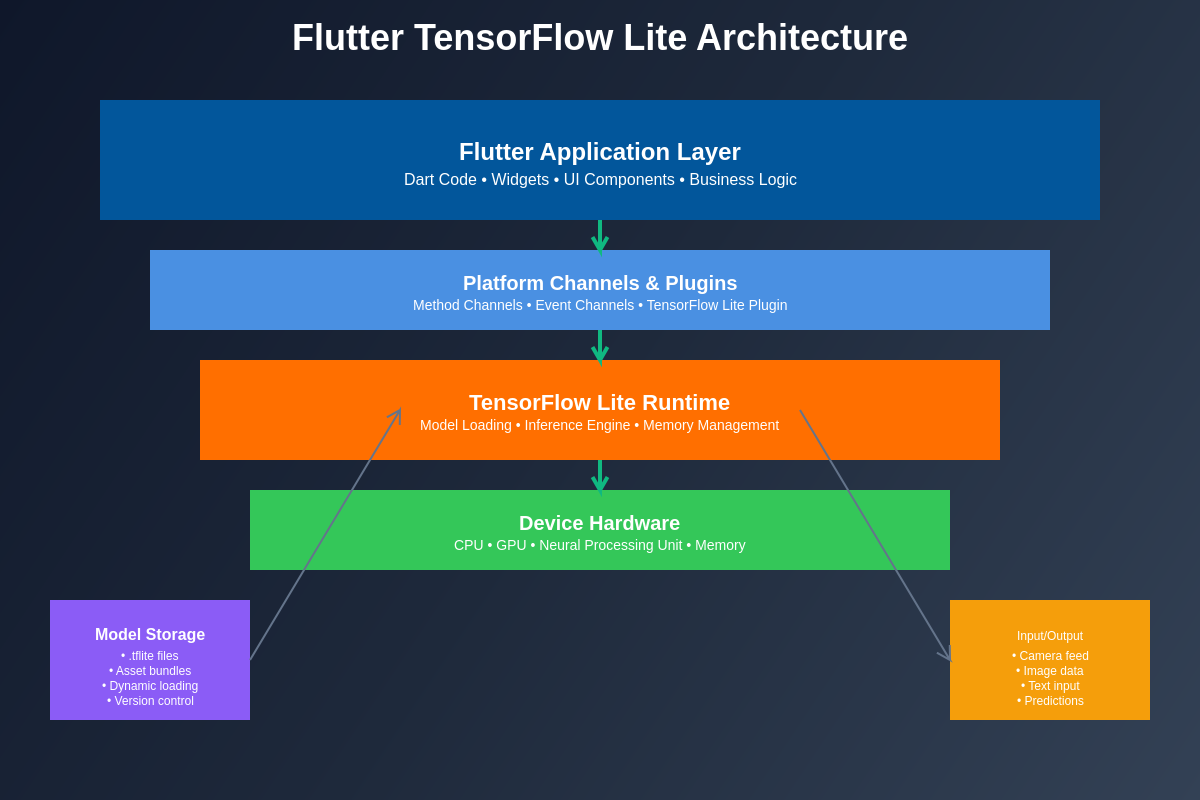

The architecture of Flutter TensorFlow Lite applications follows a structured approach: pre-trained machine learning models are converted to the TensorFlow Lite format, integrated into Flutter projects through platform channels or dedicated plugins, and executed on device hardware to provide real-time predictions and classifications. Because the same model and application code ship to every platform, behavior stays consistent across devices, although inference speed still depends on each device’s hardware. The approach also leaves room to customize AI functionality for specific application requirements and user interaction patterns.

The ecosystem supports a wide range of machine learning tasks, including image classification, object detection, natural language processing, and custom models tailored to specific business logic and user experience requirements. This versatility makes Flutter TensorFlow Lite a strong choice for AI applications ranging from simple classification tools to complex computer vision systems.

Setting Up Flutter TensorFlow Lite Development Environment

The development environment setup for Flutter TensorFlow Lite projects requires careful configuration of dependencies, platform-specific settings, and model integration pathways that ensure optimal performance across target devices. The process begins with standard Flutter project initialization followed by the addition of TensorFlow Lite plugins and dependencies that provide the necessary interfaces for model loading and inference execution.

The setup process involves configuring platform-specific build settings, managing model assets within the Flutter project structure, and implementing proper error handling mechanisms that ensure robust application performance even when dealing with complex machine learning operations.

The development environment must also account for platform-specific optimizations such as GPU acceleration on supported devices, memory management considerations for large models, and battery usage optimization strategies that maintain application responsiveness while executing computationally intensive machine learning tasks. These considerations are crucial for creating production-ready applications that deliver consistent user experiences across diverse hardware configurations.

Model Integration and Asset Management

Integrating TensorFlow Lite models into Flutter applications requires a systematic approach to asset management, model loading, and runtime optimization. The process typically involves converting existing TensorFlow models to the TensorFlow Lite format, optimizing them for mobile deployment through techniques such as quantization and pruning, and packaging them as application assets that are loaded at runtime.

Model asset management encompasses several critical aspects including model versioning, dynamic loading capabilities, and fallback mechanisms that ensure application stability even when models fail to load or execute properly. Developers must implement robust error handling and graceful degradation strategies that maintain application functionality while providing meaningful feedback to users when AI features are unavailable or experiencing performance issues.
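
A minimal sketch of the fallback idea, written in Python for brevity (in a Flutter app the same logic would live in Dart): try each model source in order, for example a downloaded model and then the copy bundled as an asset, and report the model as unavailable if all of them fail so the UI can degrade gracefully. The loader functions and source names are hypothetical placeholders, not part of any TensorFlow Lite or Flutter API.

```python
from typing import Callable, List, Optional, Tuple

# A loader returns a model handle or raises on failure (hypothetical type).
Loader = Callable[[], object]


def load_with_fallback(
    loaders: List[Tuple[str, Loader]],
) -> Tuple[Optional[object], Optional[str], List[str]]:
    """Try each (name, loader) in order and return (model, source, errors).

    If every loader fails, model and source are None so the caller can hide
    the AI feature and show a message instead of crashing.
    """
    errors: List[str] = []
    for name, loader in loaders:
        try:
            return loader(), name, errors
        except Exception as exc:  # any load failure moves on to the next source
            errors.append(f"{name}: {exc}")
    return None, None, errors


# Usage with placeholder loaders: the downloaded copy is corrupt, the bundled one works.
def load_downloaded() -> object:
    raise IOError("checksum mismatch")


def load_bundled() -> object:
    return "bundled-model-v1"


model, source, errors = load_with_fallback(
    [("downloaded", load_downloaded), ("bundled", load_bundled)]
)
```

Returning the collected errors alongside the model lets the app log why the preferred source failed while still running on the fallback.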

The integration process also requires careful consideration of model update mechanisms that allow for seamless deployment of improved models without requiring full application updates. This capability is particularly important for AI applications that rely on continuously improving models or need to adapt to changing user behaviors and data patterns over time.
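
One way to decide whether a newer model can be installed without an app update is to compare version numbers from a remote manifest and accept only models whose input/output contract matches what the installed app build understands. The manifest format, its `schema` field, and the function names below are assumptions for illustration; the sketch is in Python but maps directly to Dart.

```python
from typing import Dict, List, Optional, Tuple


def parse_version(v: str) -> Tuple[int, ...]:
    """'1.10.2' -> (1, 10, 2) so versions compare numerically, not as strings."""
    return tuple(int(part) for part in v.split("."))


def pick_update(
    installed: str,
    app_schema: int,
    manifest: List[Dict[str, object]],
) -> Optional[Dict[str, object]]:
    """Return the newest manifest entry that is newer than `installed` and whose
    `schema` matches the input/output contract this app build understands.
    Returns None when no compatible update exists (keep the current model)."""
    current = parse_version(installed)
    compatible = [
        m for m in manifest
        if m["schema"] == app_schema and parse_version(str(m["version"])) > current
    ]
    if not compatible:
        return None
    return max(compatible, key=lambda m: parse_version(str(m["version"])))


# Hypothetical manifest fetched from a model server.
manifest = [
    {"version": "1.2.0", "schema": 1},
    {"version": "1.10.0", "schema": 1},
    {"version": "2.0.0", "schema": 2},  # needs a newer app build
]
update = pick_update("1.2.0", app_schema=1, manifest=manifest)
```

Parsing versions into integer tuples matters: compared as strings, `"1.10.0"` would sort before `"1.2.0"`.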

The architectural relationship between Flutter components and the TensorFlow Lite inference engine shows how the application layer communicates with machine learning models. Keeping inference behind its own layer maintains a clean separation of concerns between UI logic and AI processing.

Implementing Real-Time Image Classification

Real-time image classification represents one of the most common and powerful applications of Flutter TensorFlow Lite integration, enabling developers to create applications that can instantly recognize and categorize visual content captured through device cameras or selected from photo libraries. The implementation process involves configuring camera plugins, preprocessing image data for model compatibility, executing inference operations, and presenting classification results through intuitive user interfaces.
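
Preprocessing usually means resizing the frame to the model's input size and scaling pixel values to the range the model was trained on. The Python sketch below assumes a float model that takes a 224x224 RGB input normalized to [-1, 1] via `(v - 127.5) / 127.5`, a convention often used for MobileNet-style classifiers; check your own model's metadata, since quantized models often take raw 0..255 values instead. It uses nearest-neighbour resizing to stay dependency-free, whereas production code would typically use bilinear resizing in Dart or native code.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each 0..255
Image = List[List[Pixel]]     # rows of pixels, shape H x W


def resize_nearest(img: Image, out_h: int, out_w: int) -> Image:
    """Nearest-neighbour resize: each output pixel copies the closest source pixel."""
    in_h, in_w = len(img), len(img[0])
    return [
        [img[y * in_h // out_h][x * in_w // out_w] for x in range(out_w)]
        for y in range(out_h)
    ]


def normalize(img: Image, mean: float = 127.5, std: float = 127.5) -> List[List[List[float]]]:
    """Map 0..255 channel values to [-1, 1] via (v - mean) / std (assumed convention)."""
    return [[[(c - mean) / std for c in px] for px in row] for row in img]


def preprocess(img: Image, size: int = 224) -> List[List[List[float]]]:
    """Resize to size x size, then normalize, giving an H x W x 3 float tensor."""
    return normalize(resize_nearest(img, size, size))


# Tiny 2x2 image upscaled to 4x4 for illustration.
img = [[(0, 0, 0), (255, 255, 255)], [(255, 0, 0), (0, 255, 0)]]
tensor = preprocess(img, size=4)
```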

The development of real-time image classification features requires careful attention to performance optimization strategies including image preprocessing techniques, batch processing capabilities, and result caching mechanisms that minimize latency while maintaining classification accuracy. These optimizations are essential for creating responsive applications that provide immediate feedback to users without introducing noticeable delays or performance degradation.
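
A common latency optimization for live camera input is to drop frames while an inference is still running instead of queueing them. The `FrameGate` class below is a hypothetical helper illustrating that policy in Python; timestamps are passed in explicitly so the behaviour is easy to test, whereas in an app they would come from the camera stream.

```python
from typing import Optional


class FrameGate:
    """Admit a camera frame for inference only when the previous inference has
    finished and at least `min_interval_s` seconds have passed since the last
    admitted frame. The caller drops any frame for which try_start() is False."""

    def __init__(self, min_interval_s: float) -> None:
        self.min_interval_s = min_interval_s
        self.busy = False
        self.last_start: Optional[float] = None

    def try_start(self, now_s: float) -> bool:
        """Return True if the caller should run inference on this frame."""
        if self.busy:
            return False  # an inference is still running: drop the frame
        if self.last_start is not None and now_s - self.last_start < self.min_interval_s:
            return False  # too soon since the last admitted frame
        self.busy = True
        self.last_start = now_s
        return True

    def finish(self) -> None:
        """Call when the inference started by try_start() has completed."""
        self.busy = False


gate = FrameGate(min_interval_s=0.1)
decisions = [gate.try_start(0.00), gate.try_start(0.03)]  # admitted, then dropped (busy)
gate.finish()
decisions += [gate.try_start(0.06), gate.try_start(0.12)]  # too soon, then admitted
```

Dropping rather than queueing keeps the displayed result tied to a recent frame; the `min_interval_s` setting additionally caps how often inference runs, which also helps battery life.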

Advanced image classification implementations can incorporate multiple models for different classification tasks, confidence threshold adjustments that improve result reliability, and custom visualization techniques that help users understand classification results and model confidence levels. These enhanced features create more engaging and educational user experiences while demonstrating the practical applications of on-device machine learning capabilities.
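
Confidence thresholds and top-k selection can be sketched as follows in Python, assuming the model outputs one raw score (logit) per label; if your model already ends in a softmax layer, skip the `softmax` call. The labels, `k`, and `threshold` values are illustrative, not recommendations.

```python
import math
from typing import List, Tuple


def softmax(logits: List[float]) -> List[float]:
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_above(
    probs: List[float], labels: List[str], k: int = 3, threshold: float = 0.5
) -> List[Tuple[str, float]]:
    """Return up to k (label, probability) pairs, most likely first, dropping
    any whose probability is below `threshold`."""
    ranked = sorted(zip(labels, probs), key=lambda lp: lp[1], reverse=True)
    return [(label, p) for label, p in ranked[:k] if p >= threshold]


labels = ["cat", "dog", "car"]
probs = softmax([2.0, 1.0, 0.1])
results = top_k_above(probs, labels, k=2, threshold=0.2)
```

Raising the threshold trades recall for reliability: with `threshold=0.5`, only the single confident label would be shown.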

Building Object Detection Applications

Object detection functionality within Flutter TensorFlow Lite applications enables the creation of sophisticated computer vision applications that can identify, locate, and track multiple objects within images or video streams in real-time. This capability opens up numerous application possibilities including augmented reality experiences, inventory management systems, security monitoring applications, and interactive gaming experiences that respond to real-world objects and environments.

The implementation of object detection features requires integration of specialized TensorFlow Lite models such as MobileNet SSD or YOLO variants that have been optimized for mobile deployment. These models provide bounding box coordinates, object classifications, and confidence scores that can be used to create rich visual overlays and interactive user experiences that highlight detected objects and provide contextual information about identified items.
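
Detection models typically emit many overlapping candidate boxes, and non-maximum suppression (NMS) keeps only the highest-scoring box in each cluster. Some TensorFlow Lite SSD exports already include this post-processing step, while YOLO exports often leave it to the app. The Python sketch below shows greedy NMS under the assumption that boxes are `(x_min, y_min, x_max, y_max)` tuples; check your model's output layout, and run NMS per class if the model predicts several classes.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: List[Box], scores: List[float],
        iou_thresh: float = 0.5, score_thresh: float = 0.3) -> List[int]:
    """Greedy NMS: drop low-confidence boxes, then keep each remaining box only
    if it does not overlap an already-kept, higher-scoring box too much.
    Returns indices of kept boxes, highest score first."""
    order = sorted(
        (i for i, s in enumerate(scores) if s >= score_thresh),
        key=lambda i: scores[i],
        reverse=True,
    )
    keep: List[int] = []
    for i in order:
        if all(iou(boxes[i], boxes[j]) < iou_thresh for j in keep):
            keep.append(i)
    return keep


boxes = [(0.0, 0.0, 10.0, 10.0), (1.0, 1.0, 11.0, 11.0),
         (20.0, 20.0, 30.0, 30.0), (0.0, 0.0, 5.0, 5.0)]
scores = [0.9, 0.8, 0.7, 0.1]
keep = nms(boxes, scores)
```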

Advanced object detection implementations can include multi-object tracking capabilities, custom object training integration, and real-time performance monitoring that ensures consistent detection accuracy across different lighting conditions and device orientations. These sophisticated features enable the creation of production-ready applications that can reliably perform complex computer vision tasks in diverse real-world environments.
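
Multi-object tracking can start much simpler than dedicated tracking algorithms: match each new detection to the existing track it overlaps most. The greedy IoU tracker below is a hypothetical Python illustration of that idea, not a TensorFlow Lite feature; it ignores appearance and motion, so it can swap IDs when objects cross paths.

```python
from itertools import count
from typing import Dict, List, Optional, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


class IouTracker:
    """Each detection inherits the ID of the unmatched track it overlaps most
    (if IoU >= iou_thresh), otherwise it gets a new ID. Tracks unseen for more
    than `max_missed` consecutive frames are dropped."""

    def __init__(self, iou_thresh: float = 0.3, max_missed: int = 2) -> None:
        self.iou_thresh = iou_thresh
        self.max_missed = max_missed
        self.tracks: Dict[int, Box] = {}
        self.missed: Dict[int, int] = {}
        self._ids = count(1)

    def update(self, detections: List[Box]) -> Dict[int, Box]:
        """Match this frame's detections; return {track_id: box} for visible tracks."""
        unmatched = set(self.tracks)
        assigned: Dict[int, Box] = {}
        for det in detections:
            best_id: Optional[int] = None
            best_iou = self.iou_thresh
            for tid in unmatched:
                overlap = iou(self.tracks[tid], det)
                if overlap >= best_iou:
                    best_id, best_iou = tid, overlap
            if best_id is None:
                best_id = next(self._ids)  # no good match: start a new track
            else:
                unmatched.discard(best_id)
            assigned[best_id] = det
            self.missed[best_id] = 0
        for tid in unmatched:  # tracks with no detection this frame
            self.missed[tid] += 1
            if self.missed[tid] <= self.max_missed:
                assigned[tid] = self.tracks[tid]  # remember the last known box
            else:
                del self.missed[tid]
        self.tracks = assigned
        return {tid: box for tid, box in assigned.items() if self.missed[tid] == 0}


tracker = IouTracker()
f1 = tracker.update([(0.0, 0.0, 10.0, 10.0), (50.0, 50.0, 60.0, 60.0)])
f2 = tracker.update([(52.0, 52.0, 62.0, 62.0), (1.0, 1.0, 11.0, 11.0)])  # IDs kept
f3 = tracker.update([(100.0, 100.0, 110.0, 110.0)])  # new object, old ones missed
```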

Natural Language Processing Integration

Natural language processing capabilities within Flutter TensorFlow Lite applications enable developers to create intelligent text analysis features including sentiment analysis, language translation, text classification, and conversational AI interfaces that operate entirely on device without requiring internet connectivity. These capabilities are particularly valuable for applications that handle sensitive text data or need to provide immediate language processing responses in offline environments.

The integration of NLP models requires careful consideration of text preprocessing techniques, tokenization strategies, and result interpretation methods that ensure accurate language understanding and appropriate response generation. Developers must implement robust text handling mechanisms that account for different languages, character encodings, and text formatting variations that may affect model performance and accuracy.
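
As a concrete example of tokenization, the Python sketch below lower-cases text, splits it into word tokens, maps them to integer IDs through a vocabulary (using an unknown-word ID for anything missing), and pads or truncates to a fixed length. The reserved IDs 0 and 1 and the toy vocabulary are assumptions; a real model must use exactly the vocabulary and tokenizer it was trained with, which for BERT-style models is WordPiece rather than whole words.

```python
import re
from typing import Dict, List

PAD, UNK = 0, 1  # assumed reserved IDs; must match the model's training vocabulary


def tokenize(text: str) -> List[str]:
    """Lower-case and split into word tokens, discarding punctuation."""
    return re.findall(r"\w+", text.lower())


def encode(text: str, vocab: Dict[str, int], max_len: int) -> List[int]:
    """Map tokens to IDs (UNK for unknown words), then truncate or right-pad
    with PAD so the result always has exactly max_len IDs, matching a
    fixed-length model input."""
    ids = [vocab.get(tok, UNK) for tok in tokenize(text)][:max_len]
    return ids + [PAD] * (max_len - len(ids))


vocab = {"<pad>": 0, "<unk>": 1, "this": 2, "app": 3, "is": 4, "great": 5}
padded = encode("This app is GREAT!!", vocab, max_len=6)
truncated = encode("This app is truly great", vocab, max_len=4)
```

Because Python's `\w` matches Unicode letters, accented words such as `café` stay intact as single tokens.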

The development of sophisticated NLP features may also require custom vocabulary handling, context awareness mechanisms, and multi-language support that creates inclusive applications capable of serving diverse user populations and linguistic requirements.

Performance Optimization Strategies

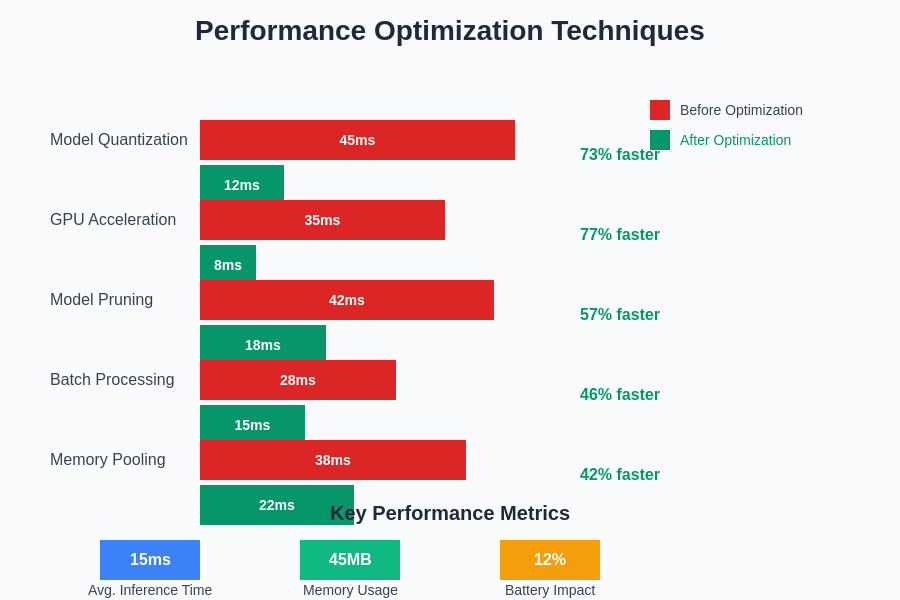

Performance optimization for Flutter TensorFlow Lite applications encompasses multiple dimensions including model optimization techniques, runtime performance tuning, memory management strategies, and battery usage minimization approaches that ensure smooth application operation across diverse device configurations and usage patterns. These optimizations are critical for creating production-ready applications that maintain responsive user interfaces while executing computationally intensive machine learning operations.

Model optimization strategies include quantization, which reduces model size and improves inference speed (int8 quantization, for example, stores each weight in one byte instead of the four bytes of float32, roughly a 4x size reduction); pruning, which eliminates unnecessary model parameters; and knowledge distillation, which trains a smaller student model to retain much of the accuracy of a larger teacher model. These techniques can significantly improve application performance while reducing storage requirements and memory usage during model execution.
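
To make quantization concrete, the sketch below applies the affine int8 mapping `real ≈ scale * (q - zero_point)` to a small list of weights in Python. It is a simplified illustration: the TensorFlow Lite converter computes these parameters itself, and its int8 specification uses symmetric quantization (zero point 0) for weights, typically per channel, with asymmetric quantization reserved for activations.

```python
from typing import List, Tuple


def int8_params(rmin: float, rmax: float) -> Tuple[float, int]:
    """Affine int8 parameters so that real ≈ scale * (q - zero_point).

    The range is widened to include 0.0 so that zero is exactly representable,
    which matters for zero padding.
    """
    rmin, rmax = min(rmin, 0.0), max(rmax, 0.0)
    scale = (rmax - rmin) / 255.0 or 1.0  # guard against an all-zero tensor
    zero_point = round(-128 - rmin / scale)
    return scale, max(-128, min(127, zero_point))


def quantize(values: List[float], scale: float, zero_point: int) -> List[int]:
    """Map floats to int8, clamping anything outside the representable range."""
    return [max(-128, min(127, round(v / scale) + zero_point)) for v in values]


def dequantize(q: List[int], scale: float, zero_point: int) -> List[float]:
    """Recover approximate floats from int8 values."""
    return [scale * (x - zero_point) for x in q]


weights = [-0.8, -0.1, 0.0, 0.25, 1.2]
scale, zp = int8_params(min(weights), max(weights))
q = quantize(weights, scale, zp)
restored = dequantize(q, scale, zp)
```

Each restored value differs from the original by at most about one quantization step (`scale`), which is the accuracy cost traded for the smaller model.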

Runtime optimization involves implementing efficient data preprocessing pipelines, utilizing hardware acceleration capabilities when available, and implementing intelligent caching mechanisms that minimize redundant computations and data transfers. These optimizations ensure that AI features integrate seamlessly with standard application functionality without introducing performance bottlenecks or user experience degradation.
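
For inputs that genuinely repeat, such as a user re-selecting the same photo, a small least-recently-used cache can skip the interpreter call entirely. The `InferenceCache` class below is a hypothetical Python illustration keyed on a SHA-256 digest of the input bytes; it will not help with live camera frames, which are almost never byte-identical.

```python
import hashlib
from collections import OrderedDict
from typing import Callable, Generic, TypeVar

R = TypeVar("R")


class InferenceCache(Generic[R]):
    """LRU cache of inference results keyed by a digest of the raw input bytes."""

    def __init__(self, run_model: Callable[[bytes], R], capacity: int = 32) -> None:
        self.run_model = run_model
        self.capacity = capacity
        self._store: "OrderedDict[str, R]" = OrderedDict()
        self.misses = 0

    def __call__(self, data: bytes) -> R:
        key = hashlib.sha256(data).hexdigest()
        if key in self._store:
            self._store.move_to_end(key)  # mark as most recently used
            return self._store[key]
        self.misses += 1
        result = self.run_model(data)  # the expensive inference call
        self._store[key] = result
        if len(self._store) > self.capacity:
            self._store.popitem(last=False)  # evict the least recently used entry
        return result


# A stand-in "model" that returns the input length.
cache = InferenceCache(lambda b: len(b), capacity=2)
results = [cache(x) for x in (b"a", b"bb", b"a", b"ccc", b"bb")]
```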

A comparison of these optimization techniques illustrates the performance gains that systematic optimization of Flutter TensorFlow Lite implementations can deliver. Applied together, these optimizations help developers keep AI features responsive across devices with widely varying capabilities and performance characteristics.

Cross-Platform Deployment Considerations

Cross-platform deployment of Flutter TensorFlow Lite applications requires careful attention to platform-specific requirements, performance characteristics, and user experience considerations that ensure consistent functionality across iOS and Android devices. This process involves configuring platform-specific build settings, managing model compatibility across different hardware architectures, and implementing adaptive user interfaces that accommodate varying device capabilities and screen configurations.

The deployment process must account for platform-specific acceleration options, such as TensorFlow Lite’s Core ML delegate on iOS and the GPU delegate (available on both Android and iOS), as well as memory management strategies that optimize resource usage based on operating system characteristics and device specifications.
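
Choosing an accelerator at runtime usually means trying the preferred delegate for the platform and falling back when it fails to initialize. In the Python sketch below, the backend names are plain labels and `try_create` is a hypothetical callback standing in for the real delegate construction in whichever Flutter plugin you use; the preference orders are assumptions.

```python
from typing import Callable, Dict, List, Tuple

# Assumed preference order per platform; names are labels for this sketch only.
PREFERENCES: Dict[str, List[str]] = {
    "ios": ["coreml", "gpu", "cpu"],
    "android": ["gpu", "cpu"],
}


def choose_backend(
    platform: str, try_create: Callable[[str], bool]
) -> Tuple[str, List[str]]:
    """Return (chosen_backend, backends_that_failed). `try_create` should return
    True if the named delegate initialised; CPU is always the last resort."""
    failed: List[str] = []
    for name in PREFERENCES.get(platform, ["cpu"]):
        if name == "cpu" or try_create(name):
            return name, failed
        failed.append(name)
    return "cpu", failed


# A device where Core ML setup fails but the GPU delegate works:
ios_choice = choose_backend("ios", lambda name: name == "gpu")
# An Android device with no usable GPU delegate:
android_choice = choose_backend("android", lambda name: False)
```

A delegate that initializes is not automatically faster, so it is worth benchmarking on target devices; some models run better on the CPU.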

Testing and validation across multiple platforms requires comprehensive quality assurance strategies that verify model accuracy, performance consistency, and user interface functionality across diverse device configurations, operating system versions, and hardware capabilities. This thorough testing approach ensures that deployed applications provide reliable AI functionality regardless of the specific device or platform configuration used by end users.

Handling Model Updates and Versioning

Model versioning and update mechanisms represent critical components of production Flutter TensorFlow Lite applications that need to adapt to improving AI models, changing business requirements, or evolving user needs over time. Implementing robust model update systems enables applications to benefit from enhanced AI capabilities without requiring full application reinstallation or complex migration procedures that disrupt user experiences.

The development of model update systems requires careful consideration of backward compatibility requirements, graceful fallback mechanisms, and user communication strategies that inform users about improved AI capabilities while maintaining application stability during transition periods. These systems must also account for model validation procedures that ensure updated models maintain or improve accuracy while remaining compatible with existing application logic and user interface components.
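
A validation gate can encode both requirements before a downloaded model is activated: the candidate's output labels must match what the UI expects, and its accuracy on a held-out set must not fall below the deployed model's by more than a chosen margin. The function names, the toy threshold "models", and the `max_drop` parameter below are illustrative assumptions, shown in Python.

```python
from typing import Callable, List, Sequence, Tuple

Predict = Callable[[Sequence[float]], int]
Example = Tuple[Sequence[float], int]  # (input features, expected label index)


def accuracy(predict: Predict, data: List[Example]) -> float:
    if not data:
        raise ValueError("holdout set is empty")
    return sum(predict(x) == y for x, y in data) / len(data)


def should_promote(
    candidate: Predict,
    current: Predict,
    holdout: List[Example],
    candidate_labels: List[str],
    app_labels: List[str],
    max_drop: float = 0.0,
) -> bool:
    """Activate the candidate only if its labels match what the UI expects and
    its holdout accuracy is at most `max_drop` below the current model's."""
    if candidate_labels != app_labels:
        return False  # output indices would map to the wrong labels in the UI
    return accuracy(candidate, holdout) >= accuracy(current, holdout) - max_drop


# Toy one-feature "models" standing in for real interpreters.
def current(x: Sequence[float]) -> int:
    return int(x[0] > 0.7)  # 3 of 4 holdout examples correct


def candidate(x: Sequence[float]) -> int:
    return int(x[0] > 0.5)  # 4 of 4 holdout examples correct


holdout = [([0.2], 0), ([0.8], 1), ([0.6], 1), ([0.4], 0)]
labels = ["negative", "positive"]
```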

Advanced model update implementations can include A/B testing capabilities that enable gradual rollout of new models, performance monitoring systems that track model effectiveness in production environments, and automatic rollback mechanisms that restore previous model versions when performance degradation or compatibility issues are detected. These sophisticated update systems enable continuous improvement of AI functionality while minimizing risks associated with model deployment and version management.
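
Gradual rollout and automatic rollback can be sketched with deterministic bucketing: hashing a stable user ID assigns each user to a fixed point in [0, 1), and users below the rollout fraction receive the candidate model. The salt, the error-rate comparison, and the `tolerance` default below are hypothetical choices, and whether this logic runs in the app or on a configuration server is a design decision the Python sketch does not make.

```python
import hashlib


def bucket(user_id: str, salt: str = "model-v2-rollout") -> float:
    """Deterministically map a user to [0, 1): the same user always lands in the
    same bucket for a given salt, so their model doesn't flip between sessions."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode()).digest()
    return int.from_bytes(digest[:6], "big") / 2**48  # 48 bits: exact in a float


def model_for(user_id: str, rollout_fraction: float, rolled_back: bool) -> str:
    """Serve the candidate to roughly `rollout_fraction` of users unless rolled back."""
    if rolled_back or bucket(user_id) >= rollout_fraction:
        return "current"
    return "candidate"


def should_roll_back(candidate_error_rate: float, baseline_error_rate: float,
                     tolerance: float = 0.02) -> bool:
    """Trigger rollback when the candidate's observed error rate exceeds the
    baseline by more than `tolerance` (absolute)."""
    return candidate_error_rate > baseline_error_rate + tolerance


served = sum(model_for(f"user-{i}", 0.1, False) == "candidate" for i in range(10_000))
```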

Security and Privacy Considerations

Security and privacy considerations for Flutter TensorFlow Lite applications encompass model protection strategies, data handling best practices, and user privacy preservation techniques that ensure sensitive information remains secure while providing valuable AI functionality. On-device processing inherently provides privacy benefits by eliminating the need to transmit user data to external servers, but additional security measures may be necessary for applications handling particularly sensitive information or operating in regulated environments.

Model security involves protecting proprietary AI models from reverse engineering or unauthorized access through techniques such as model encryption, obfuscation, and secure loading mechanisms that make model extraction or tampering harder. Because the model ships inside the application package, no technique fully prevents a determined attacker from recovering it; these measures raise the cost of doing so. They are particularly important for applications that rely on custom-trained models or incorporate business-critical AI algorithms that provide competitive advantages or contain sensitive intellectual property.
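
Tamper detection for a model file can be sketched with an HMAC-SHA256 tag checked before the bytes reach the interpreter. This Python example uses only the standard library, and its key handling is deliberately simplified and hypothetical. Note the limits: an HMAC does not encrypt the model, and a key embedded in the app can itself be extracted, so asymmetric signatures (private key kept on the server, public key in the app) are the stronger choice when tampering really matters.

```python
import hashlib
import hmac


def sign_model(model_bytes: bytes, key: bytes) -> str:
    """Build-time step (hypothetical pipeline): HMAC-SHA256 tag for the model file."""
    return hmac.new(key, model_bytes, hashlib.sha256).hexdigest()


def verify_model(model_bytes: bytes, tag: str, key: bytes) -> bool:
    """Load-time check: recompute the tag and compare in constant time.
    Refuse to pass the bytes to the interpreter if this returns False."""
    expected = hmac.new(key, model_bytes, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, tag)


key = b"demo-key-not-for-production"
model = b"fake model bytes"
tag = sign_model(model, key)
```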

Data privacy considerations include implementing proper data sanitization procedures, secure data storage mechanisms, and transparent user consent processes that clearly communicate how AI features process user data and what information is collected or analyzed during application usage. These privacy practices help build user trust while ensuring compliance with relevant data protection regulations and industry standards for mobile application development.

Testing and Quality Assurance

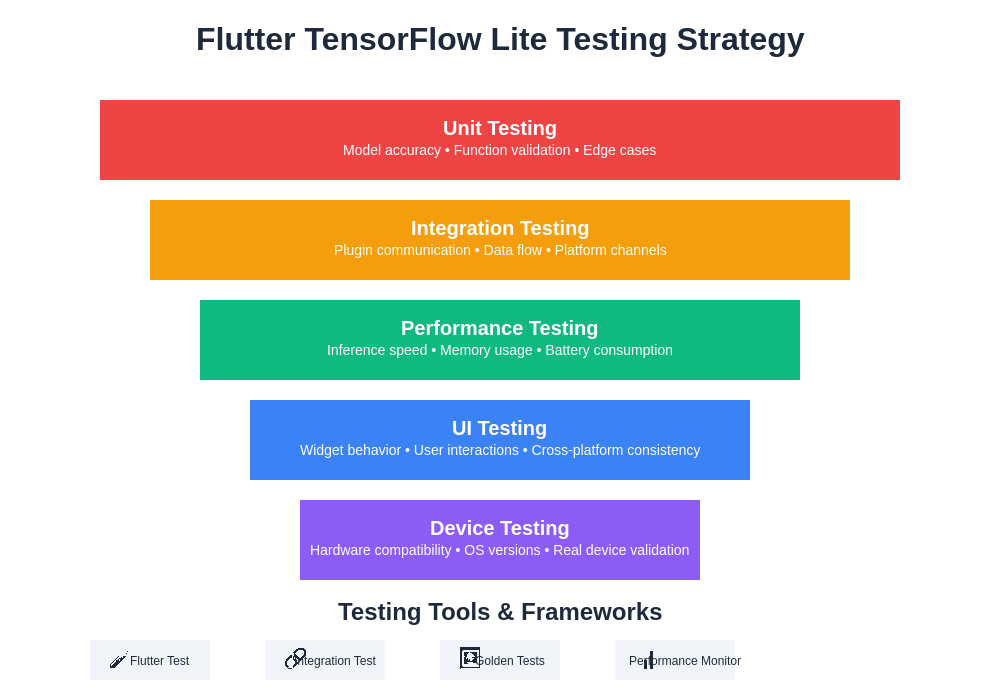

Testing and quality assurance for Flutter TensorFlow Lite applications requires comprehensive strategies that validate both traditional application functionality and AI-specific features including model accuracy, performance consistency, and edge case handling across diverse usage scenarios and device configurations. This testing approach must account for the unique challenges associated with machine learning applications such as model behavior variability, performance dependency on device capabilities, and accuracy variations based on input data characteristics.

Automated testing frameworks for AI applications should include model accuracy validation suites, performance benchmarking tools, and regression testing mechanisms that ensure model updates or application changes do not negatively impact AI functionality or overall application performance. These automated testing systems enable continuous integration and deployment workflows that maintain high quality standards while supporting rapid development iterations and feature enhancements.
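
A performance benchmark can be as simple as timing repeated inference calls after a warm-up and tracking percentiles, so a CI job can flag a regression when, for example, p95 latency exceeds a budget. The `run` callable below stands in for a real inference call, and the warm-up and iteration counts are arbitrary assumptions; the numbers are only meaningful when measured on representative target devices, and TensorFlow Lite also provides its own `benchmark_model` tool for this purpose.

```python
import math
import statistics
import time
from typing import Callable, Dict, List


def percentile(sorted_vals: List[float], p: float) -> float:
    """Nearest-rank percentile (p in 0..100) of an ascending, non-empty list."""
    rank = max(1, math.ceil(p / 100 * len(sorted_vals)))
    return sorted_vals[rank - 1]


def benchmark(run: Callable[[], object], warmup: int = 5, iters: int = 50) -> Dict[str, float]:
    """Time `run` after some warm-up calls (first inferences are often slower
    while memory is allocated and delegates initialise); report milliseconds."""
    for _ in range(warmup):
        run()
    samples: List[float] = []
    for _ in range(iters):
        start = time.perf_counter()
        run()
        samples.append((time.perf_counter() - start) * 1000.0)
    samples.sort()
    return {
        "p50_ms": percentile(samples, 50),
        "p95_ms": percentile(samples, 95),
        "mean_ms": statistics.mean(samples),
    }


# Placeholder workload standing in for a real inference call on a fixed input.
stats = benchmark(lambda: sum(range(10_000)))
```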

Manual testing procedures should focus on user experience validation, edge case identification, and real-world usage scenario verification that automated tests may not adequately cover. This includes testing AI features under various lighting conditions for computer vision applications, validating natural language processing accuracy with diverse text inputs, and ensuring graceful handling of unexpected or invalid data inputs that may occur in production environments.

The comprehensive testing strategy framework illustrates the multi-layered approach necessary for ensuring robust Flutter TensorFlow Lite application quality across all components from model validation to user experience verification. This systematic approach helps identify and resolve issues before they impact end users.

Future Trends and Advanced Features

The future of Flutter TensorFlow Lite development is characterized by rapidly evolving capabilities including federated learning integration, edge AI optimization techniques, and advanced model architectures that enable increasingly sophisticated on-device AI applications. These emerging trends represent significant opportunities for developers to create next-generation applications that leverage cutting-edge machine learning techniques while maintaining the cross-platform advantages and performance characteristics that make Flutter TensorFlow Lite an attractive development choice.

Federated learning capabilities enable applications to contribute to model improvement while preserving user privacy through decentralized learning approaches that update models based on aggregated insights from multiple users without requiring individual data sharing. This approach enables continuous model enhancement while addressing privacy concerns and regulatory requirements that may limit traditional centralized learning approaches.
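
The aggregation step at the heart of federated averaging (FedAvg) is short enough to show directly: the server combines weight vectors from participating devices, weighting each by the number of examples it trained on. The Python sketch below is a simplification that omits secure aggregation, differential privacy, and client selection, which production federated learning systems typically add.

```python
from typing import List, Sequence, Tuple

Update = Tuple[Sequence[float], int]  # (client weight vector, local example count)


def federated_average(updates: List[Update]) -> List[float]:
    """FedAvg aggregation: average client weight vectors, weighting each client
    by how many examples it trained on. Only weights reach the server, never
    the raw training data."""
    total = sum(n for _, n in updates)
    if total == 0:
        raise ValueError("no training examples reported")
    dim = len(updates[0][0])
    return [sum(w[i] * n for w, n in updates) / total for i in range(dim)]


# Two hypothetical devices: one trained on 30 examples, the other on 10.
global_weights = federated_average([([1.0, 0.0], 30), ([0.0, 1.0], 10)])
```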

Advanced optimization techniques including neural architecture search, automatic model compression, and adaptive inference strategies promise to further improve the performance and efficiency of Flutter TensorFlow Lite applications while reducing development complexity and enabling more sophisticated AI functionality on resource-constrained mobile devices. These innovations will continue to expand the possibilities for on-device AI applications and create new opportunities for intelligent mobile experiences.

The integration of emerging hardware capabilities such as dedicated neural processing units, advanced GPU architectures, and specialized AI accelerators will enable Flutter TensorFlow Lite applications to achieve even higher performance levels while maintaining energy efficiency and extending battery life. These hardware advancements, combined with software optimizations, will enable the deployment of larger and more sophisticated models that were previously impractical for mobile deployment.

Conclusion and Best Practices

Flutter TensorFlow Lite represents a powerful combination of technologies that enables developers to create sophisticated cross-platform AI applications with on-device machine learning capabilities. The successful implementation of these technologies requires careful attention to architecture design, performance optimization, security considerations, and user experience principles that ensure applications provide valuable AI functionality while maintaining the reliability and responsiveness that users expect from mobile applications.

Best practices for Flutter TensorFlow Lite development include systematic model optimization, comprehensive testing strategies, robust error handling mechanisms, and continuous performance monitoring that ensures applications maintain high quality standards throughout their lifecycle. These practices enable developers to create production-ready applications that leverage the full potential of on-device AI while minimizing risks associated with complex machine learning integration.

The continued evolution of Flutter TensorFlow Lite capabilities promises to unlock new possibilities for intelligent mobile applications while maintaining the development efficiency and cross-platform advantages that make this technology stack attractive for modern mobile development projects. Developers who invest in mastering these technologies will be well-positioned to create the next generation of AI-powered mobile applications that provide exceptional user experiences and valuable functionality across diverse use cases and application domains.

Disclaimer

This article is for informational purposes only and does not constitute professional advice. The views expressed are based on current understanding of Flutter TensorFlow Lite technologies and their applications in mobile AI development. Readers should conduct their own research and consider their specific requirements when implementing Flutter TensorFlow Lite solutions. The effectiveness of AI integration may vary depending on specific use cases, model complexity, device capabilities, and implementation approaches.