The architectural landscape of artificial intelligence applications has evolved dramatically with the rise of microservices, creating new challenges and opportunities for developers choosing between programming languages. The decision between Go and Python for building scalable AI microservices represents one of the most critical technical choices facing modern development teams, as each language brings distinct advantages that can significantly impact system performance, maintainability, and operational complexity.

The choice between Go and Python extends far beyond simple language preferences, encompassing fundamental considerations about system design, team expertise, deployment strategies, and long-term scalability requirements that will define the success of AI-powered platforms.

The Microservices Revolution in AI Development

The transition from monolithic AI applications to distributed microservices architectures has fundamentally transformed how artificial intelligence systems are designed, deployed, and scaled. This architectural paradigm shift addresses the unique challenges of AI workloads, including the need for independent scaling of different AI models, isolation of resource-intensive computations, and the ability to update individual components without disrupting entire systems.

Microservices architecture enables AI teams to develop specialized services for distinct functions such as data preprocessing, model inference, result post-processing, and API management. This separation of concerns allows different teams to work independently on specific components while maintaining clear interfaces and contracts between services. The modularity inherent in microservices also facilitates the integration of different AI models and algorithms, enabling organizations to build sophisticated AI platforms that combine multiple machine learning approaches.

The distributed nature of microservices presents unique considerations for AI applications, particularly around data flow, latency requirements, and resource allocation. Unlike traditional web applications, AI microservices often handle large datasets, perform computationally intensive operations, and require careful orchestration to maintain acceptable response times while managing resource consumption effectively.

Go: The Performance Powerhouse

Go has emerged as a compelling choice for AI microservices due to its strong performance characteristics, built-in concurrency support, and operational simplicity. Because Go compiles ahead of time to native machine code, it generally executes faster than interpreted languages such as CPython, which suits high-throughput AI services that require consistent low-latency responses. Go’s concurrent garbage collector is designed to keep pause times short, which helps keep latency predictable even under heavy load.

The concurrency model in Go, built around goroutines and channels, provides elegant solutions for handling the parallel processing requirements common in AI workloads. This design enables efficient handling of multiple concurrent inference requests, parallel data processing pipelines, and asynchronous communication between microservices. The lightweight nature of goroutines allows Go services to handle thousands of concurrent connections with minimal resource overhead, making it ideal for high-scale AI platforms.
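
As a minimal sketch of that pattern, the program below fans inference requests out to a fixed pool of goroutines over a channel and gathers the results on a second channel. The `Request` and `Result` types and the `infer` function are hypothetical placeholders; a real service would call a model or a downstream inference endpoint instead of summing numbers.

```go
package main

import (
	"fmt"
	"sync"
)

// Request and Result are hypothetical stand-ins for an inference request
// and the model's response.
type Request struct {
	ID    int
	Input []float64
}

type Result struct {
	ID    int
	Score float64
}

// infer is a placeholder for a real model call; here it just sums the input.
func infer(r Request) Result {
	sum := 0.0
	for _, v := range r.Input {
		sum += v
	}
	return Result{ID: r.ID, Score: sum}
}

// runPool fans requests out to a fixed number of worker goroutines
// (workers must be at least 1) and collects results from a channel.
// Results arrive in completion order, so they are keyed by request ID.
func runPool(reqs []Request, workers int) map[int]Result {
	jobs := make(chan Request)
	results := make(chan Result)

	var wg sync.WaitGroup
	for w := 0; w < workers; w++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			for r := range jobs {
				results <- infer(r)
			}
		}()
	}

	// Feed the jobs, then close the channel so workers leave their loops.
	go func() {
		for _, r := range reqs {
			jobs <- r
		}
		close(jobs)
	}()

	// Close results once every worker has finished sending.
	go func() {
		wg.Wait()
		close(results)
	}()

	out := make(map[int]Result, len(reqs))
	for res := range results {
		out[res.ID] = res
	}
	return out
}

func main() {
	reqs := []Request{
		{ID: 1, Input: []float64{0.5, 0.25}},
		{ID: 2, Input: []float64{1, 2, 3}},
	}
	for id, res := range runPool(reqs, 4) {
		fmt.Println(id, res.Score)
	}
}
```

Bounding the pool with `workers` caps the number of concurrent model calls, which is often more important than raw parallelism when each call holds GPU or memory resources.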

Go’s standard library includes robust networking and HTTP handling capabilities, notably the net/http package, that simplify the development of RESTful APIs, while the gRPC project’s Go implementation (google.golang.org/grpc) provides mature support for gRPC services; both interface styles are common in microservices architectures. The language’s emphasis on simplicity and explicit error handling contributes to more reliable and maintainable AI services, reducing the likelihood of runtime failures that could cascade through distributed systems.
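
A minimal `net/http` endpoint illustrates the explicit error handling described above. The `/predict` route, the `PredictRequest` shape, the 1 MiB body limit, and the mean-of-features "model" are all hypothetical; the point is that every failure path (wrong method, oversized or malformed body, empty input) returns a specific HTTP status instead of propagating an unhandled exception.

```go
package main

import (
	"encoding/json"
	"errors"
	"io"
	"log"
	"net/http"
)

// PredictRequest is a hypothetical request contract for an inference endpoint.
type PredictRequest struct {
	Features []float64 `json:"features"`
}

// parsePredictRequest decodes and validates a request body, returning an
// explicit error for malformed or empty input instead of panicking.
func parsePredictRequest(body []byte) (PredictRequest, error) {
	var req PredictRequest
	if err := json.Unmarshal(body, &req); err != nil {
		return PredictRequest{}, err
	}
	if len(req.Features) == 0 {
		return PredictRequest{}, errors.New("features must not be empty")
	}
	return req, nil
}

func predictHandler(w http.ResponseWriter, r *http.Request) {
	if r.Method != http.MethodPost {
		http.Error(w, "method not allowed", http.StatusMethodNotAllowed)
		return
	}
	// Cap the body at 1 MiB (an assumed limit) before reading it.
	body, err := io.ReadAll(http.MaxBytesReader(w, r.Body, 1<<20))
	if err != nil {
		http.Error(w, "request body too large or unreadable", http.StatusBadRequest)
		return
	}
	req, err := parsePredictRequest(body)
	if err != nil {
		http.Error(w, err.Error(), http.StatusBadRequest)
		return
	}
	// Placeholder "model": the mean of the input features.
	sum := 0.0
	for _, f := range req.Features {
		sum += f
	}
	w.Header().Set("Content-Type", "application/json")
	if err := json.NewEncoder(w).Encode(map[string]float64{"score": sum / float64(len(req.Features))}); err != nil {
		log.Printf("write response: %v", err)
	}
}

func main() {
	mux := http.NewServeMux()
	mux.HandleFunc("/predict", predictHandler)
	log.Fatal(http.ListenAndServe(":8080", mux))
}
```

Keeping validation in a plain function such as `parsePredictRequest` also makes it testable without starting a server.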

Python: The AI Ecosystem Champion

Python’s dominance in the artificial intelligence and machine learning ecosystem makes it a natural choice for AI microservices, particularly those that require direct integration with machine learning frameworks, data science libraries, and AI model serving infrastructure. The extensive ecosystem of Python libraries, including TensorFlow, PyTorch, scikit-learn, NumPy, and Pandas, provides unmatched capabilities for implementing sophisticated AI algorithms and data processing pipelines within microservices.

The rapid prototyping capabilities of Python enable AI teams to quickly experiment with different approaches, implement proof-of-concept services, and iterate on AI models without extensive development overhead. This agility is particularly valuable in AI development, where requirements often evolve based on model performance, data availability, and business needs. The dynamic nature of Python facilitates rapid adaptation to changing requirements and seamless integration of new AI capabilities.

Python’s extensive community support and documentation ecosystem provide significant advantages for teams building AI microservices. The availability of pre-trained models, ready-to-use implementations of complex algorithms, and comprehensive tutorials accelerates development timelines while reducing the risk associated with implementing cutting-edge AI techniques. The language’s readability and expressiveness also facilitate knowledge transfer and collaboration among team members with varying levels of AI expertise.

Performance Considerations and Benchmarking

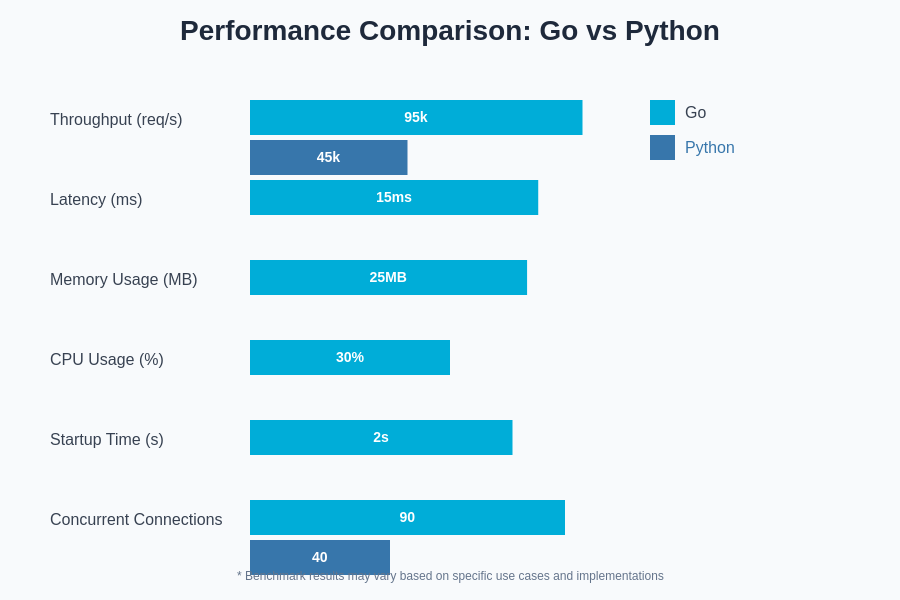

Benchmarks of microservice workloads commonly show significant differences between Go and Python, particularly under the high-load conditions typical of production AI systems. Go generally delivers higher throughput and lower latency for API endpoints, network I/O operations, and concurrent request handling, in part because the standard CPython interpreter’s global interpreter lock (GIL) prevents multiple threads from executing Python bytecode in parallel. These performance advantages become increasingly important as AI services scale to handle thousands of concurrent inference requests or process large volumes of real-time data.

Memory utilization patterns also differ substantially between the two languages. Go’s efficient memory management and smaller runtime footprint result in lower resource consumption per service instance, enabling higher deployment density and reduced infrastructure costs. Python’s memory overhead, while manageable for smaller services, can become significant when deploying numerous microservices instances, particularly in containerized environments where resource limits are strictly enforced.

The computational performance gap between Go and Python is most pronounced in CPU-intensive operations such as data transformation, serialization, and protocol handling. However, this gap narrows considerably when Python services leverage optimized libraries written in C or Fortran, or when using specialized AI inference engines that handle the computationally intensive aspects of machine learning workloads.

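These performance characteristics create distinct optimization opportunities: latency-sensitive networking, serialization, and coordination code tends to benefit most from Go, while numerically heavy work that already runs inside native libraries gains comparatively little from a rewrite.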

Development Velocity and Team Productivity

Development velocity represents a critical factor in choosing between Go and Python for AI microservices, as time-to-market pressures often drive technology decisions in competitive AI landscapes. Python’s concise syntax, extensive libraries, and rapid prototyping capabilities typically result in faster initial development cycles, particularly for services that heavily leverage existing AI frameworks and data science tools.

The learning curve considerations differ significantly between the two languages. Python’s accessibility and widespread adoption in the AI community mean that most AI engineers and data scientists can quickly contribute to Python-based microservices. Go, while having a relatively simple syntax, requires developers to learn new paradigms around concurrency, error handling, and system-level programming that may not be familiar to AI practitioners.

However, the long-term maintainability advantages of Go often compensate for the initial development overhead. The language’s emphasis on explicit error handling, static typing, and clear interfaces results in more robust services that require less debugging and maintenance effort over time. The compilation step in Go also catches many classes of errors at build time, such as type mismatches and unused variables or imports, that might only surface at runtime in Python applications.
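
The sketch below shows what explicit error handling looks like at a service boundary: a hypothetical `ModelRegistry` wraps a sentinel error with `fmt.Errorf` and `%w`, and the caller classifies it with `errors.Is`. Because `Predict` returns two values, assigning its result to a single variable fails to compile, so code that uses the prediction must also receive the error (or discard it explicitly with `_`).

```go
package main

import (
	"errors"
	"fmt"
)

// ErrModelNotLoaded is a hypothetical sentinel error exposed by the service.
var ErrModelNotLoaded = errors.New("model not loaded")

// ModelRegistry is a hypothetical in-memory registry of loaded models.
type ModelRegistry struct {
	models map[string]bool
}

// Predict returns a placeholder prediction or a wrapped error.
func (r *ModelRegistry) Predict(name string, x float64) (float64, error) {
	if !r.models[name] {
		// %w wraps the sentinel so callers can still match it with errors.Is.
		return 0, fmt.Errorf("predict %q: %w", name, ErrModelNotLoaded)
	}
	return x * 2, nil // placeholder computation
}

// statusFor maps errors to HTTP status codes at the service boundary.
func statusFor(err error) int {
	switch {
	case err == nil:
		return 200
	case errors.Is(err, ErrModelNotLoaded):
		return 503
	default:
		return 500
	}
}

func main() {
	reg := &ModelRegistry{models: map[string]bool{"sentiment": true}}
	_, err := reg.Predict("translation", 1.0)
	fmt.Println(err, statusFor(err))
}
```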

Ecosystem Integration and Library Support

The ecosystem considerations for AI microservices extend beyond the core programming language to encompass the entire technology stack, including machine learning frameworks, data processing libraries, monitoring tools, and deployment platforms. Python’s mature AI ecosystem integrates closely with tools such as TensorFlow Serving, MLflow, Kubeflow, and various model management platforms designed for AI workloads, most of which offer Python as their primary client or SDK language.

Go’s ecosystem, while smaller in the AI domain, offers robust support for the infrastructure and operational aspects of microservices. Libraries for service discovery, load balancing, circuit breakers, and observability are well-developed and production-tested. The growing adoption of Go in cloud-native environments has resulted in excellent tooling for containerization, orchestration, and service mesh integration.
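
To make the circuit-breaker idea concrete, here is a deliberately minimal, hypothetical breaker written from scratch (it is not the API of any particular library). After `maxFailures` consecutive failures it rejects calls with `ErrOpen` until a cooldown passes, then lets calls through again. Production services would more likely adopt an established Go library that adds proper half-open probing and metrics.

```go
package main

import (
	"errors"
	"fmt"
	"sync"
	"time"
)

// ErrOpen is returned while the breaker is rejecting calls.
var ErrOpen = errors.New("circuit open")

// Breaker is a deliberately minimal, hypothetical circuit breaker. After
// maxFailures consecutive failures it rejects calls until cooldown has
// elapsed; after that, calls are allowed again and a single success closes it.
type Breaker struct {
	mu          sync.Mutex
	failures    int
	maxFailures int
	cooldown    time.Duration
	openedAt    time.Time
}

func NewBreaker(maxFailures int, cooldown time.Duration) *Breaker {
	return &Breaker{maxFailures: maxFailures, cooldown: cooldown}
}

// Call runs fn unless the breaker is open, and records the outcome.
func (b *Breaker) Call(fn func() error) error {
	b.mu.Lock()
	open := b.failures >= b.maxFailures && time.Since(b.openedAt) < b.cooldown
	b.mu.Unlock()
	if open {
		return ErrOpen
	}

	err := fn()

	b.mu.Lock()
	defer b.mu.Unlock()
	if err != nil {
		b.failures++
		if b.failures >= b.maxFailures {
			b.openedAt = time.Now() // (re)open the circuit
		}
		return err
	}
	b.failures = 0
	return nil
}

func main() {
	b := NewBreaker(3, 5*time.Second)
	failing := func() error { return errors.New("upstream timeout") }
	for i := 0; i < 5; i++ {
		// The first three calls reach the upstream; the last two are rejected.
		fmt.Println(b.Call(failing))
	}
}
```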

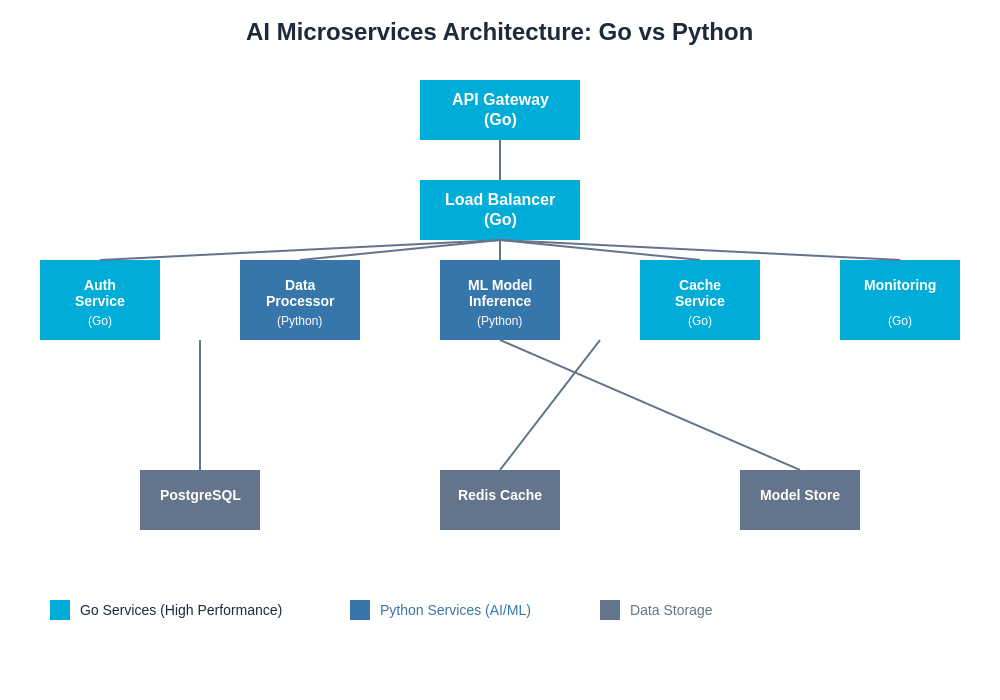

The integration patterns between Go and Python services create interesting hybrid architectures where each language is utilized for its strengths. Common approaches include using Python for AI model inference services while implementing API gateways, load balancers, and coordination services in Go. This polyglot approach maximizes the benefits of both languages while managing the complexity of multi-language systems.
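
A hybrid gateway of this kind can be sketched with the standard library’s `httputil.ReverseProxy`. The route table and the hostnames `python-inference` and `go-registry` are hypothetical; the sketch forwards each request to the backend with the longest matching path prefix, so the Python inference service and the Go services sit behind one entry point.

```go
package main

import (
	"log"
	"net/http"
	"net/http/httputil"
	"net/url"
	"strings"
)

// routes maps path prefixes to backend base URLs. Both hostnames are
// hypothetical: a Python inference service and a Go model-registry service.
var routes = map[string]string{
	"/v1/predict": "http://python-inference:8000",
	"/v1/models":  "http://go-registry:9000",
}

// backendFor returns the backend whose prefix matches the path most
// specifically, or "" when no route applies.
func backendFor(path string) string {
	best, bestLen := "", 0
	for prefix, backend := range routes {
		if strings.HasPrefix(path, prefix) && len(prefix) > bestLen {
			best, bestLen = backend, len(prefix)
		}
	}
	return best
}

func main() {
	// Build one reverse proxy per backend up front.
	proxies := make(map[string]*httputil.ReverseProxy)
	for _, backend := range routes {
		target, err := url.Parse(backend)
		if err != nil {
			log.Fatal(err)
		}
		proxies[backend] = httputil.NewSingleHostReverseProxy(target)
	}

	gateway := http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		backend := backendFor(r.URL.Path)
		if backend == "" {
			http.NotFound(w, r)
			return
		}
		proxies[backend].ServeHTTP(w, r)
	})
	log.Fatal(http.ListenAndServe(":8080", gateway))
}
```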

Scalability Patterns and Architecture Design

Scalability patterns for AI microservices must account for the unique characteristics of AI workloads, including variable computational requirements, data dependencies, and model lifecycle management. Go’s efficient resource utilization and fast startup times make it particularly well-suited for auto-scaling scenarios where services need to rapidly respond to changing load patterns.

The horizontal scaling characteristics of Go services enable fine-grained resource allocation and efficient utilization of cloud infrastructure. The small memory footprint and fast startup times facilitate rapid scaling events, reducing the time required to respond to traffic spikes or batch processing requirements. These characteristics are particularly valuable for AI services that experience irregular load patterns based on user activity or scheduled data processing workflows.
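
Rapid scaling also depends on instances shutting down cleanly. A minimal sketch, assuming the orchestrator sends SIGTERM before stopping a container (as Kubernetes does), uses `signal.NotifyContext` and `http.Server.Shutdown` so in-flight requests can finish during scale-down. The port, health route, and timeout values are illustrative assumptions.

```go
package main

import (
	"context"
	"errors"
	"log"
	"net/http"
	"os"
	"os/signal"
	"syscall"
	"time"
)

// newServer builds an HTTP server with an explicit header-read timeout;
// the value is an illustrative assumption.
func newServer(addr string, h http.Handler) *http.Server {
	return &http.Server{
		Addr:              addr,
		Handler:           h,
		ReadHeaderTimeout: 5 * time.Second,
	}
}

func main() {
	mux := http.NewServeMux()
	mux.HandleFunc("/healthz", func(w http.ResponseWriter, _ *http.Request) {
		w.WriteHeader(http.StatusOK)
	})
	srv := newServer(":8080", mux)

	// ctx is cancelled on SIGTERM (sent by the orchestrator) or Ctrl-C.
	ctx, stop := signal.NotifyContext(context.Background(), syscall.SIGTERM, os.Interrupt)
	defer stop()

	go func() {
		if err := srv.ListenAndServe(); err != nil && !errors.Is(err, http.ErrServerClosed) {
			log.Fatal(err)
		}
	}()

	<-ctx.Done()

	// Stop accepting connections and give in-flight requests up to 10s.
	shutdownCtx, cancel := context.WithTimeout(context.Background(), 10*time.Second)
	defer cancel()
	if err := srv.Shutdown(shutdownCtx); err != nil {
		log.Printf("shutdown: %v", err)
	}
}
```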

Python services often require more careful scaling strategies due to higher resource requirements and longer startup times. However, the rich ecosystem of Python-based AI tools provides sophisticated approaches to model serving, including GPU acceleration, model parallelization, and intelligent caching strategies that can overcome some performance limitations. The integration with specialized AI serving frameworks can provide scalability characteristics that rival or exceed traditional application servers.

The architectural patterns for AI microservices must balance performance requirements, development velocity, operational complexity, and cost considerations across diverse service types and deployment scenarios.

Container Orchestration and Deployment

Container orchestration strategies differ significantly between Go and Python microservices, primarily due to resource requirements, startup characteristics, and runtime dependencies. Go’s ability to produce statically linked binaries (for example, by building with cgo disabled via CGO_ENABLED=0) simplifies container creation and reduces image sizes, resulting in faster deployment times and reduced storage costs. The minimal runtime dependencies of Go applications also reduce security surface area and simplify dependency management in containerized environments.

Python services typically require more complex containerization strategies to manage dependencies, virtual environments, and runtime requirements. However, the AI ecosystem has developed sophisticated container optimization techniques, including multi-stage builds, dependency layer caching, and specialized base images optimized for machine learning workloads. These optimizations can significantly reduce the operational overhead associated with Python-based AI services.

The resource scheduling considerations for container orchestration platforms must account for the different characteristics of Go and Python services. Go services typically run well on general-purpose CPU nodes with modest CPU and memory requests, while Python AI services often require GPU-aware scheduling and specialized resource allocation for machine learning workloads. Modern orchestration platforms like Kubernetes accommodate both service types within unified deployment pipelines through mechanisms such as resource requests and limits, node selectors, and GPUs exposed as extended resources by device plugins.

Monitoring, Observability, and Debugging

Observability requirements for AI microservices encompass traditional application metrics along with AI-specific concerns such as model performance, inference latency, data drift detection, and resource utilization patterns. Go’s standard library provides structured logging (log/slog, added in Go 1.21), runtime metrics, and profiling via net/http/pprof, while mature libraries such as the Prometheus client and the OpenTelemetry Go SDK cover metrics export and distributed tracing; together these simplify the implementation of comprehensive observability solutions. The language’s performance characteristics also minimize the overhead associated with extensive monitoring instrumentation.
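
As a minimal sketch, assuming Go 1.21 or later for `log/slog`, the service below emits one JSON log line per inference and publishes a request counter and a cumulative latency sum through `expvar`, which serves them at `/debug/vars` on the default mux. The metric names and the `sentiment` model label are hypothetical; a production setup would more commonly export latency histograms through Prometheus or OpenTelemetry.

```go
package main

import (
	"expvar"
	"log/slog"
	"net/http"
	"os"
	"time"
)

// Hypothetical metric names; expvar serves them as JSON at /debug/vars.
var (
	inferenceCount   = expvar.NewInt("inference_requests_total")
	inferenceLatency = expvar.NewFloat("inference_latency_ms_sum")
)

// logger writes one JSON object per log call to stdout.
var logger = slog.New(slog.NewJSONHandler(os.Stdout, nil))

// recordInference updates both metrics and emits a structured log line.
func recordInference(model string, d time.Duration) {
	ms := float64(d.Microseconds()) / 1000
	inferenceCount.Add(1)
	inferenceLatency.Add(ms)
	logger.Info("inference", "model", model, "latency_ms", ms)
}

func main() {
	http.HandleFunc("/predict", func(w http.ResponseWriter, _ *http.Request) {
		start := time.Now()
		// Placeholder for the actual model call.
		w.WriteHeader(http.StatusOK)
		recordInference("sentiment", time.Since(start))
	})
	// Importing expvar registers /debug/vars on http.DefaultServeMux.
	if err := http.ListenAndServe(":8080", nil); err != nil {
		logger.Error("server stopped", "err", err)
		os.Exit(1)
	}
}
```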

Python’s rich ecosystem includes sophisticated monitoring and debugging tools specifically designed for AI applications. Libraries for model monitoring, performance profiling, and automated alerting provide comprehensive visibility into AI service behavior. The integration with popular observability platforms and the availability of AI-specific monitoring solutions create powerful debugging and performance optimization capabilities.

The debugging experience differs considerably between the two languages. Go’s explicit error handling and static typing provide clear error propagation and debugging information, while Python’s dynamic nature enables interactive debugging and runtime inspection capabilities. The choice between these approaches often depends on team preferences and the complexity of the AI algorithms being implemented.

Cost Optimization and Resource Efficiency

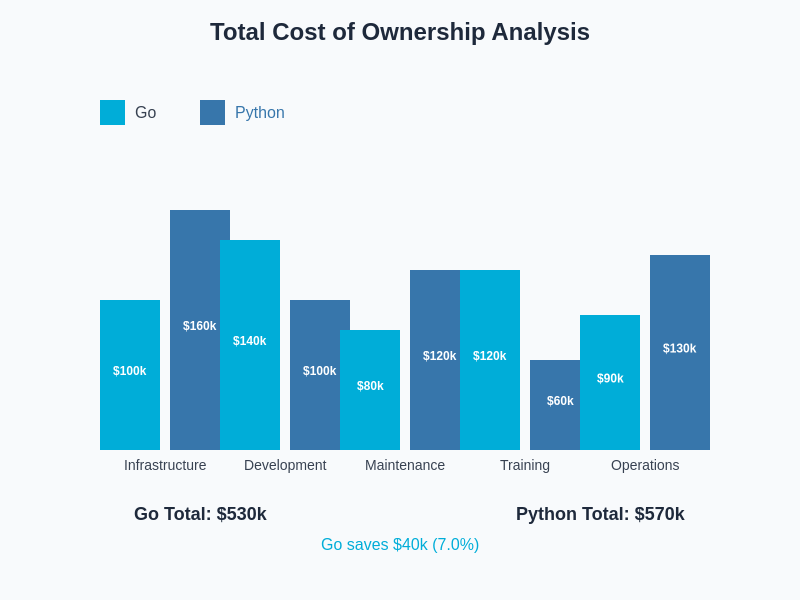

Cost considerations for AI microservices encompass infrastructure expenses, development resources, operational overhead, and long-term maintenance costs. Go’s superior resource efficiency typically results in lower infrastructure costs due to reduced CPU and memory requirements per service instance. This efficiency becomes particularly significant at scale, where even small per-instance savings can result in substantial cost reductions across large deployments.
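
The arithmetic behind that claim is simple capacity planning: the number of replicas is peak load divided by per-replica throughput, rounded up, and monthly cost scales with the replica count. All figures in this sketch (5,000 requests per second, the per-replica throughputs, and the $0.10 hourly price) are hypothetical inputs chosen for illustration, not measurements of Go or Python.

```go
package main

import "fmt"

// hoursPerMonth approximates 24 * 365 / 12.
const hoursPerMonth = 730

// replicasNeeded is ceil(peakRPS / perReplicaRPS) using integer arithmetic.
func replicasNeeded(peakRPS, perReplicaRPS int) int {
	return (peakRPS + perReplicaRPS - 1) / perReplicaRPS
}

// monthlyCost multiplies the replica count by an hourly price per replica.
func monthlyCost(replicas int, hourlyPrice float64) float64 {
	return float64(replicas) * hourlyPrice * hoursPerMonth
}

func main() {
	const peakRPS = 5000 // hypothetical peak load
	// Hypothetical per-replica throughput for two builds of the same service.
	services := []struct {
		name          string
		perReplicaRPS int
		hourlyPrice   float64
	}{
		{"service-a", 1000, 0.10},
		{"service-b", 400, 0.10},
	}
	for _, s := range services {
		n := replicasNeeded(peakRPS, s.perReplicaRPS)
		fmt.Printf("%s: %d replicas, about $%.2f/month\n", s.name, n, monthlyCost(n, s.hourlyPrice))
	}
}
```

With these made-up inputs, service-a needs 5 replicas and service-b needs 13; the gap in per-replica throughput, not the price per replica, drives the difference.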

The operational efficiency gains from Go services include faster deployment times, reduced debugging overhead, and simplified dependency management that can significantly impact total cost of ownership. However, these benefits must be weighed against potentially higher development costs if teams lack Go expertise or require extensive training to achieve productivity.

Python services may incur higher infrastructure costs due to resource requirements, but these costs can be offset by faster development cycles, reduced time-to-market, and the availability of pre-built solutions that minimize custom development efforts. The mature ecosystem of Python AI tools also provides cost-effective solutions for common AI service requirements, reducing the need for custom implementations.

The total cost of ownership analysis must consider both immediate development costs and long-term operational expenses across the entire service lifecycle.

Security Considerations and Best Practices

Security requirements for AI microservices must address both traditional application security concerns and AI-specific vulnerabilities such as model extraction, adversarial attacks, and data privacy protection. Go’s memory safety features and explicit error handling contribute to more secure service implementations: bounds checking on slices and arrays rules out buffer overflows in Go code that avoids the unsafe package and cgo, and a nil pointer dereference triggers a runtime panic, which can be recovered, instead of silent memory corruption.
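
A small sketch shows the runtime check at work: indexing past the end of a slice panics instead of reading adjacent memory, and a deferred `recover` turns that panic into an ordinary error. The `safeLookup` helper is hypothetical and purely illustrative; idiomatic code would normally check `i < len(labels)` explicitly rather than rely on recovery.

```go
package main

import "fmt"

// safeLookup reads labels[i]. An out-of-range index makes the Go runtime
// panic (it never reads past the slice), and the deferred recover converts
// that panic into an ordinary error for this illustration.
func safeLookup(labels []string, i int) (label string, err error) {
	defer func() {
		if r := recover(); r != nil {
			err = fmt.Errorf("lookup failed: %v", r)
		}
	}()
	return labels[i], nil
}

func main() {
	labels := []string{"cat", "dog"}
	fmt.Println(safeLookup(labels, 1)) // valid index
	fmt.Println(safeLookup(labels, 3)) // out of range: returns an error
}
```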

The smaller attack surface of Go services, combined with static compilation and minimal runtime dependencies, simplifies security auditing and vulnerability management. The language’s standard library includes robust cryptographic functions and secure networking capabilities that facilitate the implementation of security best practices without relying on external dependencies.
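
For example, a service can terminate TLS with nothing beyond the standard library. In this minimal sketch the TLS 1.2 minimum is an assumed policy, and `cert.pem` and `key.pem` are placeholder paths for the service’s certificate and private key.

```go
package main

import (
	"crypto/tls"
	"log"
	"net/http"
	"time"
)

// newTLSConfig rejects protocol versions older than TLS 1.2 (an assumed policy).
func newTLSConfig() *tls.Config {
	return &tls.Config{MinVersion: tls.VersionTLS12}
}

func main() {
	srv := &http.Server{
		Addr:              ":8443",
		TLSConfig:         newTLSConfig(),
		ReadHeaderTimeout: 5 * time.Second,
	}
	// cert.pem and key.pem are placeholder paths to the certificate and key.
	log.Fatal(srv.ListenAndServeTLS("cert.pem", "key.pem"))
}
```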

Python’s dynamic nature and extensive ecosystem require more careful security management, particularly around dependency vulnerabilities and runtime security concerns. However, the AI ecosystem has developed sophisticated security tools and frameworks specifically designed for machine learning applications, including secure model serving platforms, privacy-preserving computation libraries, and automated vulnerability scanning tools.

Hybrid Architecture Strategies

Modern AI platforms increasingly adopt hybrid approaches that leverage both Go and Python services within unified architectures, maximizing the strengths of each language while mitigating their respective limitations. Common patterns include using Go for high-performance gateway services, load balancing, and service coordination while implementing AI inference and data processing services in Python.

The communication protocols between Go and Python services typically utilize standard interfaces such as REST APIs, gRPC, or message queues that abstract language-specific implementations. This approach enables teams to choose the optimal language for each service type while maintaining loose coupling and operational flexibility.
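
On the Go side, calling a Python inference service over REST might look like the sketch below. The endpoint URL, the `PredictRequest`/`PredictResponse` JSON contract, and the 500 ms budget are hypothetical, and the Python service is assumed to accept and return exactly these JSON shapes. The context deadline bounds the whole call, so a slow model cannot stall the Go caller indefinitely.

```go
package main

import (
	"bytes"
	"context"
	"encoding/json"
	"fmt"
	"io"
	"net/http"
	"time"
)

// Hypothetical JSON contract shared with the Python inference service.
type PredictRequest struct {
	Text string `json:"text"`
}

type PredictResponse struct {
	Label string  `json:"label"`
	Score float64 `json:"score"`
}

// decodeResponse parses the service's JSON reply.
func decodeResponse(b []byte) (PredictResponse, error) {
	var out PredictResponse
	if err := json.Unmarshal(b, &out); err != nil {
		return PredictResponse{}, fmt.Errorf("decode response: %w", err)
	}
	return out, nil
}

// predict POSTs one request; ctx carries the caller's deadline.
func predict(ctx context.Context, client *http.Client, url string, req PredictRequest) (PredictResponse, error) {
	body, err := json.Marshal(req)
	if err != nil {
		return PredictResponse{}, err
	}
	httpReq, err := http.NewRequestWithContext(ctx, http.MethodPost, url, bytes.NewReader(body))
	if err != nil {
		return PredictResponse{}, err
	}
	httpReq.Header.Set("Content-Type", "application/json")

	resp, err := client.Do(httpReq)
	if err != nil {
		return PredictResponse{}, err // includes deadline-exceeded errors
	}
	defer resp.Body.Close()

	if resp.StatusCode != http.StatusOK {
		return PredictResponse{}, fmt.Errorf("inference service returned %s", resp.Status)
	}
	b, err := io.ReadAll(resp.Body)
	if err != nil {
		return PredictResponse{}, err
	}
	return decodeResponse(b)
}

func main() {
	// Bound the entire call to an assumed 500 ms latency budget.
	ctx, cancel := context.WithTimeout(context.Background(), 500*time.Millisecond)
	defer cancel()

	res, err := predict(ctx, http.DefaultClient, "http://python-inference:8000/v1/predict", PredictRequest{Text: "great product"})
	if err != nil {
		fmt.Println("prediction failed:", err)
		return
	}
	fmt.Printf("%s (%.2f)\n", res.Label, res.Score)
}
```

Keeping the contract in explicit struct types means a field rename on the Python side shows up as a decoding mismatch at one well-defined place rather than deep in business logic.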

Service mesh technologies provide sophisticated traffic management, security, and observability capabilities that simplify the operation of polyglot microservices architectures. These platforms enable consistent operational practices across services regardless of implementation language while providing the flexibility to optimize individual services based on their specific requirements.

Future Considerations and Technology Evolution

The evolution of both Go and Python continues to address the specific needs of AI microservices development. Additions to Go such as generics (introduced in Go 1.18) and module-based dependency management, together with steadily improving debugging tools, enhance its suitability for complex AI systems. Similarly, Python’s performance optimizations (such as the interpreter speedups in CPython 3.11), type system enhancements, and AI framework innovations continue to strengthen its position in the AI ecosystem.
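
As a small illustration of what generics enable, the hypothetical `Batch` helper below splits any slice into fixed-size chunks, the kind of utility a Go service might use to group inputs before sending them to a batched inference endpoint. It requires Go 1.18 or later.

```go
package main

import "fmt"

// Batch splits items into consecutive chunks of at most size elements.
// Each chunk is capped with a full slice expression, so appending to one
// chunk cannot overwrite the next. A non-positive size returns nil.
func Batch[T any](items []T, size int) [][]T {
	if size <= 0 {
		return nil
	}
	var out [][]T
	for start := 0; start < len(items); start += size {
		end := start + size
		if end > len(items) {
			end = len(items)
		}
		out = append(out, items[start:end:end])
	}
	return out
}

func main() {
	fmt.Println(Batch([]string{"a", "b", "c"}, 2)) // [[a b] [c]]
	fmt.Println(len(Batch([]float64{0.1, 0.2, 0.3, 0.4}, 3)))
}
```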

Emerging technologies such as WebAssembly, edge computing, and specialized AI hardware create new considerations for language selection in AI microservices. Go’s compilation model and performance characteristics position it well for edge deployment scenarios, while Python’s ecosystem advantages continue to drive innovation in cloud-based AI platforms.

The convergence of AI and traditional software development practices suggests that future AI microservices platforms will increasingly resemble traditional distributed systems with AI-specific optimizations. This evolution favors approaches that balance AI capabilities with proven software engineering practices, operational reliability, and long-term maintainability.

The decision between Go and Python for AI microservices ultimately depends on specific project requirements, team capabilities, performance constraints, and strategic objectives. Organizations must carefully evaluate their priorities across performance, development velocity, operational complexity, and ecosystem compatibility to make informed technology choices that support their AI initiatives effectively.

Understanding the strengths and limitations of each approach enables teams to design AI microservices architectures that deliver exceptional performance, maintainability, and scalability while supporting rapid innovation and competitive differentiation in the evolving AI landscape.

Disclaimer

This article is for informational purposes only and does not constitute professional advice. The comparisons and recommendations presented are based on general characteristics and common use cases. Actual performance, costs, and suitability may vary significantly based on specific requirements, implementation details, and deployment environments. Readers should conduct thorough evaluation and testing based on their specific use cases before making technology decisions for production systems.