The proliferation of artificial intelligence applications has transformed how organizations approach data storage and retrieval, and graph databases have emerged as a key technology for building the knowledge graphs that power many modern AI systems. Neo4j and Amazon Neptune are two prominent options in this space, each offering distinct advantages for organizations that want to use connected data in their AI initiatives.

The choice between Neo4j and Amazon Neptune represents more than a simple technology selection; it fundamentally shapes how AI systems process relationships, infer patterns, and generate insights from interconnected data structures that mirror the complexity of real-world relationships.

Understanding Graph Databases in AI Context

Graph databases represent a paradigm shift from traditional relational database systems by storing data as interconnected nodes and relationships, creating a natural representation of real-world entities and their connections. This structure proves particularly valuable for artificial intelligence applications where understanding relationships between entities is crucial for pattern recognition, recommendation systems, fraud detection, and semantic search capabilities.

The structure of a graph database fits the way many AI workloads explore data: relationships are stored explicitly and traversed directly, rather than reconstructed at query time with the joins a relational database requires. For AI workloads that explore multi-hop relationships or match patterns across large networks of connected data, this native relationship handling can make queries simpler to express and faster to run.
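To make the join-versus-traversal difference concrete, here’s a minimal Python sketch over a toy in-memory graph (all names are hypothetical). The relational version keeps relationships as rows in one edge table and, lacking an index, scans the whole table once per hop; the graph version stores each node’s neighbours with the node and follows them directly. A real relational engine would index the source column, so each hop would cost index lookups rather than full scans, but the sketch still shows the structural point: the adjacency version only touches edges leaving the current frontier.

```python
from collections import defaultdict

# Hypothetical toy data: relationships stored as (source, target) rows.
EDGES = [
    ("alice", "bob"), ("bob", "carol"), ("carol", "dave"),
    ("alice", "erin"), ("erin", "frank"), ("frank", "dave"),
]


def k_hop_via_joins(edges, start, hops):
    """Relational style: each hop is a self-join, emulated here as a full scan
    of the edge table. Returns (reachable nodes, rows scanned)."""
    frontier, seen, rows_scanned = {start}, {start}, 0
    for _ in range(hops):
        next_frontier = set()
        for src, dst in edges:  # no index: every row is examined on every hop
            rows_scanned += 1
            if src in frontier and dst not in seen:
                next_frontier.add(dst)
        seen |= next_frontier
        frontier = next_frontier
    return seen - {start}, rows_scanned


def build_adjacency(edges):
    """Graph style: each node keeps a list of its outgoing neighbours."""
    adjacency = defaultdict(list)
    for src, dst in edges:
        adjacency[src].append(dst)
    return adjacency


def k_hop_via_adjacency(adjacency, start, hops):
    """Follow neighbour lists from the frontier only.
    Returns (reachable nodes, edges followed)."""
    frontier, seen, followed = {start}, {start}, 0
    for _ in range(hops):
        next_frontier = set()
        for node in frontier:
            for dst in adjacency.get(node, []):
                followed += 1
                if dst not in seen:
                    next_frontier.add(dst)
        seen |= next_frontier
        frontier = next_frontier
    return seen - {start}, followed


reachable_j, rows_scanned = k_hop_via_joins(EDGES, "alice", hops=2)
reachable_a, edges_followed = k_hop_via_adjacency(build_adjacency(EDGES), "alice", hops=2)
```

With this data, both functions find bob, erin, carol, and frank within two hops of alice; the join version scans 12 rows while the adjacency version follows 4 edges.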

In the context of knowledge graphs, both Neo4j and Amazon Neptune provide the foundational infrastructure necessary for storing and querying the semantic relationships that enable AI systems to understand context, make inferences, and provide intelligent responses. The effectiveness of these systems in supporting AI initiatives depends largely on their ability to handle complex queries, scale efficiently, and integrate seamlessly with existing machine learning pipelines and data processing workflows.

Neo4j Architecture and AI Capabilities

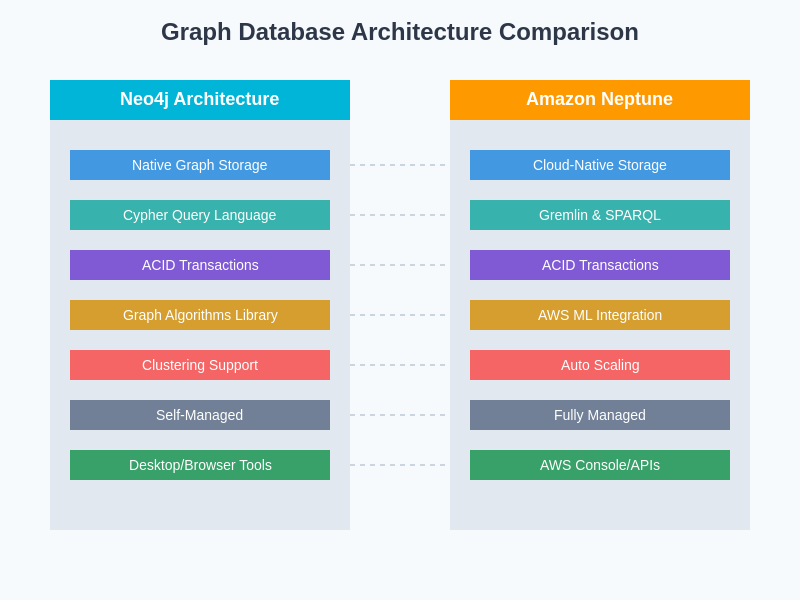

Neo4j is one of the longest-established graph databases and offers a mature platform designed specifically for graph workloads. Its native storage engine uses index-free adjacency: each node record points directly to its relationships, so the cost of a traversal depends mainly on how much of the graph it visits rather than on the total size of the graph. This makes Neo4j well suited to AI applications that explore knowledge graphs in real time and analyze relationships as they change.

Cypher, Neo4j’s declarative query language, lets data scientists and AI engineers describe graph patterns with an ASCII-art syntax, for example (a:Person)-[:KNOWS]->(b:Person), combined with SQL-like clauses such as MATCH, WHERE, and RETURN. This accessibility has contributed to Neo4j’s adoption in AI research and development environments, where rapid prototyping and iterative algorithm development are essential.

Neo4j’s ecosystem includes integrations with popular machine learning frameworks and data science tools, such as a Python client for its Graph Data Science (GDS) library, so graph-based insights can be fed into broader AI workflows. GDS provides built-in graph algorithms, including centrality measures (such as PageRank), community detection (such as Louvain), and similarity calculations (such as Node Similarity), which support AI model development and feature engineering.
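GDS runs algorithms like PageRank as library procedures over an in-memory projection of the graph. To illustrate what a centrality score computes (this is not GDS’s implementation), here’s a minimal pure-Python power-iteration PageRank over a hypothetical link graph, using the commonly used damping factor of 0.85.

```python
def pagerank(graph, damping=0.85, iterations=50):
    """Power-iteration PageRank over {node: [out-neighbours]}.
    Rank held by nodes without outgoing links is spread evenly over all
    nodes, so the scores always sum to 1."""
    nodes = set(graph) | {dst for outs in graph.values() for dst in outs}
    n = len(nodes)
    rank = {v: 1.0 / n for v in nodes}
    for _ in range(iterations):
        dangling = sum(rank[v] for v in nodes if not graph.get(v))
        # Teleport share plus redistributed dangling rank, for every node.
        new_rank = {v: (1.0 - damping) / n + damping * dangling / n for v in nodes}
        for src, outs in graph.items():
            if outs:
                share = damping * rank[src] / len(outs)
                for dst in outs:
                    new_rank[dst] += share
        rank = new_rank
    return rank


# Hypothetical link graph: three nodes point at "hub", which points back at "a".
LINKS = {"a": ["hub"], "b": ["hub"], "c": ["hub"], "hub": ["a"]}
scores = pagerank(LINKS)
```

Here "hub" receives links from three nodes and ends up with the highest score, and "a" outranks "b" and "c" because the high-ranked hub links to it. Scores like these are typical graph-derived features for downstream models.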

Neo4j can also be extended with plugins such as APOC and with user-defined procedures and functions written in Java (or another JVM language), allowing organizations to add functionality tailored to their AI use cases, analytical requirements, and performance needs.

Amazon Neptune Cloud-Native Advantages

Amazon Neptune is AWS’s fully managed graph database service, built to take advantage of the scalability and reliability of cloud infrastructure while natively supporting both the property graph and RDF data models. This dual-model approach gives organizations building AI systems the flexibility to integrate data from diverse sources with different semantic structures and relationship representations.

Because Neptune is a managed service, AWS handles operational tasks such as provisioning, patching, backups, and failover, letting AI teams focus on algorithm development and data modeling rather than infrastructure maintenance. Cluster storage grows automatically as data is added, and read capacity can be increased by adding read replicas; with Neptune Serverless, compute capacity also adjusts automatically within configured limits. This reduces, but does not eliminate, capacity planning: instance sizes, replica counts, and serverless limits still have to match the workload.

Neptune’s tight integration with the broader AWS ecosystem provides substantial advantages for organizations already invested in AWS services, enabling seamless data pipeline integration with services like Amazon SageMaker for machine learning, AWS Lambda for serverless processing, and Amazon Kinesis for real-time data streaming. This integration capability is particularly valuable for AI applications that require real-time knowledge graph updates and immediate inference capabilities.

Neptune supports Gremlin and openCypher for property graphs and SPARQL for RDF, giving teams the flexibility to choose the query paradigm that best fits each AI use case, whether that is property graph traversal or compliance with semantic web standards. Teams can adapt their querying strategies to the nature of their AI applications and the expertise of their developers; for example, teams with Cypher experience can reuse much of it through openCypher.

Performance Characteristics and Scalability

Performance is a crucial factor in choosing a graph database for AI, particularly for knowledge graphs with millions or billions of nodes and relationships. Neo4j’s native graph storage engine performs well on complex traversals and pattern matching. Because of index-free adjacency, a query that starts from indexed nodes and explores a bounded neighborhood tends to stay fast as the overall graph grows, although queries that touch a large share of the graph still scale with the amount of data they touch.

Neo4j Enterprise Edition’s clustering scales read-heavy AI workloads horizontally by distributing queries across multiple servers, which helps with the high-concurrency scenarios typical of production AI systems; writes to a given database are still coordinated by a single leader. Neo4j’s page cache and query plan caching further improve performance by keeping frequently accessed data in memory and avoiding repeated query planning.

Amazon Neptune’s performance is closely tied to the AWS infrastructure choices behind it. Storage scales automatically, while compute capacity is determined by the instance types and number of read replicas provisioned, or adjusts automatically within configured bounds when using Neptune Serverless. Teams running provisioned clusters therefore still need to size instances and replicas for their AI workloads.

Neptune Serverless can make scaling more cost-effective for variable AI workloads, because capacity follows actual usage rather than being provisioned for peak demand. This is particularly useful in AI research and development environments, where query patterns can vary significantly with experiments and data exploration.

The architectural differences between Neo4j and Amazon Neptune reflect different design philosophies. Neo4j is often deployed self-managed, which provides maximum control over optimization and customization (it is also available as a managed cloud service, Neo4j AuraDB), while Neptune is available only as a managed AWS service and prioritizes operational simplicity and cloud-native integration.

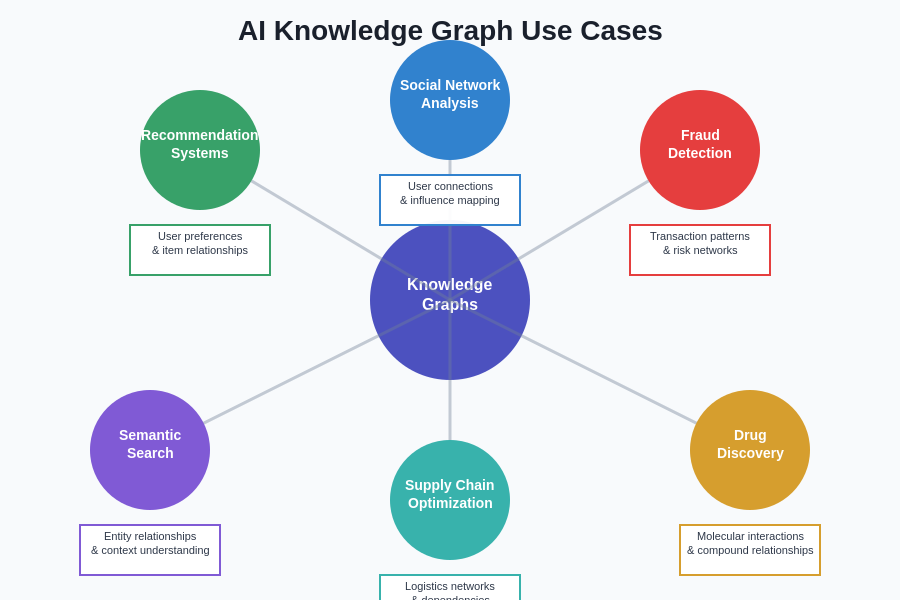

The diverse applications of knowledge graphs in artificial intelligence demonstrate the versatility and power of graph database technologies across multiple domains. From recommendation systems that leverage user-item relationships to fraud detection networks that analyze transaction patterns, graph databases provide the foundational infrastructure necessary for sophisticated AI applications that require deep understanding of entity relationships and contextual connections.

AI Integration and Machine Learning Workflows

How well a graph database integrates with AI and machine learning workflows is a critical selection factor, because AI systems need data to flow smoothly between graph storage, feature engineering, model training, and inference. Neo4j provides extensive integration options, including official drivers for Python, Java, JavaScript, .NET, and Go.

Neo4j’s Graph Data Science library offers a collection of graph algorithms and machine learning features designed for AI applications, including node embeddings (such as FastRP and node2vec), similarity algorithms, and pipelines for node classification and link prediction. Their outputs can be fed directly into model development pipelines, which reduces development effort for AI applications that use graph-based features.
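GDS’s embedding algorithms are more sophisticated, but the core idea behind FastRP (fast random projection) can be sketched in a few lines: start from random vectors and repeatedly average them over each node’s neighbours, so that nodes with similar neighbourhoods end up with similar vectors. The following is a simplified illustration under that assumption, not GDS’s algorithm; the sparsity of the random vectors, the equal weighting of iterations, and the function and parameter names are choices made for this sketch.

```python
import math
import random


def random_projection_embeddings(adjacency, dim=8, iterations=2, seed=42):
    """Simplified embedding in the spirit of FastRP (not GDS's algorithm).

    1. Give every node a sparse random vector with entries in {-1, 0, +1}.
    2. Each iteration, replace every node's vector with the average of its
       neighbours' vectors from the previous iteration, then L2-normalise it.
    3. Sum the normalised vectors from all iterations. The node's own random
       vector gets weight 0, so only neighbourhood structure is encoded.
    `adjacency` maps each node to a list of neighbours (undirected graph).
    """
    rng = random.Random(seed)
    nodes = sorted(adjacency)
    current = {v: [rng.choice((-1.0, 0.0, 0.0, 1.0)) for _ in range(dim)] for v in nodes}
    embedding = {v: [0.0] * dim for v in nodes}
    for _ in range(iterations):
        updated = {}
        for v in nodes:
            neighbours = adjacency[v]
            total = [0.0] * dim
            for u in neighbours:
                for i, x in enumerate(current[u]):
                    total[i] += x
            if neighbours:
                total = [x / len(neighbours) for x in total]
            norm = math.sqrt(sum(x * x for x in total)) or 1.0  # avoid dividing by 0
            updated[v] = [x / norm for x in total]
        for v in nodes:
            embedding[v] = [e + x for e, x in zip(embedding[v], updated[v])]
        current = updated
    return embedding


# Hypothetical bipartite user-item graph (undirected: edges listed both ways).
GRAPH = {
    "u1": ["i1", "i2"], "u2": ["i1", "i2"], "u3": ["i3"],
    "i1": ["u1", "u2"], "i2": ["u1", "u2"], "i3": ["u3"],
}
vectors = random_projection_embeddings(GRAPH)
```

Because "u1" and "u2" are connected to exactly the same items, their vectors come out identical, which is what makes such embeddings useful as features for similarity search or downstream models.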

Neo4j also supports streaming data integration, for example through its connector for Apache Kafka, so knowledge graphs can be updated continuously for dynamic AI applications that must adapt quickly to changing relationships. This matters most for recommendation systems, fraud detection algorithms, and real-time personalization engines that depend on current relationship data.

Amazon Neptune’s integration with AWS machine learning services brings graph-based insights into broader AI workflows. Neptune ML, for example, uses Amazon SageMaker and the Deep Graph Library (DGL) to train graph neural networks on data stored in Neptune, and services such as Amazon Comprehend can be combined with Neptune for natural language processing. This integration reduces development effort and provides access to managed machine learning infrastructure, although IAM roles, networking, and data export still need to be configured.

Neptune’s support for Amazon SageMaker notebook instances enables data scientists to work directly with graph data using familiar Jupyter environments while maintaining access to the full spectrum of AWS AI and analytics services.

Development Experience and Learning Curve

The development experience associated with each platform significantly impacts adoption speed and long-term productivity for AI development teams, particularly when considering the specialized knowledge required for effective graph database utilization. Neo4j’s mature ecosystem includes comprehensive documentation, extensive community resources, and educational materials specifically focused on AI and machine learning applications.

Cypher’s syntax reduces the learning curve for developers coming from relational databases, enabling faster onboarding and more efficient query development for AI applications. Neo4j Browser can visualize the execution plans produced by EXPLAIN and PROFILE, showing how a query runs and where it spends its effort, which helps developers build efficient algorithms that make effective use of graph structure.

Neo4j’s desktop application and browser-based interface offer powerful development and debugging capabilities that simplify the process of exploring graph structures and validating AI algorithm behavior. These tools prove particularly valuable during the iterative development process typical of AI projects, where understanding data relationships and query performance characteristics is essential for successful implementation.

Amazon Neptune’s integration with familiar AWS development tools and services provides a consistent experience for teams already working within the AWS ecosystem, reducing context switching and leveraging existing cloud development expertise. The service’s compatibility with standard graph visualization tools and development frameworks ensures that teams can maintain their preferred development workflows while gaining access to managed database capabilities.

Cost Considerations and Total Ownership

Economic factors play an increasingly important role in graph database selection, particularly for AI applications that may require substantial computational resources and storage capacity to support large-scale knowledge graphs. Neo4j’s pricing model offers flexibility through both open-source and enterprise licensing options, enabling organizations to start with minimal investment and scale their licensing based on actual usage and feature requirements.

Neo4j Community Edition, which is open source under the GPLv3 license, provides substantial functionality for AI development and testing environments, allowing teams to validate concepts and build prototypes without upfront licensing costs. This is particularly valuable for research organizations and startups exploring graph-based AI applications on limited initial budgets.

Neo4j Enterprise Edition provides advanced features including clustering, security enhancements, and performance optimizations that become essential for production AI deployments, with pricing structures that scale based on deployment size and feature utilization. The platform’s self-managed deployment model requires consideration of infrastructure costs, operational overhead, and maintenance requirements when calculating total cost of ownership.

Amazon Neptune uses pay-as-you-go pricing: provisioned clusters are billed per instance-hour (Neptune Serverless by the capacity it consumes), plus storage and, depending on the storage configuration, I/O requests. Serverless billing tracks actual usage, which suits AI applications with variable workloads, though costs then fluctuate with demand. The managed service removes up-front infrastructure investment and reduces operational overhead, but per-hour pricing can cost more than a self-managed alternative for workloads that run at consistently high utilization.
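Because the break-even point depends entirely on your rates and utilization, a small calculation is often more informative than general guidance. The sketch below compares an hourly-billed managed deployment with a flat monthly self-managed cost; every rate in it is a placeholder assumption rather than a current AWS or Neo4j price, and the self-managed figure is assumed to amortize hardware or VMs, licences, and staff time. For provisioned Neptune instances, billed hours are the hours an instance runs, regardless of query load.

```python
HOURS_PER_MONTH = 730  # common approximation (8,760 hours / 12 months)


def managed_monthly_cost(hourly_rate, hours_running, storage_gb,
                         storage_rate_per_gb_month, io_millions=0.0,
                         io_rate_per_million=0.0):
    """Rough monthly bill for an hourly-billed managed database:
    compute hours + stored GB + I/O requests. All rates are inputs."""
    return (hourly_rate * hours_running
            + storage_gb * storage_rate_per_gb_month
            + io_millions * io_rate_per_million)


def break_even_hours(managed_hourly_rate, self_managed_monthly_cost):
    """Monthly running hours above which a flat self-managed cost is cheaper
    than paying the managed hourly rate (storage and I/O ignored)."""
    return self_managed_monthly_cost / managed_hourly_rate


# Placeholder numbers for illustration only; substitute real quotes.
always_on = managed_monthly_cost(hourly_rate=1.00, hours_running=HOURS_PER_MONTH,
                                 storage_gb=100, storage_rate_per_gb_month=0.10)
threshold = break_even_hours(managed_hourly_rate=1.00, self_managed_monthly_cost=500.0)
```

With these placeholder numbers, a workload running more than 500 hours a month (about two-thirds of the month) would favour the flat-cost option, while an intermittent research workload would favour hourly billing.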

The cost-performance relationship between Neo4j and Amazon Neptune varies significantly based on deployment scale, usage patterns, and operational requirements. Organizations must carefully evaluate their specific AI workload characteristics and growth projections to determine the most economically viable platform choice for their particular circumstances.

Security and Compliance Considerations

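Security requirements for AI applications often include strict data protection measures, access controls, and compliance with regulations that govern sensitive data. Neo4j provides role-based access control (an Enterprise Edition feature), TLS encryption for data in transit, and security and query logging. In self-managed deployments, encryption at rest is typically provided by the underlying disk or file system, while the managed Aura service encrypts data at rest. These features can support compliance with frameworks such as GDPR, HIPAA, and SOC 2, but compliance also depends on how the database and surrounding systems are configured and operated.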

The platform’s fine-grained access control mechanisms enable precise permission management for AI applications that process sensitive data or require restricted access to specific graph regions. Neo4j’s support for LDAP and Active Directory integration facilitates enterprise authentication workflows and enables consistent security policy enforcement across AI development and production environments.
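Neo4j Enterprise Edition expresses these controls as Cypher administration commands: a role can be granted TRAVERSE on a label, granted READ on specific properties, and denied READ on others, with DENY taking precedence over GRANT. The Python sketch below is a hypothetical model of that label- and property-level filtering, useful for reasoning about what a role would see; it is not how Neo4j enforces privileges, and the role, label, and property names are invented.

```python
from dataclasses import dataclass, field


@dataclass
class RolePolicy:
    """Hypothetical model of label- and property-level privileges, loosely
    mirroring Neo4j Enterprise commands such as:
        GRANT TRAVERSE ON GRAPH neo4j NODES Patient TO analyst
        GRANT READ {age, diagnosis, ssn} ON GRAPH neo4j NODES Patient TO analyst
        DENY READ {ssn} ON GRAPH neo4j NODES Patient TO analyst
    """
    traversable_labels: set = field(default_factory=set)
    readable_properties: dict = field(default_factory=dict)  # label -> granted props
    denied_properties: dict = field(default_factory=dict)    # label -> denied props


def visible_view(label, properties, policy):
    """Return what a role may see of one node: None if the label cannot be
    traversed, otherwise only properties that are granted and not denied
    (a deny always wins over a grant)."""
    if label not in policy.traversable_labels:
        return None
    granted = policy.readable_properties.get(label, set())
    denied = policy.denied_properties.get(label, set())
    return {k: v for k, v in properties.items() if k in granted and k not in denied}


analyst = RolePolicy(
    traversable_labels={"Patient"},
    readable_properties={"Patient": {"age", "diagnosis", "ssn"}},
    denied_properties={"Patient": {"ssn"}},
)
patient_view = visible_view("Patient", {"age": 54, "diagnosis": "T2D", "ssn": "000-00-0000"}, analyst)
clinician_view = visible_view("Clinician", {"name": "Dr. Lee"}, analyst)
```

The analyst sees a patient’s age and diagnosis but not the denied identifier, and cannot see Clinician nodes at all.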

Neo4j’s security log records authentication and authorization events, and its query log can record the queries run against the database. Together they support compliance audits and security monitoring for AI applications that process regulated data.

Amazon Neptune inherits the AWS security framework, including VPC network isolation, IAM-based authentication and access policies, and encryption at rest through AWS Key Management Service (KMS). Neptune is in scope for AWS compliance programs such as SOC and PCI DSS and is HIPAA eligible, which simplifies deployment in regulated environments. Under AWS’s shared responsibility model, however, customers remain responsible for configuring their applications and validating their own compliance.

AWS CloudTrail records Neptune management API calls, such as creating or modifying clusters, while database-level activity such as queries is captured by Neptune audit logs, which can be published to Amazon CloudWatch Logs. Combined with encryption in transit and at rest, these features protect data throughout the AI application lifecycle, from development through production deployment.

Real-World AI Implementation Scenarios

The practical application of graph databases in AI systems reveals important considerations for platform selection based on specific use case requirements and organizational constraints. Recommendation systems represent one of the most common AI applications leveraging graph databases, where the ability to traverse user-item relationships and identify similarity patterns directly impacts recommendation quality and system performance.

Neo4j’s native graph processing is well suited to recommendation scenarios that require real-time relationship traversal and complex pattern matching, supporting both collaborative filtering and content-based recommendation algorithms. The Graph Data Science library provides building blocks for these tasks, such as Node Similarity for user or item similarity, community detection for clustering items, and link prediction pipelines for scoring candidate recommendations.
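GDS’s Node Similarity algorithm compares nodes by the neighbours they share, using Jaccard similarity by default. The minimal sketch below applies the same idea as user-based collaborative filtering over a hypothetical user-item purchase graph held in Python sets: users are compared by the items they share, and each unseen item is scored by the summed similarity of the users who bought it. It is an illustration, not the GDS implementation.

```python
def jaccard(a, b):
    """|A ∩ B| / |A ∪ B|, or 0.0 when both sets are empty."""
    combined = a | b
    return len(a & b) / len(combined) if combined else 0.0


def recommend(purchases, user, top_k=3):
    """User-based collaborative filtering: score each item the user hasn't
    bought by the summed Jaccard similarity of the other users who bought it."""
    mine = purchases[user]
    scores = {}
    for other, items in purchases.items():
        if other == user:
            continue
        similarity = jaccard(mine, items)
        if similarity == 0.0:
            continue  # no shared items: this user adds no evidence
        for item in items - mine:
            scores[item] = scores.get(item, 0.0) + similarity
    ranked = sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))  # ties by name
    return [item for item, _ in ranked[:top_k]]


# Hypothetical purchase graph: user -> set of purchased items.
PURCHASES = {
    "ann": {"graph-db-book", "python-book"},
    "ben": {"graph-db-book", "python-book", "ml-book"},
    "cat": {"graph-db-book", "cooking-book"},
    "dan": {"gardening-book"},
}
suggestions = recommend(PURCHASES, "ann")
```

For "ann", the sketch recommends "ml-book" first (from "ben", who shares two of three items) and "cooking-book" second (from "cat", who shares one); "dan" shares nothing and contributes no score.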

Fraud detection is another critical AI application where graph databases provide substantial value, enabling analysis of transaction patterns, relationship networks, and behavioral anomalies. Neo4j’s ability to perform complex traversals and pattern matching in real time supports fraud detection algorithms that must immediately analyze transaction networks and relationship patterns to identify suspicious activity.
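A common graph pattern for fraud rings is to connect accounts through the identifiers they share (devices, phone numbers, addresses) and look for unusually large connected groups; in GDS this grouping is the Weakly Connected Components algorithm. The sketch below performs the same grouping in plain Python with a union-find structure; the account data and the minimum ring size of three are hypothetical.

```python
def find(parent, x):
    """Return the root of x's group, halving the path as it goes."""
    while parent[x] != x:
        parent[x] = parent[parent[x]]
        x = parent[x]
    return x


def union(parent, a, b):
    """Merge the groups containing a and b."""
    root_a, root_b = find(parent, a), find(parent, b)
    if root_a != root_b:
        parent[root_b] = root_a


def suspicious_rings(account_identifiers, min_size=3):
    """Link accounts that share any identifier and return every connected
    group with at least min_size accounts."""
    parent = {account: account for account in account_identifiers}
    first_seen = {}  # identifier -> first account observed using it
    for account, identifiers in account_identifiers.items():
        for identifier in identifiers:
            if identifier in first_seen:
                union(parent, first_seen[identifier], account)
            else:
                first_seen[identifier] = account
    groups = {}
    for account in account_identifiers:
        groups.setdefault(find(parent, account), set()).add(account)
    return [group for group in groups.values() if len(group) >= min_size]


# Hypothetical accounts and the identifiers each one uses.
ACCOUNTS = {
    "acct1": {"device:A", "phone:111"},
    "acct2": {"device:A", "addr:9-elm"},
    "acct3": {"addr:9-elm", "phone:222"},
    "acct4": {"phone:333"},
    "acct5": {"device:B"},
}
rings = suspicious_rings(ACCOUNTS)
```

Here "acct1" and "acct2" share a device and "acct2" and "acct3" share an address, so the three form one ring even though "acct1" and "acct3" have nothing in common directly. This is the kind of indirect link that multi-hop graph analysis surfaces.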

Amazon Neptune’s scalability and integration capabilities make it particularly well-suited for large-scale fraud detection systems that process millions of transactions and maintain extensive relationship networks. The platform’s ability to integrate with AWS analytics services enables comprehensive fraud detection pipelines that combine graph analysis with traditional machine learning algorithms and statistical analysis techniques.

Knowledge graph applications in natural language processing and semantic search benefit from both platforms’ ability to store and query complex ontological relationships and entity connections. Neo4j’s Cypher query language provides intuitive expression of semantic relationships, while Neptune’s SPARQL support enables compliance with semantic web standards and integration with existing RDF-based knowledge systems.
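SPARQL queries are built from basic graph patterns: triple patterns containing variables that must all bind consistently. The following sketch evaluates such a pattern over a small in-memory list of triples using a naive nested-loop join, to show the semantics that a SPARQL engine such as Neptune’s implements far more efficiently with indexes. The ex: prefix and the drug data are hypothetical, and prefixed names are kept as plain strings rather than expanded to full IRIs.

```python
def match(triples, patterns, binding=None):
    """Yield every variable binding that satisfies all triple patterns.
    Terms starting with '?' are variables; other terms must match exactly."""
    binding = {} if binding is None else binding
    if not patterns:
        yield dict(binding)
        return
    first, rest = patterns[0], patterns[1:]
    for triple in triples:
        candidate = dict(binding)  # copy so failed matches don't leak bindings
        ok = True
        for term, value in zip(first, triple):
            if term.startswith("?"):
                # Bind a fresh variable, or check consistency with an earlier binding.
                if candidate.setdefault(term, value) != value:
                    ok = False
                    break
            elif term != value:
                ok = False
                break
        if ok:
            yield from match(triples, rest, candidate)


TRIPLES = [
    ("ex:aspirin", "rdf:type", "ex:Drug"),
    ("ex:aspirin", "ex:treats", "ex:headache"),
    ("ex:ibuprofen", "rdf:type", "ex:Drug"),
    ("ex:ibuprofen", "ex:treats", "ex:inflammation"),
    ("ex:headache", "rdf:type", "ex:Symptom"),
]

# Roughly: SELECT ?drug WHERE { ?drug rdf:type ex:Drug . ?drug ex:treats ex:headache }
results = list(match(TRIPLES, [
    ("?drug", "rdf:type", "ex:Drug"),
    ("?drug", "ex:treats", "ex:headache"),
]))
```

Only aspirin satisfies both patterns, because the shared ?drug variable must bind to the same node in each triple.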

Performance Optimization Strategies

Optimizing graph database performance for AI applications requires understanding of both database-specific optimization techniques and AI workload characteristics that impact query patterns and resource utilization. Neo4j optimization strategies focus on index management, query optimization, and caching configurations that align with typical AI access patterns including frequent relationship traversals and pattern matching operations.

Index optimization for Neo4j involves creating appropriate node and relationship indexes that support AI query patterns, particularly for frequent property lookups and relationship filtering operations. The platform’s composite indexes and full-text search capabilities enable efficient data retrieval for AI applications that combine graph traversals with content-based filtering and search functionality.

Query optimization in Neo4j requires understanding of Cypher execution plans and optimization techniques including query restructuring, parameter usage, and result limiting strategies that minimize resource consumption while maintaining query functionality. The platform’s query profiling tools enable identification of performance bottlenecks and optimization opportunities specific to AI workload requirements.
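Neo4j caches execution plans keyed essentially by the query text, which is one reason to pass values as parameters (such as $id) instead of splicing them into the query string; parameters also protect against Cypher injection. The toy LRU cache below is a hypothetical stand-in for a plan cache, not Neo4j’s implementation. It counts how often planning would have to run for 50 lookups written each way.

```python
from collections import OrderedDict


class PlanCache:
    """Toy LRU cache keyed by query text, standing in for a query-plan cache.
    `compilations` counts cache misses, i.e. how often planning had to run."""

    def __init__(self, capacity=100):
        self.capacity = capacity
        self.plans = OrderedDict()
        self.compilations = 0

    def get_plan(self, query_text):
        if query_text in self.plans:
            self.plans.move_to_end(query_text)  # mark as most recently used
            return self.plans[query_text]
        self.compilations += 1                  # miss: "plan" the query
        plan = f"plan for: {query_text}"
        self.plans[query_text] = plan
        if len(self.plans) > self.capacity:
            self.plans.popitem(last=False)      # evict least recently used
        return plan


user_ids = [f"u{i}" for i in range(50)]

# Anti-pattern: the value is spliced into the text, so every string differs.
literal_cache = PlanCache()
for uid in user_ids:
    literal_cache.get_plan(
        f"MATCH (u:User {{id: '{uid}'}})-[:BOUGHT]->(p) RETURN p LIMIT 10")

# Parameterized: with a driver, uid would be sent separately as the $id
# parameter, so the query text never changes.
param_cache = PlanCache()
for uid in user_ids:
    param_cache.get_plan("MATCH (u:User {id: $id})-[:BOUGHT]->(p) RETURN p LIMIT 10")
```

The literal version plans all 50 queries, while the parameterized version plans once and reuses that plan 49 times.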

Amazon Neptune optimization focuses on resource allocation and workload routing: choosing instance sizes, adding read replicas and directing read queries to the cluster’s reader endpoint, reusing connections, and examining query execution with Neptune’s explain feature, which is available for Gremlin, openCypher, and SPARQL. Managed scaling reduces, but does not remove, the need for this kind of tuning as AI workload demands change.
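Neptune clusters expose a cluster (writer) endpoint and a reader endpoint that load-balances connections across read replicas, so one simple optimization is to route read-only traversals to the reader. The sketch below picks the endpoint for a Gremlin query string using a regular-expression heuristic that looks for mutating steps. The heuristic is an assumption for illustration, not a full Gremlin parser, and the endpoint names are placeholders. Because replicas can lag the writer slightly, flows that must read their own writes should stay on the writer endpoint.

```python
import re

# Heuristic: Gremlin steps that modify the graph. Not a full parser; a step
# name inside a string literal, for example, would also match.
MUTATING_STEP = re.compile(r"\.(addV|addE|drop|property|mergeV|mergeE)\(")


def choose_endpoint(gremlin_query, writer_endpoint, reader_endpoint):
    """Route traversals that write to the writer (cluster) endpoint and
    read-only traversals to the reader endpoint, which spreads connections
    across read replicas."""
    if MUTATING_STEP.search(gremlin_query):
        return writer_endpoint
    return reader_endpoint


# Placeholder endpoint names for illustration.
WRITER = "writer.example.internal"
READER = "reader.example.internal"
```

For example, a friends-of-a-friend lookup such as g.V().has('person', 'name', 'ann').out('knows').values('name') goes to the reader, while g.addV('person') or g.V('1').drop() goes to the writer.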

Neptune’s integration with AWS monitoring and analytics services enables comprehensive performance tracking and optimization through metrics analysis, alerting, and automated scaling responses. This capability proves particularly valuable for AI applications with unpredictable query patterns or seasonal usage variations that require dynamic resource allocation.

Future Considerations and Technology Evolution

Because both AI and graph database technology are evolving quickly, long-term technology decisions should account for each platform’s likely development direction. Neo4j’s continued investment in graph algorithms, machine learning integration, and performance optimization suggests it will keep pace with emerging AI requirements.

Neo4j’s work in areas such as graph neural networks, automated machine learning, and distributed graph processing may position it to support next-generation AI applications that need more sophisticated graph analysis. Its open-source development and community engagement also help keep feature development grounded in real-world AI application requirements.

Amazon Neptune evolves as part of the broader AWS AI and analytics ecosystem, which gives it a path to emerging cloud-native capabilities such as serverless machine learning, edge computing integration, and automated data pipeline management. Its integration with AWS AI services makes it likely to support new machine learning frameworks and deployment models as AWS adopts them.

The convergence of graph databases with other emerging technologies, including vector databases for similarity search, streaming analytics for real-time AI, and edge computing for distributed AI applications, will likely shape future platform capabilities and integration requirements. Both platforms are already moving in this direction: Neo4j introduced vector indexes for similarity search in version 5.11, and Amazon Neptune Analytics supports vector similarity search over graph data. Both are positioned to keep evolving with these trends while maintaining compatibility with existing AI applications and development workflows.
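At its core, vector similarity search ranks items by how close their embedding vectors are to a query vector. The brute-force sketch below uses cosine similarity over a few hypothetical three-dimensional document embeddings; the vector indexes in graph databases serve the same purpose but use approximate nearest-neighbour structures so they don’t have to scan every vector.

```python
import heapq
import math


def cosine(a, b):
    """Cosine similarity; 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_k_similar(query_vector, node_vectors, k=2):
    """Exact (brute-force) k-nearest-neighbour search by cosine similarity.
    A vector index replaces this full scan with an approximate search."""
    return heapq.nlargest(
        k, node_vectors, key=lambda node: cosine(query_vector, node_vectors[node]))


# Hypothetical 3-dimensional embeddings attached to document nodes.
EMBEDDINGS = {
    "doc:graphs": [0.9, 0.1, 0.0],
    "doc:neo4j": [0.8, 0.2, 0.1],
    "doc:cooking": [0.0, 0.1, 0.9],
}
nearest = top_k_similar([1.0, 0.0, 0.0], EMBEDDINGS)
```

With the query vector [1.0, 0.0, 0.0], the two graph-related documents are returned ahead of the cooking document, whose vector is orthogonal to the query. In a graph database, the matched nodes can then be expanded through their relationships for additional context.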

The increasing importance of responsible AI and explainable machine learning creates opportunities for graph databases to provide transparency and interpretability in AI decision-making processes. Both platforms are well-positioned to support these requirements through their ability to maintain and query the relationship networks that underlie AI reasoning processes.
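One concrete way a graph supports explainability is by returning the chain of relationships that links an input to an outcome, for example why a course was recommended to a user. In Cypher this is typically expressed with shortestPath(). The sketch below shows the equivalent breadth-first search in Python over a small hypothetical knowledge graph, returning the path as readable (node, relationship, node) hops.

```python
from collections import deque


def explain_path(adjacency, start, goal):
    """Breadth-first search for the shortest chain of relationships from
    start to goal. `adjacency` maps node -> [(relationship_type, neighbour)].
    Returns a list of (node, relationship, node) hops, or None if unreachable."""
    previous = {start: None}  # node -> (parent, relationship) on the shortest path
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            break
        for relationship, neighbour in adjacency.get(node, []):
            if neighbour not in previous:
                previous[neighbour] = (node, relationship)
                queue.append(neighbour)
    if goal not in previous:
        return None
    hops, node = [], goal
    while previous[node] is not None:
        parent, relationship = previous[node]
        hops.append((parent, relationship, node))
        node = parent
    return list(reversed(hops))


# Hypothetical knowledge graph behind a course recommendation for "ann".
KG = {
    "ann": [("BOUGHT", "graph-db-book")],
    "graph-db-book": [("ABOUT", "knowledge-graphs")],
    "knowledge-graphs": [("COVERED_BY", "kg-course")],
}
why = explain_path(KG, "ann", "kg-course")
```

The result reads as an explanation: ann bought a graph database book, which is about knowledge graphs, which are covered by the recommended course.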

Making the Strategic Choice

Selecting between Neo4j and Amazon Neptune for AI knowledge graph applications requires careful evaluation of organizational requirements, technical constraints, and strategic objectives that extend beyond simple feature comparison. Organizations with existing AWS infrastructure and cloud-first strategies may find Neptune’s integrated approach and managed service model more aligned with their operational preferences and technical architecture.

Teams requiring maximum performance optimization, extensive customization capabilities, and direct control over database configuration may prefer Neo4j’s self-managed approach and comprehensive optimization options. The platform’s mature ecosystem and extensive community resources provide substantial support for complex AI implementations and specialized use cases.

Budget considerations, including both initial implementation costs and long-term operational expenses, play a crucial role in platform selection, particularly for organizations planning large-scale AI deployments or anticipating significant growth in graph data volume and query complexity. The total cost of ownership analysis must include infrastructure, licensing, operational, and development costs to provide accurate economic comparison.

The strategic importance of vendor relationships, technology roadmap alignment, and ecosystem integration capabilities should factor into the decision-making process, particularly for organizations planning long-term AI initiatives that will evolve and expand over time. Both platforms offer distinct advantages that align with different organizational priorities and technical requirements.

Disclaimer

This article provides general information about graph database technologies and their applications in AI systems. The analysis is based on publicly available information and general industry knowledge. Organizations should conduct thorough evaluation and testing based on their specific requirements before making technology decisions. Performance characteristics and cost implications may vary significantly based on individual use cases, data volumes, and implementation approaches. Readers should consult with qualified technical professionals and conduct proof-of-concept implementations to validate platform suitability for their particular AI applications and organizational requirements.