Modern data platforms need robust quality assurance and monitoring to keep machine learning operations reliable at scale. As organizations increasingly depend on data-driven decision-making and automated ML systems, the choice of data quality framework becomes critical for system reliability, compliance, and performance. Two prominent solutions have emerged in this space: Great Expectations, a general-purpose data validation framework, and Evidently, which focuses on ML model monitoring and data drift detection.

The evolution of these frameworks represents a fundamental shift toward proactive data quality management and automated monitoring systems that can detect issues before they impact business outcomes.

Understanding the Data Quality Challenge

Modern data pipelines and machine learning workflows make it hard to maintain data quality and model performance across diverse environments and use cases. Traditional approaches to data validation often fall short when dealing with streaming data, schema evolution, and machine learning models that can degrade silently over time because of data drift, concept drift, or other changes in the underlying data distributions.

Great Expectations and Evidently have emerged as sophisticated solutions addressing different aspects of this challenge, each bringing unique strengths and approaches to data quality assurance and model monitoring. Understanding their respective capabilities, architectural differences, and use case suitability becomes essential for organizations seeking to implement robust data quality frameworks that can scale with their growing data infrastructure and machine learning initiatives.

Great Expectations: Comprehensive Data Validation Framework

Great Expectations represents a mature, enterprise-grade data validation framework designed to bring software engineering best practices to data quality assurance. The platform provides a comprehensive suite of tools for defining, documenting, and validating data expectations across diverse data sources and formats. Its strength lies in creating human-readable documentation of data assumptions while maintaining rigorous validation capabilities that can be integrated into existing data pipelines and workflows.

The framework’s approach centers around the concept of “expectations” - explicit statements about data properties that can be automatically validated, documented, and monitored over time. These expectations range from simple statistical checks like value ranges and null counts to complex business logic validations and cross-dataset consistency checks. The platform’s ability to generate comprehensive data documentation automatically while performing validation creates significant value for data governance and compliance requirements.
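To make the idea of an expectation concrete, here is a minimal sketch in plain Python. It does not use the Great Expectations API; the function names, the `ExpectationResult` type, and the sample rows are all assumptions made for illustration. Each check is an explicit statement about the data that can be evaluated automatically, and whose wording doubles as documentation.

```python
from dataclasses import dataclass
from typing import Any, Dict, List

Row = Dict[str, Any]


@dataclass
class ExpectationResult:
    expectation: str       # human-readable statement, doubles as documentation
    success: bool
    unexpected_count: int  # number of rows that violated the statement


def expect_values_between(rows: List[Row], column: str,
                          min_value: float, max_value: float) -> ExpectationResult:
    """Every non-null value in `column` must lie in [min_value, max_value]."""
    unexpected = [
        row[column] for row in rows
        if row[column] is not None and not (min_value <= row[column] <= max_value)
    ]
    return ExpectationResult(
        expectation=f"{column} is between {min_value} and {max_value}",
        success=len(unexpected) == 0,
        unexpected_count=len(unexpected),
    )


def expect_null_fraction_at_most(rows: List[Row], column: str,
                                 max_fraction: float) -> ExpectationResult:
    """At most `max_fraction` of the rows may have a null in `column`."""
    nulls = sum(1 for row in rows if row[column] is None)
    fraction = nulls / len(rows) if rows else 0.0
    return ExpectationResult(
        expectation=f"at most {max_fraction:.0%} of {column} values are null",
        success=fraction <= max_fraction,
        unexpected_count=nulls,
    )


# Made-up sample batch, for illustration only.
rows = [
    {"age": 34, "email": "a@example.com"},
    {"age": 151, "email": None},
    {"age": 27, "email": "c@example.com"},
]

results = [
    expect_values_between(rows, "age", 0, 120),
    expect_null_fraction_at_most(rows, "email", 0.5),
]
for result in results:
    status = "PASS" if result.success else "FAIL"
    print(f"[{status}] {result.expectation} (unexpected rows: {result.unexpected_count})")
```

In this batch the range check fails because of the single out-of-range age, while the null check passes because only one of three emails is missing.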

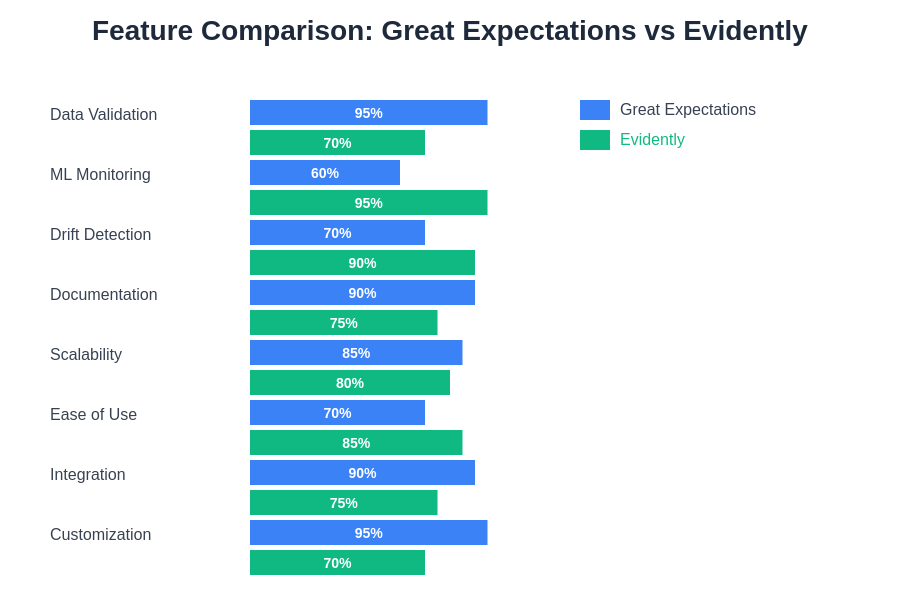

The feature comparison shows where each platform is strongest: Great Expectations in traditional data validation and documentation, and Evidently, introduced in the next section, in ML-specific monitoring and drift detection.

Great Expectations excels in environments where comprehensive data cataloging, extensive validation rule libraries, and detailed reporting are essential. The platform’s integration capabilities with popular data processing frameworks, cloud storage systems, and orchestration tools make it suitable for large-scale data operations requiring robust quality assurance processes.

Evidently: Specialized ML Monitoring Solution

Evidently focuses specifically on machine learning model monitoring and data drift detection, providing specialized capabilities for tracking model performance degradation and identifying changes in data distributions that could impact model accuracy. The platform excels at detecting subtle changes in data characteristics that might not trigger traditional data quality alerts but could significantly impact machine learning model performance over time.

The specialized nature of Evidently makes it particularly valuable for organizations with mature ML operations requiring sophisticated drift detection and model performance tracking capabilities.

The framework provides interactive dashboards and detailed reports specifically designed for data scientists and ML engineers who need to understand how their models are performing in production environments. Evidently’s strength lies in its ability to identify and visualize different types of drift, including data drift, prediction drift, and target drift, providing actionable insights for model maintenance and retraining decisions.
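The three kinds of drift differ mainly in which column is compared: an input feature (data drift), the model output (prediction drift), or the label (target drift). The sketch below applies one statistic, the Population Stability Index, to each of them. It is a hand-rolled illustration rather than Evidently's implementation; the function name, the synthetic samples, and the 0.2 threshold are assumptions chosen for the example.

```python
import math
from typing import List, Sequence


def population_stability_index(reference: Sequence[float],
                               current: Sequence[float],
                               bins: int = 10) -> float:
    """PSI between two samples, using equal-width bins taken from the reference."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # avoid a zero width for constant data

    def bin_shares(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for value in values:
            index = int((value - lo) / width)
            counts[min(max(index, 0), bins - 1)] += 1  # clamp out-of-range values
        # A small floor keeps empty bins from producing log(0).
        return [max(count / len(values), 1e-6) for count in counts]

    ref_shares, cur_shares = bin_shares(reference), bin_shares(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_shares, cur_shares))


# Synthetic reference (training-time) and current (production) samples.
reference = {
    "feature": [i / 100 for i in range(100)],
    "prediction": [i / 100 for i in range(100)],
    "target": [0.0] * 70 + [1.0] * 30,
}
current = {
    "feature": [0.3 + i / 100 for i in range(100)],  # inputs shifted upward
    "prediction": [i / 100 for i in range(100)],     # model scores unchanged
    "target": [0.0] * 40 + [1.0] * 60,               # class balance moved
}

THRESHOLD = 0.2  # illustrative cut-off, not a universal constant
scores = {name: population_stability_index(reference[name], current[name])
          for name in reference}
for name, score in scores.items():
    verdict = "drift" if score > THRESHOLD else "stable"
    print(f"{name}: PSI={score:.3f} ({verdict})")
```

Here the feature and target columns are flagged while the prediction column is not, which is the kind of per-column breakdown that informs a retraining decision.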

Architectural Differences and Design Philosophy

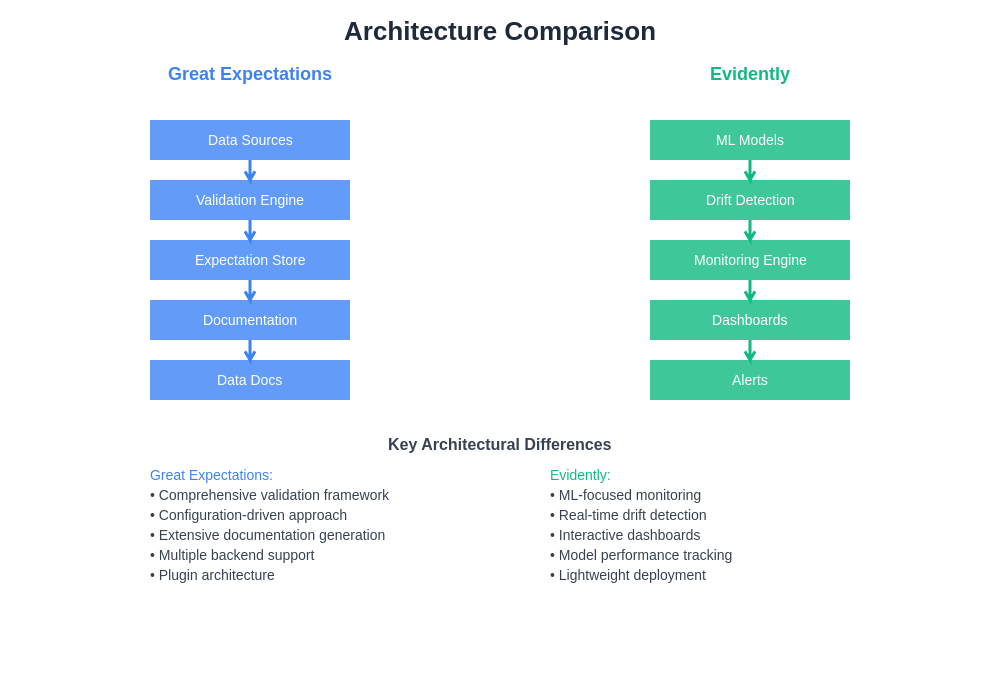

The architectural approaches of Great Expectations and Evidently reflect their different design philosophies and target use cases. Great Expectations follows a comprehensive validation framework model where expectations are defined declaratively and executed across various data processing environments. The platform emphasizes configuration-driven validation with extensive customization capabilities and supports complex validation scenarios through its flexible expectation library and plugin architecture.
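The declarative, configuration-driven style can be sketched as follows: the suite is plain data, and a small runner dispatches each entry to a registered check. This mirrors the general pattern only; the registry, the spec keys (`type`, `column`, `kwargs`), and `run_suite` are hypothetical names, not Great Expectations' actual configuration schema.

```python
from typing import Any, Callable, Dict, List

Row = Dict[str, Any]

# Registry mapping an expectation type to the function that checks one value.
CHECKS: Dict[str, Callable[..., bool]] = {
    "not_null": lambda value: value is not None,
    "in_set": lambda value, allowed: value in allowed,
    "between": lambda value, low, high: value is not None and low <= value <= high,
}

# The suite is plain data, so it could equally be loaded from JSON or YAML.
suite = [
    {"type": "not_null", "column": "order_id", "kwargs": {}},
    {"type": "in_set", "column": "status",
     "kwargs": {"allowed": ["new", "shipped", "returned"]}},
    {"type": "between", "column": "quantity", "kwargs": {"low": 1, "high": 100}},
]


def run_suite(rows: List[Row], suite: List[Dict[str, Any]]) -> List[Dict[str, Any]]:
    """Evaluate every spec in the suite and report the indexes of failing rows."""
    report = []
    for spec in suite:
        check = CHECKS[spec["type"]]
        failed_rows = [
            index for index, row in enumerate(rows)
            if not check(row.get(spec["column"]), **spec["kwargs"])
        ]
        report.append({
            "type": spec["type"],
            "column": spec["column"],
            "success": not failed_rows,
            "failed_rows": failed_rows,
        })
    return report


# Made-up batch, for illustration only.
rows = [
    {"order_id": 1, "status": "new", "quantity": 2},
    {"order_id": None, "status": "shipped", "quantity": 5},
    {"order_id": 3, "status": "lost", "quantity": 4},
]

report = run_suite(rows, suite)
for entry in report:
    print(entry)
```

Keeping the rules as data is what allows the same suite to be versioned, reviewed, and run unchanged in different execution environments.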

Evidently adopts a more focused approach centered specifically on machine learning monitoring workflows. The platform is designed to integrate seamlessly with ML pipelines and provides specialized metrics and visualizations tailored for ML practitioners. Its architecture prioritizes ease of use for ML-specific monitoring tasks while maintaining the flexibility to handle various data formats and model types.

The difference in architectural philosophy extends to their respective ecosystems and integration patterns. Great Expectations provides extensive integration options with data processing frameworks, storage systems, and orchestration tools, making it suitable for diverse data engineering environments. Evidently focuses on ML-specific integrations and provides specialized connectors for popular ML frameworks and model serving platforms.

Data Validation Capabilities Comparison

Great Expectations offers an extensive library of built-in expectations covering statistical validations, schema checks, business logic validations, and custom validation functions. The platform supports complex validation scenarios involving multiple datasets, temporal checks, and sophisticated business rule implementations. Its validation capabilities extend beyond simple statistical checks to include comprehensive data profiling, anomaly detection, and compliance validation features.

The platform’s strength in data validation lies in its comprehensive approach that covers both technical data quality aspects and business logic validation requirements. Organizations can define expectations that encode business rules, regulatory compliance requirements, and data governance policies alongside traditional technical validations for completeness, accuracy, and consistency.

Evidently’s validation capabilities are specifically optimized for machine learning contexts, focusing on detecting changes in data distributions, feature drift, and model performance degradation. While it may not offer the breadth of general-purpose data validation features found in Great Expectations, its specialized ML-focused validations provide deeper insights into model-relevant data quality issues.

The platform excels at identifying subtle changes in feature distributions, correlation patterns, and statistical properties that are particularly relevant for machine learning model performance. These specialized capabilities make Evidently especially valuable for organizations where ML model reliability is critical and where traditional data quality metrics might miss model-relevant data quality issues.
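A small example shows why correlation patterns matter. In the sketch below, each feature keeps exactly the same set of values between the reference and current batches, so per-column range or distribution checks would see nothing unusual, yet the relationship between the two features has flipped. The feature names and data are made up, and the Pearson helper is a plain implementation rather than anything taken from Evidently.

```python
import math
from typing import Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient of two equally long samples."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


# Reference batch: visits grow with spend.
ref_spend = [1, 2, 3, 4, 5, 6, 7, 8]
ref_visits = [2 * s + 1 for s in ref_spend]

# Current batch: identical values per column, but paired in the opposite order.
cur_spend = list(ref_spend)
cur_visits = list(reversed(ref_visits))

ref_corr = pearson(ref_spend, ref_visits)
cur_corr = pearson(cur_spend, cur_visits)

print(f"reference correlation: {ref_corr:+.2f}")
print(f"current correlation:   {cur_corr:+.2f}")
print(f"marginals unchanged:   {sorted(cur_visits) == sorted(ref_visits)}")
```

A model trained on the reference relationship would likely misbehave on the current batch even though every single-column check still passes.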

Monitoring and Alerting Systems

The monitoring and alerting capabilities of both platforms reflect their different focus areas and target audiences. Great Expectations provides comprehensive monitoring infrastructure that can track data quality metrics over time, generate detailed validation reports, and integrate with various alerting systems for proactive quality issue detection. The platform’s monitoring capabilities extend to data freshness, validation success rates, and trend analysis across multiple data sources and validation suites.

Great Expectations’ alerting system can be configured to notify stakeholders about validation failures, data quality degradation trends, and compliance violations through various communication channels. The platform’s flexibility allows for customized alerting rules based on business priorities and operational requirements, enabling organizations to implement sophisticated quality monitoring workflows that align with their specific needs and constraints.
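As a rough sketch of what customized alerting rules might look like, the snippet below applies a per-suite minimum pass rate and routes failures to a channel. Everything here is hypothetical: the `RULES` structure, the suite and channel names, and the `notify` callback stand in for whatever alerting integration an organization actually uses, and none of it is Great Expectations' own configuration format.

```python
from typing import Callable, Dict, Tuple

# Hypothetical rules: minimum acceptable pass rate per suite, and where to alert.
RULES = {
    "payments": {"min_success_rate": 1.00, "channel": "pager"},
    "clickstream": {"min_success_rate": 0.95, "channel": "chat"},
}


def evaluate_alerts(run_results: Dict[str, Tuple[int, int]],
                    rules: Dict[str, dict],
                    notify: Callable[[str, str], None]) -> int:
    """run_results maps suite name to (passed, total). Returns the alerts sent."""
    sent = 0
    for suite_name, (passed, total) in run_results.items():
        rule = rules.get(suite_name)
        if rule is None or total == 0:
            continue  # no rule configured, or nothing was validated
        rate = passed / total
        if rate < rule["min_success_rate"]:
            notify(
                rule["channel"],
                f"{suite_name}: {passed}/{total} expectations passed "
                f"({rate:.0%}), below {rule['min_success_rate']:.0%}",
            )
            sent += 1
    return sent


# Stand-in for a real notifier: collect messages in a list.
outbox = []
sent = evaluate_alerts(
    {"payments": (49, 50), "clickstream": (96, 100)},
    RULES,
    lambda channel, message: outbox.append((channel, message)),
)
print(sent, outbox)
```

The stricter rule for the payments suite triggers an alert at a 98% pass rate, while the clickstream suite tolerates 96%, which reflects how thresholds can follow business priority rather than a single global setting.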

Evidently’s monitoring approach is tailored specifically for ML operations, providing specialized dashboards and alerting mechanisms focused on model performance and data drift detection. The platform can detect gradual drift patterns that might not trigger immediate alerts but could indicate the need for model retraining or data pipeline adjustments.
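Gradual drift is easy to miss when each batch is compared only with the previous one. The sketch below, which uses a simple mean-shift score rather than Evidently's own metrics, contrasts week-over-week comparison with comparison against a fixed reference window. The data, the `mean_shift` helper, and the threshold of one reference standard deviation are assumptions for illustration.

```python
from statistics import mean, stdev
from typing import Sequence


def mean_shift(baseline: Sequence[float], window: Sequence[float],
               scale: float) -> float:
    """Absolute difference in means, expressed in units of `scale`."""
    return abs(mean(window) - mean(baseline)) / scale


# Reference window captured at training time (synthetic values 10..14).
reference = [10 + (i % 5) for i in range(50)]
scale = stdev(reference)

# Six weekly windows, each drifting upward by a small constant step.
windows = [[v + 0.4 * week for v in reference[:10]] for week in range(1, 7)]

THRESHOLD = 1.0  # illustrative: flag a shift of one reference standard deviation
step_scores = []
first_flagged_week = None
previous = reference
for week, window in enumerate(windows, start=1):
    vs_previous = mean_shift(previous, window, scale)
    vs_reference = mean_shift(reference, window, scale)
    step_scores.append(vs_previous)
    if first_flagged_week is None and vs_reference > THRESHOLD:
        first_flagged_week = week
    print(f"week {week}: vs previous={vs_previous:.2f}, vs reference={vs_reference:.2f}")
    previous = window

print("first week flagged against the reference:", first_flagged_week)
```

No single week-over-week step comes close to the threshold, but the accumulated shift against the fixed reference does, which is the signal that would prompt a retraining or pipeline review.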

Integration Ecosystem and Deployment Options

Great Expectations offers extensive integration capabilities with popular data processing backends, including Apache Spark, pandas, SQL databases, and cloud data warehouses. The platform supports deployment across various environments from local development to cloud-scale production systems, with native integrations for major cloud platforms and data engineering tools.

The framework’s plugin architecture enables custom integrations and extensibility for specialized use cases, while its API-first design facilitates integration with existing data pipelines and orchestration systems. Organizations can deploy Great Expectations as part of their existing data infrastructure with minimal disruption to current workflows.

Evidently provides specialized integrations focused on ML workflows and model serving platforms. The framework integrates well with popular MLOps tools such as MLflow and Weights & Biases, as well as various model serving solutions. Its deployment options are optimized for ML operations environments where integration with model training and serving pipelines is essential.

The platform’s focus on ML-specific integrations means it may require additional tooling for comprehensive data quality management beyond ML contexts, but it excels in environments where ML model monitoring is the primary concern and where specialized ML operations tooling is already in place.

Performance and Scalability Considerations

Performance characteristics differ significantly between the two platforms due to their architectural designs and intended use cases. Great Expectations is designed to handle large-scale data validation across diverse data sources and formats, with optimizations for batch processing scenarios and distributed computing environments. The platform can scale to handle enterprise-grade data volumes through its integration with distributed processing frameworks.

The framework’s performance optimization includes support for sampling strategies, parallel validation execution, and efficient resource utilization across different data processing backends. Organizations can tune performance based on their specific requirements, balancing validation comprehensiveness with processing speed and resource consumption.
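The trade-off between validation comprehensiveness and processing cost can be illustrated with a sampling sketch: validate a random subset and estimate the failure rate instead of scanning every row. This is a generic illustration and not how Great Expectations implements sampling; the function name, the validity rule, and the dataset are assumptions.

```python
import random
from typing import Any, Callable, Dict, List, Tuple

Row = Dict[str, Any]


def estimate_failure_rate(rows: List[Row],
                          is_valid: Callable[[Row], bool],
                          sample_size: int,
                          seed: int = 0) -> Tuple[float, int]:
    """Validate a random sample; return (estimated failure rate, rows checked)."""
    if not rows:
        return 0.0, 0
    rng = random.Random(seed)  # fixed seed keeps runs reproducible
    sample = rows if len(rows) <= sample_size else rng.sample(rows, sample_size)
    failures = sum(1 for row in sample if not is_valid(row))
    return failures / len(sample), len(sample)


def amount_is_non_negative(row: Row) -> bool:
    return row["amount"] >= 0


# Synthetic dataset in which every 20th row has an invalid negative amount.
rows = [{"amount": -1.0 if i % 20 == 0 else float(i)} for i in range(10_000)]

sampled_rate, checked = estimate_failure_rate(rows, amount_is_non_negative, 1_000)
full_rate, _ = estimate_failure_rate(rows, amount_is_non_negative, len(rows))

print(f"checked {checked} of {len(rows)} rows, estimated failure rate {sampled_rate:.3f}")
print(f"full scan failure rate {full_rate:.3f}")
```

The sample touches a tenth of the rows and yields an estimate close to the true 5% failure rate. The price is that rare violations can be missed entirely, so sampling suits rate-style checks better than rules that must hold for every row.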

Evidently’s performance characteristics are optimized for ML monitoring workflows, focusing on efficient calculation of drift metrics and model performance statistics. The platform is designed to handle real-time monitoring scenarios where quick detection of model performance issues is critical for maintaining system reliability.

The specialized nature of Evidently’s calculations allows for optimized performance in ML-specific monitoring tasks, though it may not match Great Expectations’ scalability for general-purpose data validation across extremely large datasets or complex enterprise data environments.

Use Case Suitability and Recommendations

Great Expectations is particularly well-suited for organizations requiring comprehensive data quality management across diverse data sources and use cases. The platform excels in environments where data governance, regulatory compliance, and extensive documentation requirements are critical. Organizations with complex data pipelines, multiple data sources, and requirements for detailed data quality reporting will find Great Expectations’ comprehensive approach valuable.

The framework is ideal for data engineering teams managing large-scale data operations where systematic validation, documentation, and quality monitoring across multiple systems and formats are essential. Its extensive customization capabilities make it suitable for organizations with unique validation requirements or complex business logic that needs to be encoded in data quality checks.

Evidently is specifically designed for organizations with mature ML operations requiring specialized model monitoring and drift detection capabilities. The platform is particularly valuable for teams deploying ML models in production environments where model performance degradation could have significant business impact.

Organizations with multiple ML models in production, complex feature engineering pipelines, and requirements for sophisticated drift detection will find Evidently’s specialized capabilities essential for maintaining model reliability and performance over time.

The use case suitability matrix summarizes where each platform fits best: Great Expectations for data governance and general data engineering tasks, and Evidently for ML operations and real-time monitoring scenarios.

Implementation Complexity and Learning Curve

The implementation complexity of Great Expectations reflects its comprehensive feature set and extensive customization options. Organizations implementing Great Expectations should plan for significant initial setup and configuration efforts, particularly when implementing complex validation suites and custom expectations. The platform’s flexibility comes with the cost of increased complexity in initial deployment and ongoing maintenance.

However, the investment in Great Expectations implementation typically pays dividends through comprehensive data quality coverage and detailed documentation that improves overall data governance and operational efficiency. The platform’s extensive documentation and community support help organizations navigate the implementation process effectively.

Evidently offers a more streamlined implementation path for organizations focused specifically on ML monitoring. The platform’s specialized nature means less configuration for ML-specific use cases, although, as noted earlier, teams that need data quality management beyond ML contexts will have to pair it with other tooling.

The focused scope of Evidently can result in faster time-to-value for ML monitoring use cases, making it attractive for organizations that need to quickly implement model monitoring capabilities without the overhead of a comprehensive data quality framework.

Cost Considerations and Total Ownership

Cost considerations for both platforms extend beyond initial licensing or deployment costs to include implementation effort, ongoing maintenance, infrastructure requirements, and operational overhead. Great Expectations, being an open-source framework with commercial support options, provides flexibility in cost management while offering enterprise features for organizations requiring additional support and capabilities.

The total cost of ownership for Great Expectations includes the investment in initial setup, configuration, and integration efforts, plus ongoing maintenance and potential infrastructure costs for running validation processes at scale. Organizations should consider the long-term benefits of comprehensive data quality management when evaluating these costs.

Evidently’s cost structure reflects its specialized focus on ML monitoring, potentially offering lower total ownership costs for organizations with specific ML monitoring requirements. The platform’s efficiency in ML-specific monitoring tasks can result in lower infrastructure costs compared to more general-purpose solutions.

Future Development and Community Support

Both platforms benefit from active development communities and ongoing feature enhancement programs. Great Expectations has established a mature ecosystem with extensive community contributions, comprehensive documentation, and regular feature updates that address evolving data quality requirements and emerging technologies.

The platform’s roadmap includes continued integration enhancements, performance optimizations, and expanded validation capabilities that address emerging data quality challenges in cloud-native and real-time processing environments.

Evidently’s development focuses on advancing ML monitoring capabilities, incorporating new drift detection algorithms, and expanding integration options with emerging ML operations tools and platforms. The platform’s specialized focus allows for rapid innovation in ML-specific monitoring capabilities.

The choice between Great Expectations and Evidently ultimately depends on organizational requirements, existing infrastructure, and specific use case priorities. Organizations requiring comprehensive data quality management across diverse use cases will find Great Expectations’ extensive capabilities valuable, while those focused specifically on ML operations will benefit from Evidently’s specialized monitoring features and streamlined implementation approach.

Disclaimer

This article is for informational purposes only and does not constitute professional advice. The comparison is based on publicly available information and general understanding of the platforms’ capabilities. Organizations should conduct their own evaluation and testing to determine the most suitable solution for their specific requirements. The effectiveness and suitability of either platform may vary depending on specific use cases, infrastructure requirements, and organizational constraints.