Hugging Face Transformers has made state-of-the-art machine learning far more accessible to ordinary developers. The open-source library, together with the Hugging Face Hub, gives developers a vast catalog of pre-trained models. They can add sophisticated AI capabilities to applications without deep machine learning expertise and without the compute needed to train models from scratch. The impact goes beyond convenience: developers at every skill level, in organizations of every size, can now use cutting-edge AI.

Transformer-based models are reshaping the technology landscape and opening new possibilities for intelligent applications. Hugging Face has become the central hub for pre-trained models, and that has catalyzed innovation across industries, from natural language processing and computer vision to audio analysis and multimodal applications. It has changed how AI systems are developed and deployed.

The Revolution of Pre-Trained Models

Pre-trained models are one of the most significant breakthroughs in modern machine learning, and they have changed both the economics and the accessibility of AI development. Traditional approaches required large datasets, substantial compute, and specialized expertise to train a model for each complex task. Hugging Face Transformers lowers these barriers by providing models that have already been trained on massive datasets with state-of-the-art architectures. Developers can build on years of research with just a few lines of code.

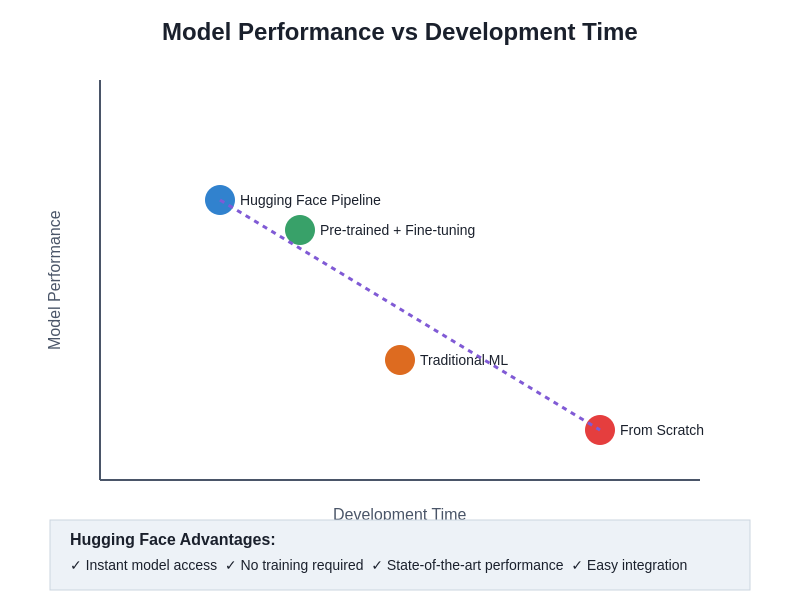

This approach also changes how developers design AI solutions. Rather than starting from scratch, they build on the knowledge already encoded in a pre-trained model. They either deploy it directly for common tasks or fine-tune it for a specific use case. This transfer learning approach dramatically reduces the time, cost, and expertise needed to add intelligent features to applications.

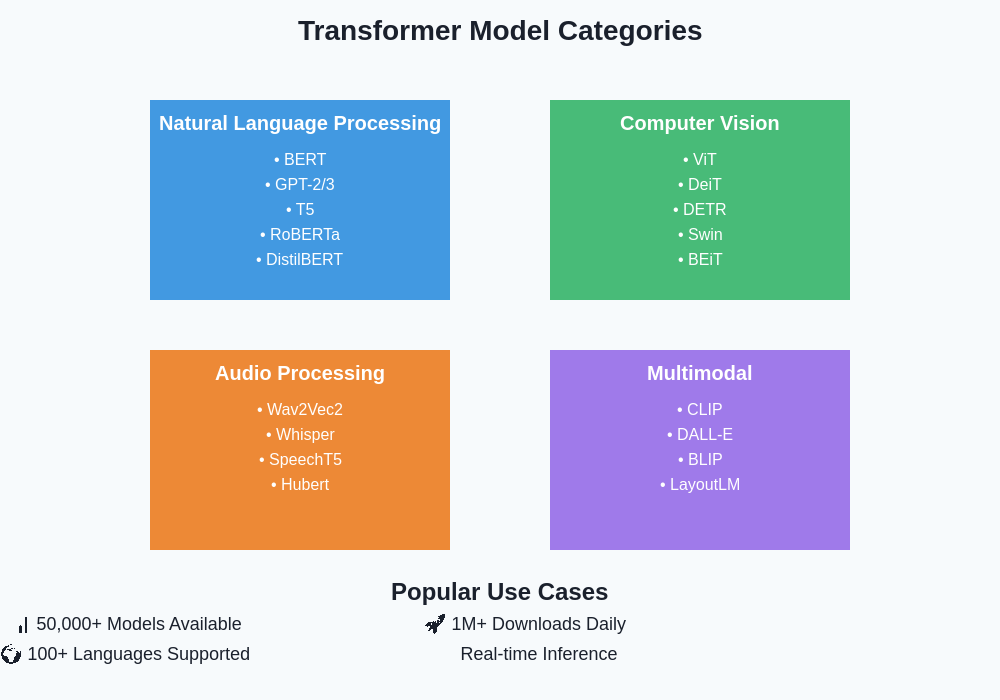

The model hub maintained by Hugging Face hosts models spanning many domains, architectures, and data modalities. They range from large language models that generate human-like text to computer vision models that analyze visual content with high accuracy. Developers can usually find a suitable model for common tasks in natural language processing, computer vision, audio analysis, and multimodal understanding. This holds whether they need state-of-the-art accuracy for research or a lightweight model optimized for production deployment.

Transformers Architecture: The Foundation of Modern AI

The transformer architecture is the foundation of most modern AI breakthroughs, and understanding its principles helps developers use it effectively. It was originally introduced in 2017 for machine translation. Since then it has proven remarkably versatile, performing strongly in computer vision, audio processing, and multimodal understanding as well. Its defining component is self-attention, which lets every position in a sequence weigh its relationship to every other position directly. As a result, the model can relate distant elements and, during training, process all positions of a sequence in parallel. This gives transformers better performance and training efficiency than earlier recurrent architectures, which process tokens one at a time.
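To make the self-attention idea concrete, here is a minimal, dependency-free sketch of scaled dot-product attention, softmax(QKᵀ/√d_k)V. This is the core operation of the original transformer. The sketch covers a single head with no masking and no learned projection matrices. The function names are illustrative and are not part of the Transformers library.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(row: List[float]) -> List[float]:
    # Subtract the max before exponentiating for numerical stability.
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    # Plain matrix product: (n x k) @ (k x m) -> (n x m).
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(a: Matrix) -> Matrix:
    return [list(col) for col in zip(*a)]


def scaled_dot_product_attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Single-head attention: softmax(Q K^T / sqrt(d_k)) V, without masking."""
    d_k = len(k[0])
    scores = matmul(q, transpose(k))  # (seq_q x seq_k) similarity scores
    scaled = [[s / math.sqrt(d_k) for s in row] for row in scores]
    weights = [softmax(row) for row in scaled]  # each row sums to 1
    return matmul(weights, v)  # each output is a weighted average of value vectors


if __name__ == "__main__":
    # Three tokens with 2-dimensional embeddings; Q = K = V gives self-attention.
    x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    for row in scaled_dot_product_attention(x, x, x):
        print([round(val, 3) for val in row])
```

Every query row attends to every key at once, which is why distant tokens are only one step apart. In a real model, Q, K, and V come from learned linear projections of the token embeddings, and several such heads run side by side.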


Each transformer layer combines self-attention with a position-wise feed-forward network. Stacking many such layers lets a model learn complex representations and capture intricate patterns and relationships in its data. As these models have been scaled up, they have shown capabilities that were previously considered beyond the reach of artificial intelligence. The results include breakthrough applications in language understanding, code generation, creative writing, and complex reasoning.

The modular design of the transformer also makes it easy to build specialized variants for particular tasks and compute budgets. These range from lightweight models designed for mobile deployment to very large models that perform strongly on demanding reasoning benchmarks. The library exposes all of these variants through consistent interfaces for loading, running, and deploying them.

Natural Language Processing Excellence

Natural language processing represents perhaps the most mature and widely adopted application domain for Hugging Face Transformers, encompassing a comprehensive range of tasks from basic text classification to sophisticated language generation and understanding. The library provides seamless access to models trained on diverse linguistic datasets, enabling developers to implement multilingual applications, sentiment analysis systems, text summarization tools, and question-answering platforms with minimal implementation complexity.
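As a hedged illustration of how little code a basic NLP task takes, the sketch below wraps the library's `pipeline("sentiment-analysis", ...)` call. It assumes three things: `transformers` is installed, a backend such as PyTorch is installed, and the checkpoint `distilbert/distilbert-base-uncased-finetuned-sst-2-english` can be downloaded from the Hub. The `confident_labels` helper and its 0.9 threshold are hypothetical additions for illustration.

```python
from typing import List, Optional


def load_sentiment_classifier(
    model_name: str = "distilbert/distilbert-base-uncased-finetuned-sst-2-english",
):
    # Imported lazily so the helper below can be used without transformers installed.
    from transformers import pipeline

    return pipeline("sentiment-analysis", model=model_name)


def confident_labels(predictions: List[dict], threshold: float = 0.9) -> List[Optional[str]]:
    """Hypothetical post-processing: keep a label only when its score clears the threshold."""
    return [p["label"] if p["score"] >= threshold else None for p in predictions]


# Usage (needs network access on first run to download the model):
#   classifier = load_sentiment_classifier()
#   results = classifier(["I love this library!", "The installation kept failing."])
#   # results is a list with one {"label": ..., "score": ...} dict per input text
#   print(confident_labels(results))
```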

Modern language models available through Hugging Face go well beyond simple pattern matching. They capture linguistic nuance, contextual relationships, and semantic meaning. They can carry out multi-step reasoning, maintain coherent conversations across extended dialogues, and generate creative content that adapts to style, tone, and audience. This lets developers build applications that interpret user intent, generate personalized responses, and provide intelligent assistance across diverse domains.

Multilingual capability is a particularly significant advantage for developers building global applications. Instead of maintaining a separate model per language, developers can often rely on a single transformer model. Through cross-lingual transfer, such a model can understand and generate content in many languages. This considerably simplifies international applications. Quality can still vary between languages, however, especially for languages with little training data.

Fine-tuning capabilities provide developers with powerful tools for customizing pre-trained language models to specific domains, use cases, or organizational requirements. Whether adapting models for legal document analysis, medical text processing, or industry-specific terminology, the transfer learning approach enables rapid customization while preserving the general language understanding capabilities developed during pre-training on massive corpora.
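Full fine-tuning updates all of a model's weights. The underlying transfer-learning idea is easiest to see in its simplest form, a linear probe. In a linear probe, the pre-trained encoder stays frozen and only a small classification head is trained on its output features. The sketch below is a dependency-free stand-in for that head: a logistic-regression classifier trained by gradient descent. The toy feature vectors are invented placeholders for embeddings that, in practice, would come from a pre-trained model.

```python
import math
from typing import List, Tuple


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_linear_head(
    features: List[List[float]], labels: List[int], lr: float = 0.5, epochs: int = 200
) -> Tuple[List[float], float]:
    """Train a logistic-regression head on frozen features; the encoder is never updated."""
    dim, n = len(features[0]), len(features)
    w, b = [0.0] * dim, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * dim, 0.0
        for x, y in zip(features, labels):
            p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
            err = p - y  # gradient of binary cross-entropy with respect to the logit
            for i in range(dim):
                grad_w[i] += err * x[i]
            grad_b += err
        # Full-batch gradient descent step on the head's parameters only.
        w = [wi - lr * g / n for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def predict(w: List[float], b: float, x: List[float]) -> int:
    return int(sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) >= 0.5)
```

Because only the head's parameters are trained, this kind of adaptation needs little data and compute. Full fine-tuning usually reaches higher accuracy at a greater cost.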

Computer Vision Integration and Applications

The expansion of Hugging Face Transformers beyond natural language processing into computer vision represents a significant evolution that has brought transformer-based approaches to image analysis, object detection, and visual understanding tasks. Vision transformers have demonstrated remarkable capabilities in image classification, achieving state-of-the-art performance on standard benchmarks while providing consistent interfaces that align with the broader Hugging Face ecosystem.

Applying transformer architectures to computer vision has revealed advantages over traditional convolutional approaches. Self-attention lets every image patch attend to every other patch from the first layer, whereas a convolution only sees a local neighborhood. As a result, vision transformers are good at capturing long-range dependencies within an image. This global view is particularly valuable for complex scene understanding, detailed image analysis, and tasks that require fine-grained visual discrimination.
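Vision transformers reuse the attention machinery by first cutting each image into fixed-size patches and treating each flattened patch as a token. The original ViT work used 16×16 patches. The dependency-free sketch below shows only that patch-splitting step, applied to a single-channel image stored as nested lists. Real implementations operate on tensors and handle color channels. They also follow this step with a learned linear projection and position embeddings.

```python
from typing import List

Image = List[List[float]]


def image_to_patches(image: Image, patch_size: int) -> List[List[float]]:
    """Split an H x W single-channel image into flattened, non-overlapping patches.

    Patches are emitted in row-major order (left to right, top to bottom),
    giving a sequence of (H / p) * (W / p) tokens of length p * p each.
    """
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image dimensions must be divisible by patch_size")
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            patches.append([
                image[r][c]
                for r in range(top, top + patch_size)
                for c in range(left, left + patch_size)
            ])
    return patches


if __name__ == "__main__":
    # A 4x4 image whose pixel value is row * 4 + col, split into 2x2 patches.
    img = [[float(r * 4 + c) for c in range(4)] for r in range(4)]
    for patch in image_to_patches(img, 2):
        print(patch)
```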


The integration of computer vision models within the Hugging Face ecosystem provides developers with powerful tools for implementing sophisticated visual analysis capabilities without requiring deep expertise in computer vision algorithms or neural network architectures. From basic image classification and object detection to advanced applications such as image segmentation, pose estimation, and visual question answering, the comprehensive model hub offers solutions for diverse visual understanding requirements.

Multimodal capabilities represent an emerging frontier where vision transformers interact with language models to enable applications that can understand and reason about both textual and visual content simultaneously. These capabilities open new possibilities for applications such as visual storytelling, image captioning, document understanding, and interactive visual assistants that can interpret complex visual scenes and respond to natural language queries about visual content.

Audio Processing and Speech Recognition

The application of transformer architectures to audio processing has revolutionized speech recognition, audio classification, and music analysis, providing developers with powerful tools for implementing sophisticated audio understanding capabilities. Hugging Face Transformers includes comprehensive support for audio models that can transcribe speech with remarkable accuracy, classify audio content, and even generate audio representations from textual descriptions.

Speech recognition models available through Hugging Face, such as OpenAI's Whisper family, achieve high accuracy across many acoustic conditions, languages, and speaking styles. The stronger models cope well with noisy environments, accented speech, and domain-specific terminology. On suitable hardware, smaller variants can run fast enough for interactive applications. This robustness lets developers build voice interfaces, transcription services, and audio analysis tools that work reliably across diverse deployment contexts.
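Long recordings are usually transcribed by splitting the audio into overlapping windows and then stitching the results together. The Transformers speech-recognition pipeline exposes this through its `chunk_length_s` and `stride_length_s` arguments. The stdlib-only sketch below illustrates just the windowing arithmetic on raw sample indices. It assumes a 16 kHz sample rate, which is common for speech models but is an assumption here. It is not the pipeline's internal implementation, which also merges the overlapping predictions.

```python
from typing import List, Tuple


def chunk_windows(
    num_samples: int,
    sample_rate: int = 16_000,
    chunk_length_s: float = 30.0,
    overlap_s: float = 5.0,
) -> List[Tuple[int, int]]:
    """Return (start, end) sample indices of overlapping windows covering the audio.

    Consecutive windows share `overlap_s` seconds, so a word cut at one window's
    boundary still appears whole in a neighboring window.
    """
    chunk = int(chunk_length_s * sample_rate)
    step = chunk - int(overlap_s * sample_rate)
    if step <= 0:
        raise ValueError("overlap must be shorter than the chunk length")
    windows = []
    start = 0
    while True:
        end = min(start + chunk, num_samples)
        windows.append((start, end))
        if end == num_samples:
            break
        start += step
    return windows
```

For example, under these defaults a 70-second clip yields three windows starting at 0 s, 25 s, and 50 s.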

The multilingual nature of many audio models reflects the global reach and accessibility priorities of the Hugging Face ecosystem. Rather than requiring separate models for different languages, many speech recognition models support multiple languages simultaneously, enabling the development of applications that can automatically detect and transcribe speech in various languages without requiring explicit language specification.

Audio classification capabilities extend beyond speech recognition to encompass music analysis, environmental sound recognition, and acoustic event detection. These models can identify musical genres, detect specific sounds within complex audio environments, and classify audio content according to various taxonomies, enabling applications such as smart audio filtering, content recommendation systems, and automated audio tagging for media management platforms.

Implementation Strategies and Best Practices

The practical implementation of Hugging Face Transformers requires understanding of various deployment strategies, optimization techniques, and integration patterns that ensure optimal performance and reliability in production environments. The library provides multiple interfaces and deployment options, from simple Python scripts suitable for experimentation to robust production-ready solutions that can handle high-volume requests with low latency requirements.

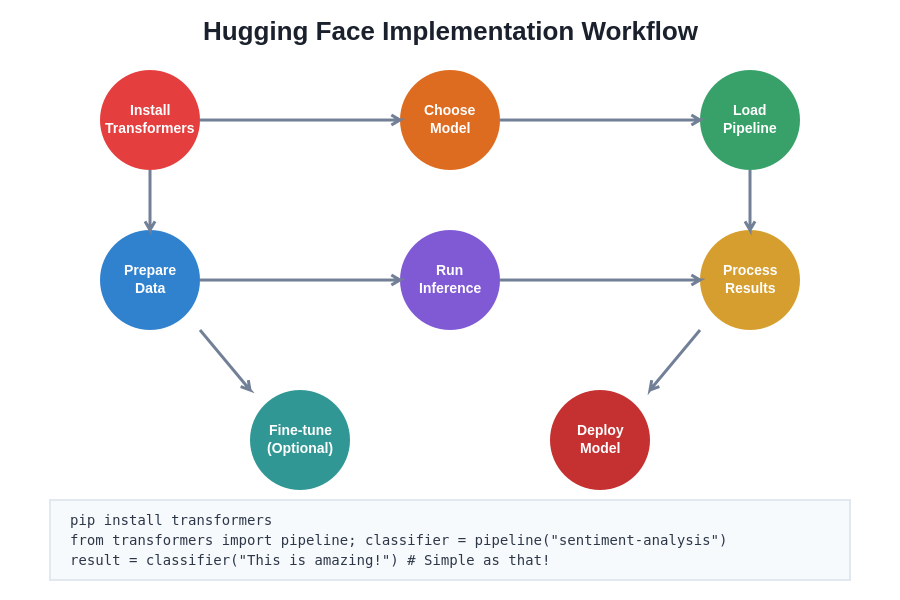

Pipelines are the most accessible entry point for developers new to machine learning. They provide high-level abstractions that bundle model loading, preprocessing, inference, and postprocessing into a single function call. This makes rapid prototyping and experimentation straightforward. Swapping in a different or fine-tuned model usually means changing only the model argument.
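To show what a pipeline hides, here is a hedged sketch of roughly the same text-classification steps done by hand with `AutoTokenizer` and `AutoModelForSequenceClassification`. The steps are to tokenize, run the model, and turn the logits into labeled probabilities. The sketch assumes `transformers` and PyTorch are installed and that the example checkpoint can be downloaded. The pure-Python `postprocess` helper reproduces the softmax-and-label step, so you can check that part without either dependency.

```python
import math
from typing import Dict, List, Sequence


def postprocess(logits: Sequence[float], id2label: Dict[int, str]) -> Dict[str, object]:
    """Softmax the raw logits and return the top label with its probability."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    best = max(range(len(probs)), key=probs.__getitem__)
    return {"label": id2label[best], "score": probs[best]}


def classify_manually(
    texts: List[str],
    model_name: str = "distilbert/distilbert-base-uncased-finetuned-sst-2-english",
) -> List[Dict[str, object]]:
    # Lazy imports: only needed when the model is actually run.
    import torch
    from transformers import AutoModelForSequenceClassification, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModelForSequenceClassification.from_pretrained(model_name)
    model.eval()
    # 1. Preprocess: tokenize and pad the batch into PyTorch tensors.
    inputs = tokenizer(texts, padding=True, truncation=True, return_tensors="pt")
    # 2. Forward pass without gradient tracking.
    with torch.no_grad():
        logits = model(**inputs).logits
    # 3. Postprocess: map each row of logits to a label and probability.
    return [postprocess(row.tolist(), model.config.id2label) for row in logits]
```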

Good fine-tuning results for a specific use case depend on careful dataset preparation, training hyperparameters, and evaluation methodology. The Hugging Face ecosystem supports the whole workflow. The Datasets library handles data loading and preprocessing, the Trainer API runs training loops, and the Evaluate library provides metrics. Integrations for reproducibility and experiment tracking round this out.
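On the evaluation side, the `Trainer` API accepts a `compute_metrics` callback. The callback receives the model's predictions and the reference labels and returns a dictionary of metric values. The sketch below is one possible such callback, written in plain Python so it runs without extra dependencies. Reporting accuracy and macro-averaged F1 is an illustrative choice. In practice, the Evaluate library or scikit-learn would typically supply these metrics.

```python
from typing import Dict, Sequence, Tuple


def compute_metrics(
    eval_pred: Tuple[Sequence[Sequence[float]], Sequence[int]]
) -> Dict[str, float]:
    """Accuracy and macro-F1 from a (logits, labels) pair."""
    logits, labels = eval_pred
    preds = [max(range(len(row)), key=lambda i: row[i]) for row in logits]  # argmax per row
    labels = [int(y) for y in labels]
    accuracy = sum(p == y for p, y in zip(preds, labels)) / len(labels)

    f1_scores = []
    for cls in sorted(set(labels) | set(preds)):
        tp = sum(p == cls and y == cls for p, y in zip(preds, labels))
        fp = sum(p == cls and y != cls for p, y in zip(preds, labels))
        fn = sum(p != cls and y == cls for p, y in zip(preds, labels))
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1_scores.append(
            2 * precision * recall / (precision + recall) if precision + recall else 0.0
        )
    return {"accuracy": accuracy, "macro_f1": sum(f1_scores) / len(f1_scores)}
```

Macro-F1 weights every class equally, so it exposes weak performance on rare classes that plain accuracy can hide.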

Model optimization techniques such as quantization, pruning, and knowledge distillation enable developers to deploy sophisticated models in resource-constrained environments such as mobile devices, edge computing platforms, and embedded systems. These optimization approaches can significantly reduce model size and inference latency while preserving essential functionality, enabling broader deployment of AI capabilities across diverse hardware platforms.
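Quantization is the easiest of these techniques to illustrate. The sketch below applies symmetric per-tensor int8 quantization to a list of weights. Each float is divided by a scale chosen so that the largest magnitude maps to 127, then rounded to an integer. Storage drops from four bytes per float32 weight to one. Dequantizing multiplies back by the same scale. This is a teaching example only. The quantization back ends used with Transformers, such as the bitsandbytes integration, use more elaborate schemes.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor int8 quantization: w is approximated by q * scale, q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: List[int], scale: float) -> List[float]:
    return [v * scale for v in q]


if __name__ == "__main__":
    w = [0.51, -1.27, 0.003, 0.9]
    q, scale = quantize_int8(w)
    restored = dequantize_int8(q, scale)
    # Each restored value is off by at most half a quantization step (scale / 2).
    print(q, scale, [round(a - b, 4) for a, b in zip(w, restored)])
```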

Performance Optimization and Scaling

The deployment of transformer models in production environments requires careful attention to performance optimization, resource management, and scaling strategies that ensure reliable operation under varying load conditions. Hugging Face provides comprehensive tools and guidelines for optimizing model inference, managing memory usage, and implementing efficient batch processing that maximizes throughput while minimizing computational costs.
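One simple way to make batch processing more efficient is to group inputs of similar length. Each batch then needs padding only up to its own longest member. The sketch below does this on pre-tokenized inputs (lists of token IDs). The pad ID of 0 is an assumption, since real tokenizers define their own `pad_token_id`, and a tokenizer called with `padding=True` pads a batch for you.

```python
from typing import List, Tuple

Batch = Tuple[List[int], List[List[int]], List[List[int]]]


def make_batches(sequences: List[List[int]], batch_size: int, pad_id: int = 0) -> List[Batch]:
    """Sort by length, slice into batches, and pad each batch to its own max length.

    Returns (original_indices, padded_ids, attention_mask) per batch, so results
    can be mapped back to the caller's original order afterwards.
    """
    order = sorted(range(len(sequences)), key=lambda i: len(sequences[i]))
    batches = []
    for start in range(0, len(order), batch_size):
        idx = order[start:start + batch_size]
        max_len = max(len(sequences[i]) for i in idx)
        ids, mask = [], []
        for i in idx:
            seq = sequences[i]
            pad = max_len - len(seq)
            ids.append(seq + [pad_id] * pad)
            mask.append([1] * len(seq) + [0] * pad)  # 1 = real token, 0 = padding
        batches.append((idx, ids, mask))
    return batches
```

Sorting trades strict request order for less wasted computation on padding tokens. That is why the original indices are returned alongside each batch.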

Hardware acceleration through GPU and specialized AI accelerators represents a critical consideration for applications requiring high throughput or low latency. The Hugging Face ecosystem provides seamless integration with various acceleration frameworks, enabling developers to leverage specialized hardware without requiring deep understanding of low-level optimization techniques or hardware-specific programming models.
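In practice, taking advantage of an accelerator often comes down to choosing a device and passing it to the model or pipeline. The minimal sketch below assumes PyTorch is the backend. It prefers a CUDA GPU, then Apple's MPS backend, and falls back to the CPU, including when PyTorch is not installed. In recent versions of the library, the resulting string can be passed as the `device` argument of `transformers.pipeline`.

```python
def select_device() -> str:
    """Return "cuda", "mps", or "cpu" depending on what this machine supports."""
    try:
        import torch
    except ImportError:
        return "cpu"  # no PyTorch available, so nothing to accelerate
    if torch.cuda.is_available():
        return "cuda"
    # The MPS backend (Apple silicon) only exists in newer PyTorch releases.
    mps = getattr(torch.backends, "mps", None)
    if mps is not None and mps.is_available():
        return "mps"
    return "cpu"


# Usage (assumes transformers is installed):
#   from transformers import pipeline
#   classifier = pipeline("sentiment-analysis", device=select_device())
```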

Distributed inference strategies enable applications to scale beyond the capacity of individual machines by distributing model computation across multiple devices or cloud instances. These approaches prove particularly valuable for applications serving large numbers of concurrent users or processing high-volume data streams that exceed the capacity of single-node deployments.

Caching and related strategies can significantly improve application performance. They avoid redundant computation, precompute common results, and route requests intelligently to minimize latency and resource consumption. Transformer inference is typically stateless: each prediction depends only on the current input and settings. Identical requests can therefore be answered from a cache whenever decoding is deterministic. This can markedly improve user experience while reducing operational costs.

The streamlined implementation workflow demonstrates how Hugging Face Transformers simplifies the entire development process, from initial installation through deployment. This systematic approach enables developers to rapidly progress from concept to production while maintaining best practices for model integration and optimization.

Integration with Modern Development Workflows

The integration of Hugging Face Transformers with contemporary development practices requires consideration of version control, dependency management, continuous integration, and deployment automation that ensures reliable and maintainable AI-powered applications. The library’s design philosophy emphasizes compatibility with standard Python development tools and frameworks, facilitating seamless integration into existing development workflows and deployment pipelines.

Containerization strategies enable consistent deployment across diverse environments while isolating model dependencies and ensuring reproducible behavior. Docker containers provide an effective approach for packaging transformer models with their runtime requirements, enabling reliable deployment across development, testing, and production environments without dependency conflicts or environment-specific issues.

API development patterns facilitate the creation of scalable microservices that expose transformer model capabilities through well-defined interfaces, enabling integration with web applications, mobile apps, and other systems without requiring direct machine learning expertise from consuming applications. RESTful APIs and GraphQL interfaces provide flexible integration options that support diverse client requirements while maintaining clear separation of concerns.
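The minimal sketch below shows such a service using only Python's built-in WSGI support rather than a web framework. It exposes a hypothetical `POST /predict` endpoint that accepts `{"text": "..."}` and returns the prediction as JSON. The `predict` function is a placeholder for a real pipeline call. Production services would usually use a framework such as FastAPI or Flask. They would also add authentication, request batching, and limits on request size.

```python
import json


def predict(text: str) -> dict:
    """Hypothetical placeholder for a model call, e.g. a Transformers pipeline."""
    return {"label": "POSITIVE" if "good" in text.lower() else "NEGATIVE"}


def app(environ, start_response):
    """WSGI app exposing POST /predict with a JSON body {"text": "..."}."""

    def respond(status: str, payload: dict):
        body = json.dumps(payload).encode("utf-8")
        start_response(status, [("Content-Type", "application/json"),
                                ("Content-Length", str(len(body)))])
        return [body]

    if environ.get("PATH_INFO") != "/predict" or environ.get("REQUEST_METHOD") != "POST":
        return respond("404 Not Found", {"error": "not found"})
    try:
        length = int(environ.get("CONTENT_LENGTH") or 0)
        data = json.loads(environ["wsgi.input"].read(length) or b"{}")
        text = data["text"]
        if not isinstance(text, str):
            raise TypeError("text must be a string")
    except (ValueError, KeyError, TypeError):
        # Malformed JSON, a missing "text" field, or a wrong type all map to 400.
        return respond("400 Bad Request", {"error": 'expected JSON body {"text": "..."}'})
    return respond("200 OK", predict(text))


# To serve locally (blocks until interrupted):
#   from wsgiref.simple_server import make_server
#   with make_server("127.0.0.1", 8000, app) as server:
#       server.serve_forever()
```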

Monitoring and observability strategies ensure reliable operation of AI-powered applications by providing insights into model performance, resource utilization, and user behavior patterns. Comprehensive logging, metrics collection, and alerting systems enable proactive identification of issues and optimization opportunities while providing data-driven insights for improving application performance and user experience.
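As a small illustration of the metrics side, the decorator below records the wall-clock latency of each call. A helper then reports median and 95th-percentile values, two numbers commonly watched for inference services. Keeping samples in memory and using a simple nearest-rank percentile are both simplifying assumptions. A real deployment would export these measurements to a monitoring system such as Prometheus.

```python
import functools
import math
import time
from typing import Callable, Dict, List


def percentile(samples: List[float], pct: float) -> float:
    """Nearest-rank percentile (pct in 0..100) of a non-empty list."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def track_latency(store: Dict[str, List[float]]) -> Callable:
    """Decorator factory: append each call's duration in seconds to store[func_name]."""
    def decorator(func: Callable) -> Callable:
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            try:
                return func(*args, **kwargs)
            finally:
                # Recorded even when the call raises, so failures show up in latency data.
                store.setdefault(func.__name__, []).append(time.perf_counter() - start)
        return wrapper
    return decorator


LATENCIES: Dict[str, List[float]] = {}


@track_latency(LATENCIES)
def predict(text: str) -> str:
    """Hypothetical model call used only to generate measurements."""
    return text.upper()


# Usage:
#   for t in ["a", "b", "c"]:
#       predict(t)
#   print(percentile(LATENCIES["predict"], 50), percentile(LATENCIES["predict"], 95))
```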

Security and Privacy Considerations

The deployment of pre-trained models raises important security and privacy considerations that developers must address to ensure responsible and compliant implementation of AI capabilities. Data privacy concerns encompass both the handling of user inputs to models and the potential for models to inadvertently expose information from their training datasets through various attack vectors or unintended behavior patterns.

Model security encompasses protection against adversarial attacks, prompt injection, and other malicious attempts to manipulate model behavior or extract sensitive information. Understanding these vulnerability patterns enables developers to implement appropriate safeguards, input validation, and output filtering that maintain application security while preserving model functionality.

Compliance requirements vary significantly across industries and jurisdictions. Meeting them calls for careful data handling practices, model documentation, and audit trails that demonstrate responsible AI deployment. The Hugging Face ecosystem supports these efforts with model cards and dataset cards, which document a model's intended uses, training data, and known limitations.

Ethical considerations encompass bias detection, fairness evaluation, and responsible disclosure of model limitations that ensure AI applications serve diverse user populations equitably. Regular evaluation of model outputs across different demographic groups and use cases helps identify and mitigate potential biases while improving overall application quality and user trust.
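One concrete form of that evaluation is to compute a metric separately for each group and look at the largest gap between groups. The sketch below does this for accuracy. The group names and records are invented for illustration. A single accuracy gap is only one of many fairness measures, not a complete audit.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def accuracy_by_group(
    records: Iterable[Tuple[str, int, int]]
) -> Tuple[Dict[str, float], float]:
    """records are (group, prediction, label); returns per-group accuracy and the max gap."""
    correct: Dict[str, int] = defaultdict(int)
    total: Dict[str, int] = defaultdict(int)
    for group, pred, label in records:
        total[group] += 1
        correct[group] += int(pred == label)
    acc = {g: correct[g] / total[g] for g in total}
    gap = max(acc.values()) - min(acc.values()) if acc else 0.0
    return acc, gap
```

Tracking the gap over time, not just once before launch, helps catch regressions introduced by retraining or by shifts in the user population.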

Future Developments and Community Ecosystem

The rapid evolution of the Hugging Face ecosystem reflects the dynamic nature of machine learning research and the growing demand for accessible AI tools that enable innovation across diverse domains and applications. Emerging model architectures, training techniques, and application domains continue to expand the capabilities available through the platform while maintaining consistency with established interfaces and development practices.

Community contributions represent a driving force behind the continued growth and improvement of the Hugging Face ecosystem, with researchers, developers, and organizations contributing models, datasets, and tools that benefit the broader AI development community. This collaborative approach accelerates innovation while ensuring that cutting-edge capabilities remain accessible to developers regardless of organizational size or resources.

Research integration ensures that the latest developments in machine learning research rapidly become available through the Hugging Face platform, enabling developers to leverage state-of-the-art capabilities without requiring deep expertise in research methodologies or implementation details. This seamless transfer from research to practical application represents a significant advantage for organizations seeking to implement the latest AI capabilities.

Educational resources and community support provide comprehensive learning opportunities for developers seeking to expand their machine learning expertise while building practical applications. From documentation and tutorials to community forums and expert guidance, the Hugging Face ecosystem supports continuous learning and skill development that enables developers to grow alongside the rapidly evolving field of artificial intelligence.

The advantages of Hugging Face Transformers become clear when development approaches are compared on both performance and time. Building a model from scratch typically demands substantial development time and yields uncertain results. A Hugging Face pipeline, by contrast, can deliver strong results, often close to the state of the art, with minimal implementation effort.

The future trajectory of Hugging Face Transformers points toward even greater accessibility, performance, and capability diversity as the platform continues to evolve in response to developer needs and technological advances. The commitment to open science, community collaboration, and practical utility ensures that this essential tool will remain at the forefront of AI democratization, enabling developers worldwide to create intelligent applications that benefit society while pushing the boundaries of what is possible with artificial intelligence.

Disclaimer

This article is for informational purposes only and does not constitute professional advice. The views expressed are based on current understanding of Hugging Face Transformers and machine learning technologies. Readers should conduct their own research and consider their specific requirements when implementing AI-powered solutions. Model performance and capabilities may vary depending on specific use cases, data characteristics, and implementation approaches. Users should review applicable terms of service and licensing requirements when deploying pre-trained models in commercial applications.