Enterprises deploying artificial intelligence increasingly need an approach that spans on-premise infrastructure and cloud-based resources. Hybrid cloud architectures have emerged as a common answer for organizations that want to use artificial intelligence while maintaining control over sensitive data, compliance requirements, and existing infrastructure investments. The approach lets businesses draw on the scalability and managed services of public cloud platforms while preserving the security and customization benefits of on-premise systems.

The evolution of hybrid cloud solutions represents a fundamental shift in how organizations conceptualize and implement AI-driven business strategies, creating new opportunities for innovation and competitive advantage.

The Strategic Imperative for Hybrid AI Infrastructure

Organizations today face an increasingly complex decision matrix when determining the optimal deployment strategy for their artificial intelligence initiatives. The traditional binary choice between on-premise and cloud-only solutions has evolved into a nuanced understanding that different components of AI workflows benefit from different deployment models. Sensitive data processing may require on-premise security and compliance controls, while model training and inference might benefit from the elastic scalability and specialized hardware available in public cloud environments.

The hybrid approach recognizes that artificial intelligence workloads are inherently diverse, encompassing everything from real-time edge processing to massive batch analytics operations. This diversity demands an infrastructure strategy that can accommodate varying performance requirements, security constraints, and cost optimization goals without compromising the overall effectiveness of AI implementations. The result is a sophisticated ecosystem where data flows seamlessly between environments while maintaining strict governance and security protocols.

Architectural Foundations of Hybrid AI Systems

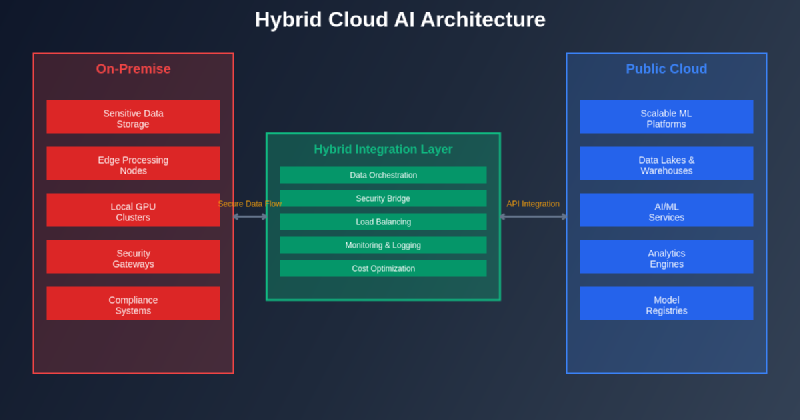

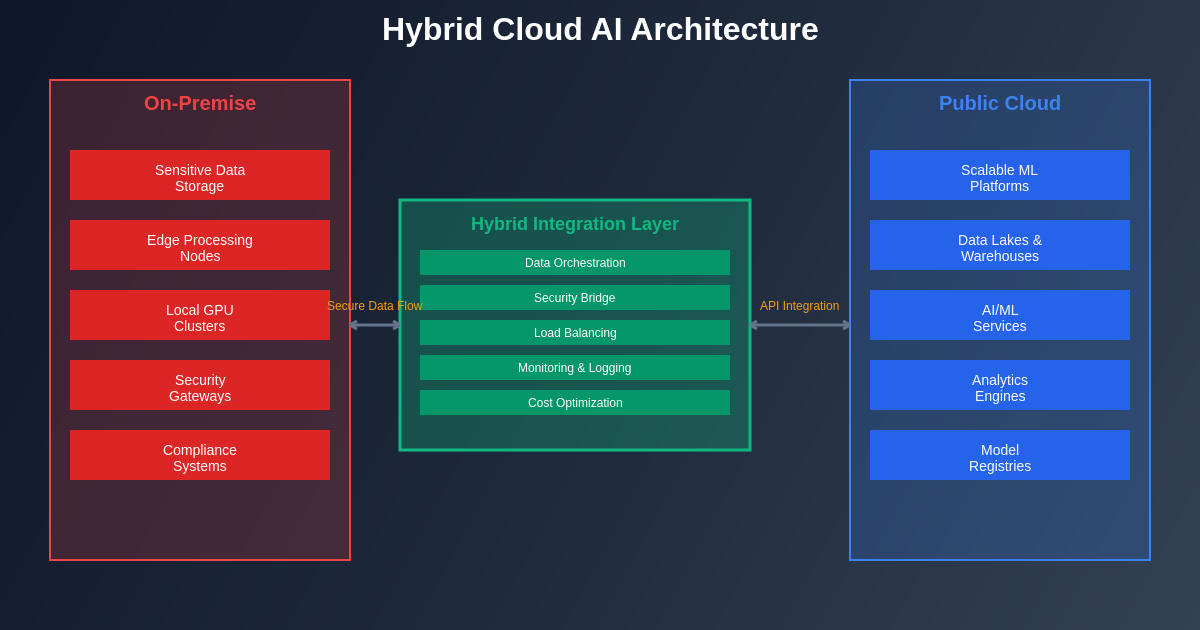

The successful implementation of hybrid cloud AI architectures requires a deep understanding of the interconnected components that enable seamless data and model management across diverse environments. At the core of these systems lies a sophisticated data orchestration layer that manages the movement, transformation, and synchronization of information between on-premise data stores and cloud-based analytics platforms. This orchestration must handle everything from near real-time streaming data to massive historical datasets while maintaining data lineage, quality, and security throughout the entire pipeline.

Modern hybrid architectures leverage containerization technologies and microservices patterns to create portable AI workloads that can execute efficiently across different environments. These containerized solutions enable organizations to develop AI models in cloud environments while deploying them on-premise for low-latency applications, or vice versa, depending on specific operational requirements. The flexibility inherent in these architectures allows organizations to optimize their AI deployments based on factors such as data gravity, latency requirements, regulatory constraints, and cost considerations.

The integration of advanced AI assistants into the development and deployment process enables teams to navigate the complexities of hybrid environments more effectively while maintaining best practices for security and performance optimization.

Data Management and Governance in Hybrid Environments

Managing data across hybrid cloud environments for AI applications is difficult because it means moving and processing potentially petabytes of information while maintaining strict governance controls and acceptable performance. Organizations need data cataloging systems that provide visibility into data assets regardless of their physical location, so that data scientists and engineers can discover, understand, and use relevant datasets across the hybrid landscape.

Data governance in hybrid AI environments requires the implementation of unified policies that can be consistently applied across on-premise and cloud resources, ensuring that compliance requirements, privacy regulations, and security standards are maintained throughout the entire data lifecycle. This includes establishing clear data classification schemes, implementing robust access controls, and maintaining detailed audit trails that track data usage patterns and transformations across the hybrid infrastructure.
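A unified policy of this kind can be sketched in a few lines. The example below is a minimal illustration, not a reference implementation: the four classification levels, the `ENVIRONMENT_CEILING` mapping, and the `PolicyEngine` class are all hypothetical names chosen for this sketch. It applies one rule set to both environments and appends every decision to an audit trail.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from enum import IntEnum


class Classification(IntEnum):
    """Hypothetical classification scheme, ordered by sensitivity."""
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3


# Assumed policy: the most sensitive class each environment may hold.
ENVIRONMENT_CEILING = {
    "on_prem": Classification.RESTRICTED,
    "cloud": Classification.INTERNAL,
}


@dataclass
class PolicyEngine:
    audit_log: list = field(default_factory=list)

    def may_access(self, user, clearance, dataset, dataset_class, environment):
        """Apply the same rules in every environment and record the decision."""
        allowed = (
            clearance >= dataset_class
            and dataset_class <= ENVIRONMENT_CEILING[environment]
        )
        # Every decision, allowed or denied, lands in the audit trail.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "dataset": dataset,
            "environment": environment,
            "allowed": allowed,
        })
        return allowed
```

Keeping the environment ceiling in one table means a change in data residency rules is a one-line edit rather than a change to each platform's access configuration.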

Security and Compliance Considerations

The security landscape for hybrid AI systems presents unique challenges that require specialized approaches to protect sensitive data and intellectual property while enabling the collaborative and iterative nature of modern AI development workflows. Organizations must implement zero-trust security models that assume no implicit trust based on network location, instead relying on continuous verification and authentication of all access requests regardless of whether they originate from on-premise or cloud environments.

Compliance considerations become particularly complex in hybrid environments where data may traverse multiple jurisdictions and regulatory frameworks during processing and analysis. Organizations operating in heavily regulated industries such as healthcare, financial services, or government must implement sophisticated controls that ensure data sovereignty requirements are met while still enabling the cross-environment collaboration necessary for effective AI development and deployment.

Encrypting data both at rest and in transit becomes critical in hybrid environments where data flows between different security domains. Key management systems must ensure that encryption keys are properly managed and rotated across the entire hybrid infrastructure while maintaining the performance levels required for AI workloads.
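Key rotation is easier to enforce when it is checked mechanically. The following sketch assumes a 90-day maximum key age and a simple mapping from key ID to creation time; both are illustrative assumptions, and a real deployment would read this information from its key management system.

```python
from datetime import datetime, timedelta, timezone


def keys_due_for_rotation(key_created_at, max_age=timedelta(days=90), now=None):
    """Return the IDs of keys older than max_age, sorted for stable output.

    key_created_at maps a key ID to its timezone-aware creation time.
    The 90-day default is an assumption for this sketch, not a standard.
    """
    now = now or datetime.now(timezone.utc)
    return sorted(
        key_id
        for key_id, created in key_created_at.items()
        if now - created > max_age
    )
```

Running one check over keys from both environments gives a single rotation report instead of one per platform.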

Performance Optimization Across Hybrid Architectures

Optimizing performance in hybrid AI environments requires a sophisticated understanding of the unique characteristics and constraints of both on-premise and cloud resources, as well as the network connectivity that links these environments. Organizations must carefully analyze their AI workloads to determine the optimal placement of different components based on factors such as data locality, computational requirements, latency sensitivity, and cost considerations.
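The placement analysis can be expressed as a small set of ordered rules. This is a minimal sketch under stated assumptions: the `Workload` fields, the rule order, and the 40 ms WAN round-trip default are hypothetical and would need to be replaced with measured values and an organization's own priorities.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    name: str
    data_location: str         # "on_prem" or "cloud": where most of the data lives
    regulated_data: bool       # True if the data must stay on-premise
    max_latency_ms: float      # latency budget for consumers of this workload
    needs_burst_compute: bool  # True for spiky, accelerator-heavy jobs


def choose_placement(w: Workload, wan_round_trip_ms: float = 40.0) -> str:
    """Return "on_prem" or "cloud" by applying simple rules in priority order."""
    # Rule 1: regulatory constraints override everything else.
    if w.regulated_data:
        return "on_prem"
    # Rule 2: a latency budget tighter than one WAN round trip means the
    # workload should run where its data (and, by assumption, its users) are.
    if w.max_latency_ms < wan_round_trip_ms:
        return w.data_location
    # Rule 3: bursty compute favors elastic cloud capacity.
    if w.needs_burst_compute:
        return "cloud"
    # Rule 4: otherwise follow data locality.
    return w.data_location
```

For example, a training job over unregulated on-premise data with a loose latency budget is sent to the cloud by rule 3, while the same job over regulated data stays on-premise by rule 1.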

Network optimization becomes particularly critical in hybrid environments where large datasets and model artifacts must be transferred between on-premise and cloud resources. Organizations must implement intelligent caching strategies, data compression techniques, and network acceleration technologies to minimize the impact of network latency and bandwidth constraints on AI performance. This may include deploying edge computing nodes that can perform preprocessing and feature extraction closer to data sources, reducing the volume of data that must be transmitted across the network.
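The effect of compression on transfer time is simple to estimate. The sketch below uses Python's standard `zlib` module on a synthetic, highly repetitive payload; the payload and the 100 Mbit/s link speed are assumptions for illustration, and real datasets will usually compress less well.

```python
import zlib


def transfer_seconds(num_bytes: int, bandwidth_mbps: float) -> float:
    """Ideal transfer time in seconds, ignoring latency and protocol overhead."""
    return num_bytes * 8 / (bandwidth_mbps * 1_000_000)


# Synthetic CSV-like payload; repetitive on purpose so the effect is visible.
payload = b"sensor_id,temperature\n" + b"42,21.5\n" * 100_000
compressed = zlib.compress(payload, level=6)

link_mbps = 100.0  # assumed link speed between the two environments
print(f"raw:        {len(payload)} bytes, {transfer_seconds(len(payload), link_mbps):.4f} s")
print(f"compressed: {len(compressed)} bytes, {transfer_seconds(len(compressed), link_mbps):.4f} s")
```

The estimate covers only time on the wire. Compression and decompression cost CPU time on both sides, so the trade-off is worth measuring for each dataset.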

The architectural complexity of hybrid AI systems requires careful consideration of data flow patterns, security boundaries, and performance optimization strategies to ensure seamless operation across diverse computing environments.

Cost Management and Resource Optimization

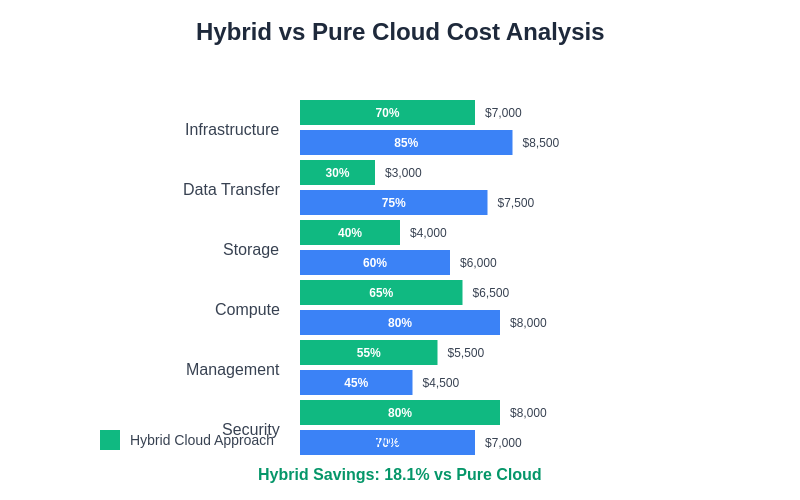

The financial aspects of hybrid AI deployments require sophisticated cost modeling and optimization strategies that account for the complex interplay between on-premise infrastructure investments, cloud consumption costs, and network transfer fees. Organizations must develop comprehensive cost allocation models that provide visibility into the true cost of AI workloads across hybrid environments, enabling informed decisions about resource allocation and workload placement.
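A first-pass cost allocation model can combine the three cost sources named above. The function below is a minimal sketch: the straight-line amortization of on-premise hardware and every parameter name are assumptions for illustration, and real pricing has tiers, discounts, and commitments that this ignores.

```python
def monthly_cost(
    onprem_capex: float,
    amortization_months: int,
    onprem_opex_monthly: float,
    cloud_compute_hours: float,
    cloud_rate_per_hour: float,
    egress_gb: float,
    egress_rate_per_gb: float,
) -> dict:
    """Break one month's cost into on-premise, cloud, and network parts."""
    # Straight-line amortization of hardware plus power, space, and staff.
    on_prem = onprem_capex / amortization_months + onprem_opex_monthly
    # Pay-as-you-go cloud compute.
    cloud = cloud_compute_hours * cloud_rate_per_hour
    # Fees for data leaving the cloud provider's network.
    network = egress_gb * egress_rate_per_gb
    return {
        "on_prem": on_prem,
        "cloud": cloud,
        "network": network,
        "total": on_prem + cloud + network,
    }
```

Reporting the three parts separately, rather than only the total, is what makes workload placement decisions comparable across environments.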

Dynamic resource provisioning becomes essential in hybrid environments where AI workloads may experience significant variations in computational requirements based on factors such as training cycles, inference volumes, and data processing schedules. Organizations must implement intelligent workload management systems that can automatically scale resources up or down based on demand while optimizing costs by leveraging the most appropriate mix of on-premise and cloud resources.
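One common provisioning pattern is to fill fixed on-premise capacity first and send only the overflow to the cloud. The sketch below assumes demand is measured in whole accelerator units and that on-premise capacity is already paid for; the function name and units are hypothetical.

```python
def split_demand(demand_units: int, on_prem_capacity: int) -> dict:
    """Fill on-premise capacity first and burst the remainder to the cloud."""
    if demand_units < 0 or on_prem_capacity < 0:
        raise ValueError("demand and capacity must be non-negative")
    on_prem = min(demand_units, on_prem_capacity)
    return {"on_prem": on_prem, "cloud": demand_units - on_prem}


# Hypothetical hourly demand against 8 units of on-premise capacity.
hourly_demand = [3, 6, 12, 20, 7]
plan = [split_demand(d, on_prem_capacity=8) for d in hourly_demand]
```

With these numbers, only the third and fourth hours use cloud resources, which is where elastic pricing is paid.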

The rapidly evolving landscape of cloud pricing models and optimization techniques requires continuous learning and adaptation to maintain cost-effective operations.

Machine Learning Operations in Hybrid Environments

The implementation of robust MLOps practices becomes particularly challenging in hybrid environments where model development, training, validation, and deployment may occur across multiple computing environments with different tools, platforms, and operational procedures. Organizations must establish unified MLOps pipelines that can seamlessly orchestrate the entire machine learning lifecycle while accommodating the unique requirements and constraints of each environment.

Version control and artifact management become critical components of hybrid MLOps implementations, as teams must maintain consistency and traceability across different environments while enabling collaborative development practices. This includes implementing sophisticated model registries that can track model versions, performance metrics, and deployment status across the entire hybrid infrastructure while providing easy access to models and associated metadata for development and production teams.
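The core of a model registry is a small data structure. The in-memory sketch below is illustrative only: `ModelRegistry` and `ModelVersion` are hypothetical names, and a production registry would persist its state and control access. It records a content hash of each artifact so that the same version can be verified in every environment.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class ModelVersion:
    name: str
    version: int
    artifact_sha256: str  # content hash used to verify the artifact anywhere
    metrics: dict
    deployed_to: set = field(default_factory=set)


class ModelRegistry:
    def __init__(self):
        self._versions = {}  # model name -> list of ModelVersion, oldest first

    def register(self, name, artifact_bytes, metrics):
        """Store a new version; version numbers start at 1 per model name."""
        versions = self._versions.setdefault(name, [])
        entry = ModelVersion(
            name=name,
            version=len(versions) + 1,
            artifact_sha256=hashlib.sha256(artifact_bytes).hexdigest(),
            metrics=dict(metrics),
        )
        versions.append(entry)
        return entry

    def mark_deployed(self, name, version, environment):
        """Record that a version is running in the given environment."""
        self._versions[name][version - 1].deployed_to.add(environment)

    def latest(self, name):
        return self._versions[name][-1]
```

Tracking `deployed_to` per version answers the question that matters most during an incident: which model is running where.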

Automated testing and validation processes must be implemented to ensure that models perform consistently across different environments, accounting for potential differences in hardware configurations, software versions, and data characteristics. This may involve implementing comprehensive testing frameworks that can validate model performance, data quality, and system integration across the entire hybrid deployment pipeline.
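A basic consistency test compares the outputs of the same model on the same inputs in two environments. Because different hardware and library versions can produce small floating-point differences, the sketch below compares within a tolerance; the tolerance values are assumptions and should be set per model.

```python
import math


def outputs_match(reference, candidate, rel_tol=1e-5, abs_tol=1e-8):
    """Return True if two prediction lists agree element-wise within tolerance."""
    if len(reference) != len(candidate):
        return False
    return all(
        math.isclose(r, c, rel_tol=rel_tol, abs_tol=abs_tol)
        for r, c in zip(reference, candidate)
    )
```

In a pipeline, `reference` would come from the environment where the model was validated and `candidate` from the environment where it is about to be deployed.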

Data Pipeline Architecture and Orchestration

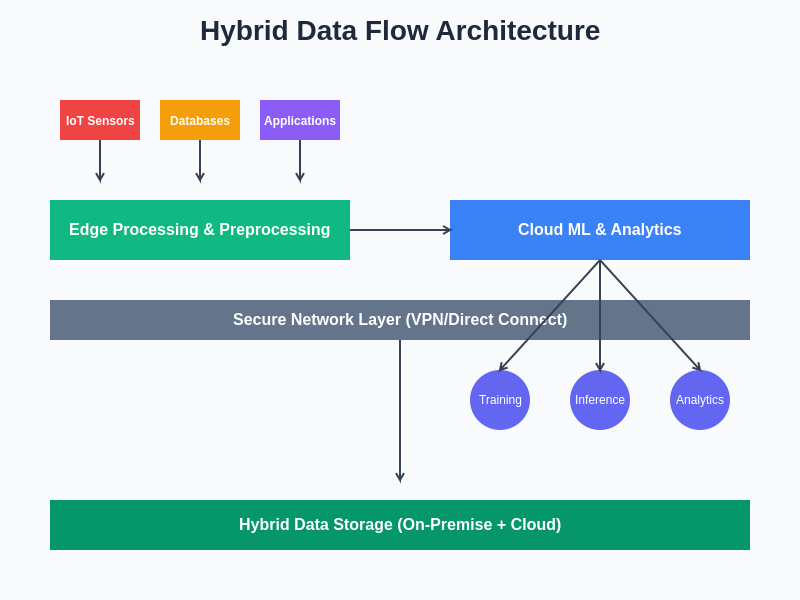

The design and implementation of data pipelines in hybrid AI environments requires sophisticated orchestration capabilities that can manage complex workflows spanning multiple computing environments while maintaining data quality, security, and performance standards. Modern data pipeline architectures must be resilient to network interruptions, hardware failures, and other operational challenges while providing comprehensive monitoring and alerting capabilities.
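Resilience to network interruptions often starts with retrying failed transfers using exponential backoff. The helper below is a minimal sketch: `with_retries` is a hypothetical name, it retries only on `ConnectionError`, and the attempt count and delays are illustrative defaults. The `sleep` parameter is injectable so the behavior can be tested without waiting.

```python
import time


def with_retries(operation, max_attempts=4, base_delay=0.5, sleep=time.sleep):
    """Call operation(), retrying on ConnectionError with exponential backoff.

    Delays are base_delay, 2 * base_delay, 4 * base_delay, and so on.
    The final failure is re-raised so callers and alerting can see it.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except ConnectionError:
            if attempt == max_attempts:
                raise
            sleep(base_delay * 2 ** (attempt - 1))
```

Production pipelines typically add random jitter to the delay so that many clients recovering from the same outage do not retry in lockstep.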

Real-time stream processing capabilities become particularly important in hybrid environments where organizations need to process continuous data streams from on-premise sources while leveraging cloud-based analytics and machine learning services. This requires implementing robust streaming architectures that can handle data ingestion, transformation, and routing across hybrid environments while maintaining low latency and high throughput requirements.

Batch processing orchestration must account for the complexities of scheduling and managing large-scale data processing jobs that may require coordination between on-premise and cloud resources. This includes implementing intelligent job scheduling systems that can optimize resource utilization while respecting constraints such as data locality, security requirements, and cost considerations.

The seamless flow of data between on-premise and cloud environments requires sophisticated orchestration and management capabilities to ensure optimal performance and security throughout the AI pipeline.

Edge Computing Integration

The integration of edge computing capabilities into hybrid AI architectures enables organizations to extend their AI processing capabilities closer to data sources and end users, reducing latency and bandwidth requirements while improving overall system performance. Edge nodes can perform initial data preprocessing, feature extraction, and simple inference tasks before transmitting results to central processing facilities for more complex analysis and model training.
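The bandwidth saving from edge preprocessing comes from sending summaries instead of raw readings. The sketch below reduces a window of sensor values to four summary features; the choice of features and the function name are assumptions for illustration.

```python
from statistics import mean


def summarize_window(readings):
    """Reduce a window of raw readings to a few features for upstream transfer."""
    if not readings:
        raise ValueError("window is empty")
    return {
        "count": len(readings),
        "mean": mean(readings),
        "min": min(readings),
        "max": max(readings),
    }
```

A window of thousands of readings becomes a record of four numbers, at the cost of discarding detail that central model training may later need, so the window size and feature set are a design decision rather than a default.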

The management of edge deployments in hybrid AI environments requires sophisticated device management and orchestration capabilities that can handle software updates, configuration management, and monitoring across potentially thousands of distributed edge nodes. Organizations must implement robust over-the-air update mechanisms and remote management capabilities to maintain the security and performance of their edge AI deployments.

Disaster Recovery and Business Continuity

Implementing comprehensive disaster recovery and business continuity plans for hybrid AI environments requires careful consideration of data replication, failover procedures, and recovery time objectives across multiple computing environments. Organizations must design resilient architectures that can maintain AI service availability even in the event of significant infrastructure failures or other operational disruptions.

The complexity of disaster recovery in hybrid environments stems from the need to coordinate recovery procedures across different platforms, ensure data consistency, and maintain service availability while potentially operating with reduced capacity. This requires implementing sophisticated backup and replication strategies that can protect both data and model artifacts while enabling rapid recovery procedures.

Future Evolution and Emerging Technologies

The future of hybrid cloud AI architectures is likely to be shaped by emerging technologies such as quantum computing, advanced networking protocols, and next-generation hardware platforms that may further blur the boundaries between on-premise and cloud computing. Organizations can prepare for these advances by designing flexible architectures that can accommodate new computing paradigms while protecting existing investments.

The evolution of artificial intelligence itself will continue to drive changes in hybrid architecture requirements, as new AI techniques and applications emerge that may have different performance, security, and operational characteristics. Organizations must maintain the agility to adapt their hybrid infrastructures to support new AI capabilities while continuing to derive value from existing investments and implementations.

The economic advantages of hybrid cloud approaches become apparent when analyzing total cost of ownership across different AI workload patterns and organizational requirements.

Implementation Best Practices and Recommendations

Successfully implementing hybrid cloud AI architectures requires adherence to proven best practices that address the unique challenges and opportunities of these complex environments. Organizations should begin with comprehensive assessment and planning phases that clearly define requirements, constraints, and success criteria before proceeding with implementation activities.

Starting with pilot projects and proof-of-concept implementations is important because these smaller-scale efforts provide learning opportunities and help validate architectural decisions before committing to full-scale deployments. Organizations should focus on achieving early wins with less complex use cases before tackling more challenging integration scenarios.

Continuous monitoring and optimization should be built into hybrid AI architectures from the beginning, rather than being added as an afterthought. This includes implementing comprehensive observability platforms that provide visibility into performance, costs, and operational metrics across the entire hybrid infrastructure.

The human element of hybrid AI implementations is often underestimated, but successful deployments require significant investment in training and change management to help teams adapt to new tools, processes, and operational procedures. Organizations must provide comprehensive training programs and support systems to ensure that their teams can effectively leverage the capabilities of hybrid AI infrastructures.

Disclaimer

This article is for informational purposes only and does not constitute professional advice regarding cloud architecture, security implementations, or AI deployment strategies. The views expressed are based on current understanding of hybrid cloud technologies and their applications in artificial intelligence contexts. Readers should conduct thorough assessments of their specific requirements and consult with qualified professionals when designing and implementing hybrid cloud AI architectures. The effectiveness and suitability of different approaches may vary significantly based on organizational needs, regulatory requirements, and technical constraints.