The convergence of artificial intelligence and Internet of Things technologies has created unprecedented opportunities for intelligent edge computing, and Intel OpenVINO stands at the forefront of this technological revolution. As organizations increasingly seek to deploy sophisticated AI capabilities directly on edge devices rather than relying on cloud-based processing, the need for efficient model optimization and hardware acceleration has become paramount. Intel OpenVINO toolkit addresses these challenges by providing a comprehensive platform that enables developers to optimize, tune, and deploy deep learning models across diverse hardware architectures while maintaining exceptional performance and minimal resource consumption.

The transformation from cloud-centric AI processing to distributed edge intelligence represents a fundamental shift in how we conceive and implement artificial intelligence solutions, with Intel OpenVINO serving as a critical enabler of this paradigm change.

Understanding Intel OpenVINO Architecture

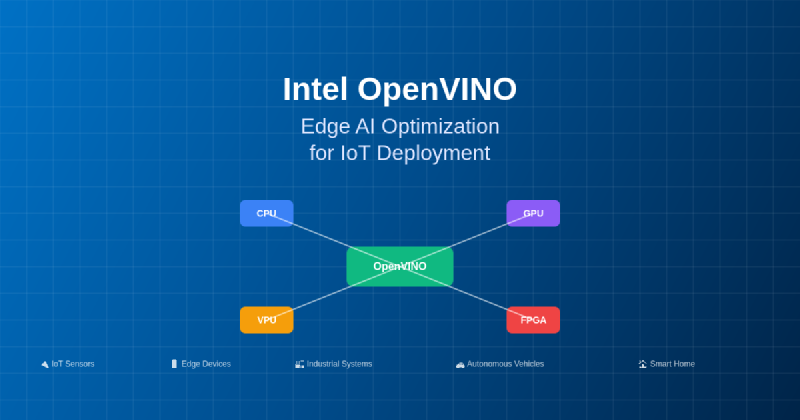

Intel OpenVINO, which stands for Open Visual Inference and Neural Network Optimization, is a toolkit for optimizing and deploying deep learning models across heterogeneous hardware platforms. Its architecture is built around a small number of core components. The Model Optimizer serves as the initial entry point, converting models from popular deep learning frameworks and formats such as TensorFlow, PyTorch, ONNX, and Caffe into an intermediate representation (IR), an .xml file describing the network topology plus a .bin file holding the weights, that can be efficiently processed by the Inference Engine. Framework support varies by release, and recent OpenVINO releases rename these components: model conversion is exposed through the OpenVINO model conversion API, and the Inference Engine has become the OpenVINO Runtime.

The Inference Engine constitutes the runtime component of OpenVINO, responsible for executing optimized models on target hardware. Through device plugins it has supported a range of Intel hardware, including CPUs, integrated GPUs, VPUs (Vision Processing Units), and FPGAs, although the exact set of supported devices depends on the OpenVINO release, so the release notes for the version in use should be checked before committing to a target. The toolkit also includes development tools, pre-trained models, and optimization utilities that streamline the workflow from model conversion to deployment.
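As a rough illustration of the read, compile, and run flow described above, the following Python sketch picks a target device and compiles a model for it. It assumes the `openvino` Python package from a recent release (2023.1 or later) is installed; the helper names `pick_device` and `load_and_compile` and the preference order are hypothetical choices made for this example, not part of OpenVINO.

```python
from typing import Sequence


def pick_device(available: Sequence[str],
                preferred: Sequence[str] = ("NPU", "GPU", "CPU")) -> str:
    """Return the first preferred device that is present, falling back to CPU."""
    for name in preferred:
        # Reported device names may carry an index suffix such as "GPU.0".
        if any(dev == name or dev.startswith(name + ".") for dev in available):
            return name
    return "CPU"


def load_and_compile(model_path: str):
    """Read a model file and compile it for the chosen device.

    Requires the openvino package; it is imported lazily so that
    pick_device can be used and tested without it.
    """
    import openvino as ov

    core = ov.Core()
    device = pick_device(core.available_devices)
    model = core.read_model(model_path)        # e.g. an IR .xml or an ONNX file
    return core.compile_model(model, device)   # compiled model ready for inference
```

With a hypothetical IR file on disk, `load_and_compile("model.xml")` would return a compiled model that can be called with input tensors. The device preference order is only an assumption and should follow the needs of the application.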

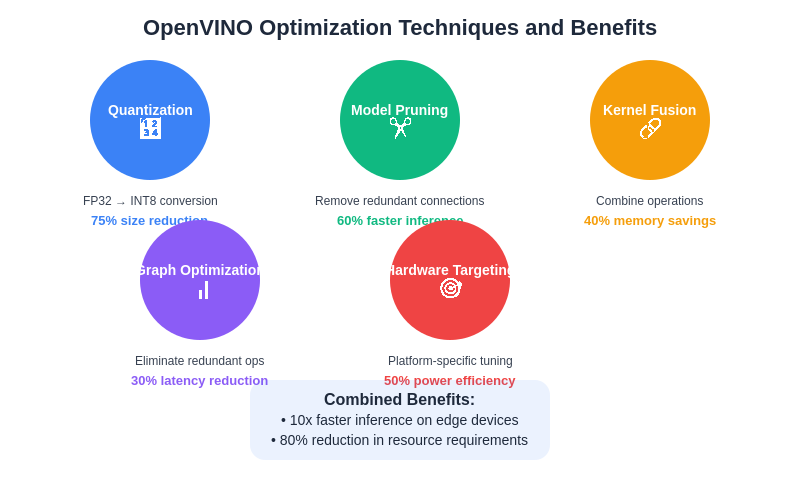

The OpenVINO architecture incorporates advanced optimization techniques including quantization, pruning, and kernel fusion that significantly reduce model size and computational requirements while maintaining acceptable accuracy levels. These optimizations are particularly crucial for edge and IoT deployments where computational resources, power consumption, and memory bandwidth are severely constrained compared to cloud-based environments.

Edge AI Optimization Strategies

The optimization of AI models for edge deployment requires a multifaceted approach that addresses the constraints of resource-limited environments. Intel OpenVINO employs several optimization strategies that let deep learning models run efficiently on edge devices while keeping any accuracy loss within acceptable thresholds. Quantization is one of the most impactful: it converts floating-point model weights and activations to lower-precision integer representations. Moving from 32-bit floats to 8-bit integers stores each value in a quarter of the space, which reduces weight storage by roughly 75%, typically with comparable accuracy, although the actual accuracy impact depends on the model and should be measured.
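The arithmetic behind quantization can be sketched in a few lines of plain Python. This is a generic symmetric per-tensor INT8 scheme chosen for illustration, not a description of OpenVINO's internal implementation, and the function names are made up for the example.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization of floats to signed 8-bit integers."""
    max_abs = max(abs(w) for w in weights)
    # One scale for the whole tensor maps [-max_abs, max_abs] onto [-127, 127].
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Map the integers back to approximate floating-point values."""
    return [q * scale for q in quantized]


def size_reduction(bits_before: int = 32, bits_after: int = 8) -> float:
    """Fraction of storage saved when each value shrinks to bits_after bits."""
    return 1 - bits_after / bits_before


weights = [0.5, -1.27, 0.0, 0.83]
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
```

Each restored value differs from the original by at most half a quantization step, which is why accuracy often survives the conversion. `size_reduction()` returns 0.75 for the FP32-to-INT8 case.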

Model pruning constitutes another critical optimization strategy where redundant or less important neural network connections are systematically removed, resulting in smaller, faster models that require less computation and memory bandwidth. Pruning methods in the OpenVINO ecosystem, provided through the Neural Network Compression Framework (NNCF), estimate the contribution of individual filters and connections to overall model performance and remove those with minimal impact on accuracy, usually followed by fine-tuning to recover any lost accuracy.
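To make the idea concrete, here is a minimal sketch of magnitude-based pruning, one common criterion for deciding which connections matter least. It is a generic illustration in plain Python and is not claimed to be the algorithm that OpenVINO or NNCF applies.

```python
from typing import List


def magnitude_prune(weights: List[float], sparsity: float) -> List[float]:
    """Zero out the fraction `sparsity` of weights with the smallest magnitude."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    n_prune = int(len(weights) * sparsity)
    # Indices sorted by ascending absolute value; the first n_prune are removed.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


pruned = magnitude_prune([0.9, -0.05, 0.4, 0.01], sparsity=0.5)
```

Zeroed weights only translate into speed or size gains when the runtime or storage format can exploit the sparsity, which is one reason structured pruning of whole filters is often preferred in practice.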

The combination of multiple optimization techniques creates a multiplicative effect that enables deployment of sophisticated AI models on hardware platforms that would otherwise be incapable of supporting such computational workloads.

IoT Device Integration and Deployment

The integration of Intel OpenVINO with IoT devices requires careful consideration of hardware capabilities, power constraints, and real-time processing requirements that are characteristic of edge computing environments. OpenVINO’s device plugins provide abstracted interfaces to different hardware accelerators, enabling developers to write device-agnostic code that can be seamlessly deployed across various hardware configurations without requiring extensive modifications or optimizations for each target platform.

The toolkit supports dynamic device selection and load balancing, allowing applications to automatically utilize the most appropriate hardware resources based on current system conditions and workload requirements. This capability is particularly valuable in IoT environments where devices may have heterogeneous hardware configurations and varying computational capabilities, enabling optimal resource utilization across the entire edge computing infrastructure.
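Device selection of this kind is typically requested through a device string passed at compile time. The sketch below builds such strings and passes a performance hint; it assumes the `openvino` package is installed and that the `AUTO` and `MULTI` virtual devices are available in the release in use (availability of `MULTI` in particular should be checked in the documentation for that release). The helper names are hypothetical.

```python
from typing import Sequence


def device_string(mode: str, devices: Sequence[str] = ()) -> str:
    """Build a device string such as "AUTO" or "MULTI:GPU,CPU"."""
    if mode not in ("AUTO", "MULTI"):
        raise ValueError("mode must be 'AUTO' or 'MULTI'")
    if mode == "MULTI" and not devices:
        raise ValueError("MULTI needs an explicit device list")
    return mode if not devices else f"{mode}:{','.join(devices)}"


def compile_with_hint(model_path: str, device: str, hint: str = "LATENCY"):
    """Compile a model for `device` with a performance hint (requires openvino)."""
    import openvino as ov  # lazy import keeps device_string usable on its own

    core = ov.Core()
    model = core.read_model(model_path)
    # "LATENCY" and "THROUGHPUT" are the usual values of PERFORMANCE_HINT.
    return core.compile_model(model, device, {"PERFORMANCE_HINT": hint})
```

For example, `compile_with_hint("model.xml", device_string("AUTO", ["GPU", "CPU"]))` would ask the runtime to choose between the GPU and CPU, where `model.xml` is a placeholder path.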

OpenVINO’s integration with popular IoT frameworks and protocols ensures compatibility with existing infrastructure and deployment pipelines. The toolkit supports containerized deployments through Docker integration, enabling consistent deployment experiences across different edge computing platforms while maintaining isolation and security boundaries between different AI workloads.

The architectural design of OpenVINO facilitates seamless integration with existing IoT ecosystems while providing the flexibility and performance required for demanding edge AI applications. The modular design enables selective deployment of only required components, minimizing resource footprint and optimizing system efficiency.

Performance Optimization and Hardware Acceleration

Intel OpenVINO achieves exceptional performance on edge devices through sophisticated hardware acceleration techniques that leverage the unique capabilities of different processor architectures. The toolkit’s ability to automatically optimize model execution for specific hardware configurations ensures that applications achieve maximum throughput and minimum latency regardless of the underlying computational platform. CPU optimizations include vectorization, parallel processing, and cache optimization strategies that maximize utilization of available computational resources.

Graphics processing unit acceleration through OpenVINO enables parallel execution of neural network operations, particularly beneficial for computer vision and image processing applications that can leverage the massive parallel processing capabilities of modern integrated and discrete graphics hardware. The toolkit automatically partitions computational workloads across available GPU compute units while managing memory transfers and synchronization to minimize overhead and maximize throughput.

Vision Processing Unit support provides dedicated acceleration for computer vision workloads, offering exceptional power efficiency for applications that require continuous image processing and analysis. VPUs are specifically designed for neural network inference operations, providing optimal performance per watt for battery-powered edge devices where power consumption is a critical consideration.

Field-Programmable Gate Array integration enables custom hardware acceleration for specialized workloads that require ultra-low latency or highly specific computational patterns. In OpenVINO releases that include FPGA support, developers can approach ASIC-class performance while keeping the flexibility to modify and optimize acceleration logic for specific application requirements.

Real-World Applications and Use Cases

The practical applications of Intel OpenVINO in edge AI and IoT deployments span numerous industries and use cases, demonstrating the versatility and effectiveness of optimized edge intelligence. Industrial automation represents one of the most compelling application areas, where real-time object detection, quality inspection, and predictive maintenance capabilities enable manufacturing facilities to improve efficiency, reduce defects, and minimize unplanned downtime through continuous monitoring and analysis of production processes.

Smart city infrastructure leverages OpenVINO-optimized models for traffic management, pedestrian safety, and environmental monitoring applications that require real-time processing of sensor data from distributed camera networks, environmental sensors, and infrastructure monitoring systems. These applications demonstrate the scalability of edge AI solutions that can operate continuously in challenging environmental conditions while providing actionable insights for urban planning and management decisions.

Healthcare and medical device applications benefit from OpenVINO’s optimization capabilities for portable diagnostic equipment, patient monitoring systems, and medical imaging devices that require sophisticated AI analysis capabilities in resource-constrained environments. The ability to deploy complex computer vision and pattern recognition models on portable devices enables point-of-care diagnostics and remote patient monitoring applications that were previously impossible due to computational limitations.

The continuous evolution of edge computing applications creates new opportunities for innovative solutions that leverage optimized AI capabilities.

Model Conversion and Optimization Workflow

The process of converting and optimizing deep learning models for edge deployment using Intel OpenVINO follows a systematic workflow that ensures maximum efficiency and compatibility across target hardware platforms. The Model Optimizer component serves as the primary interface for converting models from popular training frameworks into OpenVINO’s intermediate representation format, which provides a standardized, hardware-agnostic representation of neural network architectures and parameters.

During the conversion process, the Model Optimizer performs various graph optimizations including constant folding, dead code elimination, and operation fusion that reduce computational complexity and improve execution efficiency. These optimizations are applied automatically based on the source model architecture and target hardware capabilities, ensuring that the resulting intermediate representation is optimally configured for the intended deployment environment.
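Constant folding, the first of the graph optimizations mentioned above, can be illustrated on a toy expression graph. The tuple-based graph format here is invented for the example and bears no relation to OpenVINO's actual graph representation.

```python
def fold_constants(node):
    """Recursively replace operations on constants with their computed value.

    A node is ("const", value), ("input", name), or (op, left, right)
    where op is "add" or "mul".
    """
    kind = node[0]
    if kind in ("const", "input"):
        return node
    left = fold_constants(node[1])
    right = fold_constants(node[2])
    if left[0] == "const" and right[0] == "const":
        if kind == "add":
            return ("const", left[1] + right[1])
        if kind == "mul":
            return ("const", left[1] * right[1])
    # At least one operand depends on a runtime input, so keep the operation.
    return (kind, left, right)


# x * (2 + 3): the addition does not depend on the input and can be precomputed.
graph = ("mul", ("input", "x"), ("add", ("const", 2), ("const", 3)))
folded = fold_constants(graph)
```

After folding, the graph is `x * 5`, so one operation disappears from every inference call. Real converters apply the same principle to whole tensors and subgraphs.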

The optimization workflow includes comprehensive validation and accuracy assessment tools that enable developers to verify that optimized models maintain acceptable performance levels compared to their original counterparts. These validation tools provide detailed analysis of accuracy degradation, performance improvements, and resource utilization changes that result from various optimization strategies, enabling informed decision-making about optimization trade-offs.
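A simple version of this kind of check compares the top-1 accuracy of the original and optimized models on the same labeled samples. The sketch below is a hand-rolled illustration with hypothetical function names and an assumed one-percentage-point budget; it is not the toolkit's own accuracy checking utility.

```python
from typing import Sequence


def top1_accuracy(predictions: Sequence[int], labels: Sequence[int]) -> float:
    """Fraction of samples whose predicted class equals the label."""
    if not labels or len(predictions) != len(labels):
        raise ValueError("predictions and labels must be non-empty and equal length")
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)


def accuracy_drop(baseline: Sequence[int], optimized: Sequence[int],
                  labels: Sequence[int]) -> float:
    """Accuracy lost by the optimized model relative to the baseline."""
    return top1_accuracy(baseline, labels) - top1_accuracy(optimized, labels)


def within_budget(baseline: Sequence[int], optimized: Sequence[int],
                  labels: Sequence[int], max_drop: float = 0.01) -> bool:
    """True when the optimized model loses no more than max_drop accuracy."""
    return accuracy_drop(baseline, optimized, labels) <= max_drop


labels = [0, 1, 1, 0]
baseline_preds = [0, 1, 1, 0]    # original model: all four correct
optimized_preds = [0, 1, 0, 0]   # optimized model: one mistake
drop = accuracy_drop(baseline_preds, optimized_preds, labels)
```

In a real evaluation the validation set would be far larger than four samples, and the acceptable drop is an application decision rather than a fixed constant.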

Post-training optimization techniques including calibration and quantization are integrated into the workflow, allowing developers to tune model performance for specific deployment scenarios without retraining and without access to the full original training dataset. Calibration does still need a small, representative set of input samples, which typically do not have to be labeled, to estimate activation ranges. This significantly reduces the time and computational resources required to deploy optimized models in production environments.
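Calibration boils down to observing value ranges on sample inputs and deriving quantization parameters from them. The sketch below shows that step for an asymmetric 8-bit scheme in plain Python, followed by a thin wrapper around NNCF's post-training quantization entry point. The wrapper assumes the `nncf` package is installed, and all helper names are hypothetical.

```python
from typing import Iterable, List, Sequence, Tuple


def calibrate_range(batches: Iterable[Sequence[float]]) -> Tuple[float, float]:
    """Observed minimum and maximum over all calibration batches."""
    values: List[float] = [v for batch in batches for v in batch]
    return min(values), max(values)


def affine_params(lo: float, hi: float, levels: int = 256) -> Tuple[float, int]:
    """Scale and zero point mapping [lo, hi] onto integer levels 0..levels-1."""
    # Widen the range to include zero so that 0.0 is represented exactly.
    lo, hi = min(lo, 0.0), max(hi, 0.0)
    span = hi - lo
    scale = span / (levels - 1) if span > 0 else 1.0
    zero_point = round(-lo / scale)
    return scale, zero_point


def quantize_with_nncf(model, samples):
    """Post-training quantization via NNCF (requires the nncf package)."""
    import nncf  # lazy import: the helpers above work without it

    return nncf.quantize(model, nncf.Dataset(samples))


lo, hi = calibrate_range([[0.0, 1.0], [-0.5, 2.05]])
scale, zero_point = affine_params(lo, hi)
```

Here `samples` would be an iterable of representative model inputs. A few hundred samples is a common starting point, though the right number depends on the model and data.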

Development Tools and SDK Integration

Intel OpenVINO provides comprehensive development tools and software development kit integration that streamline the entire edge AI development lifecycle from initial model selection through production deployment and monitoring. The OpenVINO Development Tools suite includes model accuracy checking utilities, benchmark applications, and deployment tools that enable developers to validate and optimize their AI solutions throughout the development process.

The Deep Learning Workbench provides a web-based interface for model optimization, performance analysis, and accuracy assessment that enables developers to experiment with different optimization strategies and hardware configurations without requiring extensive command-line expertise or detailed knowledge of underlying optimization algorithms. This graphical interface significantly reduces the learning curve for developers who are new to edge AI optimization while providing advanced capabilities for experienced practitioners.

SDK integration supports popular development environments and programming languages, including Python and C++, and interoperates with libraries such as OpenCV, enabling seamless integration with existing development workflows and infrastructure. The comprehensive API documentation and code examples facilitate rapid adoption and implementation of OpenVINO capabilities in both new and existing applications.

The toolkit includes an extensive model zoo with pre-trained, pre-optimized models for common computer vision, natural language processing, and time series analysis tasks that can be immediately deployed or used as starting points for custom model development. These pre-optimized models demonstrate best practices for edge deployment while providing baseline performance metrics for comparison and optimization efforts.

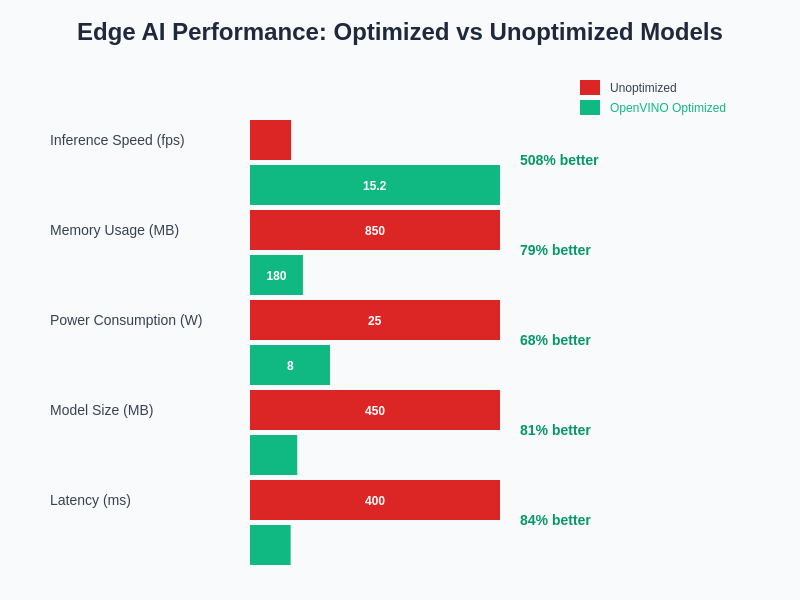

The performance advantages of OpenVINO optimization become evident when comparing inference speeds, power consumption, and model sizes across different optimization levels and hardware configurations. These improvements enable deployment scenarios that would be impractical with unoptimized models.

Security and Privacy Considerations

Edge AI deployments using Intel OpenVINO must address comprehensive security and privacy requirements that are particularly critical in IoT environments where devices may operate in unsecured locations and process sensitive data. Relevant protections include model encryption, secure boot, and hardware-based security extensions, some of which are provided by the underlying platform rather than by the toolkit itself. Together they protect intellectual property and prevent unauthorized access to AI models and the data they process.

Privacy-preserving inference techniques enable edge devices to process sensitive information without transmitting raw data to external systems or cloud-based services, addressing regulatory compliance requirements and user privacy concerns that are increasingly important in healthcare, financial services, and personal IoT applications. Local processing capabilities ensure that sensitive data remains on-device throughout the inference process while still enabling sophisticated AI analysis and decision-making.

OpenVINO’s support for trusted execution environments and hardware security modules provides additional layers of protection for high-security applications that require cryptographic verification of model integrity and execution environment security. These capabilities are particularly important for industrial control systems, autonomous vehicles, and critical infrastructure applications where unauthorized modification of AI models could have significant safety or security implications.
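The simplest form of model integrity verification is to compare a file's digest against a known-good value before loading it. The sketch below uses only the Python standard library and is a plain hash check, which is much weaker than signature verification or attestation inside a trusted execution environment. The function names and the idea of shipping the expected digest alongside the deployment are assumptions made for the example.

```python
import hashlib
import hmac


def digest_matches(data: bytes, expected_hex: str) -> bool:
    """True when the SHA-256 digest of `data` equals the expected hex string."""
    actual = hashlib.sha256(data).hexdigest()
    # compare_digest avoids leaking the mismatch position through timing.
    return hmac.compare_digest(actual, expected_hex.lower())


def verify_model_file(path: str, expected_hex: str) -> bool:
    """Read a model file and check its digest before it is handed to the runtime."""
    with open(path, "rb") as f:
        return digest_matches(f.read(), expected_hex)
```

A deployment script could refuse to load a model when `verify_model_file` returns False. The expected digest itself has to come from a trusted channel, otherwise the check adds little.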

The toolkit includes comprehensive audit and logging capabilities that enable security monitoring and compliance verification for deployed edge AI systems, providing detailed records of model execution, data access patterns, and system interactions that may be required for regulatory compliance or security incident investigation.

Scalability and Fleet Management

Managing large-scale deployments of edge AI systems requires sophisticated orchestration and management capabilities that Intel OpenVINO supports through integration with containerization technologies and edge computing management platforms. The toolkit’s containerized deployment options enable consistent, reproducible deployments across heterogeneous hardware environments while providing isolation and resource management capabilities that are essential for multi-tenant edge computing scenarios.

Fleet management capabilities include remote model updates, performance monitoring, and health assessment tools that enable centralized management of distributed edge AI deployments without requiring physical access to individual devices. These capabilities are particularly valuable for IoT applications that may involve thousands or tens of thousands of distributed devices across wide geographic areas.

OpenVINO’s telemetry and monitoring integration provides detailed visibility into model performance, resource utilization, and system health across entire edge computing fleets, enabling proactive maintenance and optimization of deployed AI systems. The comprehensive metrics collection and analysis capabilities support data-driven decision-making about hardware upgrades, model updates, and deployment optimization strategies.

The toolkit supports rolling updates and A/B testing methodologies that enable safe deployment of model updates and new AI capabilities across production edge computing environments while minimizing service disruption and providing rollback capabilities in case of performance degradation or compatibility issues.
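The decision logic behind a staged rollout can be quite small. The following sketch assigns devices to a canary group deterministically and flags a rollback when the new model is noticeably slower. It is a generic illustration built on the standard library; the function names, the device ID format, and the 10% latency tolerance are all hypothetical and not part of OpenVINO.

```python
import hashlib


def in_canary_group(device_id: str, percent: int) -> bool:
    """Deterministically place roughly `percent`% of devices in the canary group."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    # Hashing the ID gives a stable bucket in 0..99 for each device.
    digest = hashlib.sha256(device_id.encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100
    return bucket < percent


def should_roll_back(baseline_ms: float, candidate_ms: float,
                     tolerance: float = 0.10) -> bool:
    """Roll back when the candidate model is more than `tolerance` slower."""
    return candidate_ms > baseline_ms * (1 + tolerance)
```

Because the bucket depends only on the device ID, a device stays in the same group across restarts, and raising `percent` gradually widens the rollout without reshuffling earlier assignments.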

Future Developments and Roadmap

The continued evolution of Intel OpenVINO reflects the rapidly changing landscape of edge computing, AI hardware acceleration, and IoT deployment requirements. Future developments focus on expanding support for emerging AI model architectures including transformer-based models, graph neural networks, and federated learning approaches that are becoming increasingly important for edge AI applications.

Hardware support expansion includes optimization for next-generation Intel processors, discrete graphics cards, and specialized AI acceleration hardware that will provide even greater performance and efficiency improvements for edge AI workloads. The integration of advanced manufacturing process nodes and architectural improvements in Intel hardware platforms will enable more sophisticated AI capabilities on edge devices while maintaining or improving power efficiency.

Enhanced automation capabilities including AutoML integration, automated hyperparameter optimization, and intelligent hardware selection will reduce the expertise required for effective edge AI deployment while improving the quality and performance of optimized models. These developments will democratize access to sophisticated edge AI capabilities and enable broader adoption across industries and applications.

The roadmap includes expanded ecosystem integration with popular IoT platforms, cloud services, and edge computing frameworks that will simplify deployment and management of edge AI solutions while providing seamless integration with existing infrastructure and development workflows.

The quantitative benefits of OpenVINO optimization demonstrate significant improvements in inference speed, memory usage, power consumption, and model size that enable practical deployment of sophisticated AI capabilities on resource-constrained edge devices.

Best Practices and Implementation Guidelines

Successful implementation of Intel OpenVINO for edge AI and IoT deployments requires adherence to established best practices that address the unique challenges and requirements of edge computing environments. Model selection represents a critical first step, where developers must balance model complexity, accuracy requirements, and available computational resources to identify optimal solutions for specific deployment scenarios.

Optimization strategy selection should be based on comprehensive analysis of target hardware capabilities, performance requirements, and acceptable accuracy trade-offs that may vary significantly between different applications and use cases. The iterative optimization approach enables developers to progressively apply different optimization techniques while monitoring their impact on model performance and resource utilization.

Hardware configuration optimization includes careful consideration of memory allocation, thermal management, and power consumption patterns that may significantly impact system reliability and performance in edge computing environments. Proper thermal design and power management strategies ensure stable operation under varying environmental conditions and workload demands.

Testing and validation procedures must account for the distributed nature of edge deployments and the potential for varying environmental conditions, hardware configurations, and network connectivity that may impact system performance and reliability. Comprehensive testing strategies include stress testing, environmental simulation, and long-term reliability assessment that ensure robust operation in production environments.

The implementation of monitoring and maintenance procedures enables proactive identification and resolution of performance issues, hardware failures, and optimization opportunities that may emerge during production deployment. These procedures should include automated alerting, performance trend analysis, and capacity planning capabilities that support long-term system reliability and optimization.

Intel OpenVINO represents a transformative technology that enables sophisticated artificial intelligence capabilities to operate efficiently on edge devices and IoT platforms that were previously incapable of supporting such computational workloads. The comprehensive optimization strategies, hardware acceleration capabilities, and development tools provided by the toolkit have democratized access to edge AI capabilities while maintaining the performance and efficiency requirements necessary for practical deployment in resource-constrained environments.

The continued evolution of edge computing and IoT technologies, combined with ongoing improvements in AI model architectures and optimization techniques, promises to unlock new applications and capabilities that will further expand the impact of edge AI solutions across industries and use cases. The foundation provided by Intel OpenVINO positions developers and organizations to take advantage of these emerging opportunities while building on proven, production-ready technology that has demonstrated its effectiveness in real-world deployments.

Disclaimer

This article is for informational purposes only and does not constitute professional advice. The views expressed are based on current understanding of Intel OpenVINO technology and edge AI deployment practices. Readers should conduct their own research and consider their specific requirements when implementing edge AI solutions. Performance results may vary depending on hardware configuration, model complexity, and specific use case requirements. Intel OpenVINO is a trademark of Intel Corporation.