The mobile artificial intelligence landscape has evolved dramatically, with both iOS and Android offering native machine learning frameworks that let developers integrate AI capabilities directly into their applications. Two of the most prominent are Apple’s CoreML framework and Google’s ML Kit, which have changed how developers approach on-device machine learning.

The choice between CoreML and ML Kit is more than a simple technical decision; it involves understanding the philosophical differences between Apple’s tightly integrated ecosystem approach and Google’s more flexible, cross-platform strategy.

Understanding the Mobile AI Landscape

The emergence of on-device machine learning has addressed critical concerns around privacy, latency, and offline functionality that plagued earlier cloud-dependent AI implementations. Both CoreML and ML Kit represent mature solutions that enable developers to deploy sophisticated AI models directly on mobile devices, but they approach this challenge from distinctly different perspectives shaped by their respective platform philosophies and target audiences.

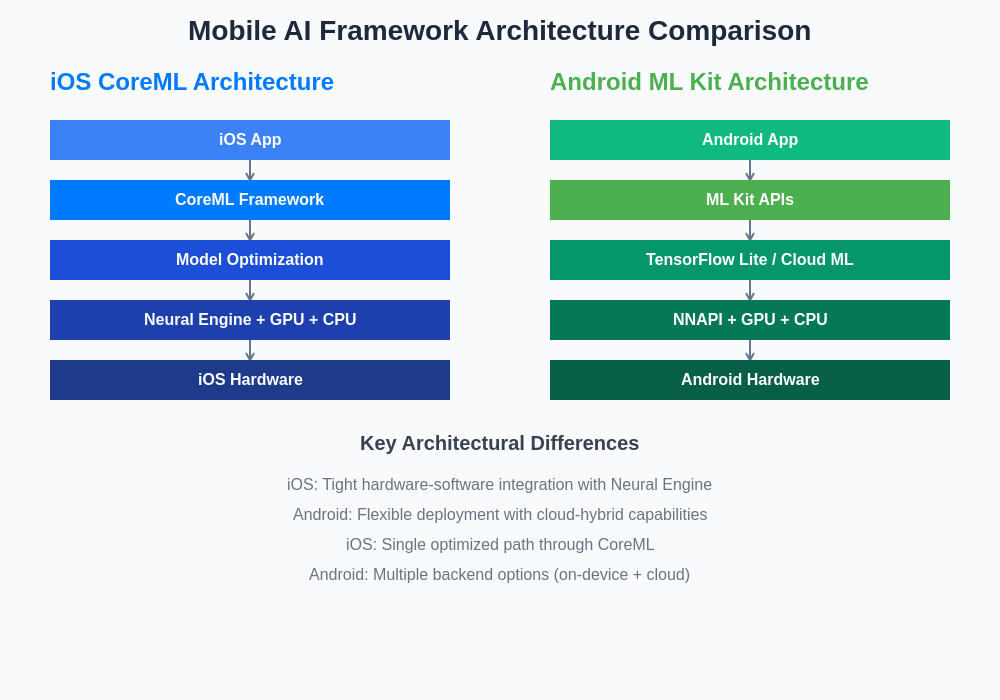

CoreML embodies Apple’s commitment to seamless integration within its own ecosystem, offering deep hardware optimization and tight coupling with Apple’s development frameworks. ML Kit reflects Google’s expertise in machine learning and cloud services: it ships SDKs for both Android and iOS, runs its APIs on-device, and can be paired with cloud-based inference through Firebase ML or Google Cloud when a task calls for it. This fundamental difference in approach influences every aspect of development, from initial setup to performance optimization and long-term maintenance.

CoreML: Apple’s Native AI Framework

Apple’s CoreML framework represents a comprehensive approach to on-device machine learning that leverages the company’s expertise in hardware-software integration and optimization. Introduced as part of iOS 11, CoreML has evolved into a sophisticated platform that supports a wide range of machine learning model types while maintaining Apple’s characteristic focus on privacy and performance.

The framework’s tight integration with Apple’s Neural Engine provides exceptional performance for supported operations. The Neural Engine first appeared in the A11 Bionic, but CoreML has been able to run third-party models on it only on A12 Bionic and later chips. This dedicated AI hardware acceleration, combined with CoreML’s optimized model formats and runtime, enables developers to deploy complex neural networks that would be computationally prohibitive on general-purpose mobile processors.

CoreML’s model format serves as a unified interface that abstracts the underlying complexity of different machine learning frameworks, allowing developers to integrate models trained in popular frameworks like TensorFlow, PyTorch, and Keras without extensive knowledge of the original training environment. Conversion happens ahead of time with Apple’s Core ML Tools (the coremltools Python package); the framework itself then handles on-device compilation, optimization, and runtime execution, providing a streamlined development experience that aligns with Apple’s broader developer ecosystem philosophy.

ML Kit: Google’s Cross-Platform Solution

Google’s ML Kit approaches mobile machine learning from a different angle, emphasizing flexibility, cloud integration, and cross-platform compatibility. Built on Google’s extensive machine learning infrastructure and expertise, ML Kit provides developers with both ready-to-use APIs for common AI tasks and the flexibility to deploy custom models using TensorFlow Lite as its underlying engine.

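ML Kit originally shipped as part of Firebase with both on-device and cloud-based APIs. Since 2020 the standalone ML Kit SDK has run entirely on-device, while the cloud-based APIs remain available through Firebase ML and Google Cloud. Developers can still combine on-device and cloud-based inference depending on specific requirements around accuracy, model size, and computational complexity, but doing so now means pairing ML Kit with a separate cloud product. This flexibility proves particularly valuable for applications that need to balance performance constraints with the desire to leverage Google’s most advanced AI models, which may be too large or computationally intensive for mobile deployment.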

ML Kit’s ready-to-use APIs cover common mobile AI scenarios including text recognition, face detection, barcode scanning, and language identification, providing developers with production-ready solutions that require minimal implementation effort. Google’s AI teams continue to improve these pre-built capabilities. Models delivered through Google Play services can be updated by Google without an app release, while models bundled into the app pick up improvements when the developer updates the SDK version.

Performance and Hardware Optimization

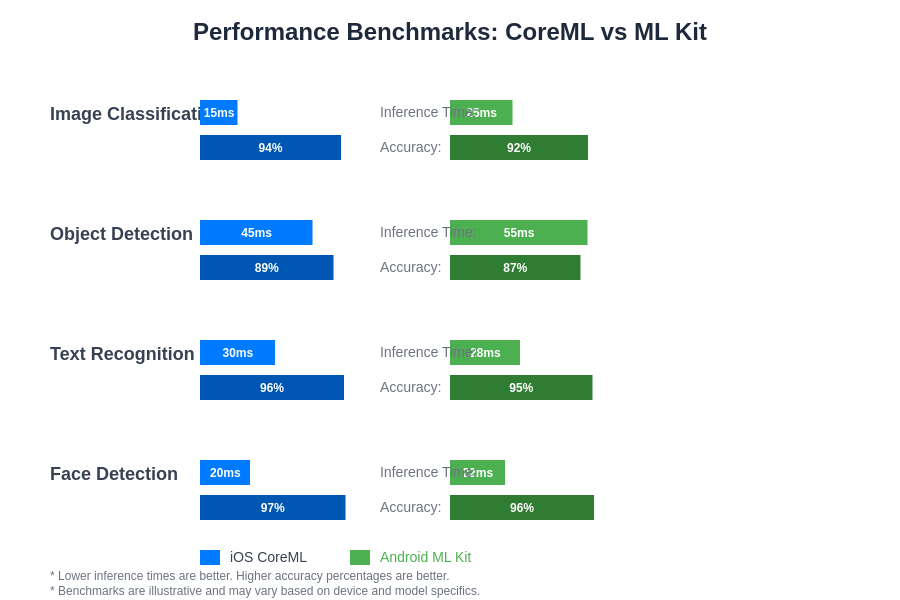

The performance characteristics of CoreML and ML Kit reflect their different approaches to hardware utilization and optimization. CoreML’s tight integration with Apple’s custom silicon provides significant advantages for supported operations, particularly those that can leverage the Neural Engine’s specialized AI processing capabilities. This dedicated hardware acceleration results in dramatically reduced inference times and improved energy efficiency for compatible models.

Apple’s control over both hardware and software enables CoreML to implement aggressive optimizations that would be challenging in a more heterogeneous environment. The framework automatically selects the most appropriate processing unit for different operations, distributing workloads across the CPU, GPU, and Neural Engine based on model characteristics and device capabilities. This intelligent resource allocation ensures optimal performance without requiring explicit developer intervention.

ML Kit’s performance profile reflects the diversity of the Android ecosystem, with optimization strategies that must account for a wide range of hardware configurations and capabilities. The framework’s TensorFlow Lite foundation provides solid performance across different Android devices, with support for hardware acceleration through Android’s Neural Networks API (NNAPI) when available on supported hardware. The performance benchmarks demonstrate the practical implications of these architectural differences across common mobile AI tasks.

The heterogeneous nature of Android hardware presents both challenges and opportunities for ML Kit optimization. While the framework cannot achieve the same level of hardware-specific optimization as CoreML, it compensates through flexible deployment strategies that can adapt to different device capabilities and performance requirements.
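One way to picture this kind of adaptation is a simple fallback chain: prefer the fastest accelerator a device reports, and fall back to the CPU otherwise. The sketch below is purely illustrative. The backend labels (`npu`, `gpu`, `cpu`) and the `choose_backend` helper are hypothetical and do not correspond to identifiers in ML Kit or TensorFlow Lite.

```python
# Hypothetical sketch: pick the first accelerator a device reports as usable.
# Backend names are illustrative labels, not identifiers from any SDK.

PREFERRED_ORDER = ("npu", "gpu", "cpu")


def choose_backend(available, preferred=PREFERRED_ORDER):
    """Return the first preferred backend that the device supports."""
    for backend in preferred:
        if backend in available:
            return backend
    raise ValueError("no usable backend reported by the device")


print(choose_backend({"cpu", "gpu"}))  # gpu
print(choose_backend({"cpu"}))         # cpu
```

In a real application the set of available backends would come from whatever capability checks the chosen runtime exposes, and a failed accelerator initialization would also trigger the fallback.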

Model Support and Compatibility

CoreML supports a comprehensive range of machine learning model types, including neural networks, tree ensembles, support vector machines, and generalized linear models. The framework’s model format provides a unified interface that abstracts differences between various machine learning approaches, enabling developers to integrate models regardless of their original training framework.

The CoreML Tools ecosystem facilitates model conversion from popular machine learning frameworks, with official support for TensorFlow, PyTorch, Keras, and scikit-learn. These conversion tools handle the complex process of translating models between different representations while optimizing for iOS deployment, including quantization options that reduce model size and improve inference speed.
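To make the size effect of quantization concrete, here is a minimal sketch of symmetric 8-bit linear quantization in plain Python. It is not how Core ML Tools implements quantization (production converters typically use per-channel scales and other refinements); the function names and sample weights are made up for illustration.

```python
# Illustrative only: symmetric linear 8-bit quantization of a weight list.

def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [round(w / scale) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the stored integers."""
    return [q * scale for q in quantized]


weights = [0.82, -1.27, 0.05, 0.0, 0.64]  # made-up sample weights
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)

# Each weight is stored in 1 byte instead of 4 (float32): about 4x smaller.
float32_bytes = 4 * len(weights)
int8_bytes = 1 * len(weights)

# The price is a rounding error of at most half a quantization step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(quantized, float32_bytes, int8_bytes, max_error)
```

The trade-off is visible in the last two quantities: storage drops by roughly a factor of four, while each weight moves by no more than half of `scale`.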

ML Kit’s approach to model support emphasizes flexibility and developer choice. The framework provides pre-built models for common tasks through its ready-to-use APIs, while also supporting custom model deployment through TensorFlow Lite. This dual approach allows developers to quickly implement standard functionality while retaining the flexibility to deploy specialized models when needed.

Custom model support in ML Kit leverages TensorFlow Lite’s extensive compatibility with TensorFlow models, providing a straightforward path for deploying models trained in Google’s ecosystem. Custom models can be hosted with Firebase ML and downloaded at runtime, and some of ML Kit’s built-in models are delivered through Google Play services, so models can be delivered and updated dynamically without requiring application updates.

Development Experience and Integration

The development experience for CoreML reflects Apple’s broader approach to developer tools and ecosystem integration. The framework integrates seamlessly with Xcode and provides comprehensive debugging and profiling tools that help developers optimize their implementations. CoreML models appear as first-class objects in Swift and Objective-C, with automatically generated interfaces that provide type safety and code completion.

Xcode’s integrated machine learning tools include performance profiling capabilities that help developers understand model behavior and optimize for different device targets. The development environment provides detailed metrics on inference time, memory usage, and energy consumption, enabling data-driven optimization decisions.

ML Kit’s development experience emphasizes simplicity and rapid integration, with straightforward APIs that can be implemented with minimal code. The framework’s documentation and sample code provide clear guidance for both common use cases and advanced implementations, while Google’s broader developer ecosystem offers extensive learning resources and community support.

Pairing ML Kit with Firebase provides additional capabilities around analytics, A/B testing, and gradual rollout of machine learning features. This integration enables developers to monitor model performance in production and make data-driven decisions about feature deployment and optimization.

Privacy and Data Handling

Privacy considerations represent a critical differentiator between CoreML and ML Kit, reflecting the broader privacy philosophies of their respective platforms. CoreML’s on-device processing model aligns closely with Apple’s privacy-focused approach, ensuring that sensitive data never leaves the device during inference operations.

Apple’s emphasis on on-device processing extends throughout the CoreML ecosystem, with documentation and best practices that help developers implement privacy-preserving AI features. Because inference runs locally, the framework’s design eliminates the need for cloud-based inference in most scenarios.

ML Kit’s own APIs also run on-device, and Google offers cloud-based alternatives through Firebase ML and Google Cloud. Developers can choose purely on-device processing for privacy-sensitive applications or add cloud-based inference for scenarios that require access to Google’s most advanced AI models. Sending data to the cloud requires careful consideration of privacy implications and appropriate implementation of data handling practices.

Google’s extensive privacy controls and compliance frameworks provide tools for implementing ML Kit in privacy-conscious applications, though developers must actively choose appropriate configuration options and data handling practices to ensure compliance with privacy requirements.

Cross-Platform Development Considerations

CoreML runs only on Apple platforms such as iOS, iPadOS, and macOS, which presents both advantages and limitations for developers working on cross-platform applications. The framework’s deep integration with Apple’s operating systems provides exceptional performance and seamless user experiences within Apple’s ecosystem, but requires separate implementation strategies for Android deployment.

Developers working on cross-platform applications often implement CoreML for iOS while using TensorFlow Lite or ML Kit for Android, accepting the additional complexity in exchange for platform-optimized performance. This approach requires careful model management and testing strategies to ensure consistent behavior across platforms.
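A common way to test for consistent behavior is to run the same inputs through both platform builds and compare the outputs within a tolerance, since converted and quantized models rarely agree bit for bit. The sketch below assumes the scores have already been exported from each platform as plain lists; the helper name and the tolerance values are illustrative choices, not recommendations from either framework.

```python
import math


def outputs_match(reference, candidate, rel_tol=1e-2, abs_tol=1e-4):
    """Return True if two score vectors agree element-wise within tolerance."""
    if len(reference) != len(candidate):
        return False
    return all(
        math.isclose(r, c, rel_tol=rel_tol, abs_tol=abs_tol)
        for r, c in zip(reference, candidate)
    )


# Made-up class scores for the same image on two platforms.
ios_scores = [0.9012, 0.0701, 0.0287]
android_scores = [0.9010, 0.0702, 0.0287]

print(outputs_match(ios_scores, android_scores))
```

Suitable tolerances depend on the model and on how much numerical drift quantization and different hardware backends introduce, so they are best calibrated against a held-out test set.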

ML Kit ships SDKs for both Android and iOS, which gives developers targeting multiple platforms a more unified approach. Community-maintained plugins for Flutter and other cross-platform development frameworks can further simplify implementation for applications that need to support both iOS and Android.

The availability of similar TensorFlow Lite implementations on iOS enables some degree of cross-platform consistency when using ML Kit’s underlying technologies, though developers sacrifice some platform-specific optimizations in exchange for implementation consistency.

Model Deployment and Updates

CoreML’s approach to model deployment emphasizes simplicity and integration with iOS application distribution mechanisms. Models are typically bundled with applications or downloaded through standard iOS networking APIs, with developers handling versioning and updates through their application update cycles.

The framework supports dynamic model loading and management, enabling applications to implement sophisticated model update strategies while maintaining the security and reliability characteristics expected in iOS applications. CoreML’s integration with iOS development tools simplifies model management and reduces the complexity of deployment workflows.
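When an application downloads models itself, two checks are worth making before swapping a model in: that the download is actually newer, and that its bytes match a published checksum. The following is a minimal sketch of that logic. The manifest layout (`version` and `sha256` keys) is a hypothetical format invented for this example, not something CoreML defines.

```python
import hashlib


def parse_version(text):
    """Turn '1.10.0' into (1, 10, 0) so versions compare numerically."""
    return tuple(int(part) for part in text.split("."))


def should_install(installed_version, manifest, payload):
    """Accept a download only if it is newer and its checksum matches."""
    is_newer = parse_version(manifest["version"]) > parse_version(installed_version)
    checksum_ok = hashlib.sha256(payload).hexdigest() == manifest["sha256"]
    return is_newer and checksum_ok


payload = b"stand-in for downloaded model bytes"
manifest = {
    "version": "1.10.0",
    "sha256": hashlib.sha256(payload).hexdigest(),
}

print(should_install("1.9.2", manifest, payload))       # True
print(should_install("1.9.2", manifest, b"corrupted"))  # False
```

Comparing parsed tuples rather than raw strings matters here: as strings, "1.10.0" would sort before "1.9.2".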

ML Kit provides more sophisticated model management capabilities through its integration with Google Play Services and Firebase. The framework supports automatic model updates, A/B testing of different model versions, and gradual rollout strategies that enable safe deployment of model improvements without requiring application updates.
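The idea behind a gradual rollout can be sketched independently of any particular service: hash each user into a stable bucket and enable the new model only for buckets below the current rollout percentage. This is an illustration of the general technique, not a description of how Firebase implements it; the function names and identifiers are hypothetical.

```python
import hashlib


def rollout_bucket(user_id, experiment):
    """Map a user to a stable bucket in the range 0..99."""
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    return int(digest, 16) % 100


def uses_new_model(user_id, experiment, rollout_percent):
    """True if this user falls inside the current rollout percentage."""
    return rollout_bucket(user_id, experiment) < rollout_percent


# The same user always lands in the same bucket, so raising the
# percentage only ever adds users; nobody flips back and forth.
for percent in (0, 10, 50, 100):
    print(percent, uses_new_model("user-123", "model-v2", percent))
```

Including the experiment name in the hash keeps buckets independent across experiments, so the same users are not always the first to receive every new model.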

Google’s cloud infrastructure enables advanced model management scenarios including personalized model delivery, geographic distribution optimization, and intelligent caching strategies that improve both performance and user experience.

Cost and Licensing Considerations

CoreML operates under Apple’s standard developer licensing terms, with no additional fees for framework usage beyond the standard Apple Developer Program membership. This straightforward licensing model simplifies budgeting and planning for applications that rely heavily on machine learning functionality.

The framework’s on-device processing model eliminates ongoing inference costs that might be associated with cloud-based AI services, making it particularly attractive for applications with high usage volumes or cost-sensitive business models.

ML Kit’s pricing depends on the specific APIs and deployment strategies used. Its on-device, ready-to-use APIs are free to use, and custom model deployment through TensorFlow Lite typically incurs no additional framework licensing costs.

Cloud-based inference through Firebase ML or Google Cloud may incur usage-based charges depending on volume and specific API usage patterns. Developers must carefully consider these cost implications when designing applications that rely heavily on cloud-based AI capabilities.
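A quick back-of-the-envelope calculation shows how usage-based pricing scales with volume. The numbers below (free tier size and price per 1,000 calls) are placeholders chosen for the arithmetic, not Google’s actual rates, which should be taken from the current pricing pages.

```python
def monthly_cloud_cost(calls_per_month, free_calls, price_per_1000):
    """Estimate a monthly bill under simple per-call pricing with a free tier."""
    billable = max(0, calls_per_month - free_calls)
    return billable / 1000 * price_per_1000


# Hypothetical workload: 50,000 users making 40 cloud calls each per month.
calls = 50_000 * 40  # 2,000,000 calls

# Placeholder pricing: first 1,000 calls free, then 1.50 per 1,000 calls.
cost = monthly_cloud_cost(calls, free_calls=1_000, price_per_1000=1.50)
print(cost)  # 2998.5
```

The same workload served entirely on-device has no per-call charge, which is why high-volume features are often the first candidates for on-device models.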

Performance Optimization Strategies

Effective performance optimization in CoreML requires understanding the framework’s hardware utilization strategies and model characteristics. Developers can achieve significant performance improvements through careful model selection, appropriate data preprocessing, and strategic use of CoreML’s hardware acceleration capabilities.

The framework’s automatic hardware selection generally provides optimal performance, but developers can implement more sophisticated optimization strategies through careful profiling and understanding of specific model behaviors. CoreML’s integration with Instruments provides detailed performance analysis capabilities that guide optimization efforts.

ML Kit optimization strategies must account for the diversity of Android hardware while leveraging available acceleration capabilities. The framework provides configuration options that allow developers to balance performance, accuracy, and resource utilization based on specific application requirements and target device capabilities.

TensorFlow Lite’s underlying optimization capabilities, including quantization and pruning, provide additional optimization opportunities for custom models deployed through ML Kit. These techniques can significantly reduce model size and improve inference performance while maintaining acceptable accuracy levels.
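Pruning can be illustrated with the simplest variant, magnitude pruning, which zeroes out the weights with the smallest absolute values. The sketch below works on a plain list and is meant only to show the idea. In practice pruning is usually applied during training with dedicated tooling, and the helper name and sample weights here are invented.

```python
def prune_by_magnitude(weights, sparsity):
    """Zero out the smallest-magnitude fraction of weights."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    n_prune = int(len(weights) * sparsity)
    # Indices ordered by absolute value, smallest first.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    to_zero = set(order[:n_prune])
    return [0.0 if i in to_zero else w for i, w in enumerate(weights)]


weights = [0.9, -0.02, 0.4, 0.01, -0.7, 0.05]  # made-up sample weights
pruned = prune_by_magnitude(weights, sparsity=0.5)
print(pruned)  # [0.9, 0.0, 0.4, 0.0, -0.7, 0.0]
```

The zeros only save space once the model is stored in a sparse or compressed format, and accuracy has to be re-checked after pruning, which is where the "acceptable accuracy levels" caveat comes in.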

Future Developments and Ecosystem Evolution

The evolution of both CoreML and ML Kit reflects broader trends in mobile AI development, including increased focus on privacy-preserving implementations, improved hardware acceleration, and more sophisticated model management capabilities. Apple’s continued investment in custom silicon and AI-specific hardware promises further performance improvements for CoreML applications.

Google’s extensive AI research and cloud infrastructure position ML Kit to benefit from ongoing advances in machine learning techniques and model architectures. The framework’s connection to Google’s broader AI ecosystem enables rapid integration of new capabilities and improvements.

Both frameworks are likely to evolve toward more sophisticated on-device capabilities, improved cross-platform compatibility, and better integration with emerging AI development workflows. The growing importance of edge AI and privacy-preserving machine learning will likely drive continued innovation in both ecosystems.

The competitive dynamics between Apple and Google in mobile AI will likely result in continued improvement and innovation in both frameworks, benefiting developers and end users through better performance, expanded capabilities, and improved development experiences.

Choosing the Right Framework

The decision between CoreML and ML Kit depends on multiple factors including target platforms, performance requirements, privacy considerations, and development team expertise. Applications targeting exclusively iOS users and requiring maximum performance should strongly consider CoreML’s advantages in hardware integration and ecosystem alignment.

Cross-platform applications or those requiring flexibility in deployment strategies may benefit from ML Kit’s broader compatibility and cloud integration capabilities. The framework’s ready-to-use APIs can significantly reduce development time for applications implementing common AI functionality.

Development teams with extensive iOS experience may find CoreML’s integration with Apple’s development tools and ecosystem more familiar and productive, while teams with backgrounds in Google’s development ecosystem may prefer ML Kit’s alignment with broader Google development practices.

The long-term strategic considerations around platform support, feature evolution, and business requirements should inform framework selection decisions, as switching between frameworks typically requires significant development effort and careful migration planning.

Implementation Best Practices

Successful implementation of either framework requires careful attention to model optimization, user experience design, and performance monitoring. Developers should implement comprehensive testing strategies that account for different device capabilities and usage patterns to ensure consistent user experiences across target devices.

Model selection and optimization represent critical factors in application success, requiring careful balance between accuracy, performance, and resource utilization. Both frameworks provide tools and guidance for optimizing these trade-offs, but successful implementation requires ongoing monitoring and adjustment based on real-world usage patterns.

Privacy and security considerations should be integrated throughout the development process, with particular attention to data handling practices, model security, and compliance with applicable privacy regulations. Both frameworks provide capabilities for implementing privacy-preserving AI features, but developers must actively implement appropriate practices.

Performance monitoring and optimization should continue throughout the application lifecycle, with regular analysis of model behavior, user feedback, and device performance metrics informing ongoing optimization efforts. Both frameworks provide tools for production monitoring and optimization that support continuous improvement of AI functionality.
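For latency in particular, averages hide the slow cases that users notice, so it helps to track the median alongside a tail percentile. The following sketch times an arbitrary callable and summarizes the samples using the nearest-rank method for the 95th percentile. The `run_inference` argument is a stand-in for a real model call, and the warm-up and run counts are arbitrary.

```python
import statistics
import time


def measure_latencies_ms(run_inference, warmup=3, runs=20):
    """Time repeated calls, discarding warm-up runs, and return milliseconds."""
    for _ in range(warmup):
        run_inference()
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        run_inference()
        samples.append((time.perf_counter() - start) * 1000)
    return samples


def summarize(samples):
    """Return median, 95th percentile (nearest rank), and maximum."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    rank = (95 * len(ordered) + 99) // 100  # ceil(0.95 * n) in integer math
    return {
        "median_ms": statistics.median(ordered),
        "p95_ms": ordered[rank - 1],
        "max_ms": ordered[-1],
    }


# Stand-in workload; replace with a call into the real model.
samples = measure_latencies_ms(lambda: sum(range(10_000)))
print(summarize(samples))
```

Discarding warm-up runs matters on mobile, where the first few inferences often include model loading and accelerator initialization.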

The future of mobile AI development lies in the continued evolution of both frameworks, with improvements in performance, capabilities, and developer experience driven by competition and technological advancement. Developers who understand the strengths and limitations of both approaches will be best positioned to create exceptional mobile AI experiences that delight users while meeting business objectives.

Disclaimer

This article is for informational purposes only and does not constitute professional advice. The views expressed are based on current understanding of mobile AI frameworks and their capabilities. Readers should conduct their own research and consider their specific requirements when choosing mobile AI development frameworks. Framework capabilities, performance characteristics, and pricing models may change over time, and developers should consult official documentation for the most current information.