The landscape of deep learning frameworks has evolved dramatically over the past decade, with three major contenders emerging as the dominant forces shaping how researchers and practitioners approach artificial intelligence development. JAX, PyTorch, and TensorFlow represent fundamentally different philosophies and approaches to deep learning, each offering unique advantages and addressing specific needs within the rapidly expanding AI ecosystem. Understanding the nuances, strengths, and limitations of these frameworks has become crucial for anyone serious about machine learning development, as the choice of framework can significantly impact everything from research productivity to deployment scalability and long-term project maintainability.

The competition between these frameworks drives continuous innovation, resulting in better tools, improved performance, and more accessible machine learning capabilities for developers worldwide.

TensorFlow: The Enterprise Veteran

TensorFlow, developed by Google and open-sourced in 2015, was one of the first frameworks to bring deep learning development at scale to a broad audience. Built with production deployment in mind from its inception, TensorFlow popularized a dataflow-graph programming model in which computations are expressed as graphs that can be optimized and executed across diverse hardware, from mobile devices to large distributed clusters. The framework’s architecture reflects Google’s internal needs for handling enormous datasets and deploying models at very large scale, making it particularly well suited to enterprise applications where reliability, performance, and production readiness are paramount.

TensorFlow’s evolution across its major versions shows a framework that has adapted to user feedback and changing requirements. TensorFlow 2.0 marked a significant philosophical shift by making eager execution the default, which made the framework more intuitive and Python-native while retaining graph optimization through the tf.function decorator. This hybrid approach lets developers work imperatively during development and experimentation, then opt into graph compilation for performance-critical code and deployment by wrapping functions in tf.function.
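A minimal sketch of the eager/graph split, assuming TensorFlow 2.x is installed; `dense_relu` is a hypothetical helper chosen only for illustration. Called directly, the function runs eagerly, op by op. Wrapped with `tf.function`, it is traced into a graph that can be inspected, and both paths produce the same values.

```python
import tensorflow as tf

def dense_relu(x, w, b):
    """Hypothetical dense layer: relu(x @ w + b)."""
    return tf.nn.relu(tf.matmul(x, w) + b)

# Wrapping in tf.function traces the Python code into a TensorFlow graph
# on the first call; later calls with compatible inputs reuse that graph.
dense_relu_graph = tf.function(dense_relu)

x = tf.ones((2, 3))
w = tf.ones((3, 4))
b = tf.zeros((4,))

eager_out = dense_relu(x, w, b)        # executed op by op (eager mode)
graph_out = dense_relu_graph(x, w, b)  # executed as a traced graph

# The concrete function exposes the traced graph for inspection.
graph = dense_relu_graph.get_concrete_function(x, w, b).graph
print([op.type for op in graph.get_operations()])
print(bool(tf.reduce_all(eager_out == graph_out)))  # True
```

The graph version is what TensorFlow optimizes and exports; the eager version is what you step through while developing.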

TensorFlow’s ecosystem extends far beyond the core framework, encompassing specialized tools like TensorFlow Serving for model deployment, TensorFlow Lite for mobile and edge deployment, TensorFlow.js for browser-based machine learning, and TensorFlow Extended (TFX) for complete machine learning pipeline management. This comprehensive ecosystem makes TensorFlow particularly attractive for organizations seeking end-to-end solutions that can scale from research prototypes to production systems serving millions of users.

PyTorch: The Research Darling

PyTorch emerged from Facebook AI Research (now Meta AI) in 2016 with a fundamentally different philosophy that prioritized developer experience, research flexibility, and intuitive design over production optimization. Built around the principle of dynamic computational graphs and eager execution, PyTorch feels more like native Python programming, making it immediately accessible to researchers and practitioners who value rapid experimentation and iterative development. The framework’s design reflects the needs of the research community, where model architectures frequently change, experiments require quick iterations, and debugging capabilities are crucial for understanding complex neural network behaviors.

The dynamic (define-by-run) nature of PyTorch’s graph construction gives considerable flexibility in model design and experimentation. Researchers can change network behavior on the fly, express complex control flow with standard Python constructs, and debug models with familiar Python tools such as pdb. This approach has made PyTorch the framework of choice for much cutting-edge research, and many influential papers in computer vision, natural language processing, and reinforcement learning have been implemented and validated with it.
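A minimal sketch of define-by-run control flow, assuming PyTorch is installed; `InputDependentDepth` is a hypothetical toy module. An ordinary Python `if` on the input values decides how many times the layer is applied, and autograd still computes gradients through whichever path actually ran.

```python
import torch

class InputDependentDepth(torch.nn.Module):
    """Hypothetical toy model whose depth depends on the input values."""

    def __init__(self, dim: int = 4):
        super().__init__()
        self.layer = torch.nn.Linear(dim, dim)
        self.last_steps = 0  # recorded only for inspection

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # Plain Python control flow: the autograd graph is rebuilt on
        # every call, so different inputs can produce different graphs.
        steps = 1 if x.sum() > 0 else 3
        self.last_steps = steps
        for _ in range(steps):
            x = torch.relu(self.layer(x))
        return x

torch.manual_seed(0)
model = InputDependentDepth()

x = torch.ones(2, 4, requires_grad=True)
model(x).sum().backward()               # positive input: one layer application
print(model.last_steps, x.grad.shape)   # 1 torch.Size([2, 4])

model(-torch.ones(2, 4))                # negative input: three applications
print(model.last_steps)                 # 3
```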

PyTorch’s ecosystem has grown rapidly to address production deployment needs that were initially considered secondary to research flexibility. PyTorch Lightning emerged as a high-level wrapper that provides structure and best practices for PyTorch projects, while TorchServe offers model serving capabilities comparable to TensorFlow Serving. The introduction of TorchScript enables the conversion of PyTorch models into serializable, optimizable formats suitable for production deployment, effectively bridging the gap between research flexibility and production requirements.
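A minimal sketch of the TorchScript path, assuming a PyTorch version that still ships `torch.jit`; `Scale` is a hypothetical module. `torch.jit.script` compiles the module, including its Python `if`, into a serialized program that can be reloaded without the original class definition. An in-memory buffer stands in for a file on disk.

```python
import io
import torch

class Scale(torch.nn.Module):
    """Hypothetical module: flattens inputs with more than 2 dims, then scales."""

    def __init__(self, factor: float):
        super().__init__()
        self.factor = factor

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # TorchScript compiles this Python control flow into the saved program.
        if x.dim() > 2:
            x = x.flatten(1)
        return x * self.factor

scripted = torch.jit.script(Scale(2.0))

buffer = io.BytesIO()               # stands in for a .pt file on disk
torch.jit.save(scripted, buffer)
buffer.seek(0)
loaded = torch.jit.load(buffer)     # no reference to the Scale class needed

out = loaded(torch.ones(2, 3, 4))
print(out.shape)  # torch.Size([2, 12])
```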

JAX: The Functional Programming Revolutionary

JAX represents the newest and perhaps most radical approach to deep learning framework design, emerging from Google Research with a focus on functional programming principles, automatic differentiation, and high-performance computing. JAX exposes a NumPy-compatible API (jax.numpy) and treats transformations as first-class citizens, letting developers apply automatic differentiation (grad), vectorization (vmap), just-in-time compilation (jit), and parallelization (pmap) to pure Python functions. Because these transformations compose freely, for example jit(vmap(grad(f))), the functional approach offers a high degree of composability and mathematical elegance while delivering strong performance through XLA (Accelerated Linear Algebra) compilation.
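A minimal sketch of composing these transformations, assuming JAX is installed; `loss` and the toy data are hypothetical. `grad` differentiates a single-example loss, `vmap` maps that gradient over a batch, and `jit` compiles the combined function with XLA. `pmap` is left out here because it needs multiple devices to be meaningful.

```python
import jax
import jax.numpy as jnp

def loss(w, x, y):
    """Squared error of a linear prediction for a single example."""
    return (jnp.dot(x, w) - y) ** 2

per_example_grad = jax.grad(loss)              # d loss / d w for one example
batched_grad = jax.vmap(per_example_grad,      # map over a batch of (x, y)
                        in_axes=(None, 0, 0))  # while sharing the same w
fast_batched_grad = jax.jit(batched_grad)      # compile the composition with XLA

w = jnp.array([1.0, 2.0])
xs = jnp.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
ys = jnp.zeros(3)

print(fast_batched_grad(w, xs, ys))
# [[2. 0.]
#  [0. 4.]
#  [6. 6.]]
```

Each row is 2(x·w − y)x for one example, computed without writing an explicit loop.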

The philosophical foundation of JAX rests on the view that machine learning computations are fundamentally mathematical transformations that benefit from functional programming paradigms. By requiring transformed functions to be pure (free of side effects) and arrays to be immutable, JAX enables aggressive optimizations and program transformations that are difficult to apply safely in imperative frameworks. Its automatic differentiation system is particularly sophisticated, supporting forward-mode and reverse-mode differentiation as well as higher-order derivatives, which makes it well suited to research areas that involve complex mathematical operations and advanced optimization techniques.
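A minimal sketch of those differentiation modes, assuming JAX is installed; `f` and `g` are hypothetical test functions. Nesting `grad` gives a second derivative (checked against its closed form), and `jacfwd` (forward mode) and `jacrev` (reverse mode) compute the same Jacobian by different routes.

```python
import jax
import jax.numpy as jnp

def f(x):
    return x ** 2 * jnp.sin(x)

# Higher-order derivatives come from composing grad with itself.
d2f = jax.grad(jax.grad(f))
x0 = 1.0
# Closed form: f''(x) = 2 sin x + 4x cos x - x^2 sin x
expected = 2 * jnp.sin(x0) + 4 * x0 * jnp.cos(x0) - x0 ** 2 * jnp.sin(x0)
print(jnp.allclose(d2f(x0), expected))  # True

def g(v):
    """Map from R^2 to R^2 used to compare the two AD modes."""
    return jnp.array([v[0] * v[1], jnp.sin(v[0])])

v0 = jnp.array([1.0, 2.0])
J_fwd = jax.jacfwd(g)(v0)  # forward mode: one JVP per input dimension
J_rev = jax.jacrev(g)(v0)  # reverse mode: one VJP per output dimension
print(jnp.allclose(J_fwd, J_rev))  # True
```

Forward mode tends to be cheaper when inputs are few and outputs many; reverse mode (the basis of backpropagation) when the reverse holds.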

JAX’s approach to hardware acceleration and parallelization marks a significant step in how deep learning frameworks handle distributed computation. Its transformation-based design lets code scale from a single device to large multi-device clusters, often with changes confined to how data and computation are placed on devices rather than to the model code itself. This capability has made JAX increasingly attractive for large-scale research projects and other workloads that demand extensive computational resources.
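A minimal sketch of that idea, assuming a JAX version that provides the `jax.sharding` module. It uses `jit` with sharding annotations rather than `pmap`; `predict` and the shapes are hypothetical. The batch is split across however many devices are visible, and the model function is unchanged whether that is one CPU or many accelerators.

```python
import jax
import jax.numpy as jnp
import numpy as np
from jax.sharding import Mesh, NamedSharding, PartitionSpec as P

n_dev = len(jax.devices())
# A 1-D device mesh whose single axis is named "data".
mesh = Mesh(np.array(jax.devices()), axis_names=("data",))
# Split the leading (batch) dimension of an array across the "data" axis.
batch_sharding = NamedSharding(mesh, P("data"))

@jax.jit
def predict(w, x):
    """Hypothetical model; contains no device-specific code."""
    return jnp.tanh(x @ w)

w = jnp.ones((4, 2))
x = jax.device_put(jnp.ones((8 * n_dev, 4)), batch_sharding)
out = predict(w, x)  # XLA partitions the computation to follow x's sharding
print(out.shape, out.sharding)
```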

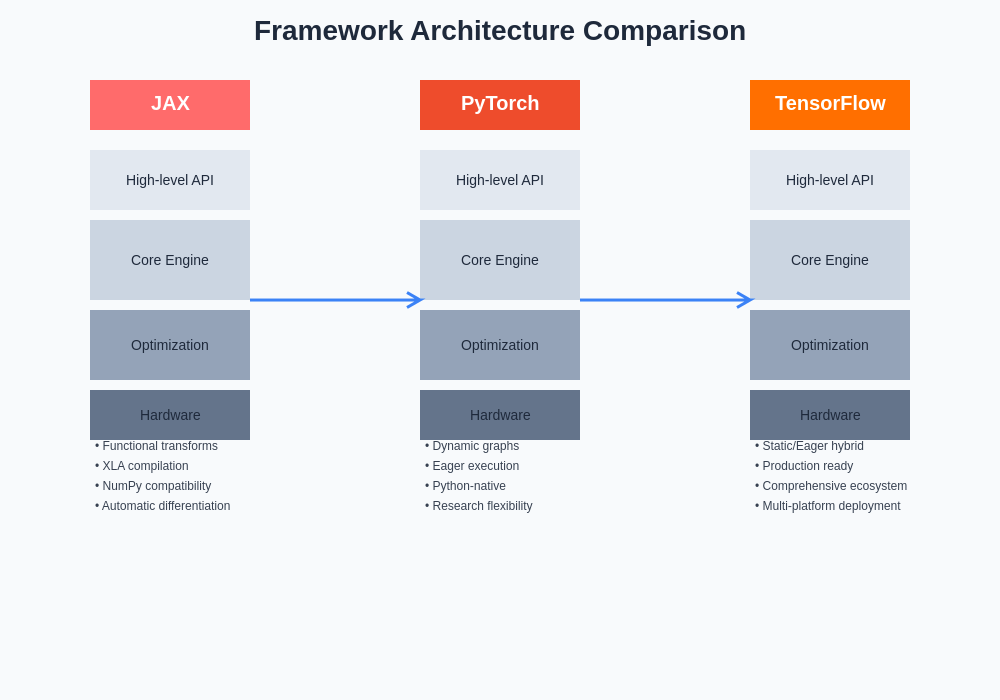

The architectural differences between these frameworks reflect their distinct design philosophies and target use cases. TensorFlow’s graph-based approach with eager execution capabilities provides a balance between flexibility and optimization, PyTorch’s dynamic graph construction prioritizes developer experience and research flexibility, while JAX’s functional transformation approach enables mathematical elegance and high-performance computing.

Performance and Scalability Considerations

Performance characteristics vary significantly across the three frameworks, with each excelling in different scenarios and use cases. TensorFlow’s mature graph optimization system and extensive hardware support make it particularly strong for large-scale training and inference workloads where consistent performance and resource utilization are critical. The framework’s ability to optimize computational graphs automatically, combined with its sophisticated distributed training capabilities, has made it the backbone of many production machine learning systems handling billions of predictions daily.
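A minimal sketch of TensorFlow’s distributed-training API, assuming TensorFlow 2.x with its bundled Keras; the model and the random data are hypothetical. `MirroredStrategy` replicates variables across the local GPUs (or uses a single replica on a CPU-only machine), so the same script runs unchanged on one device or several.

```python
import numpy as np
import tensorflow as tf

# Synchronous data parallelism across all local devices it finds.
strategy = tf.distribute.MirroredStrategy()
print("replicas:", strategy.num_replicas_in_sync)

with strategy.scope():
    # Variables created inside the scope are mirrored on every replica.
    model = tf.keras.Sequential([tf.keras.Input(shape=(3,)), tf.keras.layers.Dense(1)])
    model.compile(optimizer="sgd", loss="mse")

x = np.random.rand(64, 3).astype("float32")
y = x.sum(axis=1, keepdims=True)  # synthetic target for illustration

# batch_size is the global batch; each step splits it across replicas.
history = model.fit(x, y, batch_size=16, epochs=2, verbose=0)
print(len(history.history["loss"]))  # 2
```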

PyTorch’s performance profile has evolved considerably since its initial release, with significant improvements in compilation and optimization capabilities. The introduction of features like torch.compile and improvements to the JIT compiler have substantially reduced the performance gap with TensorFlow, particularly for research workloads where the flexibility benefits outweigh potential optimization limitations. PyTorch’s strength lies in its ability to maintain high performance while preserving the dynamic, flexible programming model that researchers value.
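A minimal sketch of `torch.compile`, assuming PyTorch 2.x; `mlp` is a hypothetical function. The call captures the Python function into a graph and hands it to a backend. The default `"inductor"` backend is the one that generates optimized kernels, but it needs a working compiler toolchain, so this sketch passes `backend="eager"` to run anywhere; dropping that argument is what yields real speedups.

```python
import torch

def mlp(x, w1, w2):
    """Hypothetical two-layer MLP written as a plain function."""
    return torch.nn.functional.gelu(x @ w1) @ w2

# The "eager" backend only captures and replays the graph (useful for
# debugging); the default "inductor" backend would generate fused kernels.
compiled_mlp = torch.compile(mlp, backend="eager")

torch.manual_seed(0)
x, w1, w2 = torch.randn(8, 16), torch.randn(16, 32), torch.randn(32, 4)
print(torch.allclose(mlp(x, w1, w2), compiled_mlp(x, w1, w2)))  # True
```

The function body stays ordinary, dynamic PyTorch code; compilation is opt-in at the call site.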

JAX’s performance characteristics are unique among the three frameworks, often delivering exceptional speed through aggressive compilation and optimization. The combination of XLA compilation and functional programming constraints allows JAX to apply whole-program optimizations that are harder to achieve in frameworks built around mutable state. However, this performance depends on adhering to functional programming principles and may require significant code restructuring for developers accustomed to imperative programming styles.
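A minimal sketch of the restructuring this implies, assuming JAX and NumPy are installed. Two common adjustments are shown: in-place array updates become functional `.at[...].set(...)` updates, and random number state is passed explicitly as a key instead of living in a hidden global generator.

```python
import jax
import jax.numpy as jnp
import numpy as np

# Imperative NumPy style: mutate the array in place.
a = np.zeros(3)
a[0] = 1.0

# JAX style: arrays are immutable, so an "update" returns a new array.
x = jnp.zeros(3)
try:
    x[0] = 1.0
except TypeError as err:
    print("in-place update rejected:", type(err).__name__)
y = x.at[0].set(1.0)  # functional update; x is left unchanged
print(x, y)           # [0. 0. 0.] [1. 0. 0.]

# Randomness is explicit: split the key and pass a fresh subkey along.
key = jax.random.PRNGKey(0)
key, subkey = jax.random.split(key)
noise = jax.random.normal(subkey, (3,))
```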

Ecosystem and Community Support

The ecosystem surrounding each framework reflects both its maturity and target audience. TensorFlow boasts the most comprehensive ecosystem, with extensive tooling for every aspect of the machine learning lifecycle from data preprocessing to model deployment and monitoring. The TensorFlow Hub provides a vast repository of pre-trained models, while TensorBoard offers sophisticated visualization and monitoring capabilities that have become industry standards for machine learning experiment tracking.
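A minimal sketch of how training metrics reach TensorBoard, assuming TensorFlow 2.x; the loss values are made up for illustration. `tf.summary` writes event files into a log directory, which the `tensorboard --logdir <dir>` command then visualizes.

```python
import os
import tempfile
import tensorflow as tf

logdir = tempfile.mkdtemp()  # in practice, a per-run directory such as logs/run1
writer = tf.summary.create_file_writer(logdir)

with writer.as_default():
    for step in range(3):
        # A made-up, decreasing loss curve logged as a scalar time series.
        tf.summary.scalar("loss", 1.0 / (step + 1), step=step)
writer.flush()

print(os.listdir(logdir))  # one events.out.tfevents.* file
```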

PyTorch’s ecosystem has grown rapidly and organically, driven by strong adoption in the research community. The Hugging Face Transformers library, built primarily on PyTorch, has become the de facto standard for natural language processing research and applications. PyTorch’s ecosystem strength lies in its research-oriented libraries and tools that prioritize flexibility and ease of use over comprehensive production features.

JAX’s ecosystem is the newest and most rapidly evolving among the three frameworks. Libraries like Flax, Haiku, and Optax provide high-level abstractions for neural network construction and training, while maintaining JAX’s functional programming principles. The JAX ecosystem reflects a focus on mathematical rigor and high-performance computing, with many libraries designed specifically for advanced research applications and scientific computing.

Development Experience and Learning Curve

The development experience varies dramatically across the three frameworks, with each requiring different mental models and programming approaches. TensorFlow’s evolution from static graphs to eager execution has created a more approachable development experience, but the framework still requires understanding concepts such as tf.function, AutoGraph (which converts Python control flow into graph operations), and the relationship between eager and graph execution modes. The breadth of TensorFlow’s API can be overwhelming for newcomers, but it provides extensive capabilities for experienced developers.
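A minimal sketch of the tracing behavior newcomers most often trip over, assuming TensorFlow 2.x; `sum_positive` is a hypothetical function. Python side effects run only while the function is traced, and AutoGraph rewrites the tensor-dependent loop and `if` into graph control flow.

```python
import tensorflow as tf

trace_log = []  # Python-side record of when tracing happens

@tf.function
def sum_positive(x):
    # Plain Python side effect: runs while tracing, not on every call.
    trace_log.append(tf.executing_eagerly())
    total = tf.constant(0.0)
    # AutoGraph rewrites this loop over a tensor into a tf.while_loop
    # and the tensor-dependent `if` into a tf.cond.
    for v in x:
        if v > 0:
            total += v
    return total

print(float(sum_positive(tf.constant([1.0, -2.0, 3.0]))))  # 4.0
print(float(sum_positive(tf.constant([5.0, -1.0, 0.5]))))  # 5.5
print(trace_log)  # [False]: traced once, in graph mode
```

A second call with the same input shape and dtype reuses the existing graph, which is why the log has a single entry.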

PyTorch offers perhaps the most intuitive development experience for developers familiar with NumPy and standard Python programming. The dynamic nature of PyTorch’s computational graphs means that debugging, introspection, and experimentation feel natural and familiar. The learning curve for PyTorch is generally considered gentler than TensorFlow, particularly for researchers and practitioners who prioritize rapid prototyping and experimental flexibility.
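A small illustration of that introspection, assuming PyTorch is installed; the model and the `save_output` helper are hypothetical. A forward hook captures an intermediate activation during a normal forward pass, and because execution is eager, the captured value is an ordinary tensor you can print, inspect, or examine in a debugger.

```python
import torch

model = torch.nn.Sequential(
    torch.nn.Linear(4, 8), torch.nn.ReLU(), torch.nn.Linear(8, 2)
)
activations = {}

def save_output(name):
    """Hypothetical helper: build a hook that stores a module's output."""
    def hook(module, inputs, output):
        activations[name] = output.detach()
    return hook

handle = model[1].register_forward_hook(save_output("relu"))
out = model(torch.randn(5, 4))
handle.remove()  # stop capturing once done

print(activations["relu"].shape)                # torch.Size([5, 8])
print(bool((activations["relu"] >= 0).all()))  # True
```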

JAX presents a unique learning curve that depends heavily on developers’ familiarity with functional programming concepts. While the NumPy-compatible API makes basic operations immediately familiar, fully leveraging JAX’s capabilities requires understanding functional programming principles, immutable data structures, and the implications of JAX’s transformation system. The learning investment is significant but can lead to more mathematically elegant and performant code.
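A minimal sketch of two implications of the transformation system, assuming JAX is installed; `scaled_relu` is hypothetical. Under `jit`, the Python body runs only while tracing, and branching on array values has to go through array operations such as `jnp.where` instead of a Python `if`.

```python
import jax
import jax.numpy as jnp

trace_count = []  # records Python-level executions of the function body

@jax.jit
def scaled_relu(x, scale):
    # Side effects like this run only while JAX traces the function,
    # not on later calls that reuse the compiled version.
    trace_count.append(1)
    # A Python `if x > 0:` would fail here, because x is an abstract tracer
    # during tracing; jnp.where expresses the branch as an array op.
    return jnp.where(x > 0, x * scale, 0.0)

print(scaled_relu(jnp.array([-1.0, 2.0]), 3.0))  # [0. 6.]
print(scaled_relu(jnp.array([4.0, -5.0]), 3.0))  # [12.  0.]
print(len(trace_count))  # 1: same shapes and dtypes, so no retrace
```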

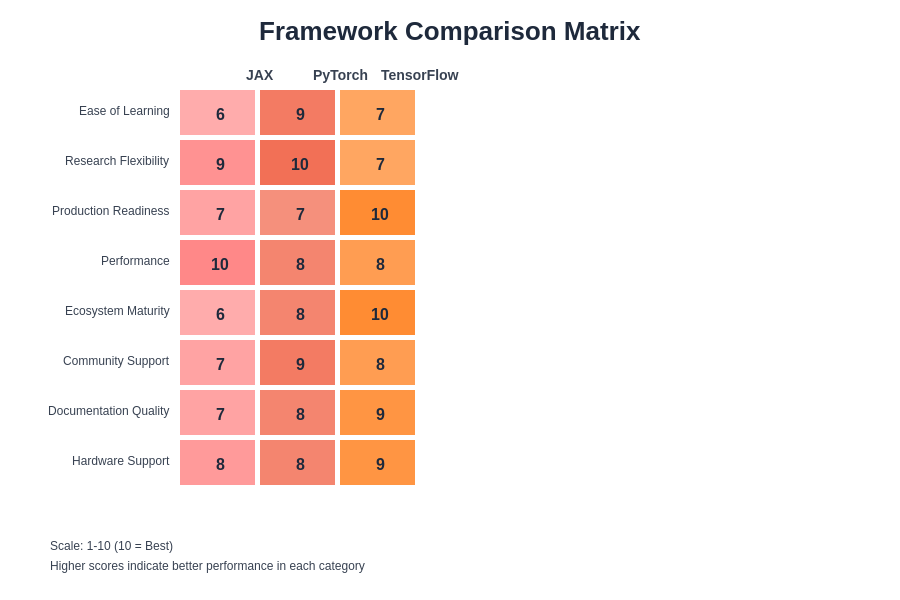

This comprehensive comparison matrix illustrates the relative strengths and characteristics of each framework across multiple dimensions including performance, ease of use, ecosystem maturity, research suitability, and production readiness. The visualization helps clarify the trade-offs involved in framework selection.

Use Case Specialization

Each framework has developed particular strengths that make it more suitable for specific use cases and application domains. TensorFlow’s comprehensive ecosystem and production focus make it the preferred choice for large-scale enterprise deployments, mobile applications, and scenarios where model serving performance and reliability are critical. The framework’s extensive support for different deployment targets and its mature tooling for model optimization and conversion make it particularly valuable for organizations with diverse deployment requirements.

PyTorch’s research-oriented design has made it the dominant framework in academic settings and research laboratories. Its flexibility and ease of experimentation have enabled rapid prototyping and implementation of cutting-edge research ideas, from transformer architectures to generative adversarial networks. The framework’s strength in computer vision and natural language processing research has created a virtuous cycle where new research implementations attract more researchers, further strengthening the ecosystem.

JAX’s unique capabilities have found particular traction in scientific computing applications, advanced optimization research, and scenarios requiring high-performance numerical computations. The framework’s functional approach and sophisticated automatic differentiation capabilities make it especially valuable for research involving complex mathematical operations, meta-learning algorithms, and applications where mathematical rigor and performance are equally important.

Production Deployment Considerations

The production deployment story varies significantly across the three frameworks, with each offering different advantages and challenges. TensorFlow’s production deployment ecosystem is the most mature and comprehensive, with TensorFlow Serving providing robust, scalable model serving capabilities that have been battle-tested in high-volume production environments. TensorFlow Lite enables efficient deployment on mobile and edge devices, while TensorFlow.js allows for browser-based inference, giving TensorFlow an unusually broad range of deployment targets.
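A minimal sketch of the SavedModel export that TensorFlow Serving consumes, assuming TensorFlow 2.x; `Doubler` is a hypothetical model. The `input_signature` fixes the serving interface, and the numbered subdirectory follows TensorFlow Serving’s `<model_base_path>/<version>/` layout.

```python
import os
import tempfile
import tensorflow as tf

class Doubler(tf.Module):
    """Hypothetical model that doubles a batch of scalars."""

    @tf.function(input_signature=[tf.TensorSpec(shape=[None], dtype=tf.float32)])
    def __call__(self, x):
        return 2.0 * x

module = Doubler()
# TensorFlow Serving looks for numbered version directories, hence "1".
export_dir = os.path.join(tempfile.mkdtemp(), "doubler", "1")
tf.saved_model.save(module, export_dir,
                    signatures=module.__call__.get_concrete_function())

reloaded = tf.saved_model.load(export_dir)
print(reloaded(tf.constant([1.0, 2.5])).numpy())  # [2. 5.]
print(list(reloaded.signatures.keys()))           # ['serving_default']
```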

PyTorch’s production deployment capabilities have improved substantially with the introduction of TorchServe and improvements to TorchScript. While not as comprehensive as TensorFlow’s deployment ecosystem, PyTorch now offers viable paths for production deployment that maintain much of the framework’s development flexibility. The growing ecosystem of PyTorch-focused deployment tools and services reflects the framework’s expanding role beyond pure research applications.

JAX’s approach to production deployment is still evolving. Its compilation-based design can yield excellent inference performance, but JAX does not ship a dedicated serving system comparable to TensorFlow Serving, so teams need to plan how compiled models will be exported and served, for example by converting them to a TensorFlow SavedModel with the jax2tf converter. The functional programming requirements may also call for careful architectural decisions when designing production systems.
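A minimal sketch of JAX’s ahead-of-time compilation API, which shows what a deployment pipeline starts from, assuming a recent JAX release; `predict` and the shapes are hypothetical. Lowering a jitted function for fixed input shapes produces a StableHLO module, which is then compiled for the current backend. A real serving setup would still need an export and serving layer on top of this.

```python
import jax
import jax.numpy as jnp

def predict(w, x):
    """Hypothetical logistic-regression scorer."""
    return jax.nn.sigmoid(x @ w)

# Describe inputs by shape and dtype only; no real data is needed to compile.
w_spec = jax.ShapeDtypeStruct((3, 1), jnp.float32)
x_spec = jax.ShapeDtypeStruct((8, 3), jnp.float32)

lowered = jax.jit(predict).lower(w_spec, x_spec)
print(lowered.as_text()[:200])  # beginning of the StableHLO module text

compiled = lowered.compile()    # XLA executable for the current backend
scores = compiled(jnp.zeros((3, 1)), jnp.ones((8, 3)))
print(scores.shape)  # (8, 1)
```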

Future Trajectories and Evolution

The evolution of these frameworks continues to be driven by both technological advancement and community needs. TensorFlow’s development focuses on improving ease of use while maintaining its production and enterprise strengths. The ongoing work on TensorFlow’s unified programming model aims to further simplify the development experience while preserving the performance and deployment advantages that have made it successful in production environments.

PyTorch’s trajectory emphasizes bridging the gap between research flexibility and production requirements without compromising the developer experience that has made it popular in research settings. The framework’s evolution includes improved compilation capabilities, better performance optimization, and enhanced production deployment tools while maintaining the dynamic, intuitive programming model that defines its identity.

JAX’s future development focuses on expanding its ecosystem while maintaining the mathematical rigor and performance advantages that distinguish it from other frameworks. The continued development of high-level libraries and tools aims to make JAX’s powerful capabilities more accessible to a broader range of developers while preserving the functional programming principles that enable its unique optimization capabilities.

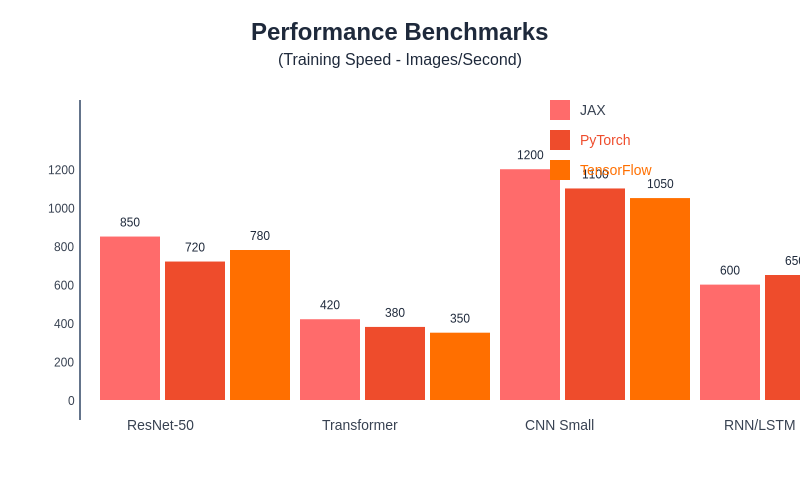

Performance benchmarking across different model types and scales reveals the relative strengths of each framework. While specific performance characteristics vary depending on model architecture, dataset size, and hardware configuration, these benchmarks provide insights into the performance trade-offs inherent in each framework’s design decisions.

Making the Right Choice

Selecting the appropriate framework requires careful consideration of multiple factors including project requirements, team expertise, deployment targets, and long-term maintenance considerations. Organizations with strong production requirements, diverse deployment targets, and established MLOps processes may find TensorFlow’s comprehensive ecosystem and proven scalability most valuable. The framework’s maturity and extensive tooling make it particularly attractive for enterprise applications where reliability and performance consistency are paramount.

Research-focused projects, rapid prototyping scenarios, and teams that prioritize development velocity and experimental flexibility may find PyTorch’s dynamic approach and research-oriented ecosystem more suitable. The framework’s intuitive programming model and strong research community support make it an excellent choice for projects where model architecture innovation and experimental flexibility are more important than production optimization.

Projects requiring high-performance numerical computing, advanced mathematical operations, or scenarios where functional programming principles align with project goals may benefit from JAX’s unique capabilities. While the learning curve is steeper, the framework’s potential for optimization and mathematical elegance can provide significant advantages for suitable applications.

The decision ultimately depends on weighing the specific requirements of your project against the strengths and limitations of each framework. Consider factors such as team expertise, deployment requirements, performance needs, ecosystem dependencies, and long-term maintenance when making this critical choice. The landscape continues to evolve rapidly, with each framework learning from the others and incorporating successful features and approaches.

Understanding these frameworks as part of a broader ecosystem rather than as mutually exclusive choices can also provide valuable perspective. Many organizations successfully use multiple frameworks for different purposes, leveraging TensorFlow for production deployment, PyTorch for research and prototyping, and JAX for specialized high-performance computing tasks. This multi-framework approach allows teams to leverage the strengths of each tool while minimizing the impact of their respective limitations.

The deep learning framework wars represent more than just technical competition; they reflect different philosophies about how artificial intelligence development should be approached and structured. Whether prioritizing production readiness, research flexibility, or mathematical rigor, the choice of framework shapes not just the technical implementation but also the development culture and long-term trajectory of machine learning projects.

Disclaimer

This article provides a comparative analysis based on publicly available information and general industry observations. Framework performance and capabilities can vary significantly based on specific use cases, implementation details, and hardware configurations. Readers should conduct their own evaluation and testing based on their specific requirements and constraints. The deep learning framework landscape continues to evolve rapidly, and the relative advantages of different frameworks may change with new releases and updates.