The convergence of Kotlin programming language capabilities with artificial intelligence technologies has created unprecedented opportunities for Android developers to build sophisticated machine learning applications that operate directly on mobile devices. This powerful combination enables developers to create intelligent mobile experiences that process data locally, maintain user privacy, and deliver real-time AI-powered features without requiring constant internet connectivity or cloud-based processing dependencies.

The integration of machine learning capabilities into Android applications represents a fundamental shift in mobile development paradigms, where devices become autonomous intelligent agents capable of understanding user behavior, processing complex data, and making informed decisions in real-time.

Understanding Mobile AI Architecture with Kotlin

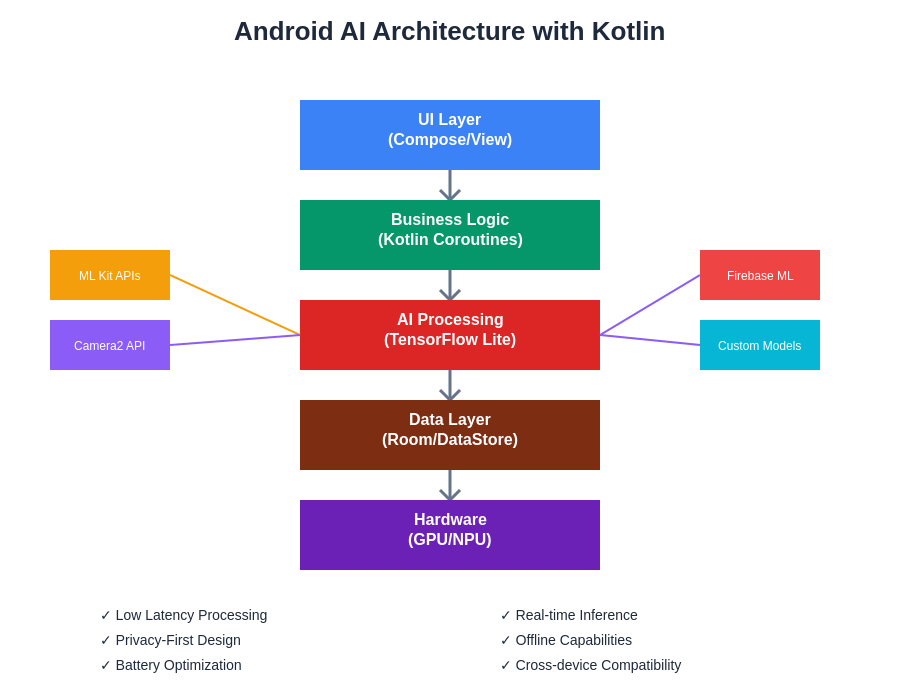

The foundation of successful Android AI development lies in understanding how Kotlin’s expressive syntax and powerful language features align with machine learning frameworks and mobile computing constraints. Kotlin’s null safety, coroutines for asynchronous processing, and extension functions provide an ideal programming environment for handling the complex data flows and computational requirements inherent in mobile AI applications.

Modern Android AI applications built with Kotlin leverage a multi-layered architecture that separates data preprocessing, model inference, and result interpretation into distinct, manageable components. This architectural approach ensures that machine learning operations remain efficient and responsive while maintaining clean code organization that facilitates testing, debugging, and future enhancements. The language’s interoperability with Java enables seamless integration with existing Android libraries and frameworks while providing access to the full ecosystem of Java-based machine learning tools.
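The separation described above can be sketched in plain Kotlin. This is a minimal illustration, not a prescribed design: the names `Preprocessor`, `Model`, `ResultInterpreter`, and `InferencePipeline` are hypothetical, and the "model" is a stand-in lambda rather than a real on-device runtime.

```kotlin
// Hypothetical three-stage pipeline: preprocess -> infer -> interpret.
// All names here are illustrative, not part of any Android or ML library.

/** Stage 1: turns raw input into the numeric features a model expects. */
fun interface Preprocessor<I> {
    fun toFeatures(input: I): FloatArray
}

/** Stage 2: runs the model. A real app would delegate to an on-device runtime. */
fun interface Model {
    fun infer(features: FloatArray): FloatArray
}

/** Stage 3: maps raw scores to a domain-level result. */
fun interface ResultInterpreter<O> {
    fun interpret(scores: FloatArray): O
}

class InferencePipeline<I, O>(
    private val preprocessor: Preprocessor<I>,
    private val model: Model,
    private val interpreter: ResultInterpreter<O>,
) {
    fun run(input: I): O =
        interpreter.interpret(model.infer(preprocessor.toFeatures(input)))
}

fun main() {
    val labels = listOf("short", "long")
    val pipeline = InferencePipeline<String, String>(
        preprocessor = { text -> floatArrayOf(text.length.toFloat()) },
        // Stand-in "model": scores a text as short or long by its length.
        model = { f -> floatArrayOf(10f - f[0], f[0] - 10f) },
        interpreter = { scores -> labels[scores.indices.maxByOrNull { scores[it] } ?: 0] },
    )
    println(pipeline.run("hi"))                     // prints "short"
    println(pipeline.run("a much longer sentence")) // prints "long"
}
```

Because each stage sits behind a small interface, a test can swap in a fake model without touching the preprocessing or interpretation code.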

Machine learning inference is computationally heavy, so it should not run on the user interface thread. Kotlin’s coroutines are a good fit here: a suspending function can move the computation to a background dispatcher and resume on the calling thread with the result, without blocking the interface or resorting to nested callbacks. This combination helps Android applications remain responsive and provide smooth user experiences even when performing complex machine learning operations such as image recognition, natural language processing, or predictive analytics.
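As a rough sketch of that idea, the following moves a blocking computation onto a background dispatcher. It assumes the `kotlinx-coroutines-core` library is on the classpath; `classifyBlocking` is a hypothetical stand-in for a real model call.

```kotlin
import kotlinx.coroutines.Dispatchers
import kotlinx.coroutines.runBlocking
import kotlinx.coroutines.withContext

// Hypothetical stand-in for a CPU-heavy model call: returns the index of the largest value.
fun classifyBlocking(pixels: FloatArray): Int {
    var best = 0
    for (i in pixels.indices) if (pixels[i] > pixels[best]) best = i
    return best
}

// Suspends the caller while the work runs on a background thread pool,
// so a UI thread calling this is not blocked.
suspend fun classify(pixels: FloatArray): Int =
    withContext(Dispatchers.Default) { classifyBlocking(pixels) }

fun main() = runBlocking {
    val label = classify(floatArrayOf(0.1f, 0.7f, 0.2f))
    println("predicted class index: $label") // prints "predicted class index: 1"
}
```

In an Android app the call would typically be launched from a lifecycle-aware scope rather than `runBlocking`, which is used here only to make the sketch runnable from `main`.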

The layered architecture approach demonstrates how Kotlin integrates seamlessly with Android’s AI ecosystem, providing clean separation between user interface components, business logic processing, machine learning inference operations, and data management layers. This architectural pattern ensures maintainable code while maximizing performance across diverse Android hardware configurations.

TensorFlow Lite Integration with Kotlin

TensorFlow Lite represents the cornerstone of on-device machine learning for Android applications, and Kotlin provides exceptional support for integrating these capabilities into mobile applications. The framework’s optimization for mobile deployment ensures that pre-trained models can run efficiently on Android devices with limited computational resources and battery life constraints.

Kotlin’s type safety and expressive syntax significantly simplify the process of loading TensorFlow Lite models, preparing input data, and interpreting inference results. The language’s ability to handle nullable types gracefully reduces the likelihood of runtime errors that could crash applications during critical AI operations, while its functional programming capabilities enable elegant data transformation pipelines that prepare raw input for model consumption.

The integration process involves creating Kotlin classes that encapsulate model loading, input preprocessing, inference execution, and output postprocessing operations. These wrapper classes abstract the complexity of TensorFlow Lite operations while providing clean, type-safe interfaces that other application components can utilize without requiring detailed knowledge of the underlying machine learning implementation details.
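A wrapper of this kind might look like the sketch below. To keep it runnable without the TensorFlow Lite dependency, the runtime is hidden behind a hypothetical `TensorRunner` interface; in a real application its implementation would hold the TensorFlow Lite interpreter and forward to it. `ImageClassifier` and `Classification` are likewise illustrative names.

```kotlin
import java.io.Closeable
import kotlin.math.exp

/** Hypothetical seam over the runtime; a real implementation would wrap the interpreter. */
interface TensorRunner : Closeable {
    fun run(input: FloatArray): FloatArray
}

data class Classification(val label: String, val confidence: Float)

class ImageClassifier(
    private val runner: TensorRunner,
    private val labels: List<String>,
) : Closeable {

    /** Scales 0..255 pixel values to 0..1, runs the model, and returns the top-k labels. */
    fun classify(pixels: IntArray, topK: Int = 3): List<Classification> {
        val input = FloatArray(pixels.size) { pixels[it].coerceIn(0, 255) / 255f }
        val probs = softmax(runner.run(input))
        require(probs.size == labels.size) { "model output does not match label count" }
        return labels.zip(probs.toList())
            .map { (label, p) -> Classification(label, p) }
            .sortedByDescending { it.confidence }
            .take(topK)
    }

    /** Releases the underlying runtime resources. */
    override fun close() = runner.close()

    /** Numerically stable softmax: subtracts the max logit before exponentiating. */
    private fun softmax(logits: FloatArray): FloatArray {
        val max = logits.maxOrNull() ?: return logits
        val exps = logits.map { exp(it - max) }
        val sum = exps.sum()
        return FloatArray(logits.size) { exps[it] / sum }
    }
}
```

Callers see only `classify` and a list of typed results. Since the class is `Closeable`, it can be used with Kotlin’s `use { }` so the model is released even if classification throws.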

ML Kit and Firebase Integration

Google’s ML Kit provides a comprehensive suite of ready-to-use machine learning APIs that integrate seamlessly with Kotlin-based Android applications. These pre-trained models offer capabilities ranging from text recognition and face detection to language translation and pose estimation, enabling developers to incorporate sophisticated AI features without requiring extensive machine learning expertise or custom model development.

The combination of ML Kit’s pre-built capabilities with Kotlin’s expressive programming model creates powerful opportunities for rapid prototyping and deployment of AI-powered mobile features.

ML Kit was originally released as part of Firebase, and the two have since been separated: ML Kit’s APIs run on the device, while Firebase ML covers the cloud-hosted side, such as cloud-based recognition and the hosting and delivery of custom models. Using them together gives a hybrid approach in which computationally intensive operations can run in the cloud while simpler tasks stay local. This can improve performance and battery life while allowing applications to handle limited or intermittent internet connectivity gracefully. Kotlin’s coroutines provide excellent support for managing these asynchronous cloud operations while maintaining responsive user interfaces.

Real-Time Computer Vision Applications

Computer vision represents one of the most compelling applications of mobile AI technology, and Kotlin provides exceptional support for building real-time image and video processing applications on Android. The combination of camera API integration, efficient image processing pipelines, and machine learning inference creates opportunities for applications ranging from augmented reality experiences to automated quality control systems.

Kotlin’s extension functions enable developers to create elegant APIs for common computer vision operations such as image cropping, rotation, format conversion, and enhancement. These extensions abstract the complexity of Android’s graphics APIs while providing intuitive interfaces that simplify the development of computer vision features. The language’s ability to seamlessly integrate with existing Java libraries ensures compatibility with popular image processing frameworks and computer vision utilities.
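To illustrate the extension-function style, here is a small sketch over a hypothetical `GrayImage` class, used instead of Android’s bitmap type so the example runs on any JVM. The operations and their names are assumptions made for illustration.

```kotlin
/** Minimal row-major grayscale image; a stand-in for a platform bitmap type. */
class GrayImage(val width: Int, val height: Int, val pixels: IntArray) {
    init { require(pixels.size == width * height) { "pixel count must equal width * height" } }
    operator fun get(x: Int, y: Int): Int = pixels[y * width + x]
}

/** Returns the w-by-h sub-image whose top-left corner is (left, top). */
fun GrayImage.crop(left: Int, top: Int, w: Int, h: Int): GrayImage {
    require(w > 0 && h > 0) { "crop size must be positive" }
    require(left >= 0 && top >= 0 && left + w <= width && top + h <= height) { "crop out of bounds" }
    return GrayImage(w, h, IntArray(w * h) { i -> this[left + i % w, top + i / w] })
}

/** Rotates the image 90 degrees clockwise; the result is height wide and width tall. */
fun GrayImage.rotate90(): GrayImage =
    GrayImage(height, width, IntArray(width * height) { i ->
        val x = i % height // column in the rotated image
        val y = i / height // row in the rotated image
        this[y, height - 1 - x]
    })

/** Converts 0..255 intensities to 0..1 floats, a common model input format. */
fun GrayImage.toNormalizedFloats(): FloatArray =
    FloatArray(pixels.size) { pixels[it] / 255f }
```

With these in place a preprocessing step reads as a chain, for example `frame.crop(0, 0, 224, 224).rotate90().toNormalizedFloats()`, where `frame` and the 224 by 224 size are placeholders.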

Real-time performance requirements in computer vision applications demand careful attention to memory management and computational efficiency. Kotlin’s support for efficient data structures, combined with proper utilization of Android’s graphics hardware acceleration capabilities, enables the development of responsive computer vision applications that can process camera feeds at high frame rates without compromising system stability or battery life.

Natural Language Processing in Mobile Apps

The implementation of natural language processing capabilities in Android applications has been significantly enhanced by Kotlin’s string manipulation capabilities and functional programming features. Modern mobile NLP applications can perform tasks ranging from sentiment analysis and text classification to language translation and voice recognition, all while operating entirely on the device to protect user privacy and ensure consistent performance.

Kotlin’s robust string processing capabilities, combined with its support for regular expressions and text parsing operations, provide an excellent foundation for implementing preprocessing pipelines that prepare text data for machine learning model consumption. The language’s null safety features are particularly valuable when handling user-generated text content, which often contains unexpected formatting, special characters, or empty values that could cause application crashes if not handled properly.
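A preprocessing step along these lines could be sketched as follows. The vocabulary, the special `<pad>` and `<unk>` tokens, and the fixed length of 6 are all made up for the example; a real vocabulary ships with the trained model. The regular expression keeps only ASCII letters and digits, which is a simplification that would not suit non-English text.

```kotlin
// Hypothetical vocabulary; a real one is produced alongside the trained model.
val vocabulary: Map<String, Int> =
    mapOf("<pad>" to 0, "<unk>" to 1, "great" to 2, "app" to 3, "crashes" to 4)

val nonWord = Regex("[^a-z0-9\\s]")
val whitespace = Regex("\\s+")

/** Lowercases, strips punctuation, and splits on whitespace. Null or blank input yields no tokens. */
fun tokenize(text: String?): List<String> =
    text?.lowercase()
        ?.replace(nonWord, " ")
        ?.trim()
        ?.takeIf { it.isNotEmpty() }
        ?.split(whitespace)
        ?: emptyList()

/** Maps tokens to vocabulary ids, then pads or truncates to a fixed length. */
fun encode(text: String?, maxLen: Int = 6): IntArray {
    val ids = tokenize(text).map { vocabulary[it] ?: vocabulary.getValue("<unk>") }
    return IntArray(maxLen) { i -> ids.getOrElse(i) { vocabulary.getValue("<pad>") } }
}
```

Here `encode("Great app!!")` produces `[2, 3, 0, 0, 0, 0]`, and `encode(null)` produces an all-padding array instead of throwing, which is the null-safety benefit the paragraph describes.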

The integration of speech recognition and text-to-speech capabilities with natural language processing models creates opportunities for building conversational AI interfaces that can understand user intent and provide intelligent responses. Kotlin’s coroutines excel at managing the asynchronous operations required for these multi-step processing pipelines while maintaining responsive user interfaces that provide real-time feedback during processing operations.

Building Recommendation Systems

Mobile recommendation systems represent a sophisticated application of machine learning technology that requires careful consideration of user privacy, data efficiency, and personalization capabilities. Kotlin’s object-oriented programming features provide excellent support for implementing recommendation algorithms that can learn from user behavior patterns while maintaining clean, maintainable code architectures.

The development of effective recommendation systems involves implementing collaborative filtering algorithms, content-based recommendation engines, or hybrid approaches that combine multiple recommendation strategies. Kotlin’s collection processing capabilities and functional programming features simplify the implementation of these algorithms while ensuring that recommendation calculations remain efficient and responsive on mobile hardware.
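As one concrete instance, a content-based recommender can rank items by cosine similarity between a user profile vector and item feature vectors. The sketch below assumes such vectors already exist; how they are learned is outside its scope, and the function names are illustrative.

```kotlin
import kotlin.math.sqrt

/** Cosine similarity between two equal-length vectors; 0 when either is all zeros. */
fun cosine(a: FloatArray, b: FloatArray): Float {
    require(a.size == b.size) { "vectors must have the same length" }
    var dot = 0f
    var na = 0f
    var nb = 0f
    for (i in a.indices) {
        dot += a[i] * b[i]
        na += a[i] * a[i]
        nb += b[i] * b[i]
    }
    return if (na == 0f || nb == 0f) 0f else dot / (sqrt(na) * sqrt(nb))
}

/** Ranks items the user has not seen by similarity to the user's profile vector. */
fun recommend(
    userProfile: FloatArray,
    items: Map<String, FloatArray>,
    alreadySeen: Set<String>,
    limit: Int = 3,
): List<String> =
    items.asSequence()
        .filter { it.key !in alreadySeen }
        .map { it.key to cosine(userProfile, it.value) }
        .sortedByDescending { it.second }
        .take(limit)
        .map { it.first }
        .toList()
```

Using a sequence keeps the filter, score, and sort steps lazy until `toList()`, which avoids building intermediate lists for large catalogs.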

The rapid evolution of recommendation system methodologies requires continuous learning and adaptation to maintain competitive advantage in mobile AI applications.

User behavior tracking and preference learning must be implemented with careful attention to privacy considerations and data minimization principles. Kotlin’s type system can help here, for example by wrapping sensitive values in dedicated types that are harder to log or serialize by accident, while its structured concurrency support enables efficient processing of user interaction data without impacting application performance or responsiveness.

Performance Optimization Strategies

Mobile AI applications face unique performance challenges that require specialized optimization strategies to ensure smooth operation across diverse Android hardware configurations. Kotlin’s performance characteristics, combined with careful attention to memory management and computational efficiency, enable the development of AI applications that perform well even on older or lower-specification devices.

Memory management represents a critical aspect of mobile AI optimization, particularly when working with large machine learning models or processing high-resolution images and video content. Kotlin on Android runs on the same managed runtime as Java, so the usual techniques apply: reuse input and output buffers across inferences instead of allocating them for every frame, release models and large bitmaps promptly once they are no longer needed, and avoid unnecessary object creation in hot loops. These habits reduce garbage collection pauses and help AI applications operate without causing memory pressure or system instability.

Model quantization and pruning techniques can significantly reduce the computational requirements of machine learning inference operations while maintaining acceptable accuracy levels for most mobile applications. These optimizations are applied when the model is trained or converted, typically with TensorFlow’s model optimization tools rather than in Kotlin itself; the Kotlin code then loads the smaller model and must supply inputs and read outputs in the data types the optimized model expects. This lets developers balance performance requirements with accuracy expectations across diverse use cases and hardware configurations.
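To make the idea of quantization concrete, the sketch below shows the arithmetic of affine 8-bit quantization, where a real value is approximated as `scale * (q - zeroPoint)`. It illustrates the number format only and is not how a model is actually quantized, which is done by conversion tooling; the function names are hypothetical.

```kotlin
import kotlin.math.roundToInt

/** Parameters of affine quantization: real = scale * (q - zeroPoint). */
data class QuantParams(val scale: Float, val zeroPoint: Int)

/** Derives int8 parameters that cover the range [min, max], widened to include 0. */
fun paramsFor(min: Float, max: Float): QuantParams {
    val lo = minOf(min, 0f)
    val hi = maxOf(max, 0f)
    require(hi > lo) { "range must be non-empty" }
    val scale = (hi - lo) / 255f
    val zeroPoint = (-128 - lo / scale).roundToInt().coerceIn(-128, 127)
    return QuantParams(scale, zeroPoint)
}

/** Maps a float to the nearest representable int8 value, clamping out-of-range inputs. */
fun quantize(x: Float, p: QuantParams): Byte =
    ((x / p.scale).roundToInt() + p.zeroPoint).coerceIn(-128, 127).toByte()

/** Recovers the approximate float value from its int8 representation. */
fun dequantize(q: Byte, p: QuantParams): Float = p.scale * (q - p.zeroPoint)
```

Each value is stored in one byte instead of the four bytes of a 32-bit float, at the cost of a rounding error on the order of `scale` per value.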

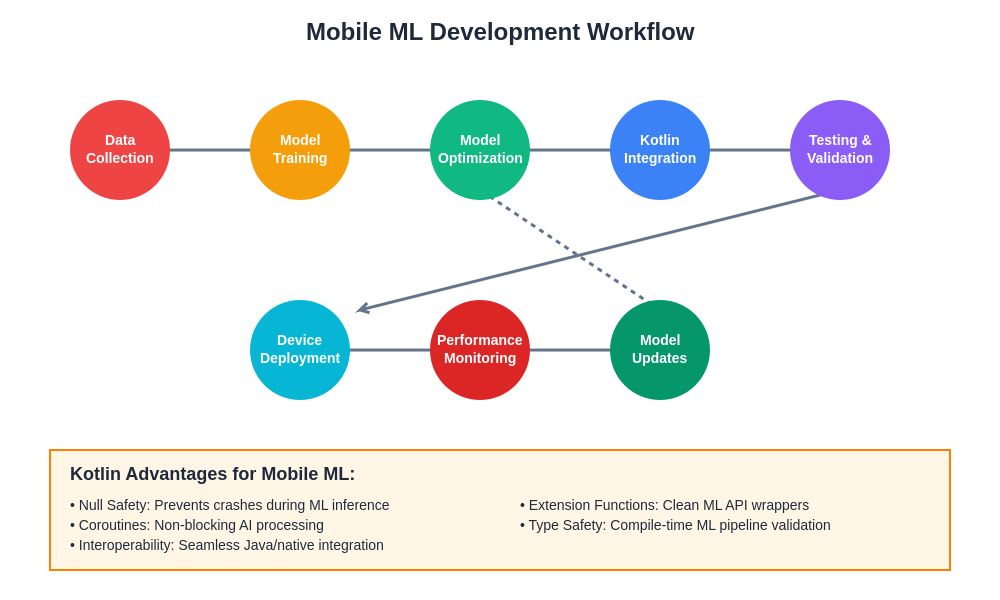

The comprehensive development workflow illustrates how Kotlin integrates into every stage of mobile machine learning development, from initial data collection through model deployment and continuous monitoring. The feedback loop ensures that deployed models remain effective and can be updated based on real-world performance metrics and user feedback.

Data Privacy and Security Considerations

The implementation of AI capabilities in mobile applications raises important considerations regarding user data privacy and security that must be addressed through careful architectural design and secure coding practices. Kotlin’s type safety features and null handling capabilities provide a strong foundation for implementing privacy-preserving AI systems that protect user data while delivering intelligent functionality.

On-device processing represents the gold standard for privacy-conscious AI applications, as it ensures that sensitive user data never leaves the device and cannot be intercepted or compromised during transmission. Kotlin’s efficient processing capabilities enable the implementation of sophisticated AI features that operate entirely locally while maintaining the performance levels users expect from modern mobile applications.

Data encryption and secure storage implementations become crucial when AI applications must persist user data or model parameters for future use. Kotlin’s integration with Android’s security frameworks provides access to hardware-backed encryption capabilities and secure key management systems that protect sensitive AI-related data from unauthorized access or tampering.
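As a minimal sketch of encrypting data before it is persisted, the following uses AES-GCM through the standard `javax.crypto` APIs. It generates the key in memory so that it runs on any JVM; on a device the key would normally be created in and retrieved from the platform keystore, where initialization-vector handling may differ from what is shown. The helper names are illustrative.

```kotlin
import java.security.SecureRandom
import javax.crypto.Cipher
import javax.crypto.KeyGenerator
import javax.crypto.SecretKey
import javax.crypto.spec.GCMParameterSpec

val IV_BYTES = 12   // commonly recommended nonce size for GCM
val TAG_BITS = 128  // authentication tag length

/** Generates an in-memory AES key (assumption: a real app would use a keystore-backed key). */
fun newKey(): SecretKey = KeyGenerator.getInstance("AES").apply { init(256) }.generateKey()

/** Encrypts with AES-GCM and returns iv + ciphertext so the iv travels with the data. */
fun encrypt(plain: ByteArray, key: SecretKey): ByteArray {
    val iv = ByteArray(IV_BYTES).also { SecureRandom().nextBytes(it) }
    val cipher = Cipher.getInstance("AES/GCM/NoPadding")
    cipher.init(Cipher.ENCRYPT_MODE, key, GCMParameterSpec(TAG_BITS, iv))
    return iv + cipher.doFinal(plain)
}

/** Splits off the iv, then decrypts; throws if the data or tag was modified. */
fun decrypt(blob: ByteArray, key: SecretKey): ByteArray {
    val iv = blob.copyOfRange(0, IV_BYTES)
    val cipher = Cipher.getInstance("AES/GCM/NoPadding")
    cipher.init(Cipher.DECRYPT_MODE, key, GCMParameterSpec(TAG_BITS, iv))
    return cipher.doFinal(blob, IV_BYTES, blob.size - IV_BYTES)
}
```

GCM is an authenticated mode, so tampering with stored model parameters or user data is detected at decryption time rather than silently producing corrupted output.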

Testing and Debugging AI Features

The testing and debugging of AI-powered mobile applications require specialized approaches that account for the probabilistic outputs of machine learning models, which can also vary slightly between devices and hardware accelerators, and for the complexity of AI processing pipelines. Kotlin’s support for unit testing frameworks and mock object creation provides excellent tools for testing individual components of AI applications while isolating them from external dependencies and random variations.

Model validation and accuracy testing represent critical aspects of AI application development that ensure deployed models perform as expected across diverse real-world scenarios. Kotlin’s data processing capabilities enable the implementation of comprehensive testing suites that validate model performance against known datasets while identifying potential edge cases that could cause unexpected behavior in production environments.
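A validation harness of this kind can be quite small. The sketch below assumes a labelled evaluation set and treats the model as a plain function from features to a label; `Example`, `Evaluation`, and `evaluate` are hypothetical names.

```kotlin
/** One labelled example from a held-out evaluation set. */
class Example(val features: FloatArray, val expected: String)

/** Overall accuracy plus the number of misclassified examples per expected label. */
data class Evaluation(val accuracy: Double, val errorsByLabel: Map<String, Int>)

/** Runs `predict` over every example and summarizes how often it disagrees with the label. */
fun evaluate(examples: List<Example>, predict: (FloatArray) -> String): Evaluation {
    require(examples.isNotEmpty()) { "evaluation set must not be empty" }
    val misses = examples.filter { predict(it.features) != it.expected }
    return Evaluation(
        accuracy = 1.0 - misses.size.toDouble() / examples.size,
        errorsByLabel = misses.groupingBy { it.expected }.eachCount(),
    )
}
```

In a unit test, `predict` can wrap the real model and the test can fail when `accuracy` drops below an agreed threshold, while `errorsByLabel` points to the classes that need attention.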

The debugging of AI applications often involves analyzing large volumes of intermediate processing results and model outputs to identify performance bottlenecks or accuracy issues. Kotlin’s logging and debugging capabilities, combined with Android’s development tools, provide comprehensive visibility into AI processing pipelines and enable developers to optimize performance and resolve issues efficiently.

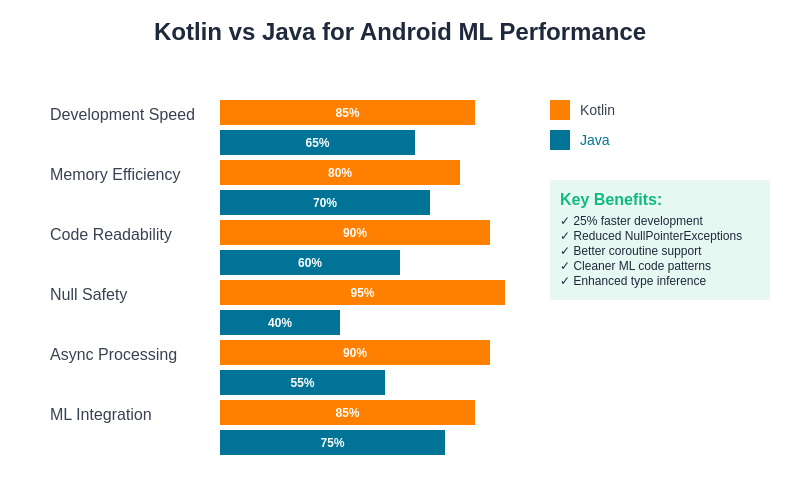

The performance comparison highlights Kotlin’s advantages over traditional Java development for Android AI applications. More concise code, null safety built into the type system, and coroutines for asynchronous processing are the main reasons it is a common choice for building robust and efficient mobile machine learning applications.

Deployment and Distribution Strategies

The deployment of AI-powered Android applications requires careful consideration of application size, update mechanisms, and model versioning strategies that ensure users receive optimal experiences while maintaining reasonable download sizes and update frequencies. Kotlin’s support for modular application architectures enables the implementation of dynamic feature delivery systems that can download AI models on-demand based on user requirements.

Application bundle optimization techniques can significantly reduce the initial download size of AI applications by separating core functionality from optional AI features that users can activate as needed. Splitting the project into separate Gradle feature modules supports these architectural approaches while maintaining clean separation of concerns between different application components and AI processing capabilities.

Model versioning and update strategies become crucial for maintaining AI application effectiveness as new models become available or existing models require retraining based on updated datasets. A model packaged inside the application changes only when the application itself is updated, so applications that need independent model updates typically download versioned model files from a server at runtime. Kotlin code can then compare versions, verify the integrity of the download, and switch to the new model without requiring a full application update or user intervention.
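One way to sketch the versioning check is shown below. The `ModelManifest` format, with an integer version and a SHA-256 checksum, is an assumption made for illustration and not a standard; the download itself is out of scope.

```kotlin
import java.security.MessageDigest

/** Hypothetical manifest published next to each model file. */
data class ModelManifest(val name: String, val version: Int, val sha256: String)

/** Lowercase hex SHA-256 digest of the given bytes. */
fun sha256Hex(bytes: ByteArray): String =
    MessageDigest.getInstance("SHA-256").digest(bytes).joinToString("") { "%02x".format(it) }

/** Accepts a download only if it is newer than the installed model and its checksum matches. */
fun shouldInstall(installed: ModelManifest?, candidate: ModelManifest, downloaded: ByteArray): Boolean {
    val isNewer = installed == null || candidate.version > installed.version
    return isNewer && sha256Hex(downloaded).equals(candidate.sha256, ignoreCase = true)
}
```

Checking the checksum before swapping models guards against truncated or corrupted downloads, and keeping the previous model file until the new one has loaded successfully leaves a fallback.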

Future Trends and Emerging Technologies

The landscape of mobile AI development continues to evolve rapidly, with emerging technologies such as federated learning, edge computing optimization, and neural architecture search creating new opportunities for Kotlin-based Android AI applications. These advances promise to further enhance the capabilities of mobile AI while addressing current limitations in areas such as model size, processing efficiency, and cross-device collaboration.

Federated learning represents a particularly promising development for mobile AI applications, as it enables collaborative model training across multiple devices while preserving individual user privacy. Kotlin’s networking capabilities and asynchronous processing support provide an excellent foundation for implementing federated learning systems that can improve model performance through collective learning without compromising user data security.
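The aggregation step at the heart of federated learning can be illustrated with federated averaging, in which a server combines client weights in proportion to how many examples each client trained on. This is a simplified sketch: it treats a model as a flat array of weights and leaves out everything a production system adds, such as secure aggregation and client selection. The names are hypothetical.

```kotlin
/** Update reported by one device: locally trained weights plus the number of examples used. */
class ClientUpdate(val weights: FloatArray, val sampleCount: Int)

/** Federated averaging: mean of client weights, weighted by each client's sample count. */
fun federatedAverage(updates: List<ClientUpdate>): FloatArray {
    require(updates.isNotEmpty()) { "need at least one client update" }
    val size = updates.first().weights.size
    require(updates.all { it.weights.size == size }) { "all clients must report the same shape" }
    val total = updates.sumOf { it.sampleCount }
    require(total > 0) { "total sample count must be positive" }
    return FloatArray(size) { i ->
        updates.sumOf { it.weights[i].toDouble() * it.sampleCount }.div(total).toFloat()
    }
}
```

Only the weight arrays and sample counts are sent to the server; the training examples themselves stay on each device, which is the privacy property the paragraph describes.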

The integration of specialized AI hardware acceleration capabilities into mobile devices creates opportunities for even more sophisticated AI applications that can perform complex processing tasks with improved efficiency and reduced battery consumption. Kotlin’s interoperability with native code enables developers to take advantage of these hardware acceleration features while maintaining the productivity benefits of high-level language development.

The continued evolution of machine learning frameworks and tools specifically designed for mobile deployment ensures that Kotlin developers will have access to increasingly powerful and efficient AI development resources. This ongoing advancement promises to democratize AI development further while enabling the creation of mobile applications that deliver intelligent experiences previously thought impossible on mobile devices.

Disclaimer

This article is for informational purposes only and does not constitute professional advice. The views expressed are based on current understanding of Kotlin programming and mobile AI development technologies. Readers should conduct their own research and consider their specific requirements when implementing AI features in mobile applications. The effectiveness of AI implementations may vary depending on specific use cases, device capabilities, and user requirements. Always ensure compliance with relevant privacy regulations and platform policies when developing AI-powered mobile applications.