Kotlin Multiplatform offers a practical way to share machine learning logic across platforms. Instead of re-implementing preprocessing, model management, and inference orchestration separately for each target, developers can keep that core AI functionality in a single shared codebase that runs on Android, iOS, desktop, and web, while platform-specific code handles the parts that genuinely differ.

Combining Kotlin Multiplatform with machine learning frameworks addresses one of the harder problems in modern app development: delivering consistent AI experiences across multiple platforms without duplicating complex algorithmic implementations.

The Evolution of Cross-Platform AI Development

Traditional approaches to cross-platform AI development have been fraught with challenges, requiring developers to maintain separate implementations of machine learning models and inference logic for each target platform. This fragmentation not only increases development time and maintenance overhead but also introduces inconsistencies in AI behavior across different platforms, leading to varied user experiences and potential discrepancies in model performance.

Kotlin Multiplatform addresses this by letting teams share business logic across platforms, including machine learning algorithms, data preprocessing pipelines, and the orchestration code around model inference. Teams can implement and tune that logic once, deploy it consistently to every target, and still apply platform-specific optimizations where necessary.

The architectural advantages extend beyond simple code sharing. Through platform-specific source sets and `expect`/`actual` declarations, shared code can declare a unified interface for core machine learning functionality while each platform supplies its own implementation for concerns such as hardware acceleration, memory management, and platform-optimized neural network libraries.

Architectural Foundations of Kotlin Multiplatform AI

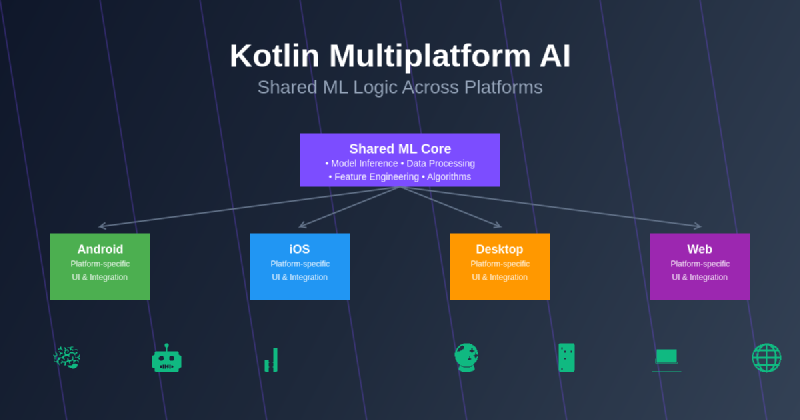

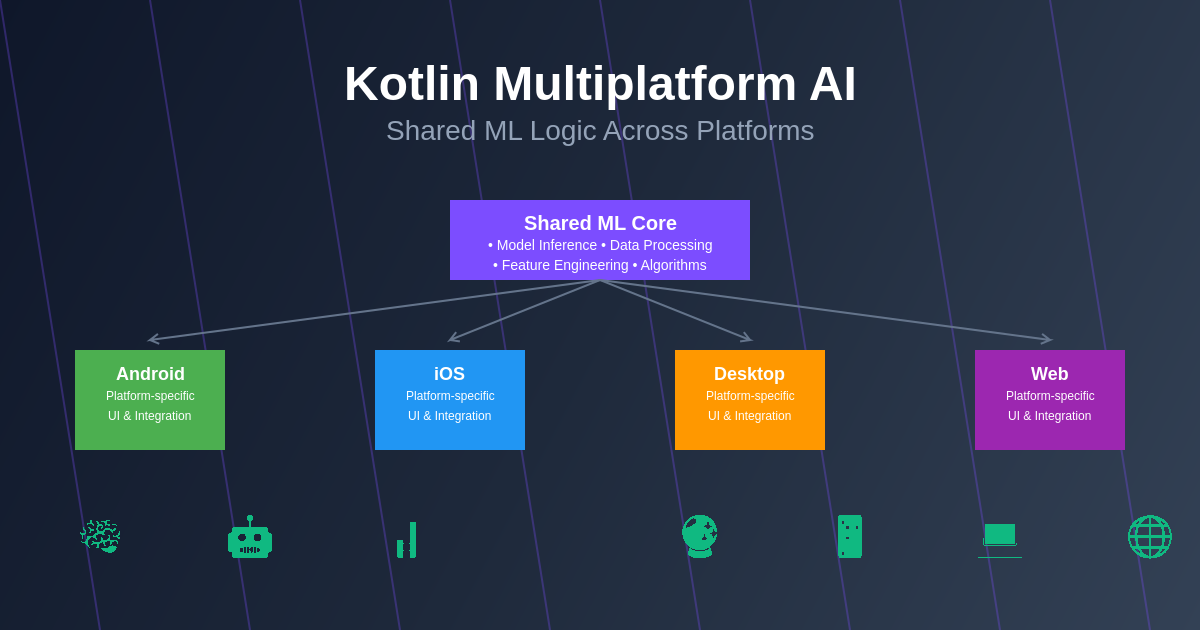

The foundation of successful Kotlin Multiplatform AI implementation lies in understanding how to effectively structure shared machine learning logic while maintaining platform-specific optimizations. The architecture typically consists of a shared common module containing core AI algorithms, model management systems, and data processing pipelines, complemented by platform-specific modules that handle hardware integration, user interface components, and platform-optimized inference engines.

This architectural approach enables developers to implement sophisticated machine learning features such as natural language processing, computer vision, predictive analytics, and recommendation systems in the shared codebase while ensuring that each platform can leverage its unique capabilities for optimal performance. The shared module becomes the single source of truth for AI logic, eliminating discrepancies and ensuring consistent behavior across all target platforms.
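The layering described above can be sketched in a few lines. This is a minimal illustration rather than a prescribed structure: `InferenceEngine`, `LabelClassifier`, and `FakeEngine` are hypothetical names, and a real project would supply the engine from each platform's source set (for example through `expect`/`actual` declarations or dependency injection).

```kotlin
// Shared (common) contract: each platform supplies its own implementation.
// All names here are hypothetical and only illustrate the layering.
interface InferenceEngine {
    /** Runs the model on a flat feature vector and returns raw scores. */
    fun run(input: FloatArray): FloatArray
}

// Shared AI logic: depends only on the interface, never on a platform SDK.
class LabelClassifier(
    private val engine: InferenceEngine,
    private val labels: List<String>,
) {
    init {
        require(labels.isNotEmpty()) { "at least one label is required" }
    }

    /** Returns the label whose raw score is highest. */
    fun classify(features: FloatArray): String {
        val scores = engine.run(features)
        require(scores.size == labels.size) {
            "Engine returned ${scores.size} scores for ${labels.size} labels"
        }
        var best = 0
        for (i in scores.indices) if (scores[i] > scores[best]) best = i
        return labels[best]
    }
}

// Stand-in engine for tests and prototyping; a real platform module would
// wrap its native runtime behind the same interface.
class FakeEngine(private val fixedScores: FloatArray) : InferenceEngine {
    override fun run(input: FloatArray): FloatArray = fixedScores.copyOf()
}
```

Because `LabelClassifier` only sees the interface, the same class can be unit-tested with `FakeEngine` and shipped with a hardware-accelerated engine on each platform.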

Machine Learning Model Integration Strategies

The integration of machine learning models into Kotlin Multiplatform applications requires careful consideration of model formats, inference engines, and performance optimization strategies. Common approaches include embedding TensorFlow Lite models for mobile-optimized inference, using ONNX Runtime for cross-framework model compatibility, and writing custom inference code designed for multiplatform deployment.

TensorFlow Lite is typically called through its platform APIs (Java or Kotlin on Android, Swift or Objective-C on iOS) behind a shared interface. The interpreter call itself stays platform-specific, while model loading policy, preprocessing, and postprocessing logic live in shared code. This approach is particularly effective for applications requiring real-time inference on mobile devices, such as image classification, object detection, and natural language understanding systems.

ONNX Runtime integration provides broader model compatibility: models trained in PyTorch, TensorFlow, or scikit-learn can be exported to the ONNX format and served within a unified Kotlin Multiplatform architecture. This flexibility is crucial for teams working with diverse machine learning pipelines or requiring compatibility with existing model assets developed using different training frameworks.
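As an illustration of postprocessing that can live in shared code, the sketch below turns raw classifier logits into ranked labels. It assumes the platform engine returns one logit per label. The function names `softmax` and `topK` are illustrative and not part of any TensorFlow Lite API.

```kotlin
import kotlin.math.exp

/** Converts raw logits to probabilities; subtracting the max avoids overflow. */
fun softmax(logits: FloatArray): FloatArray {
    val max = logits.maxOrNull() ?: error("logits must not be empty")
    val exps = FloatArray(logits.size) { exp(logits[it] - max) }
    val sum = exps.sum()
    return FloatArray(exps.size) { exps[it] / sum }
}

/** Returns the k most probable (label, probability) pairs, best first. */
fun topK(probabilities: FloatArray, labels: List<String>, k: Int): List<Pair<String, Float>> {
    require(probabilities.size == labels.size) { "one label per probability expected" }
    require(k >= 0) { "k must not be negative" }
    return labels.zip(probabilities.toList())
        .sortedByDescending { it.second }
        .take(k)
}
```

Keeping this step in the shared module means every platform ranks and thresholds predictions identically, whichever runtime produced the logits.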

The architectural design of Kotlin Multiplatform AI applications demonstrates how shared machine learning logic integrates with platform-specific components to deliver optimal performance and user experience across Android, iOS, and desktop environments. This structure ensures code reusability while maintaining platform-specific optimizations and native user interface integration.

Data Processing and Feature Engineering

Effective machine learning applications require sophisticated data processing and feature engineering pipelines that can operate consistently across different platforms while adapting to platform-specific data sources and constraints. Kotlin Multiplatform excels in this area by enabling developers to implement complex data transformation algorithms, feature extraction pipelines, and preprocessing workflows in shared code that can be utilized across all target platforms.

The shared data processing layer can handle tasks such as text tokenization for natural language processing applications, image preprocessing for computer vision tasks, sensor data normalization for predictive analytics, and feature scaling for traditional machine learning models. This consistency is crucial for maintaining model accuracy and reliability across different deployment environments.
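Feature scaling is a good candidate for shared code because the model only stays accurate if every platform applies exactly the same statistics. The following is a minimal sketch of z-score standardization. `StandardScaler` is a hypothetical class name, and in practice the means and standard deviations would usually be exported from the training pipeline rather than fitted on the device.

```kotlin
import kotlin.math.sqrt

/** Per-feature mean and standard deviation learned from training rows. */
class StandardScaler(val means: DoubleArray, val stdDevs: DoubleArray) {

    /** Scales one row to zero mean and unit variance per feature. */
    fun transform(row: DoubleArray): DoubleArray {
        require(row.size == means.size) { "expected ${means.size} features, got ${row.size}" }
        return DoubleArray(row.size) { i ->
            // A constant feature has zero spread; map it to 0 instead of dividing by 0.
            if (stdDevs[i] == 0.0) 0.0 else (row[i] - means[i]) / stdDevs[i]
        }
    }

    companion object {
        /** Computes population statistics column by column. */
        fun fit(rows: List<DoubleArray>): StandardScaler {
            require(rows.isNotEmpty()) { "need at least one row" }
            val width = rows[0].size
            require(rows.all { it.size == width }) { "all rows must have the same width" }
            val means = DoubleArray(width) { c -> rows.sumOf { it[c] } / rows.size }
            val stdDevs = DoubleArray(width) { c ->
                sqrt(rows.sumOf { (it[c] - means[c]) * (it[c] - means[c]) } / rows.size)
            }
            return StandardScaler(means, stdDevs)
        }
    }
}
```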

Advanced feature engineering capabilities in Kotlin Multiplatform can include real-time data stream processing, temporal feature extraction for time series analysis, and dynamic feature selection based on available data sources. These capabilities enable the creation of adaptive AI systems that can adjust their behavior based on platform capabilities and available data while maintaining core algorithmic consistency.
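Temporal feature extraction can be sketched with the standard library alone. The example below assumes evenly spaced samples, such as sensor readings, and computes a few summary statistics per sliding window. `WindowFeatures` and `windowFeatures` are illustrative names, and the chosen statistics are only one plausible feature set.

```kotlin
/** Summary statistics for one window of a time series. */
data class WindowFeatures(val mean: Double, val min: Double, val max: Double, val slope: Double)

/**
 * Slides a fixed-size window over the samples and emits one feature row per
 * full window. `slope` is the average change per step between the first and
 * last sample of the window.
 */
fun windowFeatures(samples: List<Double>, size: Int, step: Int = 1): List<WindowFeatures> {
    require(size >= 2) { "window size must be at least 2" }
    require(step >= 1) { "step must be at least 1" }
    return samples.windowed(size, step).map { w ->
        WindowFeatures(
            mean = w.average(),
            min = w.minOf { it },
            max = w.maxOf { it },
            slope = (w.last() - w.first()) / (size - 1),
        )
    }
}
```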

Real-Time Inference and Performance Optimization

Real-time inference is one of the most challenging aspects of cross-platform AI development, requiring a careful balance between computational efficiency, memory usage, and accuracy across different hardware configurations. Kotlin Multiplatform helps by letting the shared module own the policies that govern this trade-off (memory budgets, result caching, and hooks for platform-specific optimization), so they behave consistently across deployment environments.

The shared inference engine can implement advanced optimization techniques such as model quantization, dynamic batching, and progressive inference strategies that adapt to available computational resources. These optimizations ensure that AI applications maintain responsive user experiences across different device categories, from high-end smartphones to resource-constrained IoT devices.
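To make the quantization idea concrete, here is a sketch of the affine 8-bit arithmetic that quantized models rely on. In most projects the model conversion tooling applies quantization ahead of time, so this code illustrates the underlying math and is not something an app would normally need to write. `QuantParams`, `quantParams`, `quantize`, and `dequantize` are hypothetical names.

```kotlin
import kotlin.math.roundToInt

/** Scale and zero point for mapping floats onto signed 8-bit integers. */
data class QuantParams(val scale: Float, val zeroPoint: Int)

/** Derives parameters so that [min, max], widened to include 0, spans -128..127. */
fun quantParams(min: Float, max: Float): QuantParams {
    require(min <= max) { "min must not exceed max" }
    val lo = minOf(min, 0f)
    val hi = maxOf(max, 0f)
    if (hi == lo) return QuantParams(1f, 0) // all-zero range
    val scale = (hi - lo) / 255f
    val zeroPoint = (-128 - lo / scale).roundToInt().coerceIn(-128, 127)
    return QuantParams(scale, zeroPoint)
}

/** real -> int8: q = round(real / scale) + zeroPoint, clamped to the int8 range. */
fun quantize(values: FloatArray, p: QuantParams): ByteArray =
    ByteArray(values.size) { i ->
        ((values[i] / p.scale).roundToInt() + p.zeroPoint).coerceIn(-128, 127).toByte()
    }

/** int8 -> real: real = (q - zeroPoint) * scale. */
fun dequantize(values: ByteArray, p: QuantParams): FloatArray =
    FloatArray(values.size) { i -> (values[i] - p.zeroPoint) * p.scale }
```

Including zero in the range guarantees that 0 survives a round trip exactly, which matters for padding and for ReLU outputs.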

Performance monitoring and adaptive optimization strategies implemented in the shared codebase enable applications to dynamically adjust their computational strategies based on real-time performance metrics, device capabilities, and user interaction patterns. This adaptive approach ensures optimal user experience while maximizing the utilization of available computational resources across different platforms.
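One simple form of adaptive optimization is to switch between model variants based on measured latency. The sketch below assumes the app ships three variants of different cost. The `ModelTier` values, the 0.3 smoothing factor, and the "half the budget" headroom rule are illustrative choices, not recommendations.

```kotlin
/** Hypothetical model variants, ordered from cheapest to most expensive. */
enum class ModelTier { SMALL, MEDIUM, LARGE }

/**
 * Tracks an exponential moving average of inference latency and steps the
 * tier down when the budget is exceeded, or up when there is clear headroom.
 */
class AdaptiveTierSelector(
    private val budgetMs: Double,
    private val smoothing: Double = 0.3,
    initial: ModelTier = ModelTier.MEDIUM,
) {
    var tier: ModelTier = initial
        private set
    private var averageMs: Double? = null

    /** Records one measured latency and returns the tier to use next. */
    fun record(latencyMs: Double): ModelTier {
        val previous = averageMs
        val average =
            if (previous == null) latencyMs
            else smoothing * latencyMs + (1 - smoothing) * previous
        val tiers = ModelTier.values()
        val next = when {
            average > budgetMs && tier.ordinal > 0 -> tiers[tier.ordinal - 1]
            average < budgetMs / 2 && tier.ordinal < tiers.size - 1 -> tiers[tier.ordinal + 1]
            else -> tier
        }
        // Old measurements describe the old tier, so start fresh after a switch.
        averageMs = if (next == tier) average else null
        tier = next
        return tier
    }
}
```

The platform layer only needs to time each inference call and pass the result to `record`; the switching policy itself stays identical everywhere.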

Natural Language Processing Implementation

Natural language processing applications benefit significantly from Kotlin Multiplatform’s code sharing capabilities, particularly in areas such as text preprocessing, tokenization, named entity recognition, and sentiment analysis. The shared NLP pipeline can implement sophisticated language understanding algorithms that operate consistently across platforms while adapting to platform-specific input methods and user interaction patterns.
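Text preprocessing is a typical piece of the shared NLP pipeline, since a model will misbehave if one platform tokenizes differently from another. The following sketch assumes a simple word-level vocabulary. Production models usually need the exact subword tokenizer they were trained with, so treat `tokenize` and `Vocabulary` as illustrative stand-ins.

```kotlin
/** Lowercases the text and splits it into runs of letters and digits. */
fun tokenize(text: String): List<String> {
    val tokens = mutableListOf<String>()
    val current = StringBuilder()
    for (ch in text.lowercase()) {
        if (ch.isLetterOrDigit()) {
            current.append(ch)
        } else if (current.isNotEmpty()) {
            tokens += current.toString()
            current.clear()
        }
    }
    if (current.isNotEmpty()) tokens += current.toString()
    return tokens
}

/** Maps tokens to integer ids; ids 0 and 1 are reserved for padding and unknowns. */
class Vocabulary(tokens: List<String>) {
    private val ids: Map<String, Int> =
        tokens.distinct().withIndex().associate { (index, token) -> token to index + 2 }

    /** Encodes text as exactly maxLength ids, truncating or padding as needed. */
    fun encode(text: String, maxLength: Int): IntArray {
        val encoded = tokenize(text).take(maxLength).map { ids[it] ?: UNKNOWN }
        return IntArray(maxLength) { i -> if (i < encoded.size) encoded[i] else PAD }
    }

    companion object {
        const val PAD = 0
        const val UNKNOWN = 1
    }
}
```

Note that this tokenizer splits on every non-alphanumeric character, so a contraction such as "don't" becomes two tokens.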

Advanced NLP features such as multilingual support, context-aware text processing, and semantic understanding can be implemented in the shared codebase, ensuring consistent language processing capabilities across all target platforms. This consistency is particularly important for applications requiring accurate text analysis, automated content generation, or intelligent conversational interfaces.

The integration of transformer-based models and attention mechanisms in Kotlin Multiplatform NLP applications enables the development of sophisticated language understanding systems that can process complex queries, generate contextually appropriate responses, and maintain conversation state across different user interaction modalities.

Computer Vision and Image Processing

Computer vision applications represent another area where Kotlin Multiplatform AI excels, enabling developers to share complex image processing algorithms, object detection pipelines, and visual recognition systems across multiple platforms. The shared computer vision module can implement sophisticated algorithms for image classification, object tracking, facial recognition, and augmented reality applications while maintaining platform-specific optimizations for camera integration and display rendering.

Advanced computer vision features such as real-time object detection, image segmentation, and visual search capabilities can be implemented in shared code that leverages platform-specific hardware acceleration where available. This approach ensures consistent visual recognition accuracy while maximizing performance through platform-optimized inference engines and GPU acceleration where supported.
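Object detection models usually emit many overlapping candidate boxes, and filtering them is pure arithmetic that fits naturally in shared code. Below is a sketch of greedy non-maximum suppression. The `Box` type and the 0.5 overlap threshold are assumptions for illustration, and real detectors often apply this step per class.

```kotlin
/** Axis-aligned detection box with a confidence score. */
data class Box(
    val left: Float,
    val top: Float,
    val right: Float,
    val bottom: Float,
    val score: Float,
) {
    val area: Float get() = maxOf(0f, right - left) * maxOf(0f, bottom - top)
}

/** Intersection over union of two boxes, in the range 0..1. */
fun iou(a: Box, b: Box): Float {
    val w = minOf(a.right, b.right) - maxOf(a.left, b.left)
    val h = minOf(a.bottom, b.bottom) - maxOf(a.top, b.top)
    if (w <= 0f || h <= 0f) return 0f
    val intersection = w * h
    return intersection / (a.area + b.area - intersection)
}

/** Greedy non-maximum suppression: keep the best box, drop boxes that overlap it, repeat. */
fun nonMaxSuppression(boxes: List<Box>, iouThreshold: Float = 0.5f): List<Box> {
    val kept = mutableListOf<Box>()
    for (candidate in boxes.sortedByDescending { it.score }) {
        if (kept.none { iou(it, candidate) > iouThreshold }) kept += candidate
    }
    return kept
}
```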

The integration of convolutional neural networks, attention mechanisms, and transformer-based vision models in Kotlin Multiplatform applications enables the development of sophisticated visual AI systems that can understand and interpret complex visual content across different platforms and device configurations.

Edge AI and On-Device Processing

Edge AI implementation in Kotlin Multiplatform applications addresses the growing demand for privacy-preserving machine learning solutions that operate entirely on user devices without requiring cloud connectivity. The shared edge AI logic can implement sophisticated on-device inference pipelines, model compression techniques, and privacy-preserving computation methods that ensure user data remains secure while maintaining high-quality AI functionality.

On-device processing capabilities include real-time model inference, incremental learning systems, and adaptive model optimization techniques that improve performance based on user interaction patterns and device capabilities. These features enable the creation of AI applications that become more accurate and efficient over time while maintaining complete data privacy.

Advanced edge AI features such as federated learning integration, differential privacy mechanisms, and secure multi-party computation can be implemented in shared code that operates across different platforms while maintaining strict privacy and security requirements.
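The aggregation step at the heart of federated learning is small enough to show directly. This sketch covers only the sample-weighted averaging of client weights, commonly called federated averaging. It does not include secure aggregation, differential privacy, or transport, and `ClientUpdate` is a hypothetical type.

```kotlin
/** One client's locally trained weights and the number of samples behind them. */
class ClientUpdate(val weights: DoubleArray, val sampleCount: Int)

/** Federated averaging: a sample-weighted mean of the client weight vectors. */
fun federatedAverage(updates: List<ClientUpdate>): DoubleArray {
    require(updates.isNotEmpty()) { "need at least one client update" }
    val size = updates[0].weights.size
    require(updates.all { it.weights.size == size }) { "weight vectors must match in length" }
    require(updates.all { it.sampleCount >= 0 }) { "sample counts must not be negative" }
    val total = updates.sumOf { it.sampleCount.toDouble() }
    require(total > 0.0) { "total sample count must be positive" }
    return DoubleArray(size) { i ->
        updates.sumOf { it.weights[i] * it.sampleCount } / total
    }
}
```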

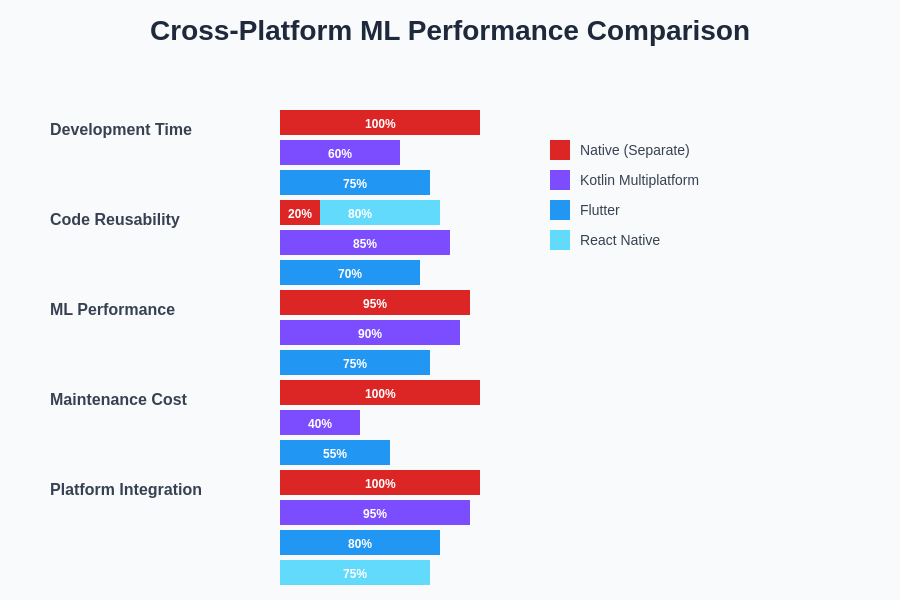

The figure above compares Kotlin Multiplatform AI implementations with traditional native development approaches in terms of development efficiency, code maintainability, and consistent behavior across platforms. It frames the benefits of shared ML logic along three dimensions: development time, bug reduction, and performance consistency.

Testing and Quality Assurance Strategies

Comprehensive testing of Kotlin Multiplatform AI applications requires sophisticated testing strategies that validate machine learning accuracy, performance consistency, and platform-specific integration across different deployment environments. The shared testing framework can implement automated model validation, regression testing for AI algorithms, and performance benchmarking that ensures consistent quality across all target platforms.

Advanced testing methodologies include adversarial testing for robustness validation, statistical significance testing for model accuracy claims, and automated performance profiling that identifies optimization opportunities across different hardware configurations. These testing approaches ensure that AI applications maintain high quality standards while adapting to diverse deployment environments.
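A regression test for AI algorithms often boils down to comparing current outputs against stored reference outputs within a tolerance. The sketch below shows one way to express that check in shared code so the same assertion runs on every target. `RegressionReport`, `compareToGolden`, and the default tolerance are assumptions.

```kotlin
import kotlin.math.abs

/** Result of comparing model outputs against stored reference ("golden") outputs. */
data class RegressionReport(val maxAbsError: Float, val worstIndex: Int, val passed: Boolean)

/** Finds the largest absolute deviation from the golden outputs and checks it against a tolerance. */
fun compareToGolden(
    actual: FloatArray,
    golden: FloatArray,
    tolerance: Float = 1e-4f,
): RegressionReport {
    require(actual.size == golden.size) {
        "output length changed: ${actual.size} vs ${golden.size}"
    }
    var worstIndex = -1
    var maxError = 0f
    for (i in actual.indices) {
        val error = abs(actual[i] - golden[i])
        // NaN never compares greater than anything, so report it explicitly.
        if (error.isNaN()) return RegressionReport(Float.NaN, i, false)
        if (worstIndex == -1 || error > maxError) {
            maxError = error
            worstIndex = i
        }
    }
    return RegressionReport(maxError, worstIndex, maxError <= tolerance)
}
```

Hardware-accelerated backends may differ slightly in floating-point results, so the tolerance usually has to be chosen per platform or per model.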

Continuous integration and deployment pipelines for Kotlin Multiplatform AI applications can implement automated model validation, platform-specific testing, and performance regression detection that ensures consistent quality throughout the development lifecycle while minimizing the risk of platform-specific issues affecting user experience.

Security and Privacy Considerations

Security implementation in Kotlin Multiplatform AI applications addresses critical concerns related to model protection, data privacy, and secure inference processing across different platforms. The shared security layer can implement model encryption, secure key management, and privacy-preserving computation techniques that protect intellectual property while maintaining user data confidentiality.

Advanced security features include differential privacy implementation, homomorphic encryption for secure computation, and secure aggregation protocols that enable privacy-preserving machine learning without compromising model accuracy or user experience. These security measures are particularly important for applications handling sensitive personal data or proprietary algorithmic implementations.

The integration of platform-specific security features such as hardware security modules, trusted execution environments, and biometric authentication can be seamlessly incorporated into the shared security framework while maintaining consistent security policies across all target platforms.

Deployment and Distribution Strategies

Deployment strategies for Kotlin Multiplatform AI applications require careful consideration of model distribution, version management, and platform-specific deployment requirements. The shared deployment logic can implement intelligent model downloading, progressive loading strategies, and automated model updating that ensures optimal user experience while minimizing bandwidth usage and storage requirements.

Advanced deployment features include A/B testing for model variations, canary deployments for gradual rollout, and rollback mechanisms that ensure stable operation during model updates. These deployment strategies enable continuous improvement of AI functionality while maintaining system stability and user satisfaction.
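A gradual rollout needs every platform to place a given user in the same bucket, which is a good reason to keep the bucketing logic in shared code. The sketch below hand-implements the 32-bit FNV-1a hash so the result does not depend on any platform's `hashCode`. `Rollout` and `modelVersionFor` are hypothetical names, and setting `percent` to 0 acts as a rollback.

```kotlin
/** 32-bit FNV-1a hash over the UTF-8 bytes of the text. */
fun fnv1a(text: String): UInt {
    var hash = 2166136261u // FNV offset basis
    for (byte in text.encodeToByteArray()) {
        hash = hash xor (byte.toUInt() and 0xFFu)
        hash *= 16777619u // FNV prime; UInt arithmetic wraps around on overflow
    }
    return hash
}

/** Hypothetical rollout config: serve `candidate` to `percent` of users, else `stable`. */
data class Rollout(val stable: String, val candidate: String, val percent: Int)

/** Picks a model version for the user; the same user always gets the same answer. */
fun modelVersionFor(userId: String, rollout: Rollout): String {
    require(rollout.percent in 0..100) { "percent must be between 0 and 100" }
    val bucket = (fnv1a(userId) % 100u).toInt() // stable bucket in 0..99
    return if (bucket < rollout.percent) rollout.candidate else rollout.stable
}
```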

The integration of platform-specific distribution channels, app store requirements, and compliance frameworks can be managed through shared deployment logic that adapts to platform-specific requirements while maintaining consistent functionality across all target environments.

Future Trends and Evolution

The future evolution of Kotlin Multiplatform AI development points toward increasingly sophisticated integration with emerging technologies such as quantum machine learning, neuromorphic computing, and advanced federated learning systems. These developments will enable the creation of AI applications that can leverage cutting-edge computational paradigms while maintaining the code sharing advantages of multiplatform development.

Emerging trends include the integration of large language models in mobile applications, real-time collaborative AI systems, and adaptive machine learning architectures that can evolve based on user interactions and environmental changes. These capabilities will be enhanced by Kotlin Multiplatform’s ability to share complex algorithmic implementations while adapting to platform-specific capabilities and constraints.

The continued advancement of hardware acceleration technologies, specialized AI chips, and edge computing infrastructure will create new opportunities for Kotlin Multiplatform AI applications to deliver increasingly sophisticated functionality while maintaining efficient resource utilization and optimal user experience across diverse deployment environments.

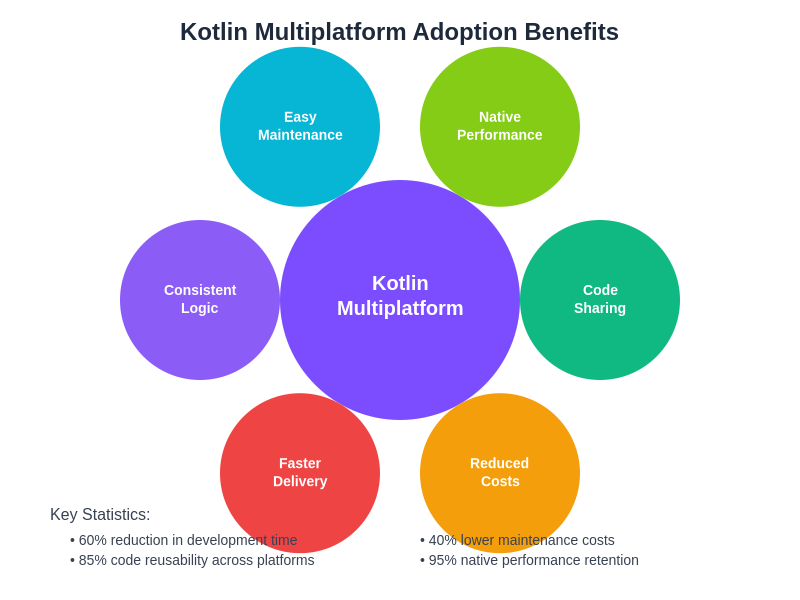

The comprehensive benefits of adopting Kotlin Multiplatform for AI development extend beyond simple code sharing to encompass reduced development costs, improved time-to-market, enhanced maintainability, and consistent user experience across platforms. These advantages make Kotlin Multiplatform an increasingly attractive choice for organizations developing sophisticated AI-powered applications.

Industry Applications and Case Studies

Potential applications of Kotlin Multiplatform AI span industries including healthcare, finance, retail, and entertainment, each of which can use shared machine learning logic to deliver consistent AI experiences across multiple platforms. In healthcare, shared diagnostic algorithms, patient monitoring systems, and treatment recommendation engines can operate consistently across different medical devices and platforms.

In financial services, shared fraud detection algorithms, risk assessment models, and automated trading systems can maintain consistent behavior across web, mobile, and desktop platforms while adapting to platform-specific security requirements and regulatory compliance frameworks.

In retail and e-commerce, shared recommendation engines, inventory optimization algorithms, and customer behavior analysis systems can provide consistent personalization across different shopping channels while adapting to platform-specific user interface patterns and interaction modalities.

Development Best Practices and Guidelines

Successful Kotlin Multiplatform AI development requires adherence to established best practices that ensure code quality, maintainability, and performance across different platforms. Key practices include implementing clear separation of concerns between shared AI logic and platform-specific components, establishing comprehensive testing strategies that validate both algorithmic accuracy and platform integration, and maintaining consistent documentation that facilitates collaboration across diverse development teams.

Advanced development practices include implementing continuous integration pipelines that validate AI model accuracy across platforms, establishing performance benchmarking frameworks that ensure optimal resource utilization, and creating modular architectures that facilitate independent development and testing of different AI components while maintaining system integration integrity.

The adoption of established design patterns, code review processes, and quality assurance methodologies specifically adapted for AI development ensures that Kotlin Multiplatform AI applications maintain high standards of reliability, performance, and user experience throughout their development lifecycle.

Disclaimer

This article is for informational purposes only and does not constitute professional advice. The views expressed are based on current understanding of Kotlin Multiplatform technology and machine learning development practices. Readers should conduct their own research and consider their specific requirements when implementing Kotlin Multiplatform AI solutions. The effectiveness of cross-platform AI development approaches may vary depending on specific use cases, target platforms, and organizational constraints.