The rapid evolution of large language model (LLM) applications has created a need for development frameworks that streamline building, deploying, and maintaining AI-powered solutions. Two of the most prominent are LangChain and LlamaIndex, which take distinct approaches to LLM application development. Both have changed how developers design and implement complex AI systems, and each brings its own strengths and methodology to the field.

The choice between these frameworks often not only determines the technical architecture of an application but also influences development velocity, maintenance complexity, and scalability potential for organizations venturing into AI-powered solutions.

Understanding LangChain: The Comprehensive LLM Framework

LangChain takes a comprehensive approach to large language model application development, providing developers with an extensive toolkit for building applications that chain together multiple LLM operations, integrate with external data sources, and maintain conversational context across extended interactions. The framework’s architecture is built around modular components that can be combined to create applications ranging from simple chatbots to complex reasoning systems that incorporate multiple AI models, databases, and external APIs.

The fundamental philosophy behind LangChain centers on the idea that modern LLM applications require more than simple prompt-response interactions. Instead, they need orchestration of multiple components, including memory management, external tool integration, prompt templating, and response processing. LangChain addresses these requirements through its modular architecture, which allows developers to construct complex workflows by combining pre-built components or creating custom modules that integrate with the broader ecosystem.

The framework’s emphasis on chains and agents gives developers abstractions for building applications that can perform multi-step reasoning, maintain context across conversations, and dynamically select appropriate tools based on user inputs and application requirements. This architectural approach has made LangChain particularly popular for applications that require complex decision-making processes, integration with multiple data sources, and sophisticated conversation management.
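The idea of a chain can be illustrated without any framework at all. The following is a minimal sketch in plain Python, not LangChain's API: `make_prompt_step`, `fake_llm`, `strip_wrapper`, and `chain` are hypothetical names introduced here, and the model call is replaced by a stand-in function so the example runs offline.

```python
from typing import Callable

# A "step" is any function from one string to another.
Step = Callable[[str], str]


def make_prompt_step(template: str) -> Step:
    """Return a step that fills a prompt template with the user input."""
    return lambda user_input: template.format(question=user_input)


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a model call; a real app would call an LLM API here."""
    return f"ANSWER({prompt})"


def strip_wrapper(text: str) -> str:
    """Toy output parser: remove the wrapper added by the stand-in model."""
    if text.startswith("ANSWER(") and text.endswith(")"):
        return text[len("ANSWER("):-1]
    return text


def chain(*steps: Step) -> Step:
    """Compose steps left to right into a single callable."""
    def run(value: str) -> str:
        for step in steps:
            value = step(value)
        return value
    return run


# Prompt templating, model call, and response processing as one pipeline.
pipeline = chain(make_prompt_step("Q: {question}"), fake_llm, strip_wrapper)
result = pipeline("What is a chain?")
print(result)
```

Because every step shares the same signature, steps can be swapped or reordered without touching the others, which is the property the modular architecture described above relies on.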

Exploring LlamaIndex: The Data-Centric Approach

LlamaIndex takes a fundamentally different approach to LLM application development, focusing primarily on data ingestion, indexing, and retrieval to create powerful question-answering and information extraction systems. The framework excels in scenarios where applications need to work with large volumes of structured and unstructured data, providing sophisticated indexing mechanisms that enable efficient retrieval of relevant information for LLM processing.

The core strength of LlamaIndex lies in its ability to transform raw data from various sources into searchable, semantically meaningful indexes that can be queried using natural language. This data-centric approach makes the framework particularly valuable for building applications such as document search systems, knowledge bases, and intelligent data analysis tools that require sophisticated understanding of content relationships and semantic similarity.

LlamaIndex’s architecture is designed around the concept of data connectors, indexes, and query engines that work together to provide seamless access to information stored in various formats and locations. The framework supports a wide range of data sources including documents, databases, APIs, and web content, automatically handling the complexities of data preprocessing, embedding generation, and index optimization to ensure optimal performance for information retrieval tasks.
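To make the connector, index, and query engine roles concrete, here is a toy sketch in plain Python. It is not LlamaIndex's API: `ToyIndex`, `embed`, and `cosine` are hypothetical names, and the "embedding" is a simple word count rather than the learned dense vectors a real system would use.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Tuple


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts (real systems use dense vectors)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class ToyIndex:
    """Stores documents with their vectors and ranks them against a query."""

    def __init__(self) -> None:
        self._docs: Dict[str, Tuple[str, Counter]] = {}

    def add(self, doc_id: str, text: str) -> None:
        # Ingestion: the vector is computed once, when the document is added.
        self._docs[doc_id] = (text, embed(text))

    def query(self, question: str, top_k: int = 1) -> List[Tuple[str, float]]:
        # Retrieval: score every document and return the best matches.
        q = embed(question)
        scored = [(doc_id, cosine(q, vec)) for doc_id, (_, vec) in self._docs.items()]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:top_k]


index = ToyIndex()
index.add("billing", "Invoices are issued monthly and payment is due in 30 days.")
index.add("support", "Contact support by email for help with login problems.")
top = index.query("When is payment due?")
print(top[0][0])
```

In a full application, the retrieved text would then be passed to an LLM as context for answering the question.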


Architecture and Design Philosophy Comparison

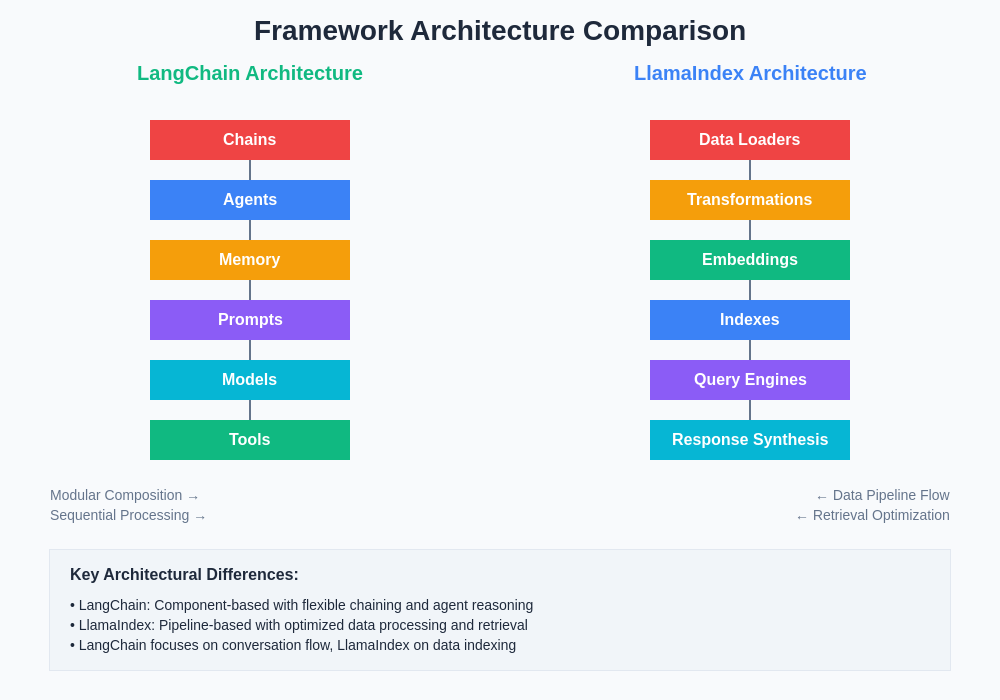

The architectural differences between LangChain and LlamaIndex reflect their distinct design philosophies and target use cases. LangChain’s architecture is built around composable components that can be chained together to create complex workflows, with a strong emphasis on flexibility and extensibility. The framework provides abstractions for prompts, models, memory, chains, and agents, each designed to work independently while integrating with the other components.

In contrast, LlamaIndex adopts a more specialized architecture focused on data processing and retrieval workflows. The framework’s design centers around data loaders, transformations, indexes, and query engines, with each component optimized for specific aspects of the data ingestion and retrieval pipeline. This specialization results in more efficient performance for data-centric applications but may require additional integration work for applications that need broader functionality.
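One typical transformation stage in such a pipeline is splitting documents into overlapping chunks before indexing. The sketch below shows the general technique in plain Python; `chunk_words` and its parameters are hypothetical names chosen for illustration, not part of either framework.

```python
from typing import List


def chunk_words(text: str, chunk_size: int, overlap: int) -> List[str]:
    """Split text into word windows of `chunk_size`, sharing `overlap` words."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")
    words = text.split()
    step = chunk_size - overlap  # how far the window advances each time
    chunks: List[str] = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last window already reached the end of the text
    return chunks


chunks = chunk_words("a b c d e f g", chunk_size=3, overlap=1)
print(chunks)
```

The overlap keeps sentences that straddle a chunk boundary retrievable from at least one chunk, at the cost of storing some words twice.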

Both frameworks are modular and allow developers to customize and extend functionality, but the implementations differ significantly. LangChain’s modularity focuses on behavioral composition, where different components contribute different capabilities to the overall application workflow. LlamaIndex’s modularity is more data-pipeline oriented, with components specializing in different stages of the data processing and retrieval workflow.

The architectural foundations of these frameworks reveal fundamental differences in their approach to LLM application development, with each framework optimizing for different aspects of the development and deployment pipeline.

Development Experience and Learning Curve

The development experience provided by each framework varies considerably based on the developer’s background and project requirements. LangChain offers a more general-purpose development experience with extensive documentation, numerous examples, and a large community ecosystem. The framework’s comprehensive approach means that developers need to understand multiple concepts, including chains, agents, memory management, and prompt engineering, which can result in a steeper learning curve for newcomers to LLM development.

LlamaIndex provides a more focused development experience centered around data ingestion and retrieval workflows. Developers working with LlamaIndex typically find the learning curve more manageable for data-centric applications, as the framework’s concepts align closely with traditional data processing and search system development. However, expanding beyond the framework’s core data-focused capabilities may require additional learning and integration work.

Both frameworks provide extensive documentation and examples, but their community ecosystems differ in focus and activity levels. LangChain benefits from a larger, more diverse community with contributions spanning various application domains, while LlamaIndex’s community tends to focus more specifically on data retrieval and information extraction use cases.

The comprehensive feature analysis demonstrates how each framework excels in different areas, providing developers with clear guidance on which framework aligns best with their specific application requirements and technical priorities.

Performance and Scalability Considerations

Performance characteristics are a critical factor in framework selection, particularly for production deployments that must handle significant user loads and data volumes. LangChain’s performance profile varies considerably depending on the complexity of chains and the number of external integrations required. Simple applications with minimal chaining typically perform well, but complex workflows involving multiple API calls, extensive memory operations, and sophisticated agent reasoning can introduce latency and resource consumption challenges.

LlamaIndex generally provides more predictable performance characteristics for its target use cases, with optimizations specifically designed for data retrieval and indexing operations. The framework’s focus on efficient indexing and query processing results in consistent performance for information retrieval tasks, even when working with large data sets. However, extending LlamaIndex applications beyond core data retrieval functionality may introduce performance variability.

Scalability considerations also differ between the frameworks. LangChain’s scalability depends heavily on the specific components used and the complexity of the application workflow. Applications using simple chains with minimal state management scale more effectively than complex agent-based systems that require extensive context management and external integrations. LlamaIndex’s scalability is more predictable but primarily applicable to data processing and retrieval scenarios.
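The latency effect of multi-step workflows is simple arithmetic: steps that depend on each other run in sequence, so their latencies add. The sketch below illustrates this with hypothetical per-step latencies chosen only for illustration; they are not measurements of either framework.

```python
from typing import List


def sequential_latency(step_latencies_s: List[float]) -> float:
    """Dependent steps run one after another, so their latencies add up."""
    return sum(step_latencies_s)


def parallel_latency(step_latencies_s: List[float]) -> float:
    """Independent steps can run concurrently; the slowest one dominates."""
    return max(step_latencies_s) if step_latencies_s else 0.0


# Hypothetical latencies in seconds (placeholders, not benchmarks).
single_call = [1.0]
agent_loop = [1.0, 0.5, 1.0, 0.5, 1.0]  # model, tool, model, tool, model

print(sequential_latency(single_call))
print(sequential_latency(agent_loop))
print(parallel_latency([1.0, 0.5]))
```

This is why an agent that alternates between model calls and tool calls can feel several times slower than a single prompt-response exchange, even when each individual call is fast.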

Integration Capabilities and Ecosystem

The integration ecosystem surrounding each framework significantly affects its utility for different types of applications. LangChain offers extensive integration capabilities with a wide range of LLM providers, vector databases, external APIs, and third-party services. The framework’s plugin architecture makes it relatively straightforward to add support for new integrations, and the active community has contributed integrations for numerous specialized tools and services.

LlamaIndex provides strong integration capabilities specifically focused on data sources and storage systems. The framework includes native support for various document formats, databases, cloud storage services, and content management systems. While the range of integrations is more specialized than LangChain’s broader ecosystem, LlamaIndex’s data source integrations are typically more comprehensive and optimized for the specific requirements of data ingestion and indexing workflows.

Both frameworks support integration with popular vector databases, but their approaches differ. LangChain treats vector databases as one component among many in a broader application architecture, while LlamaIndex integrates vector databases more deeply into its indexing and retrieval workflows, often providing better optimization for specific database configurations.


Use Case Suitability and Application Domains

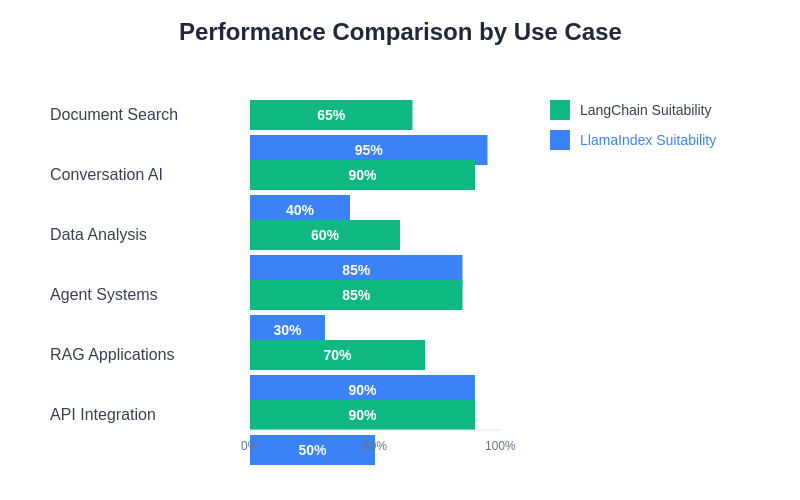

The suitability of each framework for different use cases is one of the most important considerations for developers and organizations evaluating their options. LangChain excels in scenarios that require complex conversation management, multi-step reasoning, external tool integration, and sophisticated agent-based behaviors. Applications such as customer service chatbots, virtual assistants, automated workflow systems, and complex question-answering systems that require contextual understanding and multi-turn conversations benefit significantly from LangChain’s comprehensive approach.
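Agent-based tool selection can be pictured as a dispatch step: something decides which tool fits the input, and the chosen tool is then run. In the sketch below, a simple rule stands in for the model's decision. All names (`choose_tool`, `run_agent`, `TOOLS`) are hypothetical and this is not LangChain's agent API.

```python
import re
from typing import Callable, Dict

Tool = Callable[[str], str]


def calculator(expression: str) -> str:
    """Toy tool: add whitespace-separated integers."""
    return str(sum(int(part) for part in expression.split()))


def echo(text: str) -> str:
    """Toy fallback tool: return the input unchanged."""
    return text


TOOLS: Dict[str, Tool] = {"calculator": calculator, "echo": echo}


def choose_tool(user_input: str) -> str:
    """Stand-in for the model's decision: a real agent would ask an LLM here."""
    parts = user_input.split()
    if parts and all(re.fullmatch(r"-?\d+", part) for part in parts):
        return "calculator"
    return "echo"


def run_agent(user_input: str) -> str:
    """Select a tool for the input, then run it."""
    tool_name = choose_tool(user_input)
    return TOOLS[tool_name](user_input)


print(run_agent("2 3 5"))
print(run_agent("hello there"))
```

A production agent typically repeats this select-and-run step in a loop, feeding each tool result back to the model until it decides the task is complete.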

LlamaIndex demonstrates superior performance in applications focused on information retrieval, document analysis, knowledge base construction, and data-driven question answering. Organizations building internal knowledge management systems, document search applications, research assistance tools, and data analysis platforms typically find LlamaIndex’s specialized capabilities more aligned with their requirements.

The distinction becomes particularly important when considering hybrid applications that combine conversational interfaces with data retrieval capabilities. Such applications may benefit from using both frameworks in complementary roles, with LlamaIndex handling data processing and retrieval while LangChain manages conversation flow and user interaction.
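The division of labor in a hybrid design can be sketched as two parts behind a narrow interface: a retriever that returns relevant text, and a conversation manager that tracks history and assembles the prompt. The example below is plain Python under that assumption; `keyword_retriever` and `Conversation` are hypothetical names, and neither framework is imported.

```python
from typing import Callable, List, Tuple

# The only contract between the two sides: a query in, matching texts out.
Retriever = Callable[[str], List[str]]


def keyword_retriever(documents: List[str]) -> Retriever:
    """Retrieval side: return documents sharing at least one word with the query."""
    def retrieve(query: str) -> List[str]:
        words = set(query.lower().split())
        return [doc for doc in documents if words & set(doc.lower().split())]
    return retrieve


class Conversation:
    """Orchestration side: keeps turn history and builds the prompt for each turn."""

    def __init__(self, retriever: Retriever) -> None:
        self.retriever = retriever
        self.history: List[Tuple[str, str]] = []

    def build_prompt(self, user_message: str) -> str:
        context = "\n".join(self.retriever(user_message))
        past = "\n".join(f"{role}: {text}" for role, text in self.history)
        return f"Context:\n{context}\n\nHistory:\n{past}\n\nUser: {user_message}"

    def record(self, user_message: str, reply: str) -> None:
        self.history.append(("user", user_message))
        self.history.append(("assistant", reply))


docs = ["refunds take five days", "shipping is free over fifty dollars"]
chat = Conversation(keyword_retriever(docs))
prompt = chat.build_prompt("how long do refunds take")
chat.record("how long do refunds take", "About five days.")
print(prompt)
```

Keeping the interface this small means the retrieval side can be replaced, for example by a real index, without changing the conversation logic.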

The performance analysis across different use cases clearly illustrates the complementary strengths of both frameworks, helping developers understand where each framework delivers optimal results for specific application requirements.

Development Velocity and Time-to-Market

Development velocity considerations often influence framework selection, particularly in competitive markets where rapid deployment can provide significant advantages. LangChain’s comprehensive feature set can accelerate development for complex applications by providing pre-built components for common LLM application patterns. However, the framework’s extensive capabilities can also introduce complexity that slows development for simple applications that don’t require its full feature set.

LlamaIndex typically enables faster development for data-centric applications by providing specialized tools and abstractions that align closely with common information retrieval patterns. Developers working on document search, knowledge base, or data analysis applications often achieve faster time-to-market using LlamaIndex’s focused approach compared to building similar functionality with more general-purpose frameworks.

The learning curve associated with each framework also affects development velocity. Teams with existing experience in data processing and information retrieval may achieve faster results with LlamaIndex, while teams with broader software development backgrounds might find LangChain’s general-purpose approach more familiar and accessible.

Community Support and Ecosystem Maturity

Community support and ecosystem maturity play crucial roles in the long-term viability of framework-based development projects. LangChain benefits from a large, active community with extensive contributions spanning documentation, examples, tutorials, and third-party integrations. The framework’s popularity has attracted significant corporate backing and investment, resulting in rapid feature development and comprehensive support resources.

LlamaIndex maintains a smaller but highly focused community centered around data retrieval and information extraction use cases. While the community is smaller than LangChain’s, it tends to be deeply specialized in the framework’s core domain, providing high-quality contributions and support for data-centric applications. The framework has also received significant corporate support and continues to evolve rapidly in response to community needs.

Both communities are active in addressing issues, providing support, and contributing new features, but their focus areas differ. LangChain’s community contributions span a broader range of application domains, while LlamaIndex’s community concentrates more specifically on advancing data processing and retrieval capabilities.

The comprehensive feature comparison reveals the distinct strengths and focus areas of each framework, helping developers make informed decisions based on their specific application requirements and development priorities.

Cost Considerations and Resource Requirements

Cost considerations encompass both development costs and operational expenses associated with each framework. LangChain applications may incur higher operational costs because complex chains can require multiple LLM API calls, extensive memory management, and integration with various external services. However, the framework’s efficiency in building complex applications can reduce development costs for sophisticated use cases.
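The operational cost of a multi-call chain can be estimated with a back-of-the-envelope formula: cost per request is the number of model calls times the token cost of each call. The sketch below uses placeholder prices and token counts that are assumptions for illustration only, not the pricing of any real provider.

```python
def cost_per_request(model_calls: int,
                     input_tokens_per_call: int,
                     output_tokens_per_call: int,
                     usd_per_1k_input: float,
                     usd_per_1k_output: float) -> float:
    """Estimated USD cost of one user request that triggers `model_calls` LLM calls."""
    per_call = ((input_tokens_per_call / 1000) * usd_per_1k_input
                + (output_tokens_per_call / 1000) * usd_per_1k_output)
    return model_calls * per_call


# Hypothetical placeholder prices; substitute your provider's actual rates.
USD_PER_1K_INPUT = 0.001
USD_PER_1K_OUTPUT = 0.002

single_call = cost_per_request(1, 2000, 500, USD_PER_1K_INPUT, USD_PER_1K_OUTPUT)
five_step_chain = cost_per_request(5, 2000, 500, USD_PER_1K_INPUT, USD_PER_1K_OUTPUT)

print(f"single call:     ${single_call:.4f}")
print(f"five-step chain: ${five_step_chain:.4f}")
```

Under these assumptions cost scales linearly with the number of calls, so reducing the number of steps per request is often the most direct lever on operating expense.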

LlamaIndex applications typically have more predictable cost profiles, with expenses primarily related to data processing, indexing, and query operations. The framework’s optimization for data retrieval tasks often results in more efficient resource utilization for information-focused applications, potentially reducing operational costs compared to less specialized alternatives.

Resource requirements also differ between the frameworks. LangChain applications may require more computational resources for complex agent-based reasoning and extensive context management, while LlamaIndex applications typically require more storage and indexing resources for data processing and retrieval operations.

Security and Enterprise Considerations

Security and enterprise readiness are critical factors for production deployments, particularly in regulated industries or organizations handling sensitive data. Both frameworks provide security features and best practices, but their approaches reflect their different architectural focuses. LangChain’s security considerations span conversation management, external integrations, and agent behaviors, requiring comprehensive security planning across multiple components.

For LlamaIndex applications, security planning centers primarily on data handling, access control, and retrieval operations: what is ingested, who may query each index, and whether a manipulated query could surface sensitive information that the user is not authorized to see.
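One common way to limit unauthorized access in a retrieval system is to filter documents by permission before any matching happens, so that restricted text never reaches the ranking step or the model. The following is a generic sketch of that pattern in plain Python; `Doc`, `authorized`, and `retrieve` are hypothetical names and do not describe a built-in feature of either framework.

```python
from dataclasses import dataclass
from typing import FrozenSet, List


@dataclass(frozen=True)
class Doc:
    text: str
    allowed_groups: FrozenSet[str]  # groups permitted to read this document


def authorized(docs: List[Doc], user_groups: FrozenSet[str]) -> List[Doc]:
    """Keep only documents the user may read."""
    return [doc for doc in docs if doc.allowed_groups & user_groups]


def retrieve(docs: List[Doc], query: str, user_groups: FrozenSet[str]) -> List[str]:
    """Filter by permission first, then match on shared words."""
    query_words = set(query.lower().split())
    return [doc.text for doc in authorized(docs, user_groups)
            if query_words & set(doc.text.lower().split())]


corpus = [
    Doc("salary bands for engineering", frozenset({"hr"})),
    Doc("engineering onboarding guide", frozenset({"hr", "engineering"})),
]

as_engineer = retrieve(corpus, "engineering", frozenset({"engineering"}))
as_hr = retrieve(corpus, "engineering", frozenset({"hr"}))
print(as_engineer)
print(as_hr)
```

Filtering before retrieval, rather than after generation, means a cleverly worded query cannot pull restricted content into the prompt in the first place.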

Enterprise adoption considerations include factors such as deployment flexibility, monitoring capabilities, compliance support, and integration with existing enterprise infrastructure. Both frameworks provide enterprise-friendly features, but their suitability for specific enterprise environments depends on existing technology stacks and operational requirements.

Future Development and Innovation Trajectories

The future development trajectories of both frameworks reflect their distinct focuses and the evolving needs of the LLM application development community. LangChain’s roadmap emphasizes expanding integration capabilities, improving agent reasoning systems, and enhancing support for multi-modal applications. The framework continues to evolve toward supporting increasingly sophisticated AI application patterns while maintaining its comprehensive, flexible approach.

LlamaIndex’s development focus centers on advancing data processing capabilities, improving indexing efficiency, and expanding support for diverse data sources and formats. The framework’s innovation trajectory emphasizes making data-centric LLM applications more powerful and efficient while maintaining its specialized focus on information retrieval and knowledge management use cases.

Both frameworks are actively incorporating new AI research developments, supporting emerging LLMs, and adapting to changing best practices in AI application development. Their different focuses make it likely that they will continue to serve complementary roles in the broader LLM application ecosystem.

Making the Framework Selection Decision

The decision between LangChain and LlamaIndex should be based on careful evaluation of project requirements, team capabilities, and long-term application goals. Projects requiring sophisticated conversation management, complex reasoning workflows, and extensive external integrations typically benefit from LangChain’s comprehensive approach. Applications focused on data retrieval, document analysis, and information extraction generally achieve better results with LlamaIndex’s specialized capabilities.

Hybrid approaches that utilize both frameworks in complementary roles represent another viable strategy, particularly for applications that require both sophisticated conversation management and efficient data retrieval. Such implementations can leverage the strengths of both frameworks while mitigating their individual limitations through careful architectural planning and integration design.

The evaluation process should consider factors including development team expertise, performance requirements, scalability needs, integration requirements, and long-term maintenance considerations. Organizations should also evaluate the frameworks through proof-of-concept implementations that test critical application requirements and validate architectural assumptions before committing to a particular approach.
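One lightweight way to structure such an evaluation is a weighted scoring matrix. The sketch below is only an illustration of the arithmetic: the weights and the 1-to-5 scores are placeholders, not an assessment of either framework, and the candidate names are deliberately generic. Teams would fill in their own numbers after running proof-of-concept tests.

```python
from typing import Dict


def weighted_score(scores: Dict[str, int], weights: Dict[str, int]) -> float:
    """Weighted average of per-criterion scores; every weighted criterion must be scored."""
    total_weight = sum(weights.values())
    return sum(scores[criterion] * weight
               for criterion, weight in weights.items()) / total_weight


# Placeholder weights reflecting how much each factor matters to a given team.
weights = {
    "team_expertise": 2,
    "performance": 3,
    "scalability": 2,
    "integrations": 2,
    "maintenance": 1,
}

# Placeholder scores (1 = poor fit, 5 = strong fit) from a hypothetical evaluation.
candidate_a = {"team_expertise": 4, "performance": 3, "scalability": 3,
               "integrations": 5, "maintenance": 3}
candidate_b = {"team_expertise": 3, "performance": 5, "scalability": 4,
               "integrations": 3, "maintenance": 4}

score_a = weighted_score(candidate_a, weights)
score_b = weighted_score(candidate_b, weights)
print(f"candidate A: {score_a:.2f}")
print(f"candidate B: {score_b:.2f}")
```

The result is only as good as the scores that go into it, so the matrix works best as a way to record and compare proof-of-concept findings rather than as a substitute for them.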

The landscape of LLM application development continues to evolve rapidly, with both frameworks adapting to new requirements and incorporating emerging best practices. The choice between LangChain and LlamaIndex is not just a technical decision but a strategic commitment to a particular approach to AI application development that will influence project outcomes for years to come.

Disclaimer

This article is for informational purposes only and does not constitute professional advice. The frameworks discussed are evolving rapidly, and specific features, capabilities, and performance characteristics may change over time. Readers should conduct thorough evaluation and testing based on their specific requirements before making framework selection decisions. The effectiveness and suitability of each framework may vary significantly depending on individual use cases, technical requirements, and implementation approaches.