Large Language Models have revolutionized artificial intelligence by demonstrating remarkable capabilities across diverse tasks, from natural language understanding to complex reasoning and code generation. However, while pre-trained models like GPT-4, Claude, and other foundation models excel at general-purpose tasks, they often require specialized adaptation to perform optimally in specific domains or applications. Fine-tuning represents the critical bridge between general AI capabilities and specialized performance, enabling organizations to harness the power of large-scale language models while tailoring them to their unique requirements and constraints.

The process of fine-tuning transforms a general-purpose language model into a specialized tool that can understand domain-specific terminology, follow particular formatting conventions, exhibit desired behavioral patterns, and integrate seamlessly with existing workflows. This transformation process has become increasingly important as organizations across industries recognize the potential of AI-powered solutions while demanding performance that meets their specific operational needs and quality standards.

Understanding the Fundamentals of Fine-Tuning

Fine-tuning represents a specialized form of transfer learning where a pre-trained language model undergoes additional training on a smaller, domain-specific dataset to adapt its behavior for particular use cases. This process leverages the vast knowledge and linguistic understanding that the model has already acquired during its initial training phase while introducing new patterns, vocabularies, and response styles that align with specific requirements. The fundamental principle underlying fine-tuning is that the model retains its general language capabilities while developing enhanced performance in targeted areas.

The mathematical foundation of fine-tuning is continued gradient-descent optimization of the model's parameters, with the learning rate, regularization, and data selection chosen to limit catastrophic forgetting of previously learned knowledge. Striking this balance lets the model keep its broad capabilities while it absorbs the specialized knowledge and behaviors that make it more effective for a specific application.
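
To make the update rule concrete, here is a minimal sketch in plain Python. It assumes a tiny linear model, full-batch gradient descent on mean squared error, and an L2-SP-style penalty that pulls the weights back toward their pre-trained values. That penalty is one of several regularizers used against forgetting, not a method the text prescribes, and the function names, data, and hyperparameters are illustrative.

```python
def predict(w, x):
    """Linear model: dot product of weights and features."""
    return sum(wi * xi for wi, xi in zip(w, x))


def fine_tune(w_pre, data, lr=0.05, lam=0.1, steps=200):
    """Full-batch gradient descent on mean squared error plus an
    L2-SP-style penalty lam * ||w - w_pre||^2 that discourages the
    weights from drifting far from the pre-trained weights w_pre."""
    w = list(w_pre)  # start from the pre-trained weights
    n = len(data)
    for _ in range(steps):
        grad = [0.0] * len(w)
        for x, y in data:
            err = predict(w, x) - y
            for j, xj in enumerate(x):
                grad[j] += 2.0 * err * xj / n  # gradient of the mean squared error
        for j in range(len(w)):
            grad[j] += 2.0 * lam * (w[j] - w_pre[j])  # gradient of the penalty
            w[j] -= lr * grad[j]
    return w
```

With `lam=0.0` the weights move wherever the new data pushes them; a large `lam` keeps them close to `w_pre`, trading fit on the new domain for retention of the original behavior.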

The effectiveness of fine-tuning depends heavily on the quality and relevance of the training data, the appropriateness of the base model architecture for the target task, and the careful calibration of hyperparameters that govern the learning process. Organizations must carefully consider these factors when embarking on fine-tuning projects, as the success of the resulting specialized model depends critically on the thoughtful execution of each step in the process.

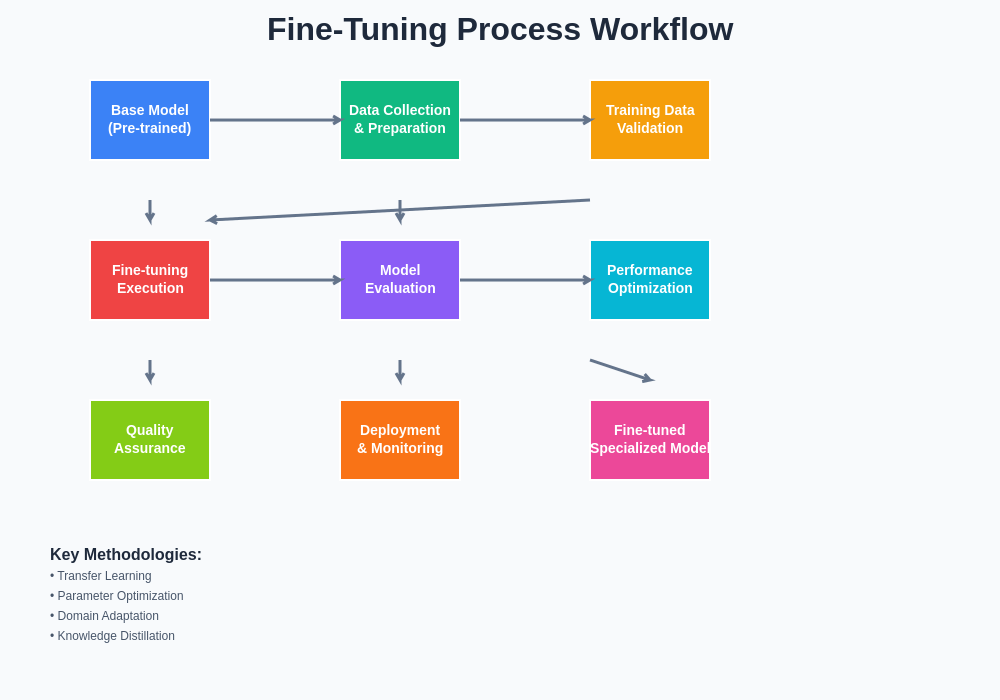

The workflow diagram above breaks fine-tuning into interconnected stages, from initial data collection through final deployment and monitoring, and each stage calls for careful, deliberate decisions.

Identifying Optimal Use Cases for Fine-Tuning

The decision to pursue fine-tuning should be based on a careful analysis of specific requirements that cannot be adequately addressed through prompt engineering or general-purpose model usage. Optimal use cases typically involve scenarios where domain-specific knowledge, specialized terminology, particular output formats, or unique behavioral patterns are essential for success. Medical diagnosis systems, legal document analysis, financial risk assessment, and technical documentation generation represent prime examples of applications where fine-tuning can provide substantial benefits over general-purpose models.

Organizations should evaluate their use cases based on several key criteria, including the availability of high-quality training data, the complexity of domain-specific requirements, the frequency of model usage, and the criticality of performance improvements. Use cases that involve highly specialized vocabularies, complex regulatory requirements, or unique formatting standards often benefit significantly from fine-tuning approaches that can embed domain expertise directly into the model’s parameters.

The strategic approach to identifying and prioritizing fine-tuning opportunities requires careful consideration of both technical feasibility and business impact, ensuring that investments in model customization deliver meaningful returns.

The consideration of use case suitability also involves understanding the limitations and potential risks associated with fine-tuning, including the possibility of reduced performance on general tasks, increased computational requirements, and the ongoing maintenance needs of specialized models. Organizations must weigh these factors against the potential benefits to make informed decisions about when and how to pursue fine-tuning initiatives.

Data Collection and Preparation Strategies

The foundation of successful fine-tuning lies in the systematic collection and preparation of high-quality training data that accurately represents the desired model behavior and covers the full scope of the target application domain. Data collection strategies must balance comprehensiveness with quality, ensuring that the training dataset includes diverse examples while maintaining consistency in format, style, and accuracy. The process typically begins with identifying existing data sources within the organization, including documents, transcripts, previous outputs, and expert-generated content that exemplifies the desired model behavior.

Data preparation involves multiple stages of cleaning, formatting, and augmentation to create training examples that effectively guide the model toward desired behaviors. This process includes removing inconsistencies, standardizing formats, correcting errors, and ensuring that the data accurately reflects the knowledge and style that should be embedded in the fine-tuned model. Advanced preparation techniques may involve synthetic data generation, data augmentation through paraphrasing and variation, and careful curation to ensure balanced representation across different aspects of the target domain.
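
A minimal sketch of the cleaning and formatting steps, assuming raw data arrives as (prompt, response) string pairs. It standardizes whitespace, drops incomplete pairs, removes duplicates that differ only in case or spacing, and emits records in the chat-style `messages` layout that several fine-tuning services accept as JSON Lines. The function names are hypothetical, and the exact schema should be checked against whatever training stack you use.

```python
import json
import re


def normalize(text):
    """Collapse runs of whitespace and trim the ends."""
    return re.sub(r"\s+", " ", text).strip()


def prepare_examples(raw_pairs):
    """Turn (prompt, response) pairs into chat-style training records.

    Drops pairs with an empty side and removes duplicates that differ
    only in whitespace or letter case. Returns a list of dicts.
    """
    seen = set()
    records = []
    for prompt, response in raw_pairs:
        prompt, response = normalize(prompt), normalize(response)
        if not prompt or not response:
            continue  # incomplete example
        key = (prompt.lower(), response.lower())
        if key in seen:
            continue  # duplicate after normalization
        seen.add(key)
        records.append({"messages": [
            {"role": "user", "content": prompt},
            {"role": "assistant", "content": response},
        ]})
    return records


def to_jsonl(records):
    """Serialize records as JSON Lines, one training example per line."""
    return "\n".join(json.dumps(r, ensure_ascii=False) for r in records)
```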

The quality of training data directly affects the outcome of fine-tuning, which makes data validation and quality assurance critical parts of the preparation workflow. Organizations should set explicit standards for data accuracy, relevance, and consistency, and run systematic reviews that catch problems before they reach training. Investment in careful data preparation usually pays off in better model performance and fewer wasted training runs.
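
Quality checks like these can be automated before any training run. The sketch below assumes records shaped like a chat-style `messages` list (a hypothetical schema; adjust it to yours) and rejects unknown roles, empty content, examples that do not end with the target response, and examples over an illustrative length budget. It keeps the reasons so reviewers can fix the source data rather than silently dropping it.

```python
ALLOWED_ROLES = {"system", "user", "assistant"}


def validate_record(record, max_chars=8000):
    """Return a list of human-readable problems; an empty list means the
    record passed. max_chars is an illustrative length budget."""
    messages = record.get("messages")
    if not isinstance(messages, list) or not messages:
        return ["missing or empty 'messages' list"]
    problems = []
    total = 0
    for i, msg in enumerate(messages):
        role, content = msg.get("role"), msg.get("content")
        if role not in ALLOWED_ROLES:
            problems.append(f"message {i}: unknown role {role!r}")
        if not isinstance(content, str) or not content.strip():
            problems.append(f"message {i}: empty or non-string content")
        else:
            total += len(content)
    if messages[-1].get("role") != "assistant":
        problems.append("last message must be the assistant's target response")
    if total > max_chars:
        problems.append(f"{total} characters exceeds budget of {max_chars}")
    return problems


def split_valid(records, max_chars=8000):
    """Partition records into (valid, rejected-with-reasons)."""
    valid, rejected = [], []
    for r in records:
        issues = validate_record(r, max_chars)
        if issues:
            rejected.append((r, issues))
        else:
            valid.append(r)
    return valid, rejected
```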

Technical Implementation Approaches

The technical implementation of fine-tuning involves selecting methodologies, frameworks, and computational resources that match the requirements of the use case and the characteristics of the available training data. Modern approaches range from full-parameter fine-tuning, where all model weights are updated, to parameter-efficient techniques such as Low-Rank Adaptation (LoRA) and prefix tuning, which train only a small number of parameters and often achieve comparable results with far lower memory and compute requirements.
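
The core LoRA idea can be sketched without a deep-learning framework. The pre-trained weight matrix W stays frozen, and the layer computes W x + (alpha / r) · B(A x), where A (r × k) and B (d × r) form a trainable low-rank update. B is initialized to zero, so the adapted layer starts out identical to the base layer. This is a forward-pass-only illustration with hypothetical names; training code is omitted, and real projects would typically use a library such as Hugging Face PEFT.

```python
import random


def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


class LoRALinear:
    """Frozen weight W (d x k) plus a trainable low-rank update B @ A.

    A is r x k with small random values, B is d x r with zeros, so the
    layer initially behaves exactly like the frozen base layer.
    """

    def __init__(self, W, r=2, alpha=4.0, seed=0):
        rng = random.Random(seed)
        self.W = W  # frozen pre-trained weights, never updated
        d, k = len(W), len(W[0])
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(k)] for _ in range(r)]
        self.B = [[0.0] * r for _ in range(d)]
        self.scale = alpha / r

    def forward(self, x):
        base = matvec(self.W, x)
        update = matvec(self.B, matvec(self.A, x))  # B (A x): never forms a d x k matrix
        return [b + self.scale * u for b, u in zip(base, update)]
```

Computing `B (A x)` rather than materializing `B @ A` keeps the extra work proportional to r(d + k) instead of d × k.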

Parameter-efficient fine-tuning techniques have gained particular prominence due to their ability to achieve specialized performance while minimizing resource requirements and training time. These approaches work by adding small numbers of trainable parameters to the base model while keeping the majority of pre-trained weights frozen, resulting in efficient adaptation that preserves the model’s general capabilities while introducing domain-specific behaviors. The choice of technique depends on factors including available computational resources, the complexity of the adaptation requirements, and the desired balance between specialization and generalization.
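
The claim that only a small number of parameters are trained is easy to quantify. For a d × k weight matrix, full fine-tuning updates d·k values, while a rank-r LoRA adapter trains r·(d + k). The sketch below assumes adapters on a single 4096 × 4096 projection with r = 8; both are illustrative choices.

```python
def lora_param_counts(shapes, r):
    """Compare trainable parameters for full fine-tuning vs. LoRA.

    shapes: list of (d, k) weight-matrix shapes that receive adapters.
    Returns (full, lora, fraction) where fraction = lora / full.
    """
    full = sum(d * k for d, k in shapes)
    lora = sum(r * (d + k) for d, k in shapes)
    return full, lora, lora / full


full, lora, frac = lora_param_counts([(4096, 4096)], r=8)
print(f"full={full:,} lora={lora:,} fraction={frac:.2%}")
```

Here the adapter trains 65,536 parameters instead of 16,777,216, about 0.39% of that matrix; adding adapters to more matrices of the same shape scales both counts together and leaves the fraction unchanged.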

Implementation considerations also include the selection of appropriate loss functions, optimization algorithms, and regularization techniques that guide the learning process toward desired outcomes while preventing overfitting and catastrophic forgetting. Advanced implementations may incorporate techniques like gradient accumulation, mixed-precision training, and distributed computing to optimize resource utilization and reduce training time while maintaining training stability and model quality.
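
Gradient accumulation emulates a large batch on limited memory by summing gradients over several micro-batches before each optimizer step. The sketch below uses a hypothetical one-parameter model to check that weighting each micro-batch gradient by its share of the batch reproduces the full-batch gradient (up to floating-point rounding), even when the last micro-batch is smaller. Dividing every micro-batch loss by the number of accumulation steps, a common shortcut, gives the same result only when all micro-batches are the same size.

```python
def mse_grad(w, batch):
    """Gradient of mean squared error for a 1-D model y_hat = w * x."""
    return sum(2.0 * (w * x - y) * x for x, y in batch) / len(batch)


def accumulated_grad(w, batch, micro_batch_size):
    """Accumulate gradients over micro-batches, weighting each by its
    share of the full batch, then return the total for one update."""
    total = 0.0
    for start in range(0, len(batch), micro_batch_size):
        micro = batch[start:start + micro_batch_size]
        total += mse_grad(w, micro) * len(micro) / len(batch)
    return total
```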

Evaluation and Performance Measurement

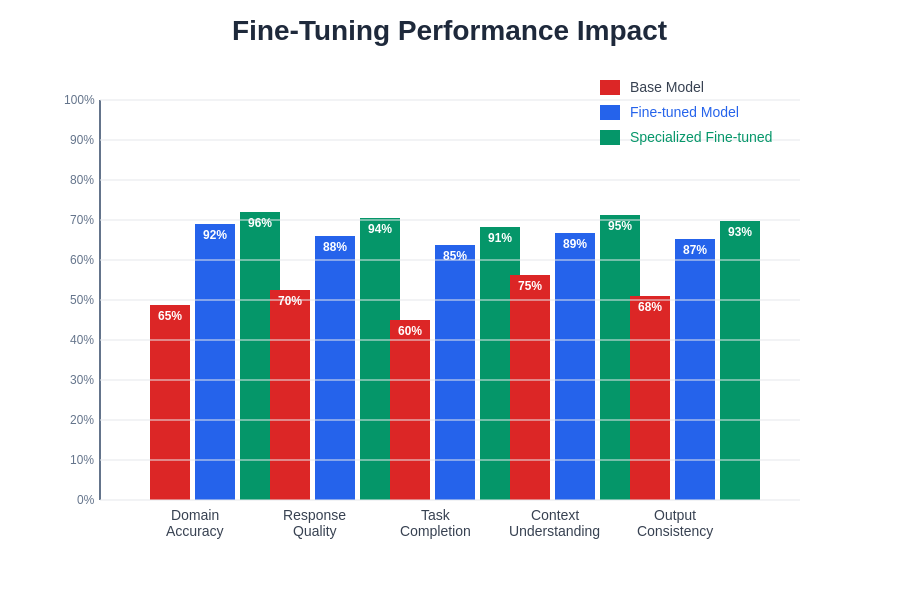

The evaluation of fine-tuned models requires comprehensive assessment methodologies that measure both specialized performance improvements and the retention of general capabilities. Traditional metrics like perplexity and BLEU scores provide quantitative assessments of model performance, but domain-specific applications often require custom evaluation frameworks that capture the nuances of particular use cases and align with business objectives. Effective evaluation strategies combine automated metrics with human evaluation protocols that assess factors like accuracy, relevance, style consistency, and adherence to domain-specific requirements.
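
Perplexity is the exponential of the average negative log-likelihood a model assigns to held-out text. A minimal sketch, assuming you already have per-token natural-log probabilities from the model being evaluated (how you obtain them depends on your framework):

```python
import math


def perplexity(token_logprobs):
    """Perplexity from per-token natural-log probabilities:
    the exponential of the average negative log-likelihood."""
    if not token_logprobs:
        raise ValueError("need at least one token")
    nll = -sum(token_logprobs) / len(token_logprobs)
    return math.exp(nll)
```

Lower is better. Perplexities are only comparable between models that share a tokenizer and are scored on the same text.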

Performance measurement should encompass multiple dimensions, including task-specific accuracy, response quality, computational efficiency, and robustness across different input variations. Comparative evaluation against baseline models, including the original pre-trained model and competing approaches, provides essential context for understanding the value and effectiveness of the fine-tuning process. Long-term evaluation protocols should also monitor model performance over time to identify potential degradation or drift that may require additional training or model updates.
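
For comparisons against a baseline, a simple summary is the win rate from side-by-side judgments: raters (human or rubric-based) see the baseline and fine-tuned outputs for the same prompt and record a win, loss, or tie for the fine-tuned model. The sketch counts ties as half a win, which is one common convention rather than a standard; a result of 0.5 means no overall preference.

```python
from collections import Counter


def win_rate(judgments):
    """Win rate of the fine-tuned model over a baseline from pairwise
    judgments labeled "win", "loss", or "tie"; ties count as half a win."""
    counts = Counter(judgments)
    unknown = set(counts) - {"win", "loss", "tie"}
    if unknown:
        raise ValueError(f"unexpected labels: {sorted(unknown)}")
    n = sum(counts.values())
    if n == 0:
        raise ValueError("no judgments")
    return (counts["win"] + 0.5 * counts["tie"]) / n
```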

The development of robust evaluation frameworks requires careful consideration of both quantitative metrics and qualitative assessments that capture the full spectrum of model capabilities and limitations.

The evaluation process should also include analysis of failure modes, edge cases, and potential biases that may emerge during fine-tuning. This comprehensive assessment approach enables organizations to understand not only where their models excel but also where additional improvement may be needed, informing iterative refinement strategies that enhance overall model effectiveness.
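
Failure-mode analysis often starts by tagging evaluation examples with the slice they belong to (routine cases, specific edge cases, rare terminology) and comparing accuracy per slice, so weak spots are not hidden inside an overall average. A minimal sketch, with hypothetical slice names:

```python
from collections import defaultdict


def slice_accuracy(results):
    """Accuracy per slice. results: iterable of (slice_tag, is_correct)."""
    totals = defaultdict(int)
    correct = defaultdict(int)
    for tag, ok in results:
        totals[tag] += 1
        correct[tag] += int(ok)
    return {tag: correct[tag] / totals[tag] for tag in totals}


def weakest_slices(results, k=2):
    """Return the k slices with the lowest accuracy, worst first
    (ties broken alphabetically for a stable order)."""
    acc = slice_accuracy(results)
    return sorted(acc, key=lambda tag: (acc[tag], tag))[:k]
```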

The performance comparison in this section contrasts base models, fine-tuned models, and specialized fine-tuned implementations across domain accuracy, response quality, and task completion rate, with the fine-tuned variants showing substantial gains on each.

Domain-Specific Applications and Case Studies

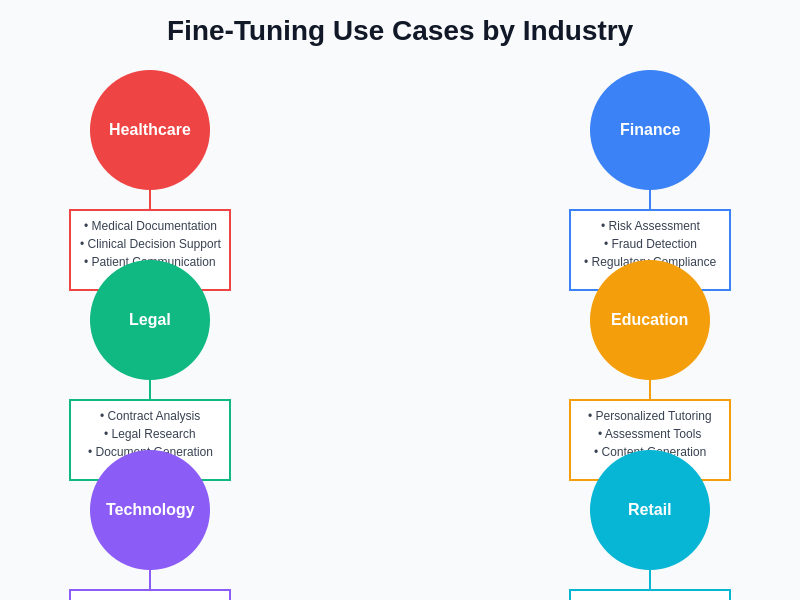

The application of fine-tuned language models across various domains demonstrates the versatility and effectiveness of specialized AI systems in addressing complex, industry-specific challenges. In healthcare, fine-tuned models have been successfully deployed for medical documentation, clinical decision support, and patient communication, where domain-specific terminology, regulatory compliance, and accuracy requirements demand specialized model behavior. These applications showcase how fine-tuning can embed medical knowledge and appropriate response patterns while maintaining the general language capabilities necessary for effective communication.

Financial services represent another domain where fine-tuning has delivered significant value through applications in risk assessment, regulatory compliance, fraud detection, and customer service automation. The specialized vocabulary, regulatory requirements, and complex analytical needs of financial applications benefit substantially from models that have been adapted to understand industry-specific concepts while generating responses that meet strict accuracy and compliance standards. These implementations demonstrate how fine-tuning can transform general-purpose AI capabilities into specialized tools that enhance operational efficiency and decision-making quality.

Legal technology applications have leveraged fine-tuned models for contract analysis, legal research, document generation, and compliance monitoring, where the precision of language interpretation and the accuracy of legal reasoning are paramount. The success of these applications depends on the model’s ability to understand complex legal concepts, interpret regulatory language, and generate outputs that meet professional standards while maintaining consistency with established legal principles and practices.

Educational technology represents an emerging domain where fine-tuned models are being deployed to create personalized learning experiences, automated assessment tools, and intelligent tutoring systems. These applications require models that can adapt their communication style, difficulty level, and instructional approach based on individual learner needs while maintaining pedagogical effectiveness and alignment with curriculum standards.

Across these industries, each domain brings its own terminology, compliance constraints, and output requirements, which is what makes domain-specific adaptation and customization worthwhile.

Advanced Fine-Tuning Techniques and Methodologies

The evolution of fine-tuning methodologies has produced sophisticated techniques that optimize performance while addressing practical constraints related to computational resources, training time, and model maintenance. Advanced approaches like instruction tuning focus on teaching models to follow specific types of instructions or exhibit particular behavioral patterns, enabling more precise control over model outputs and better alignment with user expectations. These techniques involve careful curation of instruction-following datasets and specialized training procedures that enhance the model’s ability to interpret and respond to complex directives.
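
Instruction-tuning pipelines commonly compute the loss only on the response, so the model learns to answer instructions rather than to reproduce them. The sketch below builds one training example with the instruction positions masked by -100, the default `ignore_index` of PyTorch's `CrossEntropyLoss`. The word-level vocabulary is a hypothetical stand-in; real pipelines use the base model's own tokenizer and prompt template, and masking the prompt is a common choice rather than the only one.

```python
IGNORE_INDEX = -100  # default ignore_index of PyTorch's CrossEntropyLoss


def build_example(instruction, response, vocab):
    """Tokenize an instruction/response pair and mask the instruction so
    the loss is computed only on response tokens.

    vocab is a hypothetical dict mapping whitespace-separated words to ids.
    """
    prompt_ids = [vocab[w] for w in instruction.split()]
    response_ids = [vocab[w] for w in response.split()]
    input_ids = prompt_ids + response_ids
    # Labels align with input_ids; masked positions contribute no loss.
    labels = [IGNORE_INDEX] * len(prompt_ids) + response_ids
    return input_ids, labels
```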

Multi-task fine-tuning represents another advanced approach that enables models to excel across multiple related tasks simultaneously, leveraging shared knowledge and reducing the need for separate specialized models. This methodology is particularly valuable for organizations with diverse but related use cases, enabling them to maintain a single specialized model that can handle multiple types of requests while maintaining high performance across all target applications.
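
When several tasks share one model, the training mix decides how much attention each task gets. One heuristic used in multi-task and multilingual training is temperature-scaled sampling, where a task with n_i examples is drawn with probability proportional to n_i^(1/T). The sketch below assumes hypothetical task names and sizes.

```python
def mixing_weights(sizes, temperature=2.0):
    """Sampling proportions for multi-task training.

    sizes maps task name -> number of examples. Each task gets weight
    proportional to size ** (1 / temperature): temperature=1 samples in
    proportion to size; larger values move toward uniform sampling so
    small tasks are not drowned out.
    """
    scaled = {task: n ** (1.0 / temperature) for task, n in sizes.items()}
    total = sum(scaled.values())
    return {task: s / total for task, s in scaled.items()}


sizes = {"summaries": 9000, "qa": 900, "extraction": 100}
```

With T = 1 the 100-example extraction task gets only 1% of the samples; T = 2 raises its share to roughly 7%, at the cost of repeating its examples more often.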

Reinforcement Learning from Human Feedback (RLHF) has emerged as a powerful technique for aligning model behavior with human preferences and values. Rather than learning only from reference outputs, as supervised fine-tuning does, RLHF typically has humans rank alternative model responses, trains a reward model on those rankings, and then optimizes the language model against that reward with a reinforcement learning algorithm such as PPO. This methodology is particularly valuable for applications where the desired output characteristics are difficult to capture through traditional training data but can be effectively communicated through human evaluation and preference ranking.
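
The reward-modeling step of a typical RLHF pipeline can be sketched compactly: a reward model scores two responses to the same prompt, and training minimizes -log σ(r_chosen - r_rejected), a Bradley-Terry-style pairwise loss that rewards ranking the human-preferred response higher. The sketch shows only that loss on placeholder reward values; the reward model itself and the subsequent reinforcement learning stage are omitted.

```python
import math


def pairwise_preference_loss(reward_chosen, reward_rejected):
    """-log(sigmoid(r_chosen - r_rejected)), computed stably as
    softplus(-margin) = log(1 + exp(-margin))."""
    margin = reward_chosen - reward_rejected
    # Branch on the sign so exp() never receives a large positive argument.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


def mean_preference_loss(pairs):
    """Average loss over (reward_chosen, reward_rejected) pairs."""
    return sum(pairwise_preference_loss(c, r) for c, r in pairs) / len(pairs)
```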

Continual learning approaches address the challenge of maintaining and updating fine-tuned models over time as new data becomes available or requirements evolve. These techniques enable models to incorporate new knowledge while preserving previously learned capabilities, reducing the need for complete retraining and enabling more efficient model maintenance and improvement processes.
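
One widely used continual-learning tactic is rehearsal (replay): each update trains on new data mixed with a sample of earlier training examples, so the model keeps seeing what it previously learned. A minimal sketch, where the function name and the 20% replay ratio are illustrative assumptions to tune per project:

```python
import random


def replay_mix(new_examples, old_examples, replay_ratio=0.2, seed=0):
    """Mix new examples with a random sample of earlier training examples
    to limit forgetting. replay_ratio is the target fraction of replayed
    examples in the returned (shuffled) list."""
    if not 0.0 <= replay_ratio < 1.0:
        raise ValueError("replay_ratio must be in [0, 1)")
    rng = random.Random(seed)
    # Solve n_old / (n_new + n_old) = replay_ratio for n_old.
    n_old = round(len(new_examples) * replay_ratio / (1.0 - replay_ratio))
    n_old = min(n_old, len(old_examples))
    mixed = list(new_examples) + rng.sample(list(old_examples), n_old)
    rng.shuffle(mixed)
    return mixed
```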

Infrastructure and Resource Management

The successful implementation of fine-tuning initiatives requires careful planning and management of computational infrastructure, data storage, and operational resources that support both training and deployment phases. Fine-tuning, and full-parameter fine-tuning in particular, demands substantial computational resources during training, including high-performance GPUs, ample memory, and data pipelines efficient enough to feed large training datasets without bottlenecks that extend training time.
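
A rough memory estimate helps size hardware before a full fine-tuning run. A figure often used for mixed-precision training with the Adam optimizer is about 16 bytes per parameter (fp16 weights and gradients plus fp32 master weights and two Adam moment buffers), before counting activations. The sketch assumes that figure, a hypothetical 7-billion-parameter model, and state sharded evenly across GPUs (as in ZeRO-style or fully sharded data-parallel training); actual usage should be measured before committing to a configuration.

```python
import math


def full_finetune_memory_gb(n_params, bytes_per_param=16):
    """Rough memory (GB) for weights, gradients, and Adam state in
    mixed-precision full fine-tuning, excluding activations and buffers.

    16 bytes/param = fp16 weights (2) + fp16 grads (2)
                     + fp32 master weights (4) + Adam moments (4 + 4).
    """
    return n_params * bytes_per_param / 1e9


def gpus_needed(n_params, gpu_memory_gb, headroom=0.8):
    """Minimum GPU count if that state is sharded evenly across GPUs and
    only `headroom` of each GPU's memory is budgeted for it."""
    need = full_finetune_memory_gb(n_params)
    return math.ceil(need / (gpu_memory_gb * headroom))
```

Parameter-efficient methods such as LoRA change this picture substantially, since gradients and optimizer state are needed only for the small adapter parameters.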

Cloud computing platforms have become a common choice for fine-tuning projects, providing access to specialized hardware configurations, scalable storage, and managed services that reduce the operational complexity of model training and deployment. Selecting cloud resources means weighing cost, performance requirements, data security constraints, and integration with existing organizational systems and workflows.

Resource management strategies should also address the ongoing operational requirements of fine-tuned models, including inference infrastructure, model versioning, monitoring systems, and backup procedures that ensure reliable model availability and performance. The computational requirements for serving fine-tuned models can vary significantly based on model size, usage patterns, and performance requirements, necessitating careful capacity planning and optimization strategies that balance cost and performance objectives.
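
Capacity planning for inference can start from a simple calculation: divide expected peak traffic by what one replica sustains at a safe utilization level, and keep a floor for redundancy. In the sketch, all inputs are illustrative assumptions; per-replica throughput should come from load tests of the actual model, hardware, and prompt lengths.

```python
import math


def replicas_needed(peak_rps, per_replica_rps, target_utilization=0.7, min_replicas=2):
    """Serving replicas needed to absorb peak traffic while keeping each
    replica at or below target_utilization; min_replicas keeps redundancy."""
    if per_replica_rps <= 0 or not 0 < target_utilization <= 1:
        raise ValueError("invalid capacity parameters")
    needed = math.ceil(peak_rps / (per_replica_rps * target_utilization))
    return max(needed, min_replicas)
```

For example, 50 requests per second at 8 requests per second per replica and 70% target utilization gives 9 replicas.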

Quality Assurance and Monitoring

The deployment of fine-tuned models in production environments requires comprehensive quality assurance frameworks that monitor model performance, detect potential issues, and ensure consistent delivery of expected results. Quality assurance protocols should encompass both automated monitoring systems that track quantitative performance metrics and human evaluation processes that assess qualitative aspects of model outputs that may not be captured through automated metrics alone.

Continuous monitoring systems should track key performance indicators including response accuracy, latency, throughput, and user satisfaction metrics while implementing alerting mechanisms that notify administrators of potential performance degradation or anomalous behavior. Advanced monitoring approaches may incorporate statistical analysis techniques that identify subtle changes in model behavior over time, enabling proactive intervention before issues impact user experience or business operations.
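
A minimal sketch of degradation alerting, assuming each response receives a numeric quality score (for example, from an automated rubric or a user rating mapped to [0, 1]) and that a baseline was measured at deployment. The class name, window size, and tolerance are hypothetical; production systems would typically add statistical tests and route alerts to an on-call system.

```python
from collections import deque


class QualityMonitor:
    """Track a rolling mean of per-response quality scores and flag
    degradation relative to a baseline measured at deployment time."""

    def __init__(self, baseline, window=100, tolerance=0.05):
        self.baseline = baseline
        self.tolerance = tolerance
        self.scores = deque(maxlen=window)  # oldest scores drop off automatically

    def record(self, score):
        """Add a score; return True if an alert should fire."""
        self.scores.append(score)
        if len(self.scores) < self.scores.maxlen:
            return False  # wait for a full window before judging
        rolling = sum(self.scores) / len(self.scores)
        return rolling < self.baseline - self.tolerance
```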

The establishment of feedback loops that capture user interactions, expert evaluations, and performance data enables continuous improvement of fine-tuned models through iterative training updates and parameter adjustments. These systems should balance the need for model stability with the benefits of continuous improvement, implementing change management procedures that ensure updates enhance rather than compromise model performance.
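
Change management can be encoded as an explicit promotion gate that a retrained model must pass before replacing the current one. The sketch assumes both models were scored on the same evaluation suite with higher-is-better metrics; the function name, metric names, and the one-point regression tolerance are illustrative.

```python
def should_promote(candidate, current, primary, max_regression=0.01):
    """Decide whether a retrained model may replace the current one.

    candidate/current map metric name -> score (higher is better).
    Promote only if the primary metric improves and no tracked metric
    drops by more than max_regression (a missing metric counts as a drop).
    """
    if candidate[primary] <= current[primary]:
        return False, f"{primary} did not improve"
    for name, old in current.items():
        if candidate.get(name, float("-inf")) < old - max_regression:
            return False, f"{name} regressed"
    return True, "ok"
```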

Ethical Considerations and Responsible AI

The development and deployment of fine-tuned language models raises important ethical considerations related to bias, fairness, transparency, and accountability that organizations must address through comprehensive responsible AI frameworks. Fine-tuning processes can inadvertently amplify biases present in training data or introduce new biases through selective data curation, making bias detection and mitigation critical components of the development workflow. Organizations must implement systematic approaches to identifying and addressing potential biases while ensuring that fine-tuned models promote fairness and inclusivity across diverse user populations.
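
Bias checks often compare outcome rates across groups. The sketch assumes the fine-tuned model feeds a yes/no decision (such as flagging an application for approval) and computes each group's positive-outcome rate plus the ratio of the lowest rate to the highest. Group labels are hypothetical, and this is one metric among many rather than a complete fairness assessment.

```python
from collections import defaultdict


def selection_rate_ratio(decisions):
    """Positive-outcome rate per group and the ratio of the lowest to the
    highest rate (1.0 means parity).

    decisions: iterable of (group_label, got_positive_outcome).
    """
    totals, positives = defaultdict(int), defaultdict(int)
    for group, positive in decisions:
        totals[group] += 1
        positives[group] += int(positive)
    rates = {g: positives[g] / totals[g] for g in totals}
    highest = max(rates.values())
    ratio = min(rates.values()) / highest if highest > 0 else 1.0
    return rates, ratio
```

A ratio below 0.8 would be flagged under the "four-fifths" rule of thumb, which is one convention for triggering closer review, not a universal standard or proof of unfairness on its own.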

Transparency requirements necessitate clear documentation of fine-tuning processes, data sources, intended use cases, and known limitations that enable users and stakeholders to make informed decisions about model deployment and usage. This documentation should include information about training methodologies, evaluation results, potential failure modes, and recommended usage guidelines that promote responsible model deployment and minimize the risk of inappropriate or harmful applications.
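
The documentation items listed above can be captured in a structured record that is versioned alongside the model. A minimal sketch of such a model card, with illustrative field names (adapt them to your organization's template) and hypothetical placeholder values:

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ModelCard:
    """Minimal documentation record for a fine-tuned model."""
    model_name: str
    base_model: str
    training_method: str
    data_sources: list
    intended_uses: list
    known_limitations: list
    evaluation_results: dict = field(default_factory=dict)
    usage_guidelines: list = field(default_factory=list)

    def to_json(self):
        """Serialize the card for storage next to the model artifacts."""
        return json.dumps(asdict(self), indent=2)


# Hypothetical example values for illustration only.
card = ModelCard(
    model_name="contracts-assistant-v1",
    base_model="<base model id>",
    training_method="LoRA, rank 8",
    data_sources=["internal contract corpus"],
    intended_uses=["clause extraction"],
    known_limitations=["not evaluated on non-English contracts"],
)
print(card.to_json())
```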

Accountability frameworks must establish clear responsibility structures for fine-tuned model behavior, including governance processes for model updates, usage monitoring, and incident response procedures that address potential issues promptly and effectively. These frameworks should align with organizational values and regulatory requirements while promoting the beneficial use of AI technology in ways that support human welfare and societal benefit.

Future Directions and Emerging Trends

The field of language model fine-tuning continues to evolve rapidly, driven by advances in machine learning research, increases in computational capacity, and growing demand for specialized AI applications across diverse domains. Emerging trends include the development of more efficient fine-tuning techniques that reduce resource requirements while maintaining performance improvements, the integration of multimodal capabilities that enable fine-tuning for applications involving text, images, and other data types, and the advancement of automated fine-tuning systems that can optimize model customization with minimal human intervention.

Research into data-efficient fine-tuning, which aims to specialize models from only a handful of examples, promises to reduce the data requirements for model specialization, enabling organizations to achieve effective customization with smaller training datasets and less data collection overhead. These advances could democratize access to fine-tuned AI capabilities by lowering the barriers to entry for organizations with limited data resources or specialized requirements that are difficult to address through general-purpose models.

The integration of fine-tuning capabilities into broader AI development platforms and tools is likely to simplify the technical complexity of model customization while providing more accessible interfaces for organizations to develop and deploy specialized AI solutions. These developments could accelerate the adoption of fine-tuned models across diverse industries and applications while reducing the specialized expertise required for successful implementation.

The continued advancement of fine-tuning methodologies, combined with improvements in base model capabilities and computational infrastructure, suggests that specialized AI applications will become increasingly sophisticated and widely deployed across industries and use cases. Organizations that develop expertise in fine-tuning approaches and establish robust development and deployment processes will be well-positioned to leverage these advances and realize the full potential of customized AI solutions for their specific needs and objectives.

Disclaimer

This article is for informational purposes only and does not constitute professional advice. The views expressed are based on current understanding of AI technologies and their applications in specialized domains. Readers should conduct their own research and consider their specific requirements when implementing fine-tuning strategies. The effectiveness and suitability of fine-tuning approaches may vary depending on specific use cases, data availability, technical resources, and organizational objectives.