Meta-learning algorithms, which learn how to learn, are among the most significant recent developments in artificial intelligence. Traditional AI systems typically need extensive training data and computational resources to become proficient at a single task. Meta-learning algorithms instead aim to adapt quickly to new challenges, acquire skills from a handful of examples, and transfer knowledge across domains.

The concept of meta-learning draws inspiration from human cognitive abilities, where individuals can quickly master new skills by leveraging previous experiences and applying learned strategies to novel situations.

Understanding the Foundations of Meta-Learning

Meta-learning, often referred to as “learning to learn,” represents a paradigm shift in how artificial intelligence systems acquire and apply knowledge. Unlike conventional machine learning approaches that focus on optimizing performance for specific tasks through extensive training on large datasets, meta-learning algorithms develop sophisticated strategies for rapidly adapting to new challenges with minimal data exposure. This fundamental difference enables AI systems to demonstrate human-like learning capabilities, where prior experiences inform and accelerate the acquisition of new skills.

The theoretical foundations of meta-learning rest upon the principle that learning itself can be treated as a learnable process. By examining patterns across multiple learning tasks, meta-learning algorithms identify common structures, strategies, and optimization approaches that can be generalized to new domains. This meta-level understanding enables AI systems to make intelligent decisions about how to approach unfamiliar problems, which learning algorithms to employ, and how to efficiently allocate computational resources for optimal performance.

The mathematical framework underlying meta-learning involves nested optimization problems, where the outer loop optimizes meta-parameters that govern the learning process, while the inner loop focuses on task-specific optimization. This hierarchical structure allows the system to develop general learning strategies while maintaining the flexibility to adapt to specific requirements of individual tasks.
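One common way to write this nested structure is as a bi-level optimization problem. The notation below is an assumption made for illustration and does not come from the article: $\theta$ denotes the meta-parameters, $\phi$ the task-specific parameters, $p(\mathcal{T})$ a distribution over tasks, and each task supplies a support loss used for adaptation and a query loss used to score the adapted parameters.

```latex
\begin{aligned}
\theta^{*} &= \arg\min_{\theta} \; \mathbb{E}_{\mathcal{T} \sim p(\mathcal{T})}
  \Big[ \mathcal{L}^{\text{query}}_{\mathcal{T}}\big(\phi_{\mathcal{T}}(\theta)\big) \Big]
  && \text{(outer loop: meta-parameters)} \\
\phi_{\mathcal{T}}(\theta) &= \arg\min_{\phi} \; \mathcal{L}^{\text{support}}_{\mathcal{T}}(\phi;\, \theta)
  && \text{(inner loop: task-specific parameters)}
\end{aligned}
```

In practice the inner minimization is usually replaced by a small, fixed number of gradient steps, so $\phi_{\mathcal{T}}(\theta)$ is only an approximate minimizer.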

The Evolution from Traditional Learning to Meta-Learning

Traditional machine learning approaches have demonstrated remarkable success in specific domains but often require substantial computational resources, extensive datasets, and domain-specific expertise to achieve acceptable performance levels. These conventional methods typically involve training models from scratch for each new task, resulting in lengthy development cycles and limited transferability of learned knowledge across different applications.

Meta-learning emerged as a solution to address these fundamental limitations by enabling AI systems to leverage accumulated knowledge and learning experiences to accelerate the acquisition of new capabilities. This evolutionary advancement has transformed the efficiency and effectiveness of AI applications, particularly in scenarios where data scarcity, rapid adaptation requirements, or resource constraints present significant challenges.

The transition from traditional learning to meta-learning has been facilitated by advances in neural network architectures, optimization algorithms, and computational hardware that support the complex hierarchical learning processes required for effective meta-learning implementation. These technological developments have enabled researchers to explore sophisticated approaches to knowledge transfer, few-shot learning, and adaptive optimization that were previously computationally infeasible.

Core Principles and Mechanisms of Meta-Learning

The fundamental principles governing meta-learning algorithms revolve around the development of generalizable learning strategies that can be effectively applied across diverse tasks and domains. These systems operate on the premise that successful learning approaches share common characteristics that can be identified, extracted, and systematically applied to new challenges. The meta-learning process involves analyzing patterns in learning trajectories, optimization landscapes, and performance metrics to develop sophisticated heuristics for efficient knowledge acquisition.

Meta-learning algorithms employ various mechanisms to achieve their remarkable adaptability, including gradient-based optimization methods that learn optimal initialization parameters for new tasks, memory-augmented networks that store and retrieve relevant experiences, and attention mechanisms that focus on the most informative aspects of training examples. These approaches enable AI systems to rapidly identify relevant patterns, make informed predictions with limited data, and continuously refine their learning strategies based on accumulated experiences.

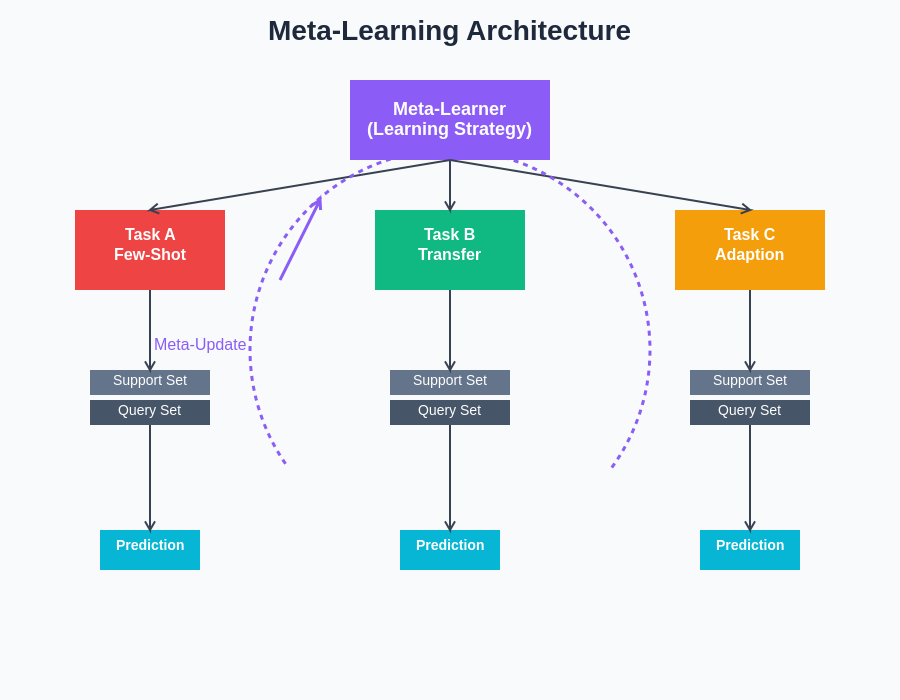

The architecture of meta-learning systems typically incorporates multiple levels of abstraction, where higher-level components focus on strategic decision-making about learning approaches, while lower-level components handle task-specific implementation details. This hierarchical organization enables efficient knowledge sharing across tasks while maintaining the specificity required for effective performance in individual applications.

Few-Shot Learning and Rapid Adaptation

One of the most impressive capabilities demonstrated by meta-learning algorithms is few-shot learning, where AI systems can achieve remarkable performance on new tasks using only a handful of training examples. This ability represents a significant departure from traditional deep learning approaches that typically require thousands or millions of training samples to achieve acceptable accuracy levels.

Few-shot learning algorithms leverage meta-knowledge acquired from previous learning experiences to make intelligent inferences about new tasks based on minimal data exposure. These systems employ sophisticated pattern recognition capabilities to identify similarities between new challenges and previously encountered problems, enabling them to apply relevant strategies and adapt existing knowledge to novel contexts.

Meta-learning systems pair a meta-learner, which develops general learning strategies, with task-specific learners that apply those strategies to individual challenges. Each task is split into a support set, used to adapt the learner, and a query set, used to evaluate the adapted learner. This structure is what enables knowledge transfer and rapid adaptation across domains.

The implementation of few-shot learning involves complex neural network architectures that incorporate attention mechanisms, memory systems, and adaptive optimization procedures. These components work together to enable rapid knowledge acquisition and application, demonstrating performance levels that often rival or exceed traditional approaches trained on significantly larger datasets.
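To make the few-shot setting concrete without any of that machinery, here is a minimal sketch of nearest-prototype classification. This is the idea behind prototypical networks, which also learn an embedding. As a simplifying assumption, the sketch works directly on raw feature vectors. The function names `prototypes` and `classify` and the toy data are hypothetical.

```python
import numpy as np

def prototypes(support_x, support_y):
    """Average the support examples of each class into one prototype vector."""
    classes = np.unique(support_y)
    protos = np.stack([support_x[support_y == c].mean(axis=0) for c in classes])
    return classes, protos

def classify(query_x, classes, protos):
    """Assign each query example to the class of its nearest prototype."""
    # Squared Euclidean distance from every query point to every prototype.
    dists = ((query_x[:, None, :] - protos[None, :, :]) ** 2).sum(axis=-1)
    return classes[dists.argmin(axis=1)]

# Toy 2-way 2-shot task (made-up data): two well-separated clusters.
support_x = np.array([[0.0, 0.0], [0.0, 1.0], [5.0, 5.0], [5.0, 6.0]])
support_y = np.array([0, 0, 1, 1])
query_x = np.array([[0.2, 0.3], [4.8, 5.9]])

classes, protos = prototypes(support_x, support_y)
predictions = classify(query_x, classes, protos)
```

With only two labeled examples per class, the classifier still has something to compare query points against. A learned embedding would replace the raw features so that distances reflect what was learned during meta-training.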

The practical implications of few-shot learning extend across numerous applications, including medical diagnosis systems that can identify rare diseases from limited examples, natural language processing models that can adapt to new languages or domains with minimal training data, and computer vision systems that can recognize new object categories from just a few representative images.

Transfer Learning and Knowledge Generalization

Transfer learning is closely related to meta-learning: knowledge acquired in one domain is systematically applied to improve performance in related but distinct areas. This capability enables AI systems to leverage accumulated expertise to accelerate learning in new domains, reducing the time and resources required for effective deployment in novel applications.

Meta-learning algorithms excel at identifying transferable knowledge components that maintain relevance across different tasks and domains. These systems develop sophisticated representations that capture essential patterns, relationships, and structures that persist across various contexts, enabling effective knowledge transfer even when surface-level characteristics differ significantly between source and target domains.

The mechanisms underlying effective transfer learning involve hierarchical feature representations where lower-level features capture general patterns applicable across domains, while higher-level features encode task-specific characteristics. This multi-level approach enables AI systems to selectively transfer relevant knowledge while adapting to domain-specific requirements and constraints.

Advanced Architectures and Implementation Strategies

The implementation of meta-learning algorithms requires sophisticated neural network architectures that can effectively balance generalization capabilities with task-specific adaptation requirements. These architectures often incorporate memory-augmented networks that store and retrieve relevant experiences, attention mechanisms that focus on the most informative aspects of training data, and adaptive optimization procedures that adjust learning strategies based on task characteristics.

Model-Agnostic Meta-Learning (MAML) is one of the most influential approaches to meta-learning. Rather than learning a model for any single task, it learns an initialization from which a few gradient descent steps on a new task's data yield good performance. The approach has been applied across diverse areas, from computer vision and natural language processing to robotics and game playing.
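The following is a minimal sketch of that idea on a deliberately tiny problem, not a reference implementation. It assumes a hypothetical task family (1-D regression `y = a * x` with the slope `a` drawn from U(2, 4)), a single scalar weight `w`, and one inner gradient step. All function names and hyperparameters are illustrative. Because the model is this simple, the second-order term in the meta-gradient can be written out by hand.

```python
import numpy as np

def sample_task(rng, k=5):
    """Hypothetical task family: y = a * x, slope a ~ U(2, 4), k points per set."""
    a = rng.uniform(2.0, 4.0)
    x_s, x_q = rng.uniform(-1, 1, k), rng.uniform(-1, 1, k)
    return (x_s, a * x_s), (x_q, a * x_q)  # (support set, query set)

def loss(w, x, y):
    """Mean squared error of the model y_hat = w * x."""
    return np.mean((w * x - y) ** 2)

def grad(w, x, y):
    """d loss / d w."""
    return 2.0 * np.mean(x * (w * x - y))

def hessian(x):
    """d^2 loss / d w^2 (does not depend on w for this model)."""
    return 2.0 * np.mean(x ** 2)

def adapt(w, x_s, y_s, alpha):
    """Inner loop: one gradient step on the task's support set."""
    return w - alpha * grad(w, x_s, y_s)

def meta_train(steps=500, tasks_per_step=8, alpha=0.1, beta=0.05, seed=0):
    rng = np.random.default_rng(seed)
    w = 0.0  # the initialization being meta-learned
    for _ in range(steps):
        meta_grad = 0.0
        for _ in range(tasks_per_step):
            (x_s, y_s), (x_q, y_q) = sample_task(rng)
            w_task = adapt(w, x_s, y_s, alpha)
            # Chain rule through the inner step: d w_task / d w = 1 - alpha * H.
            meta_grad += grad(w_task, x_q, y_q) * (1.0 - alpha * hessian(x_s))
        # Outer loop: update the initialization using query-set performance.
        w -= beta * meta_grad / tasks_per_step
    return w

w_meta = meta_train()
```

In this toy setting the learned initialization should settle near the middle of the slope range, since that is the starting point from which one gradient step lands closest to a typical task. Real implementations rely on automatic differentiation rather than hand-written derivatives.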

More broadly, gradient-based meta-learning methods learn parts of the optimization procedure itself, such as parameter update rules, learning rate schedules, and convergence criteria, so that gradient descent adapts to new tasks more efficiently.

Applications Across Industries and Domains

Meta-learning algorithms have found applications across numerous industries and domains, demonstrating their versatility and practical value in addressing real-world challenges. In healthcare, these systems enable rapid adaptation to new diseases, patient populations, and treatment protocols with minimal data requirements, facilitating more efficient medical research and personalized treatment approaches.

The robotics industry has embraced meta-learning for developing adaptive control systems that can quickly learn new tasks, adapt to environmental changes, and transfer skills across different robotic platforms. These capabilities are particularly valuable in manufacturing environments where robots must rapidly adapt to new products, assembly procedures, and quality requirements.

Natural language processing applications have leveraged meta-learning to develop systems that can quickly adapt to new languages, domains, and communication styles with minimal training data. This capability is essential for creating AI assistants that can effectively serve diverse user populations and adapt to specialized professional contexts.

Computer vision applications utilize meta-learning for rapid object recognition, scene understanding, and image analysis tasks where new categories or contexts must be quickly incorporated without extensive retraining procedures. These capabilities are crucial for applications such as autonomous vehicles, medical imaging, and security systems that must adapt to evolving requirements and environments.

Optimization Challenges and Solutions

The implementation of meta-learning algorithms presents unique optimization challenges that require sophisticated approaches to achieve effective performance. The nested optimization structure inherent in meta-learning creates complex loss landscapes that can be difficult to navigate using traditional optimization methods, necessitating the development of specialized algorithms and techniques.

Gradient computation in meta-learning systems involves higher-order derivatives that can be computationally expensive and numerically unstable, requiring careful implementation and regularization strategies to ensure reliable convergence. Advanced optimization methods such as implicit differentiation, approximation techniques, and stable gradient computation procedures have been developed to address these challenges.
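To see where the higher-order derivatives come from, consider a single inner gradient step, as in MAML-style methods. The symbols are assumptions made for illustration: $\theta$ is the meta-learned initialization, $\phi$ the adapted parameters, $\alpha$ the inner learning rate, and the support and query losses belong to one task.

```latex
\begin{aligned}
\phi &= \theta - \alpha \, \nabla_{\theta} \mathcal{L}^{\text{support}}(\theta) \\
\nabla_{\theta} \mathcal{L}^{\text{query}}(\phi)
  &= \big( I - \alpha \, \nabla^{2}_{\theta} \mathcal{L}^{\text{support}}(\theta) \big)\,
     \nabla_{\phi} \mathcal{L}^{\text{query}}(\phi) \\
\nabla_{\theta} \mathcal{L}^{\text{query}}(\phi)
  &\approx \nabla_{\phi} \mathcal{L}^{\text{query}}(\phi)
  && \text{(first-order approximation)}
\end{aligned}
```

The Hessian $\nabla^{2}_{\theta} \mathcal{L}^{\text{support}}$ is the expensive term. The first-order approximation drops it, trading some accuracy in the meta-gradient for a much cheaper update.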

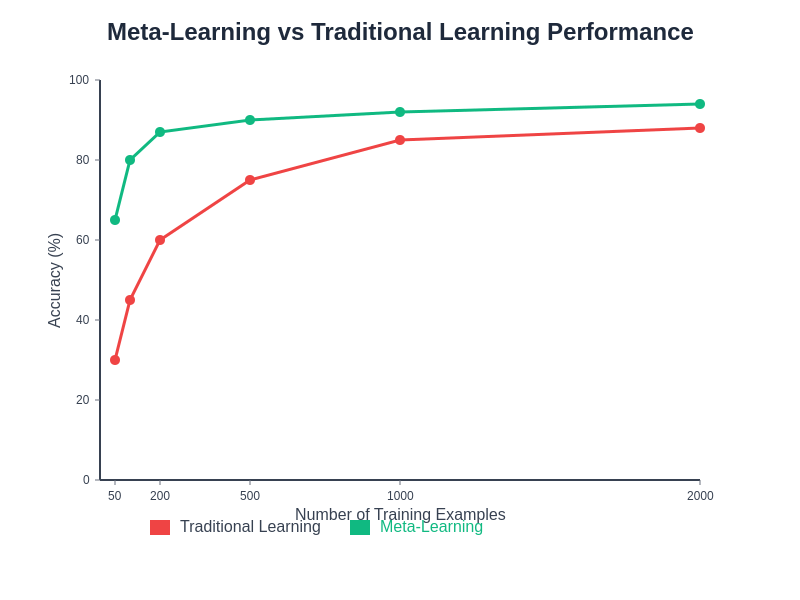

The performance advantages of meta-learning algorithms become particularly evident when examining their ability to achieve high accuracy with minimal training data compared to traditional approaches. This dramatic improvement in sample efficiency demonstrates the practical value of learning-to-learn strategies in resource-constrained environments and rapid deployment scenarios.

The balance between exploration and exploitation in meta-learning presents another significant challenge, where systems must effectively explore diverse learning strategies while exploiting successful approaches. This balance is crucial for developing robust meta-learning algorithms that can adapt to diverse tasks while maintaining consistent performance standards.

Evaluation Metrics and Performance Assessment

Evaluating the effectiveness of meta-learning algorithms requires sophisticated metrics that capture their ability to rapidly adapt to new tasks, transfer knowledge across domains, and maintain performance consistency across diverse applications. Traditional machine learning evaluation approaches are often insufficient for assessing meta-learning capabilities, necessitating the development of specialized benchmarks and assessment methodologies.

Few-shot learning performance is typically evaluated using N-way K-shot classification tasks, where systems must distinguish between N categories using only K examples per category. These evaluations assess the algorithm’s ability to rapidly acquire new knowledge and make accurate predictions with limited data exposure, providing insights into the effectiveness of meta-learning strategies.
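As a rough illustration, the sketch below builds one N-way K-shot episode from a labeled dataset. The function name `sample_episode`, the `n_query` parameter (query examples per class), and the toy label list are assumptions for the example, not part of any particular benchmark.

```python
import random
from collections import defaultdict

def sample_episode(labels, n_way, k_shot, n_query, rng):
    """Return (support, query) lists of (example_index, label) pairs."""
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    # Only classes with enough examples for both sets can be used.
    eligible = [c for c, idxs in by_class.items() if len(idxs) >= k_shot + n_query]
    if len(eligible) < n_way:
        raise ValueError("not enough classes with k_shot + n_query examples")

    support, query = [], []
    for c in rng.sample(eligible, n_way):
        # Draw without replacement so support and query never overlap.
        picked = rng.sample(by_class[c], k_shot + n_query)
        support += [(i, c) for i in picked[:k_shot]]
        query += [(i, c) for i in picked[k_shot:]]
    return support, query

# Toy dataset: 200 examples spread evenly over 10 classes.
labels = [i % 10 for i in range(200)]
support, query = sample_episode(labels, n_way=5, k_shot=1, n_query=3,
                                rng=random.Random(0))
```

A model is adapted on `support` and scored on `query`. Reported accuracy is usually an average over many such randomly drawn episodes.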

Transfer learning assessment involves measuring performance improvements achieved when applying knowledge from source domains to target applications, with particular attention to the efficiency gains and accuracy enhancements realized through meta-learning approaches. These evaluations help identify the conditions under which meta-learning provides the greatest benefits and guide the development of more effective algorithms.

Current Limitations and Research Frontiers

Despite their remarkable capabilities, current meta-learning algorithms face several limitations that represent active areas of research and development. Scalability challenges emerge when applying meta-learning to very large datasets or extremely complex tasks, where the computational requirements for meta-optimization can become prohibitive.

The generalization capabilities of meta-learning algorithms, while impressive, are still limited on tasks that differ significantly from those seen during meta-training. Developing more robust approaches to handle distribution shifts and novel task characteristics remains an active area of research with significant practical implications.

Interpretability and explainability of meta-learning systems present ongoing challenges, particularly in understanding how these algorithms make decisions about learning strategies and knowledge transfer. Developing approaches that provide insights into meta-learning processes is crucial for building trust and enabling effective deployment in critical applications.

Future Directions and Emerging Trends

The future of meta-learning research is characterized by several promising directions that hold the potential to address current limitations and unlock new capabilities. Continual learning approaches that enable AI systems to continuously acquire new knowledge without forgetting previous skills represent one of the most exciting developments in the field.

Multi-task meta-learning frameworks that can simultaneously optimize for multiple objectives and handle complex task relationships are emerging as powerful approaches for developing more versatile and capable AI systems. These frameworks promise to enable the development of AI systems that can effectively manage complex, multi-faceted challenges that require diverse skills and knowledge.

The integration of meta-learning with other advanced AI techniques such as reinforcement learning, generative models, and neurosymbolic approaches is creating new opportunities for developing more sophisticated and capable AI systems that can adapt to complex, dynamic environments while maintaining high performance standards.

Ethical Considerations and Responsible Development

The development and deployment of meta-learning algorithms raise important ethical considerations that must be carefully addressed to ensure responsible innovation. The rapid adaptation capabilities of these systems could potentially be misused for malicious purposes, highlighting the need for robust safeguards and ethical guidelines governing their development and application.

Bias propagation represents a significant concern in meta-learning systems, where biases learned from one domain could be inappropriately transferred to other applications, potentially amplifying discrimination and unfairness. Developing approaches to identify, measure, and mitigate bias transfer is crucial for ensuring equitable AI systems.

The potential for meta-learning algorithms to rapidly adapt to new tasks raises questions about accountability and responsibility when these systems make decisions in critical applications. Establishing clear frameworks for responsibility assignment and decision auditing is essential for maintaining public trust and ensuring appropriate oversight.

Implementation Strategies and Best Practices

Successful implementation of meta-learning algorithms requires careful consideration of several key factors that influence their effectiveness and reliability. Data preparation and task design play crucial roles in meta-learning success, where the diversity and quality of meta-training tasks significantly impact the algorithm’s ability to generalize to new challenges.

Architecture selection and hyperparameter tuning in meta-learning systems require specialized approaches that account for the nested optimization structure and complex interactions between meta-parameters and task-specific parameters. Systematic approaches to architecture design and parameter optimization are essential for achieving optimal performance.

Computational resource management becomes particularly important in meta-learning implementations, where the nested optimization procedures can be computationally intensive. Developing efficient implementation strategies and leveraging specialized hardware acceleration can significantly improve the practical feasibility of meta-learning applications.

The potential of meta-learning algorithms extends well beyond their current applications, and they could substantially change how artificial intelligence systems acquire, apply, and transfer knowledge across domains. As these algorithms mature, they are likely to play an increasingly central role in building AI systems that adapt efficiently to complex real-world challenges.

Disclaimer

This article is for informational purposes only and does not constitute professional advice. The views expressed are based on current understanding of meta-learning technologies and their applications in artificial intelligence. Readers should conduct their own research and consider their specific requirements when implementing meta-learning algorithms. The effectiveness and suitability of meta-learning approaches may vary depending on specific use cases, data characteristics, and application requirements.