The mobile computing landscape has been transformed by the integration of dedicated artificial intelligence processing units. Smartphone manufacturers now compete not just on traditional metrics like CPU speed and battery life, but on their ability to deliver sophisticated AI experiences directly on-device. Three major players sit at the forefront of mobile AI innovation: Apple with its Neural Engine architecture, Qualcomm through its Snapdragon AI platform, and MediaTek with its AI Processing Unit (APU) technology, each bringing a distinct approach to the challenges of mobile AI processing.

The competition between these technological giants has accelerated innovation cycles and pushed the boundaries of what is possible in mobile AI applications, from real-time image processing and natural language understanding to augmented reality experiences and predictive user interfaces.

The Evolution of Mobile AI Processing

The journey toward dedicated mobile AI processing began with the recognition that traditional CPU and GPU architectures, while powerful, were not optimally designed for the computational patterns of artificial intelligence workloads. Neural networks demand massively parallel matrix and tensor operations, usually at reduced numerical precision. CPUs are optimized for low-latency sequential work, and while GPUs are highly parallel, they are built around graphics and general floating-point workloads and tend to spend more energy per inference than purpose-built hardware. This mismatch led to the development of specialized neural processing units designed specifically for AI workloads.

Apple was among the first to ship this approach at scale, introducing the Neural Engine in 2017 as part of the A11 Bionic chip. This marked the beginning of an era in mobile computing where AI capabilities became as important as raw processing power. The success of this initial implementation demonstrated the viability of dedicated AI processing in mobile devices and established performance benchmarks that competitors would strive to match and exceed. Subsequent iterations of Apple’s Neural Engine have steadily raised mobile AI performance while maintaining the power efficiency critical for battery-powered devices.

Apple Neural Engine: Architectural Excellence

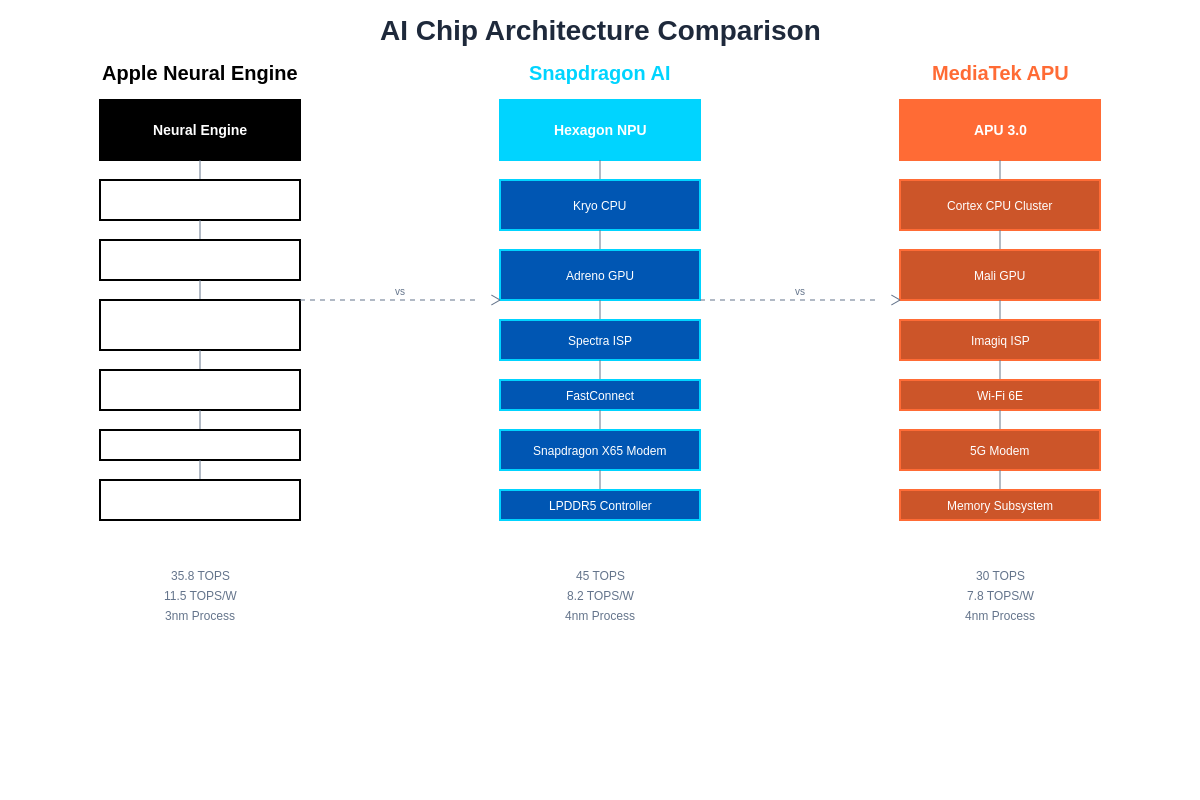

Apple’s Neural Engine is a specialized AI processor built to handle the computational demands of machine learning inference efficiently. Apple has not published the internal design in detail, but the architecture is commonly described as a systolic-array-style grid that executes in parallel the multiply-accumulate operations at the core of neural network computation. A design of this kind processes multiple data streams simultaneously while keeping processing elements synchronized, which yields both high throughput and energy efficiency.
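To make the systolic array idea concrete, here is a minimal Python sketch of a generic, textbook output-stationary array. It is not Apple's design, whose details are not public; the function name `systolic_matmul` and the cycle model are assumptions chosen for illustration. Each processing element (PE) owns one output cell and performs at most one multiply-accumulate per cycle as skewed operands flow past it.

```python
def systolic_matmul(a, b):
    """Multiply a (n x k) by b (k x m) on a simulated n x m grid of PEs.

    Returns (result, total_cycles, total_macs). Hypothetical teaching model,
    not a description of any shipping accelerator.
    """
    n, k, m = len(a), len(b), len(b[0])
    acc = [[0] * m for _ in range(n)]  # one accumulator per processing element
    macs = 0
    # Rows of `a` enter from the left and columns of `b` from the top, each
    # delayed by one cycle per row/column, so PE (i, j) sees operand pair t
    # at cycle t + i + j.
    total_cycles = k + n + m - 2
    for cycle in range(total_cycles):
        for i in range(n):
            for j in range(m):
                t = cycle - i - j
                if 0 <= t < k:
                    acc[i][j] += a[i][t] * b[t][j]  # one multiply-accumulate
                    macs += 1
    return acc, total_cycles, macs


result, cycles, macs = systolic_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]])
print(result, cycles, macs)  # [[19, 22], [43, 50]] 4 8
```

The point of the sketch is the ratio: eight multiply-accumulates finish in four cycles because several PEs work in the same cycle, and each operand is reused as it moves across the grid rather than being fetched from memory again.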

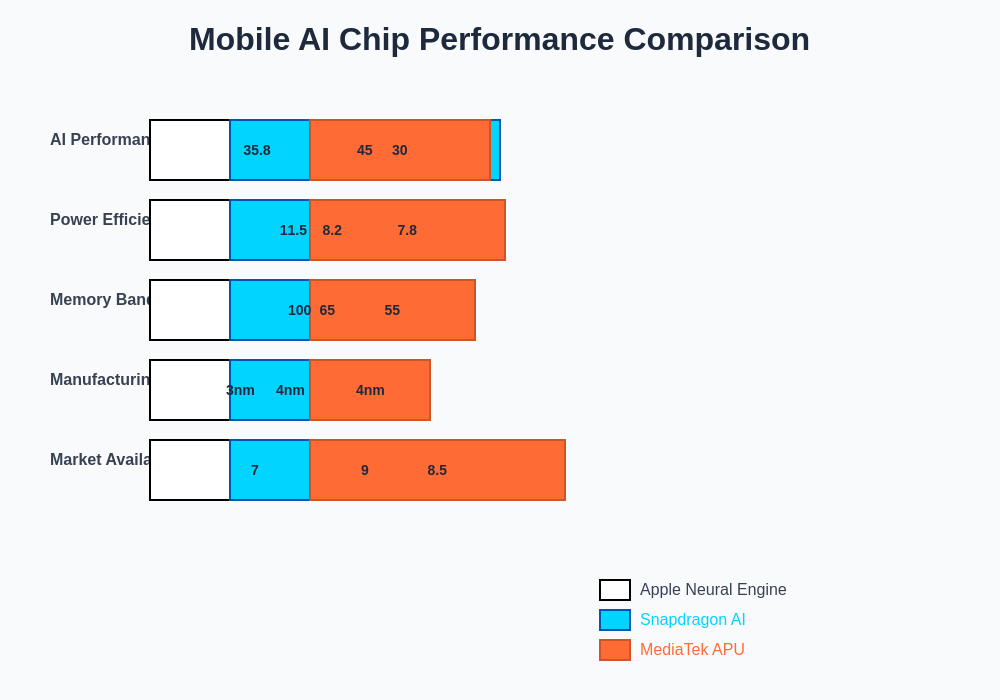

The latest iterations of Apple’s Neural Engine, found in the A17 Pro and M-series chips, feature sophisticated memory management systems that minimize data movement between processing cores and memory subsystems. This optimization is crucial for mobile applications where memory bandwidth limitations can become significant bottlenecks for AI performance. Apple rates the A17 Pro’s Neural Engine at up to 35 trillion operations per second (peak figures vary across the M-series), which, combined with its power efficiency, reflects Apple’s aim of delivering high-end AI performance within the thermal and power constraints of mobile devices.
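A peak operations-per-second rating is easier to interpret with a little arithmetic. The sketch below, using a hypothetical helper `min_inference_ms`, converts a model's multiply-accumulate count into a compute-bound latency estimate. The model size and the 25% utilization figure are illustrative assumptions, not measurements of any device, and the estimate ignores memory bandwidth, which the paragraph above notes is often the real bottleneck.

```python
def min_inference_ms(macs_per_inference, peak_tops, utilization=1.0):
    """Compute-bound latency estimate in milliseconds.

    macs_per_inference: multiply-accumulates needed for one forward pass
    peak_tops: vendor peak rating in trillions of operations per second
    utilization: assumed fraction of peak actually sustained (0 < u <= 1)
    """
    ops = 2 * macs_per_inference  # one MAC counts as a multiply plus an add
    ops_per_second = peak_tops * 1e12 * utilization
    return ops / ops_per_second * 1e3


# Hypothetical model needing 4 billion MACs on a 35 TOPS engine that
# sustains a quarter of its peak rating.
print(round(min_inference_ms(4e9, 35, utilization=0.25), 3))  # 0.914
```

Because vendors may count operations at different precisions, the same calculation with two vendors' headline numbers does not necessarily compare like with like.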

The integration between Apple’s Neural Engine and the broader system architecture, including optimized memory controllers, dedicated signal processors, and sophisticated thermal management systems, creates a holistic approach to mobile AI that extends beyond raw computational power to encompass the entire user experience.

Qualcomm Snapdragon AI: Versatile Integration

Qualcomm’s approach to mobile AI through the Snapdragon platform emphasizes versatility and broad compatibility across a diverse ecosystem of Android devices and manufacturers. The Snapdragon AI Engine utilizes a heterogeneous computing approach that distributes AI workloads across multiple specialized processing units, including the Hexagon Digital Signal Processor, Adreno GPU, and Kryo CPU cores, depending on the specific requirements of each task. This flexible architecture allows developers to optimize their applications for different types of AI workloads while maintaining compatibility across a wide range of device configurations and price points.

The Snapdragon 8 Gen 3 is Qualcomm’s flagship mobile platform of its generation, featuring a significantly enhanced Hexagon NPU. A figure of up to 45 TOPS is often cited for its AI performance, although such numbers should be treated as approximate because vendors count operations differently. The platform supports advanced features like real-time generative AI applications, multi-modal AI processing, and sophisticated computer vision tasks. The architecture’s strength lies in its ability to adapt dynamically, switching between processing units based on workload characteristics, power constraints, and performance requirements.
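The idea of routing each workload to the most suitable processing unit can be sketched as a simple rule-based selector. Everything here is hypothetical: the `Workload` fields, the `choose_unit` function, and the rules themselves are illustrative assumptions, not Qualcomm's scheduler or any SDK API. Real runtimes weigh far more signals, such as operator coverage per unit, current thermals, and memory traffic.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    quantized: bool        # True if the model runs in low-precision integer math
    sustained: bool        # True for always-on or long-running tasks
    uses_custom_ops: bool  # True if it needs operators an accelerator may lack


def choose_unit(w: Workload, battery_saver: bool = False) -> str:
    """Pick a processing unit with a toy heuristic (illustrative only)."""
    if w.uses_custom_ops:
        return "CPU"  # most flexible fallback for unsupported operators
    if w.quantized or w.sustained or battery_saver:
        return "NPU"  # assumed to be the most energy-efficient unit
    return "GPU"      # short bursts of floating-point work


print(choose_unit(Workload("keyword spotting", True, True, False)))      # NPU
print(choose_unit(Workload("fp16 style transfer", False, False, False)))  # GPU
print(choose_unit(Workload("experimental model", False, False, True)))    # CPU
```

Passing `battery_saver=True` moves the floating-point example from the GPU to the NPU, which mirrors the paragraph's point that power constraints, not just workload type, influence placement.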

The Snapdragon platform’s open development environment and comprehensive software development kit have fostered a rich ecosystem of AI applications and optimizations that take advantage of the platform’s unique capabilities. This ecosystem approach has enabled innovative applications ranging from real-time language translation and augmented reality experiences to advanced photography features and personalized user interfaces that learn and adapt to individual usage patterns.

MediaTek APU: Innovative Architecture

MediaTek’s AI Processing Unit (APU) is an approach to mobile AI processing that emphasizes both performance and accessibility across different market segments. The APU architecture employs a multi-core design that combines high-performance computing cores with energy-efficient processing elements, enabling MediaTek to deliver competitive AI performance while maintaining the cost-effectiveness that has made the company a preferred choice for mid-range and budget smartphone manufacturers.

The latest MediaTek Dimensity 9300 features a significantly enhanced APU that delivers impressive AI performance through architectural innovations including advanced data flow optimization, intelligent workload scheduling, and sophisticated power management systems. The APU’s ability to handle complex AI tasks while maintaining compatibility with a wide range of software frameworks and development tools has positioned MediaTek as a serious competitor in the mobile AI space, particularly for manufacturers seeking to deliver premium AI experiences at accessible price points.

MediaTek’s commitment to AI democratization is evident in their comprehensive software support and development tools that enable smaller developers and manufacturers to implement sophisticated AI features without requiring extensive specialized knowledge or development resources. This approach has accelerated the adoption of AI features across the broader smartphone market and contributed to the overall advancement of mobile AI capabilities across all price segments.

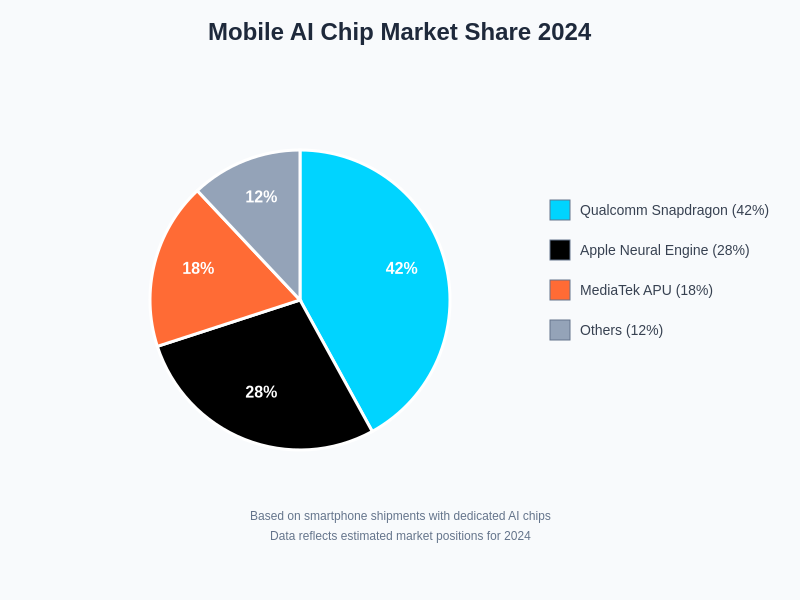

The current market landscape reveals the competitive positioning of major mobile AI chip manufacturers, with Qualcomm maintaining leadership through broad Android ecosystem adoption, Apple securing significant market presence through premium device integration, and MediaTek capturing substantial market share through cost-effective solutions across diverse price segments.

The performance landscape of mobile AI processors reveals significant differentiation in computational capabilities, energy efficiency, and specialized feature support across the three major platforms. These differences reflect distinct design philosophies and market positioning strategies that influence both developer adoption and end-user experiences.

Performance Benchmarks and Real-World Applications

Comparative analysis of mobile AI performance requires consideration of multiple metrics beyond raw computational throughput, including energy efficiency, thermal management, and real-world application performance across diverse usage scenarios. Apple’s Neural Engine generally performs strongly in standardized AI benchmarks, particularly for inference tasks involving computer vision, natural language processing, and machine learning model execution, though results depend on the benchmark, model, and software version. The architecture’s optimization for Apple’s specific use cases and tight integration with iOS APIs enables strong performance in applications like Face ID, camera processing, and Siri functionality.

Qualcomm’s Snapdragon AI platform excels in versatility and broad application support, delivering competitive performance across a wider range of AI workloads and use cases. The platform’s strength in multimedia processing, gaming applications, and multi-modal AI tasks reflects Qualcomm’s focus on supporting diverse Android ecosystem requirements and enabling innovative applications that leverage multiple sensor inputs and processing modalities simultaneously.

MediaTek’s APU demonstrates impressive performance characteristics that often exceed expectations given the platform’s positioning in mid-range and budget device categories. The architecture’s ability to deliver sophisticated AI features like real-time photography enhancement, intelligent power management, and advanced connectivity optimization has enabled MediaTek-powered devices to compete effectively with premium smartphones in terms of AI-enabled user experiences.

Power Efficiency and Thermal Management

Power efficiency represents a critical differentiator in mobile AI processor design, as the most sophisticated AI capabilities become meaningless if they cannot be sustained within the thermal and battery constraints of mobile devices. Apple’s Neural Engine architecture demonstrates exceptional power efficiency through architectural optimizations including precision-optimized data paths, intelligent clock gating, and sophisticated voltage scaling systems that adapt processing intensity to workload requirements in real time.
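Why voltage scaling matters so much can be seen from the standard first-order model of CMOS dynamic power, P = C · V² · f. The sketch below applies that general approximation; it is not specific to any vendor, it ignores leakage power, and the capacitance, voltage, and frequency values are made-up illustrative numbers.

```python
def dynamic_power_watts(capacitance_f, voltage_v, freq_hz):
    """First-order CMOS dynamic power: P = C * V^2 * f."""
    return capacitance_f * voltage_v ** 2 * freq_hz


def energy_per_task_joules(capacitance_f, voltage_v, freq_hz, cycles):
    """Energy to finish a task that needs a fixed number of clock cycles."""
    seconds = cycles / freq_hz
    return dynamic_power_watts(capacitance_f, voltage_v, freq_hz) * seconds


C = 1e-9      # effective switched capacitance in farads (illustrative)
CYCLES = 1e9  # work required by the task, in clock cycles (illustrative)

fast = energy_per_task_joules(C, 0.9, 1.0e9, CYCLES)  # high voltage, high clock
slow = energy_per_task_joules(C, 0.6, 0.5e9, CYCLES)  # scaled-down operating point

print(round(slow / fast, 2))  # 0.44
```

In this model the frequency cancels out of the energy per task, so the saving comes entirely from the squared voltage term: the slower operating point takes twice as long but uses under half the energy, which is the trade that suits background and always-on workloads.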

The Neural Engine’s ability to deliver high-performance AI processing while maintaining low power consumption has enabled Apple to implement always-on AI features like continuous voice recognition, real-time camera processing, and background machine learning tasks without significantly impacting battery life. This efficiency advantage extends to thermal management, where the Neural Engine’s design minimizes heat generation even under sustained AI workloads, enabling consistent performance without thermal throttling.

Qualcomm’s approach to power efficiency emphasizes dynamic workload distribution across multiple processing units, enabling the Snapdragon platform to optimize power consumption by utilizing the most efficient processing element for each specific task while maintaining overall system performance and responsiveness.

Software Ecosystem and Developer Support

The software ecosystem surrounding mobile AI processors plays a crucial role in determining the practical impact and adoption of AI features across different platforms. Apple’s approach emphasizes tight integration between hardware capabilities and software frameworks, providing developers with optimized APIs through Core ML, Metal Performance Shaders, and other specialized development tools that enable efficient utilization of Neural Engine capabilities while maintaining consistency across different device generations and configurations.

The iOS development environment’s emphasis on performance optimization and power efficiency extends to AI application development, where Apple provides sophisticated profiling tools, performance optimization frameworks, and comprehensive documentation that enables developers to create AI applications that take full advantage of Neural Engine capabilities while maintaining the high standards of user experience that characterize the iOS ecosystem.

Qualcomm’s software ecosystem reflects the company’s commitment to supporting the diverse Android development community through comprehensive development tools, including the Snapdragon Neural Processing SDK, optimized neural network libraries, and extensive hardware abstraction layers that simplify cross-device compatibility and performance optimization. The platform’s support for multiple AI frameworks including TensorFlow Lite, ONNX, and PyTorch Mobile enables developers to leverage existing AI models and development workflows while taking advantage of Snapdragon-specific optimizations and features.

Market Impact and Industry Influence

The competition between Apple, Qualcomm, and MediaTek in the mobile AI processor space has accelerated innovation across the entire mobile computing industry, driving rapid advancement in AI capabilities and forcing all participants to continuously push the boundaries of what is possible within the constraints of mobile devices. Apple’s early leadership with the Neural Engine established performance benchmarks and user experience expectations that have shaped the entire industry’s approach to mobile AI implementation.

The democratization of AI capabilities through MediaTek’s APU technology has extended sophisticated AI features to broader market segments, enabling manufacturers to offer premium AI experiences in devices across multiple price points and market categories. This accessibility has accelerated the adoption of AI features among consumers and developers, creating a positive feedback loop that drives continued innovation and improvement across all platforms.

The competitive dynamics between these three platforms have also influenced broader industry trends including the development of AI-optimized mobile applications, the evolution of cloud-edge computing architectures, and the advancement of AI model optimization techniques specifically designed for mobile deployment. These developments have created an ecosystem where AI capabilities are no longer luxury features reserved for premium devices but standard expectations across the entire smartphone market.

The architectural differences between Apple’s Neural Engine, Snapdragon AI, and MediaTek APU reflect distinct approaches to solving the fundamental challenges of mobile AI processing, each with unique advantages and trade-offs that influence performance characteristics and application suitability.

Future Developments and Technological Roadmaps

The roadmaps for mobile AI processor development indicate continued rapid advancement in computational capabilities, energy efficiency, and feature sophistication across all major platforms. Apple’s commitment to advancing the Neural Engine architecture includes ongoing research into advanced neural network architectures, improved precision optimization, and integration with emerging AI applications including large language models and generative AI capabilities that can operate efficiently within mobile device constraints.

Qualcomm’s future Snapdragon platforms are expected to incorporate advanced AI features including on-device training capabilities, improved multi-modal processing, and enhanced integration with cloud-based AI services that leverage the platform’s advanced connectivity capabilities. The company’s research into next-generation AI architectures includes exploration of neuromorphic computing concepts, advanced memory technologies, and novel approaches to AI model compression and optimization.
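Model compression is the most concrete item in that list, and one widely used form is quantization: storing weights as small integers plus a scale factor. The following is a minimal sketch of symmetric per-tensor int8 quantization in plain Python. It illustrates the general technique only and is not taken from any vendor's toolchain; the function names are assumptions.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs else 1.0  # avoid dividing by zero
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float values from the integer codes."""
    return [v * scale for v in q]


weights = [0.82, -1.0, 0.031, 0.0, 0.47]
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)

# Each restored weight is within half a quantization step of the original.
worst_error = max(abs(w - r) for w, r in zip(weights, restored))
print(codes)
print(worst_error <= scale / 2 + 1e-12)  # True
```

Each weight shrinks from 32 bits to 8, cutting storage and memory traffic by roughly four times, at the cost of a rounding error bounded by half the scale. Production toolchains typically refine this with per-channel scales and calibration data.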

MediaTek’s development roadmap emphasizes continued democratization of advanced AI capabilities through cost-effective implementations that deliver premium features across broader market segments. The company’s research initiatives include advanced power management techniques, improved AI accelerator architectures, and innovative approaches to cross-platform AI model compatibility that enable developers to deploy sophisticated AI applications across diverse device configurations and price points.

Integration with Emerging Technologies

The evolution of mobile AI processors is increasingly intertwined with emerging technologies including 5G connectivity, augmented reality applications, and edge computing architectures that extend AI processing capabilities beyond individual devices to encompass broader computing ecosystems. Apple’s Neural Engine architecture is being optimized for integration with advanced camera systems, spatial computing applications, and seamless interaction with cloud-based AI services that complement on-device processing capabilities.

Qualcomm’s Snapdragon platform roadmap includes enhanced support for advanced connectivity features that enable sophisticated distributed AI applications, where mobile devices serve as intelligent edge nodes in broader AI processing networks. This approach leverages the platform’s advanced modem capabilities and AI processing power to enable applications that seamlessly blend on-device intelligence with cloud-based computational resources.

The integration of mobile AI processors with emerging display technologies, advanced sensor systems, and novel interaction paradigms is creating new categories of applications and user experiences that were previously impossible within the constraints of mobile devices. These developments are driving continued innovation in AI processor design and creating new competitive dimensions that extend beyond traditional performance metrics to encompass entirely new categories of user experience and application capability.

Industry Standards and Compatibility

The development of industry standards and compatibility frameworks for mobile AI processing is becoming increasingly important as the ecosystem matures and cross-platform compatibility becomes essential for widespread adoption of AI applications. Apple’s approach emphasizes optimization for iOS-specific frameworks while maintaining compatibility with industry-standard AI model formats through Core ML conversion tools and optimization frameworks that enable developers to deploy models across multiple platforms efficiently.

Qualcomm’s commitment to open standards and broad compatibility has positioned the Snapdragon platform as a reference implementation for Android AI development, with comprehensive support for industry-standard frameworks and extensive compatibility with existing AI development workflows. This approach has accelerated the adoption of Snapdragon AI capabilities across the Android ecosystem and established performance benchmarks for competitive platforms.

MediaTek’s focus on accessibility and broad compatibility has driven the development of cost-effective implementations of industry-standard AI features that enable smaller manufacturers and developers to participate in the mobile AI ecosystem without requiring extensive specialized development resources or significant technology investments.

The convergence toward common standards and compatibility frameworks is expected to accelerate innovation by enabling developers to focus on application development rather than platform-specific optimization, while still allowing differentiation through platform-specific features and optimizations that take advantage of unique architectural capabilities and integration opportunities.

Conclusion

The competition between Apple’s Neural Engine, Qualcomm’s Snapdragon AI, and MediaTek’s APU has fundamentally transformed the mobile computing landscape, creating an environment where artificial intelligence capabilities have become as important as traditional performance metrics in determining device capabilities and user experiences. Each platform brings unique strengths and approaches to mobile AI processing, reflecting different design philosophies, market positioning strategies, and technological priorities that serve diverse ecosystem requirements and user needs.

The continued advancement of these platforms promises to unlock new categories of mobile applications and user experiences while maintaining the accessibility and usability that have made smartphones ubiquitous computing devices. The future of mobile AI processing lies not in the dominance of any single approach but in the continued innovation and competition that drives rapid advancement across all platforms, ultimately benefiting consumers through more capable devices and more sophisticated applications that leverage the unique capabilities of mobile artificial intelligence processing.

Disclaimer

This article is for informational purposes only and does not constitute professional advice. The performance comparisons and technical specifications discussed are based on publicly available information and may vary depending on specific device configurations, software versions, and testing methodologies. Readers should conduct their own research and consider their specific requirements when evaluating mobile AI processor capabilities. The mobile AI processor market is rapidly evolving, and specifications and capabilities may change with new product releases and software updates.