The convergence of artificial intelligence and mobile gaming has reached a critical inflection point where sophisticated machine learning algorithms must operate within the stringent constraints of smartphone hardware and battery limitations. Modern mobile games increasingly rely on intelligent systems to enhance player experiences through adaptive difficulty scaling, personalized content generation, and real-time behavioral analysis, yet these computational demands traditionally come at the cost of significant battery drain and thermal throttling. The challenge facing contemporary game developers lies in implementing AI systems that maintain high performance while preserving device longevity and user satisfaction.

The evolution of battery-efficient machine learning represents a fundamental shift in how developers approach AI integration, moving from cloud-dependent solutions toward edge-based processing that maintains responsiveness while minimizing power consumption.

The Power Consumption Challenge in Mobile AI

Mobile devices operate under severe power constraints that differ fundamentally from desktop and server environments, where AI workloads draw on mains power and sophisticated cooling systems. A typical smartphone battery is rated at roughly 3,000 to 5,000 mAh (about 12 to 19 Wh at a typical nominal cell voltage of around 3.85 V), and that energy must power not only AI processing but also the display, wireless radios, and background applications throughout extended gaming sessions. Machine learning operations, particularly neural network inference, have historically consumed substantial computational resources through intensive matrix multiplications and floating-point calculations that can rapidly deplete battery reserves.
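As a rough sanity check on these numbers, the sketch below converts a battery's rated capacity into energy and estimates runtime at a constant average power draw. The 3.85 V nominal voltage and the 5 W and 6 W loads in the example are assumptions chosen for illustration, not measurements, and the model ignores conversion losses and the fact that real power draw fluctuates.

```python
# Back-of-the-envelope battery arithmetic.
# Assumptions: a 3.85 V nominal cell voltage and a constant average power draw;
# conversion losses and real-world power fluctuations are ignored.


def battery_energy_wh(capacity_mah: float, nominal_voltage_v: float = 3.85) -> float:
    """Convert a rated charge capacity in mAh into stored energy in Wh."""
    return capacity_mah / 1000.0 * nominal_voltage_v


def runtime_hours(capacity_mah: float, average_power_w: float,
                  nominal_voltage_v: float = 3.85) -> float:
    """Estimate hours of use at a constant average power draw."""
    if average_power_w <= 0:
        raise ValueError("average_power_w must be positive")
    return battery_energy_wh(capacity_mah, nominal_voltage_v) / average_power_w


if __name__ == "__main__":
    energy = battery_energy_wh(4000)
    base = runtime_hours(4000, 5.0)      # hypothetical 5 W gaming load
    with_ai = runtime_hours(4000, 6.0)   # same load plus a hypothetical 1 W of AI work
    print(f"{energy:.1f} Wh, {base:.2f} h -> {with_ai:.2f} h")
```

Run as a script, it prints `15.4 Wh, 3.08 h -> 2.57 h`: under these assumed figures, one extra watt of AI processing shortens a session by about half an hour.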

Thermal management adds further complexity. Sustained AI processing generates heat that triggers dynamic frequency scaling in mobile processors, which lowers clock speeds to keep the chip within safe temperatures. This thermal throttling creates a cascade effect: at reduced clock speeds each inference takes longer, so the processor stays busy for a larger share of every frame, per-frame deadlines begin to slip, and the game may have to simplify or skip AI updates to stay responsive. Understanding these constraints has driven the development of optimization techniques that specifically address the challenges of mobile AI implementation.
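A simple compute-bound model makes the throttling effect concrete. The sketch assumes an inference costs a fixed number of cycles, so latency scales inversely with clock frequency; the 30-million-cycle workload and the 2.0 GHz and 1.2 GHz clocks are hypothetical values. Real inference is partly memory-bound, so frequency alone overstates the slowdown.

```python
# Compute-bound latency model for a throttled processor.
# Assumption: an inference costs a fixed number of cycles, so latency scales
# inversely with clock frequency. Memory-bound work slows down less than this.

FRAME_BUDGET_MS_60FPS = 1000.0 / 60.0  # about 16.7 ms per frame at 60 fps


def inference_latency_ms(cycles: float, clock_ghz: float) -> float:
    """Latency in milliseconds of `cycles` worth of work at `clock_ghz`."""
    return cycles / (clock_ghz * 1e9) * 1000.0


def fits_frame(cycles: float, clock_ghz: float, ai_share: float = 1.0) -> bool:
    """True if the inference fits in `ai_share` (0..1) of a 60 fps frame budget."""
    return inference_latency_ms(cycles, clock_ghz) <= FRAME_BUDGET_MS_60FPS * ai_share


if __name__ == "__main__":
    workload = 30e6  # hypothetical inference cost in cycles
    for clock in (2.0, 1.2):  # nominal versus throttled clock, both hypothetical
        print(f"{clock} GHz: {inference_latency_ms(workload, clock):.1f} ms, "
              f"fits 60 fps frame: {fits_frame(workload, clock)}")
```

With these figures the inference takes 15 ms at 2.0 GHz and fits a 60 fps frame, while the same work at a throttled 1.2 GHz takes 25 ms and overruns it.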

Edge Computing Architecture for Mobile Gaming

The architectural foundation of battery-efficient mobile game AI rests on edge computing principles that minimize reliance on cloud-based processing while maximizing local computational efficiency. Modern smartphones combine dedicated neural processing units (NPUs), GPUs capable of running AI workloads, and specialized digital signal processors, all of which can execute machine learning operations more energy-efficiently than general-purpose CPU cores. This heterogeneous design lets game developers assign each AI computation to the hardware block that best fits its power and performance requirements.

Edge-based AI architecture enables real-time decision making without network latency penalties, crucial for responsive gaming experiences that require immediate AI responses to player actions. The elimination of constant data transmission to remote servers not only reduces battery drain from wireless communication but also ensures consistent AI performance regardless of network connectivity quality. This architectural approach has proven particularly effective for implementing features such as dynamic difficulty adjustment, intelligent enemy behavior, and personalized content recommendations that must operate seamlessly during gameplay.

The integration of edge computing with mobile gaming AI represents a convergence of hardware innovation and software optimization that enables previously impossible levels of intelligent interaction within power-constrained environments.

Quantization and Model Compression Techniques

Battery-efficient mobile AI relies heavily on quantization, which reduces the numerical precision of neural network operations without significantly compromising accuracy. Models are commonly trained with 32-bit floating-point weights and activations, which consume substantial memory bandwidth and processing power during inference. Quantization reduces this precision to 16-bit, 8-bit, or even binary representations; 8-bit weights, for example, occupy a quarter of the memory of 32-bit ones, and the resulting integer arithmetic runs efficiently on mobile hardware optimized for integer operations.
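The arithmetic behind 8-bit quantization is short enough to show directly. The sketch below applies asymmetric (affine) quantization to a list of weights: each value is divided by a scale, shifted by a zero point, and clamped to the int8 range. It is a simplified per-tensor version for illustration; production toolchains typically quantize per channel, calibrate activation ranges on sample data, and fuse these steps into integer kernels.

```python
from typing import List, Tuple


def quantize_int8(values: List[float]) -> Tuple[List[int], float, int]:
    """Asymmetric per-tensor int8 quantization: q = clamp(round(x / scale) + zero_point)."""
    lo = min(min(values), 0.0)   # the representable range must include 0.0
    hi = max(max(values), 0.0)
    scale = (hi - lo) / 255.0 or 1.0        # 256 int8 levels; guard all-zero input
    zero_point = round(-128 - lo / scale)   # int8 value that represents 0.0
    q = [max(-128, min(127, round(x / scale) + zero_point)) for x in values]
    return q, scale, zero_point


def dequantize_int8(q: List[int], scale: float, zero_point: int) -> List[float]:
    """Approximate reconstruction: x ~ (q - zero_point) * scale."""
    return [(v - zero_point) * scale for v in q]


if __name__ == "__main__":
    weights = [-0.82, -0.10, 0.0, 0.37, 1.25]  # illustrative values
    q, scale, zp = quantize_int8(weights)
    restored = dequantize_int8(q, scale, zp)
    max_err = max(abs(a - b) for a, b in zip(weights, restored))
    print(q, f"scale={scale:.5f}", f"zero_point={zp}", f"max_err={max_err:.5f}")
```

The reconstruction error stays within about one quantization step (`scale`), and 0.0 maps back exactly, which matters for zero padding and ReLU outputs.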

Mixed-precision quantization is a particularly effective refinement in which different layers of a neural network use different precision levels based on their sensitivity to numerical error. Layers that strongly affect model accuracy keep higher precision, while less sensitive layers are quantized aggressively to maximize power savings. This differs from dynamic quantization, which computes activation quantization parameters at runtime rather than ahead of time. The granular approach lets developers tune the trade-off between AI capability and battery consumption to the requirements and performance targets of a specific game.

Model pruning complements quantization by removing neural network connections that contribute little to overall accuracy. Structured pruning removes entire neurons or channels, producing smaller dense models that run faster on standard hardware, while unstructured pruning removes individual weights, which typically preserves more accuracy at a given sparsity but only saves time and energy when the runtime has sparse-aware kernels. Combining quantization and pruning has been reported to reduce model size by 90% or more while maintaining acceptable accuracy, enabling sophisticated AI functionality within mobile power constraints.
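Both pruning styles can be sketched on a small weight matrix whose rows stand for output channels. `magnitude_prune` zeroes the smallest-magnitude individual weights (unstructured), while `prune_channels` drops whole rows with the smallest L1 norm (structured). The function names, the L1-norm ranking criterion, and the sparsity targets are illustrative choices; real pipelines usually prune gradually and fine-tune afterwards to recover accuracy.

```python
from typing import List

Matrix = List[List[float]]  # rows are output channels


def magnitude_prune(weights: Matrix, sparsity: float) -> Matrix:
    """Unstructured pruning: zero the smallest-magnitude fraction of individual weights.

    Weights tied with the threshold are also zeroed, so ties can remove slightly more.
    """
    flat = sorted(abs(w) for row in weights for w in row)
    k = int(len(flat) * sparsity)  # number of weights to remove
    if k == 0:
        return [row[:] for row in weights]
    threshold = flat[k - 1]
    return [[0.0 if abs(w) <= threshold else w for w in row] for row in weights]


def prune_channels(weights: Matrix, keep: int) -> Matrix:
    """Structured pruning: keep the `keep` channels (rows) with the largest L1 norm."""
    ranked = sorted(range(len(weights)),
                    key=lambda i: sum(abs(w) for w in weights[i]),
                    reverse=True)
    kept = sorted(ranked[:keep])  # preserve the original channel order
    return [weights[i][:] for i in kept]


if __name__ == "__main__":
    W = [[0.9, -0.05, 0.3],
         [0.01, 0.02, -0.03],
         [-0.7, 0.4, 0.08]]
    print(magnitude_prune(W, sparsity=0.5))  # same shape, smallest weights zeroed
    print(prune_channels(W, keep=2))         # weakest channel removed entirely
```

The unstructured result keeps the original shape with zeros in place, whereas the structured result is a genuinely smaller matrix.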

Adaptive AI Processing Strategies

Battery-efficient mobile game AI implementation requires dynamic adaptation strategies that adjust computational intensity based on current device conditions, battery levels, and thermal states. These adaptive systems continuously monitor device performance metrics and automatically scale AI processing complexity to maintain optimal balance between functionality and power consumption. During periods of high battery charge and low thermal load, AI systems can operate at full capability, while battery conservation modes trigger simplified processing algorithms that maintain core functionality with reduced computational overhead.
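A minimal sketch of such an adaptive policy appears below. The tier names, the simplified four-level `ThermalState` enum, and the 50% and 20% battery thresholds are all hypothetical. On Android, comparable inputs are available from `BatteryManager` and `PowerManager.getCurrentThermalStatus()`, and a production policy would also add hysteresis so the tier does not flip back and forth near a threshold.

```python
from enum import IntEnum


class ThermalState(IntEnum):
    """Simplified, hypothetical thermal levels (platforms expose finer-grained ones)."""
    NOMINAL = 0
    FAIR = 1
    SERIOUS = 2
    CRITICAL = 3


class AITier(IntEnum):
    MINIMAL = 0   # rule-based fallbacks only
    REDUCED = 1   # smaller or lower-precision model at a lower update rate
    FULL = 2      # full model at the full update rate


def choose_tier(battery_pct: float, charging: bool, thermal: ThermalState) -> AITier:
    """Return the most capable AI tier allowed by thermal state and battery level.

    Thermal limits take priority over battery level, because a hot device will
    throttle regardless of how much charge remains. Thresholds are hypothetical.
    """
    if thermal >= ThermalState.CRITICAL:
        return AITier.MINIMAL
    if thermal >= ThermalState.SERIOUS:
        return AITier.REDUCED
    if charging or battery_pct >= 50:
        return AITier.FULL
    if battery_pct >= 20:
        return AITier.REDUCED
    return AITier.MINIMAL


if __name__ == "__main__":
    print(choose_tier(80, charging=False, thermal=ThermalState.NOMINAL).name)  # FULL
    print(choose_tier(35, charging=False, thermal=ThermalState.NOMINAL).name)  # REDUCED
    print(choose_tier(90, charging=True, thermal=ThermalState.SERIOUS).name)   # REDUCED
```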

Temporal adaptation is another important optimization strategy, in which AI processing intensity varies with game state and player activity. During intense gameplay sequences that demand immediate AI responses, the system prioritizes performance over power efficiency, while quieter moments allow more aggressive power conservation. This dynamic scaling stretches battery life across long gaming sessions while keeping AI behavior responsive when it matters most to the player experience.

The implementation of predictive power management allows AI systems to anticipate computational requirements based on game progression and player behavior patterns, enabling proactive optimization decisions that prevent performance degradation before it occurs. These predictive systems learn from individual player patterns to optimize AI resource allocation, ensuring personalized power management that adapts to specific usage patterns and preferences.

Specialized Neural Network Architectures

Neural network architectures designed specifically for power-constrained devices have transformed how AI is implemented in mobile games. MobileNet architectures use depthwise separable convolutions, which split a standard convolution into a per-channel spatial filter followed by a 1×1 convolution that mixes channels; for 3×3 kernels this cuts the multiply-accumulate count by roughly 8 to 9 times while preserving most of the feature extraction quality, enabling computer vision features within mobile power budgets. These architectures achieve accuracy comparable to conventional convolutional neural networks while requiring far fewer floating-point operations and memory accesses.
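The savings follow from counting multiply-accumulate (MAC) operations. For a k×k kernel with m input channels, n output channels, and an f×f output map (stride 1 assumed), a standard convolution costs k·k·m·n·f·f MACs, while the depthwise-plus-pointwise pair costs k·k·m·f·f + m·n·f·f, a ratio of 1/n + 1/k². The layer dimensions in the example are hypothetical.

```python
# MAC counts for one convolutional layer, assuming stride 1 and "same" padding
# so the output map is f x f. Layer sizes in the example are hypothetical.


def standard_conv_macs(k: int, m: int, n: int, f: int) -> int:
    """k x k convolution from m input channels to n output channels."""
    return k * k * m * n * f * f


def depthwise_separable_macs(k: int, m: int, n: int, f: int) -> int:
    """Depthwise k x k filter per input channel, then a 1 x 1 pointwise convolution."""
    depthwise = k * k * m * f * f  # spatial filtering, one filter per channel
    pointwise = m * n * f * f      # 1 x 1 convolution that mixes channels
    return depthwise + pointwise


if __name__ == "__main__":
    k, m, n, f = 3, 128, 128, 56
    std = standard_conv_macs(k, m, n, f)
    sep = depthwise_separable_macs(k, m, n, f)
    print(f"standard: {std:,} MACs, separable: {sep:,} MACs, ratio {std / sep:.2f}x")
    print(f"closed form 1/(1/n + 1/k^2): {1 / (1 / n + 1 / k ** 2):.2f}x")
```

For a 3×3 layer with 128 input and output channels on a 56×56 map, the separable version needs about 8.4 times fewer MACs, matching the closed-form ratio.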

EfficientNet models take a complementary approach: compound scaling grows network depth, width, and input resolution together using a single coefficient instead of scaling one dimension at a time, which yields better accuracy per unit of computation across a range of budgets. Because the coefficient can be chosen to match available processing resources, developers can pick a model size that fits a device's capabilities and power constraints.
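The compound-scaling rule from the EfficientNet paper can be written in a few lines: depth, width, and resolution are multiplied by α^φ, β^φ, and γ^φ, with α = 1.2, β = 1.1, and γ = 1.15 chosen so that α·β²·γ² ≈ 2, meaning each unit of φ roughly doubles the FLOPs. The base network dimensions below are hypothetical, and the published EfficientNet-B1 to B7 configurations do not follow these exponents exactly, so treat this as an illustration of the rule rather than a recipe for those models.

```python
import math

# Coefficients reported for EfficientNet's compound scaling (alpha * beta^2 * gamma^2 ~ 2).
ALPHA, BETA, GAMMA = 1.2, 1.1, 1.15


def compound_scale(phi: float, base_depth: int, base_width: int, base_resolution: int):
    """Scale depth (layers), width (channels), and input resolution by one coefficient phi.

    Returns the scaled dimensions and the approximate FLOPs multiplier, since
    convolutional FLOPs grow roughly with depth * width^2 * resolution^2.
    """
    depth = math.ceil(base_depth * ALPHA ** phi)
    width = math.ceil(base_width * BETA ** phi)
    resolution = round(base_resolution * GAMMA ** phi)
    flops_multiplier = (ALPHA * BETA ** 2 * GAMMA ** 2) ** phi
    return depth, width, resolution, flops_multiplier


if __name__ == "__main__":
    # Hypothetical base network: 16 layers, 32 channels, 224 x 224 input.
    for phi in range(4):
        d, w, r, flops = compound_scale(phi, 16, 32, 224)
        print(f"phi={phi}: depth={d}, width={w}, resolution={r}, ~{flops:.2f}x FLOPs")
```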

Transformer architectures specifically optimized for mobile deployment incorporate attention mechanisms with reduced computational complexity through techniques such as linear attention and sparse attention patterns. These optimized transformers enable natural language processing capabilities for AI-driven dialogue systems and content generation while maintaining reasonable power consumption levels suitable for mobile gaming applications.
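Linear attention relies on reassociating a matrix product. If the softmax is replaced by a positive feature map φ, attention becomes (φ(Q)φ(K)ᵀ)V, which can instead be computed as φ(Q)(φ(K)ᵀV); the second form never builds the n×n score matrix, so cost grows linearly rather than quadratically with sequence length n. The pure-Python sketch below uses φ(x) = elu(x) + 1, one feature map proposed in the linear-attention literature, and computes both orders so they can be compared. This kernelized attention approximates, rather than reproduces, standard softmax attention, and the sketch omits causal masking.

```python
import math
from typing import List

Matrix = List[List[float]]


def feature_map(x: float) -> float:
    """elu(x) + 1: strictly positive, so attention weights stay non-negative."""
    return x + 1.0 if x > 0 else math.exp(x)


def phi(m: Matrix) -> Matrix:
    return [[feature_map(v) for v in row] for row in m]


def quadratic_attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """(phi(Q) phi(K)^T) V, row-normalized: builds an n x n score matrix, O(n^2 d) work."""
    fq, fk = phi(q), phi(k)
    out = []
    for qi in fq:
        scores = [sum(a * b for a, b in zip(qi, kj)) for kj in fk]
        total = sum(scores)
        out.append([sum(s * vj[c] for s, vj in zip(scores, v)) / total
                    for c in range(len(v[0]))])
    return out


def linear_attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """phi(Q) (phi(K)^T V): summarizes keys and values once, O(n d^2) work, no n x n matrix."""
    fq, fk = phi(q), phi(k)
    d, dv, n = len(fk[0]), len(v[0]), len(fk)
    kv = [[sum(fk[j][a] * v[j][c] for j in range(n)) for c in range(dv)] for a in range(d)]
    k_sum = [sum(fk[j][a] for j in range(n)) for a in range(d)]  # normalizer terms
    out = []
    for qi in fq:
        denom = sum(qi[a] * k_sum[a] for a in range(d))
        out.append([sum(qi[a] * kv[a][c] for a in range(d)) / denom for c in range(dv)])
    return out


if __name__ == "__main__":
    Q = [[0.1, -0.4], [0.7, 0.2], [-0.3, 0.5]]
    K = [[0.2, 0.1], [-0.6, 0.3], [0.4, -0.2]]
    V = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
    print(quadratic_attention(Q, K, V))
    print(linear_attention(Q, K, V))  # same values, computed without the n x n matrix
```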

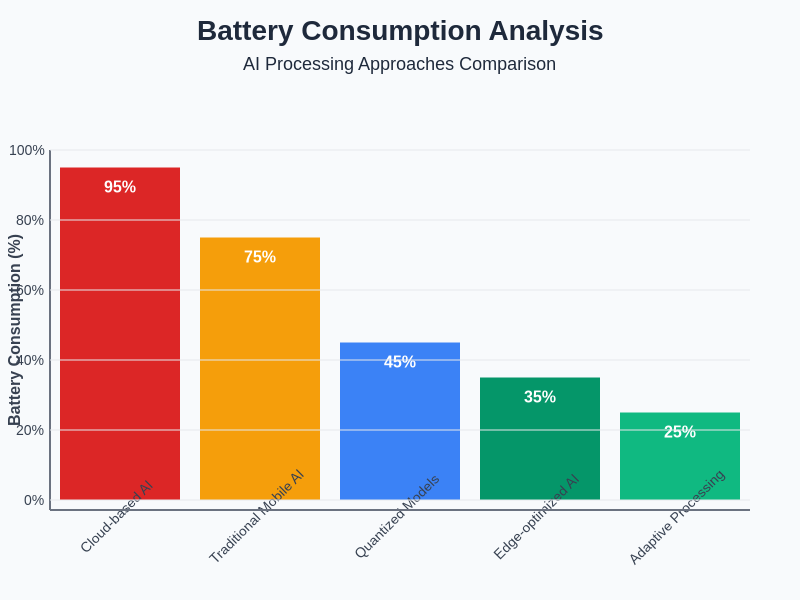

The quantitative analysis of different AI processing approaches reveals significant variations in power consumption across implementation strategies. Edge-based processing with optimized neural networks demonstrates substantial efficiency improvements compared to traditional cloud-dependent AI systems, particularly when combined with adaptive processing techniques that respond to device conditions.

Real-Time Optimization and Inference Acceleration

The acceleration of AI inference operations represents a critical component in achieving battery-efficient mobile game AI implementation. Hardware-specific optimization techniques leverage the unique capabilities of mobile processors, including specialized neural processing units, GPU compute shaders, and vector processing extensions that can execute AI operations with dramatically improved efficiency compared to general-purpose computing approaches. These optimizations often require platform-specific implementations that take advantage of manufacturer-specific acceleration features.

Batching strategies enable more efficient utilization of mobile AI hardware by grouping multiple inference operations together, reducing the overhead associated with individual processing requests. Dynamic batching techniques adjust batch sizes based on current computational load and available resources, ensuring optimal utilization without creating latency issues that could impact gameplay responsiveness. These strategies prove particularly effective for AI systems that process multiple game entities simultaneously, such as crowd behavior simulation or multi-agent pathfinding systems.
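A batcher of this kind can be sketched as a queue that flushes when either enough requests have accumulated or the oldest request has waited too long, so the batch size tracks current load instead of being fixed. The `DynamicBatcher` class, its `max_batch` and `max_wait_ms` parameters, and the `run_model` callback are hypothetical names; in a game, `run_model` would wrap a single call into whatever inference runtime the project uses.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Sequence, Tuple

Features = List[float]


@dataclass
class DynamicBatcher:
    """Groups per-entity inference requests into batches (hypothetical helper).

    A batch is flushed when it reaches `max_batch` requests or when the oldest
    pending request has waited `max_wait_ms`, so batch size follows the load.
    """
    run_model: Callable[[Sequence[Features]], List[int]]  # one model call per batch
    max_batch: int = 32
    max_wait_ms: float = 4.0
    _pending: List[Tuple[float, Features]] = field(default_factory=list, init=False)

    def submit(self, features: Features, now_ms: float) -> None:
        self._pending.append((now_ms, features))

    def maybe_flush(self, now_ms: float) -> List[int]:
        """Run one batch if it is full or stale; return that batch's results (or [])."""
        if not self._pending:
            return []
        full = len(self._pending) >= self.max_batch
        stale = now_ms - self._pending[0][0] >= self.max_wait_ms
        if not (full or stale):
            return []
        batch = self._pending[: self.max_batch]
        self._pending = self._pending[self.max_batch:]
        return self.run_model([features for _, features in batch])


if __name__ == "__main__":
    def toy_model(batch: Sequence[Features]) -> List[int]:
        # Stand-in for a real inference call: index of the largest feature.
        return [max(range(len(f)), key=f.__getitem__) for f in batch]

    batcher = DynamicBatcher(run_model=toy_model, max_batch=4, max_wait_ms=4.0)
    batcher.submit([0.1, 0.9], now_ms=0.0)
    batcher.submit([0.8, 0.2], now_ms=1.0)
    print(batcher.maybe_flush(now_ms=2.0))  # not full and not stale yet
    print(batcher.maybe_flush(now_ms=4.0))  # oldest request waited 4 ms: flush
```

Polling `maybe_flush` once per frame keeps the added latency near `max_wait_ms` under normal load, while busy scenes with many entities naturally fill larger batches.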

Cache optimization techniques reduce the memory traffic that accounts for much of the power in mobile AI workloads: fetching data from off-chip DRAM costs far more energy than the arithmetic performed on it, so memory access frequently dominates inference energy. Caching strategies keep frequently accessed model weights and intermediate results close to the processor, reducing repeated memory transfers, while eviction policies keep the cache effective within limited mobile memory.
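Hardware caches are managed by the processor, but the same principle applies at the application level: a game can keep recent inference results for repeated inputs instead of recomputing them. The sketch below is a small least-recently-used (LRU) cache built on `collections.OrderedDict`; keying it on a coarsened game state, such as a bucketed distance to the player, is an assumption about how a game might use it. For pure functions, Python's built-in `functools.lru_cache` provides the same eviction policy.

```python
from collections import OrderedDict
from typing import Callable, Generic, Hashable, TypeVar

V = TypeVar("V")


class LRUCache(Generic[V]):
    """Bounded cache that evicts the least recently used entry first."""

    def __init__(self, capacity: int) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._data: "OrderedDict[Hashable, V]" = OrderedDict()
        self.hits = 0
        self.misses = 0

    def get_or_compute(self, key: Hashable, compute: Callable[[], V]) -> V:
        """Return the cached value for `key`, computing and storing it on a miss."""
        if key in self._data:
            self._data.move_to_end(key)  # mark as most recently used
            self.hits += 1
            return self._data[key]
        self.misses += 1
        value = compute()
        self._data[key] = value
        if len(self._data) > self.capacity:
            self._data.popitem(last=False)  # evict the least recently used entry
        return value


if __name__ == "__main__":
    cache: LRUCache[str] = LRUCache(capacity=128)

    def expensive_decision(distance_bucket: int) -> str:
        # Stand-in for a model call; hypothetical mapping from distance to behavior.
        return "attack" if distance_bucket < 3 else "patrol"

    for distance in (1.2, 1.4, 7.9, 1.1):
        bucket = int(distance)  # coarsen the input so nearby states share an entry
        action = cache.get_or_compute(bucket, lambda: expensive_decision(bucket))
        print(distance, action)
    print(f"hits={cache.hits}, misses={cache.misses}")
```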

The integration of multiple optimization approaches creates synergistic effects that enable sophisticated AI functionality within practical mobile power budgets.

Player Behavior Prediction and Personalization

Battery-efficient AI systems in mobile games can support player behavior prediction models that enhance engagement while keeping computational overhead low. These systems analyze player interaction patterns, session duration preferences, and skill progression trajectories to build lightweight predictive models that run efficiently on mobile hardware. Because the models are small, their predictions enable proactive content generation and difficulty adjustment without adding constant computational load during gameplay.

Personalization algorithms optimized for mobile deployment can use federated learning, in which each device trains on its own local data and only model updates, never the raw player data, are sent to a server that aggregates them into a shared model. This preserves privacy and replaces continuous streaming of gameplay data with occasional, compact update uploads, which can be scheduled for when the device is charging or on Wi-Fi to limit battery impact. Local learning algorithms also adapt to individual player preferences and skill levels, creating AI behaviors that evolve with continued interaction.
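The core of federated averaging (FedAvg) fits in two functions: each device runs a few steps of training on its own samples, and the server combines the resulting weight vectors, weighting each by how many samples it saw. The linear model, squared-error loss, learning rate, and per-device data below are simplifying assumptions for illustration; a real deployment would typically add client sampling, update compression, and secure aggregation.

```python
from typing import List, Sequence, Tuple

Weights = List[float]
Sample = Tuple[Sequence[float], float]  # (features, target)


def local_sgd(weights: Weights, samples: Sequence[Sample],
              lr: float = 0.05, epochs: int = 1) -> Weights:
    """On-device training of a linear model y ~ w . x with squared error.

    Only the returned weights leave the device; the samples do not.
    """
    w = list(weights)
    for _ in range(epochs):
        for x, y in samples:
            error = sum(wi * xi for wi, xi in zip(w, x)) - y
            w = [wi - lr * error * xi for wi, xi in zip(w, x)]
    return w


def federated_average(updates: Sequence[Tuple[Weights, int]]) -> Weights:
    """Server-side FedAvg step: average client weights, weighted by local sample count."""
    total = sum(count for _, count in updates)
    if total == 0:
        raise ValueError("at least one client must report samples")
    dim = len(updates[0][0])
    return [sum(w[i] * count for w, count in updates) / total for i in range(dim)]


if __name__ == "__main__":
    global_w = [0.0, 0.0]
    # Hypothetical per-device data; in practice each list stays on its own phone.
    device_data = [
        [([1.0, 0.0], 1.0), ([0.0, 1.0], 2.0)],
        [([1.0, 1.0], 3.0)],
    ]
    for round_ in range(50):
        updates = [(local_sgd(global_w, data, lr=0.1), len(data)) for data in device_data]
        global_w = federated_average(updates)
    print([round(w, 2) for w in global_w])
```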

The implementation of player clustering techniques enables efficient resource allocation by grouping similar players and applying appropriate AI processing strategies based on cluster characteristics. Players requiring more sophisticated AI assistance receive enhanced computational resources, while casual players benefit from optimized algorithms that prioritize battery conservation over advanced AI features. This segmentation approach ensures optimal resource utilization across diverse player populations with varying performance requirements.
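Player clustering can be illustrated with Lloyd's k-means algorithm over a few engagement features per player. The feature choice (average session length in minutes and sessions per day) and the deterministic initialization from the first k players are assumptions made to keep the sketch short and reproducible; a production system would typically use k-means++ initialization and normalized features.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]


def kmeans(points: Sequence[Point], k: int, iterations: int = 20) -> Tuple[List[Point], List[int]]:
    """Lloyd's k-means. Initial centroids are the first k points, which keeps
    the sketch deterministic; k-means++ initialization is the usual choice."""
    if not 0 < k <= len(points):
        raise ValueError("k must be between 1 and len(points)")
    centroids: List[Point] = [tuple(p) for p in points[:k]]
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each player joins the nearest centroid.
        labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        # Update step: move each centroid to the mean of its members.
        updated: List[Point] = []
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:
                updated.append(tuple(sum(axis) / len(members) for axis in zip(*members)))
            else:
                updated.append(centroids[c])  # leave an empty cluster where it was
        if updated == centroids:
            break  # converged
        centroids = updated
    return centroids, labels


if __name__ == "__main__":
    # Hypothetical players: (average session length in minutes, sessions per day).
    players = [(5.0, 1.0), (42.0, 4.0), (6.0, 1.5), (40.0, 5.0), (4.5, 0.5), (45.0, 4.5)]
    centroids, labels = kmeans(players, k=2)
    print(labels)     # cluster index per player
    print(centroids)  # e.g. a casual group and a heavily engaged group
```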

Dynamic Difficulty Adjustment Systems

Intelligent difficulty scaling is one of the most established applications of battery-efficient AI in mobile gaming: machine learning algorithms continuously analyze player performance metrics to keep the challenge level appropriate without overwhelming computational resources. These systems use lightweight models trained to recognize frustration patterns, skill development trajectories, and engagement indicators that inform real-time difficulty adjustments. Such models run with minimal computational overhead and can improve player retention and satisfaction.

Adaptive difficulty systems implement multi-layered optimization strategies that consider both immediate player performance and long-term engagement patterns when making adjustment decisions. Short-term adaptations respond to immediate gameplay challenges, while longer-term learning algorithms identify broader skill development trends that inform more substantial difficulty curve modifications. This multi-temporal approach ensures that difficulty adjustments remain appropriate across extended gaming sessions while minimizing the computational complexity required for effective implementation.
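One way to realize this multi-timescale idea is to track two exponential moving averages of the player's success rate, a fast one that reacts to the last few attempts and a slow one that follows the longer-term trend, and to nudge difficulty toward a target success rate using both. This controller is an illustrative assumption rather than a published algorithm; the 0.6 target, the smoothing factors, and the gains are arbitrary starting points. Each update is a handful of arithmetic operations, which is why this style of adjustment costs almost nothing at runtime.

```python
from dataclasses import dataclass, field


@dataclass
class DifficultyController:
    """Two-timescale difficulty adjustment (illustrative, not a published method).

    `difficulty` runs from 0.0 (easiest) to 1.0 (hardest). The fast average
    reacts to recent attempts; the slow average tracks the long-term trend.
    """
    target_success: float = 0.6
    fast_alpha: float = 0.3    # weight of the newest attempt in the fast average
    slow_alpha: float = 0.02   # weight of the newest attempt in the slow average
    fast_gain: float = 0.05    # how strongly short-term performance moves difficulty
    slow_gain: float = 0.02    # how strongly the long-term trend moves difficulty
    difficulty: float = 0.5
    fast_rate: float = field(default=0.0, init=False)
    slow_rate: float = field(default=0.0, init=False)

    def __post_init__(self) -> None:
        # Start both averages at the target so early attempts do not overreact.
        self.fast_rate = self.target_success
        self.slow_rate = self.target_success

    def record(self, succeeded: bool) -> float:
        """Update both averages with one attempt and return the new difficulty."""
        outcome = 1.0 if succeeded else 0.0
        self.fast_rate += self.fast_alpha * (outcome - self.fast_rate)
        self.slow_rate += self.slow_alpha * (outcome - self.slow_rate)
        # Above-target success makes the game harder; below-target makes it easier.
        step = (self.fast_gain * (self.fast_rate - self.target_success)
                + self.slow_gain * (self.slow_rate - self.target_success))
        self.difficulty = min(1.0, max(0.0, self.difficulty + step))
        return self.difficulty


if __name__ == "__main__":
    controller = DifficultyController()
    for outcome in [True, True, True, False, True, True, True, True]:
        level = controller.record(outcome)
    print(f"difficulty after a winning streak: {level:.3f}")
```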

The integration of contextual awareness enables difficulty adjustment systems to consider external factors such as device orientation, ambient noise levels, and usage patterns that may impact player performance independent of skill level. These contextual inputs allow AI systems to make more nuanced adjustment decisions that account for environmental factors affecting gameplay, creating more empathetic AI responses that enhance overall player experience while maintaining computational efficiency.

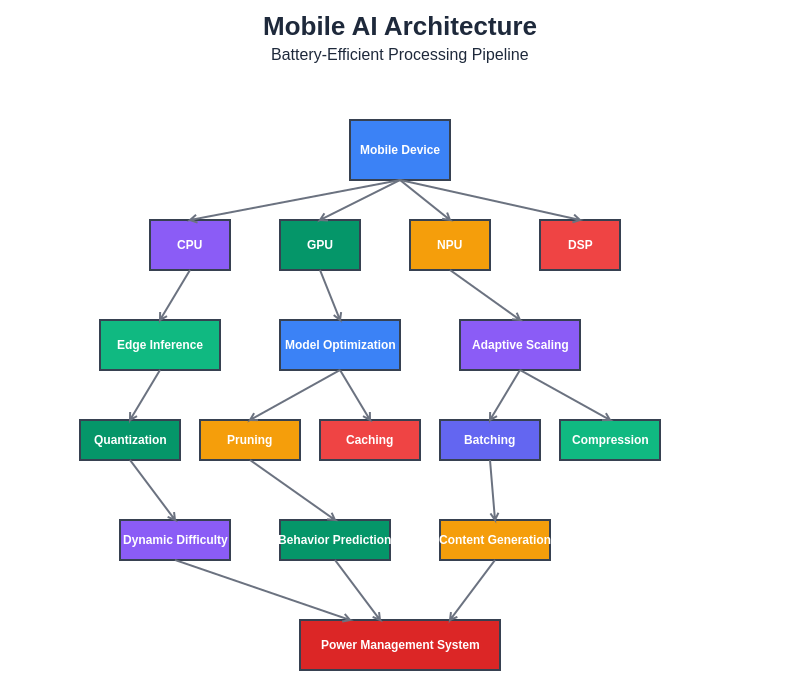

The architectural overview of battery-efficient mobile game AI demonstrates the integration of multiple optimization strategies working in concert to achieve sophisticated AI functionality within mobile power constraints. Edge processing, model optimization, and adaptive algorithms combine to create systems capable of complex intelligent behavior while preserving device battery life.

Content Generation and Procedural AI

Battery-efficient procedural content generation uses optimized AI algorithms to create dynamic gaming experiences without shipping large sets of pre-generated assets that consume device storage and memory. These systems use lightweight generative models to produce content such as level layouts, character variations, and narrative elements with minimal computational resources. Because content is generated from compact inputs rather than stored, the procedural approach offers effectively unlimited variety while maintaining consistent performance across diverse mobile hardware.
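Much of the storage saving comes from determinism: if generation depends only on a seed, the game can store or transmit the seed instead of the level. The sketch below uses a classic seeded random walk rather than a learned generative model, and the grid size and step count are arbitrary; a lightweight learned model could take the walk's place while keeping the same seed-in, layout-out contract.

```python
import random
from typing import List


def generate_cave(seed: int, width: int = 16, height: int = 8, steps: int = 60) -> List[str]:
    """Carve floor tiles with a seeded random walk; '#' is wall and '.' is floor.

    A private random.Random instance means the layout depends only on the seed
    (for a given Python version), not on any other use of the random module.
    """
    rng = random.Random(seed)
    grid = [["#"] * width for _ in range(height)]
    x, y = width // 2, height // 2
    for _ in range(steps):
        grid[y][x] = "."
        dx, dy = rng.choice([(1, 0), (-1, 0), (0, 1), (0, -1)])
        # Clamp so the outer ring of tiles always stays solid wall.
        x = min(width - 2, max(1, x + dx))
        y = min(height - 2, max(1, y + dy))
    return ["".join(row) for row in grid]


if __name__ == "__main__":
    level = generate_cave(seed=42)
    print("\n".join(level))
    # Storing or sending the seed (a few bytes) is enough to rebuild this level.
```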

Streaming generation techniques implement AI content creation on-demand, generating game assets just before they become visible to players and discarding unnecessary data to minimize memory footprint. This approach prevents memory bloat that traditionally impacts mobile performance while ensuring that generated content maintains high quality standards. The streaming algorithms coordinate with predictive systems to anticipate content requirements and begin generation processes early enough to prevent noticeable delays during gameplay.
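Streaming is often organized around fixed-size chunks: each frame, the game computes which chunks fall within a radius of the player, generates the missing ones, and discards those that have fallen out of range. The `ChunkStreamer` class, its chunk size, and its radius are hypothetical; the radius doubles as a simple look-ahead margin, so content already exists slightly beyond the chunk the player currently occupies.

```python
from typing import Callable, Dict, Set, Tuple

ChunkKey = Tuple[int, int]


class ChunkStreamer:
    """Keeps generated content only for chunks near the player (hypothetical helper)."""

    def __init__(self, generate: Callable[[ChunkKey], object],
                 chunk_size: float = 32.0, radius: int = 1) -> None:
        self.generate = generate        # e.g. a seeded procedural generator
        self.chunk_size = chunk_size    # world units per chunk side
        self.radius = radius            # rings of chunks kept around the player
        self.loaded: Dict[ChunkKey, object] = {}

    def update(self, player_x: float, player_y: float) -> Tuple[Set[ChunkKey], Set[ChunkKey]]:
        """Generate chunks that came into range and free those that left it."""
        cx = int(player_x // self.chunk_size)
        cy = int(player_y // self.chunk_size)
        r = range(-self.radius, self.radius + 1)
        wanted = {(cx + dx, cy + dy) for dx in r for dy in r}
        current = set(self.loaded)
        created, dropped = wanted - current, current - wanted
        for key in dropped:
            del self.loaded[key]        # release memory for out-of-range content
        for key in created:
            self.loaded[key] = self.generate(key)
        return created, dropped


if __name__ == "__main__":
    streamer = ChunkStreamer(lambda key: f"terrain for {key}", chunk_size=32.0, radius=1)
    print(streamer.update(10.0, 10.0))
    print(streamer.update(40.0, 10.0))  # player crossed into the next chunk east
```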

Hierarchical generation strategies implement multi-resolution content creation where broad structural elements are generated using simplified algorithms, while detailed features utilize more sophisticated AI processes only when necessary. This layered approach enables efficient resource allocation that prioritizes computational effort based on content importance and player proximity, ensuring that the most visible and interactive elements receive appropriate AI attention while background elements utilize optimized generation techniques.

Multiplayer AI Synchronization

The implementation of battery-efficient AI in multiplayer mobile games requires sophisticated synchronization strategies that maintain consistent game states across multiple devices while minimizing the computational and communication overhead associated with distributed AI processing. Predictive synchronization techniques enable devices to anticipate AI behavior based on shared game state information, reducing the frequency of synchronization messages while maintaining gameplay consistency across all participants.
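A common form of predictive synchronization is dead reckoning: peers extrapolate each entity from its last shared position and velocity, and the owning device sends a fresh update only when the real state drifts too far from that prediction. The `EntityState` message format and the 0.5-unit error threshold below are illustrative assumptions.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass
class EntityState:
    """Last state shared with peers (hypothetical message format)."""
    x: float
    y: float
    vx: float
    vy: float
    t: float  # timestamp in seconds


def extrapolate(state: EntityState, now: float) -> Tuple[float, float]:
    """Position peers assume, by constant-velocity extrapolation from the last update."""
    dt = now - state.t
    return state.x + state.vx * dt, state.y + state.vy * dt


def needs_update(last_sent: EntityState, actual_x: float, actual_y: float,
                 now: float, threshold: float = 0.5) -> bool:
    """Owner-side check: send a new state only if peers' prediction has drifted too far."""
    px, py = extrapolate(last_sent, now)
    return math.hypot(actual_x - px, actual_y - py) > threshold


if __name__ == "__main__":
    sent = EntityState(x=0.0, y=0.0, vx=2.0, vy=0.0, t=0.0)
    print(extrapolate(sent, now=1.5))             # where peers draw the entity
    print(needs_update(sent, 3.2, 0.0, now=1.5))  # small drift: stay silent
    print(needs_update(sent, 3.0, 1.0, now=1.5))  # entity turned: send an update
```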

Distributed AI processing allows different devices to handle specific AI computations based on their current resource availability and performance capabilities, creating dynamic load balancing that adapts to varying hardware configurations and battery levels among participants. More powerful devices can assume greater AI processing responsibilities, while devices with limited resources focus on essential local computations that directly impact their player’s immediate experience.

Consensus algorithms specifically optimized for mobile environments ensure that critical AI decisions remain consistent across all participants while allowing non-critical AI behaviors to vary slightly between devices without impacting gameplay integrity. These algorithms minimize communication requirements while maintaining the shared experience quality that defines successful multiplayer gaming, enabling sophisticated multi-device AI coordination within practical mobile networking and power constraints.

Performance Monitoring and Analytics

Advanced monitoring systems enable continuous optimization of battery-efficient AI implementation through real-time analysis of power consumption patterns, thermal behavior, and performance metrics across diverse mobile hardware configurations. These analytics platforms collect telemetry data that informs ongoing optimization efforts while respecting privacy constraints and minimizing additional power consumption from monitoring overhead. The collected data enables developers to identify optimization opportunities and validate the effectiveness of power-saving strategies across different device types and usage patterns.

Machine learning algorithms trained on monitoring data can predict device performance degradation and proactively adjust AI processing strategies to maintain consistent gameplay experiences throughout extended sessions. These predictive systems learn from historical performance patterns to anticipate thermal throttling, battery depletion effects, and other performance-limiting factors that could impact AI functionality. Early warning systems enable graceful degradation of AI features rather than sudden performance drops that could negatively impact player experience.
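A very simple version of such a prediction fits a straight line to recent temperature samples and extrapolates when the throttling threshold will be reached. The sketch uses ordinary least squares; the sample readings and the threshold value are assumptions that would need to be calibrated per device model, and real thermal behavior is not linear over long horizons.

```python
from typing import List, Optional, Tuple


def seconds_until_threshold(samples: List[Tuple[float, float]],
                            threshold_c: float) -> Optional[float]:
    """Fit temp = intercept + slope * t by least squares over (t_seconds, temp_c)
    samples and return the seconds after the last sample at which the fitted line
    reaches `threshold_c`. Returns None when there is no upward trend."""
    n = len(samples)
    if n < 2:
        return None
    mean_t = sum(t for t, _ in samples) / n
    mean_c = sum(c for _, c in samples) / n
    var_t = sum((t - mean_t) ** 2 for t, _ in samples)
    if var_t == 0:
        return None
    slope = sum((t - mean_t) * (c - mean_c) for t, c in samples) / var_t
    if slope <= 0:
        return None  # flat or cooling: no throttling predicted
    intercept = mean_c - slope * mean_t
    t_hit = (threshold_c - intercept) / slope
    return max(0.0, t_hit - samples[-1][0])  # 0.0: fitted line already at the limit


if __name__ == "__main__":
    readings = [(0.0, 35.0), (10.0, 36.0), (20.0, 37.0), (30.0, 38.0)]  # hypothetical
    headroom = seconds_until_threshold(readings, threshold_c=41.0)      # hypothetical limit
    print(f"predicted throttling in {headroom:.0f} s")
```

With readings rising 1 °C every 10 seconds and a hypothetical 41 °C limit, the function reports 30 seconds of headroom, giving the game time to step down to a cheaper AI configuration before the hardware throttles.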

Automated optimization systems utilize monitoring data to continuously refine AI algorithms and resource allocation strategies without requiring manual intervention from developers. These systems implement genetic algorithms and reinforcement learning techniques to discover optimal configuration parameters for different device types and usage scenarios, creating self-improving AI systems that become more efficient over time while maintaining or enhancing functionality.

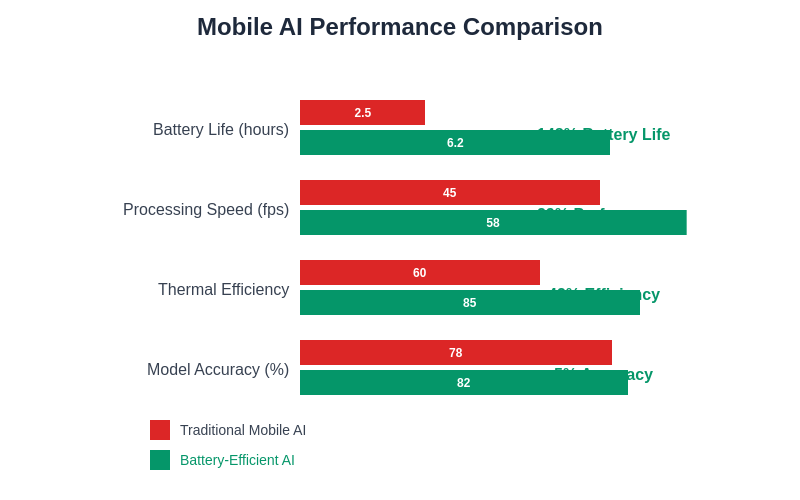

The comprehensive performance analysis demonstrates the effectiveness of various optimization strategies in achieving battery-efficient AI implementation. The comparison reveals significant improvements in battery life, thermal management, and sustained performance when utilizing optimized AI architectures compared to traditional implementation approaches.

Future Trends and Emerging Technologies

The evolution of mobile AI hardware continues to open new possibilities for battery-efficient game AI, and emerging neuromorphic processors promise large improvements in power efficiency for certain AI workloads. These processors mimic biological neural structures, typically using event-driven spiking computation, to achieve greatly reduced power consumption for specific operations, potentially enabling always-on AI features within minimal power budgets. Neuromorphic chips remain largely research and specialized hardware rather than standard smartphone components, but early implementations have shown promise for tasks such as pattern recognition and simple decision-making, with reported power consumption orders of magnitude lower than conventional digital processors on suitable workloads.

Advanced battery technologies, including solid-state batteries and improved lithium-ion chemistries, are expected to provide higher energy densities that allow more sophisticated AI features without compromising device portability or usage time. Such improvements would expand the feasible complexity of mobile AI systems while preserving the form factors and weight that successful mobile devices require. The combination of better power sources and more efficient AI processing could unlock new categories of intelligent mobile gaming experiences.

The combination of 5G networks and edge computing infrastructure enables hybrid AI processing models in which computationally intensive operations are offloaded to nearby edge servers, with far lower latency than round trips to distant cloud data centers. This lets mobile games draw on more sophisticated AI during periods of good connectivity while keeping essential AI functionality running locally when offline. A well-designed handoff between edge and local processing can then deliver the best available performance across varying connectivity conditions.

Quantum computing research continues to explore potential applications for mobile AI optimization, though practical implementation remains years away from consumer devices. Quantum algorithms show theoretical promise for specific AI optimization problems that could dramatically improve the efficiency of neural network training and inference operations. While current quantum systems require extreme cooling and isolation incompatible with mobile devices, the fundamental algorithmic insights may inform new classical optimization techniques that benefit mobile AI implementation.

Implementation Best Practices and Guidelines

Successful implementation of battery-efficient mobile game AI requires adherence to established best practices that have emerged from extensive real-world deployment experience across diverse mobile gaming applications. Profiling and benchmarking represent fundamental activities that must precede AI implementation, enabling developers to understand the specific performance characteristics and power consumption patterns of their target hardware platforms. Comprehensive profiling identifies bottlenecks and optimization opportunities that might not be apparent through theoretical analysis alone.
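A minimal timing harness captures the basic profiling loop: warm up, time many runs, and report typical and tail latency. The sketch assumes the average power during the run is measured separately, for example with an external power monitor or the platform's power-rail tracing, since wall-clock timing alone cannot observe energy; subtract an idle baseline first if the goal is the AI's incremental cost. `profile_inference` and its parameters are hypothetical names.

```python
import statistics
import time
from typing import Callable, Dict, Optional


def profile_inference(run_once: Callable[[], object], iterations: int = 200,
                      warmup: int = 20, avg_power_w: Optional[float] = None) -> Dict[str, float]:
    """Time repeated calls to `run_once` and summarize latency (hypothetical helper).

    `avg_power_w` must come from a separate power measurement taken during the
    run; timing alone cannot observe energy.
    """
    if iterations < 2:
        raise ValueError("need at least two timed iterations for percentiles")
    for _ in range(warmup):
        run_once()  # let caches, runtime setup, and clock governors settle first
    latencies_ms = []
    for _ in range(iterations):
        start = time.perf_counter()
        run_once()
        latencies_ms.append((time.perf_counter() - start) * 1000.0)
    result = {
        "mean_ms": statistics.fmean(latencies_ms),
        "p50_ms": statistics.median(latencies_ms),
        "p95_ms": statistics.quantiles(latencies_ms, n=20)[18],  # 19 cut points; index 18 = 95th
    }
    if avg_power_w is not None:
        result["energy_mj"] = avg_power_w * result["mean_ms"]  # W x ms = mJ per inference
    return result


if __name__ == "__main__":
    # Stand-in workload and a hypothetical 2.5 W reading; use a real model call on device.
    stats = profile_inference(lambda: sum(i * i for i in range(10_000)), avg_power_w=2.5)
    print({name: round(value, 3) for name, value in stats.items()})
```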

Iterative optimization approaches enable gradual refinement of AI algorithms through systematic testing and measurement of power consumption improvements. These iterative cycles allow developers to validate optimization strategies and identify the most effective techniques for their specific applications without compromising functionality or user experience. The iterative approach also enables risk mitigation by implementing changes incrementally rather than attempting wholesale AI architecture modifications that could introduce stability issues.

Testing across diverse device configurations ensures that battery optimization strategies remain effective across the wide range of mobile hardware specifications encountered in real-world deployment scenarios. Different processors, memory configurations, and thermal management systems can significantly impact the effectiveness of specific optimization techniques, requiring validation across representative device samples to ensure consistent performance. Comprehensive testing protocols should include both synthetic benchmarks and real-world usage scenarios to capture the full spectrum of performance characteristics.

Documentation and knowledge sharing within development teams enable consistent application of optimization techniques and prevent the introduction of inefficient AI implementations that could compromise battery performance. Establishing coding standards and architectural guidelines specific to mobile AI development ensures that all team members understand the constraints and best practices necessary for successful implementation. Regular training and knowledge transfer sessions help maintain expertise as mobile AI technologies continue to evolve rapidly.

Industry Impact and Market Evolution

The adoption of battery-efficient AI in mobile gaming has gone hand in hand with broader industry adoption of similar optimization techniques across other mobile application categories, demonstrating the transferable value of power-conscious AI development practices. Social media, productivity, and utility applications increasingly incorporate AI features optimized with the same techniques game developers rely on, such as quantization, pruning, and on-device inference, creating a positive feedback loop that drives continued innovation in mobile AI efficiency.

Market demand for longer battery life in mobile devices has elevated the importance of power-efficient software development, with AI optimization becoming a key competitive differentiator for mobile applications. Consumers increasingly evaluate mobile games based on their impact on device battery life, creating market pressure that rewards developers who prioritize power efficiency alongside functionality and entertainment value. This market dynamic has accelerated the adoption of battery-efficient AI techniques across the mobile gaming industry.

The emergence of specialized mobile AI development tools and frameworks has democratized access to power optimization techniques, enabling smaller development teams to implement sophisticated AI features without requiring extensive hardware-level optimization expertise. These tools abstract many of the complex optimization details while providing developers with the control necessary to achieve optimal performance for their specific applications. The availability of these tools has accelerated innovation in mobile AI applications across the gaming industry.

Investment in mobile AI optimization research has increased substantially as the strategic importance of battery-efficient AI becomes apparent to industry leaders. Major mobile platform providers, semiconductor manufacturers, and game development studios have allocated significant resources toward advancing mobile AI efficiency, creating a collaborative ecosystem focused on pushing the boundaries of what’s possible within mobile power constraints. This investment has accelerated the pace of innovation and enabled breakthrough developments that benefit the entire mobile gaming ecosystem.

Disclaimer

This article is for informational purposes only and does not constitute professional advice. The views expressed are based on current understanding of mobile AI technologies and battery optimization techniques. Readers should conduct their own research and consider their specific technical requirements when implementing mobile AI systems. The effectiveness of optimization strategies may vary depending on hardware configurations, application requirements, and usage patterns. Power consumption and performance improvements mentioned are representative examples and actual results may vary based on implementation details and device specifications.