The rise of artificial intelligence applications has made it harder to design scalable, secure, and efficient multi-tenant Software as a Service (SaaS) architectures. As organizations deploy AI-powered solutions to serve diverse customer bases, isolation patterns and architectural strategies become central design concerns. Multi-tenant AI systems must balance resource efficiency, data security, regulatory compliance, and performance while staying flexible enough to adapt to rapidly evolving AI technologies and customer requirements.

The complexity of modern AI workloads, combined with the stringent requirements of multi-tenant environments, demands a comprehensive understanding of architectural patterns that can effectively address isolation, scalability, and operational challenges while delivering exceptional user experiences across diverse tenant profiles.

Fundamentals of Multi-Tenant AI Architecture

Multi-tenant AI architecture represents a sophisticated approach to serving multiple customers or organizations through a single, shared infrastructure while maintaining logical separation and isolation between different tenant data and operations. This architectural paradigm enables significant cost efficiencies through resource sharing while providing each tenant with the appearance of having dedicated AI services tailored to their specific needs and requirements. The fundamental challenge lies in balancing shared resource utilization with stringent isolation requirements that ensure data privacy, security, and performance predictability.

The complexity of multi-tenant AI systems extends beyond traditional web applications due to the computational intensity and data sensitivity inherent in AI workloads. Machine learning models require substantial computational resources for training and inference, while handling diverse data types ranging from structured datasets to unstructured content such as images, text, and audio. These characteristics necessitate specialized architectural patterns that can efficiently manage resource allocation, ensure data isolation, and maintain consistent performance across varying tenant workloads and usage patterns.

The architectural decisions made in multi-tenant AI systems have far-reaching implications for scalability, security, compliance, and operational efficiency. Organizations must carefully consider factors such as tenant data isolation levels, resource sharing strategies, model deployment patterns, and infrastructure scaling approaches to create systems that can effectively serve diverse customer bases while maintaining high standards of security and performance. The architectural foundation established during the initial design phase significantly influences the system’s ability to adapt to changing requirements and scale to accommodate growing tenant populations.

Isolation Patterns and Strategies

Data isolation represents the cornerstone of successful multi-tenant AI architectures, encompassing multiple layers of separation that protect tenant information while enabling efficient resource utilization. Physical isolation, the most secure but least cost-effective approach, involves completely separate infrastructure for each tenant, including dedicated servers, databases, and AI model instances. This pattern provides maximum security and performance predictability but sacrifices the economic benefits of resource sharing that make multi-tenant architectures attractive.

Logical isolation patterns offer more balanced approaches that maintain security while enabling resource sharing through sophisticated separation mechanisms. Database-level isolation can be achieved through separate schemas, table-level partitioning, or row-level security policies that ensure tenant data remains segregated even within shared database instances. Application-level isolation involves implementing robust authentication, authorization, and data filtering mechanisms that prevent cross-tenant data access while maintaining shared application logic and infrastructure.
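To make application-level data filtering concrete, here is a minimal sketch in Python. It assumes a shared table in which every row carries a `tenant_id` column; the `Document` and `TenantScopedStore` names are hypothetical and an in-memory list stands in for the database. The point is that the tenant filter lives inside the data access layer rather than in each caller.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Document:
    tenant_id: str
    doc_id: str
    body: str


class TenantScopedStore:
    """In-memory stand-in for a shared table with a tenant_id column."""

    def __init__(self) -> None:
        self._rows: List[Document] = []

    def insert(self, tenant_id: str, doc_id: str, body: str) -> None:
        self._rows.append(Document(tenant_id, doc_id, body))

    def query(self, tenant_id: str) -> List[Document]:
        # The tenant filter is applied here, not by callers, so business
        # logic that forgets to filter cannot see another tenant's rows.
        return [row for row in self._rows if row.tenant_id == tenant_id]

    def get(self, tenant_id: str, doc_id: str) -> Document:
        for row in self.query(tenant_id):
            if row.doc_id == doc_id:
                return row
        # Same error whether the document is missing or belongs to another
        # tenant, so the response does not reveal that it exists.
        raise KeyError(doc_id)


store = TenantScopedStore()
store.insert("acme", "d1", "quarterly report")
store.insert("globex", "d2", "design notes")
acme_docs = store.query("acme")  # contains only acme's document
```

In a relational database the same idea is typically expressed with row-level security policies or a mandatory tenant predicate on every query, so that the database enforces the filter even if application code has a bug.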

Container-based isolation leverages technologies such as Docker and Kubernetes to create lightweight, isolated execution environments for different tenants while sharing underlying infrastructure resources. This approach provides a good balance between security, resource efficiency, and operational flexibility, enabling dynamic scaling and resource allocation based on tenant-specific requirements.

The selection of appropriate isolation patterns requires careful evaluation of security requirements, cost constraints, operational complexity, and scalability objectives. Each pattern offers distinct advantages and trade-offs that must be aligned with specific business requirements and regulatory compliance needs.

Network-level isolation adds another crucial layer of protection through virtual private clouds, software-defined networking, and micro-segmentation techniques that control traffic flow and prevent unauthorized access between tenant environments. These network isolation patterns are particularly important in AI applications where large data transfers and model synchronization operations can create potential security vulnerabilities if not properly controlled and monitored.

Data Architecture and Segregation

The data architecture in multi-tenant AI systems must address complex requirements for storing, processing, and securing diverse data types while ensuring efficient access patterns and compliance with regulatory requirements. Tenant data segregation strategies range from physical database separation to sophisticated partitioning schemes that maintain logical isolation within shared storage systems. The choice of segregation approach significantly impacts system performance, cost efficiency, and operational complexity.

Horizontal partitioning, also known as sharding, distributes tenant data across multiple database instances based on tenant identifiers, providing natural isolation boundaries while enabling independent scaling of storage resources. Vertical partitioning involves separating different data types or functional areas into distinct databases, allowing for specialized optimization and access control policies tailored to specific data characteristics and usage patterns.
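As an illustration of routing by tenant identifier, the sketch below maps a tenant to a shard with a stable hash. The function name and the fixed shard count are assumptions for the example, not a prescribed design.

```python
import hashlib


def shard_for_tenant(tenant_id: str, shard_count: int) -> int:
    """Map a tenant to a shard index in the range [0, shard_count)."""
    if shard_count <= 0:
        raise ValueError("shard_count must be positive")
    # Python's built-in hash() is randomized per process for strings, so a
    # cryptographic digest is used to get the same answer on every node.
    digest = hashlib.sha256(tenant_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % shard_count


shard = shard_for_tenant("acme", shard_count=8)
```

One trade-off worth noting: with plain modulo routing, changing `shard_count` remaps most tenants. Systems that expect to add shards often keep an explicit tenant-to-shard directory or use consistent hashing instead.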

Hybrid partitioning strategies combine multiple approaches to create sophisticated data distribution patterns that optimize for various factors including data locality, access patterns, regulatory requirements, and performance characteristics. These strategies often involve dynamic data placement algorithms that can automatically distribute and relocate tenant data based on usage patterns, growth trends, and resource availability.

Data encryption and key management represent critical components of multi-tenant data architecture, requiring sophisticated approaches to protect tenant data both at rest and in transit. Tenant-specific encryption keys ensure that data remains protected even in the event of unauthorized access to shared storage systems, while key rotation and management policies maintain long-term security and compliance with evolving regulatory requirements.
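One possible way to obtain tenant-specific keys is to derive them from a master key, so that each tenant and each key version gets a distinct key. The sketch below assumes that approach and implements HKDF (RFC 5869) with SHA-256 from the Python standard library; the `tenant_data_key` helper and its label format are hypothetical. In practice, a managed key management service or a vetted cryptography library would usually take this role.

```python
import hashlib
import hmac


def hkdf_sha256(key_material: bytes, salt: bytes, info: bytes, length: int = 32) -> bytes:
    """HKDF (RFC 5869) with SHA-256, built from the standard library."""
    hash_len = hashlib.sha256().digest_size
    if length > 255 * hash_len:
        raise ValueError("requested key is too long for HKDF")
    # Extract: condense the input key material into a pseudorandom key.
    prk = hmac.new(salt or bytes(hash_len), key_material, hashlib.sha256).digest()
    # Expand: stretch the pseudorandom key, bound to the context in `info`.
    output, block, counter = b"", b"", 1
    while len(output) < length:
        block = hmac.new(prk, block + info + bytes([counter]), hashlib.sha256).digest()
        output += block
        counter += 1
    return output[:length]


def tenant_data_key(master_key: bytes, tenant_id: str, key_version: int) -> bytes:
    # Assumption: tenant ids never contain the "|" separator.
    info = f"tenant-data-key|{tenant_id}|v{key_version}".encode("utf-8")
    return hkdf_sha256(master_key, salt=b"", info=info)
```

Including the version in the derivation context gives a simple handle for rotation: bumping `key_version` yields a new key for the tenant, and old data can be re-encrypted gradually.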

AI Model Deployment and Sharing

The deployment and management of AI models in multi-tenant environments presents unique challenges that require careful consideration of performance, resource utilization, and tenant-specific customization requirements. Shared model architectures leverage common base models that serve multiple tenants, providing cost efficiency and simplified maintenance while potentially limiting customization capabilities for specific tenant needs and use cases.

Dedicated model instances provide each tenant with isolated model deployments that can be customized and optimized for specific requirements, datasets, and performance objectives. This approach offers maximum flexibility and performance predictability but requires significant additional resources and operational complexity to manage multiple model versions, updates, and scaling requirements across diverse tenant populations.

Hybrid model deployment strategies combine shared and dedicated approaches through techniques such as model fine-tuning, where base models are shared across tenants but specialized layers or parameters are maintained for tenant-specific customizations. This approach balances resource efficiency with customization capabilities while maintaining reasonable operational complexity and enabling efficient model updates and improvements.
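The shared-base, tenant-specific-parameters idea can be sketched in a few lines of Python. Everything here is a toy assumption: `shared_base_features` stands in for an expensive shared model, and `TenantHeadRegistry` holds a small linear "head" per tenant, with a default for tenants that have not customized anything.

```python
from typing import Dict, List, Sequence, Tuple


def shared_base_features(text: str) -> List[float]:
    """Stand-in for a shared base model: returns [word count, character count]."""
    words = text.split()
    return [float(len(words)), float(sum(len(w) for w in words))]


class TenantHeadRegistry:
    """Small per-tenant parameters applied on top of the shared base output."""

    def __init__(self, default_weights: Sequence[float], default_bias: float = 0.0) -> None:
        self._default: Tuple[Sequence[float], float] = (default_weights, default_bias)
        self._heads: Dict[str, Tuple[Sequence[float], float]] = {}

    def register(self, tenant_id: str, weights: Sequence[float], bias: float = 0.0) -> None:
        self._heads[tenant_id] = (weights, bias)

    def predict(self, tenant_id: str, text: str) -> float:
        # The expensive part runs once in shared code; only the cheap
        # final step differs between tenants.
        features = shared_base_features(text)
        weights, bias = self._heads.get(tenant_id, self._default)
        return sum(w * f for w, f in zip(weights, features)) + bias


registry = TenantHeadRegistry(default_weights=[1.0, 0.0])
registry.register("acme", weights=[0.0, 0.5], bias=1.0)
```

With this layout, updating the base model is a single deployment, while tenant customizations remain small artifacts that can be stored, versioned, and loaded independently.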

Model serving infrastructure must accommodate varying load patterns, performance requirements, and scaling characteristics across different tenants while maintaining consistent response times and availability. Advanced load balancing, auto-scaling, and resource allocation algorithms ensure that model inference resources are efficiently distributed based on tenant priorities, service level agreements, and real-time demand patterns.

Security and Compliance Framework

Multi-tenant AI systems require comprehensive security frameworks that address the complex intersection of data privacy, regulatory compliance, and operational security across diverse tenant requirements and jurisdictions. Authentication and authorization systems must provide fine-grained access control that prevents unauthorized access while enabling seamless user experiences within tenant boundaries. Multi-layered security approaches combine network security, application security, and data security measures to create robust protection against various threat vectors.

Compliance requirements vary significantly across different industries, jurisdictions, and tenant types, necessitating flexible security architectures that can adapt to diverse regulatory frameworks such as GDPR, HIPAA, SOX, and industry-specific standards. Audit logging and monitoring systems must capture comprehensive activity records while maintaining tenant data isolation and enabling compliance reporting without compromising security or privacy requirements.

Data sovereignty and residency requirements increasingly influence architectural decisions, requiring systems that can enforce geographic data placement, processing restrictions, and cross-border transfer limitations based on tenant-specific requirements and regulatory obligations.
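A residency rule of this kind can be enforced with a small placement check. The sketch below is illustrative only: the tenant names, region names, and `RESIDENCY_POLICY` table are hypothetical, and a real system would load the policy from configuration.

```python
from typing import Dict, FrozenSet, Sequence

# Hypothetical policy: the regions in which each tenant's data may be placed.
RESIDENCY_POLICY: Dict[str, FrozenSet[str]] = {
    "tenant-eu": frozenset({"eu-west", "eu-central"}),
    "tenant-us": frozenset({"us-east"}),
}


def choose_region(
    tenant_id: str,
    candidate_regions: Sequence[str],
    policy: Dict[str, FrozenSet[str]] = RESIDENCY_POLICY,
) -> str:
    """Return the first candidate region that the tenant's policy allows."""
    allowed = policy.get(tenant_id)
    if allowed is None:
        # Fail closed: a tenant without a policy is never placed by default.
        raise PermissionError(f"no residency policy for tenant {tenant_id!r}")
    for region in candidate_regions:
        if region in allowed:
            return region
    raise PermissionError(f"no permitted region available for tenant {tenant_id!r}")


region = choose_region("tenant-eu", ["us-east", "eu-central"])
```

The scheduler can order `candidate_regions` by cost or latency; the policy check then acts as a hard constraint on top of that preference.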

Incident response and recovery procedures must account for the complexities of multi-tenant environments, ensuring that security incidents affecting one tenant do not impact others while maintaining the ability to conduct thorough investigations and implement corrective measures. Business continuity and disaster recovery planning requires sophisticated approaches that can maintain service availability for unaffected tenants while addressing issues impacting specific tenant data or services.

Performance Optimization and Resource Management

Resource management in multi-tenant AI systems involves complex optimization problems that must balance competing demands for computational resources, memory, storage, and network bandwidth across diverse tenant workloads and priorities. Dynamic resource allocation algorithms must consider factors such as tenant service level agreements, current system utilization, predicted demand patterns, and cost optimization objectives to make real-time resource distribution decisions.

Performance isolation mechanisms ensure that resource-intensive operations by one tenant do not adversely impact the performance experienced by other tenants sharing the same infrastructure. Queue management, throttling, and priority-based scheduling systems provide granular control over resource allocation while maintaining fairness and preventing resource starvation scenarios that could affect system stability and tenant satisfaction.
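Throttling is often implemented with a token bucket per tenant. The following is a minimal single-process sketch under that assumption; the class name and parameters are hypothetical, and a shared deployment would need the bucket state in a common store.

```python
import time
from typing import Callable, Dict, Tuple


class TenantRateLimiter:
    """Token bucket per tenant: `rate_per_sec` refill, capped at `burst`."""

    def __init__(
        self,
        rate_per_sec: float,
        burst: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._rate = rate_per_sec
        self._burst = burst
        self._clock = clock
        # tenant_id -> (tokens remaining, time of last update)
        self._buckets: Dict[str, Tuple[float, float]] = {}

    def allow(self, tenant_id: str, cost: float = 1.0) -> bool:
        now = self._clock()
        tokens, last = self._buckets.get(tenant_id, (self._burst, now))
        # Refill for the elapsed time, never exceeding the burst size.
        tokens = min(self._burst, tokens + (now - last) * self._rate)
        allowed = tokens >= cost
        if allowed:
            tokens -= cost
        self._buckets[tenant_id] = (tokens, now)
        return allowed


limiter = TenantRateLimiter(rate_per_sec=5.0, burst=10.0)
first_request_ok = limiter.allow("acme")
```

Because each tenant has its own bucket, a burst from one tenant exhausts only that tenant's tokens. The `cost` argument lets an expensive inference call consume more of the budget than a cheap one.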

Caching strategies in multi-tenant AI systems must carefully balance shared cache efficiency with data isolation requirements, implementing sophisticated cache partitioning and invalidation mechanisms that maximize performance benefits while maintaining security boundaries. Multi-level caching hierarchies can provide tenant-specific caching layers while leveraging shared caches for common data and model components.
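One simple form of cache partitioning is to make the tenant part of the cache key and to reserve a separate partition for data that is safe to share. The sketch below assumes an in-memory dictionary; the `PartitionedCache` class and its `SHARED` marker are hypothetical names.

```python
from typing import Any, Dict, Tuple


class PartitionedCache:
    """Cache keyed by (partition, key), with a shared partition as fallback."""

    SHARED = "__shared__"

    def __init__(self) -> None:
        self._data: Dict[Tuple[str, str], Any] = {}

    def put(self, tenant_id: str, key: str, value: Any) -> None:
        if tenant_id == self.SHARED:
            raise ValueError("tenant id collides with the shared partition")
        self._data[(tenant_id, key)] = value

    def put_shared(self, key: str, value: Any) -> None:
        # Only tenant-independent data (for example base model metadata)
        # should ever be written here.
        self._data[(self.SHARED, key)] = value

    def get(self, tenant_id: str, key: str, default: Any = None) -> Any:
        # Look in the tenant's own partition first, then in the shared one.
        if (tenant_id, key) in self._data:
            return self._data[(tenant_id, key)]
        return self._data.get((self.SHARED, key), default)

    def invalidate_tenant(self, tenant_id: str) -> int:
        """Drop every entry in one tenant's partition; returns the count removed."""
        doomed = [k for k in self._data if k[0] == tenant_id]
        for k in doomed:
            del self._data[k]
        return len(doomed)


cache = PartitionedCache()
cache.put_shared("tokenizer", "v1")
cache.put("acme", "embedding:doc1", [0.1, 0.2])
```

Using a tuple key rather than string concatenation avoids accidental collisions such as tenant `a` with key `b:c` versus tenant `a:b` with key `c`.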

Auto-scaling mechanisms must accommodate the unique characteristics of AI workloads, which often exhibit unpredictable demand patterns, long startup times for model loading, and significant resource requirements for training operations. Predictive scaling algorithms leverage historical usage patterns, tenant behavior analytics, and external factors to proactively adjust resource allocation and minimize response time degradation during demand spikes.
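As a rough illustration of proactive scaling, the sketch below forecasts the next interval's load with a moving average and converts it into a replica count with some headroom. The moving average is a deliberately simple stand-in for a real forecasting model, and all function and parameter names are assumptions.

```python
import math
from typing import Sequence


def forecast_next(requests_per_min: Sequence[float], window: int = 3) -> float:
    """Forecast the next interval as the mean of the last `window` samples."""
    if not requests_per_min:
        raise ValueError("history must not be empty")
    recent = requests_per_min[-window:]
    return sum(recent) / len(recent)


def replicas_needed(
    history: Sequence[float],
    capacity_per_replica: float,
    headroom: float = 0.25,
    min_replicas: int = 1,
) -> int:
    """Replica count for the forecast load plus a safety margin."""
    if capacity_per_replica <= 0:
        raise ValueError("capacity_per_replica must be positive")
    target = forecast_next(history) * (1.0 + headroom)
    # min_replicas keeps warm instances around, since loading a model into
    # memory can take far longer than starting a typical web service.
    return max(min_replicas, math.ceil(target / capacity_per_replica))


replicas = replicas_needed([100, 200, 300], capacity_per_replica=100)
```

The headroom and minimum replica count are the knobs that trade cost against the risk of slow responses while new instances load their models.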

Microservices Architecture Patterns

Microservices architectures provide excellent foundations for multi-tenant AI systems by enabling independent scaling, deployment, and management of different system components while maintaining loose coupling and high cohesion. Service decomposition strategies must carefully consider tenant isolation requirements, data flow patterns, and operational boundaries to create service definitions that support both multi-tenancy requirements and system maintainability.

API gateway patterns play crucial roles in multi-tenant microservices architectures by providing centralized points for tenant authentication, authorization, rate limiting, and request routing. Advanced API gateways can implement tenant-aware routing, load balancing, and circuit breaker patterns that ensure system resilience while maintaining tenant isolation and performance requirements.

Service mesh technologies such as Istio and Linkerd provide sophisticated traffic management, security, and observability capabilities that are particularly valuable in multi-tenant microservices environments. These technologies enable fine-grained control over inter-service communication, implement zero-trust security models, and provide comprehensive monitoring and tracing capabilities across tenant boundaries.

Event-driven architecture patterns facilitate loose coupling between microservices while enabling the real-time data processing and integration capabilities that AI applications need. Event sourcing and Command Query Responsibility Segregation (CQRS) can provide a solid foundation for maintaining data consistency and supporting complex business logic while preserving tenant isolation and accommodating diverse scaling requirements.

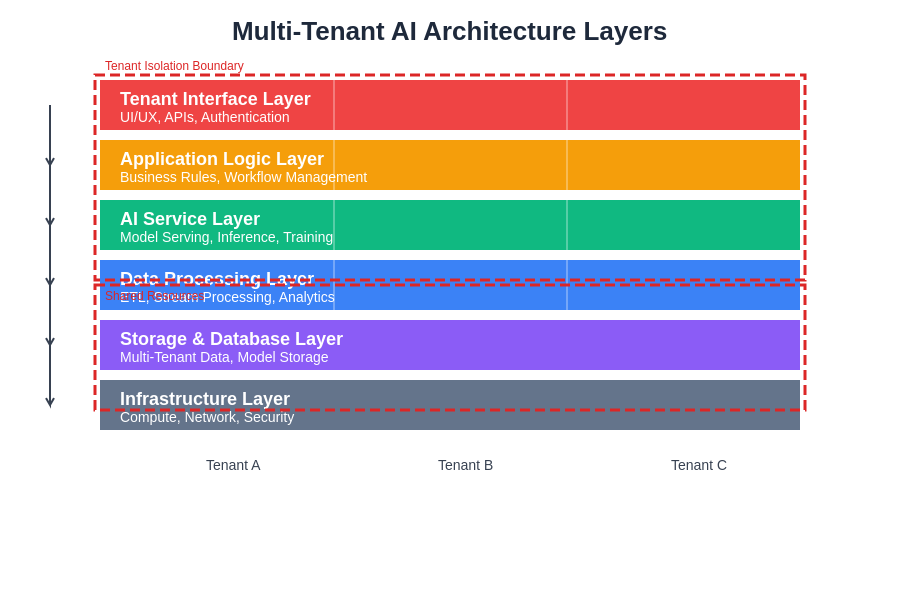

The layered architecture approach provides clear separation of concerns while enabling different isolation strategies at each layer based on security requirements and operational constraints. This structured approach facilitates independent scaling, maintenance, and evolution of different system components.

Data Pipeline and ETL Considerations

Data pipelines in multi-tenant AI systems must handle diverse data sources, formats, and processing requirements while maintaining strict isolation boundaries and ensuring data quality, consistency, and timeliness across tenant workloads. Pipeline orchestration systems must coordinate complex workflows that may involve data ingestion, transformation, model training, and inference operations while respecting tenant-specific scheduling requirements and resource constraints.

Extract, Transform, Load (ETL) processes must handle tenant-specific data schemas, transformation rules, and destination requirements while maximizing resource utilization and maintaining processing efficiency. Data validation and quality assurance mechanisms must run for every tenant while preventing cross-contamination, so that data quality issues affecting one tenant do not impact others.

Stream processing architectures enable real-time data processing capabilities that are increasingly important for AI applications requiring immediate responses to changing conditions or user interactions. Multi-tenant stream processing systems must implement tenant-aware partitioning, routing, and processing logic while maintaining low latency and high throughput characteristics essential for responsive AI applications.

Data lineage and provenance tracking become particularly complex in multi-tenant environments where data may flow through multiple processing stages, undergo various transformations, and contribute to multiple tenant-specific outcomes. Comprehensive lineage tracking systems enable audit capabilities, regulatory compliance, and debugging while maintaining tenant data isolation and security requirements.

Monitoring and Observability

Comprehensive monitoring and observability strategies are essential for maintaining reliable multi-tenant AI systems that can effectively serve diverse tenant populations while meeting stringent performance and availability requirements. Multi-dimensional monitoring approaches must track system performance, tenant-specific metrics, resource utilization patterns, and business-level indicators while respecting data privacy and isolation requirements.

Distributed tracing systems provide crucial visibility into complex multi-tenant request flows that may span multiple services, data stores, and processing components. Trace correlation and analysis capabilities enable rapid problem identification and resolution while maintaining tenant data privacy and supporting capacity planning and performance optimization initiatives.

Anomaly detection and alerting systems must operate across multiple tenant contexts while avoiding false positives and ensuring that issues affecting specific tenants are rapidly identified and addressed. Machine learning-based anomaly detection can provide sophisticated capabilities for identifying unusual patterns in system behavior, tenant usage, or performance characteristics that may indicate problems or optimization opportunities.
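To show why detection needs tenant context, here is a small sketch that scores a new metric value against that tenant's own history. It uses a plain z-score as a stand-in for the machine learning-based detectors mentioned above; the function name and the threshold of 3 are assumptions.

```python
from statistics import mean, pstdev
from typing import Sequence


def is_anomalous(history: Sequence[float], value: float, threshold: float = 3.0) -> bool:
    """Flag `value` if it lies more than `threshold` standard deviations
    from the mean of this tenant's own history."""
    if len(history) < 2:
        return False  # not enough data to establish a baseline
    mu = mean(history)
    sigma = pstdev(history)
    if sigma == 0:
        return value != mu
    return abs(value - mu) / sigma > threshold


# Requests per minute for two tenants of very different size.
small_tenant = [10, 12, 11, 9, 10]
large_tenant = [90, 110, 100, 95, 105]

small_flag = is_anomalous(small_tenant, 100)  # far outside its baseline
large_flag = is_anomalous(large_tenant, 100)  # ordinary for this tenant
```

The same value of 100 requests per minute is flagged for the small tenant and ignored for the large one, which is the kind of false positive a single global threshold would produce.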

Performance analytics and reporting systems must provide comprehensive insights into system operation while enabling tenant-specific performance analysis and capacity planning. Multi-tenant dashboards and reporting interfaces must balance operational visibility requirements with tenant data privacy and security considerations while supporting various stakeholder information needs.

Cost Optimization and Billing

Cost management in multi-tenant AI systems requires sophisticated approaches to resource tracking, allocation, and billing that can accurately attribute costs to specific tenants while optimizing overall system efficiency and profitability. Activity-based costing models must consider various factors including computational resources, storage utilization, data transfer, and shared infrastructure overhead to provide fair and transparent cost allocation across tenant populations.
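A simple allocation scheme charges each tenant its directly metered cost plus a share of shared overhead proportional to that direct cost. The sketch below assumes that scheme; the function name and the even-split fallback are choices made for the example, and other allocation keys (seats, requests, storage) are equally plausible.

```python
from typing import Dict


def allocate_costs(direct_costs: Dict[str, float], shared_overhead: float) -> Dict[str, float]:
    """Return total cost per tenant: direct cost plus a proportional share
    of the shared infrastructure overhead."""
    if not direct_costs:
        return {}
    total_direct = sum(direct_costs.values())
    if total_direct == 0:
        # Nobody used anything this period: split the overhead evenly.
        share = shared_overhead / len(direct_costs)
        return {tenant: share for tenant in direct_costs}
    return {
        tenant: cost + shared_overhead * cost / total_direct
        for tenant, cost in direct_costs.items()
    }


bill = allocate_costs({"acme": 30.0, "globex": 10.0}, shared_overhead=20.0)
```

In this example the tenant responsible for three quarters of the direct cost also carries three quarters of the overhead, and the per-tenant totals add up to the full 60.0 spent.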

Resource pooling and sharing strategies can significantly reduce overall system costs while maintaining tenant isolation and performance requirements. Advanced resource optimization algorithms can dynamically allocate shared resources based on demand patterns, service level agreements, and cost optimization objectives while ensuring that resource sharing does not compromise security or performance requirements.

Billing and metering systems must provide accurate, transparent, and flexible pricing models that can accommodate diverse tenant usage patterns, service requirements, and business models. Real-time usage tracking and reporting enable both providers and tenants to monitor resource consumption, optimize usage patterns, and make informed decisions about service utilization and configuration.

Cost prediction and budgeting capabilities help both providers and tenants plan for future resource requirements and costs while identifying optimization opportunities and potential cost savings through improved resource utilization, architectural changes, or service configuration adjustments.

Scalability and Growth Planning

Scalability planning for multi-tenant AI systems must accommodate rapid growth in both the number of tenants and the resources each tenant consumes while maintaining consistent performance and reliability. Capacity planning models should cover several growth scenarios, including organic tenant growth, seasonal usage variations, and sudden demand spikes caused by external factors or viral adoption.

Infrastructure scaling strategies must balance automated scaling capabilities with manual oversight and control to ensure that scaling operations maintain system stability and tenant isolation while optimizing resource utilization and costs. Multi-region deployment patterns provide geographical scaling capabilities while addressing data sovereignty requirements and improving performance for globally distributed tenant populations.

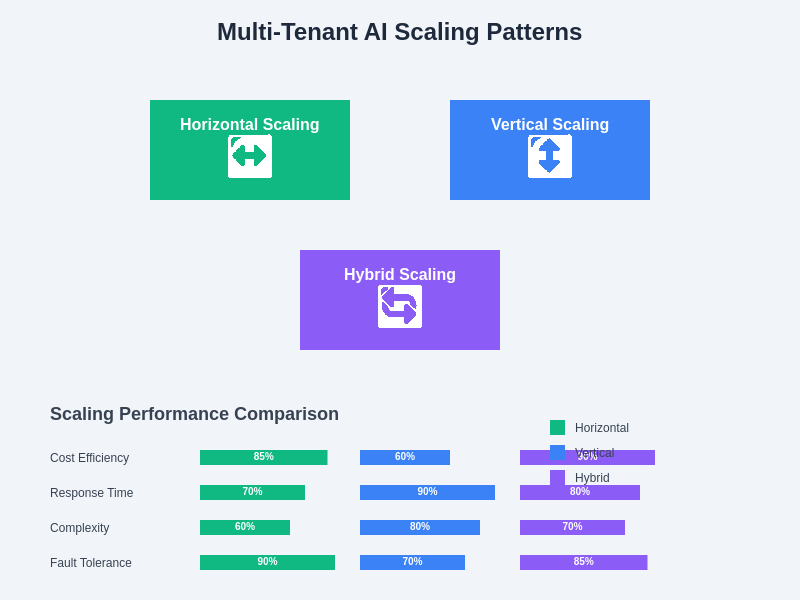

The choice of scaling approach significantly impacts system performance, cost efficiency, and operational complexity. Hybrid scaling strategies often provide optimal balance by combining horizontal and vertical scaling techniques based on workload characteristics and resource requirements.

Database scaling approaches must handle growing data volumes, increasing query complexity, and evolving schema requirements while maintaining consistent performance and ensuring that scaling operations do not disrupt service availability. Horizontal scaling through sharding, vertical scaling through hardware upgrades, and hybrid approaches each offer different trade-offs in terms of complexity, performance, and cost characteristics.

Technology evolution and migration planning ensure that multi-tenant AI systems can adapt to changing technology landscapes, emerging AI capabilities, and evolving tenant requirements without disrupting service delivery or compromising existing functionality. Gradual migration strategies and backward compatibility considerations enable smooth transitions to new technologies while maintaining system reliability and tenant satisfaction.

Future Trends and Considerations

The evolution of multi-tenant AI architectures continues to be shaped by emerging technologies, changing regulatory landscapes, and evolving customer expectations for AI-powered services. Edge computing integration brings new opportunities for distributed AI processing while introducing additional complexity in managing multi-tenant workloads across diverse edge locations with varying capabilities and connectivity characteristics.

Serverless computing paradigms offer potential advantages for multi-tenant AI systems by providing automatic scaling, reduced operational overhead, and fine-grained cost allocation, though they also present challenges in managing state, handling long-running AI workloads, and maintaining consistent performance across tenant boundaries.

Federated learning and privacy-preserving AI techniques are becoming increasingly important for multi-tenant systems that must balance the benefits of shared learning with strict data privacy requirements. These approaches enable collaborative model improvement while maintaining tenant data isolation and addressing growing privacy concerns and regulatory requirements.

Quantum computing integration represents a longer-term consideration that may fundamentally change the computational characteristics of AI workloads and require new approaches to multi-tenant resource management, security, and isolation. Early planning and architectural flexibility will be crucial for organizations preparing to leverage quantum advantages while maintaining multi-tenant service capabilities.

The continued advancement of AI technologies, combined with evolving customer expectations and regulatory requirements, will drive ongoing innovation in multi-tenant AI architectures, requiring organizations to maintain flexible, adaptable systems that can incorporate new technologies and approaches while preserving existing investments and maintaining service reliability.

Disclaimer

This article is for informational purposes only and does not constitute professional advice. The architectural patterns and strategies discussed should be carefully evaluated in the context of specific requirements, constraints, and objectives. Implementation of multi-tenant AI systems involves complex technical, security, and compliance considerations that require thorough analysis and expert guidance. Organizations should conduct comprehensive risk assessments and consult with qualified professionals when designing and implementing multi-tenant AI architectures.