The convergence of Node.js and artificial intelligence has opened significant opportunities for developers to build server-side machine learning applications that process, analyze, and respond to data in real time. This combination uses JavaScript’s versatility and Node.js’s event-driven architecture to deliver scalable AI solutions that can handle complex computational tasks while maintaining the responsiveness and efficiency that modern applications demand.

Server-side AI implementation with Node.js represents a shift from traditional client-side processing, offering enhanced security, centralized model management, and the ability to use powerful server hardware for intensive machine learning operations.

The Foundation of Server-Side AI with Node.js

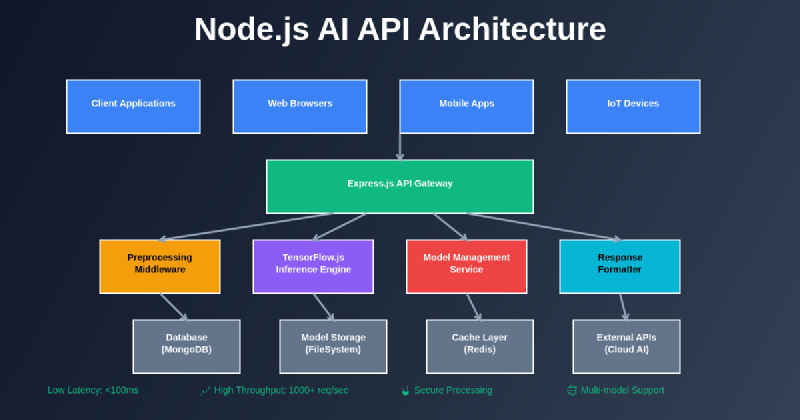

Building AI APIs with Node.js and Express requires understanding the fundamental architecture that enables efficient machine learning operations on the server. Unlike browser-based implementations, server-side AI processing can utilize the full computational power of the hosting environment, access extensive datasets, and implement sophisticated caching mechanisms that dramatically improve response times for repeated inference requests.

The Express framework is a natural foundation for AI API development because its lightweight, flexible architecture is easy to extend with machine learning capabilities. Express middleware can handle model loading, input preprocessing, inference execution, and result formatting as separate stages, forming a pipeline that turns raw request data into predictions. This approach lets AI functionality integrate naturally with existing web application patterns while leaving room to scale for production deployments.
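The middleware pipeline described above can be sketched with Express-style `(req, res, next)` functions. To keep the example runnable without installing Express, a tiny hypothetical `compose` helper walks the chain the way Express does; the toy linear `model` object and the stage names are also illustrative assumptions. In a real service you would register the same functions with `app.post('/predict', attachModel, preprocess, infer, formatResult)` after `app.use(express.json())`.

```javascript
'use strict';

// Hypothetical stand-in for a loaded model: a linear scorer over numeric features.
const model = {
  weights: [0.4, -0.2, 0.1],
  predict(features) {
    return features.reduce((sum, x, i) => sum + x * this.weights[i], 0);
  },
};

// Each stage uses the Express middleware signature (req, res, next).
function attachModel(req, res, next) {
  req.model = model; // a real app would load the model once at startup and reuse it
  next();
}

function preprocess(req, res, next) {
  const { features } = req.body;
  if (!Array.isArray(features) || features.length !== model.weights.length) {
    return next(new Error(`features must be an array of length ${model.weights.length}`));
  }
  req.features = features.map(Number);
  next();
}

function infer(req, res, next) {
  req.score = req.model.predict(req.features);
  next();
}

function formatResult(req, res) {
  res.json({ score: Number(req.score.toFixed(4)), label: req.score >= 0 ? 'positive' : 'negative' });
}

// Minimal runner mimicking how Express walks a middleware chain and short-circuits on errors.
function compose(stages) {
  return (req, res, onError) => {
    let i = 0;
    const next = (err) => {
      if (err) return onError(err);
      const stage = stages[i++];
      if (stage) stage(req, res, next);
    };
    next();
  };
}

const handler = compose([attachModel, preprocess, infer, formatResult]);
```

Keeping each stage small makes it easy to reuse preprocessing across endpoints or swap the inference stage for a different model version.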

The event-driven nature of Node.js benefits AI applications that must handle many concurrent inference requests. Non-blocking I/O lets a single process keep accepting connections, reading request bodies, and waiting on databases or remote model servers without dedicating a thread to each client. CPU-bound inference is a different matter: a model that runs synchronously on the main thread blocks the event loop for the whole computation, so heavy workloads are usually moved to worker threads or dedicated model-serving processes, with native backends shortening how long each call takes.
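One common way to keep CPU-heavy inference off the event loop is Node's built-in `worker_threads` module. The sketch below runs a placeholder computation (a hypothetical `heavyScore` that sums squares, standing in for real inference) in a worker created from an inline script with `eval: true`, so the main thread stays free to accept requests. A production service would usually keep a pool of long-lived workers, each holding its own loaded model, rather than spawning one per request.

```javascript
'use strict';
const { Worker } = require('node:worker_threads');

// Source executed inside the worker; `heavyScore` is a placeholder for real inference.
const workerSource = `
  const { parentPort, workerData } = require('node:worker_threads');
  function heavyScore(values) {
    let total = 0;
    for (const v of values) total += v * v;
    return total;
  }
  parentPort.postMessage(heavyScore(workerData.values));
`;

// Runs one job in a fresh worker and resolves with the value it posts back.
function runInWorker(values) {
  return new Promise((resolve, reject) => {
    const worker = new Worker(workerSource, { eval: true, workerData: { values } });
    worker.once('message', resolve);
    worker.once('error', reject);
    worker.once('exit', (code) => {
      if (code !== 0) reject(new Error(`worker exited with code ${code}`));
    });
  });
}
```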

TensorFlow.js: Bringing Machine Learning to JavaScript

TensorFlow.js has made server-side machine learning practical in the JavaScript ecosystem by providing tools for both model deployment and training directly within Node.js environments. The library enables developers to load pre-trained models, perform inference, and even train custom models entirely in JavaScript, reducing the need for the multi-language architectures that have traditionally characterized machine learning deployments.

The library supports multiple backend implementations optimized for different deployment scenarios, including CPU-optimized backends for general-purpose servers and GPU-accelerated backends for high-performance inference workloads. This flexibility allows developers to choose the most appropriate computational approach based on their specific performance requirements and infrastructure constraints.
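In Node.js the backend is largely chosen by which package you import: `@tensorflow/tfjs-node` binds to the native TensorFlow C library on the CPU, `@tensorflow/tfjs-node-gpu` uses CUDA on supported NVIDIA GPUs, and the pure-JavaScript `@tensorflow/tfjs` runs anywhere but is much slower. The hypothetical `loadTensorFlow` helper below tries them in order of preference and returns `null` if none can be loaded.

```javascript
'use strict';

// Preference order: GPU build, native CPU build, then pure JavaScript.
const CANDIDATES = ['@tensorflow/tfjs-node-gpu', '@tensorflow/tfjs-node', '@tensorflow/tfjs'];

function loadTensorFlow(candidates = CANDIDATES) {
  for (const pkg of candidates) {
    try {
      const tf = require(pkg);
      // tf.getBackend() reports the active backend name (e.g. 'tensorflow' for the native builds).
      return { pkg, tf, backend: tf.getBackend() };
    } catch {
      // Package missing, or installed but unable to load (e.g. no CUDA drivers): try the next one.
    }
  }
  return null;
}

const loaded = loadTensorFlow();
console.log(loaded ? `Using ${loaded.pkg} (${loaded.backend})` : 'TensorFlow.js is not installed');
```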

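Model loading and management in TensorFlow.js offers several options for controlling memory usage and inference speed. Models can be loaded from local files (using `file://` paths in Node.js) or from remote HTTP(S) URLs, including files served from cloud storage; applications typically keep loaded models in memory so the loading cost is paid once at startup rather than on every request. Models can also be quantized when they are converted to the TensorFlow.js format, which shrinks the weight files considerably, usually with only a small loss in accuracy; note that TensorFlow.js typically expands quantized weights back to 32-bit floats when loading them, so the main saving is in file size and transfer time.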
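The idea behind weight quantization is easiest to see on a single array. The sketch below shows symmetric per-tensor int8 quantization: each float is divided by a scale (the largest absolute weight divided by 127) and rounded, cutting storage from 4 bytes to 1 byte per weight. It illustrates the principle only and is not necessarily the exact scheme used by the TensorFlow.js converter, whose quantization flags (such as `--quantize_uint8` or `--quantize_float16` on `tensorflowjs_converter`) are what you would normally use.

```javascript
'use strict';

// Symmetric per-tensor int8 quantization: q = round(w / scale), scale = max|w| / 127.
function quantizeInt8(weights) {
  let maxAbs = 0;
  for (const w of weights) maxAbs = Math.max(maxAbs, Math.abs(w));
  const scale = maxAbs === 0 ? 1 : maxAbs / 127;
  const q = new Int8Array(weights.length);
  for (let i = 0; i < weights.length; i++) {
    q[i] = Math.max(-127, Math.min(127, Math.round(weights[i] / scale)));
  }
  return { q, scale };
}

// Recover approximate float weights: w ≈ q * scale (error at most scale / 2 per weight).
function dequantizeInt8({ q, scale }) {
  const out = new Float32Array(q.length);
  for (let i = 0; i < q.length; i++) out[i] = q[i] * scale;
  return out;
}
```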

The architectural foundation of Node.js AI APIs demonstrates the seamless integration between Express routing, middleware processing, and TensorFlow.js inference engines, creating a robust pipeline for handling intelligent requests at scale.

Implementing Natural Language Processing APIs

Natural Language Processing is one of the most compelling applications of server-side AI, enabling applications to understand, analyze, and respond to human language. Node.js supports NLP through libraries like TensorFlow.js, natural, and compromise, allowing developers to implement text analysis, sentiment detection, and entity extraction, as well as translation services when suitable models are available.

Server-side NLP processing offers significant advantages over client-side implementations, including access to larger language models, centralized management of training data, and the ability to update models in one place as new data becomes available to improve their accuracy.

Text preprocessing forms a critical component of effective NLP APIs, involving tokenization, stemming, lemmatization, and feature extraction processes that prepare raw text for model inference. Node.js streams provide excellent support for handling large text corpora efficiently, enabling real-time processing of documents, social media feeds, and user-generated content without consuming excessive server resources.
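A minimal preprocessing sketch: lowercase and tokenize on non-letter characters, drop a small illustrative stop-word list, apply a deliberately crude suffix-stripping stemmer (a stand-in for a real algorithm such as the Porter stemmer that the `natural` library provides), and build a bag-of-words count vector over a fixed vocabulary. The stop words, suffix list, and vocabulary are assumptions.

```javascript
'use strict';

const STOP_WORDS = new Set(['the', 'a', 'an', 'and', 'is', 'it', 'to', 'of']); // illustrative only

// Split on anything that is not a letter, digit, or apostrophe (Unicode-aware).
function tokenize(text) {
  return text.toLowerCase().split(/[^\p{L}\p{N}']+/u).filter(Boolean);
}

// Crude suffix stripping; real stemmers handle far more cases.
function stem(token) {
  for (const suffix of ['ing', 'ed', 'ly', 's']) {
    if (token.length > suffix.length + 2 && token.endsWith(suffix)) {
      return token.slice(0, -suffix.length);
    }
  }
  return token;
}

function preprocess(text) {
  return tokenize(text).filter((t) => !STOP_WORDS.has(t)).map(stem);
}

// Bag-of-words: count occurrences of each vocabulary term, in vocabulary order.
function bagOfWords(tokens, vocabulary) {
  const index = new Map(vocabulary.map((term, i) => [term, i]));
  const vector = new Array(vocabulary.length).fill(0);
  for (const t of tokens) {
    const i = index.get(t);
    if (i !== undefined) vector[i] += 1;
  }
  return vector;
}
```

For example, `preprocess('The servers crashed, and the servers restarted quickly.')` yields `['server', 'crash', 'server', 'restart', 'quick']`.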

Sentiment analysis APIs built with Node.js can process large volumes of text, with throughput that depends on model size, batching, and hardware, giving businesses near-real-time insight into customer feedback, social media mentions, and market sentiment. These implementations typically combine pre-trained models with custom business logic that categorizes sentiment scores, identifies key themes, and triggers appropriate automated responses based on detected patterns.
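The split between a model and business logic can be sketched as follows. A tiny hypothetical word lexicon stands in for the pre-trained model and produces a score in [-1, 1]; the business layer then maps the score to a category and decides whether to escalate. The lexicon values, the ±0.3 thresholds, and the refund-based `escalate` rule are illustrative assumptions.

```javascript
'use strict';

// Stand-in for a trained model: per-word polarity weights (illustrative values).
const LEXICON = new Map([
  ['great', 1], ['love', 1], ['fast', 0.5],
  ['slow', -0.5], ['broken', -1], ['refund', -0.8],
]);

// Average polarity of known words, clamped to [-1, 1]; 0 when no word is known.
function scoreSentiment(text) {
  const words = text.toLowerCase().match(/[a-z']+/g) || [];
  const hits = words.filter((w) => LEXICON.has(w));
  if (hits.length === 0) return 0;
  const mean = hits.reduce((sum, w) => sum + LEXICON.get(w), 0) / hits.length;
  return Math.max(-1, Math.min(1, mean));
}

// Business rules layered on top of the raw score (thresholds are assumptions).
function classify(text, { positive = 0.3, negative = -0.3 } = {}) {
  const score = scoreSentiment(text);
  const label = score >= positive ? 'positive' : score <= negative ? 'negative' : 'neutral';
  return { score, label, escalate: label === 'negative' && /\brefund\b/i.test(text) };
}
```

Keeping thresholds and escalation rules outside the scoring function means they can change without retraining or redeploying the model.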

Computer Vision and Image Processing Services

Server-side computer vision implementations with Node.js enable image analysis capabilities that can classify objects, detect faces, recognize text, and analyze visual content. TensorFlow.js supports image processing models including convolutional neural networks, object detection systems, and semantic segmentation models that can be deployed directly in Node.js environments.

Image preprocessing is a crucial part of effective computer vision APIs, involving operations like resizing, normalization, and color space conversion that prepare visual data for model inference, plus augmentation techniques during training. Node.js streams and Buffers let a server receive uploads and move image data efficiently, but most decoders and models need the complete decoded image in memory, so per-request memory limits still matter when handling high-resolution images and video frames.
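Once an image has been decoded into raw pixels (for example by `tf.node.decodeImage` in `@tensorflow/tfjs-node`, or by an image library), resizing and normalization are simple array operations. The sketch below assumes an interleaved RGB `Uint8Array`, performs nearest-neighbour resizing to the model's input size, and scales values to [0, 1]; real pipelines often use bilinear interpolation and model-specific mean/standard-deviation normalization instead.

```javascript
'use strict';

// Nearest-neighbour resize of an interleaved RGB buffer, then scale bytes to [0, 1].
function resizeAndNormalize(pixels, width, height, outWidth, outHeight) {
  const channels = 3;
  if (pixels.length !== width * height * channels) {
    throw new Error('pixel buffer does not match width * height * 3');
  }
  const out = new Float32Array(outWidth * outHeight * channels);
  for (let y = 0; y < outHeight; y++) {
    // Map each output row and column back to the nearest source row and column.
    const srcY = Math.min(height - 1, Math.floor((y * height) / outHeight));
    for (let x = 0; x < outWidth; x++) {
      const srcX = Math.min(width - 1, Math.floor((x * width) / outWidth));
      const src = (srcY * width + srcX) * channels;
      const dst = (y * outWidth + x) * channels;
      for (let c = 0; c < channels; c++) out[dst + c] = pixels[src + c] / 255;
    }
  }
  return out;
}
```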

Object detection APIs can identify and locate multiple objects within images, providing bounding box coordinates, confidence scores, and classification labels that enable sophisticated visual analysis applications. These services are particularly valuable for e-commerce platforms, security systems, and content moderation applications that require automated visual content analysis at scale.
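Detection models usually emit many overlapping candidate boxes, so a post-processing step called non-maximum suppression (NMS) keeps the highest-scoring box and discards same-class boxes that overlap it too much, measured by intersection-over-union (IoU). A plain-JavaScript sketch, assuming boxes shaped as `{ x1, y1, x2, y2, score, label }` in pixel coordinates and illustrative thresholds; TensorFlow.js also offers `tf.image.nonMaxSuppression` for tensor inputs.

```javascript
'use strict';

// Intersection-over-union of two axis-aligned boxes.
function iou(a, b) {
  const ix = Math.max(0, Math.min(a.x2, b.x2) - Math.max(a.x1, b.x1));
  const iy = Math.max(0, Math.min(a.y2, b.y2) - Math.max(a.y1, b.y1));
  const inter = ix * iy;
  const area = (r) => (r.x2 - r.x1) * (r.y2 - r.y1);
  const union = area(a) + area(b) - inter;
  return union > 0 ? inter / union : 0;
}

// Greedy NMS: keep the best box, drop same-label boxes overlapping it above the threshold.
function nonMaxSuppression(boxes, { iouThreshold = 0.5, minScore = 0.25 } = {}) {
  const candidates = boxes.filter((b) => b.score >= minScore).sort((a, b) => b.score - a.score);
  const kept = [];
  for (const box of candidates) {
    const overlaps = kept.some((k) => k.label === box.label && iou(k, box) > iouThreshold);
    if (!overlaps) kept.push(box);
  }
  return kept;
}
```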

Face recognition and biometric analysis services implemented in Node.js can provide authentication mechanisms, demographic analysis, and emotion detection capabilities that enhance user experiences, provided they are built to meet privacy and security standards. Server-side processing keeps biometric templates and matching logic on infrastructure the operator controls instead of on client devices, although captured images must still be sent to the server over an encrypted connection before it can respond to authentication requests.
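Face-based authentication is commonly built on embeddings: a model maps each face image to a fixed-length vector, and a login attempt is accepted if its embedding is close enough to the enrolled one. The sketch assumes the embeddings have already been produced by some face model and compares them with cosine similarity; the 0.8 threshold is purely illustrative, since real thresholds must be calibrated for the specific model against target false-accept and false-reject rates.

```javascript
'use strict';

function cosineSimilarity(a, b) {
  if (a.length !== b.length) throw new Error('embedding sizes differ');
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  if (normA === 0 || normB === 0) return 0;
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Accept the attempt only if it is similar enough to the enrolled template.
function verifyFace(enrolled, attempt, threshold = 0.8) {
  const similarity = cosineSimilarity(enrolled, attempt);
  return { similarity, match: similarity >= threshold };
}
```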

Real-Time AI Processing with WebSockets

Real-time AI processing is a significant advance for interactive applications, enabling live analysis of streaming data, continuous model inference, and immediate response generation that creates engaging user experiences. Node.js WebSocket implementations, such as the widely used `ws` library, are well suited to these real-time AI services, handling bidirectional communication between clients and AI processing engines with low latency.

Streaming data analysis through WebSocket connections enables applications to process audio, video, and sensor data in real-time, providing immediate feedback and adaptive responses based on continuous input streams. This capability is particularly valuable for applications like live transcription services, real-time language translation, and interactive voice assistants that require immediate processing and response generation.
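Streaming models such as speech recognizers usually consume fixed-size windows rather than arbitrary network chunks, so a WebSocket message handler typically buffers incoming samples and emits overlapping frames. Below is a sketch of that buffering, independent of any particular WebSocket library; the `FrameBuffer` class is hypothetical, and frame and hop sizes are parameters (for instance, 400-sample frames with a 160-sample hop correspond to 25 ms windows every 10 ms at 16 kHz).

```javascript
'use strict';

// Collects arbitrary-sized chunks and yields frames of `frameSize` samples every `hopSize` samples.
class FrameBuffer {
  constructor(frameSize, hopSize) {
    if (hopSize <= 0 || hopSize > frameSize) throw new Error('need 0 < hopSize <= frameSize');
    this.frameSize = frameSize;
    this.hopSize = hopSize;
    this.pending = new Float32Array(0);
  }

  // Append a chunk (e.g. samples decoded from one WebSocket message) and return complete frames.
  push(chunk) {
    const merged = new Float32Array(this.pending.length + chunk.length);
    merged.set(this.pending, 0);
    merged.set(chunk, this.pending.length);
    const frames = [];
    let start = 0;
    while (start + this.frameSize <= merged.length) {
      frames.push(merged.slice(start, start + this.frameSize));
      start += this.hopSize;
    }
    // Keep the unconsumed tail so the next frame overlaps correctly.
    this.pending = merged.slice(start);
    return frames;
  }
}
```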

The event-driven architecture of Node.js naturally complements real-time AI processing requirements, enabling efficient handling of multiple concurrent streams while maintaining responsive performance for each connected client. Middleware components can implement sophisticated queuing mechanisms, load balancing strategies, and resource allocation policies that ensure fair distribution of computational resources across multiple real-time processing requests.
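A simple way to get this kind of fair distribution is to keep a queue per client and serve the queues round-robin, so one chatty connection cannot starve the others. The `FairQueue` class below is a hypothetical sketch; a real server would call `next()` whenever an inference worker becomes free.

```javascript
'use strict';

// Per-client FIFO queues served in round-robin order.
class FairQueue {
  constructor() {
    this.queues = new Map(); // clientId -> array of pending jobs
    this.order = [];         // round-robin order of clients that have pending work
  }

  enqueue(clientId, job) {
    if (!this.queues.has(clientId)) {
      this.queues.set(clientId, []);
      this.order.push(clientId);
    }
    this.queues.get(clientId).push(job);
  }

  // Take one job from the next client in turn, or undefined when idle.
  next() {
    if (this.order.length === 0) return undefined;
    const clientId = this.order.shift();
    const queue = this.queues.get(clientId);
    const job = queue.shift();
    if (queue.length > 0) this.order.push(clientId); // still has work: back of the line
    else this.queues.delete(clientId);
    return { clientId, job };
  }
}
```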

Data Pipeline Integration and ETL Processes

Modern AI APIs require sophisticated data pipeline integration that can handle diverse data sources, implement transformation processes, and maintain data quality standards throughout the inference lifecycle. Node.js provides excellent support for building these data pipelines through its rich ecosystem of database connectors, file processing libraries, and streaming data handlers that can integrate seamlessly with machine learning workflows.

Extract, Transform, and Load (ETL) processes for AI applications often involve complex data preprocessing steps including feature engineering, normalization, encoding, and validation operations that prepare raw data for model consumption. Node.js streams provide efficient mechanisms for handling these transformations without consuming excessive memory, enabling processing of large datasets that exceed available RAM capacity.
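A streaming transform step can be sketched with the built-in `readline` module: read newline-delimited JSON one line at a time and yield a normalized feature vector per record, so memory use does not grow with file size. The field names (`age`, `income`) and the min/max ranges used for scaling are hypothetical.

```javascript
'use strict';
const readline = require('node:readline');

// Hypothetical feature ranges for min-max scaling to [0, 1].
const RANGES = { age: [0, 100], income: [0, 200000] };

function scale(value, [min, max]) {
  return Math.min(1, Math.max(0, (value - min) / (max - min)));
}

// Reads NDJSON from any readable byte stream (e.g. fs.createReadStream) line by line
// and yields normalized feature vectors without loading the whole input.
async function* normalizedVectors(input) {
  const rl = readline.createInterface({ input, crlfDelay: Infinity });
  let lineNo = 0;
  for await (const line of rl) {
    lineNo += 1;
    const text = line.trim();
    if (text === '') continue; // skip blank lines
    let record;
    try {
      record = JSON.parse(text);
    } catch {
      throw new Error(`line ${lineNo}: invalid JSON`);
    }
    yield [scale(Number(record.age), RANGES.age), scale(Number(record.income), RANGES.income)];
  }
}
```

Because the generator is an async iterable, it can be consumed with `for await` or wrapped with `Readable.from(...)` and piped into a database writer or file stream.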

Data quality monitoring and validation mechanisms implemented in Node.js can detect anomalies, handle missing values, and ensure data consistency across distributed AI processing systems.
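Data-quality checks of this kind can start simply: reject records with missing required fields, fill optional fields with defaults, and flag numeric values far outside the range seen in a trusted reference sample using a z-score test. The field names, the default value, and the z-score cutoff of 3 below are illustrative assumptions.

```javascript
'use strict';

// Summary statistics from a trusted reference sample (e.g. recent training data).
function fitStats(values) {
  const mean = values.reduce((s, v) => s + v, 0) / values.length;
  const variance = values.reduce((s, v) => s + (v - mean) ** 2, 0) / values.length;
  return { mean, std: Math.sqrt(variance) };
}

// Validate one record: required fields must exist, missing optional ones get defaults, outliers are flagged.
function validateRecord(record, { required, defaults, stats, zCutoff = 3 }) {
  const issues = [];
  for (const field of required) {
    const v = record[field];
    if (v === undefined || v === null || Number.isNaN(v)) issues.push(`missing:${field}`);
  }
  const cleaned = { ...record };
  for (const [field, value] of Object.entries(defaults)) {
    if (cleaned[field] === undefined || cleaned[field] === null) cleaned[field] = value;
  }
  for (const [field, { mean, std }] of Object.entries(stats)) {
    const value = cleaned[field];
    if (typeof value === 'number' && std > 0 && Math.abs(value - mean) / std > zCutoff) {
      issues.push(`outlier:${field}`);
    }
  }
  return { valid: !issues.some((i) => i.startsWith('missing:')), cleaned, issues };
}
```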

Database integration for AI applications requires careful consideration of performance, scalability, and consistency requirements that differ significantly from traditional web application database usage patterns. AI workloads often involve complex queries, large result sets, and frequent model parameter updates that require specialized database optimization strategies and caching mechanisms.

Model Management and Version Control

Production AI APIs require sophisticated model management systems that can handle model versioning, A/B testing, gradual rollouts, and rollback capabilities that ensure service reliability while enabling continuous improvement of AI capabilities. Node.js provides excellent support for implementing these model management systems through its modular architecture and extensive package ecosystem.

Model versioning strategies must balance performance requirements with flexibility needs, enabling seamless transitions between model versions while maintaining consistent API interfaces for client applications. Loading multiple model versions simultaneously allows for comparative analysis, gradual traffic migration, and immediate rollback capabilities that minimize service disruption during model updates.
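A minimal sketch of such a version registry: several model versions stay loaded side by side, a weight table controls what share of traffic each receives (for a canary or A/B test), and rollback simply resets the weights. The `ModelRegistry` class and its methods are hypothetical; a model is any object with a `predict` method, and the random source is injectable so routing can be tested deterministically.

```javascript
'use strict';

class ModelRegistry {
  constructor(random = Math.random) {
    this.models = new Map();  // version -> model object with predict()
    this.weights = new Map(); // version -> traffic weight
    this.random = random;
  }

  register(version, model) {
    this.models.set(version, model);
  }

  // Set traffic weights, e.g. { v1: 90, v2: 10 } for a 10% canary.
  setTraffic(weights) {
    for (const version of Object.keys(weights)) {
      if (!this.models.has(version)) throw new Error(`unknown model version: ${version}`);
    }
    this.weights = new Map(Object.entries(weights).filter(([, w]) => w > 0));
  }

  // Send all traffic back to a single known-good version.
  rollback(version) {
    this.setTraffic({ [version]: 1 });
  }

  // Weighted random choice of a version for this request.
  pick() {
    const total = [...this.weights.values()].reduce((s, w) => s + w, 0);
    if (total === 0) throw new Error('no traffic configured');
    let r = this.random() * total;
    for (const [version, w] of this.weights) {
      if (r < w) return version;
      r -= w;
    }
    return [...this.weights.keys()].pop(); // guard against floating-point edge cases
  }

  predict(input) {
    const version = this.pick();
    return { version, output: this.models.get(version).predict(input) };
  }
}
```

In practice the choice is often made sticky per user (for example by hashing a user ID instead of drawing a random number) so a given client sees consistent results during an experiment.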

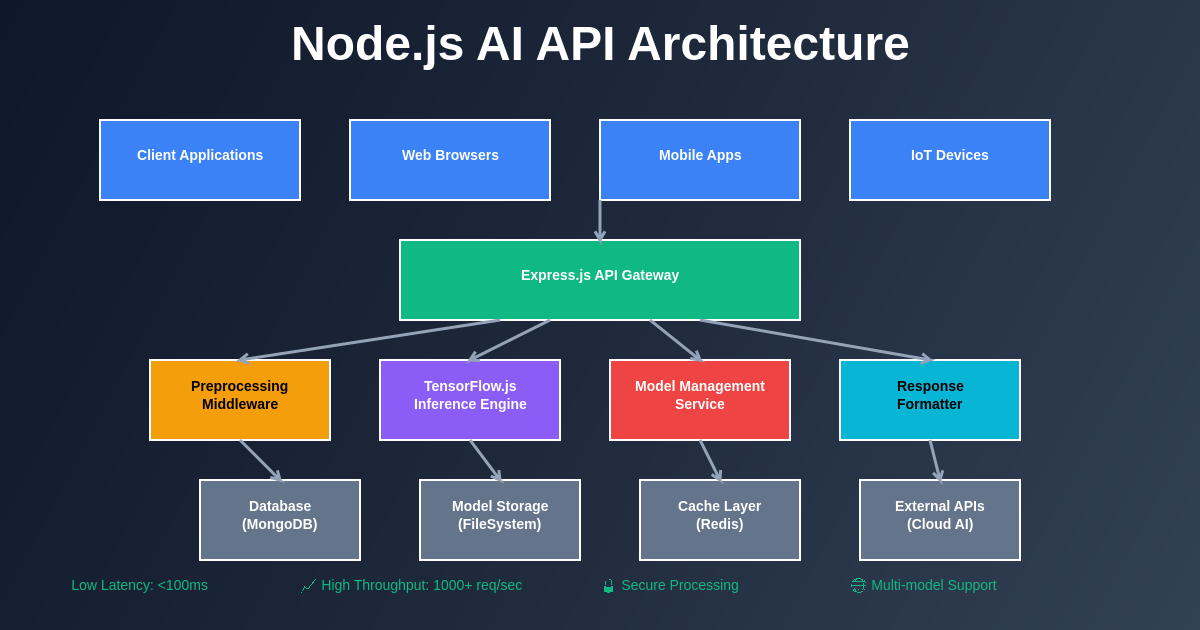

Performance monitoring and metrics collection for AI models requires specialized instrumentation that tracks inference latency, accuracy metrics, resource utilization, and error rates across different model versions and deployment environments. Node.js monitoring libraries provide comprehensive capabilities for implementing these tracking systems while maintaining minimal performance overhead.
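Per-version latency tracking can be as simple as timing each inference with `performance.now()` from `node:perf_hooks` and computing percentiles over recent samples. The `LatencyTracker` below is a hypothetical sketch using a bounded sample window and nearest-rank percentiles; production systems typically export histograms to a metrics backend rather than keeping raw samples in process.

```javascript
'use strict';
const { performance } = require('node:perf_hooks');

class LatencyTracker {
  constructor(maxSamples = 1000) {
    this.maxSamples = maxSamples;
    this.samples = new Map(); // model version -> recent latencies in ms
  }

  record(version, ms) {
    const list = this.samples.get(version) ?? [];
    list.push(ms);
    if (list.length > this.maxSamples) list.shift(); // keep only the most recent samples
    this.samples.set(version, list);
  }

  // Time a synchronous or async inference call and record its duration.
  async time(version, fn) {
    const start = performance.now();
    try {
      return await fn();
    } finally {
      this.record(version, performance.now() - start);
    }
  }

  // Nearest-rank percentile for p in (0, 100].
  percentile(version, p) {
    const list = [...(this.samples.get(version) ?? [])].sort((a, b) => a - b);
    if (list.length === 0) return undefined;
    const rank = Math.ceil((p * list.length) / 100);
    return list[Math.max(0, rank - 1)];
  }
}
```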

Automated model deployment pipelines can integrate continuous integration systems with model training workflows, enabling automatic testing, validation, and deployment of improved models based on predefined performance criteria and approval processes.
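The predefined performance criteria in such a pipeline can be encoded as a promotion gate that a CI job runs after evaluating a candidate model. The metric names and thresholds below (a minimum accuracy gain of 0.5 percentage points, at most 10% growth in p95 latency, no increase in error rate) are assumptions; the CI job would fail the build, for example by setting `process.exitCode = 1`, when `promote` is false.

```javascript
'use strict';

// Promotion gate: compare candidate metrics with the current production baseline.
function shouldPromote(candidate, baseline, { minAccuracyGain = 0.005, maxLatencyIncrease = 0.1 } = {}) {
  const reasons = [];
  if (candidate.accuracy - baseline.accuracy < minAccuracyGain) {
    reasons.push('accuracy gain below threshold');
  }
  if (candidate.p95LatencyMs > baseline.p95LatencyMs * (1 + maxLatencyIncrease)) {
    reasons.push('p95 latency regressed');
  }
  if (candidate.errorRate > baseline.errorRate) {
    reasons.push('error rate increased');
  }
  return { promote: reasons.length === 0, reasons };
}
```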

Security and Authentication in AI APIs

AI API security requires comprehensive approaches that protect sensitive model parameters, mitigate adversarial attacks, ensure data privacy, and implement robust authentication mechanisms that control access to computational resources. Node.js security middleware provides extensive capabilities for implementing these protections while maintaining good performance for legitimate AI requests.

Rate limiting and resource allocation controls prevent abuse of computationally expensive AI endpoints while ensuring fair access for authorized users. These systems must balance security requirements with performance needs, implementing intelligent throttling mechanisms that can distinguish between legitimate high-volume usage and potential abuse patterns.
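Rate limiting for expensive inference endpoints is commonly implemented as a token bucket: each API key has a bucket that refills at a steady rate up to a burst capacity, and each request spends tokens. Charging more tokens for costlier calls is one way to reflect computational cost. The `TokenBucketLimiter` sketch keeps buckets in memory and takes an injectable clock for testing; a deployment with several instances would need shared state, for example in Redis.

```javascript
'use strict';

class TokenBucketLimiter {
  constructor({ capacity, refillPerSecond, now = () => Date.now() }) {
    this.capacity = capacity;
    this.refillPerMs = refillPerSecond / 1000;
    this.now = now;
    this.buckets = new Map(); // apiKey -> { tokens, last }
  }

  // Returns true and spends `cost` tokens if allowed, otherwise false.
  tryConsume(apiKey, cost = 1) {
    const t = this.now();
    const bucket = this.buckets.get(apiKey) ?? { tokens: this.capacity, last: t };
    // Refill in proportion to elapsed time, never above capacity.
    bucket.tokens = Math.min(this.capacity, bucket.tokens + (t - bucket.last) * this.refillPerMs);
    bucket.last = t;
    this.buckets.set(apiKey, bucket);
    if (bucket.tokens < cost) return false;
    bucket.tokens -= cost;
    return true;
  }
}
```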

Data privacy considerations for AI APIs often involve implementing encryption for data in transit and at rest, anonymization techniques for sensitive information, and access logging mechanisms that provide audit trails for compliance requirements. Node.js cryptography libraries provide comprehensive support for implementing these security measures with minimal performance impact.
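Node's built-in `crypto` module covers both measures mentioned here. The sketch below encrypts a payload at rest with AES-256-GCM (authenticated encryption, so tampering is detected on decryption) and pseudonymizes user identifiers with a keyed HMAC-SHA-256 so logs can be correlated without storing raw IDs. Keys are generated in-process purely for illustration; real deployments would load them from a key-management service.

```javascript
'use strict';
const crypto = require('node:crypto');

// Encrypt with AES-256-GCM; output packs IV (12 bytes) + auth tag (16 bytes) + ciphertext.
function encrypt(plaintext, key) {
  const iv = crypto.randomBytes(12);
  const cipher = crypto.createCipheriv('aes-256-gcm', key, iv);
  const ciphertext = Buffer.concat([cipher.update(plaintext, 'utf8'), cipher.final()]);
  return Buffer.concat([iv, cipher.getAuthTag(), ciphertext]);
}

function decrypt(packed, key) {
  const iv = packed.subarray(0, 12);
  const tag = packed.subarray(12, 28);
  const ciphertext = packed.subarray(28);
  const decipher = crypto.createDecipheriv('aes-256-gcm', key, iv);
  decipher.setAuthTag(tag);
  // final() throws if the ciphertext or tag was modified.
  return Buffer.concat([decipher.update(ciphertext), decipher.final()]).toString('utf8');
}

// Stable pseudonym: the same ID and key always give the same token,
// and without the key nobody can recompute it from a guessed ID.
function pseudonymize(userId, hmacKey) {
  return crypto.createHmac('sha256', hmacKey).update(userId).digest('hex');
}

const encryptionKey = crypto.randomBytes(32); // illustration only: load from a KMS in practice
const hmacKey = crypto.randomBytes(32);
```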

Authentication and authorization systems for AI APIs frequently require integration with existing identity management systems while providing granular access controls for different AI capabilities and resource tiers. Token-based authentication systems can implement sophisticated permission models that control access to specific models, features, and computational resources based on user roles and subscription levels.
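To illustrate per-model permissions, the sketch below issues and verifies a compact HMAC-signed token that carries a user's allowed models and an expiry time. This hand-rolled format is hypothetical and only demonstrates the idea; production systems would normally use a standard such as JWT through a maintained library, with proper key rotation.

```javascript
'use strict';
const crypto = require('node:crypto');

const SECRET = crypto.randomBytes(32); // illustration only

function sign(payload) {
  const body = Buffer.from(JSON.stringify(payload)).toString('base64url');
  const sig = crypto.createHmac('sha256', SECRET).update(body).digest('base64url');
  return `${body}.${sig}`;
}

// Returns the payload if the signature is valid and the token unexpired, otherwise null.
function verify(token, now = Date.now()) {
  const [body, sig] = String(token).split('.');
  if (!body || !sig) return null;
  const expected = crypto.createHmac('sha256', SECRET).update(body).digest();
  const given = Buffer.from(sig, 'base64url');
  // Constant-time comparison; timingSafeEqual requires equal lengths.
  if (given.length !== expected.length || !crypto.timingSafeEqual(given, expected)) return null;
  const payload = JSON.parse(Buffer.from(body, 'base64url').toString('utf8'));
  return payload.exp > now ? payload : null;
}

// Authorization check: may this token call the given model?
function canUseModel(token, model, now = Date.now()) {
  const payload = verify(token, now);
  return payload !== null && payload.models.includes(model);
}
```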

Performance monitoring for AI APIs requires tracking multiple dimensions including inference latency, throughput, accuracy metrics, and resource utilization across different models and deployment environments.

Scalability and Performance Optimization

Scaling AI APIs built with Node.js requires careful consideration of computational requirements, memory management, load balancing strategies, and horizontal scaling approaches that can handle increasing demand while maintaining consistent response times. The event-driven architecture of Node.js provides natural advantages for scaling AI workloads, but optimization strategies must account for the unique characteristics of machine learning inference operations.

Horizontal scaling strategies for AI APIs often involve distributing model instances across multiple server nodes while implementing intelligent load balancing that considers model loading times, memory requirements, and current computational load. Container orchestration systems like Kubernetes provide excellent support for managing these distributed AI deployments with automatic scaling based on demand patterns.
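Load balancing that accounts for model placement can be sketched as: prefer nodes that already have the requested model warm in memory, pick the one with the fewest in-flight requests among them, and otherwise fall back to the least-loaded node with enough free memory to load it. The node fields (`id`, `inFlight`, `loadedModels`, `freeMemoryMb`) are hypothetical; an orchestrator such as Kubernetes scales the number of replicas, while model-aware placement like this usually needs custom routing logic on top.

```javascript
'use strict';

// Choose a node for `model`, preferring warm nodes, then nodes with room to load it.
function chooseNode(nodes, model, modelSizeMb) {
  const byLoad = (a, b) => a.inFlight - b.inFlight;
  const warm = nodes.filter((n) => n.loadedModels.includes(model)).sort(byLoad);
  if (warm.length > 0) return { node: warm[0], coldStart: false };
  const roomy = nodes.filter((n) => n.freeMemoryMb >= modelSizeMb).sort(byLoad);
  if (roomy.length > 0) return { node: roomy[0], coldStart: true };
  return null; // no capacity: the caller should queue, shed load, or scale out
}
```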

Memory management optimization for AI applications requires careful consideration of model loading strategies, caching policies, and garbage collection tuning that minimizes memory usage while maintaining optimal performance. Large machine learning models can consume significant memory resources, requiring specialized allocation strategies that balance memory efficiency with response time requirements.
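One concrete memory policy is to cap the total size of models held in memory and evict the least recently used model when a new one would exceed the budget. With TensorFlow.js, freeing a model means calling its `dispose()` method, because tensor memory is managed explicitly (via `dispose()` or `tf.tidy`) rather than by the JavaScript garbage collector. The `ModelPool` below is a hypothetical sketch: the `loader` contract and the `sizeMb` estimates are assumptions, and concurrent requests for the same uncached model would each trigger a load.

```javascript
'use strict';

class ModelPool {
  constructor({ budgetMb, loader }) {
    this.budgetMb = budgetMb;
    this.loader = loader;     // async (name) => ({ model, sizeMb })
    this.entries = new Map(); // name -> { model, sizeMb }; Map order runs least to most recently used
    this.usedMb = 0;
  }

  async get(name) {
    const hit = this.entries.get(name);
    if (hit) {
      // Re-insert to mark as most recently used.
      this.entries.delete(name);
      this.entries.set(name, hit);
      return hit.model;
    }
    const loaded = await this.loader(name);
    if (loaded.sizeMb > this.budgetMb) {
      if (typeof loaded.model.dispose === 'function') loaded.model.dispose();
      throw new Error(`${name} exceeds the memory budget`);
    }
    // Evict least recently used models until the new one fits.
    for (const [oldName, entry] of this.entries) {
      if (this.usedMb + loaded.sizeMb <= this.budgetMb) break;
      this.entries.delete(oldName);
      this.usedMb -= entry.sizeMb;
      if (typeof entry.model.dispose === 'function') entry.model.dispose();
    }
    this.entries.set(name, loaded);
    this.usedMb += loaded.sizeMb;
    return loaded.model;
  }
}
```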

Caching strategies for AI APIs can dramatically improve performance by storing frequently requested inference results, preprocessing common input patterns, and maintaining warm model instances that eliminate initialization latency. These caching mechanisms must consider the trade-offs between memory usage, accuracy requirements, and response time improvements.
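A result cache for deterministic models can key entries on a hash of the model version plus the serialized input, so identical requests skip inference and a model upgrade naturally invalidates old entries. The hypothetical `InferenceCache` below uses SHA-256 from `node:crypto`, a time-to-live, and a size cap with oldest-first eviction. It assumes inputs serialize consistently (`JSON.stringify` does not sort object keys) and is only appropriate when the same input should always produce the same output.

```javascript
'use strict';
const crypto = require('node:crypto');

class InferenceCache {
  constructor({ ttlMs = 60_000, maxEntries = 10_000, now = () => Date.now() } = {}) {
    this.ttlMs = ttlMs;
    this.maxEntries = maxEntries;
    this.now = now;
    this.entries = new Map(); // key -> { value, expires }
  }

  // Key on model version + input so a new model version never reuses old results.
  key(modelVersion, input) {
    return crypto.createHash('sha256').update(modelVersion).update('\0').update(JSON.stringify(input)).digest('hex');
  }

  async getOrCompute(modelVersion, input, compute) {
    const k = this.key(modelVersion, input);
    const hit = this.entries.get(k);
    if (hit && hit.expires > this.now()) return { value: hit.value, cached: true };
    const value = await compute(input);
    this.entries.delete(k); // drop any expired copy before checking the size cap
    if (this.entries.size >= this.maxEntries) {
      this.entries.delete(this.entries.keys().next().value); // evict the oldest insertion
    }
    this.entries.set(k, { value, expires: this.now() + this.ttlMs });
    return { value, cached: false };
  }
}
```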

Monitoring and Debugging AI Services

Production AI APIs require comprehensive monitoring systems that track both traditional web service metrics and AI-specific performance indicators including model accuracy, inference latency, resource utilization, and data quality metrics. Node.js monitoring libraries provide extensive capabilities for implementing these tracking systems while maintaining minimal performance overhead on AI processing operations.

Error handling and debugging for AI services must address unique challenges including model loading failures, input validation errors, inference exceptions, and resource exhaustion scenarios that require specialized recovery strategies. Implementing robust error handling ensures graceful degradation of service quality rather than complete system failures when individual components encounter problems.
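Graceful degradation often comes down to bounding how long inference may take and returning a clearly labelled fallback instead of an error. The hypothetical `withFallback` helper races the task against a timer and, on timeout or failure, returns a caller-supplied fallback (such as a cached result or a simpler model's output) with a `degraded` flag. The timeout only stops the caller from waiting; it does not cancel work already running in the model.

```javascript
'use strict';

// Resolve with the task's result, or with `fallback(err)` if it fails or exceeds `timeoutMs`.
async function withFallback(task, { timeoutMs, fallback }) {
  let timer;
  const timeout = new Promise((_, reject) => {
    timer = setTimeout(() => reject(new Error(`timed out after ${timeoutMs} ms`)), timeoutMs);
  });
  try {
    const value = await Promise.race([task(), timeout]);
    return { value, degraded: false };
  } catch (err) {
    return { value: await fallback(err), degraded: true, reason: err.message };
  } finally {
    clearTimeout(timer); // don't keep the event loop alive once we have an answer
  }
}
```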

Logging strategies for AI APIs should capture both system-level events and AI-specific metrics including input characteristics, inference results, processing times, and accuracy measurements that enable comprehensive analysis of system behavior and performance trends over time.
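Structured JSON log lines make these AI-specific fields easy to query later. The sketch records input characteristics such as length and a truncated hash rather than the raw text, which keeps potentially sensitive content out of logs. The function and field names are illustrative; a logging library such as pino would normally handle serialization and transport.

```javascript
'use strict';
const crypto = require('node:crypto');

// Build one JSON log line describing an inference call without storing the raw input.
function inferenceLogLine({ requestId, modelVersion, input, output, latencyMs, now = new Date() }) {
  return JSON.stringify({
    ts: now.toISOString(),
    event: 'inference',
    requestId,
    modelVersion,
    input: {
      chars: input.length,
      sha256: crypto.createHash('sha256').update(input).digest('hex').slice(0, 16),
    },
    output: { label: output.label, confidence: Number(output.confidence.toFixed(3)) },
    latencyMs: Math.round(latencyMs),
  });
}
```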

Performance profiling for AI applications requires specialized tools that can analyze computational bottlenecks, memory allocation patterns, and processing pipeline efficiency while accounting for the unique characteristics of machine learning workloads that differ significantly from traditional web application performance patterns.

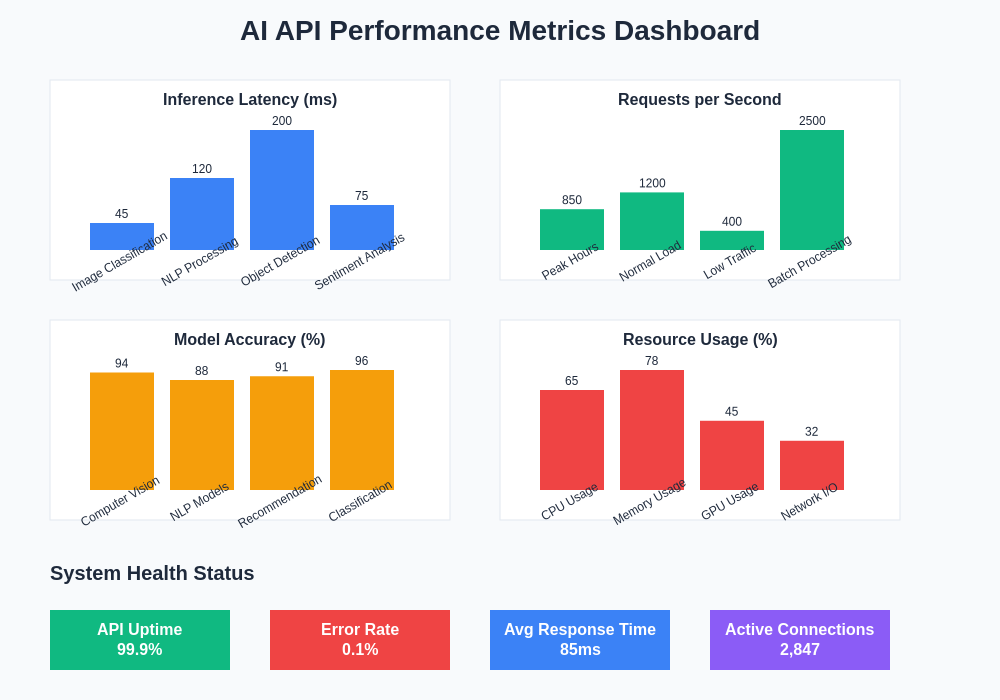

The machine learning pipeline visualization demonstrates the flow of data through preprocessing, model inference, and post-processing stages that comprise a complete AI API implementation.

Integration with Cloud AI Services

Modern AI APIs often benefit from integrating multiple AI services including cloud-based machine learning platforms, specialized AI APIs, and hybrid architectures that combine local processing with cloud-based capabilities. Node.js provides excellent support for implementing these integrations through its comprehensive HTTP client libraries and async processing capabilities.

Hybrid architectures can optimize performance and cost by implementing intelligent routing that directs requests to appropriate processing environments based on complexity, latency requirements, and computational resource availability. Simple inference operations might be handled locally while complex analysis tasks are routed to specialized cloud services.
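The routing rule described here can be expressed as a small policy function. The list of locally supported tasks, the 512-token limit, the token estimate (roughly four characters per token, a common rule of thumb for English text), and the `local`/`cloud` targets are all assumptions for illustration.

```javascript
'use strict';

// Decide where to run a request: small, locally supported tasks stay local; everything else goes to the cloud.
function routeRequest({ task, text = '' }, { localTasks = ['sentiment', 'keywords'], localMaxTokens = 512, localBusy = false } = {}) {
  const estimatedTokens = Math.ceil(text.length / 4); // rough heuristic, not a real tokenizer
  if (!localTasks.includes(task)) return 'cloud';       // capability only available remotely
  if (estimatedTokens > localMaxTokens) return 'cloud'; // input too large for the local model
  return localBusy ? 'cloud' : 'local';                 // overflow to the cloud under load
}
```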

API orchestration for multi-service AI implementations requires sophisticated coordination mechanisms that can handle service failures, implement fallback strategies, and maintain consistent response formats across different AI providers. Node.js async capabilities provide natural support for implementing these orchestration patterns while maintaining optimal performance.
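One way to structure multi-provider orchestration is an ordered list of adapters, each responsible for calling one provider and converting its response into a shared shape; the orchestrator tries them in turn and reports which one answered. The adapter contract (`name`, `call`, `normalize`) and the provider responses in the test are hypothetical stand-ins, not the actual APIs of any cloud service.

```javascript
'use strict';

// Try each provider adapter in order; return the first success in a common { label, score } shape.
async function classifyWithFallback(text, providers) {
  const errors = [];
  for (const { name, call, normalize } of providers) {
    try {
      const raw = await call(text);
      return { provider: name, ...normalize(raw), errors };
    } catch (err) {
      errors.push(`${name}: ${err.message}`); // remember why this provider was skipped
    }
  }
  throw new Error(`all providers failed (${errors.join('; ')})`);
}
```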

Cost optimization strategies for cloud AI integration involve implementing intelligent caching, request batching, and service selection algorithms that minimize external API usage while maintaining required response times and accuracy levels.
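Request batching can be sketched as a micro-batcher: individual calls are queued for a short window, or until a maximum batch size is reached, and then sent as one batched request, with each caller receiving its own slice of the result. The `MicroBatcher` class, the `batchFn` contract (an array of inputs in, an equally long array of outputs out), the 10 ms window, and the batch size of 32 are assumptions.

```javascript
'use strict';

class MicroBatcher {
  constructor(batchFn, { maxBatchSize = 32, maxWaitMs = 10 } = {}) {
    this.batchFn = batchFn; // async (inputs[]) => outputs[] of the same length
    this.maxBatchSize = maxBatchSize;
    this.maxWaitMs = maxWaitMs;
    this.pending = [];      // { input, resolve, reject }
    this.timer = null;
  }

  // Queue one input; resolves with its own output once its batch completes.
  submit(input) {
    return new Promise((resolve, reject) => {
      this.pending.push({ input, resolve, reject });
      if (this.pending.length >= this.maxBatchSize) this.flush();
      else if (!this.timer) this.timer = setTimeout(() => this.flush(), this.maxWaitMs);
    });
  }

  async flush() {
    clearTimeout(this.timer);
    this.timer = null;
    const batch = this.pending.splice(0, this.maxBatchSize);
    if (this.pending.length > 0) this.timer = setTimeout(() => this.flush(), this.maxWaitMs);
    if (batch.length === 0) return;
    try {
      const outputs = await this.batchFn(batch.map((item) => item.input));
      if (outputs.length !== batch.length) throw new Error('batchFn returned the wrong number of outputs');
      batch.forEach((item, i) => item.resolve(outputs[i]));
    } catch (err) {
      batch.forEach((item) => item.reject(err)); // one failed batch fails all of its callers
    }
  }
}
```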

Future Trends and Emerging Technologies

The evolution of Node.js AI APIs continues to accelerate with emerging technologies including edge computing deployments, WebAssembly optimization, federated learning implementations, and advanced model compression techniques that enable more sophisticated AI capabilities in resource-constrained environments.

Edge computing deployment strategies for Node.js AI APIs enable processing closer to data sources, reducing latency and bandwidth requirements while maintaining security and privacy standards. These deployments often involve specialized optimization techniques that balance model complexity with computational constraints typical of edge environments.

WebAssembly integration provides opportunities for significant performance improvements in AI inference operations by enabling near-native execution speeds for computationally intensive machine learning algorithms while maintaining the development convenience and ecosystem advantages of JavaScript.

Federated learning implementations allow AI APIs to participate in distributed training processes that improve model accuracy while maintaining data privacy and security requirements. Node.js provides excellent support for implementing the communication and coordination mechanisms required for effective federated learning participation.
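The coordination step at the heart of many federated learning setups is federated averaging (FedAvg): each participant trains locally and sends back only its updated weights and sample count, and the coordinator computes a sample-weighted average. The sketch assumes weights arrive as equal-length flat `Float32Array`s; transport, secure aggregation, and client selection are out of scope.

```javascript
'use strict';

// Federated averaging: averaged[i] = sum_k (n_k / N) * w_k[i], where N = sum_k n_k.
function federatedAverage(updates) {
  if (updates.length === 0) throw new Error('no client updates');
  const size = updates[0].weights.length;
  const totalSamples = updates.reduce((s, u) => s + u.numSamples, 0);
  if (totalSamples <= 0) throw new Error('total sample count must be positive');
  const averaged = new Float32Array(size);
  for (const { weights, numSamples } of updates) {
    if (weights.length !== size) throw new Error('weight vectors differ in length');
    const share = numSamples / totalSamples;
    for (let i = 0; i < size; i++) averaged[i] += share * weights[i];
  }
  return averaged;
}
```

Weighting by sample count means a client with three times as much data pulls the average three times as hard, which is the standard FedAvg choice.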

The continued advancement of AI technologies promises to expand the capabilities of Node.js-based AI APIs while reducing the computational and development complexity required to implement sophisticated machine learning features in web applications and services.

Disclaimer

This article is for informational purposes only and does not constitute professional advice. The views expressed are based on current understanding of Node.js, Express, and machine learning technologies. Readers should conduct their own research and testing when implementing AI APIs in production environments. Performance and accuracy may vary depending on specific use cases, data characteristics, and implementation details. Always consider security, privacy, and compliance requirements when deploying AI services.