Integrating OpenAI’s GPT models into applications has become a common way to add intelligent features, because it gives developers access to large language models without requiring machine learning expertise. Through the API, an application can interpret natural language, generate human-like responses, and handle reasoning tasks that would previously have been impractical or prohibitively expensive to build in-house.

Embedding GPT capabilities in existing software architectures is part of a broader shift toward applications that are more responsive and user-centric, built on recent advances in natural language processing.

Understanding OpenAI API Architecture

The OpenAI API exposes its language models through a REST-style HTTP interface: clients send JSON requests over HTTPS and receive JSON responses, while the complexity of running the models stays on OpenAI’s side. Because any language that can make an HTTPS request can use it, developers can add these capabilities regardless of their technology stack or deployment environment.
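
As a minimal sketch of what such a request looks like, the following builds (but does not send) a Chat Completions call using only Python’s standard library. The endpoint URL, the `Authorization: Bearer` header, and the `model`/`messages` body fields follow OpenAI’s public Chat Completions API; the model name `gpt-4o-mini` is only an example, and the helper `build_chat_request` is hypothetical.

```python
import json
import urllib.request

# Public Chat Completions endpoint; confirm the current URL in OpenAI's docs.
CHAT_URL = "https://api.openai.com/v1/chat/completions"

def build_chat_request(api_key: str, model: str, user_text: str) -> urllib.request.Request:
    """Build (without sending) an HTTPS POST for a single-turn chat completion."""
    body = {
        "model": model,  # e.g. "gpt-4o-mini" (example only)
        "messages": [{"role": "user", "content": user_text}],
    }
    return urllib.request.Request(
        CHAT_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )

req = build_chat_request("sk-test", "gpt-4o-mini", "Summarize HTTP in one sentence.")
# Sending it would be: urllib.request.urlopen(req, timeout=30)
```

Official client libraries wrap this same HTTP exchange; the raw form is shown only to make the underlying request visible.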

The API aims to be simple without sacrificing capability. It offers several model variants tuned for different trade-offs, from fast conversational responses to more capable models for complex document analysis and code generation. Successful integration rests on three skills: choosing the right model, engineering effective prompts, and handling responses correctly, so that results stay consistent, reliable, and cost-effective across use cases.
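
Response handling starts with pulling the generated text and its metadata out of the JSON. The sketch below assumes the Chat Completions response shape (`choices[0].message.content`, `finish_reason`, and a `usage` object with token counts); the sample dictionary is hand-written for illustration rather than real API output, and `extract_reply` is a hypothetical helper.

```python
from typing import Any, Dict, Optional, Tuple

def extract_reply(response: Dict[str, Any]) -> Tuple[str, Optional[str], Dict[str, int]]:
    """Return the first choice's text, its finish_reason, and token usage."""
    choices = response.get("choices") or []
    if not choices:
        raise ValueError("response contained no choices")
    choice = choices[0]
    content = choice["message"].get("content") or ""
    # A finish_reason of "length" means the output hit the token limit and is cut off.
    finish_reason = choice.get("finish_reason")
    usage = response.get("usage", {})
    return content, finish_reason, {
        "prompt_tokens": usage.get("prompt_tokens", 0),
        "completion_tokens": usage.get("completion_tokens", 0),
    }

sample = {
    "choices": [{"index": 0, "finish_reason": "stop",
                 "message": {"role": "assistant", "content": "Hello!"}}],
    "usage": {"prompt_tokens": 9, "completion_tokens": 3, "total_tokens": 12},
}
text, reason, usage = extract_reply(sample)
```

Returning `finish_reason` instead of silently discarding it lets the caller decide whether a truncated answer should be retried, continued, or shown as-is.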

Because the API is modular, developers can start with a basic implementation and add advanced features such as fine-tuning, function calling, and streaming responses as their needs grow. This incremental path lets an application adapt to changing requirements without a rewrite.

Setting Up Your Development Environment

A development environment for OpenAI API integration needs sound authentication, dependency management, and error handling so that communication with OpenAI’s servers is secure and reliable. The initial setup consists of obtaining an API key, storing it in an environment variable, and installing the client library that provides language-specific abstractions for API calls.
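
A minimal sketch of the credential step: read the key from the `OPENAI_API_KEY` environment variable (the name OpenAI’s official SDKs look for by default) and fail fast with a message that never echoes a secret. The `load_api_key` helper is hypothetical; the optional `env` argument exists only to make it testable.

```python
import os
from typing import Mapping, Optional

def load_api_key(env: Optional[Mapping[str, str]] = None) -> str:
    """Read the API key from the environment instead of hard-coding it in source."""
    env = os.environ if env is None else env
    key = env.get("OPENAI_API_KEY", "").strip()
    if not key:
        # State what is missing, but never print any secret value.
        raise RuntimeError(
            "OPENAI_API_KEY is not set; export it or load it from a secrets manager"
        )
    return key
```

Calling `load_api_key()` once at startup surfaces a misconfigured deployment immediately rather than on the first user request.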

Security belongs in the setup from the start, particularly API key management, request rate limiting, and data privacy compliance. Building these practices in early prevents common vulnerabilities and keeps sensitive information protected throughout development and deployment: store credentials securely, validate user input before it reaches the API, and design error handling that does not expose internal system details.
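
To illustrate the last two points, here is a sketch that bounds and cleans user input before it goes into a prompt, and converts internal exceptions into a generic user-facing message while logging the details server-side. The character limit and both helper names are arbitrary assumptions, not OpenAI requirements.

```python
import logging
import unicodedata
import uuid

logger = logging.getLogger("ai_gateway")
MAX_INPUT_CHARS = 4000  # hypothetical application-level limit

def validate_user_input(text: str) -> str:
    """Reject empty or oversized input and strip control characters."""
    if not isinstance(text, str):
        raise ValueError("input must be a string")
    # Drop control characters (Unicode category Cc) except newlines and tabs.
    cleaned = "".join(
        ch for ch in text if ch in "\n\t" or unicodedata.category(ch) != "Cc"
    ).strip()
    if not cleaned:
        raise ValueError("input is empty")
    if len(cleaned) > MAX_INPUT_CHARS:
        raise ValueError(f"input exceeds {MAX_INPUT_CHARS} characters")
    return cleaned

def user_facing_error(exc: Exception) -> str:
    """Log full details internally; give the user only a reference ID."""
    ref = uuid.uuid4().hex[:8]
    logger.error("request failed ref=%s: %r", ref, exc)
    return f"Something went wrong (reference {ref}). Please try again."
```

The reference ID lets support staff find the full log entry without the user ever seeing stack traces, keys, or upstream error bodies.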

The environment should also include monitoring and logging that expose API usage patterns, response times, and error rates. This observability lets developers tune their integrations, catch problems before they affect users, and keep costs under control through request management and caching.
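
A small sketch of that observability: a wrapper that times each call, counts failures, and accumulates token usage when the response includes a `usage` object. `CallMetrics` and `monitored_call` are hypothetical names; in production these numbers would usually be exported to a metrics system rather than kept in memory.

```python
import logging
import time
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List

logger = logging.getLogger("openai_metrics")

@dataclass
class CallMetrics:
    latencies_s: List[float] = field(default_factory=list)
    errors: int = 0
    total_tokens: int = 0

    def error_rate(self) -> float:
        calls = len(self.latencies_s)
        return self.errors / calls if calls else 0.0

def monitored_call(metrics: CallMetrics, fn: Callable[[], Dict[str, Any]]) -> Dict[str, Any]:
    """Run fn(), recording its latency, whether it failed, and its token usage."""
    start = time.perf_counter()
    try:
        response = fn()
    except Exception:
        metrics.errors += 1
        raise
    finally:
        # Runs for both successes and failures, so every call is timed.
        elapsed = time.perf_counter() - start
        metrics.latencies_s.append(elapsed)
        logger.info("openai call took %.3fs", elapsed)
    metrics.total_tokens += response.get("usage", {}).get("total_tokens", 0)
    return response
```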

Basic API Integration Patterns

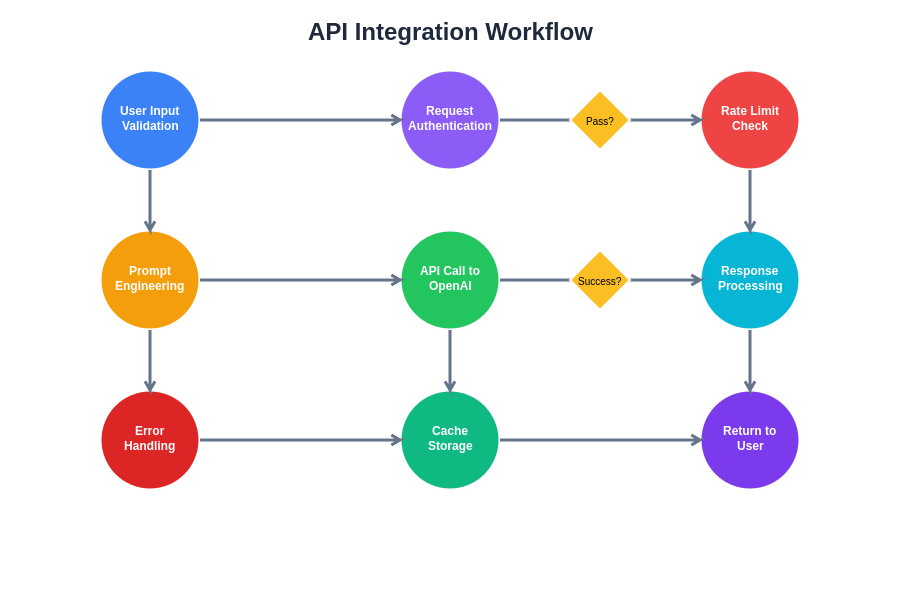

OpenAI API integration rests on three core operations: prompt construction, request execution, and response processing. These are the building blocks for more sophisticated implementations, and mastering them yields integrations that are reliable, efficient, maintainable, and straightforward to extend for specific application requirements.

Prompt construction is the most critical of the three, because the structure and quality of a prompt directly determine how relevant and accurate the response is. Effective prompt engineering means understanding what the model can do, writing clear instructions, supplying relevant context, and structuring the input so the model can use it. The skill develops through experimentation: iterate on prompt designs while measuring the quality and consistency of the outputs.
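
One way to apply these principles is to keep instructions, context, and user input in separate, clearly labeled parts of the `messages` list. The sketch assumes the Chat Completions roles (`system`, `user`, `assistant`); the `[Document N]` labeling convention and the `build_messages` helper are illustrative choices, not an OpenAI requirement.

```python
from typing import Dict, List, Optional, Sequence

def build_messages(
    instructions: str,
    context_docs: Sequence[str],
    user_question: str,
    history: Optional[List[Dict[str, str]]] = None,
) -> List[Dict[str, str]]:
    """Assemble a chat prompt: instructions, prior turns, then context plus question."""
    # Label each context snippet so the model (and a human reviewer) can tell them apart.
    context = "\n\n".join(
        f"[Document {i}]\n{doc.strip()}" for i, doc in enumerate(context_docs, start=1)
    )
    messages = [{"role": "system", "content": instructions.strip()}]
    messages.extend(history or [])  # earlier user/assistant turns, oldest first
    messages.append({
        "role": "user",
        "content": f"Context:\n{context}\n\nQuestion:\n{user_question.strip()}",
    })
    return messages

msgs = build_messages(
    "Answer using only the provided context. If the answer is not there, say so.",
    ["Our store opens at 9am.", "We close on public holidays."],
    "When do you open?",
)
```

Keeping this assembly in one function makes prompt variants easy to version and compare during the iteration described above.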

Request execution covers the mechanics of API communication: setting headers, formatting requests, handling errors, and managing timeouts. Robust request handling lets an application cope gracefully with API failures, network issues, and rate limits, so users get a consistent experience even during temporary service disruptions.
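
A minimal sketch of request execution with an explicit timeout, using only the standard library. It turns HTTP and network failures into one exception type that records whether a retry makes sense; treating 408, 429, and 5xx responses as retryable is a common convention and an assumption here, not an official list. The `opener` parameter exists only so the function can be tested without network access, and all names are hypothetical.

```python
import json
import socket
import urllib.error
import urllib.request
from typing import Any, Callable, Dict, Optional

class APICallError(Exception):
    """Uniform error carrying the HTTP status (if any) and whether to retry."""

    def __init__(self, message: str, status: Optional[int], retryable: bool):
        super().__init__(message)
        self.status = status
        self.retryable = retryable

def is_retryable_status(code: int) -> bool:
    # Request timeouts, rate limits, and server errors are usually transient.
    return code in (408, 429) or code >= 500

def execute(
    req: urllib.request.Request,
    timeout: float = 30.0,
    opener: Callable[..., Any] = urllib.request.urlopen,
) -> Dict[str, Any]:
    """Send req with a timeout and return the decoded JSON body."""
    try:
        with opener(req, timeout=timeout) as resp:
            return json.loads(resp.read().decode("utf-8"))
    except urllib.error.HTTPError as e:  # must precede URLError, its base class
        raise APICallError(f"HTTP {e.code}", e.code, is_retryable_status(e.code)) from e
    except (urllib.error.URLError, socket.timeout) as e:
        raise APICallError(f"network error: {e}", None, True) from e
```

Non-retryable errors such as 400 or 401 usually indicate a bug or a bad key, so surfacing them immediately is better than retrying.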

Advanced Implementation Strategies

Advanced strategies focus on optimizing performance, managing costs, and building workflows that chain multiple API calls or combine GPT with other services. They let applications handle complex interactions, maintain conversation context, and personalize responses to individual users.

Function calling is one of the most powerful advanced features. The application describes available functions to the model with JSON Schema definitions, and the model can respond with a structured request to call one of them with specific arguments. The model never runs code itself: the application executes the requested function and returns the result, which lets GPT orchestrate multi-step operations, gather information from databases and external APIs, and act on natural language instructions. Implementing it safely requires well-designed function schemas, validation of model-supplied arguments, error handling, and access controls that prevent unauthorized access to sensitive systems.
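
The round trip can be sketched as follows. The `tools` entry follows the documented Chat Completions format (`"type": "function"` with a JSON Schema under `parameters`), and a model reply carries `tool_calls` whose `arguments` field is a JSON string. The `get_order_status` function, its schema, and the sample reply are hypothetical; the point is that the application looks functions up in an explicit allowlist, parses the arguments itself, and returns results as `tool` messages.

```python
import json
from typing import Any, Callable, Dict, List

def get_order_status(order_id: str) -> Dict[str, str]:
    # Hypothetical business function; a real one would query a database.
    return {"order_id": order_id, "status": "shipped"}

# Schema sent to the API in the request's "tools" field.
TOOLS = [{
    "type": "function",
    "function": {
        "name": "get_order_status",
        "description": "Look up the shipping status of an order.",
        "parameters": {
            "type": "object",
            "properties": {"order_id": {"type": "string"}},
            "required": ["order_id"],
        },
    },
}]

# Only functions listed here can be invoked, whatever the model asks for.
ALLOWED: Dict[str, Callable[..., Any]] = {"get_order_status": get_order_status}

def run_tool_calls(assistant_message: Dict[str, Any]) -> List[Dict[str, str]]:
    """Execute requested tool calls and build the 'tool' messages to send back."""
    results = []
    for call in assistant_message.get("tool_calls") or []:
        name = call["function"]["name"]
        try:
            fn = ALLOWED[name]  # KeyError for anything not allowlisted
            args = json.loads(call["function"]["arguments"])  # untrusted model output
            output = fn(**args)  # TypeError on missing or unexpected arguments
        except (KeyError, TypeError, json.JSONDecodeError) as exc:
            output = {"error": f"could not run {name}: {type(exc).__name__}"}
        results.append({
            "role": "tool",
            "tool_call_id": call["id"],
            "content": json.dumps(output),
        })
    return results

reply = {"role": "assistant", "content": None, "tool_calls": [{
    "id": "call_1", "type": "function",
    "function": {"name": "get_order_status", "arguments": '{"order_id": "A42"}'},
}]}
tool_messages = run_tool_calls(reply)
```

The application would then append the assistant message and these `tool` messages to the conversation and call the API again so the model can compose its final answer.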

Streaming improves perceived responsiveness by delivering partial output as it is generated instead of waiting for the complete response. It is especially valuable for long outputs or real-time feedback. Implementing it requires assembling partial chunks correctly, recovering from interrupted streams, and a user interface that can render incremental updates.
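
With `"stream": true`, the Chat Completions API sends server-sent events: lines of the form `data: {json}`, each carrying a `choices[0].delta` fragment, terminated by `data: [DONE]`. The sketch below assembles text from such lines; the sample lines are hand-written, and in a real client they would come from reading the HTTP response line by line. `iter_stream_text` is a hypothetical helper.

```python
import json
from typing import Iterable, Iterator

def iter_stream_text(lines: Iterable[str]) -> Iterator[str]:
    """Yield content fragments from Chat Completions SSE lines until [DONE]."""
    for raw in lines:
        line = raw.strip()
        if not line.startswith("data:"):
            continue  # blank separators, comments, or other SSE fields
        payload = line[len("data:"):].strip()
        if payload == "[DONE]":
            return
        chunk = json.loads(payload)
        choices = chunk.get("choices") or []  # some chunks may carry no choices
        if choices:
            text = choices[0].get("delta", {}).get("content")
            if text:
                yield text

sample = [
    'data: {"choices": [{"index": 0, "delta": {"role": "assistant", "content": ""}}]}',
    "",
    'data: {"choices": [{"index": 0, "delta": {"content": "Hel"}}]}',
    'data: {"choices": [{"index": 0, "delta": {"content": "lo"}}]}',
    "data: [DONE]",
]
parts = []
for piece in iter_stream_text(sample):
    parts.append(piece)  # a UI would render each piece as it arrives
```

Keeping the accumulated `parts` also gives the application something to show, or to resume from, if the stream is interrupted.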

Error Handling and Resilience

Production integrations need robust error handling, because network issues, rate limiting, and service interruptions directly affect reliability and user experience. A sound strategy combines retries with exponential backoff, graceful degradation, and fallbacks that keep the application working when the API is temporarily unavailable.
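
A minimal sketch of retries with capped exponential backoff and full jitter. It is transport-agnostic: `call` is any zero-argument function, and `TransientError` is a hypothetical exception your client would raise for retryable failures such as HTTP 429 or 5xx responses. The delays and attempt count are illustrative defaults, and `sleep` is injectable so the logic can be tested instantly.

```python
import random
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")

class TransientError(Exception):
    """Hypothetical: raised by your client for retryable failures (e.g. 429, 5xx)."""

def retry_with_backoff(
    call: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 1.0,
    max_delay: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
    rng: Optional[random.Random] = None,
) -> T:
    """Call `call`, retrying TransientError with capped exponential backoff and full jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    rng = rng or random.Random()
    for attempt in range(max_attempts):
        try:
            return call()
        except TransientError:
            if attempt == max_attempts - 1:
                raise  # give up; the caller can fall back or degrade gracefully
            # The delay ceiling doubles each attempt (1s, 2s, 4s, ...) up to max_delay;
            # sleeping a random time below it keeps many clients from retrying in lockstep.
            ceiling = min(max_delay, base_delay * (2 ** attempt))
            sleep(rng.uniform(0, ceiling))
    raise AssertionError("unreachable")
```

If a response includes a `Retry-After` header, honoring that value instead of the computed delay is usually the better choice.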

Managing rate limits means monitoring usage, queuing requests, and shaping traffic to stay within your allocated limits without making the application feel slow. OpenAI enforces limits on both requests and tokens per time window (for example, requests per minute and tokens per minute), so it pays to understand the limits for your account, add client-side rate limiting, batch requests where possible, and avoid unnecessary calls through caching and response reuse.
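
Client-side rate limiting is often implemented as a token bucket. This sketch throttles requests per minute; the limit is a placeholder you would set from your account’s actual limits, and the same class can enforce a tokens-per-minute budget by charging each request its estimated token count as `cost`. `TokenBucket` is a hypothetical, single-threaded class; a multi-threaded client would need a lock around it.

```python
import time
from typing import Callable

class TokenBucket:
    """Allow bursts up to `capacity` units, refilled at `per_minute` units per minute."""

    def __init__(self, per_minute: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic):
        self.rate = per_minute / 60.0  # units per second
        self.capacity = capacity
        self.tokens = capacity
        self.clock = clock
        self.last = clock()

    def _refill(self) -> None:
        now = self.clock()
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now

    def try_acquire(self, cost: float = 1.0) -> bool:
        """Take `cost` units if available; False means wait or queue the request."""
        self._refill()
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False

    def wait_time(self, cost: float = 1.0) -> float:
        """Seconds until `cost` units will be available."""
        self._refill()
        return max(0.0, (cost - self.tokens) / self.rate)
```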

Circuit breakers add another layer of resilience. After a run of failures, the breaker opens and stops sending requests to the failing service, serving cached responses or an alternative implementation instead; after a cool-down it lets a trial request through and closes again if the service has recovered. This prevents cascading failures and keeps the application stable during heavy load or service degradation.
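
A compact circuit breaker sketch, assuming a simple consecutive-failure threshold and a fixed cool-down (both arbitrary values here). It treats any exception from the wrapped call as a failure. While open, it skips the API and calls a fallback, such as returning a cached answer; after the cool-down, one trial call decides whether the circuit closes again. The class and parameter names are illustrative.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")

class CircuitBreaker:
    """Stop calling a failing service for a cool-down period, then probe it once."""

    def __init__(self, failure_threshold: int = 5, reset_after_s: float = 30.0,
                 clock: Callable[[], float] = time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_after_s = reset_after_s
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None  # None means the circuit is closed

    def call(self, fn: Callable[[], T], fallback: Callable[[], T]) -> T:
        if self.opened_at is not None and self.clock() - self.opened_at < self.reset_after_s:
            return fallback()  # open: skip the failing service entirely
        # Closed, or half-open after the cool-down: let this call through.
        try:
            result = fn()
        except Exception:
            self.failures += 1
            if self.opened_at is not None or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()  # trip, or re-trip after a failed probe
            return fallback()
        self.failures = 0
        self.opened_at = None  # success closes the circuit
        return result
```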

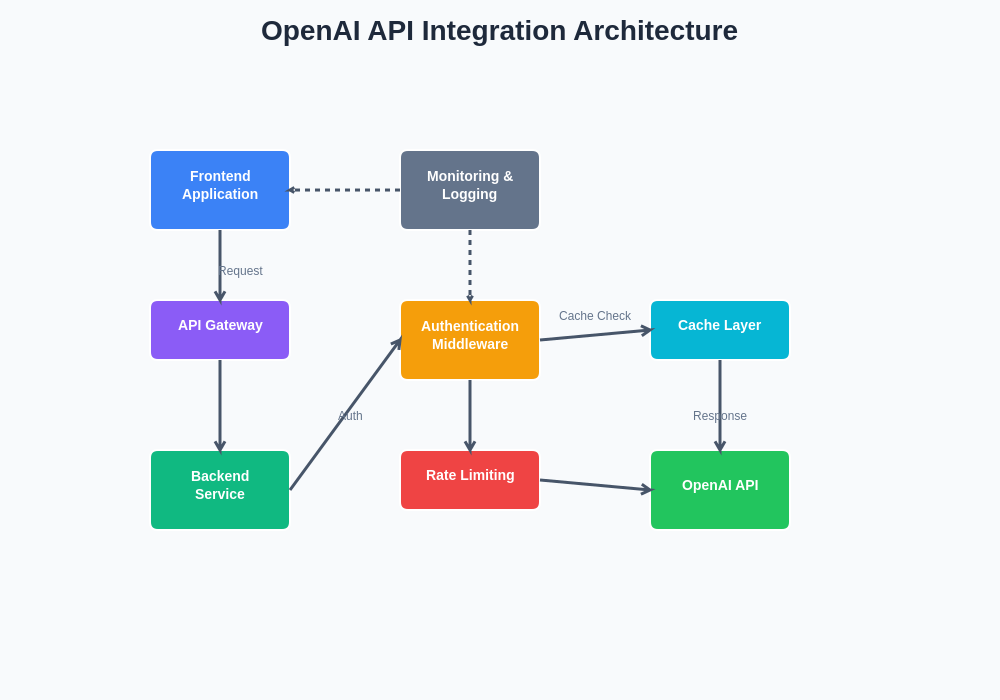

The architectural diagram illustrates the integration patterns that connect applications to OpenAI’s services while maintaining security, reliability, and performance across the request lifecycle.

Security and Authentication Best Practices

Security for OpenAI API integration goes beyond authentication to include data protection, access control, and privacy compliance for both the application and its users. It spans secure credential management and encrypted communication through input validation and sanitization of model output before it is rendered or acted on.
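
Output sanitization matters because model text is untrusted input to whatever renders it. A minimal sketch for a web page, assuming the reply is displayed as plain text inside HTML: escape it with Python’s `html.escape` rather than inserting it raw. The `render_reply` helper and the `ai-reply` markup are hypothetical.

```python
import html

def render_reply(model_text: str) -> str:
    """Escape model output so any HTML or script it contains is displayed, not executed."""
    safe = html.escape(model_text, quote=True)
    # Convert line breaks after escaping, so the only tags present are our own.
    safe = safe.replace("\n", "<br>\n")
    return f'<div class="ai-reply">{safe}</div>'
```

If the application renders Markdown from the model instead, the same principle applies: use a renderer configured to disallow raw HTML, or sanitize its output.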

API key management is the foundation of a secure integration: keep keys in a secure key storage service or environment-specific configuration rather than in source code, rotate them regularly, restrict which people and services can access them, and monitor usage for unusual patterns that could indicate a leaked key.

Data privacy and compliance grow more important as applications handle sensitive user information subject to regulatory requirements. Clear data handling procedures, user consent mechanisms, and data retention policies help an application meet its legal obligations, maintain user trust, and protect sensitive information from unauthorized access or misuse.
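
One data-minimization technique is masking obvious personal identifiers before text leaves your system. This sketch uses simple regular expressions for email addresses and phone-number-like digit runs; the patterns are deliberately rough assumptions that will miss many formats (and may also match things like dates), so treat this as an illustration, not a substitute for a vetted PII detection tool or legal review.

```python
import re
from typing import Dict, Tuple

EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# Rough pattern: optional "+", then at least 8 characters of digits and common separators.
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{6,}\d")

def mask_pii(text: str) -> Tuple[str, Dict[str, int]]:
    """Replace emails and phone-like numbers with placeholders; report how many were masked."""
    text, n_email = EMAIL_RE.subn("[EMAIL]", text)
    text, n_phone = PHONE_RE.subn("[PHONE]", text)
    return text, {"email": n_email, "phone": n_phone}
```

The returned counts can be logged (without the original values) to show how often masking occurs in practice.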

Performance Optimization Techniques

Performance optimization aims to reduce latency, control costs, and improve user experience through request management and response caching, so the application stays responsive and affordable as demand grows.

Caching lets an application reuse earlier responses for repeated requests instead of calling the API again. Effective caching depends on understanding request patterns, choosing cache keys that capture everything that affects the output, and setting invalidation policies that balance speed against freshness. More advanced approaches include matching semantically similar requests and warming the cache based on predicted user behavior.
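
A minimal exact-match cache sketch: the key is a SHA-256 hash of the canonical JSON of everything that affects the output (model, messages, and sampling parameters), and entries expire after a TTL. The helper and class names and the TTL are assumptions; semantic-similarity matching would replace the hash lookup with an embedding search and is not shown.

```python
import hashlib
import json
import time
from typing import Any, Callable, Dict, List, Optional, Tuple

def cache_key(model: str, messages: List[Dict[str, Any]], **params: Any) -> str:
    """Hash everything that influences the response into a stable key."""
    # sort_keys and fixed separators make logically equal requests serialize identically.
    canonical = json.dumps(
        {"model": model, "messages": messages, "params": params},
        sort_keys=True, separators=(",", ":"), ensure_ascii=False,
    )
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

class TTLCache:
    def __init__(self, ttl_s: float, clock: Callable[[], float] = time.monotonic):
        self.ttl_s = ttl_s
        self.clock = clock
        self._store: Dict[str, Tuple[float, Any]] = {}

    def get(self, key: str) -> Optional[Any]:
        entry = self._store.get(key)
        if entry is None:
            return None
        stored_at, value = entry
        if self.clock() - stored_at > self.ttl_s:
            del self._store[key]  # expired: treat as a miss
            return None
        return value

    def put(self, key: str, value: Any) -> None:
        self._store[key] = (self.clock(), value)
```

Including sampling parameters such as `temperature` in the key matters: the same prompt at a different temperature is a different request and should not share a cache entry.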

Batching and parallel processing let an application handle many operations efficiently while respecting rate limits. They require a well-designed request queue, sensible batching logic, and error handling that tolerates partial failures without compromising the rest of the work.
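
A sketch of bounded parallelism with `asyncio`: a semaphore caps how many requests are in flight, and `asyncio.gather(..., return_exceptions=True)` collects failures alongside successes so one bad item does not sink the whole batch. `fake_completion` is a hypothetical stand-in for a real async API call, and the concurrency limit is an arbitrary example.

```python
import asyncio
from typing import Any, Awaitable, Callable, List, Sequence

async def run_bounded(
    items: Sequence[str],
    worker: Callable[[str], Awaitable[Any]],
    max_concurrency: int = 4,
) -> List[Any]:
    """Run worker(item) for every item with at most max_concurrency in flight.

    Results keep the input order; failed items appear as exception objects.
    """
    semaphore = asyncio.Semaphore(max_concurrency)

    async def guarded(item: str) -> Any:
        async with semaphore:
            return await worker(item)

    return await asyncio.gather(*(guarded(i) for i in items), return_exceptions=True)

async def fake_completion(prompt: str) -> str:
    # Hypothetical stand-in for an async API call.
    await asyncio.sleep(0.01)
    if "fail" in prompt:
        raise RuntimeError("simulated API error")
    return prompt.upper()

results = asyncio.run(run_bounded(["a", "fail", "c"], fake_completion, max_concurrency=2))
succeeded = [r for r in results if not isinstance(r, Exception)]
failed = [r for r in results if isinstance(r, Exception)]
```

Failed items can then be requeued through the application’s retry logic while the successful results are used immediately.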

Real-World Implementation Examples

Practical examples show how these principles apply to real scenarios, from simple chatbots to document analysis systems and content generation platforms, and illustrate best practices for common integration patterns.

Customer support automation is a common and valuable application: a GPT model interprets a customer’s inquiry, draws on relevant knowledge base content, and drafts a helpful reply. Doing this well requires careful prompt engineering, integration with existing support systems, and escalation mechanisms that hand complex issues to human agents.
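
Escalation rules are often simple, explicit code that runs around the model call. The sketch below is one hypothetical policy: hand off to a human when the customer asks for one, when the topic is sensitive, or when the drafted reply is empty or signals uncertainty. The keyword lists and the `should_escalate` helper are assumptions; plain substring matching is crude (for example, "agent" also matches "management") and would need tuning against real support data.

```python
from dataclasses import dataclass
from typing import Optional

HUMAN_REQUEST = ("human", "agent", "representative", "speak to someone")
SENSITIVE_TOPICS = ("refund", "legal", "lawsuit", "chargeback", "cancel my account")
UNCERTAIN_MARKERS = ("i'm not sure", "i don't know", "cannot help")

@dataclass
class Decision:
    escalate: bool
    reason: Optional[str] = None

def should_escalate(customer_message: str, draft_reply: str) -> Decision:
    """Decide whether a drafted AI reply should go to a human agent instead."""
    msg = customer_message.lower()
    reply = draft_reply.lower().strip()
    if any(phrase in msg for phrase in HUMAN_REQUEST):
        return Decision(True, "customer asked for a human")
    if any(topic in msg for topic in SENSITIVE_TOPICS):
        return Decision(True, "sensitive topic")
    if not reply or any(marker in reply for marker in UNCERTAIN_MARKERS):
        return Decision(True, "model reply empty or uncertain")
    return Decision(False)
```

Recording the `reason` with each handoff makes it possible to review later which rules fire too often or too rarely.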

Content generation and personalization systems use the API to tailor content to individual preferences, behavior, and context. They need careful prompt engineering, user modeling, and content workflows for generating, reviewing, and publishing personalized content at scale while preserving quality and brand consistency.

Integration with Popular Frameworks

Most applications are built on established frameworks, and OpenAI API integration patterns have been adapted for popular ones including React, Vue.js, Angular, Django, Flask, and Express.js, each with its own advantages and considerations for AI-powered features.

Frontend integration involves managing asynchronous calls, loading states, and error boundaries, and designing interfaces that tolerate variable response times and content lengths. Because the API key must never be shipped to the browser, frontend code typically calls the application’s own backend, which in turn calls OpenAI. The goal is AI features that feel natural and responsive despite these technical constraints.

Backend integration focuses on orchestrating API calls, processing data, enforcing security, and scaling to high request volumes without losing performance or reliability. This includes appropriate middleware, efficient data pipelines, and monitoring that shows system performance and usage patterns.

The workflow diagram demonstrates the complete integration process from initial request processing through response delivery, highlighting key decision points and optimization opportunities throughout the integration pipeline.

Cost Management and Monitoring

Controlling costs requires monitoring, usage optimization, and budget controls that prevent unexpected bills without degrading the application. Usage is billed per token, with input and output tokens priced separately and rates varying by model, so understanding the cost structure of each model and request type lets developers make informed decisions about implementation and resource allocation.

Usage monitoring and analytics reveal consumption patterns, letting developers spot optimization opportunities, detect unusual spikes, and cut costs without hurting quality. In practice this means detailed logging, usage dashboards, and automated alerts when usage or spending changes significantly.

Budget controls include usage limits, request routing, and dynamic model selection based on request complexity and remaining budget, so the application keeps working within its limits by adapting its behavior to current usage.
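
A sketch of budget-aware model selection. Cost is computed from the response’s `usage` token counts using per-million-token prices supplied by the caller; the prices and model names in the usage example are placeholders, not OpenAI’s actual rates, which vary by model and change over time. `BudgetGuard` is a hypothetical class that records spend, routes to a cheaper model once spending passes a threshold, and refuses requests when the budget is exhausted.

```python
from dataclasses import dataclass
from typing import Dict

@dataclass
class ModelPrice:
    input_per_million: float   # currency units per 1M prompt tokens (placeholder)
    output_per_million: float  # currency units per 1M completion tokens (placeholder)

class BudgetGuard:
    def __init__(self, budget: float, prices: Dict[str, ModelPrice],
                 primary: str, fallback: str, downgrade_at: float = 0.8):
        self.budget = budget
        self.prices = prices
        self.primary = primary
        self.fallback = fallback
        self.downgrade_at = downgrade_at  # fraction of budget after which to downgrade
        self.spent = 0.0

    def cost(self, model: str, prompt_tokens: int, completion_tokens: int) -> float:
        p = self.prices[model]
        return (prompt_tokens * p.input_per_million
                + completion_tokens * p.output_per_million) / 1_000_000

    def record(self, model: str, usage: Dict[str, int]) -> float:
        """Add the cost of one response's `usage` block to the running total."""
        c = self.cost(model, usage.get("prompt_tokens", 0), usage.get("completion_tokens", 0))
        self.spent += c
        return c

    def choose_model(self) -> str:
        if self.spent >= self.budget:
            raise RuntimeError("budget exhausted; rejecting request")
        if self.spent >= self.downgrade_at * self.budget:
            return self.fallback
        return self.primary

guard = BudgetGuard(10.0, {"big-model": ModelPrice(5.0, 15.0),
                           "small-model": ModelPrice(0.5, 1.5)},
                    primary="big-model", fallback="small-model")
```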

Testing and Quality Assurance

Testing OpenAI API integrations must account for the non-deterministic nature of model output while still guaranteeing consistent application behavior. Rather than asserting exact response text, tests should check response quality, relevance, and adherence to expected patterns.
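
A sketch of testing without brittle exact-match assertions: the tests swap in a fake completion function that returns a canned reply, so they run offline and deterministically, and they check structural properties rather than wording. Here a hypothetical `summarize` function must return valid JSON with the expected keys. All names are illustrative; the same checks could also run against live responses in a separate, slower suite.

```python
import json
import unittest
from typing import Callable, Dict, List

def summarize(text: str, complete: Callable[[List[Dict[str, str]]], str]) -> Dict[str, object]:
    """Hypothetical app function: ask the model for JSON and validate its shape."""
    raw = complete([
        {"role": "system", "content": 'Reply with JSON: {"summary": str, "keywords": [str]}'},
        {"role": "user", "content": text},
    ])
    data = json.loads(raw)  # non-JSON raises JSONDecodeError, a ValueError subclass
    if not isinstance(data.get("summary"), str) or not isinstance(data.get("keywords"), list):
        raise ValueError("model reply did not match the expected schema")
    return data

class SummarizeTests(unittest.TestCase):
    def test_structure_not_exact_wording(self):
        fake = lambda messages: '{"summary": "Short text.", "keywords": ["a", "b"]}'
        result = summarize("Some long input", fake)
        self.assertIsInstance(result["summary"], str)
        self.assertLessEqual(len(result["summary"]), 200)
        self.assertTrue(all(isinstance(k, str) for k in result["keywords"]))

    def test_malformed_reply_is_rejected(self):
        fake = lambda messages: '{"summary": 42}'
        with self.assertRaises(ValueError):
            summarize("text", fake)
```

These tests run with the standard `python -m unittest` runner.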

Integration testing validates API communication, error handling, and data flow, including simulated failures and edge cases such as network latency, rate limiting, and service outages, to confirm that the application handles each failure mode gracefully without compromising data integrity or user experience.

Quality assurance for AI features requires evaluation criteria, automated quality checks, and review processes that assess whether generated content is appropriate and accurate. This includes content moderation, feedback loops for continuous improvement, and procedures for handling edge cases and unexpected outputs.

Deployment and Production Considerations

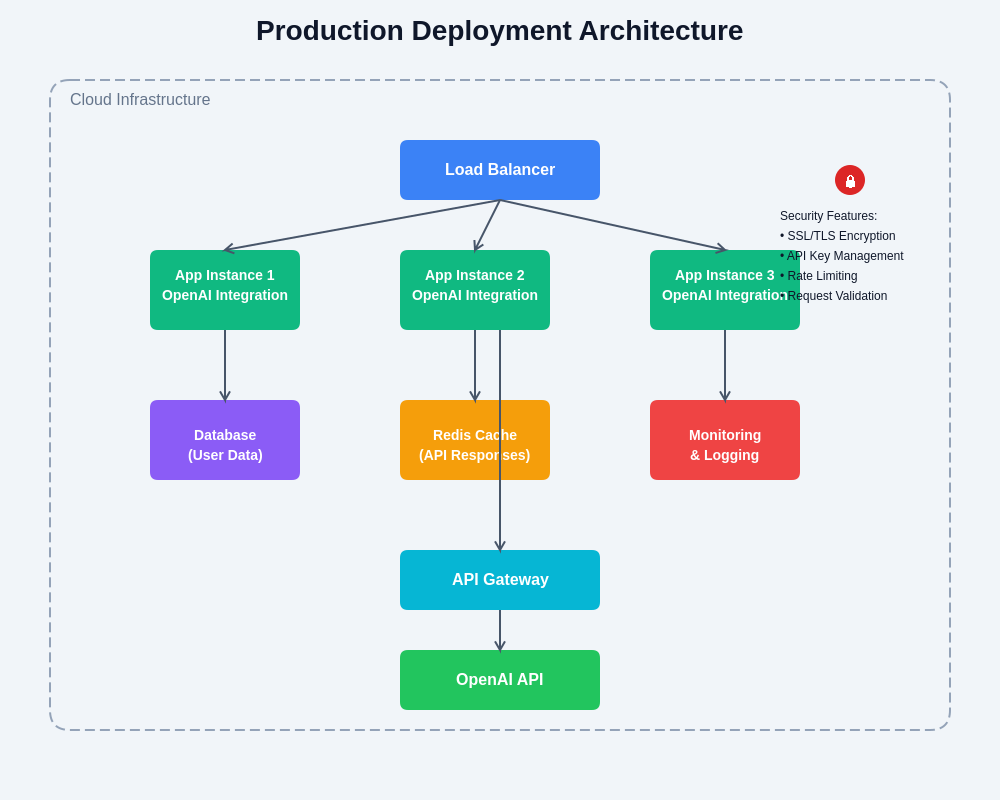

Deploying OpenAI API integrations to production requires planning for scalability, reliability, and maintenance so the system stays stable under varying load. That means the right infrastructure, monitoring, and operational procedures to support continuous operation and rapid response to incidents and changing requirements.

Scalability planning means designing for growing API usage, user demand, and data processing while keeping performance and cost in check, using load balancing, auto-scaling, and resource management that adapt to demand without manual intervention.

Monitoring and alerting provide visibility into production, letting teams detect and respond to issues quickly and keep availability high. They should track API performance, application health, user experience metrics, and cost trends, and surface actionable signals that support proactive problem resolution.

The production architecture diagram illustrates the infrastructure required for scalable, reliable OpenAI API integration, including monitoring, security, and failover mechanisms that keep service delivery consistent.

Future Developments and Trends

OpenAI API integration continues to evolve rapidly as new models, features, and integration patterns appear. Keeping up requires ongoing learning and experimentation, adopting new capabilities while preserving backward compatibility and system stability.

Emerging directions include multimodal capabilities, improved function calling, more customization options, and more sophisticated fine-tuning, all of which let developers build increasingly specialized applications. Understanding these trends helps with decisions about technology adoption and how system architecture should evolve.

Future integrations will likely involve tighter framework integration, better tooling and debugging, and orchestration systems that manage complex AI workflows across multiple services and platforms. The best preparation is a flexible, extensible architecture that can adopt new capabilities without sacrificing reliability or performance.

Disclaimer

This article is for informational purposes only and does not constitute professional advice. The views expressed are based on current understanding of OpenAI API capabilities and integration practices. Readers should conduct their own research and testing when implementing AI integrations in production environments. API capabilities, pricing, and terms of service may change, and developers should refer to official OpenAI documentation for the most current information. The effectiveness of integration strategies may vary depending on specific use cases, technical requirements, and business objectives.