Academic publishing in artificial intelligence and machine learning moves faster than in most areas of scientific research. Peer review remains the cornerstone of scientific integrity, the step at which new results in AI are evaluated and validated before they reach the wider research community. Publishing machine learning research means working within editorial policies and reviewer expectations, and handling the particular challenges that come from the field's interdisciplinary nature.

Following current research trends helps authors understand where machine learning publications are heading and which emerging areas are drawing the community's attention. Because AI research combines theoretical advances with practical applications, researchers can contribute both to academic knowledge and to real-world solutions.

The Modern Landscape of AI Research Publishing

Publishing machine learning research differs from publishing in many traditional academic disciplines because the technology advances quickly and commercial interest in AI is substantial. Major conferences such as NeurIPS, ICML, ICLR, and AAAI have become the primary venues for disseminating cutting-edge research, often surpassing traditional journals in impact and immediacy. Their peer review can be more competitive than that of many established journals, with acceptance rates at top-tier venues commonly around twenty to thirty percent and sometimes lower.

The unique characteristics of AI research publishing include the prominence of preprint servers like arXiv, where researchers share preliminary findings before formal peer review, and the increasing importance of reproducibility and open-source code availability. The community has embraced rapid dissemination of ideas while maintaining high standards for experimental rigor and theoretical soundness. This balance between speed and quality creates both opportunities and challenges for researchers seeking to publish their work in prestigious venues.

Understanding the Peer Review Process in Machine Learning

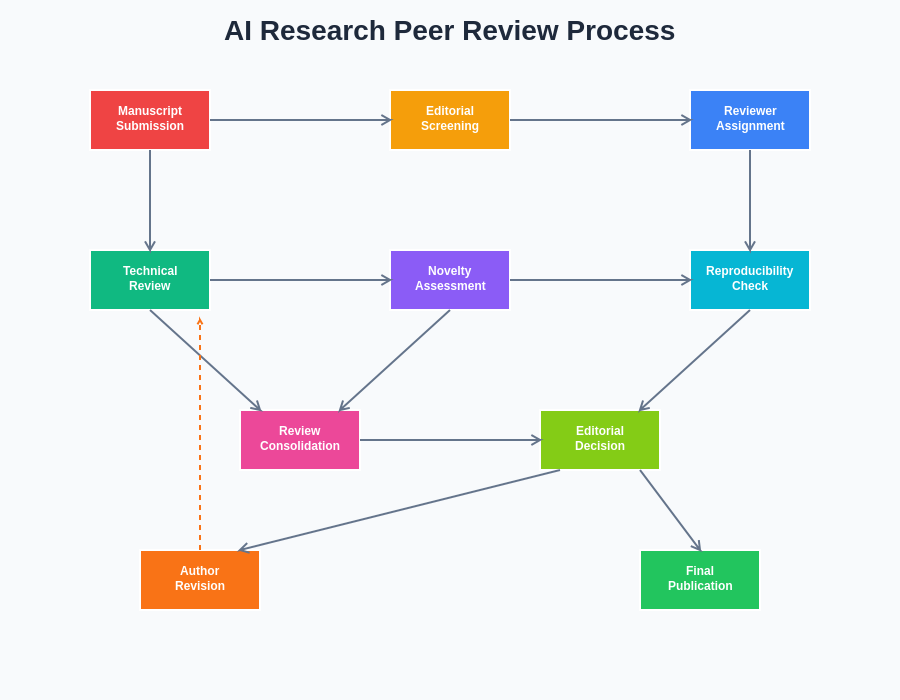

The peer review process for machine learning research involves multiple stages of evaluation that assess both technical merit and broader significance to the field. Initial screening by program committees or editorial boards ensures that submissions meet basic requirements for scope, formatting, and preliminary quality standards. Following this initial review, papers are assigned to area chairs or senior program committee members who coordinate the detailed review process and manage reviewer assignments based on expertise and potential conflicts of interest.

The review process typically involves three to four independent reviewers who evaluate submissions across multiple dimensions including novelty, technical soundness, experimental validation, clarity of presentation, and significance of contributions. Reviewers in the machine learning community often possess deep expertise in specific subfields, enabling them to provide detailed technical feedback on mathematical formulations, algorithmic innovations, and experimental methodologies. The interdisciplinary nature of AI research means that reviewers must also consider the broader implications of work that may span computer science, statistics, cognitive science, and domain-specific applications.

Taken together, these stages give each submission several layers of scrutiny from initial submission through final publication. The author response period offered by many conferences also gives authors a chance to answer reviewer concerns and improve the manuscript before a decision is made.

AI tools can assist with manuscript preparation, literature review, and technical writing. Used carefully, this kind of assistance can improve the clarity of academic writing, provided the work keeps the rigor expected in peer-reviewed publications.

Preparing Manuscripts for AI Research Venues

Successful manuscript preparation for machine learning publications requires careful attention to both technical content and presentation quality. AI research papers typically follow established conventions: a clear problem formulation, a comprehensive related work section, a detailed description of the methodology, extensive experimental validation, and a thoughtful discussion of limitations and future directions. Reviewers generally expect rigorous mathematical formulations, comprehensive ablation studies, and statistical analysis of experimental results.
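As one illustration of the statistical analysis and ablation reporting mentioned above, the sketch below summarizes repeated runs as a mean and sample standard deviation rather than a single best score. The function names (`summarize_runs`, `ablation_table`), the variant names, and the accuracy values are all hypothetical placeholders invented for this example. It is a minimal sketch, not a reporting format required by any venue.

```python
import math
import statistics


def summarize_runs(scores):
    """Return run count, mean, sample standard deviation, and standard error."""
    if len(scores) < 2:
        raise ValueError("need at least two runs to estimate variability")
    mean = statistics.mean(scores)
    std = statistics.stdev(scores)  # sample standard deviation (n - 1 denominator)
    sem = std / math.sqrt(len(scores))
    return {"n": len(scores), "mean": mean, "std": std, "sem": sem}


def ablation_table(results):
    """Format one line per variant: name, mean ± std, and number of runs."""
    lines = []
    for name, scores in results.items():
        s = summarize_runs(scores)
        lines.append(f"{name}: {s['mean']:.3f} ± {s['std']:.3f} (n={s['n']})")
    return lines


# Placeholder accuracies over five random seeds; not real experimental results.
results = {
    "full model": [0.81, 0.83, 0.82, 0.80, 0.84],
    "without component A": [0.78, 0.79, 0.77, 0.80, 0.76],
}
for line in ablation_table(results):
    print(line)
```

Reporting the number of runs next to the spread lets a reviewer judge whether a gap between two variants is larger than the seed-to-seed noise.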

The presentation of experimental results in AI research requires particular attention to reproducibility standards that have become increasingly important in the field. This includes providing sufficient implementation details, reporting hyperparameter choices, describing computational requirements, and making code and datasets available when possible. Many venues now require reproducibility statements or checklists that ensure authors have considered the factors necessary for others to replicate their work. The emphasis on reproducibility reflects the field’s commitment to building reliable knowledge that can serve as a foundation for future research.
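The reporting practices described above (implementation details, hyperparameter choices, computational requirements) can be partly automated by saving a small manifest alongside each run. The sketch below is one possible approach using only the Python standard library. The names `build_run_manifest` and `seeded_rng`, the field names, and the example settings are assumptions made for illustration, not a format required by any venue's reproducibility checklist.

```python
import json
import platform
import random
import sys


def build_run_manifest(hyperparameters, seed, notes=""):
    """Collect details another group would need to rerun an experiment."""
    return {
        "seed": seed,
        # Sort keys so manifests from different runs are easy to compare.
        "hyperparameters": dict(sorted(hyperparameters.items())),
        "python_version": sys.version.split()[0],
        "platform": platform.platform(),
        "notes": notes,
    }


def seeded_rng(seed):
    """Return a dedicated generator so runs do not share global random state."""
    return random.Random(seed)


# Hypothetical settings, for illustration only.
manifest = build_run_manifest(
    {"learning_rate": 3e-4, "batch_size": 64, "epochs": 10},
    seed=0,
    notes="hardware and runtime details filled in by hand",
)
print(json.dumps(manifest, indent=2))
```

A real project would also record library versions and the code revision. Those are left out here because how to capture them depends on the tooling in use.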

Navigating Conference vs Journal Publication Strategies

The decision between conference and journal publication in machine learning requires understanding the distinct advantages and characteristics of each venue type. Conferences offer rapid feedback cycles, immediate visibility within the research community, and opportunities for direct interaction with peers through presentations and discussions. The timeline from submission to publication in conferences is typically shorter than journals, making them attractive for researchers working in rapidly evolving areas where timeliness is crucial for impact and relevance.

Journals in AI and machine learning, while potentially having longer review cycles, often provide more comprehensive feedback and allow for more extensive experimental validation and theoretical development. Journal papers typically undergo multiple rounds of revision that can significantly improve the quality and completeness of the final publication. The choice between venues often depends on the nature of the research contribution, with incremental advances potentially more suitable for conferences and comprehensive theoretical or empirical studies finding better homes in journals.

Understanding the landscape of AI research publication venues enables strategic decision-making about where to submit research contributions. The varying acceptance rates, review timelines, and prestige levels across different venue types provide multiple pathways for researchers to disseminate their work effectively.

The Role of Reproducibility and Open Science

Reproducibility has emerged as a central concern in machine learning research, fundamentally changing how papers are evaluated during peer review. The complexity of modern AI systems, with their dependence on large datasets, complex architectures, and extensive hyperparameter tuning, creates significant challenges for reproduction that reviewers and authors must address. The community has responded by developing standards for reporting experimental details, sharing code implementations, and providing access to datasets and trained models when possible.

The open science movement in AI research extends beyond reproducibility to encompass broader principles of transparency and accessibility. Many researchers now share not only their final results but also intermediate findings, negative results, and detailed experimental logs that provide insights into the research process itself. This transparency facilitates more informed peer review by allowing reviewers to assess not just the final outcomes but the rigor of the experimental process that led to those results.

Meeting reproducibility standards has become an important part of publishing in top-tier AI venues. Careful documentation and transparency make a submission easier for reviewers to assess and contribute to the overall reliability of machine learning research.

Thorough literature review, fact-checking, and background research strengthen the foundation of a machine learning manuscript and tend to improve its chances in peer review.

Addressing Reviewer Feedback and Revision Strategies

The revision process following peer review represents a critical phase in machine learning research publication that requires strategic thinking about how to address reviewer concerns while maintaining the integrity of the original research contribution. Reviewer feedback in AI research often focuses on technical details, experimental design choices, and the positioning of work within the broader research landscape. Successful revision strategies involve systematically addressing each reviewer comment while providing clear explanations of changes made and rationales for decisions that differ from reviewer suggestions.

The technical nature of machine learning research means that reviewers may request additional experiments, different baseline comparisons, or alternative evaluation metrics that can significantly impact the scope of required revisions. Authors must balance the desire to satisfy reviewer requests with practical constraints around computational resources, dataset availability, and project timelines. Effective communication with editors about the feasibility and appropriateness of requested changes can facilitate productive revision processes that improve paper quality without creating unreasonable burdens.
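One way to address each reviewer comment systematically is to keep the comments, responses, and resulting manuscript changes in a single structure, so that nothing goes unanswered before resubmission. The sketch below is a hypothetical bookkeeping aid. The `ReviewerComment` class, its fields, the letter layout, and the example comments are all invented for illustration and do not follow any venue's response format.

```python
from dataclasses import dataclass


@dataclass
class ReviewerComment:
    reviewer: str
    comment: str
    response: str = ""  # the authors' reply, empty until written
    change: str = ""    # where the manuscript changed, or why it did not


def unanswered(comments):
    """Return the comments that still have no response."""
    return [c for c in comments if not c.response.strip()]


def render_response_letter(comments):
    """Produce a plain-text, point-by-point response."""
    blocks = []
    for i, c in enumerate(comments, start=1):
        blocks.append(
            f"Comment {i} ({c.reviewer}): {c.comment}\n"
            f"Response: {c.response or '[pending]'}\n"
            f"Change: {c.change or '[none recorded]'}"
        )
    return "\n\n".join(blocks)


# Invented example comments, for illustration only.
comments = [
    ReviewerComment(
        "Reviewer 1",
        "Compare against a stronger baseline.",
        response="We added the requested comparison.",
        change="New row in the main results table.",
    ),
    ReviewerComment("Reviewer 2", "Clarify the evaluation metric."),
]
print(render_response_letter(comments))
print(f"{len(unanswered(comments))} comment(s) still need a response")
```

The `change` field can also hold the rationale when the authors decline a request, for example an experiment that is infeasible given the available compute.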

Building Research Networks and Collaboration

The collaborative nature of modern AI research extends the peer review process beyond formal manuscript evaluation to include ongoing dialogue within research communities. Building relationships with other researchers through conference attendance, workshop participation, and collaborative projects creates networks that can provide informal feedback before formal submission and help authors understand the expectations and preferences of different research communities.

The interdisciplinary nature of AI research creates opportunities for collaborations that span traditional academic boundaries, bringing together expertise from computer science, mathematics, psychology, neuroscience, and domain-specific fields. These collaborations can strengthen research contributions by providing diverse perspectives on problem formulation, methodology selection, and result interpretation. The peer review process benefits from this diversity by ensuring that work is evaluated from multiple disciplinary viewpoints.

Managing Publication Ethics and Integrity

Ethical considerations in AI research publishing extend beyond traditional academic integrity concerns to include questions about the societal implications of research contributions, the responsible disclosure of potentially harmful applications, and the fair representation of limitations and potential misuse of developed technologies. Peer reviewers increasingly consider these ethical dimensions when evaluating submissions, particularly for research that could have significant real-world applications or implications for privacy, fairness, or safety.

The rapid pace of AI development and the substantial commercial interest in research outcomes create additional ethical complexities around intellectual property, conflict of interest disclosure, and the balance between open science principles and competitive advantages. Authors and reviewers must navigate these considerations while maintaining the scientific integrity that forms the foundation of peer review processes.

Understanding Different Research Contribution Types

Machine learning research encompasses diverse contribution types that require different approaches to manuscript preparation and peer review evaluation. Theoretical contributions focus on mathematical foundations, algorithmic innovations, or formal analysis of existing methods, requiring rigorous proof techniques and clear exposition of theoretical insights. Empirical contributions emphasize experimental validation, comprehensive evaluation across datasets or domains, and practical insights derived from systematic investigation of research questions.

System contributions in AI research involve the development of new tools, frameworks, or platforms that enable other researchers to advance their work more effectively. These contributions require different evaluation criteria that focus on usability, performance, and community impact rather than traditional metrics of novelty or theoretical depth. Survey and position papers play important roles in synthesizing existing knowledge and identifying promising future directions, requiring comprehensive literature coverage and insightful analysis of research trends.

Leveraging Preprint Servers and Early Dissemination

Preprint servers, particularly arXiv, have changed the dynamics of peer review in AI research, and authors need to understand how. Posting a preprint allows rapid dissemination of findings and invites community feedback before formal peer review, which can improve the quality of the final submission. However, the timing of a preprint relative to conference or journal submission requires care: venue policies differ, and some venues with double-blind review place limits on publicizing a preprint while the paper is under review.

The interaction between preprint publication and formal peer review creates opportunities for iterative improvement based on community feedback, but also raises questions about priority, attribution, and the relationship between informal and formal scientific communication. Understanding these dynamics helps researchers make strategic decisions about when and how to share preliminary findings while maximizing the potential for successful formal publication.

Future Directions in AI Research Publishing

The evolution of AI research publishing continues to be shaped by technological advances, changing community norms, and the increasing intersection between academic research and commercial development. Emerging trends include the integration of interactive elements in publications, such as executable code and live demonstrations, that provide reviewers and readers with more comprehensive access to research contributions. The development of new peer review models, including open review processes and post-publication review mechanisms, reflects ongoing efforts to improve the efficiency and effectiveness of scientific evaluation.

The growing importance of interdisciplinary collaboration in AI research is likely to continue reshaping publication norms and review processes, requiring venues to develop expertise across broader ranges of topics and methodologies. The integration of AI tools into the research process itself, from literature review to experimental design to manuscript preparation, creates new opportunities for enhancing research quality while raising questions about the appropriate role of automated assistance in scientific discovery and communication.

The future landscape of AI research publishing will likely be characterized by continued emphasis on reproducibility, increased integration of diverse evaluation metrics that capture both technical merit and broader impact, and evolving standards for ethical consideration of research implications. These developments will require ongoing adaptation by researchers, reviewers, and publication venues to maintain the integrity and effectiveness of peer review processes while supporting the rapid advancement of artificial intelligence research.

Disclaimer

This article is for informational purposes only and does not constitute professional advice. The views expressed are based on current understanding of academic publishing practices in AI and machine learning research. Readers should consult specific publication guidelines and editorial policies when preparing manuscripts for submission. Publication practices may vary significantly across different venues, institutions, and research areas within the broader field of artificial intelligence.