The landscape of artificial intelligence research continues to evolve at a rapid pace, presenting doctoral candidates with a wide array of research opportunities that could reshape our understanding of intelligence, computation, and human-machine interaction. With major advances arriving in machine learning, neural computation, and cognitive systems, the choice of a PhD thesis topic has never been more consequential, or more exciting, for researchers seeking to contribute meaningfully to this fast-growing field.

The research trends and breakthroughs surveyed here are shaping the future of artificial intelligence and offer a starting point for doctoral research. The convergence of theoretical foundations with practical applications creates fertile ground for innovative research that not only advances scientific understanding but also addresses real-world challenges across diverse domains, from healthcare and education to climate science and social justice.

The contemporary AI research ecosystem presents unique opportunities for interdisciplinary collaboration, as the traditional boundaries between computer science, neuroscience, psychology, philosophy, and domain-specific fields become increasingly blurred. This interdisciplinary character enables doctoral candidates to explore novel intersections that can yield transformative insights and methodological innovations, and potentially establish new paradigms for the field.

Neural Architecture Search and Automated Machine Learning

Neural architecture search is one of the most promising and computationally demanding areas of AI research. Its goal is to discover high-performing neural network architectures automatically, with far less reliance on manual design, although the search space itself is still usually defined by human experts. The field combines principles from evolutionary computation, reinforcement learning, and optimization theory, and searched architectures have matched or exceeded human-engineered designs on several benchmarks in both accuracy and efficiency.

Current research in this area focuses on developing more efficient search strategies that can explore the vast space of possible architectures while minimizing computational overhead. The challenge lies not only in finding architectures that perform well on specific tasks but also in discovering designs that generalize across different domains, exhibit robust performance under various conditions, and can be efficiently deployed on resource-constrained devices such as mobile phones and embedded systems.

The implications of advances in neural architecture search extend far beyond academic curiosity, as automated design of neural networks could democratize access to state-of-the-art AI capabilities for researchers and practitioners who lack extensive expertise in deep learning architecture design. Furthermore, the development of architecture search methods that can adapt to specific hardware constraints or optimize for particular metrics such as energy efficiency or inference speed represents a crucial step toward making AI systems more practical and environmentally sustainable.
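The simplest search strategy, random search, already shows the core loop of proposing a candidate, checking it against a hardware budget, and scoring it. The sketch below is a minimal illustration under stated assumptions: the search space, the `param_count` cost model, and the `proxy_score` function are hypothetical placeholders, whereas in real NAS the score would come from (partially) training each candidate or from a learned performance predictor.

```python
import random

# Hypothetical toy search space: number of layers, width per layer, kernel size.
SEARCH_SPACE = {
    "depth": [2, 4, 8, 16],
    "width": [32, 64, 128, 256],
    "kernel": [3, 5, 7],
}

def param_count(arch):
    # Rough weight count for a stack of equal-width conv layers (biases ignored);
    # a stand-in for a real latency or memory cost model.
    return arch["depth"] * arch["width"] * arch["width"] * arch["kernel"] ** 2

def proxy_score(arch):
    # Hypothetical accuracy proxy with diminishing returns in depth and width.
    # Replace with validation accuracy or a learned predictor in practice.
    return 1.0 - 1.0 / (1.0 + 0.1 * arch["depth"] + arch["width"] / 256)

def random_search(n_trials, max_params, seed=0):
    rng = random.Random(seed)
    best_arch, best_score = None, float("-inf")
    for _ in range(n_trials):
        arch = {k: rng.choice(v) for k, v in SEARCH_SPACE.items()}
        if param_count(arch) > max_params:
            continue  # reject candidates that violate the hardware budget
        score = proxy_score(arch)
        if score > best_score:
            best_arch, best_score = arch, score
    return best_arch, best_score

best, score = random_search(n_trials=200, max_params=500_000)
print(best, round(score, 3))
```

Random search is often reported as a strong baseline in the NAS literature; evolutionary or reinforcement-learning controllers change only how the next `arch` is proposed, while the budget check is where hardware-aware constraints such as latency or energy would plug in.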

Quantum Machine Learning and Hybrid Computing Systems

The intersection of quantum computing and machine learning is a potentially revolutionary research frontier that may, for certain problems, unlock capabilities beyond the reach of classical systems. Quantum machine learning explores how quantum mechanical phenomena such as superposition, entanglement, and quantum interference might be harnessed to accelerate learning algorithms, solve some optimization problems more efficiently, and detect patterns in data that are hard for classical computers; for most practical tasks, such an advantage has not yet been demonstrated.

Research in this area encompasses the development of quantum algorithms specifically designed for machine learning tasks, the creation of hybrid classical-quantum systems that leverage the strengths of both computational paradigms, and the more speculative question of whether quantum effects play any role in biological neural networks. The theoretical foundations of quantum machine learning require a deep understanding of both quantum mechanics and statistical learning theory, making it an intellectually challenging but potentially transformative research area.

The practical implementation of quantum machine learning algorithms requires addressing significant technical challenges including quantum error correction, decoherence mitigation, and the development of quantum programming frameworks that can effectively bridge the gap between theoretical algorithms and physical quantum hardware.
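To make the hybrid classical-quantum idea concrete, here is a minimal sketch of a variational loop in which the "quantum" part is a single-qubit circuit simulated classically: apply RY(θ) to |0⟩ and measure ⟨Z⟩ = cos θ. Gradients come from the parameter-shift rule, which on hardware needs only two extra circuit evaluations per parameter, and a classical optimizer updates θ. The target value, learning rate, and step count are arbitrary illustrative choices, and no quantum SDK is used.

```python
import math

def expectation_z(theta):
    # Classical simulation of <Z> after RY(theta)|0>:
    # the state is cos(theta/2)|0> + sin(theta/2)|1>, so <Z> = cos(theta).
    amp0, amp1 = math.cos(theta / 2), math.sin(theta / 2)
    return amp0 ** 2 - amp1 ** 2

def parameter_shift_grad(theta):
    # Parameter-shift rule for rotation gates: an exact gradient from two
    # evaluations at theta +/- pi/2, with no finite-difference step size.
    shift = math.pi / 2
    return 0.5 * (expectation_z(theta + shift) - expectation_z(theta - shift))

def train(target, theta=0.1, lr=0.2, steps=300):
    # Classical optimizer: gradient descent on the squared error (<Z> - target)^2.
    for _ in range(steps):
        err = expectation_z(theta) - target
        theta -= lr * 2 * err * parameter_shift_grad(theta)
    return theta

theta = train(target=-0.5)
print(round(expectation_z(theta), 4))
```

On real hardware, `expectation_z` would be estimated from repeated measurements, so both the loss and the gradient become noisy; that shot noise, together with decoherence, is one reason practical training is harder than this noiseless simulation suggests.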

Explainable AI and Interpretability Research

The growing deployment of AI systems in critical applications such as healthcare, criminal justice, and financial services has created an urgent need for artificial intelligence systems that can provide clear, understandable explanations for their decisions and recommendations. Explainable AI research focuses on developing methods and frameworks that make complex machine learning models more transparent, interpretable, and trustworthy for human users and decision-makers.

This research area encompasses multiple dimensions including local explanations that clarify individual predictions, global explanations that reveal overall model behavior patterns, counterfactual explanations that describe how inputs would need to change to produce different outcomes, and causal explanations that identify the underlying causal relationships learned by models. The challenge lies in developing explanation methods that are simultaneously accurate, comprehensive, and accessible to non-technical users who must rely on AI systems for critical decisions.
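For a linear classifier, the counterfactual question has a closed-form answer, which makes it a useful sanity check before moving to nonlinear models. The minimal sketch below assumes a logistic-regression-style score w·x + b with illustrative weights: the smallest L2 change that reaches the decision boundary is a step along w, and a small margin pushes the point just past it. Practical counterfactual methods add constraints this sketch omits, such as immutable features and plausibility of the changed input.

```python
def score(w, b, x):
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def linear_counterfactual(w, b, x, margin=1e-3):
    # Move x along w so that the score lands at +/- margin on the other side
    # of the hyperplane w.x + b = 0; this is the minimum-L2-norm change.
    s = score(w, b, x)
    norm_sq = sum(wi * wi for wi in w)
    target = -margin if s > 0 else margin
    step = (target - s) / norm_sq
    return [xi + step * wi for wi, xi in zip(w, x)]

# Illustrative loan-style example: features = (income, debt), both scaled.
w, b = [2.0, -3.0], -1.0
x = [1.0, 0.5]  # score = 2.0 - 1.5 - 1.0 = -0.5, so the application is rejected
x_cf = linear_counterfactual(w, b, x)
print(x_cf, score(w, b, x_cf))
```

The result reads as an explanation of the form "had income been slightly higher and debt slightly lower, the decision would have flipped".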

Current research investigates techniques such as attention visualization, gradient-based attribution methods, surrogate model approaches, and rule extraction algorithms that can provide insights into how neural networks process information and arrive at specific conclusions. The development of standardized metrics for evaluating explanation quality and the creation of user-centered design principles for explanation interfaces represent crucial areas for advancing the practical utility of explainable AI systems.
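Gradient-based attribution can be illustrated without a deep-learning framework. The sketch below computes gradient × input, one of the simplest attribution rules, using central finite differences so that it works for any black-box scalar function; the toy model and its weights are hypothetical. For a linear logit, gradient × input reduces to w_i·x_i, which gives an easy correctness check.

```python
def logistic_model(x, w=(0.8, -1.2, 0.3), b=0.1):
    # Hypothetical toy model returning the logit of the positive class.
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def gradient_times_input(f, x, eps=1e-5):
    # Central-difference estimate of df/dx_i, multiplied by x_i.
    attributions = []
    for i in range(len(x)):
        up, down = list(x), list(x)
        up[i] += eps
        down[i] -= eps
        grad_i = (f(up) - f(down)) / (2 * eps)
        attributions.append(grad_i * x[i])
    return attributions

x = [1.0, 2.0, -0.5]
attr = gradient_times_input(logistic_model, x)
print([round(a, 4) for a in attr])
```

In practice one would use automatic differentiation rather than finite differences, which cost two model evaluations per feature; the attribution rule itself is unchanged.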

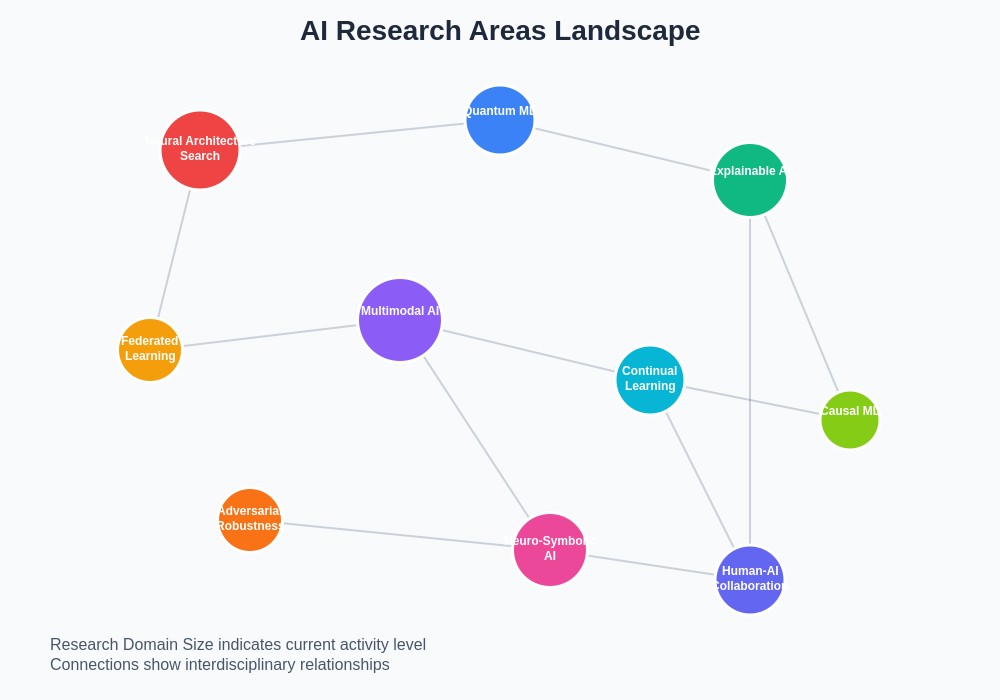

The contemporary AI research landscape encompasses diverse interconnected domains that offer rich opportunities for doctoral research, from fundamental theoretical investigations to applied research addressing specific societal challenges.

Federated Learning and Privacy-Preserving AI

The increasing importance of data privacy and security in an interconnected world has driven significant research interest in federated learning systems that enable machine learning models to be trained across distributed datasets without requiring centralized data collection. This research area addresses fundamental challenges in developing algorithms that can learn effectively from decentralized data sources while preserving the privacy and confidentiality of individual data contributors.

Federated learning research encompasses multiple technical challenges including communication efficiency in distributed training scenarios, handling non-independent and non-identically distributed data across different participants, developing robust aggregation methods that are resilient to malicious participants, and creating differential privacy mechanisms that provide formal guarantees about individual data protection. The interdisciplinary nature of this field requires expertise spanning machine learning, cryptography, distributed systems, and privacy law.
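The aggregation step at the heart of federated averaging (FedAvg) is short enough to write out. A minimal sketch, assuming each client sends a flat list of parameters together with its local sample count: the server computes a sample-weighted mean. A coordinate-wise median is shown alongside as one simple robust alternative that limits the influence of a single malicious client. The client values are illustrative, and local training, communication, and differential-privacy noise are omitted.

```python
from statistics import median

def fedavg(client_params, client_sizes):
    # Weighted average of parameter vectors, weights proportional to data size.
    total = sum(client_sizes)
    dim = len(client_params[0])
    return [
        sum(p[j] * n for p, n in zip(client_params, client_sizes)) / total
        for j in range(dim)
    ]

def coordinate_median(client_params):
    # Robust aggregation: per-coordinate median across clients.
    return [median(col) for col in zip(*client_params)]

honest = [[1.0, 2.0], [1.2, 1.8], [0.9, 2.1]]
sizes = [100, 50, 50]
poisoned = honest + [[100.0, -100.0]]  # one malicious update

print(fedavg(honest, sizes))
print(fedavg(poisoned, sizes + [50]))
print(coordinate_median(poisoned))
```

A single outlier drags the weighted mean far from the honest consensus, while the median stays close to it; this is the basic tension between efficiency and robustness that research on aggregation rules tries to resolve.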

The applications of federated learning extend across numerous domains including healthcare where patient data must remain within institutional boundaries, financial services where regulatory compliance requires strict data localization, and mobile computing where on-device learning can improve user experiences while preserving privacy. Research in this area contributes not only to technical advancement but also to the development of ethical AI frameworks that respect individual privacy rights while enabling beneficial collective intelligence.

Multimodal AI and Cross-Domain Learning

The human ability to seamlessly integrate information from multiple sensory modalities and apply knowledge across different domains represents a fundamental aspect of intelligence that current AI systems struggle to replicate effectively. Multimodal AI research focuses on developing systems that can process, understand, and reason about information spanning multiple modalities such as text, images, audio, video, and sensor data in ways that capture the rich interactions and complementarities between different information sources.

This research area encompasses challenges in learning joint representations that effectively capture cross-modal relationships, developing attention mechanisms that can dynamically focus on relevant information across modalities, and creating architectures that can handle the temporal dynamics present in multimodal sequences. The theoretical foundations of multimodal learning draw from information theory, cognitive science, and signal processing to understand how different types of information can be optimally combined for learning and inference.
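Cross-modal attention is commonly implemented as scaled dot-product attention in which queries come from one modality (say, a text token) and keys and values from another (say, image-region features). The following minimal pure-Python sketch uses made-up two-dimensional features; real models also apply learned projection matrices to produce queries, keys, and values, which are omitted here.

```python
import math

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def cross_attention(query, keys, values):
    # Scaled dot-product attention for a single query vector.
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    dim_v = len(values[0])
    output = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(dim_v)]
    return output, weights

# Hypothetical features: one text-token query, three image regions.
text_query = [1.0, 0.0]
region_keys = [[2.0, 0.0], [0.0, 2.0], [-2.0, 0.0]]
region_values = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]

out, attn = cross_attention(text_query, region_keys, region_values)
print([round(a, 3) for a in attn])  # the first region receives the most weight
```

The attention weights are the "dynamic focus" across modalities: they are recomputed for every query, so different words can attend to different image regions.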

Applications of multimodal AI span from autonomous vehicles that must integrate visual, lidar, and GPS information for navigation decisions to healthcare systems that combine medical imaging, electronic health records, and genetic data for improved diagnostic accuracy and treatment recommendations.

Continual Learning and Lifelong AI Systems

One of the most significant limitations of current machine learning systems is their inability to continuously learn and adapt to new information without forgetting previously acquired knowledge, a phenomenon known as catastrophic forgetting. Continual learning research addresses this fundamental challenge by developing algorithms and architectures that can incrementally acquire new capabilities while preserving existing knowledge, enabling AI systems to adapt and evolve throughout their operational lifetime.

This research area draws inspiration from neuroscience and cognitive psychology to understand how biological systems manage the stability-plasticity dilemma, balancing the need to learn new information with the requirement to maintain stable memories of past experiences. Technical approaches to continual learning include memory replay systems that selectively rehearse important past experiences, regularization methods that protect critical network parameters from modification, and meta-learning algorithms that can quickly adapt to new tasks based on prior learning experience.
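One well-known regularization method of this kind is Elastic Weight Consolidation (EWC), which penalizes movement of each parameter away from its value after the previous task, weighted by an estimate of that parameter's importance (a diagonal Fisher information term): L(θ) = L_new(θ) + (λ/2) Σ_i F_i (θ_i − θ*_i)². A minimal sketch of the penalty and its gradient alone, with illustrative numbers; estimating the Fisher values and adding the penalty to a real training loss are left out.

```python
def ewc_penalty(params, old_params, fisher, lam):
    # (lam / 2) * sum_i F_i * (theta_i - theta*_i)^2
    return 0.5 * lam * sum(
        f * (p - p_old) ** 2 for p, p_old, f in zip(params, old_params, fisher)
    )

def ewc_grad(params, old_params, fisher, lam):
    # Gradient of the penalty: lam * F_i * (theta_i - theta*_i)
    return [lam * f * (p - p_old) for p, p_old, f in zip(params, old_params, fisher)]

old = [0.5, -1.0, 2.0]      # parameters after task A
fisher = [10.0, 0.01, 1.0]  # estimated importance of each parameter for task A
new = [0.6, 0.0, 2.0]       # candidate parameters while learning task B

print(ewc_penalty(new, old, fisher, lam=1.0))
print(ewc_grad(new, old, fisher, lam=1.0))
```

Note how the important first parameter, moved by only 0.1, is pulled back harder than the unimportant second one, moved by 1.0: plasticity is preserved where it is cheap and stability is enforced where it matters.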

The implications of successful continual learning systems extend far beyond academic research, as they could enable AI applications that continuously improve through interaction with users and environments, require less frequent retraining, and can adapt to changing conditions without extensive human intervention. This capability is particularly crucial for applications such as personalized recommendation systems, adaptive educational platforms, and autonomous agents operating in dynamic environments.

Causal Inference and Causal Machine Learning

The ability to understand and reason about causal relationships represents a fundamental aspect of human intelligence that has been largely absent from traditional machine learning approaches focused primarily on pattern recognition and correlation detection. Causal machine learning research aims to develop algorithms and frameworks that can discover causal relationships from observational data, make predictions about the effects of interventions, and provide explanations based on causal mechanisms rather than mere statistical associations.

This research area combines insights from causal inference theory, graphical models, and machine learning to create methods that can handle confounding variables, selection bias, and other challenges that arise when attempting to infer causation from observational data. Causal discovery algorithms that automatically identify causal structures from data, and causal effect estimation methods that remain informative in the presence of unmeasured confounders (for example through instrumental variables or sensitivity analysis), are both active areas of investigation with significant theoretical and practical implications.
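When a confounder Z is observed, the standard backdoor adjustment formula, E[Y | do(T=t)] = Σ_z E[Y | T=t, Z=z] P(Z=z), removes its bias. The minimal sketch below uses a small synthetic dataset constructed for illustration, not real data: the treatment's true effect is +1 in every stratum, but treated units are concentrated in the high-baseline stratum, so the naive difference in means overstates the effect. It assumes Z blocks all backdoor paths and every stratum contains both treated and untreated units; unmeasured confounding is not handled.

```python
from collections import Counter, defaultdict

def naive_effect(rows):
    treated = [y for _, t, y in rows if t == 1]
    control = [y for _, t, y in rows if t == 0]
    return sum(treated) / len(treated) - sum(control) / len(control)

def backdoor_effect(rows):
    # E[Y|do(T=1)] - E[Y|do(T=0)]: stratum-specific differences weighted by P(Z=z).
    groups = defaultdict(list)
    for z, t, y in rows:
        groups[(z, t)].append(y)
    z_counts = Counter(z for z, _, _ in rows)
    n = len(rows)
    effect = 0.0
    for z, count in z_counts.items():
        mean1 = sum(groups[(z, 1)]) / len(groups[(z, 1)])
        mean0 = sum(groups[(z, 0)]) / len(groups[(z, 0)])
        effect += (count / n) * (mean1 - mean0)
    return effect

# Synthetic rows (z, t, y): baseline 0 when z=0 and 5 when z=1, true effect +1.
rows = (
    [(0, 0, 0.0)] * 8 + [(0, 1, 1.0)] * 2
    + [(1, 0, 5.0)] * 2 + [(1, 1, 6.0)] * 8
)
print(naive_effect(rows), backdoor_effect(rows))
```

The naive comparison attributes the baseline gap between strata to the treatment; adjusting for Z recovers the effect built into the data.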

Applications of causal machine learning span numerous domains including epidemiology where understanding disease causation is crucial for public health interventions, economics where policy effectiveness must be evaluated through causal analysis, and personalized medicine where treatment recommendations must be based on causal understanding of how interventions affect individual patients. The integration of causal reasoning capabilities into AI systems could fundamentally transform how these systems make decisions and provide explanations for their recommendations.

Adversarial Machine Learning and Robustness

The discovery that machine learning models can be easily fooled by carefully crafted adversarial examples has revealed fundamental vulnerabilities in AI systems that pose serious security and safety concerns for real-world deployments. Adversarial machine learning research focuses on understanding these vulnerabilities, developing robust training methods that improve model resilience to adversarial attacks, and creating detection mechanisms that can identify when models are being subjected to adversarial inputs.

This research area encompasses both offensive and defensive perspectives, studying how adversarial examples can be generated across different domains and attack scenarios while simultaneously developing countermeasures that can improve model robustness without significantly compromising performance on clean data. The theoretical understanding of adversarial examples draws from optimization theory, game theory, and statistical learning theory to characterize the fundamental trade-offs between model accuracy and robustness.
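The fast gradient sign method (FGSM) is the classic first example of how adversarial examples are generated: perturb the input by ε in the direction of the sign of the loss gradient. A minimal sketch against a logistic-regression model, where the input gradient of the cross-entropy loss has the closed form (σ(w·x+b) − y)·w; the weights, input, and ε are illustrative.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(w, b, x):
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def log_loss(w, b, x, y):
    p = predict(w, b, x)
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))

def sign(v):
    return (v > 0) - (v < 0)

def fgsm(w, b, x, y, eps):
    # d(loss)/dx = (p - y) * w for logistic regression with cross-entropy loss.
    p = predict(w, b, x)
    grad = [(p - y) * wi for wi in w]
    return [xi + eps * sign(g) for xi, g in zip(x, grad)]

w, b = [1.5, -2.0, 0.5], 0.0
x, y = [0.4, -0.3, 0.2], 1  # correctly classified as positive
x_adv = fgsm(w, b, x, y, eps=0.5)
print(predict(w, b, x), predict(w, b, x_adv))
```

Every coordinate moves by exactly ε (an L∞-bounded attack), yet the prediction flips from positive to negative; robust training methods such as adversarial training feed perturbed inputs like `x_adv` back into the training loop.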

The practical implications of adversarial robustness research are particularly critical for safety-critical applications such as autonomous vehicles where adversarial attacks could have catastrophic consequences, medical diagnosis systems where adversarial examples could lead to misdiagnosis, and cybersecurity applications where robust detection of malicious activities is essential. The development of provable defenses that provide theoretical guarantees about model robustness represents an active area of research with significant practical importance.

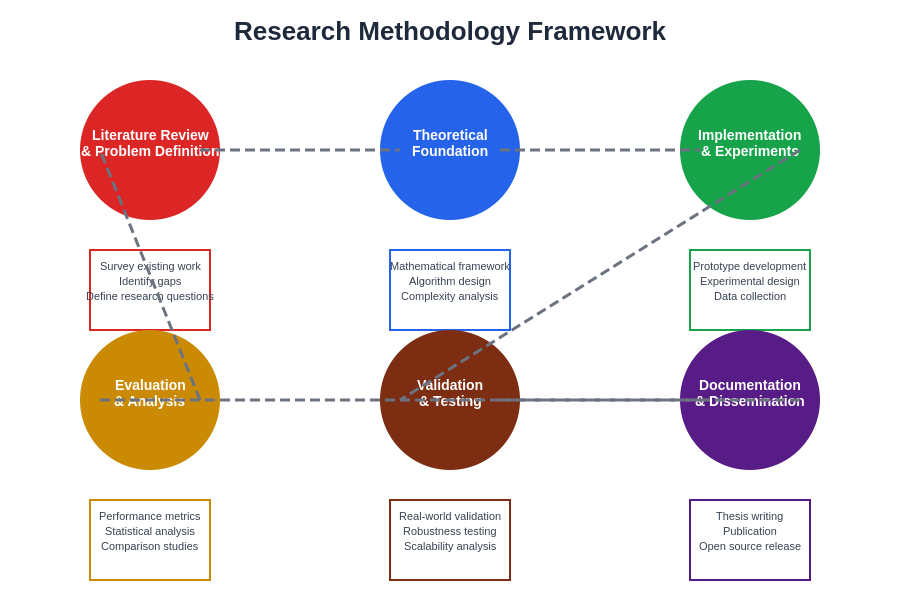

A systematic approach to AI research encompasses multiple interconnected phases from theoretical foundation development through empirical validation and real-world application, requiring careful consideration of experimental design, evaluation metrics, and reproducibility standards.

Neuro-Symbolic AI and Hybrid Reasoning Systems

The integration of symbolic reasoning capabilities with neural learning systems represents a promising research direction that aims to combine the pattern recognition strengths of deep learning with the logical reasoning and interpretability advantages of symbolic AI approaches. Neuro-symbolic AI research explores how symbolic knowledge can be incorporated into neural networks, how neural systems can learn to manipulate symbolic representations, and how hybrid architectures can leverage both paradigms for enhanced reasoning capabilities.

This research area addresses fundamental questions about how to represent and manipulate symbolic knowledge in continuous neural networks, how to enable end-to-end learning in systems that incorporate both symbolic and neural components, and how to design architectures that can perform complex reasoning tasks while maintaining the scalability and learning capabilities of neural systems. The theoretical foundations of neuro-symbolic integration draw from logic programming, knowledge representation, and differentiable programming to create unified frameworks for hybrid reasoning.
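Differentiable programming is what lets symbolic rules sit inside a gradient-trained system. One common device, in the spirit of frameworks such as Logic Tensor Networks, is to replace Boolean connectives with fuzzy-logic operators over truth values in [0, 1]. The minimal sketch below uses the product t-norm and its associated operators; the rule ("if an object is a bird, then it can fly"), the stand-in neural outputs, and the learning rate are illustrative assumptions.

```python
def t_and(a, b):
    return a * b  # product t-norm

def t_or(a, b):
    return a + b - a * b  # probabilistic sum (the dual t-conorm)

def t_not(a):
    return 1.0 - a

def t_implies(a, b):
    return 1.0 - a + a * b  # Reichenbach implication, equal to t_or(t_not(a), b)

# Hypothetical neural outputs: P(bird) and P(can_fly) for one object.
is_bird, can_fly = 0.9, 0.2
lr = 0.1
print("before:", round(t_implies(is_bird, can_fly), 3))
for _ in range(20):
    # loss = 1 - t_implies(is_bird, can_fly); its derivative w.r.t. can_fly
    # is -is_bird, so each step raises can_fly in proportion to P(bird).
    grad = -is_bird
    can_fly = min(1.0, can_fly - lr * grad)  # gradient step, clipped at 1
print("after:", round(t_implies(is_bird, can_fly), 3))
```

In a full system, the gradient would also flow into `is_bird` (lowering it is another way to satisfy the rule), which is why such logical losses are usually combined with an ordinary data-fitting loss.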

Applications of neuro-symbolic AI include question answering systems that must combine factual knowledge with reading comprehension capabilities, program synthesis tools that generate code based on natural language specifications, and scientific reasoning systems that can integrate empirical observations with theoretical knowledge. The development of neuro-symbolic approaches could address current limitations of purely neural systems including their difficulty with systematic generalization, logical reasoning, and incorporation of prior knowledge.

AI Ethics and Algorithmic Fairness

The increasing impact of AI systems on society has created urgent research needs in understanding and addressing the ethical implications of artificial intelligence, including issues of algorithmic bias, fairness, accountability, and transparency. AI ethics research combines technical methods with insights from philosophy, sociology, law, and public policy to develop frameworks for creating AI systems that align with human values and promote social good.

Research in algorithmic fairness focuses on developing mathematical definitions of fairness that can be operationalized in machine learning systems, creating methods for detecting and mitigating bias in training data and model predictions, and designing evaluation frameworks that can assess the social impact of AI deployments. The challenge lies in balancing multiple competing fairness criteria while maintaining system utility and addressing the inherent trade-offs between different notions of fairness.
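Two widely used formal criteria are demographic parity (equal positive-prediction rates across groups) and equal opportunity (equal true-positive rates across groups). The minimal sketch below computes the gap for each from predictions, labels, and a binary group attribute, using illustrative data. It also shows the kind of trade-off described above: because the two groups have different base rates, this classifier satisfies equal opportunity exactly while violating demographic parity.

```python
def rate(values):
    return sum(values) / len(values) if values else float("nan")

def demographic_parity_gap(preds, groups):
    # |P(Yhat=1 | A=0) - P(Yhat=1 | A=1)|
    g0 = [p for p, g in zip(preds, groups) if g == 0]
    g1 = [p for p, g in zip(preds, groups) if g == 1]
    return abs(rate(g0) - rate(g1))

def equal_opportunity_gap(preds, labels, groups):
    # |TPR(A=0) - TPR(A=1)|: compare predictions on truly positive cases only.
    pos0 = [p for p, y, g in zip(preds, labels, groups) if g == 0 and y == 1]
    pos1 = [p for p, y, g in zip(preds, labels, groups) if g == 1 and y == 1]
    return abs(rate(pos0) - rate(pos1))

groups = [0] * 4 + [1] * 6
labels = [1, 1, 1, 0] + [1, 1, 1, 0, 0, 0]
preds = [1, 1, 0, 0] + [1, 1, 0, 0, 0, 0]

print(demographic_parity_gap(preds, groups))
print(equal_opportunity_gap(preds, labels, groups))
```

Closing the demographic-parity gap here would require predicting positives for some truly negative members of group 1 or dropping true positives in group 0, which in turn changes error rates; choosing among such criteria is as much a normative question as a technical one.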

The interdisciplinary nature of AI ethics research requires collaboration between technologists, ethicists, social scientists, and domain experts to ensure that research outcomes are both technically sound and socially relevant. This research area encompasses not only the development of bias detection and mitigation algorithms but also the creation of governance frameworks, policy recommendations, and educational resources that can guide the responsible development and deployment of AI systems across different sectors and applications.

Human-AI Collaboration and Augmented Intelligence

Rather than focusing solely on creating AI systems that replace human capabilities, research in human-AI collaboration aims to develop technologies that augment and enhance human intelligence through effective partnership between humans and artificial agents. This research area investigates how AI systems can be designed to complement human strengths while compensating for human limitations, creating collaborative systems that achieve better outcomes than either humans or AI could achieve independently.

Research in this domain encompasses user interface design principles for AI systems, methods for calibrating human trust in AI recommendations, techniques for enabling effective human oversight of automated systems, and frameworks for designing AI systems that can adapt to different human users and collaboration contexts. The cognitive science foundations of this research draw from studies of human decision-making, team dynamics, and skill acquisition to understand how humans and AI can most effectively work together.

Applications of human-AI collaboration research span numerous domains including medical diagnosis where AI can assist physicians in identifying patterns while humans provide contextual interpretation and patient interaction, scientific discovery where AI can generate hypotheses while humans provide domain expertise and experimental design, and creative endeavors where AI can suggest possibilities while humans provide aesthetic judgment and emotional understanding.

Environmental AI and Climate Intelligence

The application of artificial intelligence to environmental challenges and climate science represents a rapidly growing research area with significant potential for societal impact. Environmental AI research focuses on developing machine learning methods specifically designed to address environmental problems such as climate modeling, ecosystem monitoring, renewable energy optimization, and environmental policy analysis.

This research area encompasses challenges in handling the unique characteristics of environmental data, including spatial and temporal dependencies, multi-scale phenomena, missing data, and the need for uncertainty quantification in predictions that inform critical policy decisions. Developing AI methods that effectively integrate diverse environmental data sources, model complex Earth system processes, and provide reliable projections under different scenarios is an active area of investigation.
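One lightweight way to attach calibrated uncertainty to any point forecaster is split conformal prediction: hold out a calibration set, compute absolute residuals, and widen each new prediction by a finite-sample-corrected quantile of those residuals. If calibration and test points are exchangeable, the interval covers the true value with probability at least 1 − α. The minimal sketch below uses hypothetical residuals; note that spatially and temporally dependent environmental data often violate exchangeability, which is one reason uncertainty quantification for such data remains an open research question.

```python
import math

def conformal_quantile(residuals, alpha):
    # The ceil((n + 1) * (1 - alpha))-th smallest absolute residual.
    n = len(residuals)
    k = math.ceil((n + 1) * (1 - alpha))
    if k > n:
        return float("inf")  # too few calibration points for this alpha
    return sorted(abs(r) for r in residuals)[k - 1]

def predict_interval(point_prediction, q):
    return point_prediction - q, point_prediction + q

# Hypothetical calibration residuals (observed minus predicted), e.g. in mm of rain.
calibration_residuals = [0.5, -1.2, 0.3, 2.0, -0.7, 1.1, -0.2, 0.9, -1.5, 0.4,
                         0.6, -0.8, 1.3, -0.1, 0.2, -1.0, 0.7, 1.8, -0.4, 0.05]
q = conformal_quantile(calibration_residuals, alpha=0.1)
print(q, predict_interval(12.0, q))
```

The method is model-agnostic: the forecaster that produced the residuals can be a neural network, a gradient-boosted model, or a physical simulation.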

The interdisciplinary nature of environmental AI requires collaboration between computer scientists, earth system scientists, ecologists, and policy experts to ensure that research outcomes are scientifically rigorous and practically relevant for addressing environmental challenges. Applications include precision agriculture systems that optimize resource use while minimizing environmental impact, renewable energy forecasting systems that improve grid integration of variable energy sources, and biodiversity monitoring systems that can track ecosystem health using remote sensing and citizen science data.

Large Language Models and Foundation Model Research

The emergence of large language models and foundation models has created new research opportunities in understanding how massive neural networks trained on diverse data can develop broad capabilities that can be adapted to numerous downstream tasks. This research area encompasses questions about scaling laws that describe how model performance changes as model size, training data, and compute increase, emergent capabilities that appear in large models, and methods for efficiently adapting foundation models to specific applications and domains.

Current research investigates techniques for improving the efficiency of large model training through methods such as gradient compression, mixed precision training, and distributed computing strategies that can reduce the computational cost and environmental impact of training massive models. Additionally, research focuses on developing better methods for fine-tuning and prompt engineering that can effectively leverage pre-trained models for specific applications while minimizing additional training requirements.
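Gradient compression is easiest to see in its simplest form, top-k sparsification. Each worker transmits only the k largest-magnitude gradient entries and keeps the rest as a local residual that is added back at the next step (error feedback), so small updates are delayed rather than lost. The minimal single-worker sketch below uses illustrative gradients; real systems apply this per tensor on accelerators and integrate it with the communication layer.

```python
def topk_compress(grad, residual, k):
    # Error feedback: add back what was not transmitted at earlier steps.
    corrected = [g + r for g, r in zip(grad, residual)]
    keep = sorted(range(len(corrected)),
                  key=lambda i: abs(corrected[i]), reverse=True)[:k]
    sparse = {i: corrected[i] for i in keep}  # what actually gets transmitted
    new_residual = [0.0 if i in sparse else c for i, c in enumerate(corrected)]
    return sparse, new_residual

grads = [
    [0.9, -0.05, 0.1, -0.6, 0.02],
    [0.8, -0.05, 0.1, -0.5, 0.02],
    [0.0, -0.05, 0.1, 0.0, 0.02],
]
residual = [0.0] * 5
for step, grad in enumerate(grads):
    sent, residual = topk_compress(grad, residual, k=2)
    print(step, sorted(sent), [round(r, 2) for r in residual])
```

With k = 2 out of 5 entries, each step sends 40% of the values; the small coordinates accumulate in the residual until they are large enough to be sent, as happens at the last step.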

The societal implications of foundation model research extend beyond technical considerations to include questions about access and democratization of AI capabilities, the concentration of computational resources required for training large models, and the potential risks associated with deploying powerful language models at scale. Research in this area contributes to both technical advancement and policy discussions about how to ensure that the benefits of foundation models are broadly accessible while mitigating potential risks.

The trajectory of AI research points toward increasingly interdisciplinary approaches that combine technical innovation with deep consideration of societal impacts, environmental sustainability, and human-centered design principles.

Brain-Inspired Computing and Neuromorphic AI

The quest to understand and replicate the computational principles underlying biological intelligence has driven research into brain-inspired computing approaches that aim to capture the efficiency, adaptability, and robustness of neural processing in artificial systems. Neuromorphic AI research explores how insights from neuroscience can inform the design of computing architectures, learning algorithms, and information processing strategies that more closely resemble biological neural networks.

This research area encompasses the development of spiking neural networks that process information through discrete events rather than continuous signals, the creation of memristive computing devices that can store and process information simultaneously, and the investigation of neural coding strategies that efficiently represent and transmit information in resource-constrained environments. The theoretical foundations draw from computational neuroscience, information theory, and dynamical systems to understand how biological neural networks achieve their remarkable capabilities.
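The leaky integrate-and-fire (LIF) neuron is a standard building block of spiking neural networks and shows the event-based style of computation directly: the membrane potential leaks toward rest, integrates input current, and emits a discrete spike (then resets) when it crosses a threshold. A minimal Euler-integration sketch; the time constant, threshold, and input currents are illustrative values in arbitrary units.

```python
def simulate_lif(currents, dt=1.0, tau=10.0, v_rest=0.0, v_thresh=1.0, v_reset=0.0):
    # Euler step of tau * dv/dt = -(v - v_rest) + I(t); spike and reset at threshold.
    v, spikes = v_rest, []
    for t, current in enumerate(currents):
        v += dt / tau * (-(v - v_rest) + current)
        if v >= v_thresh:
            spikes.append(t)  # discrete event: only spike times are communicated
            v = v_reset
    return spikes

weak = simulate_lif([0.8] * 100)    # steady state 0.8 < threshold: never fires
strong = simulate_lif([1.5] * 100)  # crosses threshold repeatedly
print(len(weak), len(strong), strong[:3])
```

Stronger input produces more frequent spikes, so information is carried by spike timing and rate; neuromorphic hardware exploits this by doing work only when spikes occur.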

Applications of neuromorphic computing research include ultra-low-power AI systems for edge computing applications, adaptive control systems that can learn and respond to changing environments, and brain-computer interfaces that can directly connect human neural activity with artificial processing systems. The development of neuromorphic hardware that can efficiently implement brain-inspired algorithms represents a crucial area for enabling practical applications of these research advances.

AI for Scientific Discovery and Automated Research

The application of artificial intelligence to accelerate scientific discovery represents one of the most ambitious and potentially transformative research directions in contemporary AI, with the goal of developing systems that can autonomously generate hypotheses, design experiments, analyze results, and contribute to scientific knowledge across diverse domains. This research area encompasses automated literature review systems that can synthesize knowledge from vast scientific databases, hypothesis generation algorithms that can identify promising research directions, and experimental design systems that can optimize research protocols for maximum information gain.
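"Optimizing research protocols for maximum information gain" has a precise meaning in Bayesian experimental design: choose the experiment whose outcome is expected to reduce uncertainty over competing hypotheses the most, EIG = H(prior) − Σ_o P(o) H(posterior | o). A minimal sketch over a discrete set of hypotheses; the hypotheses, prior, and outcome likelihoods for each candidate experiment are hypothetical.

```python
import math

def entropy(dist):
    return -sum(p * math.log2(p) for p in dist if p > 0)

def expected_information_gain(prior, likelihoods):
    # likelihoods[h][o] = P(outcome o | hypothesis h) for one experiment.
    n_hyp, n_outcomes = len(prior), len(likelihoods[0])
    eig = entropy(prior)
    for o in range(n_outcomes):
        p_o = sum(prior[h] * likelihoods[h][o] for h in range(n_hyp))
        if p_o == 0:
            continue
        posterior = [prior[h] * likelihoods[h][o] / p_o for h in range(n_hyp)]
        eig -= p_o * entropy(posterior)
    return eig

prior = [0.5, 0.25, 0.25]  # three competing hypotheses
experiments = {
    "uninformative": [[0.5, 0.5], [0.5, 0.5], [0.5, 0.5]],
    "separates_h0": [[1.0, 0.0], [0.0, 1.0], [0.0, 1.0]],
    "noisy_assay": [[0.9, 0.1], [0.2, 0.8], [0.5, 0.5]],
}
for name, lik in experiments.items():
    print(name, round(expected_information_gain(prior, lik), 3))
best = max(experiments, key=lambda e: expected_information_gain(prior, experiments[e]))
print("run next:", best)
```

An automated research system would repeat this loop: run the chosen experiment, update the prior to the observed posterior, and select the next experiment.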

Research in AI for science addresses fundamental challenges in representing scientific knowledge in forms suitable for machine reasoning, developing methods for handling uncertainty and contradictory evidence in scientific literature, and creating evaluation frameworks for assessing the quality and significance of AI-generated scientific insights. The interdisciplinary nature of this research requires deep collaboration between AI researchers and domain scientists to ensure that automated systems can effectively contribute to scientific progress.

The implications of successful AI-assisted scientific discovery extend beyond efficiency improvements: such systems could fundamentally change how scientific research is conducted, validated, and communicated. Applications range from drug discovery, where AI can accelerate the identification of promising therapeutic compounds, and materials science, where automated systems can design and test novel materials with desired properties, to fundamental physics, where AI might identify new patterns in experimental data that lead to theoretical breakthroughs.

Future Implications and Research Trajectory

The landscape of AI research continues to evolve rapidly, with emerging areas of investigation constantly reshaping our understanding of what is possible and what challenges remain to be addressed. The convergence of multiple research directions creates opportunities for synergistic advances that could accelerate progress across the entire field, while also raising important questions about the societal implications of increasingly capable AI systems and the responsibility of researchers to consider the broader impact of their work.

The future trajectory of AI research will likely be characterized by increasing emphasis on interdisciplinary collaboration, with computer scientists working closely with domain experts, ethicists, policy makers, and social scientists to ensure that technological advances contribute positively to human welfare and societal progress. The development of AI systems that are not only technically advanced but also aligned with human values, environmentally sustainable, and accessible to diverse communities represents a crucial challenge for the next generation of AI researchers.

The selection of a PhD thesis topic in this rich research landscape requires careful consideration of personal interests, available resources, potential impact, and the opportunity to contribute meaningfully to the advancement of human knowledge and capability. The most successful doctoral research projects often combine technical innovation with deep consideration of real-world applications and societal implications, creating work that advances both scientific understanding and human welfare.

Disclaimer

This article is for informational purposes only and does not constitute academic or professional advice. The research areas and topics discussed represent current trends and opportunities in AI research but may evolve rapidly as the field continues to advance. Prospective PhD candidates should consult with academic advisors, conduct thorough literature reviews, and carefully consider their research interests and career goals when selecting thesis topics. The availability of resources, funding, and supervision for specific research areas may vary significantly across different institutions and geographic regions.