The modern digital landscape demands artificial intelligence systems that can adapt gracefully to changing conditions, hardware limitations, and unexpected failures. Progressive Enhancement AI represents a fundamental architectural philosophy that ensures machine learning applications maintain functionality and user experience even when advanced AI features become unavailable. This approach transforms traditional monolithic AI systems into resilient, layered architectures that provide value across a spectrum of operational conditions, from high-performance cloud environments to resource-constrained edge devices.

The principle of graceful degradation in AI systems mirrors the progressive enhancement methodologies that have long been essential in web development, but extends these concepts into the complex realm of machine learning inference, model serving, and intelligent feature delivery.

Foundational Principles of Progressive Enhancement AI

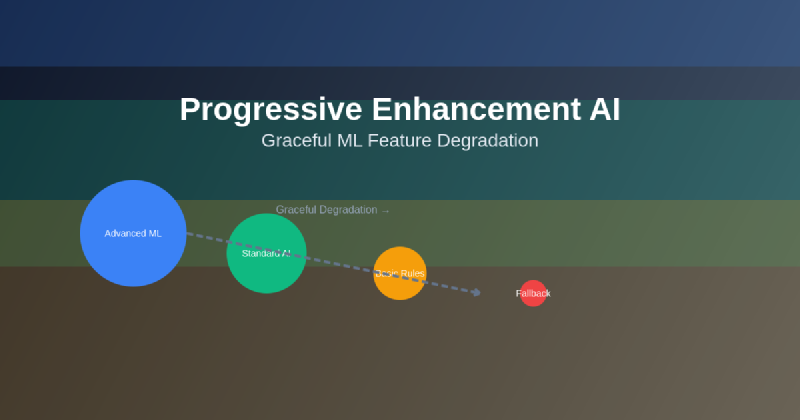

The architecture of Progressive Enhancement AI rests upon several core principles that distinguish it from traditional AI system design approaches. At its foundation lies the concept of layered intelligence, where multiple levels of AI capability are designed to work in harmony, with each layer providing a safety net for the layers above it. This hierarchical approach ensures that when sophisticated machine learning models fail or become unavailable, simpler but reliable alternatives can seamlessly take their place, maintaining system functionality and user trust.

The principle of graceful degradation extends beyond mere failover mechanisms to encompass intelligent feature scaling based on available computational resources, network conditions, and system load. Rather than experiencing complete feature failure, users encounter a thoughtfully designed reduction in AI capabilities that preserves core functionality while maintaining acceptable performance standards. This approach requires careful consideration of which features are essential versus enhancement-oriented, creating clear hierarchies of functionality that inform degradation strategies.

Resource awareness forms another fundamental pillar of Progressive Enhancement AI, where systems continuously monitor available computational power, memory constraints, network bandwidth, and battery life to make intelligent decisions about which AI features to enable or disable. This dynamic adjustment capability ensures optimal resource utilization while preventing system overload that could compromise overall application stability and user experience.
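As a minimal sketch of this kind of resource-aware decision, the function below maps a snapshot of device conditions to one of three capability tiers. The tier names, the `ResourceSnapshot` fields, and every threshold are illustrative assumptions rather than recommended values; a real system would read these figures from the platform and tune them empirically.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ResourceSnapshot:
    """Hypothetical point-in-time view of the resources the text lists."""
    free_memory_mb: int
    bandwidth_kbps: int
    battery_percent: int


def select_tier(snapshot: ResourceSnapshot) -> str:
    """Pick the most capable tier the current resources can support."""
    # Assumed thresholds: protect battery and memory before anything else.
    if snapshot.battery_percent < 15 or snapshot.free_memory_mb < 200:
        return "rules"
    # Enough bandwidth to reach the remote model.
    if snapshot.bandwidth_kbps >= 1000:
        return "cloud_model"
    # Poor network but healthy device: run the local model.
    return "on_device_model"
```

Calling `select_tier` on every request, or on a timer, gives the application a single place where enable and disable decisions are made.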

Architectural Patterns for Resilient AI Systems

The implementation of Progressive Enhancement AI requires sophisticated architectural patterns that support multiple levels of functionality while maintaining clean separation of concerns. The layered architecture pattern serves as the primary structural foundation, organizing AI capabilities into distinct tiers that range from basic rule-based systems to advanced deep learning models. Each layer operates independently while providing interfaces that allow higher-level layers to fall back to lower-level implementations when necessary.
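The layered pattern described above can be sketched as an ordered list of handlers that is walked from most to least capable. The three handlers here are stand-ins invented for illustration (one simulates an outage, one simulates a low-confidence result); only the control flow is the point.

```python
from typing import Callable, Optional, Sequence, Tuple

# Each tier is a (name, handler) pair. A handler may raise or return None on failure.
Tier = Tuple[str, Callable[[str], Optional[str]]]


def answer_with_fallback(query: str, tiers: Sequence[Tier]) -> Tuple[str, str]:
    """Try tiers from most to least capable; return (tier_name, result)."""
    for name, handler in tiers:
        try:
            result = handler(query)
        except Exception:
            continue  # this tier failed; drop to the next one
        if result is not None:
            return name, result
    raise RuntimeError("all tiers failed, including the baseline")


def deep_model(query: str) -> Optional[str]:
    raise ConnectionError("model server unreachable")  # simulated outage


def statistical_model(query: str) -> Optional[str]:
    return None  # simulated: no confident answer


def rule_based(query: str) -> Optional[str]:
    return "refund-policy" if "refund" in query.lower() else "general-help"


TIERS = [("deep", deep_model), ("statistical", statistical_model), ("rules", rule_based)]
```

Returning the tier name alongside the result lets callers log which layer actually served a request, which is useful for the monitoring discussed later in the article.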

The adapter pattern becomes crucial in Progressive Enhancement AI systems, providing standardized interfaces that allow different AI implementations to be swapped seamlessly based on runtime conditions. Whether transitioning from a complex transformer model to a simpler statistical approach, or falling back from cloud-based inference to local processing, adapter patterns ensure that application logic remains unaffected by changes in underlying AI implementation.
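One way to picture the adapter idea is a shared interface with one adapter per implementation. In this sketch, `RemoteModelClient` is a made-up stand-in for a third-party client with its own method name and response shape, and the keyword list is arbitrary; neither reflects any real library.

```python
from typing import Protocol


class SentimentBackend(Protocol):
    """The single interface application code depends on."""
    def classify(self, text: str) -> str: ...


class RemoteModelClient:
    """Hypothetical remote client; returns a canned response for illustration."""
    def predict(self, payload: dict) -> dict:
        return {"label": "POSITIVE", "score": 0.93}


class RemoteModelAdapter:
    """Translates the remote client's API into the shared interface."""
    def __init__(self, client: RemoteModelClient) -> None:
        self._client = client

    def classify(self, text: str) -> str:
        response = self._client.predict({"inputs": text})
        return response["label"].lower()


class KeywordAdapter:
    """Simple local fallback exposing the same interface."""
    NEGATIVE = {"bad", "broken", "terrible"}

    def classify(self, text: str) -> str:
        words = set(text.lower().split())
        return "negative" if words & self.NEGATIVE else "positive"


def tag_review(backend: SentimentBackend, text: str) -> str:
    # Application logic never needs to know which implementation is active.
    return f"[{backend.classify(text)}] {text}"
```

Because `tag_review` only sees `SentimentBackend`, swapping `RemoteModelAdapter` for `KeywordAdapter` at runtime requires no change to the calling code.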

Circuit breaker patterns, borrowed from distributed systems engineering, play a vital role in detecting AI service failures and automatically routing requests to alternative implementations. These patterns monitor the health and performance of machine learning services, implementing intelligent thresholds that trigger graceful degradation before complete system failure occurs. The circuit breaker approach prevents cascading failures that could compromise entire application functionality when individual AI components experience issues.
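A minimal circuit breaker might look like the following. The failure threshold, the cool-down period, and the injectable `clock` parameter are assumptions chosen to keep the sketch small and testable; production breakers usually also track latency and use sliding windows.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitBreaker:
    """Routes calls to a fallback after repeated failures of the primary."""

    def __init__(self, failure_threshold: int = 3, reset_after_s: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_after_s = reset_after_s
        self._clock = clock
        self._failures = 0
        self._opened_at: Optional[float] = None

    @property
    def is_open(self) -> bool:
        if self._opened_at is None:
            return False
        # Once the cool-down has elapsed, one trial call is allowed (half-open).
        return self._clock() - self._opened_at < self.reset_after_s

    def call(self, primary: Callable[[], T], fallback: Callable[[], T]) -> T:
        if self.is_open:
            return fallback()  # skip the unhealthy service entirely
        try:
            result = primary()
        except Exception:
            self._failures += 1
            if self._failures >= self.failure_threshold:
                self._opened_at = self._clock()  # open (or re-open) the circuit
            return fallback()
        # Success closes the circuit and clears the failure count.
        self._failures = 0
        self._opened_at = None
        return result
```

While the circuit is open the primary service is not called at all, which is what keeps a struggling model server from dragging down every request that depends on it.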

The integration of multiple AI services and fallback mechanisms requires careful orchestration and monitoring to ensure optimal user experiences across all operational scenarios.

Feature Hierarchy and Degradation Strategies

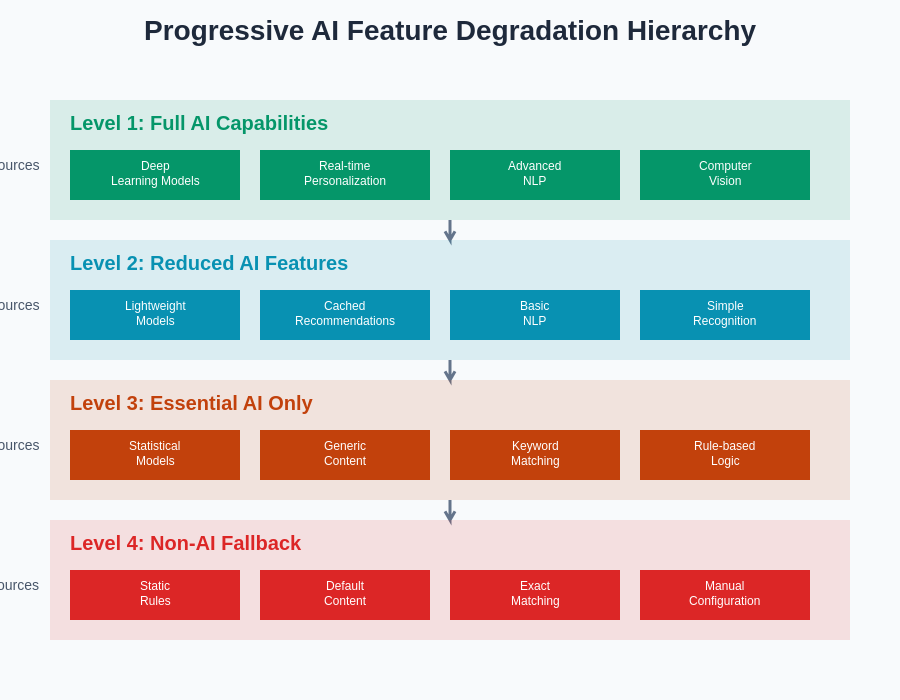

Successful Progressive Enhancement AI implementation requires careful analysis and classification of application features based on their importance to core functionality and their computational requirements. Essential features that are critical to basic application operation receive the highest priority and are supported by multiple implementation layers, ensuring they remain available even under the most constrained conditions. Enhancement features that improve user experience but are not critical to core functionality are designed with graceful degradation paths that allow them to be disabled or simplified when resources become limited.

The degradation strategy must consider not only technical constraints but also user experience implications. Features should degrade in ways that users do not notice or, when that is impossible, the application should clearly communicate the reduced functionality. This might involve transitioning from real-time AI recommendations to cached suggestions, from personalized content to generic alternatives, or from advanced natural language processing to simpler keyword-based matching.

Performance budgets play a crucial role in feature hierarchy design, establishing clear thresholds for resource consumption that trigger different levels of feature degradation. These budgets encompass CPU utilization, memory consumption, network bandwidth, battery usage, and response time requirements, creating a multidimensional framework for making intelligent degradation decisions. The system continuously monitors these metrics and adjusts feature availability accordingly, ensuring optimal resource allocation across all active functionality.
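The multidimensional budget idea can be sketched as a table of soft and hard limits, with the worst dimension deciding the overall degradation level. The metric names and limit values below are placeholders, and "worst dimension wins" is one possible policy among several.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import Dict


class Level(IntEnum):
    FULL = 0
    REDUCED = 1
    MINIMAL = 2


@dataclass(frozen=True)
class Budget:
    soft_limit: float  # reaching this triggers REDUCED
    hard_limit: float  # reaching this triggers MINIMAL


# Assumed budgets for illustration only.
BUDGETS: Dict[str, Budget] = {
    "cpu_percent": Budget(70.0, 90.0),
    "memory_mb": Budget(512.0, 768.0),
    "p95_latency_ms": Budget(300.0, 800.0),
}


def level_for(metric: str, value: float) -> Level:
    budget = BUDGETS[metric]
    if value >= budget.hard_limit:
        return Level.MINIMAL
    if value >= budget.soft_limit:
        return Level.REDUCED
    return Level.FULL


def degradation_level(metrics: Dict[str, float]) -> Level:
    """The worst single dimension decides the overall level."""
    return max((level_for(name, value) for name, value in metrics.items()),
               default=Level.FULL)
```

Using an ordered enum keeps the comparison trivial and makes it easy to add intermediate levels later.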

The hierarchical approach to AI feature degradation creates multiple fallback layers that ensure continuous functionality even when advanced machine learning capabilities become unavailable. This systematic degradation preserves user experience while adapting to changing resource constraints and system conditions.

Implementation Techniques and Best Practices

The technical implementation of Progressive Enhancement AI requires sophisticated service orchestration and monitoring capabilities that can detect performance degradation, resource constraints, and service failures in real-time. Load balancing algorithms must be enhanced to consider not just traffic distribution but also the computational complexity of different AI models and their resource requirements. This intelligent load distribution ensures that system resources are allocated optimally across different enhancement layers.

Caching strategies become particularly important in Progressive Enhancement AI systems, where expensive machine learning computations can be cached at multiple levels to provide faster fallback options. Intelligent caching considers not only the results of AI computations but also the context and parameters that influenced those results, allowing systems to serve relevant cached responses even when full AI processing is unavailable. Cache invalidation strategies must account for the dynamic nature of machine learning models and the temporal relevance of AI-generated content.
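The sketch below illustrates a context-aware cache under a few assumptions: the context is a tuple of hashable values such as locale and device type, the model version is part of the key so that deploying a new model implicitly invalidates old entries, and stale entries may be served only when the caller explicitly allows it (for example, while full AI processing is down). All names are hypothetical.

```python
import time
from typing import Callable, Dict, Hashable, Optional, Tuple


class ContextualCache:
    """Caches AI results keyed by model version, query, and request context."""

    def __init__(self, ttl_s: float, model_version: str,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl_s = ttl_s
        self.model_version = model_version
        self._clock = clock
        self._entries: Dict[Tuple[Hashable, ...], Tuple[float, object]] = {}

    def _key(self, query: str, context: Tuple[Hashable, ...]) -> Tuple[Hashable, ...]:
        # Including the model version means a model upgrade misses old entries.
        return (self.model_version, query, *context)

    def put(self, query: str, context: Tuple[Hashable, ...], value: object) -> None:
        self._entries[self._key(query, context)] = (self._clock(), value)

    def get(self, query: str, context: Tuple[Hashable, ...],
            allow_stale: bool = False) -> Optional[object]:
        entry = self._entries.get(self._key(query, context))
        if entry is None:
            return None
        stored_at, value = entry
        # Fresh entries are always served; expired ones only in degraded mode.
        if allow_stale or self._clock() - stored_at <= self.ttl_s:
            return value
        return None
```

A fallback path would call `get(..., allow_stale=True)`, accepting older results in exchange for availability.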

Model versioning and deployment strategies must support multiple concurrent AI implementations, allowing systems to switch between different model versions or completely different approaches based on runtime conditions. This requires sophisticated model management systems that can handle the storage, loading, and execution of multiple AI implementations while maintaining consistent interfaces and ensuring smooth transitions between different processing approaches.

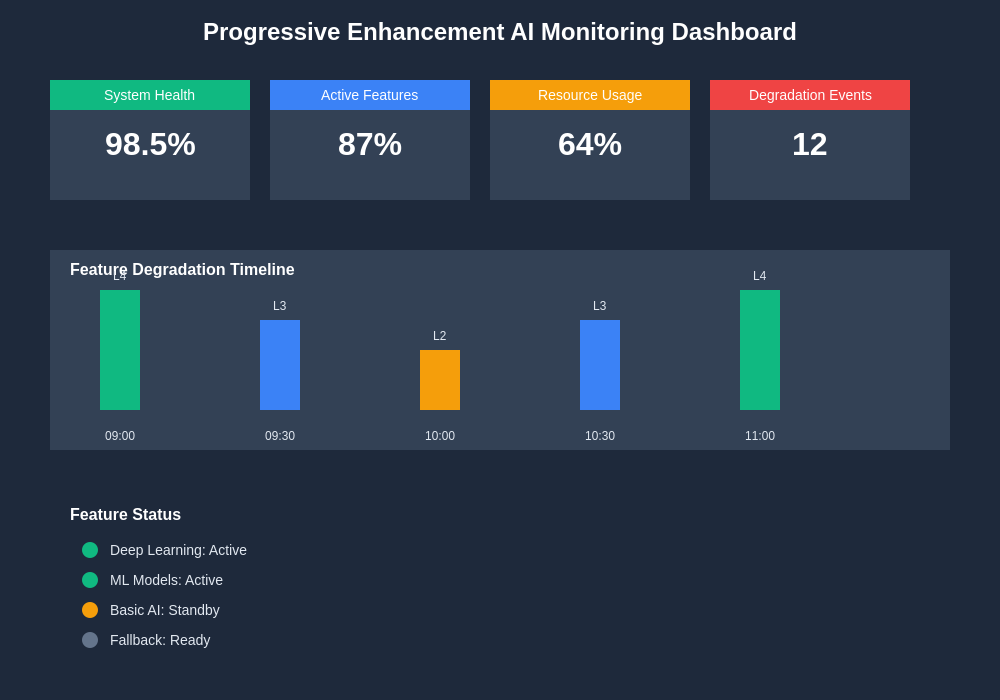

Monitoring and observability become critical components of Progressive Enhancement AI systems, providing detailed insights into system performance, resource utilization, and degradation patterns. These monitoring systems must track not only traditional metrics like response times and error rates but also AI-specific metrics such as model confidence scores, prediction accuracy, and feature utilization patterns. This comprehensive monitoring enables intelligent decision-making about when to trigger degradation and how to optimize system performance across different operational conditions.

Real-World Applications and Use Cases

E-commerce platforms represent one of the most compelling applications of Progressive Enhancement AI, where recommendation systems can gracefully degrade from sophisticated deep learning models to simpler collaborative filtering approaches, and finally to basic popularity-based recommendations. This ensures that users always receive product suggestions, even when advanced personalization systems are unavailable. Search functionality can similarly degrade from advanced natural language processing and semantic search to traditional keyword matching, maintaining core functionality while preserving user experience.

Content platforms and social media applications benefit significantly from Progressive Enhancement AI approaches, where content moderation can fall back from advanced computer vision and natural language understanding to simpler rule-based filtering systems. Content recommendation engines can transition from complex multi-modal analysis to simpler engagement-based algorithms, ensuring that users continue to receive relevant content even when sophisticated AI features are compromised.

The combination of multiple AI tools and services creates robust systems that can adapt to various operational challenges while maintaining high-quality user experiences.

Mobile applications present unique challenges and opportunities for Progressive Enhancement AI implementation, where battery constraints, network connectivity issues, and varying device capabilities require sophisticated adaptation strategies. Image recognition features can degrade from advanced neural networks to simpler computer vision algorithms, while natural language processing can fall back from cloud-based transformer models to on-device keyword matching. These mobile-specific degradation strategies ensure that AI features remain functional across diverse device capabilities and network conditions.

Performance Optimization and Resource Management

The performance optimization strategies for Progressive Enhancement AI systems must balance the overhead of maintaining multiple implementation layers against the benefits of graceful degradation. Lazy loading techniques become essential, ensuring that alternative AI implementations are loaded only when needed, minimizing memory consumption and startup times. Resource pooling strategies allow multiple degradation layers to share computational resources efficiently, reducing overall system overhead while maintaining responsiveness.
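Lazy loading of fallback implementations can be approximated with `functools.cached_property`, which runs a loader on first access and reuses the result afterwards. The two "models" below are trivial placeholders, and `load_log` exists only to make the loading behaviour visible.

```python
from functools import cached_property
from typing import Callable, Dict, List


class FallbackModels:
    """Holds fallback implementations that are built only when first used."""

    def __init__(self) -> None:
        self.load_log: List[str] = []  # records which loaders have run

    @cached_property
    def keyword_matcher(self) -> Dict[str, str]:
        self.load_log.append("keyword_matcher")
        return {"refund": "billing", "password": "account"}

    @cached_property
    def compact_model(self) -> Callable[[str], str]:
        # Stand-in for an expensive load, such as reading weights from disk.
        self.load_log.append("compact_model")
        return lambda text: "billing" if "invoice" in text else "general"
```

Nothing is loaded at construction time, so an application that never degrades never pays the memory or startup cost of its fallback layers.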

Dynamic resource allocation algorithms must consider the computational requirements of different AI implementations and adjust system resources accordingly. This might involve dynamically scaling cloud infrastructure based on the complexity of active AI features, or intelligently distributing processing between edge devices and cloud services based on network conditions and computational requirements. These optimization strategies ensure that system performance remains optimal across different operational scenarios.

Model quantization and compression techniques play crucial roles in Progressive Enhancement AI systems, enabling the deployment of lightweight versions of complex AI models that can serve as intermediate degradation layers. These compressed models provide a middle ground between full-featured AI implementations and basic fallback systems, offering improved functionality while remaining computationally efficient enough to run under resource-constrained conditions.
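To make the idea concrete, here is a toy symmetric 8-bit quantizer written in plain Python. It is a simplified illustration of the general technique, not the scheme of any particular framework: real toolchains quantize per tensor or per channel, often with calibration data.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    return [round(w / scale) for w in weights], scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from the integer representation."""
    return [q * scale for q in quantized]
```

Each weight now needs one byte instead of four (for 32-bit floats), at the cost of a rounding error of at most half the scale per weight. That trade is what lets a compressed model sit between the full model and a rule-based baseline.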

The integration of edge computing capabilities enhances Progressive Enhancement AI systems by providing local processing options that can serve as fallbacks when cloud-based AI services become unavailable. Edge deployment strategies must consider the trade-offs between model complexity and local processing capabilities, creating optimized implementations that provide maximum functionality within the constraints of edge hardware.

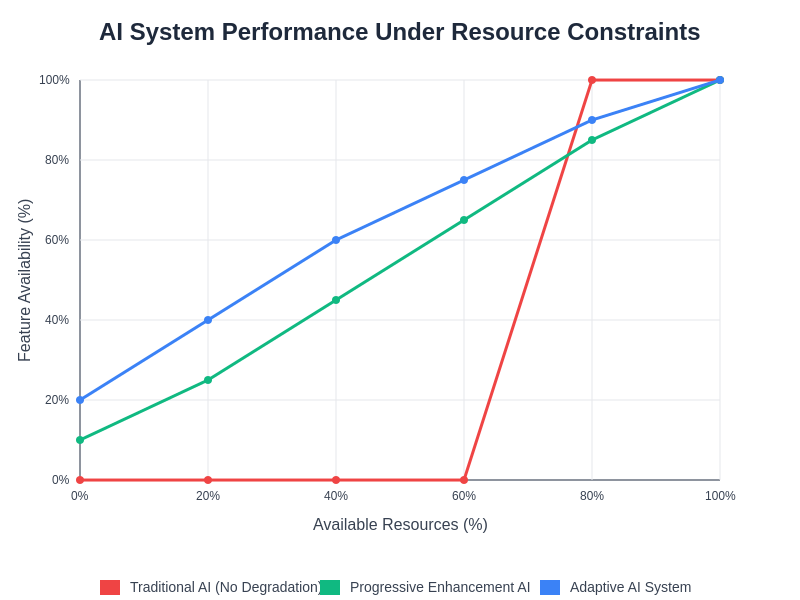

The relationship between available system resources and AI feature availability demonstrates how Progressive Enhancement AI systems adapt functionality to match operational conditions, ensuring optimal performance across diverse deployment scenarios.

Testing and Validation Strategies

The testing methodologies for Progressive Enhancement AI systems must encompass not only the functionality of individual AI components but also the behavior of degradation mechanisms and transition between different implementation layers. Chaos engineering principles become particularly valuable, where controlled failures are introduced to verify that degradation mechanisms function correctly and that user experience remains acceptable under various failure conditions.
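A small fault-injection harness shows the chaos-engineering idea in miniature. The wrapper, the failure rate, and the request loop are all illustrative assumptions; dedicated chaos tooling operates at the infrastructure level rather than inside a single process.

```python
import random
from typing import Callable, Dict


def inject_faults(func: Callable[[str], str], failure_rate: float,
                  rng: random.Random) -> Callable[[str], str]:
    """Wrap func so that it fails with the given probability."""
    def wrapper(query: str) -> str:
        if rng.random() < failure_rate:
            raise ConnectionError("injected fault")
        return func(query)
    return wrapper


def serve(query: str, primary: Callable[[str], str],
          fallback: Callable[[str], str]) -> str:
    try:
        return primary(query)
    except ConnectionError:
        return fallback(query)


def run_experiment(requests: int = 1000, failure_rate: float = 0.3,
                   seed: int = 7) -> Dict[str, int]:
    """Count how many requests each layer served under injected failures."""
    rng = random.Random(seed)  # seeded so the experiment is repeatable
    primary = inject_faults(lambda q: f"model:{q}", failure_rate, rng)
    counts = {"model": 0, "rules": 0}
    for i in range(requests):
        source = serve(f"q{i}", primary, lambda q: f"rules:{q}").split(":", 1)[0]
        counts[source] += 1
    return counts
```

The key assertion in such a test is that every request receives some answer: the two counts should always sum to the number of requests, regardless of the failure rate.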

Load testing strategies must simulate not only high traffic volumes but also resource constraint scenarios that would trigger feature degradation. These tests should verify that degradation occurs smoothly and transparently, without causing user confusion or system instability. Performance benchmarking must measure not only the speed and accuracy of individual AI implementations but also the overhead and latency associated with degradation mechanisms and fallback systems.

User experience testing becomes crucial in validating Progressive Enhancement AI implementations, ensuring that feature degradation occurs in ways that are either transparent to users or clearly communicated when transparency is not possible. A/B testing frameworks should be designed to evaluate user satisfaction and engagement across different degradation scenarios, providing insights into the effectiveness of different fallback strategies and the impact of reduced AI functionality on overall user experience.

Automated testing frameworks must be capable of simulating diverse operational conditions, including network failures, resource constraints, and service outages, to verify that degradation mechanisms function correctly across all potential scenarios. These testing frameworks should also validate the accuracy and appropriateness of AI responses across different implementation layers, ensuring that degraded functionality still provides value to users.

Security and Privacy Considerations

The security implications of Progressive Enhancement AI systems extend beyond traditional application security to encompass the unique challenges associated with multiple AI implementations and dynamic feature degradation. Each degradation layer must be secured independently while maintaining consistent security policies across all implementation levels. This requires careful consideration of data access patterns, model security, and the potential for information leakage between different AI implementations.

Privacy considerations become more complex in Progressive Enhancement AI systems where user data might be processed by different AI implementations with varying privacy capabilities. Cloud-based AI services might offer advanced privacy protection features that are not available in simpler fallback implementations, requiring careful design of data handling policies that maintain privacy standards even during degradation scenarios. User consent mechanisms must account for the possibility of data being processed by different AI systems based on runtime conditions.
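One possible way to encode consent-aware degradation is to have each layer declare the consent scopes it needs and to filter the fallback chain accordingly. The layer names and scope strings here are invented for illustration and do not correspond to any regulatory vocabulary.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Set


@dataclass(frozen=True)
class Layer:
    name: str
    required_consents: FrozenSet[str]  # scopes the user must have granted


# Ordered from most to least capable; scopes are hypothetical.
LAYERS = [
    Layer("cloud_personalized", frozenset({"cloud_processing", "profiling"})),
    Layer("on_device_model", frozenset({"profiling"})),
    Layer("generic_rules", frozenset()),
]


def permitted_layers(granted: Set[str]) -> List[str]:
    """Return the layers, in fallback order, that the user's consent covers."""
    return [layer.name for layer in LAYERS if layer.required_consents <= granted]
```

Filtering before routing ensures that a fallback triggered by runtime conditions can never send data to a layer the user has not agreed to.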

Model security becomes particularly important when multiple AI implementations are deployed concurrently, as attackers might attempt to exploit differences between implementations or target specific degradation layers that might have weaker security measures. Security monitoring must encompass all degradation layers and detect attempts to manipulate system behavior through targeted attacks on specific AI implementations.

Data governance frameworks must account for the complexity of Progressive Enhancement AI systems, ensuring that data handling policies are consistent across all implementation layers while accommodating the different capabilities and requirements of various AI approaches. This includes establishing clear guidelines for data retention, processing limitations, and user control mechanisms that function correctly regardless of which degradation layer is active.

Monitoring and Observability

The observability requirements for Progressive Enhancement AI systems encompass traditional application monitoring extended with AI-specific metrics that provide insights into model performance, degradation patterns, and user impact. Key performance indicators must include not only response times and error rates but also metrics such as degradation frequency, fallback success rates, and user satisfaction across different implementation layers.
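Two of the indicators named above, degradation frequency and fallback success rate, can be derived from simple per-layer counters. The definitions used here are assumptions: degradation frequency is the share of requests not handled by the primary layer, and fallback success rate is the share of those requests that were answered successfully.

```python
from collections import Counter
from typing import Optional


class DegradationMetrics:
    def __init__(self, primary_layer: str = "full_model") -> None:
        self.primary_layer = primary_layer
        self.requests: Counter = Counter()   # requests routed to each layer
        self.successes: Counter = Counter()  # requests each layer answered

    def record(self, layer: str, succeeded: bool) -> None:
        self.requests[layer] += 1
        if succeeded:
            self.successes[layer] += 1

    def degradation_frequency(self) -> float:
        total = sum(self.requests.values())
        if total == 0:
            return 0.0
        return 1 - self.requests[self.primary_layer] / total

    def fallback_success_rate(self) -> Optional[float]:
        fallback_requests = sum(
            n for layer, n in self.requests.items() if layer != self.primary_layer)
        if fallback_requests == 0:
            return None  # no fallback traffic yet, so the rate is undefined
        fallback_successes = sum(
            n for layer, n in self.successes.items() if layer != self.primary_layer)
        return fallback_successes / fallback_requests
```

In practice these counters would be exported to whatever metrics system the team already uses rather than kept in process memory.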

Real-time monitoring dashboards must provide comprehensive visibility into system health across all degradation layers, enabling operations teams to identify potential issues before they trigger unplanned degradation. These monitoring systems should track resource utilization patterns, AI model performance metrics, and the frequency and duration of different degradation scenarios, providing insights that inform system optimization and capacity planning decisions.

Alerting mechanisms must be sophisticated enough to distinguish between planned degradation scenarios and actual system failures, ensuring that operations teams are notified appropriately without being overwhelmed by alerts related to normal degradation behavior. Alert thresholds should consider the cascading nature of Progressive Enhancement AI systems, where issues in one layer might trigger degradation in others, requiring intelligent correlation and suppression mechanisms.
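A rough sketch of this filtering: each degradation event carries a reason and, if it was triggered by another layer, a pointer to that layer. Planned reasons and cascaded events are suppressed so that only root-cause failures page the operations team. The reason strings and the event shape are assumptions made for the example.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class DegradationEvent:
    layer: str
    reason: str                      # e.g. "budget_exceeded", "dependency_error"
    caused_by: Optional[str] = None  # upstream layer, if this event was cascaded


# Reasons treated as normal, designed-in degradation (assumed list).
PLANNED_REASONS = {"budget_exceeded", "maintenance"}


def events_to_page(events: List[DegradationEvent]) -> List[DegradationEvent]:
    """Keep only unplanned, root-cause events."""
    return [
        event for event in events
        if event.reason not in PLANNED_REASONS and event.caused_by is None
    ]
```

Suppressed events should still be logged, since their frequency is itself a signal for capacity planning.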

Log aggregation and analysis become more complex in Progressive Enhancement AI systems where multiple AI implementations might process the same user requests under different conditions. Log correlation mechanisms must be capable of tracking user journeys across different implementation layers, providing comprehensive insights into user experience and system behavior that inform ongoing optimization efforts.

Comprehensive monitoring of Progressive Enhancement AI systems requires tracking performance metrics across all degradation layers, providing operations teams with the visibility needed to ensure optimal system performance and user experience.

Future Directions and Emerging Trends

The evolution of Progressive Enhancement AI is being driven by advances in edge computing, federated learning, and adaptive AI architectures that can dynamically adjust their behavior based on environmental conditions. Emerging trends include the development of AI models that are specifically designed for graceful degradation, with built-in mechanisms for reducing complexity while maintaining core functionality. These models represent a shift from retrofitting existing AI systems with degradation capabilities to designing AI implementations that are inherently adaptive and resilient.

Federated learning approaches are enabling more sophisticated Progressive Enhancement AI implementations where models can be partially updated or adapted based on local conditions and requirements. This allows for more personalized degradation strategies that consider not only technical constraints but also user preferences and usage patterns. The integration of federated learning with Progressive Enhancement AI creates systems that can learn and adapt their degradation strategies over time, improving efficiency and user experience through continuous optimization.

Automated model optimization and compression techniques are advancing rapidly, enabling the creation of model hierarchies where different versions of the same AI capability can be deployed at different complexity levels. These advances reduce the manual effort required to create effective degradation layers while ensuring that each layer provides optimal functionality within its resource constraints. Machine learning approaches to degradation strategy optimization are emerging, where systems can learn the most effective degradation patterns based on historical performance and user feedback.

The integration of Progressive Enhancement AI with emerging technologies such as 5G networks, advanced edge computing platforms, and next-generation mobile devices is creating new opportunities for more sophisticated and responsive degradation strategies. These technological advances enable more dynamic and granular degradation decisions that can adapt in real-time to changing network conditions, device capabilities, and user requirements.

The future of Progressive Enhancement AI lies in the development of truly adaptive systems that can not only degrade gracefully but also enhance functionality dynamically as resources become available. This bidirectional adaptability represents the next evolution of resilient AI systems, where functionality can scale both up and down based on changing conditions while maintaining optimal user experience and resource utilization across all operational scenarios.
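Bidirectional adaptation can be sketched as a controller that steps down a tier when resource headroom is low and steps back up when it recovers. The gap between the two thresholds (hysteresis) is a design choice assumed here to keep the system from flapping between tiers; the tier names and threshold values are illustrative.

```python
from typing import List

TIERS: List[str] = ["rules", "compact_model", "full_model"]


class TierController:
    """Moves one tier at a time in either direction based on resource headroom."""

    def __init__(self, downgrade_below: float = 0.2, upgrade_above: float = 0.5) -> None:
        self.downgrade_below = downgrade_below
        self.upgrade_above = upgrade_above
        self.index = len(TIERS) - 1  # start at the most capable tier

    def update(self, headroom: float) -> str:
        """headroom is the fraction of resources still free, from 0.0 to 1.0."""
        if headroom < self.downgrade_below and self.index > 0:
            self.index -= 1
        elif headroom > self.upgrade_above and self.index < len(TIERS) - 1:
            self.index += 1
        # Between the two thresholds the current tier is kept unchanged.
        return TIERS[self.index]
```

Feeding the controller a stream of headroom readings shows the tier falling under pressure and climbing again as resources free up.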

Conclusion

Progressive Enhancement AI represents a fundamental shift in how we approach the design and deployment of machine learning systems, moving from brittle, all-or-nothing implementations to resilient, adaptive architectures that provide value across a spectrum of operational conditions. The principles and practices outlined here provide a foundation for building AI systems that handle failure gracefully, adapt to resource constraints, and maintain user satisfaction even under challenging conditions. As artificial intelligence becomes increasingly integral to modern applications, graceful degradation becomes not just a technical advantage but a business necessity that determines the reliability and trustworthiness of AI-powered services. The investment in Progressive Enhancement AI architectures pays off through improved user experience, fewer system failures, and the ability to serve diverse user bases across varying technological capabilities and constraints.

Disclaimer

This article is for informational purposes only and does not constitute professional advice. The implementation of Progressive Enhancement AI systems requires careful consideration of specific technical requirements, business constraints, and user needs. Readers should conduct thorough testing and validation of any Progressive Enhancement AI implementations in their specific environments. The effectiveness of these approaches may vary depending on application requirements, technical infrastructure, and operational constraints.