The rapid adoption of artificial intelligence systems in production has increased the need for testing methods that can validate the complex, probabilistic behavior of modern AI applications. Traditional testing approaches work well for deterministic software, but they often fall short for machine learning models and AI-driven applications, whose outputs depend on learned, non-linear decision boundaries and on context rather than on explicitly coded rules.

Property-based testing addresses these challenges by focusing on the invariant properties that an AI system should maintain regardless of the specific input. Rather than testing predetermined scenarios with fixed inputs and expected outputs, a property-based test generates many diverse test cases automatically (often hundreds or thousands per property) and explores edge cases and boundary conditions that human testers might not consider, broadening validation coverage in a way that scales better with system complexity than hand-written examples.

The integration of property-based testing into AI development workflows represents a fundamental shift toward more robust, reliable, and trustworthy artificial intelligence applications.

Understanding Property-Based Testing Fundamentals

Property-based testing represents a shift from example-based testing to specification-based validation: instead of writing individual test cases with specific inputs and expected outputs, developers define high-level properties that should hold for all inputs within a given domain. The framework then generates test cases automatically to sample large input spaces, uncovering edge cases and boundary conditions that would be impractical to identify through manual test case creation. Because inputs are sampled rather than enumerated, a passing property is evidence that the property holds, not a proof.

The core philosophy underlying property-based testing rests on the mathematical concept of invariants, which are conditions or relationships that remain consistent regardless of the specific values or states involved in the computation. In the context of AI systems, these invariants might include properties such as output consistency under semantically equivalent inputs, monotonicity relationships between input features and predictions, or conservation laws that must be preserved during model inference.

The automated nature of property-based testing makes it well-suited for AI systems, where input spaces are too large and high-dimensional to enumerate by hand. By defining properties as mathematical relationships and logical constraints rather than specific examples, developers can write test suites that keep pace with system complexity while still covering critical system behaviors.
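To make the contrast with example-based testing concrete, here is a minimal sketch that uses only the Python standard library rather than a dedicated framework. The function names (`softmax`, `random_logits`, `is_probability_vector`, `run_property`) are illustrative choices, not part of any library. The property checked is that a softmax output is always a valid probability vector, whatever the logits are.

```python
import math
import random

def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def random_logits(rng):
    """Generator: a list of 1 to 10 logits drawn from a wide range."""
    size = rng.randint(1, 10)
    return [rng.uniform(-50.0, 50.0) for _ in range(size)]

def is_probability_vector(probs):
    """Property: every entry lies in [0, 1] and the entries sum to 1."""
    in_range = all(0.0 <= p <= 1.0 for p in probs)
    return in_range and math.isclose(sum(probs), 1.0, abs_tol=1e-9)

def run_property(num_cases=500, seed=0):
    """Return the first counterexample found, or None if every case passes."""
    rng = random.Random(seed)
    for _ in range(num_cases):
        logits = random_logits(rng)
        if not is_probability_vector(softmax(logits)):
            return logits
    return None

print("counterexample:", run_property())
```

An example-based test would assert one or two hand-picked outputs; the property version never states an expected output at all, only the relationship every output must satisfy.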

The Unique Challenges of Testing AI Systems

Artificial intelligence systems present a unique set of testing challenges that traditional software quality assurance methodologies struggle to address effectively. Unlike conventional software applications that follow deterministic execution paths and produce predictable outputs for given inputs, AI systems exhibit probabilistic behaviors, context-sensitive responses, and emergent properties that arise from complex interactions between multiple components and learned representations.

The black-box nature of many modern AI models, particularly deep neural networks, creates additional complications for testing and validation efforts. These systems often make decisions based on learned patterns that are not explicitly programmed and may not be easily interpretable by human developers, making it difficult to define appropriate test cases or verify the correctness of model outputs in ambiguous scenarios.

Furthermore, AI systems frequently operate in dynamic environments where input distributions may shift over time, requiring testing methodologies that can validate system robustness across diverse operating conditions and data distributions that may differ significantly from training data. The temporal aspects of AI system behavior, including concept drift and model degradation, introduce additional dimensions of complexity that must be addressed through comprehensive testing strategies.

Core Principles of Property-Based Testing for AI

The application of property-based testing to AI systems requires a deep understanding of the fundamental principles that govern intelligent behavior and the mathematical properties that should be preserved during model inference and decision-making processes. These principles serve as the foundation for developing effective property-based test suites that can validate AI system correctness across diverse operating conditions and input scenarios.

Symmetry and invariance properties form the cornerstone of property-based testing for AI systems. These properties assert that certain transformations of the input should not change the system's output. For example, an image classifier should usually give the same prediction for slightly rotated, translated, or scaled versions of the same image, provided the transformation preserves the features relevant to the classification task. That proviso matters: rotating a handwritten 6 by 180 degrees yields a 9, so the set of allowed transformations has to be chosen per task.
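As a small illustration of an invariance property, the sketch below uses a deliberately simple stand-in for an image model: a hypothetical `classify_brightness` function that labels an image by its total pixel intensity. Rotation and flipping rearrange pixels without changing them, so the label must not change. A real classifier would be checked the same way, but with transformations chosen for its task and usually with a tolerance.

```python
import random

def classify_brightness(image):
    """Toy stand-in for an image model: label by total pixel intensity."""
    pixels = [p for row in image for p in row]
    return "bright" if 2 * sum(pixels) > 255 * len(pixels) else "dark"

def rotate_90(image):
    """Rotate a rectangular grid clockwise by 90 degrees."""
    return [list(row) for row in zip(*image[::-1])]

def flip_horizontal(image):
    """Mirror each row left to right."""
    return [row[::-1] for row in image]

def random_image(rng):
    """Generator: a small grid of 8-bit pixel values."""
    height, width = rng.randint(1, 6), rng.randint(1, 6)
    return [[rng.randint(0, 255) for _ in range(width)] for _ in range(height)]

def find_invariance_violation(num_cases=300, seed=1):
    """Return (image, transform name) for the first violation, else None."""
    rng = random.Random(seed)
    for _ in range(num_cases):
        image = random_image(rng)
        expected = classify_brightness(image)
        for transform in (rotate_90, flip_horizontal):
            if classify_brightness(transform(image)) != expected:
                return image, transform.__name__
    return None

print("violation:", find_invariance_violation())
```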

Compositional properties define how AI systems should behave when processing complex inputs that can be decomposed into simpler components or when combining multiple predictions or decisions into coherent outputs. These properties are particularly important for natural language processing systems, where the meaning of complex sentences should relate systematically to the meanings of constituent phrases and words, and for multi-modal AI systems that must integrate information from diverse sources.

Monotonicity and consistency properties ensure that AI systems exhibit logical and predictable responses to changes in input parameters or environmental conditions. These properties are crucial for establishing trust and reliability in AI-driven decision-making systems, particularly in domains where logical consistency and predictable behavior are essential for user acceptance and regulatory compliance.
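A monotonicity property can be sketched as follows. The scoring rule `credit_score` is a hypothetical stand-in for a trained model, and the expectation that a higher income never lowers the score is an assumed business requirement, not something every model must satisfy.

```python
import random

def credit_score(income, missed_payments):
    """Hypothetical scoring rule standing in for a trained model."""
    income_points = min(550, income // 200)
    penalty = 40 * missed_payments
    return max(300, 300 + income_points - penalty)

def find_monotonicity_violation(num_cases=500, seed=2):
    """Raising income with everything else fixed must not lower the score."""
    rng = random.Random(seed)
    for _ in range(num_cases):
        income = rng.randint(0, 200_000)
        raise_amount = rng.randint(0, 50_000)
        missed = rng.randint(0, 10)
        before = credit_score(income, missed)
        after = credit_score(income + raise_amount, missed)
        if after < before:
            return income, raise_amount, missed
    return None

print("violation:", find_monotonicity_violation())
```

The test compares two related predictions instead of checking either one against a known correct score, which is what makes it usable when no ground truth is available.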

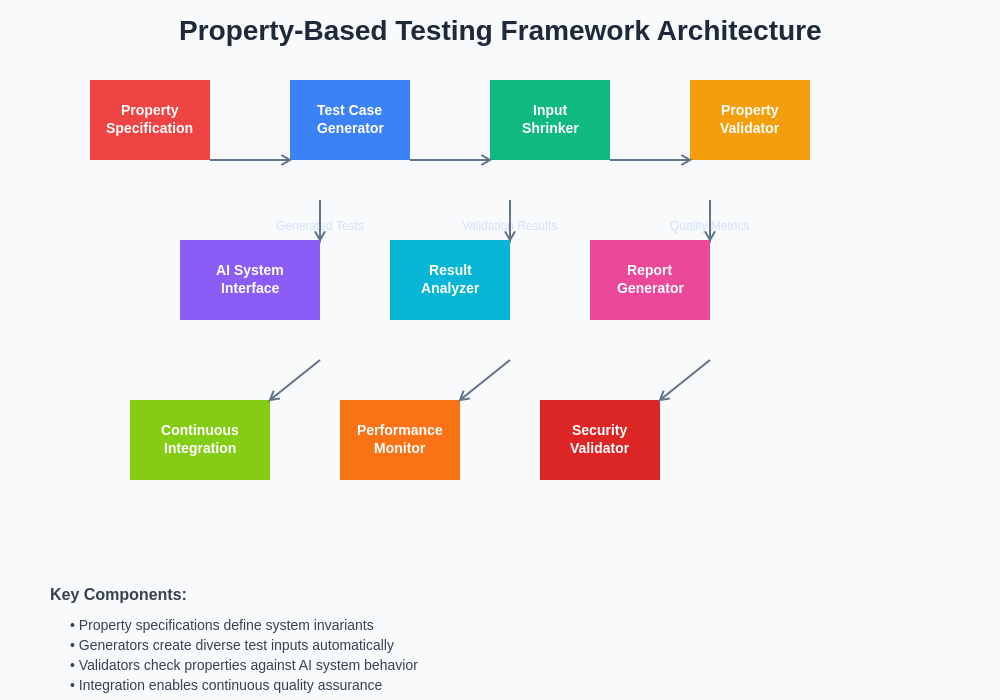

Implementing Property-Based Testing Frameworks

The practical implementation of property-based testing for AI systems requires sophisticated frameworks and tools that can generate diverse test cases, execute complex validation logic, and provide meaningful feedback about system behavior across extensive input spaces. Modern property-based testing frameworks such as Hypothesis for Python, QuickCheck for Haskell, and fast-check for JavaScript provide the foundational infrastructure needed to implement comprehensive testing strategies for AI applications.

These frameworks typically generate test cases randomly, with heuristics that bias generation toward values likely to expose failures, such as empty collections, boundary numbers, and special floating-point values. When a property fails, shrinking algorithms automatically reduce the failing input to a smaller example that still triggers the failure, which simplifies debugging and issue resolution considerably.
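Shrinking is easier to picture with a toy version. The sketch below is a simple greedy shrinker for lists of integers, written for illustration only; real frameworks use more elaborate strategies, and the names `shrink_candidates` and `shrink` are assumptions of this example.

```python
def shrink_candidates(values):
    """Yield simpler variants of a list of ints, simplest ideas first."""
    # 1. Drop one element at a time.
    for i in range(len(values)):
        yield values[:i] + values[i + 1:]
    # 2. Move one element toward zero: first by halving, then by one step.
    for i, v in enumerate(values):
        if v == 0:
            continue
        halved = int(v / 2)  # int() truncates toward zero
        stepped = v - 1 if v > 0 else v + 1
        for smaller in (halved, stepped):
            yield values[:i] + [smaller] + values[i + 1:]

def shrink(values, fails):
    """Greedily shrink a failing input until no simpler variant still fails."""
    assert fails(values), "shrinking must start from a failing input"
    current = values
    improved = True
    while improved:
        improved = False
        for candidate in shrink_candidates(current):
            if fails(candidate):
                current = candidate
                improved = True
                break
    return current

def sum_reaches_100(values):
    """Stand-in for 'the property under test fails on this input'."""
    return sum(values) >= 100

print(shrink([37, 0, 250, 12, 5], sum_reaches_100))  # -> [100]
```

The five-element failing input collapses to the single value sitting exactly on the failure boundary, which is far easier to reason about than the original.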

The architecture of effective property-based testing frameworks for AI systems must accommodate the unique requirements of machine learning workflows, including support for tensor operations, probabilistic outputs, and complex data structures that characterize modern AI applications. Integration with popular machine learning libraries and frameworks enables seamless incorporation of property-based testing into existing development and validation pipelines.

The implementation process typically begins with the identification of key system properties that should be preserved across different operating conditions, followed by the development of generators that can produce diverse, realistic test inputs representative of expected production scenarios. Property verification functions encode the mathematical relationships and logical constraints that define correct system behavior, while reporting mechanisms provide detailed insights into system performance and failure modes.
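The steps described above (pick a property, write a generator, encode the check, report results) can be sketched as a tiny harness. Everything here is a standard-library illustration under assumed names (`PropertyReport`, `check_property`, `min_max_normalize`); a framework such as Hypothesis would replace the hand-written loop.

```python
import random
from dataclasses import dataclass, field

@dataclass
class PropertyReport:
    """Reporting: what ran and which generated cases failed."""
    name: str
    cases_run: int = 0
    failures: list = field(default_factory=list)

    @property
    def passed(self):
        return not self.failures

def check_property(name, generator, prop, num_cases=200, seed=0):
    """Run `prop` on `num_cases` generated inputs and collect failures."""
    rng = random.Random(seed)
    report = PropertyReport(name)
    for _ in range(num_cases):
        case = generator(rng)
        report.cases_run += 1
        if not prop(case):
            report.failures.append(case)
    return report

def min_max_normalize(values):
    """System under test: a hypothetical feature-scaling step."""
    low, high = min(values), max(values)
    if high == low:
        return [0.0 for _ in values]
    return [(v - low) / (high - low) for v in values]

def feature_vector(rng):
    """Generator: feature vectors of varying length and scale."""
    return [rng.uniform(-1e6, 1e6) for _ in range(rng.randint(1, 20))]

def stays_in_unit_interval(values):
    """Property: normalized features always land in [0, 1]."""
    return all(0.0 <= x <= 1.0 for x in min_max_normalize(values))

report = check_property("normalized features in [0, 1]",
                        feature_vector, stays_in_unit_interval)
print(report.name, report.cases_run, report.passed)
```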

Advanced Property Specification Techniques

The specification of meaningful properties for AI systems requires sophisticated techniques that can capture the nuanced behaviors and complex relationships that characterize intelligent systems. Advanced property specification goes beyond simple input-output relationships to encompass higher-order properties that describe how AI systems should respond to systematic variations in input conditions, environmental parameters, and operational contexts.

Differential testing represents one powerful approach to property specification, where multiple implementations of similar functionality are compared to identify discrepancies that might indicate bugs or inconsistencies in AI system behavior. This technique is particularly valuable for validating the correctness of optimized model implementations, cross-platform compatibility, and consistency across different versions or configurations of AI systems.
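A minimal differential test might look like the sketch below. It compares a straightforward two-pass variance with a streaming (Welford-style) variant, standing in for the "reference versus optimized implementation" situation; both functions and the tolerance values are choices made for this example.

```python
import math
import random

def variance_reference(values):
    """Two-pass population variance: simple and easy to trust."""
    if not values:
        return 0.0
    mean = sum(values) / len(values)
    return sum((x - mean) ** 2 for x in values) / len(values)

def variance_streaming(values):
    """Single-pass (Welford-style) population variance: the 'optimized' path."""
    count, mean, m2 = 0, 0.0, 0.0
    for x in values:
        count += 1
        delta = x - mean
        mean += delta / count
        m2 += delta * (x - mean)
    return m2 / count if count else 0.0

def find_discrepancy(num_cases=500, seed=3):
    """Return the first input where the two implementations disagree."""
    rng = random.Random(seed)
    for _ in range(num_cases):
        values = [rng.uniform(-100.0, 100.0) for _ in range(rng.randint(0, 50))]
        expected = variance_reference(values)
        actual = variance_streaming(values)
        # Floating-point results are compared with a tolerance, not equality.
        if not math.isclose(expected, actual, rel_tol=1e-9, abs_tol=1e-9):
            return values
    return None

print("discrepancy:", find_discrepancy())
```

Neither implementation is treated as ground truth on its own; any disagreement beyond the tolerance is what gets investigated.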

Metamorphic testing techniques focus on relationships between multiple test executions rather than the absolute correctness of individual outputs, making them particularly well-suited for AI systems where ground truth may be ambiguous or expensive to obtain. These techniques define transformations of input data that should produce predictable changes in system outputs, enabling comprehensive validation even when exact expected outputs are unknown or difficult to specify.
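As an illustration of a metamorphic relation, consider a toy nearest-centroid classifier (a hypothetical stand-in, with integer coordinates so that comparisons are exact). We do not know which label is correct for a random query, but we do know that translating the query and every centroid by the same offset must leave the predicted label unchanged.

```python
import random

def nearest_centroid(query, centroids):
    """Return the label whose centroid is closest to the query (squared L2)."""
    def squared_distance(label):
        return sum((q - c) ** 2 for q, c in zip(query, centroids[label]))
    # sorted() fixes the tie-breaking order so results are deterministic.
    return min(sorted(centroids), key=squared_distance)

def translate(point, offset):
    """Shift a point by an offset of the same dimension."""
    return tuple(p + o for p, o in zip(point, offset))

def find_metamorphic_violation(num_cases=300, seed=4):
    """Check: translating query and centroids together keeps the label."""
    rng = random.Random(seed)

    def point():
        return tuple(rng.randint(-100, 100) for _ in range(3))

    for _ in range(num_cases):
        centroids = {label: point() for label in ("cat", "dog", "bird")}
        query, offset = point(), point()
        source_label = nearest_centroid(query, centroids)
        shifted = {k: translate(c, offset) for k, c in centroids.items()}
        follow_up_label = nearest_centroid(translate(query, offset), shifted)
        if source_label != follow_up_label:
            return query, offset, centroids
    return None

print("violation:", find_metamorphic_violation())
```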

Statistical property specifications enable validation of probabilistic behaviors and stochastic processes that are common in AI systems. These properties might specify expected distributions of outputs, convergence behaviors of iterative algorithms, or statistical relationships between inputs and outputs that should be preserved across different operating conditions and data distributions.
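For stochastic components, a property can assert that observed frequencies stay within a statistical tolerance of the expected distribution. The sketch below checks a categorical sampler against its target probabilities using a binomial standard error; the five-sigma threshold and the sample size are assumptions chosen to make false alarms very unlikely, not recommended defaults.

```python
import math
import random

def sample_label(rng, probs):
    """Draw one label from a categorical distribution given as a dict."""
    labels = list(probs)
    weights = [probs[label] for label in labels]
    return rng.choices(labels, weights=weights, k=1)[0]

def frequencies_match(probs, sampler=sample_label, num_samples=20_000,
                      seed=5, num_sigmas=5.0):
    """True if every empirical frequency is within num_sigmas standard
    errors of its expected probability."""
    rng = random.Random(seed)
    counts = dict.fromkeys(probs, 0)
    for _ in range(num_samples):
        counts[sampler(rng, probs)] += 1
    for label, p in probs.items():
        std_err = math.sqrt(p * (1.0 - p) / num_samples)
        if abs(counts[label] / num_samples - p) > num_sigmas * std_err:
            return False
    return True

target = {"a": 0.5, "b": 0.3, "c": 0.2}
print("sampler matches target:", frequencies_match(target))
```

A wide tolerance trades sensitivity for stability: the test will rarely fail by chance, but it will also miss small distortions in the distribution.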

Test Case Generation Strategies

Effective test case generation for AI systems requires sophisticated strategies that can produce diverse, realistic inputs while efficiently exploring the vast input spaces characteristic of modern artificial intelligence applications. Random generation techniques, while simple to implement, often fail to produce test cases that adequately exercise the complex decision boundaries and edge cases that are most likely to reveal system failures in AI applications.

Guided generation strategies leverage domain knowledge and system understanding to produce more targeted test cases that are likely to reveal specific types of failures or validate particular aspects of system behavior. These strategies might incorporate knowledge about input data distributions, system architecture, or problem domain characteristics to generate test cases that are both realistic and challenging for the AI system under test.

Adversarial generation techniques specifically target the identification of inputs that are most likely to cause system failures or expose vulnerabilities in AI system behavior. These techniques draw inspiration from adversarial machine learning research to generate inputs that systematically explore the boundaries of system robustness and reliability.
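For a linear classifier, the worst-case perturbation inside an L-infinity ball has a closed form: move every feature by epsilon against the sign of its weight. The sketch below uses that fact as a simple adversarial generator. The weights are hypothetical, and this is a special case in the spirit of sign-gradient attacks, not a general attack on arbitrary models.

```python
import random

WEIGHTS = [2, -3, 1]  # hypothetical integer weights of a linear classifier
BIAS = -1

def margin(x):
    """Signed distance-like score; positive means class 1."""
    return sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS

def predict(x):
    return 1 if margin(x) > 0 else 0

def worst_case_perturbation(x, epsilon):
    """Shift each feature by epsilon in the direction that pushes the
    margin toward the opposite class."""
    direction = -1 if predict(x) == 1 else 1
    def sign(w):
        return (w > 0) - (w < 0)
    return [xi + direction * epsilon * sign(w) for xi, w in zip(x, WEIGHTS)]

def find_adversarial_example(epsilon=1, num_cases=500, seed=6):
    """Search random inputs for one whose label flips under the attack."""
    rng = random.Random(seed)
    for _ in range(num_cases):
        x = [rng.randint(-20, 20) for _ in WEIGHTS]
        attacked = worst_case_perturbation(x, epsilon)
        if predict(attacked) != predict(x):
            return x, attacked
    return None

print("adversarial pair:", find_adversarial_example())
```

Inputs close to the decision boundary are expected to flip, so a finding here is not automatically a bug; the useful property is usually that inputs with a margin above some threshold stay stable.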

Coverage-guided generation approaches monitor system behavior during test execution and adjust generation strategies to ensure comprehensive exploration of different execution paths, decision branches, or feature spaces. These techniques are particularly valuable for AI systems with complex internal architectures where achieving adequate coverage of system components requires sophisticated generation strategies.

Validating Machine Learning Model Properties

Machine learning models exhibit unique characteristics that require specialized approaches to property-based testing, focusing on the mathematical properties and behavioral invariants that should be preserved during model training, inference, and deployment processes. The validation of these properties is essential for ensuring that machine learning systems behave correctly and reliably in production environments.

Robustness properties define how machine learning models should respond to various types of input perturbations, including noise injection, adversarial modifications, and distribution shifts that commonly occur in real-world deployment scenarios. These properties ensure that models maintain acceptable performance levels even when operating conditions differ from training environments or when inputs are subject to natural variations or malicious manipulations.

Fairness and bias properties address the critical need for machine learning systems to make decisions that are equitable across different demographic groups, protected classes, or sensitive attributes. Property-based testing can systematically validate that model predictions do not exhibit systematic biases or discriminatory patterns that could lead to unfair or harmful outcomes in real-world applications.
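One fairness property that is simple to automate is a counterfactual check: flipping only the protected attribute must not change the decision. The sketch below applies it to a hypothetical `approve_loan` rule. Note the limitation: this detects direct dependence on the attribute, not indirect dependence through correlated features.

```python
import random

def approve_loan(applicant):
    """Hypothetical decision rule; it never reads applicant['group']."""
    affordable = applicant["income"] >= 3 * applicant["monthly_debt"]
    return affordable and applicant["years_employed"] >= 1

def random_applicant(rng):
    """Generator: applicants with a binary protected attribute."""
    return {
        "income": rng.randint(0, 20_000),
        "monthly_debt": rng.randint(0, 5_000),
        "years_employed": rng.randint(0, 40),
        "group": rng.choice(["A", "B"]),
    }

def find_counterfactual_violation(model, num_cases=500, seed=7):
    """Return an applicant whose decision changes when only the group flips."""
    rng = random.Random(seed)
    for _ in range(num_cases):
        applicant = random_applicant(rng)
        other_group = "B" if applicant["group"] == "A" else "A"
        flipped = dict(applicant, group=other_group)
        if model(applicant) != model(flipped):
            return applicant
    return None

print("violation:", find_counterfactual_violation(approve_loan))
```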

Interpretability and explainability properties ensure that machine learning models provide reasonable justifications for their decisions and that these explanations are consistent with expected domain knowledge and logical reasoning principles. These properties are particularly important for high-stakes applications where decision transparency and accountability are essential requirements.

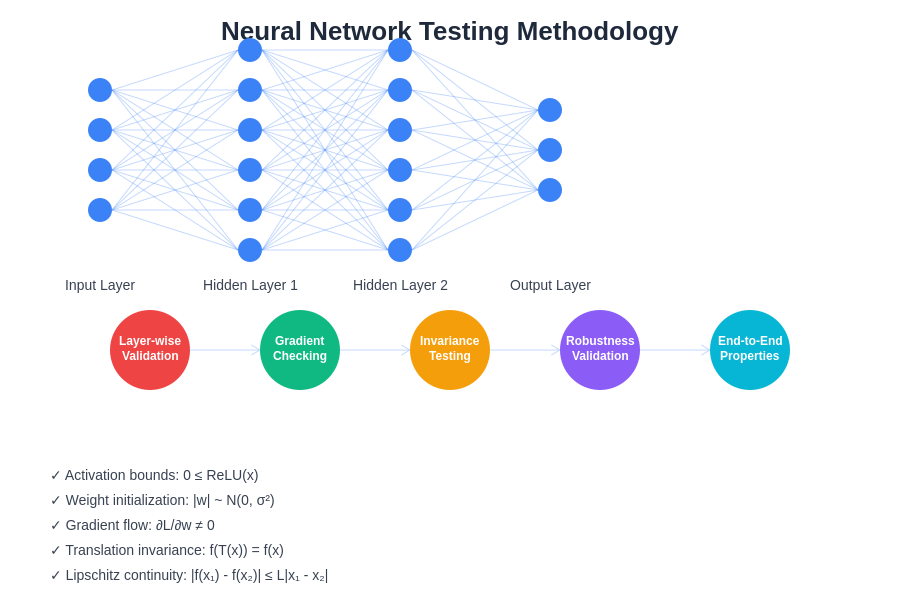

Testing Neural Network Architectures

Neural networks present unique testing challenges due to their complex, hierarchical architectures and the emergent behaviors that arise from the interaction of multiple layers and non-linear activation functions. Property-based testing of neural networks requires specialized techniques that can validate both the correctness of individual network components and the overall system-level behaviors that emerge from their integration.

Layer-wise property validation focuses on the mathematical properties that should be preserved at each level of the neural network hierarchy, including gradient flow properties during backpropagation, activation distribution characteristics, and weight update behaviors during training. These properties help ensure that individual network components function correctly and contribute appropriately to overall system performance.

Architecture-specific properties address the characteristics of particular neural network architectures, such as translation equivariance of convolutional layers (shifting the input shifts the feature map, and pooling can turn this into approximate invariance), sequential processing capabilities in recurrent networks, or attention mechanisms in transformer architectures. Validating these properties ensures that specialized network components function as intended and provide the expected computational capabilities.
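For convolutional layers the precise property is translation equivariance: shifting the input shifts the output by the same amount, and invariance only appears after a pooling step. The sketch below checks both on a pure-Python circular 1D convolution with integer values, so equality is exact. The helper names are illustrative, and circular padding is an assumption that makes the property hold without edge effects.

```python
import random

def circular_conv1d(signal, kernel):
    """1D cross-correlation with wrap-around (circular) padding."""
    n = len(signal)
    return [
        sum(kernel[k] * signal[(i + k) % n] for k in range(len(kernel)))
        for i in range(n)
    ]

def shift(signal, steps):
    """Circularly shift a list to the right by `steps` positions."""
    steps %= len(signal)
    return signal[-steps:] + signal[:-steps] if steps else signal[:]

def find_equivariance_violation(num_cases=300, seed=8):
    """Check conv(shift(x)) == shift(conv(x)), and that global max pooling
    over the feature map is unchanged by the shift."""
    rng = random.Random(seed)
    for _ in range(num_cases):
        signal = [rng.randint(-9, 9) for _ in range(rng.randint(1, 12))]
        kernel = [rng.randint(-3, 3) for _ in range(rng.randint(1, 4))]
        steps = rng.randint(0, 15)
        features = circular_conv1d(signal, kernel)
        shifted_features = circular_conv1d(shift(signal, steps), kernel)
        if shifted_features != shift(features, steps):
            return signal, kernel, steps  # equivariance broken
        if max(shifted_features) != max(features):
            return signal, kernel, steps  # pooled invariance broken
    return None

print("violation:", find_equivariance_violation())
```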

End-to-end system properties validate the overall behavior of complete neural network systems, including input preprocessing, network inference, and output postprocessing components. These properties ensure that the entire system pipeline functions correctly and produces outputs that meet specified quality, performance, and reliability requirements.

Continuous Integration and Property-Based Testing

The integration of property-based testing into continuous integration and deployment pipelines requires careful consideration of computational resources, execution time constraints, and the need for reliable, reproducible test results across different execution environments. Because property-based tests draw random inputs, CI/CD pipelines need to control that randomness, typically by fixing or recording the random seed, so that a failure seen in one run can be reproduced in another.
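One common way to reconcile random generation with reproducible CI runs is to take the seed from the environment when it is provided and to log it otherwise. The sketch below shows the idea with the standard library; the variable name `PBT_SEED` is a hypothetical convention for this example, and established frameworks offer their own seed and derandomization controls.

```python
import os
import random

def resolve_seed(environ=os.environ):
    """Use PBT_SEED (hypothetical name) when set, else pick a fresh seed."""
    raw = environ.get("PBT_SEED")
    if raw is not None:
        return int(raw)
    return random.SystemRandom().randrange(2**32)

def generate_cases(seed, num_cases=5):
    """Generate test inputs deterministically from the seed."""
    rng = random.Random(seed)
    return [[rng.randint(-1000, 1000) for _ in range(3)]
            for _ in range(num_cases)]

def run_suite(environ=os.environ):
    seed = resolve_seed(environ)
    cases = generate_cases(seed)
    # Always log the seed so a failing CI run can be replayed locally.
    print(f"property suite seed={seed} cases={len(cases)}")
    return seed, cases

run_suite({"PBT_SEED": "42"})
```

With this arrangement, everyday runs still explore new inputs, while a failing run can be replayed exactly by exporting the logged seed.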

Adaptive testing strategies adjust the scope and intensity of property-based testing based on the nature of code changes, system complexity, and available computational resources. These strategies ensure that critical system properties are validated thoroughly while maintaining reasonable execution times and resource consumption in automated testing environments.

Test result analysis and reporting mechanisms must accommodate the unique characteristics of property-based testing, including the automatic generation of minimal failing examples, statistical summaries of test execution across multiple runs, and trend analysis that can identify gradual degradations in system properties over time.

Version control integration enables tracking of property specifications alongside code changes, ensuring that testing strategies evolve appropriately as systems develop and new requirements emerge. This integration also facilitates collaborative development of property-based test suites and enables systematic review and approval processes for changes to critical system properties.

Performance and Scalability Testing

Property-based testing of AI system performance and scalability requires sophisticated approaches that can validate system behavior under varying load conditions, input complexities, and resource constraints. These testing strategies must account for the inherent variability in AI system performance while identifying systematic issues that could impact production deployments.

Computational complexity properties ensure that AI systems exhibit expected algorithmic behavior as input sizes scale, identifying potential performance bottlenecks or resource consumption issues that could impact system scalability. These properties are particularly important for AI systems that must operate under strict latency or throughput requirements in production environments.
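Wall-clock timing is noisy in shared CI environments, so one option is to state complexity properties in terms of counted operations. The sketch below instruments a binary search used to bucketize a feature (a hypothetical preprocessing step) and asserts that the number of comparisons never exceeds the logarithmic bound for the input size.

```python
import random

def bucket_index(thresholds, value, counter):
    """Binary search for the first threshold >= value, counting comparisons."""
    low, high = 0, len(thresholds)
    while low < high:
        mid = (low + high) // 2
        counter["comparisons"] += 1
        if thresholds[mid] < value:
            low = mid + 1
        else:
            high = mid
    return low

def find_complexity_violation(num_cases=300, seed=9):
    """Property: comparisons <= floor(log2(n)) + 1, which equals
    n.bit_length() for n >= 1 (and 0 comparisons for n == 0)."""
    rng = random.Random(seed)
    for _ in range(num_cases):
        n = rng.randint(0, 2000)
        thresholds = sorted(rng.randint(-10_000, 10_000) for _ in range(n))
        counter = {"comparisons": 0}
        bucket_index(thresholds, rng.randint(-10_000, 10_000), counter)
        if counter["comparisons"] > n.bit_length():
            return n, counter["comparisons"]
    return None

print("violation:", find_complexity_violation())
```

If someone later replaced the binary search with a linear scan, the output would stay correct but this property would start failing on larger inputs.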

Memory usage and resource management properties validate that AI systems utilize computational resources efficiently and do not exhibit memory leaks, excessive resource consumption, or other resource management issues that could impact system stability or performance over extended operation periods.

Concurrent execution properties address the challenges of validating AI systems that must operate in multi-threaded or distributed computing environments, ensuring that parallel processing capabilities function correctly and that race conditions or synchronization issues do not impact system correctness or performance.

Security and Robustness Validation

The security implications of AI systems require specialized property-based testing approaches that can systematically validate system robustness against various types of attacks, adversarial inputs, and security threats. These validation strategies must address both traditional software security concerns and AI-specific vulnerabilities that arise from the unique characteristics of machine learning systems.

Adversarial robustness properties ensure that AI systems maintain acceptable performance and security characteristics when subjected to carefully crafted inputs designed to exploit system vulnerabilities or cause misclassification. These properties are essential for AI systems deployed in security-critical environments where adversarial attacks pose significant risks.

Input validation and sanitization properties verify that AI systems properly handle unexpected, malformed, or potentially malicious inputs without compromising system security or stability. These properties ensure that input processing pipelines function correctly and do not introduce vulnerabilities that could be exploited by attackers.
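An input-handling property can be phrased as: for any payload, the preprocessing step either returns values in the documented range or rejects the payload with the documented error type, and never fails in any other way. The sketch below assumes a hypothetical `preprocess` gate and a hand-picked list of hostile values; both are illustrations, not a complete threat model.

```python
import math
import random

def preprocess(raw):
    """Hypothetical input gate: accept a non-empty list of finite numbers
    and clip each one to [-1, 1]; reject anything else with ValueError."""
    if not isinstance(raw, list) or not raw:
        raise ValueError("expected a non-empty list")
    cleaned = []
    for item in raw:
        if isinstance(item, bool) or not isinstance(item, (int, float)):
            raise ValueError("non-numeric feature")
        if isinstance(item, float) and not math.isfinite(item):
            raise ValueError("non-finite feature")
        # Clip before converting so very large ints cannot overflow float().
        cleaned.append(float(max(-1, min(1, item))))
    return cleaned

HOSTILE = [float("nan"), float("inf"), float("-inf"), 10**400, -10**400,
           None, "1.0", "", [], {}, True, 1e308, -0.0, b"\x00"]

def random_payload(rng):
    """Generator: mostly numeric lists, salted with hostile values."""
    if rng.random() < 0.1:
        return rng.choice([None, "text", 42, [], {}])
    return [rng.choice(HOSTILE) if rng.random() < 0.3 else rng.uniform(-5, 5)
            for _ in range(rng.randint(1, 8))]

def find_validation_violation(num_cases=1000, seed=10):
    rng = random.Random(seed)
    for _ in range(num_cases):
        payload = random_payload(rng)
        try:
            result = preprocess(payload)
        except ValueError:
            continue  # a clean, documented rejection is acceptable
        except Exception as exc:  # any other exception is a finding
            return payload, repr(exc)
        if not all(-1.0 <= x <= 1.0 for x in result):
            return payload, "output out of range"
    return None

print("violation:", find_validation_violation())
```

The huge integers in `HOSTILE` are there for a reason: converting them to float directly raises `OverflowError`, which is the kind of unplanned failure this property is meant to catch.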

Privacy and data protection properties validate that AI systems appropriately handle sensitive information and do not inadvertently leak private data through model outputs, intermediate representations, or side-channel attacks. These properties are particularly important for AI systems that process personal or confidential information.

Integration with Existing Testing Frameworks

The successful adoption of property-based testing in AI development organizations requires seamless integration with existing testing frameworks, development tools, and quality assurance processes. This integration must preserve existing investments in testing infrastructure while enhancing testing capabilities with the advanced features provided by property-based testing methodologies.

Framework interoperability ensures that property-based testing tools can work effectively alongside traditional unit testing frameworks, integration testing suites, and performance testing tools. This interoperability enables organizations to adopt property-based testing incrementally without disrupting existing testing workflows or requiring complete replacement of established testing infrastructure.

Reporting and analytics integration provides unified visibility into testing results across different testing methodologies, enabling development teams to maintain comprehensive oversight of system quality and identify trends or issues that span multiple testing approaches. This integration is essential for maintaining effective quality assurance processes in complex AI development environments.

Tool ecosystem compatibility ensures that property-based testing frameworks can leverage existing development tools, CI/CD systems, and quality assurance platforms without requiring significant modifications or custom integration work. This compatibility reduces the barriers to adoption and enables organizations to realize the benefits of property-based testing more quickly and efficiently.

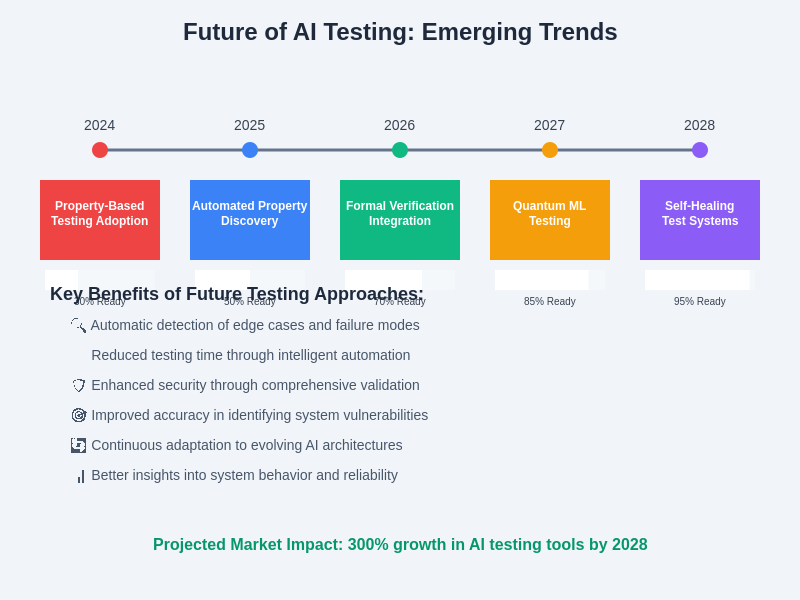

Future Directions and Emerging Trends

The field of property-based testing for AI systems continues to evolve rapidly, driven by advances in artificial intelligence research, improvements in testing methodologies, and growing recognition of the importance of robust quality assurance practices for AI applications. Several emerging trends and future directions are shaping the development of next-generation property-based testing approaches.

Automated property discovery represents a promising direction where machine learning techniques are applied to automatically identify relevant properties and invariants from system behavior, reducing the manual effort required to develop comprehensive property-based test suites. These approaches leverage statistical analysis, symbolic reasoning, and pattern recognition to extract meaningful properties from system execution traces and historical testing data.

Formal verification integration combines property-based testing with formal methods and theorem proving to provide mathematical guarantees about system correctness and reliability. This integration enables more rigorous validation of critical AI system properties while maintaining the practical advantages of automated test case generation and execution.

Quantum computing applications of property-based testing address the unique challenges of validating quantum machine learning algorithms and quantum-enhanced AI systems, requiring new approaches to property specification and test case generation that account for quantum mechanical principles and the probabilistic nature of quantum computation.

The convergence of property-based testing with explainable AI research creates opportunities for developing testing methodologies that not only validate system correctness but also provide insights into why systems behave correctly or incorrectly under different conditions. This convergence promises to enhance both the effectiveness of testing processes and the interpretability of AI system behavior.

As artificial intelligence systems become increasingly critical components of essential infrastructure and decision-making processes, the importance of rigorous quality assurance methodologies continues to grow. Property-based testing represents a fundamental advancement in our ability to validate the correctness, reliability, and safety of AI systems across diverse operating conditions and deployment scenarios. The continued development and adoption of these methodologies will play a crucial role in building trustworthy AI systems that can reliably serve society’s needs while minimizing risks and maximizing benefits.

Disclaimer

This article is for informational purposes only and does not constitute professional advice. The views expressed are based on current understanding of property-based testing methodologies and their applications to AI systems. Readers should conduct their own research and consider their specific requirements when implementing property-based testing strategies. The effectiveness and appropriateness of different testing approaches may vary depending on specific use cases, system architectures, and organizational constraints.