The convergence of React’s component-based architecture with artificial intelligence and machine learning has created new opportunities for building intelligent user interfaces. As machine learning models become accessible through JavaScript libraries such as TensorFlow.js and ONNX Runtime Web (the successor to ONNX.js), developers face the challenge of managing complex AI-related state within React applications. This article explores the patterns, custom hooks, and state management strategies needed to build robust, performant machine learning applications that integrate AI capabilities into modern React codebases.

The integration of machine learning capabilities directly into React applications represents a fundamental shift in how we approach client-side intelligence, requiring sophisticated state management solutions that can handle the complexities of model lifecycle management, asynchronous predictions, and real-time data processing.

The Evolution of Frontend AI State Management

Traditional React state management patterns were designed for handling user interactions, API responses, and component lifecycle events. However, machine learning applications introduce entirely new categories of state that must be carefully orchestrated to ensure optimal performance and user experience. These applications must manage model loading states, prediction queues, inference results, training progress, data preprocessing pipelines, and real-time model updates while maintaining the responsive, interactive experience users expect from modern web applications.

The complexity of ML state management extends beyond simple data fetching patterns. Machine learning models often require significant computational resources, have specific memory requirements, and may need to be loaded asynchronously from remote sources or initialized with specific configurations. Additionally, ML applications frequently involve continuous learning scenarios where models are updated based on user feedback, requiring sophisticated state management patterns that can handle dynamic model evolution while maintaining application stability and performance.

The intersection of React’s declarative paradigm with ML’s computational complexity requires careful architectural consideration to ensure applications remain maintainable, performant, and scalable as AI capabilities evolve.

Custom Hooks for Model Management

The foundation of effective AI state management in React applications begins with custom hooks designed specifically for handling machine learning model lifecycles. These specialized hooks encapsulate the complex logic required for model loading, initialization, and configuration while providing clean, reusable interfaces that can be easily integrated across different components. A well-designed model management hook handles asynchronous model loading, caches loaded models to prevent unnecessary re-initialization, manages loading and error states, and provides methods for model disposal and cleanup when components unmount.
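
A minimal sketch of the state a model-management hook might track, written as a plain reducer so it can back React's `useReducer`. The state shape and action names are assumptions for illustration; `M` stands for whatever object the chosen ML library returns from its load call.

```typescript
// Hypothetical lifecycle state for one model. M is the library's model type.
type ModelState<M> =
  | { status: "idle" }
  | { status: "loading"; url: string }
  | { status: "ready"; url: string; model: M }
  | { status: "error"; url: string; error: string };

type ModelAction<M> =
  | { type: "load"; url: string }
  | { type: "loaded"; url: string; model: M }
  | { type: "failed"; url: string; error: string }
  | { type: "reset" };

function modelReducer<M>(state: ModelState<M>, action: ModelAction<M>): ModelState<M> {
  switch (action.type) {
    case "load":
      return { status: "loading", url: action.url };
    case "loaded":
    case "failed":
      // Ignore results for a URL we are no longer loading (for example, the
      // user switched models while the old request was still in flight).
      if (state.status !== "loading" || state.url !== action.url) return state;
      return action.type === "loaded"
        ? { status: "ready", url: action.url, model: action.model }
        : { status: "error", url: action.url, error: action.error };
    case "reset":
      return { status: "idle" };
  }
}
```

Inside a hypothetical `useModel(url)` hook, an effect would dispatch `load`, await the library's loader, dispatch `loaded` or `failed`, and dispose the model in its cleanup function. Ignoring results for a URL that is no longer loading prevents a slow, outdated request from overwriting a newer one.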

Custom model hooks also address the challenge of managing multiple models simultaneously, which is increasingly common in AI applications. This requires careful resource management to prevent memory leaks: with GPU-backed libraries such as TensorFlow.js on its WebGL backend, tensors and models are not reclaimed by the JavaScript garbage collector and must be disposed explicitly. More advanced model management hooks share loaded models between components, load high-priority models first, and release models that are no longer in use, keeping the application responsive even when it works with large, resource-intensive models.
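
One way to share models across components without leaking memory is reference counting. The sketch below is an illustration under assumptions, not any library's API: `ModelCache`, `acquire`, and `release` are hypothetical names, and the only requirement on a model is a `dispose()` method.

```typescript
// Minimal contract assumed for a loaded model: it can release its own memory.
interface DisposableModel {
  dispose(): void;
}

// Hypothetical shared cache: concurrent acquire() calls for the same key share
// one load, and the model is disposed when the last consumer releases it.
class ModelCache<M extends DisposableModel> {
  private entries = new Map<string, { promise: Promise<M>; refs: number }>();
  private loader: (key: string) => Promise<M>;

  constructor(loader: (key: string) => Promise<M>) {
    this.loader = loader;
  }

  acquire(key: string): Promise<M> {
    let entry = this.entries.get(key);
    if (!entry) {
      const created = { promise: this.loader(key), refs: 0 };
      this.entries.set(key, created);
      // Forget failed loads so a later acquire() can retry.
      created.promise.catch(() => {
        if (this.entries.get(key) === created) this.entries.delete(key);
      });
      entry = created;
    }
    entry.refs += 1;
    return entry.promise;
  }

  release(key: string): void {
    const entry = this.entries.get(key);
    if (!entry) return;
    entry.refs -= 1;
    if (entry.refs <= 0) {
      this.entries.delete(key);
      // Dispose once the load has settled; failed loads have nothing to free.
      entry.promise.then((model) => model.dispose(), () => undefined);
    }
  }

  get size(): number {
    return this.entries.size;
  }
}
```

A hook would call `acquire` in an effect and `release` in that effect's cleanup, so the model is freed when the last component using it unmounts.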

The implementation of custom model hooks extends beyond basic loading and initialization to include configuration management, model versioning, and hot-swapping. These features let applications replace a model based on user preferences, A/B tests, or real-time performance metrics without a page reload and without losing existing state. Such flexibility matters for production ML applications that need to improve continuously based on user feedback and changing requirements.
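
Hot-swapping can be sketched as loading the new version in the background and switching only after it succeeds, so the UI keeps using the old model until the replacement is ready. `SwappableModel` and the version strings are hypothetical; a failed load leaves the previous model active, and when swaps overlap the most recent request wins.

```typescript
interface DisposableModel {
  dispose(): void;
}

class SwappableModel<M extends DisposableModel> {
  private active: { version: string; model: M } | null = null;
  private pending: string | null = null;
  private loader: (version: string) => Promise<M>;

  constructor(loader: (version: string) => Promise<M>) {
    this.loader = loader;
  }

  get current(): { version: string; model: M } | null {
    return this.active;
  }

  // Resolves true if `version` became active, false if a newer swap superseded it.
  async swap(version: string): Promise<boolean> {
    this.pending = version;
    let model: M;
    try {
      model = await this.loader(version);
    } catch (error) {
      // The previous model stays active when the new one fails to load.
      if (this.pending === version) this.pending = null;
      throw error;
    }
    if (this.pending !== version) {
      model.dispose(); // a newer swap() was requested while this one loaded
      return false;
    }
    const previous = this.active;
    this.active = { version, model };
    this.pending = null;
    previous?.model.dispose(); // free the old version only after switching
    return true;
  }
}
```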

Asynchronous Prediction State Patterns

Managing prediction state in React applications requires specialized patterns that can handle the asynchronous nature of machine learning inference while maintaining responsive user interfaces. Unlike traditional API calls, ML predictions often involve complex preprocessing steps, batch processing for efficiency, and post-processing of results before presentation to users. These requirements necessitate sophisticated state management patterns that can coordinate multiple asynchronous operations while providing meaningful feedback to users about processing status and progress.
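
Coordinating preprocessing, inference, and post-processing while reporting progress can be sketched as a staged pipeline that accepts a standard `AbortSignal`, so a component can cancel a prediction whose input is already stale. The stage names and the `runPipeline` signature are assumptions for illustration.

```typescript
type PipelineStage = "preprocessing" | "inference" | "postprocessing" | "done";

// Hypothetical three-stage prediction pipeline. `onStage` lets a component show
// which step is running. Cancellation is checked between stages only: a stage
// that has already started runs to completion.
async function runPipeline<I, P, O, R>(
  input: I,
  steps: {
    preprocess: (input: I) => Promise<P>;
    infer: (prepared: P) => Promise<O>;
    postprocess: (raw: O) => Promise<R>;
  },
  onStage: (stage: PipelineStage) => void,
  signal?: AbortSignal,
): Promise<R> {
  const checkAborted = () => {
    if (signal?.aborted) throw new Error("prediction aborted");
  };
  checkAborted();
  onStage("preprocessing");
  const prepared = await steps.preprocess(input);
  checkAborted();
  onStage("inference");
  const raw = await steps.infer(prepared);
  checkAborted();
  onStage("postprocessing");
  const result = await steps.postprocess(raw);
  checkAborted();
  onStage("done");
  return result;
}
```

In a component, each new input would create a fresh `AbortController` and abort the previous one, so only the latest request updates state.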

Effective prediction state management involves implementing queuing mechanisms for batch processing, maintaining prediction history for caching and analytics, handling prediction cancellation for improved user experience, and managing concurrent predictions without overwhelming system resources. These patterns must also account for the possibility of prediction failures, model unavailability, and network connectivity issues that can impact the reliability of ML-powered features.
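
Queuing for batch processing can be sketched as a small micro-batcher that collects predictions arriving within a short window and sends them to the model in one call. `PredictionBatcher`, `windowMs`, and `maxBatch` are hypothetical names, and the defaults are placeholders rather than recommendations; the batch function stands in for a model call that accepts many inputs at once.

```typescript
// Hypothetical micro-batcher: predict() calls that arrive within `windowMs` are
// grouped into one `runBatch` call, which must return one output per input, in
// order. Batches may overlap if runBatch is slow; this sketch does not cap
// concurrency.
class PredictionBatcher<I, O> {
  private queue: { input: I; resolve: (output: O) => void; reject: (error: unknown) => void }[] = [];
  private timer: ReturnType<typeof setTimeout> | null = null;
  private runBatch: (inputs: I[]) => Promise<O[]>;
  private windowMs: number;
  private maxBatch: number;

  constructor(runBatch: (inputs: I[]) => Promise<O[]>, windowMs = 10, maxBatch = 32) {
    this.runBatch = runBatch;
    this.windowMs = windowMs;
    this.maxBatch = maxBatch;
  }

  predict(input: I): Promise<O> {
    return new Promise<O>((resolve, reject) => {
      this.queue.push({ input, resolve, reject });
      // Flush immediately when the batch is full, otherwise after the window.
      if (this.queue.length >= this.maxBatch) this.flush();
      else if (this.timer === null) this.timer = setTimeout(() => this.flush(), this.windowMs);
    });
  }

  private flush(): void {
    if (this.timer !== null) {
      clearTimeout(this.timer);
      this.timer = null;
    }
    const batch = this.queue.splice(0, this.maxBatch);
    if (batch.length === 0) return;
    this.runBatch(batch.map((job) => job.input)).then(
      (outputs) =>
        batch.forEach((job, i) =>
          i < outputs.length ? job.resolve(outputs[i]) : job.reject(new Error("missing output")),
        ),
      // One failed batch rejects every prediction in it.
      (error) => batch.forEach((job) => job.reject(error)),
    );
  }
}
```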

Advanced prediction state patterns incorporate intelligent caching strategies that can significantly improve application performance by storing and reusing previous predictions when appropriate. These caching mechanisms must consider the temporal relevance of predictions, the computational cost of re-prediction versus cache lookup, and the trade-offs between memory usage and performance. Such optimization strategies are particularly important for applications that perform frequent predictions or work with computationally expensive models.
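
A prediction cache that accounts for both temporal relevance and memory usage can be sketched with a time-to-live plus a least-recently-used size bound. The class name, the string keys (for instance a hash of the model input), and the injectable clock are assumptions.

```typescript
// Hypothetical prediction cache: entries expire after `ttlMs`, and the least
// recently used entry is evicted once more than `maxEntries` are stored.
class PredictionCache<V> {
  private entries = new Map<string, { value: V; expiresAt: number }>();
  private ttlMs: number;
  private maxEntries: number;
  private now: () => number;

  constructor(ttlMs: number, maxEntries: number, now: () => number = Date.now) {
    this.ttlMs = ttlMs;
    this.maxEntries = maxEntries;
    this.now = now;
  }

  get(key: string): V | undefined {
    const hit = this.entries.get(key);
    if (hit === undefined) return undefined;
    this.entries.delete(key);
    if (hit.expiresAt <= this.now()) return undefined; // stale: drop it
    this.entries.set(key, hit); // re-insert to mark as most recently used
    return hit.value;
  }

  set(key: string, value: V): void {
    this.entries.delete(key);
    this.entries.set(key, { value, expiresAt: this.now() + this.ttlMs });
    // Map iteration follows insertion order, so the first key is the least recently used.
    while (this.entries.size > this.maxEntries) {
      const oldest = this.entries.keys().next().value as string;
      this.entries.delete(oldest);
    }
  }
}
```

Relying on `Map` insertion order keeps the sketch dependency-free; a dedicated LRU library would be the more robust choice for large caches.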

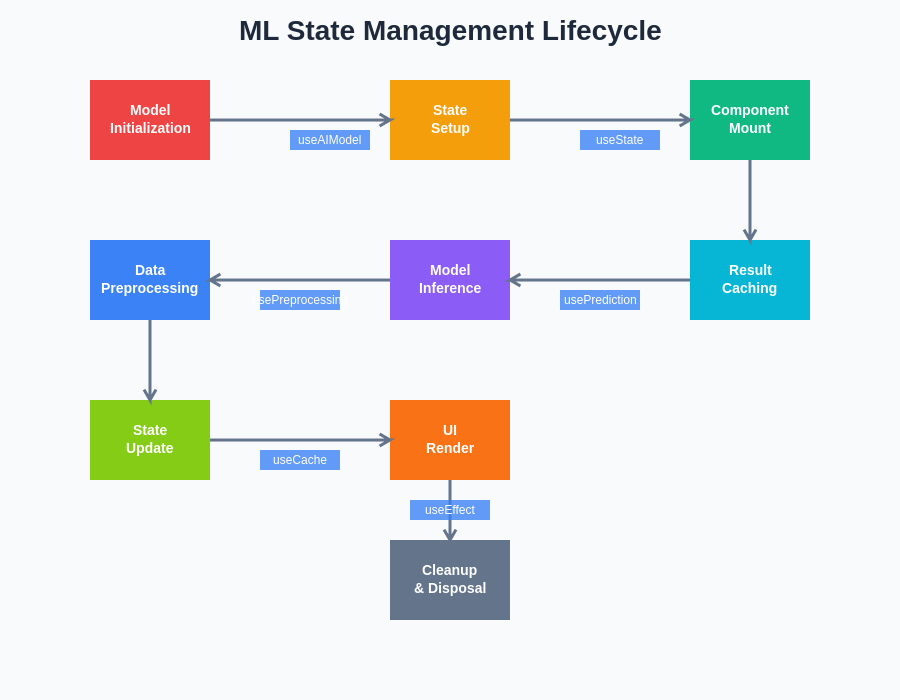

The architectural foundation of React AI applications requires careful orchestration of multiple specialized hooks that manage different aspects of machine learning functionality. This systematic approach ensures that AI capabilities are properly integrated into the React component lifecycle while maintaining clean separation of concerns and optimal performance characteristics.

Real-time Data Processing and Streaming

Modern machine learning applications increasingly require real-time data processing capabilities, particularly for applications involving computer vision, natural language processing, or sensor data analysis. React applications must be architected to handle continuous data streams while maintaining smooth user interfaces and preventing performance degradation. This requires specialized hooks and state management patterns that can efficiently process streaming data, manage buffer states, and coordinate real-time predictions without blocking the main thread.
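
Buffer management for a continuous stream can be sketched as a "latest frame wins" scheduler: at most one inference runs at a time, and while it runs only the newest waiting frame is kept. The class and member names are hypothetical, and frames could be anything from video frames to sensor readings.

```typescript
// Hypothetical scheduler for camera or sensor streams. A slow model drops
// intermediate frames instead of building an ever-growing backlog. Errors from
// a single frame are swallowed in this sketch so the stream keeps going; a real
// app would surface them.
class LatestFrameProcessor<F, R> {
  dropped = 0;
  private busy = false;
  private pending: { frame: F } | null = null;
  private infer: (frame: F) => Promise<R>;
  private onResult: (result: R) => void;

  constructor(infer: (frame: F) => Promise<R>, onResult: (result: R) => void) {
    this.infer = infer;
    this.onResult = onResult;
  }

  push(frame: F): void {
    if (this.busy) {
      if (this.pending) this.dropped += 1; // the older waiting frame is discarded
      this.pending = { frame };
      return;
    }
    void this.run(frame);
  }

  private async run(frame: F): Promise<void> {
    this.busy = true;
    try {
      this.onResult(await this.infer(frame));
    } catch {
      // Skip this frame.
    } finally {
      this.busy = false;
      const next = this.pending;
      this.pending = null;
      if (next) void this.run(next.frame);
    }
  }
}
```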

Real-time ML applications present challenges in state synchronization, data consistency, and performance. They must balance the need for immediate responsiveness against the computational cost of machine learning inference, which often requires explicit scheduling and prioritization. Advanced implementations run inference in Web Workers so that ML computation does not block rendering and input handling on the main thread, and may use service workers to cache model files for faster repeat loads and offline use.
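
Offloading inference to a Web Worker usually comes down to a message protocol. The sketch shows only the UI-thread side: each request carries an id so concurrent predictions can be matched to their replies. The message shapes and the `PortLike` interface (modeled on the `postMessage`/`onmessage` members of `Worker`) are assumptions, and the worker script that loads the model and replies with `{ id, output }` is not shown.

```typescript
type WorkerRequest = { id: number; input: number[] };
type WorkerResponse = { id: number; output?: number[]; error?: string };

// The subset of a Worker-like object this client relies on.
interface PortLike {
  postMessage(message: WorkerRequest): void;
  onmessage: ((event: { data: WorkerResponse }) => void) | null;
}

class InferenceClient {
  private nextId = 1;
  private waiting = new Map<number, { resolve: (output: number[]) => void; reject: (error: Error) => void }>();
  private port: PortLike;

  constructor(port: PortLike) {
    this.port = port;
    port.onmessage = (event) => {
      const { id, output, error } = event.data;
      const entry = this.waiting.get(id);
      if (!entry) return; // unknown or already-settled request
      this.waiting.delete(id);
      if (error !== undefined || output === undefined) entry.reject(new Error(error ?? "empty response"));
      else entry.resolve(output);
    };
  }

  predict(input: number[]): Promise<number[]> {
    const id = this.nextId++;
    return new Promise((resolve, reject) => {
      this.waiting.set(id, { resolve, reject });
      this.port.postMessage({ id, input });
    });
  }
}
```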

The integration of real-time ML capabilities also requires careful consideration of data flow architectures, event handling patterns, and state synchronization mechanisms. Applications must be designed to handle varying data rates, temporary connectivity issues, and the need for graceful degradation when real-time processing capabilities become unavailable. These considerations are particularly important for applications that rely on continuous learning or adaptation based on real-time user feedback.

Error Handling and Fallback Strategies

Machine learning applications face unique error scenarios that traditional web applications rarely encounter, including model loading failures, inference timeouts, memory allocation issues, and incompatible input data formats. Effective AI state management requires comprehensive error handling strategies that can gracefully manage these scenarios while maintaining application stability and providing meaningful feedback to users. This involves implementing sophisticated retry mechanisms, fallback model strategies, and progressive enhancement patterns that ensure applications remain functional even when AI capabilities are temporarily unavailable.
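
Retry and fallback can be combined in one helper: retry the primary model with exponential backoff, then switch to a fallback such as a smaller model or a rule-based heuristic. The function name, option names, and defaults are hypothetical. Returning the `source` lets the UI tell users when a fallback is in use.

```typescript
async function predictWithFallback<I, O>(
  input: I,
  primary: (input: I) => Promise<O>,
  fallback: (input: I) => Promise<O>,
  { retries = 2, baseDelayMs = 200 } = {},
): Promise<{ output: O; source: "primary" | "fallback" }> {
  for (let attempt = 0; attempt <= retries; attempt++) {
    try {
      return { output: await primary(input), source: "primary" };
    } catch {
      if (attempt < retries) {
        // Wait baseDelayMs, then 2x, 4x, ... before the next attempt.
        await new Promise((resolve) => setTimeout(resolve, baseDelayMs * 2 ** attempt));
      }
    }
  }
  // Every attempt failed; errors from the fallback itself propagate to the caller.
  return { output: await fallback(input), source: "fallback" };
}
```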

Advanced error handling in ML applications goes beyond simple try-catch blocks to include early detection of likely failures (for example, validating input shapes before running inference), automatic recovery mechanisms, and deliberate degradation strategies. These systems must be able to detect when models are likely to fail, route work to alternative processing paths, and keep application state consistent even when AI components encounter unexpected errors. Such robustness is essential for production applications where AI features are integral to core functionality rather than optional enhancements.
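
Automatic recovery and degradation can be sketched with a circuit breaker, a general resilience pattern rather than anything ML-specific: after repeated failures the model is bypassed for a cooldown period and calls go straight to the fallback. The class name, threshold, and cooldown below are illustrative assumptions.

```typescript
class ModelCircuitBreaker<I, O> {
  private failures = 0;
  private openUntil = 0;
  private model: (input: I) => Promise<O>;
  private fallback: (input: I) => Promise<O>;
  private threshold: number;
  private cooldownMs: number;
  private now: () => number;

  constructor(
    model: (input: I) => Promise<O>,
    fallback: (input: I) => Promise<O>,
    threshold = 3,
    cooldownMs = 30_000,
    now: () => number = Date.now,
  ) {
    this.model = model;
    this.fallback = fallback;
    this.threshold = threshold;
    this.cooldownMs = cooldownMs;
    this.now = now;
  }

  get isOpen(): boolean {
    return this.now() < this.openUntil;
  }

  async call(input: I): Promise<O> {
    // While open, skip the model entirely; after the cooldown the next call tries it again.
    if (this.isOpen) return this.fallback(input);
    try {
      const output = await this.model(input);
      this.failures = 0; // any success resets the count
      return output;
    } catch {
      this.failures += 1;
      if (this.failures >= this.threshold) {
        this.openUntil = this.now() + this.cooldownMs;
        this.failures = 0;
      }
      return this.fallback(input);
    }
  }
}
```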

The implementation of effective error handling also requires careful consideration of user experience implications, including appropriate loading states, error messaging, and alternative interaction patterns when AI features become unavailable. Applications must be designed to fail gracefully while providing users with clear information about system status and available alternatives, ensuring that AI-powered features enhance rather than hinder the overall user experience.

The rapidly evolving landscape of browser-based machine learning requires continuous learning and adaptation to leverage new capabilities and optimization techniques effectively.

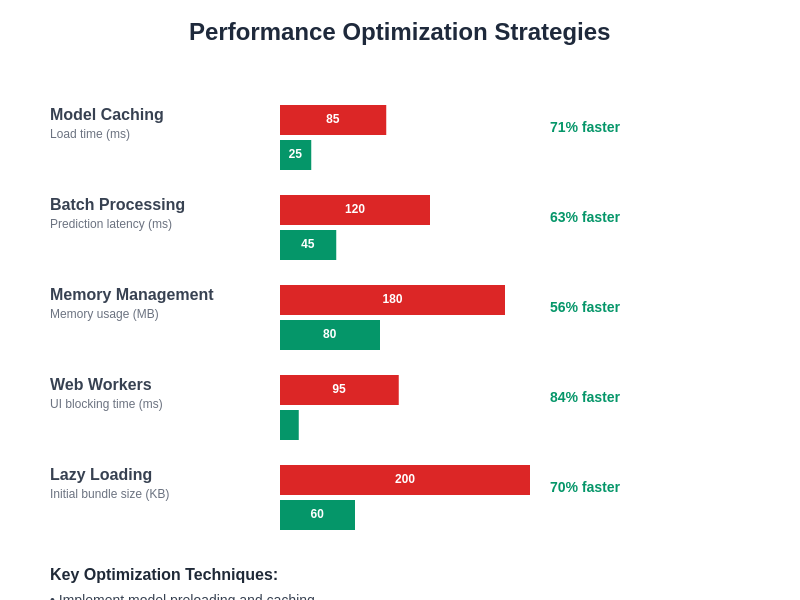

Performance Optimization for ML State

Performance optimization in React AI applications requires specialized techniques that address the unique computational and memory requirements of machine learning operations. These optimizations must consider factors such as model size, inference frequency, memory allocation patterns, and the need to maintain smooth user interfaces while performing computationally intensive operations. Effective performance optimization involves implementing intelligent batching strategies, memory management techniques, and computational scheduling that ensures optimal resource utilization without compromising user experience.
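
Computational scheduling on the main thread can be sketched as chunked processing that yields to the event loop between chunks, so rendering and input handling are not blocked for the whole job. The function name and chunk size are assumptions; for work that is too heavy even when chunked, a Web Worker is the usual alternative.

```typescript
async function processInChunks<T, R>(
  items: T[],
  work: (item: T) => R,
  chunkSize = 500,
  onProgress?: (done: number, total: number) => void,
): Promise<R[]> {
  const results: R[] = [];
  for (let start = 0; start < items.length; start += chunkSize) {
    for (const item of items.slice(start, start + chunkSize)) results.push(work(item));
    onProgress?.(results.length, items.length);
    // setTimeout(0) yields a full event-loop turn; awaiting a resolved promise would not.
    await new Promise((resolve) => setTimeout(resolve, 0));
  }
  return results;
}
```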

Advanced performance optimization techniques include predictive loading that anticipates user needs and preloads relevant models or predictions; moving inference off the main thread with Web Workers, and caching model files with service workers; caching mechanisms that balance memory usage against performance gains; and data flow patterns that minimize unnecessary state updates and re-renders. These optimizations are particularly important on mobile devices, where computational resources and battery life are significant constraints.
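
To avoid re-rendering on every raw prediction, a store compatible with React's `useSyncExternalStore(subscribe, getSnapshot)` can publish a new snapshot only when the change matters to the UI. The store shape and the 5-percentage-point threshold are assumptions for illustration.

```typescript
type Prediction = { label: string; confidence: number };

function createPredictionStore(isSame: (prev: Prediction | null, next: Prediction) => boolean) {
  let snapshot: Prediction | null = null;
  const listeners = new Set<() => void>();
  return {
    subscribe(listener: () => void) {
      listeners.add(listener);
      return () => { listeners.delete(listener); };
    },
    getSnapshot: () => snapshot,
    publish(next: Prediction) {
      if (isSame(snapshot, next)) return; // keep the old snapshot object, notify nobody
      snapshot = next;
      listeners.forEach((listener) => listener());
    },
  };
}

// Example policy: ignore confidence changes smaller than 5 percentage points.
const sameForDisplay = (prev: Prediction | null, next: Prediction) =>
  prev !== null && prev.label === next.label && Math.abs(prev.confidence - next.confidence) < 0.05;
```

A component would read it with `useSyncExternalStore(store.subscribe, store.getSnapshot)`, creating the store outside the component so `subscribe` stays stable; insignificant updates never notify React, so no re-render is scheduled.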

The optimization of ML state management also extends to consideration of different deployment environments, network conditions, and device capabilities. Applications must be designed to adapt their behavior based on available resources, automatically scaling computational complexity and model selection to match device capabilities while maintaining acceptable performance levels across diverse user environments.
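
Adapting to device capabilities can be sketched as a pure function from a capability profile to a model variant. The profile fields, variant names, and thresholds are all hypothetical; in a browser the values might come from `navigator.hardwareConcurrency`, the Chromium-only `navigator.deviceMemory`, the user's Save-Data preference, and a WebGPU check such as `"gpu" in navigator`.

```typescript
interface DeviceProfile {
  cores: number;
  memoryGb?: number; // undefined when the browser does not expose it
  webgpu: boolean;
  saveData: boolean; // the user asked for reduced data usage
}

type ModelVariant = "large" | "base" | "tiny";

// Thresholds are illustrative placeholders, not benchmarks.
function chooseModelVariant(device: DeviceProfile): ModelVariant {
  if (device.saveData) return "tiny";
  const memory = device.memoryGb ?? 4; // assume a mid-range device when unknown
  if (device.webgpu && device.cores >= 8 && memory >= 8) return "large";
  if (device.cores >= 4 && memory >= 4) return "base";
  return "tiny";
}
```

Keeping the decision in a pure function makes the policy easy to unit-test and to tune as real performance data comes in.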

Integration with Popular ML Frameworks

The integration of React hooks with popular machine learning frameworks requires specialized adapters and abstraction layers that bridge the gap between ML library APIs and React’s component-based architecture. Different ML frameworks have distinct initialization procedures, prediction interfaces, and resource management requirements that must be carefully abstracted to provide consistent, intuitive hooks for React developers. This integration work involves creating framework-specific hooks while maintaining common interfaces that allow applications to switch between different ML backends without significant code changes.
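
A common interface lets hooks and components stay independent of the ML library. The `InferenceBackend` interface below is hypothetical, and the stub backend (a softmax over its input) stands in for real TensorFlow.js or ONNX Runtime Web adapters, which are not shown.

```typescript
interface InferenceBackend {
  readonly name: string;
  load(modelUrl: string): Promise<void>;
  predict(input: Float32Array, shape: number[]): Promise<Float32Array>;
  dispose(): void;
}

// Stand-in backend: returns softmax probabilities of its input, which is
// enough to exercise code written against the interface.
class SoftmaxStubBackend implements InferenceBackend {
  readonly name = "softmax-stub";
  private loaded = false;

  async load(_modelUrl: string): Promise<void> {
    this.loaded = true;
  }

  async predict(input: Float32Array, _shape: number[]): Promise<Float32Array> {
    if (!this.loaded) throw new Error("model not loaded");
    const max = Math.max(...input);
    const exps = input.map((x) => Math.exp(x - max));
    const sum = exps.reduce((a, b) => a + b, 0);
    return exps.map((e) => e / sum);
  }

  dispose(): void {
    this.loaded = false;
  }
}

// Application code depends only on the interface, so backends can be swapped.
async function classify(backend: InferenceBackend, modelUrl: string, logits: number[]): Promise<number> {
  await backend.load(modelUrl);
  try {
    const probs = await backend.predict(Float32Array.from(logits), [1, logits.length]);
    return probs.indexOf(Math.max(...probs));
  } finally {
    backend.dispose();
  }
}
```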

TensorFlow.js integration must account for its two model formats: Layers models (typically converted from Keras and loaded with `tf.loadLayersModel`) and graph models (converted from TensorFlow SavedModels and loaded with `tf.loadGraphModel`), as well as backend selection (WebGL, WebGPU, WebAssembly, or CPU) through `tf.setBackend`. PyTorch has no widely used official browser runtime, so PyTorch models are commonly exported to ONNX and executed with ONNX Runtime Web, the successor to ONNX.js; integration there revolves around creating an inference session, choosing an execution provider such as WebAssembly or WebGPU, and handling operator compatibility for models converted from other frameworks. Each framework presents unique opportunities and challenges that must be addressed through specialized hook implementations.

The development of framework-agnostic abstractions enables applications to leverage multiple ML frameworks simultaneously, choosing the most appropriate framework for specific tasks while maintaining consistent state management patterns. This flexibility is particularly valuable for applications that require different ML capabilities, such as combining computer vision models from one framework with natural language processing models from another, while maintaining unified state management and user interface patterns.

The lifecycle of machine learning state in React applications involves multiple phases from initial model loading through prediction processing and cleanup. Understanding this lifecycle is crucial for implementing efficient state management patterns that optimize performance while ensuring proper resource management throughout the application’s runtime.

Testing Strategies for AI Components

Testing React components that integrate machine learning capabilities requires specialized strategies that can effectively validate AI functionality while maintaining reasonable test execution times and resource requirements. Traditional testing approaches must be adapted to handle asynchronous model loading, probabilistic prediction outcomes, and the computational complexity of ML operations. Effective testing strategies involve creating mock models for unit testing, implementing integration tests with lightweight models, and developing performance benchmarks that ensure AI features meet acceptability criteria.
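
For unit tests, a mock model with canned outputs, injectable failures, and a call log keeps tests fast and deterministic because no real weights are loaded. `MockModel` and `labelFor` are hypothetical names for illustration.

```typescript
class MockModel {
  calls: string[] = [];
  private responses: Map<string, number[]>;
  private failWith: Error | null = null;

  constructor(responses: Record<string, number[]>) {
    this.responses = new Map(Object.entries(responses));
  }

  // Make the next predict() call fail, e.g. to simulate a timeout.
  failNext(error: Error): void {
    this.failWith = error;
  }

  async predict(input: string): Promise<number[]> {
    this.calls.push(input);
    if (this.failWith) {
      const error = this.failWith;
      this.failWith = null;
      throw error;
    }
    const output = this.responses.get(input);
    if (!output) throw new Error(`no canned response for "${input}"`);
    return output;
  }
}

// Example unit under test: maps scores to a label, or a fallback message on error.
async function labelFor(
  model: { predict(input: string): Promise<number[]> },
  text: string,
  labels: string[],
): Promise<string> {
  try {
    const scores = await model.predict(text);
    return labels[scores.indexOf(Math.max(...scores))];
  } catch {
    return "unavailable";
  }
}
```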

Advanced testing strategies for ML components include implementing property-based testing for prediction consistency, creating synthetic datasets for reproducible testing scenarios, developing visual regression testing for ML-powered UI components, and implementing A/B testing frameworks that can evaluate the effectiveness of different AI features. These testing approaches must balance comprehensiveness with practicality, ensuring that AI features are thoroughly validated without creating prohibitively expensive or time-consuming test suites.
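
Property-based testing checks invariants across many generated inputs instead of fixed expected values. The sketch hand-rolls a tiny seeded checker (libraries such as fast-check do this far more thoroughly) and uses softmax as a stand-in for a model's output layer; the properties checked, that outputs are valid probabilities and are unchanged when every score is shifted by the same constant, are examples only.

```typescript
// Seeded PRNG (mulberry32) so any failure is reproducible from the seed.
function mulberry32(seed: number): () => number {
  return () => {
    seed = (seed + 0x6d2b79f5) | 0;
    let t = seed;
    t = Math.imul(t ^ (t >>> 15), t | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296;
  };
}

// Run `prop` on `runs` generated inputs; throw with the first failing input.
function checkProperty<T>(
  label: string,
  gen: (rand: () => number) => T,
  prop: (input: T) => boolean,
  runs = 200,
  seed = 42,
): void {
  const rand = mulberry32(seed);
  for (let i = 0; i < runs; i++) {
    const input = gen(rand);
    if (!prop(input)) throw new Error(`${label} failed on ${JSON.stringify(input)} (seed ${seed}, run ${i})`);
  }
}

// Stand-in for a model's output layer.
function softmax(scores: number[]): number[] {
  const max = Math.max(...scores);
  const exps = scores.map((s) => Math.exp(s - max));
  const sum = exps.reduce((a, b) => a + b, 0);
  return exps.map((e) => e / sum);
}

// 1 to 10 scores, each in [-10, 10).
const randomScores = (rand: () => number): number[] =>
  Array.from({ length: 1 + Math.floor(rand() * 10) }, () => (rand() - 0.5) * 20);

checkProperty("outputs lie in [0, 1]", randomScores, (xs) => softmax(xs).every((p) => p >= 0 && p <= 1));
checkProperty("outputs sum to 1", randomScores, (xs) => Math.abs(softmax(xs).reduce((a, b) => a + b, 0) - 1) < 1e-9);
checkProperty("shift invariance", randomScores, (xs) => {
  const base = softmax(xs);
  const shifted = softmax(xs.map((x) => x + 3));
  return base.every((p, i) => Math.abs(p - shifted[i]) < 1e-9);
});
```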

The testing of AI components also requires consideration of edge cases specific to machine learning applications, including handling of unusual input data, performance under resource constraints, and behavior during model updates or failures. Comprehensive testing strategies incorporate stress testing, performance profiling, and reliability testing that ensures AI features perform consistently across diverse usage scenarios and deployment environments.

Accessibility and Inclusive AI Design

The integration of AI capabilities into React applications must prioritize accessibility and inclusive design principles to ensure that machine learning features enhance rather than hinder the user experience for individuals with diverse abilities and needs. This involves implementing AI features that are compatible with assistive technologies, providing alternative interaction methods for users who cannot access AI-powered features, and ensuring that AI-driven interfaces remain navigable and comprehensible for users with cognitive, visual, or motor impairments.

Accessible AI design requires careful consideration of how machine learning predictions and recommendations are presented to users, including providing clear explanations of AI behavior, offering manual overrides for AI-driven actions, and implementing progressive enhancement patterns that ensure core functionality remains available even when AI features are disabled. These design principles are particularly important for applications where AI features are integrated into critical user workflows or decision-making processes.
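
Manual overrides and clear explanations can be modeled explicitly in state. In the hypothetical reducer below the user's choice always wins over the AI suggestion, and `describeSuggestion` produces plain text that could be rendered into an `aria-live` region so screen reader users hear status changes. The state names and wording are assumptions.

```typescript
type SuggestionState =
  | { kind: "suggested"; value: string; confidence: number }
  | { kind: "accepted"; value: string }
  | { kind: "overridden"; value: string }
  | { kind: "dismissed" };

type SuggestionAction = { type: "accept" } | { type: "override"; value: string } | { type: "dismiss" };

function suggestionReducer(state: SuggestionState, action: SuggestionAction): SuggestionState {
  switch (action.type) {
    case "accept":
      // Accepting only makes sense while a suggestion is pending.
      return state.kind === "suggested" ? { kind: "accepted", value: state.value } : state;
    case "override":
      return { kind: "overridden", value: action.value };
    case "dismiss":
      return { kind: "dismissed" };
  }
}

function describeSuggestion(state: SuggestionState): string {
  switch (state.kind) {
    case "suggested":
      return `Suggested: ${state.value} (${Math.round(state.confidence * 100)}% confidence). Accept, edit, or dismiss.`;
    case "accepted":
      return `You accepted the suggestion: ${state.value}.`;
    case "overridden":
      return `Using your value: ${state.value}.`;
    case "dismissed":
      return "Suggestion dismissed.";
  }
}
```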

The development of inclusive AI features also involves considering bias and fairness implications of machine learning models, implementing transparency mechanisms that allow users to understand AI decision-making processes, and providing controls that allow users to customize AI behavior based on their individual preferences and needs. Such considerations are essential for creating AI-powered applications that serve diverse user communities effectively and ethically.

Security Considerations for Client-Side ML

The implementation of machine learning capabilities in client-side React applications introduces security considerations that must be addressed to protect user data and maintain application integrity. Client-side ML applications expose model architectures and weights to anyone who inspects network traffic, may be targeted by adversarial inputs crafted to fool the model, and process potentially sensitive data in the browser. Effective strategies include validating model inputs and outputs, designing data processing pipelines that minimize exposure of sensitive information, and, where a model's weights are themselves sensitive, keeping that model on the server, since obfuscation only raises the cost of extraction rather than preventing it.
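
Validating inputs and outputs can be sketched as two guards around a classifier. The expected-shape convention (`-1` for a flexible dimension such as batch size) and the tolerance are assumptions.

```typescript
// Returns null when the input is acceptable, otherwise a reason string.
function validateInput(data: Float32Array, shape: number[], expected: number[]): string | null {
  if (shape.length !== expected.length) return `expected rank ${expected.length}, got ${shape.length}`;
  for (let i = 0; i < expected.length; i++) {
    // -1 marks a flexible dimension such as the batch size.
    if (expected[i] !== -1 && shape[i] !== expected[i]) return `dimension ${i}: expected ${expected[i]}, got ${shape[i]}`;
  }
  const size = shape.reduce((a, b) => a * b, 1);
  if (data.length !== size) return `shape implies ${size} values, got ${data.length}`;
  if (!data.every(Number.isFinite)) return "input contains NaN or Infinity";
  return null;
}

// True when `probs` is a plausible probability distribution.
function validateProbabilities(probs: ArrayLike<number>, tolerance = 1e-3): boolean {
  let sum = 0;
  for (let i = 0; i < probs.length; i++) {
    const p = probs[i];
    if (!Number.isFinite(p) || p < 0 || p > 1) return false;
    sum += p;
  }
  return probs.length > 0 && Math.abs(sum - 1) <= tolerance;
}
```

Rejecting malformed data before inference avoids confusing errors deep inside the ML library, and checking outputs keeps a misbehaving model from pushing nonsense into the UI.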

Further security considerations include distributing models securely (for example, verifying the integrity of downloaded model files), making model extraction and reverse engineering more costly (they cannot be fully prevented for weights the browser can read), and ensuring that AI features cannot be exploited to compromise application security or user privacy. These measures must be balanced against performance requirements and user experience, so that security enhancements do not significantly impact application functionality or responsiveness.
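
Integrity checks on downloaded model files can be sketched by comparing the SHA-256 digest of the bytes with a hash shipped with the application, similar in spirit to Subresource Integrity. The sketch uses the standard Web Crypto `crypto.subtle.digest` and `fetch` APIs; the function names and the source of the expected hash are assumptions. This detects tampering in transit or on a CDN, but it does not stop anyone from reading weights that reach the browser.

```typescript
async function sha256Hex(data: BufferSource): Promise<string> {
  const digest = await crypto.subtle.digest("SHA-256", data);
  return Array.from(new Uint8Array(digest), (b) => b.toString(16).padStart(2, "0")).join("");
}

// Hypothetical loader: only hands bytes to the ML library if the hash matches.
async function fetchVerifiedModel(url: string, expectedSha256: string): Promise<ArrayBuffer> {
  const response = await fetch(url);
  if (!response.ok) throw new Error(`model download failed: ${response.status}`);
  const buffer = await response.arrayBuffer();
  const actual = await sha256Hex(buffer);
  if (actual !== expectedSha256.toLowerCase()) throw new Error("model integrity check failed");
  return buffer;
}
```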

The security architecture of client-side ML applications must also consider the implications of edge computing environments, offline functionality requirements, and the need for secure communication with backend AI services. Comprehensive security strategies incorporate defense-in-depth approaches that protect against multiple attack vectors while maintaining the flexibility and performance benefits that make client-side ML attractive for modern web applications.

Future Trends and Emerging Patterns

The landscape of React AI integration continues to evolve rapidly as new browser capabilities, ML frameworks, and development patterns emerge. Future trends in React AI state management include wider use of WebAssembly and WebGPU for high-performance inference, integration with browser-native ML APIs such as the proposed Web Neural Network (WebNN) API, and more refined caching and optimization strategies. These capabilities promise to simplify AI integration while improving performance and expanding the range of ML applications that can run effectively in the browser.

Emerging patterns in React AI development include the standardization of AI hook interfaces, the development of cross-framework compatibility layers, and the integration of AI capabilities into popular React libraries and frameworks. These developments are making AI integration more accessible to mainstream React developers while establishing best practices and common patterns that improve code quality and maintainability across the ecosystem.

The future of React AI integration also includes consideration of emerging hardware capabilities, such as specialized ML acceleration in mobile devices and browsers, which will enable more sophisticated AI applications while requiring adaptation of existing state management patterns. Understanding these trends is crucial for developers who want to build AI applications that can evolve and scale effectively as new capabilities become available.

The optimization of React AI applications requires a comprehensive approach that addresses multiple performance dimensions simultaneously. These strategies must balance computational efficiency with user experience requirements while ensuring that AI features enhance rather than degrade overall application performance.

Production Deployment and Monitoring

The deployment of React applications with integrated AI capabilities requires specialized deployment strategies that account for model distribution, performance monitoring, and the unique scaling requirements of ML-powered features. Production deployments must consider model versioning and rollback strategies, performance monitoring for AI features, and the infrastructure requirements for supporting client-side ML at scale. Effective deployment strategies involve implementing CDN distribution for models, monitoring AI feature usage and performance, and developing rollback mechanisms that can quickly disable problematic AI features without affecting core application functionality.
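
A rollback mechanism that disables AI features without affecting core functionality can be sketched as a remote configuration read at startup. The config shape, version keys, and URLs are hypothetical; the point is that a missing or disabled config fails closed to the non-AI code path, and rolling back means pointing `activeVersion` at an older entry.

```typescript
interface AiFeatureConfig {
  enabled: boolean; // false acts as a kill switch
  activeVersion: string;
  versions: Record<string, { url: string; sha256: string }>;
}

type ModelPlan =
  | { mode: "ml"; version: string; url: string; sha256: string }
  | { mode: "disabled"; reason: string };

function planModel(config: AiFeatureConfig | null): ModelPlan {
  // Missing or unreadable config disables AI; the core non-AI path still works.
  if (!config) return { mode: "disabled", reason: "config unavailable" };
  if (!config.enabled) return { mode: "disabled", reason: "feature switched off" };
  if (!Object.prototype.hasOwnProperty.call(config.versions, config.activeVersion)) {
    return { mode: "disabled", reason: `unknown version ${config.activeVersion}` };
  }
  const entry = config.versions[config.activeVersion];
  return { mode: "ml", version: config.activeVersion, ...entry };
}
```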

Advanced production considerations include implementing A/B testing frameworks for AI features, monitoring model performance and accuracy in production environments, and developing automated systems for detecting and responding to AI feature failures. These monitoring and management systems must be capable of tracking both technical performance metrics and business impact metrics to ensure that AI features are contributing positively to overall application success.
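
Monitoring client-side inference often starts with latency percentiles. The hypothetical monitor below keeps a rolling window of timings and reports nearest-rank percentiles; how and where the numbers are reported is left to the application's existing analytics.

```typescript
class LatencyMonitor {
  private samples: number[] = [];
  private windowSize: number;

  constructor(windowSize = 500) {
    this.windowSize = windowSize;
  }

  record(ms: number): void {
    this.samples.push(ms);
    if (this.samples.length > this.windowSize) this.samples.shift(); // drop the oldest sample
  }

  // Times an async call (e.g. one inference) and records its duration, even if it throws.
  async time<T>(fn: () => Promise<T>): Promise<T> {
    const start = performance.now();
    try {
      return await fn();
    } finally {
      this.record(performance.now() - start);
    }
  }

  // Nearest-rank percentile: the smallest sample with at least p% of samples at or below it.
  percentile(p: number): number | undefined {
    if (this.samples.length === 0) return undefined;
    const sorted = [...this.samples].sort((a, b) => a - b);
    const rank = Math.max(1, Math.ceil((p * sorted.length) / 100));
    return sorted[rank - 1];
  }
}
```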

The production lifecycle management of AI-powered React applications also involves consideration of model retraining workflows, user feedback integration, and the continuous improvement of AI capabilities based on real-world usage patterns. These operational considerations are essential for maintaining and improving AI features over time while ensuring that applications remain stable, performant, and aligned with user needs and business objectives.

Disclaimer

This article is for informational purposes only and does not constitute professional advice. The implementation of AI capabilities in web applications requires careful consideration of security, privacy, and performance implications. Readers should conduct thorough testing and security assessments before deploying AI-powered features in production environments. The rapidly evolving nature of browser-based machine learning technologies means that specific implementation details and best practices may change as new capabilities and standards emerge.