The convergence of artificial intelligence and real-time communication technologies has created new opportunities for building intelligent, responsive applications that process and respond to data streams with very low latency. WebSocket APIs have become a core technology for this kind of system: they provide bidirectional communication channels that let AI systems receive continuous data inputs, process them as they arrive, and deliver responses without the per-request overhead of traditional request-response patterns.

The integration of WebSocket protocols with AI processing pipelines represents a fundamental shift in how we design and deploy intelligent systems, moving from batch processing paradigms to continuous, streaming intelligence that can adapt and respond to changing conditions in milliseconds rather than minutes or hours.

The Foundation of Real-Time AI Architecture

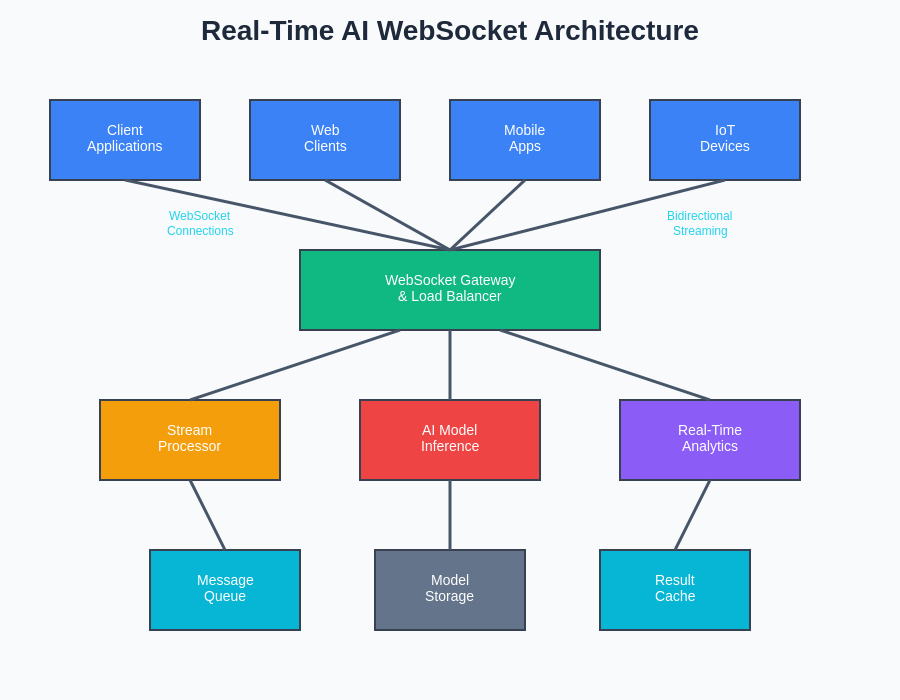

Real-time AI systems built on WebSocket foundations require architectural considerations that go well beyond traditional web application designs. The persistent connections of the WebSocket protocol enable continuous data flow between clients and AI processing engines, creating opportunities for truly interactive and responsive AI experiences. Because a connection is opened once and then reused, each exchange avoids the cost of a new connection and a full set of HTTP headers, which reduces per-message latency and enables the kind of immediate feedback loops that modern AI applications demand.

The architectural complexity of real-time AI systems extends beyond simple message passing to encompass considerations such as connection management, load balancing across multiple AI processing nodes, graceful degradation under high load conditions, and sophisticated error recovery mechanisms. These systems must handle thousands of concurrent connections while maintaining consistent performance and ensuring that AI processing capabilities can scale dynamically based on demand. The result is a robust infrastructure capable of supporting everything from real-time fraud detection in financial transactions to live sentiment analysis of social media streams.

The architecture diagram shows how WebSocket gateways coordinate between multiple client types and processing layers, creating a scalable foundation for real-time AI applications. This multi-tier approach lets data flow efficiently from diverse sources through intelligent processing pipelines to deliver actionable insights with minimal latency.

WebSocket Protocol Advantages for AI Applications

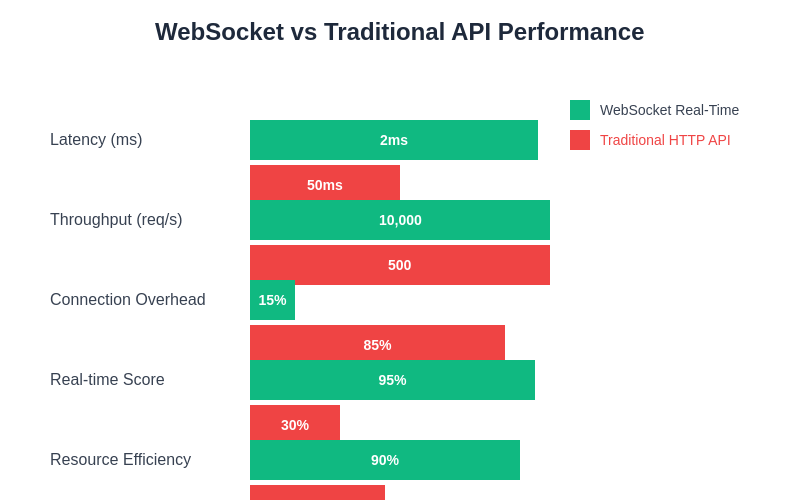

The inherent characteristics of the WebSocket protocol make it particularly well-suited for AI applications that require continuous data ingestion and real-time processing. Unlike traditional HTTP requests that follow a strict request-response pattern, WebSocket connections remain open and allow both clients and servers to initiate data transmission at any time. This bidirectional communication capability is essential for AI systems that need to process streaming data while simultaneously providing real-time feedback, updates, or alerts based on their analysis.

The protocol’s low overhead and persistent connection model significantly reduce the computational and network resources required for maintaining real-time communication channels. This efficiency becomes critically important when dealing with high-frequency data streams such as IoT sensor readings, financial market data, or real-time user interaction data. The reduced latency and increased throughput enabled by WebSocket connections allow AI systems to operate with the responsiveness required for time-sensitive applications such as real-time recommendation engines, live content moderation platforms, and vehicle telemetry monitoring. Hard real-time control loops, such as those inside autonomous vehicles, generally run on dedicated on-board systems rather than over a network connection.
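To make the "low overhead" claim concrete, the sketch below computes the header size of a single WebSocket frame using the framing rules in RFC 6455 and compares it with a per-request HTTP header budget. The function names and the 400-byte HTTP figure are illustrative assumptions, not measurements from this article.

```python
def ws_frame_header_bytes(payload_len: int, masked: bool) -> int:
    """Header size in bytes of one WebSocket frame (RFC 6455, section 5.2)."""
    if payload_len < 0:
        raise ValueError("payload_len must be non-negative")
    if payload_len <= 125:
        header = 2        # FIN/opcode byte + mask bit and 7-bit length
    elif payload_len <= 0xFFFF:
        header = 2 + 2    # plus a 16-bit extended length
    else:
        header = 2 + 8    # plus a 64-bit extended length
    if masked:
        header += 4       # masking key, required on client-to-server frames
    return header


# ASSUMPTION: 400 bytes is an illustrative per-request HTTP/1.1 header size.
# Real values vary widely with cookies, auth headers, and so on.
ASSUMED_HTTP_HEADER_BYTES = 400


def overhead_fraction(payload_len: int, header_bytes: int) -> float:
    """Fraction of bytes on the wire that are framing rather than payload."""
    total = header_bytes + payload_len
    return header_bytes / total if total else 0.0
```

For a 100-byte sensor reading sent from a client, the frame header is 6 bytes, so framing is a small fraction of the bytes sent. Under the assumed HTTP header size, most of each request would be headers.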

The combination of WebSocket’s efficient communication layer with advanced AI processing creates powerful platforms for building intelligent, responsive applications that can adapt to changing conditions in real-time.

Streaming Data Processing Architectures

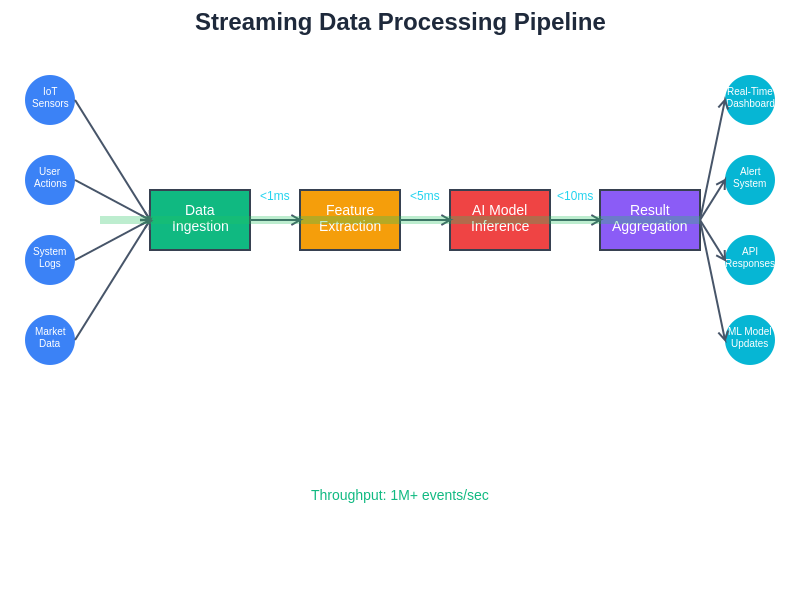

Modern streaming data processing architectures for AI applications typically employ a multi-layered approach that separates data ingestion, processing, and output generation into distinct but interconnected components. The ingestion layer handles the continuous flow of data from various sources through WebSocket connections, ensuring that data is properly formatted, validated, and queued for processing. This layer must be capable of handling variable data rates, managing connection failures, and providing appropriate backpressure mechanisms to prevent system overload during peak usage periods.
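One common way to get backpressure in an ingestion layer is a bounded queue between the receiver and the processor. The sketch below uses only `asyncio` from the standard library. The names `ingest`, `process`, and `run_pipeline` are hypothetical, and the "inference" step is a stand-in that doubles each value.

```python
import asyncio
from typing import List, Optional, Tuple


async def ingest(queue: "asyncio.Queue[Optional[int]]",
                 messages: List[int], depth_log: List[int]) -> None:
    """Stand-in for a WebSocket receive loop feeding the pipeline."""
    for msg in messages:
        # put() suspends while the queue is full, so a slow consumer
        # automatically slows the producer down (backpressure).
        await queue.put(msg)
        depth_log.append(queue.qsize())
    await queue.put(None)  # sentinel: no more input


async def process(queue: "asyncio.Queue[Optional[int]]",
                  results: List[int]) -> None:
    """Stand-in for the AI processing stage."""
    while True:
        msg = await queue.get()
        if msg is None:
            return
        await asyncio.sleep(0)      # yield, as real inference would
        results.append(msg * 2)     # placeholder for model output


async def run_pipeline(messages: List[int], maxsize: int) -> Tuple[List[int], int]:
    queue: "asyncio.Queue[Optional[int]]" = asyncio.Queue(maxsize=maxsize)
    depth_log: List[int] = []
    results: List[int] = []
    await asyncio.gather(ingest(queue, messages, depth_log),
                         process(queue, results))
    return results, max(depth_log, default=0)
```

Calling `asyncio.run(run_pipeline(list(range(50)), maxsize=4))` processes every message in order while the queue depth never exceeds 4. A real deployment would also decide what to do when a client cannot be slowed down, for example dropping or sampling messages.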

The processing layer contains the core AI algorithms and models responsible for analyzing the incoming data streams and generating intelligent insights or predictions. This layer often employs techniques such as sliding window analysis, real-time feature extraction, and incremental learning algorithms that can adapt to new patterns in the data without requiring complete model retraining. The distributed nature of these processing systems allows for horizontal scaling and fault tolerance, ensuring that AI processing capabilities can grow with demand while maintaining consistent performance levels.
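As a small illustration of sliding window analysis, the sketch below flags a value as anomalous when it lies more than a chosen number of standard deviations from the mean of the most recent values. The class name, window size, and threshold are assumptions for illustration. Production systems typically use more robust statistics.

```python
import math
from collections import deque
from typing import Deque


class SlidingWindowDetector:
    """Flags values that deviate strongly from a fixed-size recent window."""

    def __init__(self, window: int, threshold: float = 3.0) -> None:
        self.values: Deque[float] = deque(maxlen=window)  # old values fall off
        self.threshold = threshold

    def update(self, x: float) -> bool:
        """Score x against the window as it was before x arrived, then add x."""
        anomalous = False
        n = len(self.values)
        if n >= 2:
            mean = sum(self.values) / n
            variance = sum((v - mean) ** 2 for v in self.values) / (n - 1)
            std = math.sqrt(variance)
            if std > 0 and abs(x - mean) / std > self.threshold:
                anomalous = True
        self.values.append(x)
        return anomalous
```

Feeding the readings `10, 11, 9, 10, 11, 9, 10, 50` through a detector with `window=5` flags only the final value. Recomputing the mean on every update costs time proportional to the window size, which is acceptable for small windows.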

The output layer manages the delivery of AI-generated insights back to connected clients through the same WebSocket connections used for data ingestion. This creates a closed-loop system where data flows continuously in both directions, enabling interactive AI experiences where user actions can immediately influence AI behavior and vice versa. The output layer must handle message routing, client-specific filtering, and appropriate throttling to ensure that clients receive relevant information without being overwhelmed by excessive data volumes.
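Client-specific filtering and throttling in the output layer can be sketched with a topic subscription set plus a token bucket per client. The class names below are hypothetical, and timestamps are passed in explicitly so the behavior is easy to test. A live server would likely pass `time.monotonic()`.

```python
from typing import Iterable, Set


class TokenBucket:
    """Allows bursts up to `capacity`, refilling at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: float, now: float = 0.0) -> None:
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity
        self.last = now

    def allow(self, now: float) -> bool:
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


class ClientChannel:
    """Per-client delivery policy: topic filter first, then rate limit."""

    def __init__(self, topics: Iterable[str], rate: float, burst: float) -> None:
        self.topics: Set[str] = set(topics)
        self.bucket = TokenBucket(rate, burst)

    def should_send(self, topic: str, now: float) -> bool:
        # Unsubscribed topics are rejected before any token is spent.
        return topic in self.topics and self.bucket.allow(now)
```

A client subscribed to `"alerts"` with `rate=1.0` and `burst=2` receives two alerts immediately, has the third suppressed, and can receive another one second later.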

The streaming data processing pipeline illustrates how diverse data sources flow through feature extraction and AI inference stages to generate real-time outputs across multiple channels. Designed well, this staged approach keeps end-to-end latency low even at high event rates. The achievable latency and throughput depend on model size, hardware, and network conditions, so they should be measured for each deployment.

Real-Time Machine Learning Pipeline Integration

The integration of machine learning pipelines with real-time data streams presents unique challenges and opportunities that distinguish it from traditional batch processing approaches. Real-time ML pipelines must be designed to handle continuous data ingestion while simultaneously performing feature engineering, model inference, and result aggregation without introducing significant latency into the processing chain. This requires careful optimization of data structures, algorithm implementations, and resource allocation strategies to ensure that processing can keep pace with incoming data rates.

Feature engineering in real-time systems often relies on streaming aggregations, rolling statistics, and time-window based calculations that must be computed incrementally as new data arrives. These computations must be designed to maintain accuracy while minimizing memory usage and computational overhead, often requiring sophisticated algorithms for approximate calculations and efficient data structure management. The ability to maintain multiple concurrent feature extraction processes while ensuring consistent performance across varying data rates represents one of the core technical challenges in real-time AI system design.
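A standard technique for incremental statistics is Welford's online algorithm, which updates the mean and variance one value at a time in constant memory. The sketch below is a minimal version. The class name `RunningStats` is an assumption for illustration.

```python
class RunningStats:
    """Running mean and sample variance via Welford's online algorithm."""

    def __init__(self) -> None:
        self.n = 0
        self.mean = 0.0
        self._m2 = 0.0  # sum of squared deviations from the current mean

    def update(self, x: float) -> None:
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        # Uses the old delta and the updated mean; this is what keeps the
        # computation numerically stable.
        self._m2 += delta * (x - self.mean)

    @property
    def variance(self) -> float:
        """Sample variance (n - 1 denominator); 0.0 until two values are seen."""
        return self._m2 / (self.n - 1) if self.n > 1 else 0.0
```

Each update is O(1) in time and memory, so one `RunningStats` instance per feature and per stream stays cheap even at high data rates. This version covers the whole stream. A time-windowed variant needs extra bookkeeping to remove old values.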

Model serving in streaming environments requires specialized infrastructure that can handle high-throughput inference requests while maintaining low latency response times. This often involves techniques such as model caching, batch inference optimization, and intelligent request routing to ensure that AI models can process incoming data efficiently without creating bottlenecks in the processing pipeline. Advanced systems may employ multiple model versions simultaneously, allowing for A/B testing and gradual model deployment strategies that minimize the risk of performance degradation during model updates.
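Gradual model rollout is often implemented by hashing a stable client identifier into a bucket, so each client consistently sees the same model version. The sketch below assumes two hypothetical version labels, `"stable"` and `"canary"`, and a percentage-based split.

```python
import hashlib


def choose_model_version(client_id: str, canary_percent: int) -> str:
    """Deterministically route a client to the 'canary' or 'stable' model."""
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    digest = hashlib.sha256(client_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100  # 0..99, stable per client
    return "canary" if bucket < canary_percent else "stable"
```

Because the bucket depends only on the client identifier, raising `canary_percent` from 10 to 50 keeps every existing canary client on the canary model and only moves additional clients over. This avoids users flipping between versions mid-session.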

Interactive AI User Experiences

The combination of WebSocket communication and real-time AI processing enables entirely new categories of user experiences that were previously impossible with traditional web architectures. Interactive AI applications can provide immediate feedback on user inputs, adapt their behavior based on real-time user interactions, and maintain contextual awareness across extended interaction sessions. This creates opportunities for building AI assistants that can engage in natural, flowing conversations, collaborative editing tools that provide intelligent suggestions in real-time, and gaming experiences that adapt dynamically to player behavior.

Real-time AI user interfaces must be designed to handle the continuous flow of information without overwhelming users or degrading the user experience. This requires sophisticated client-side data management, intelligent filtering and prioritization of AI-generated content, and adaptive interface designs that can adjust their information density based on user preferences and context. The challenge lies in presenting AI insights in a way that enhances rather than distracts from the user’s primary objectives while maintaining the responsiveness that users expect from real-time applications.

The evolution of real-time AI interfaces continues to push the boundaries of what’s possible in human-computer interaction, creating new paradigms for how users can collaborate with intelligent systems.

Scalability and Performance Optimization

Scaling real-time AI systems to handle thousands or millions of concurrent connections while maintaining consistent performance requires careful architectural decisions and optimization strategies. Connection pooling, load balancing, and intelligent request routing become critical for using system resources efficiently and giving individual users consistent performance regardless of overall system load. These systems must also degrade gracefully under extreme load, shedding non-essential work while maintaining core functionality for connected users.

Performance optimization in real-time AI systems often focuses on minimizing end-to-end latency from data ingestion to result delivery. This involves optimizing every component in the processing pipeline, from network protocol implementations to algorithm efficiency and data structure design. Advanced systems employ techniques such as predictive caching, speculative processing, and intelligent batching to reduce latency while maintaining system stability and resource efficiency.
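Batching trades a small, bounded delay for better throughput: requests are grouped until either a size limit or a time limit is reached. The sketch below is one minimal way to express that policy. The class name `MicroBatcher` and its parameters are assumptions, and time is passed in explicitly to keep the logic deterministic.

```python
from typing import Any, List, Optional


class MicroBatcher:
    """Groups items until `max_size` is reached or `max_wait` seconds pass."""

    def __init__(self, max_size: int, max_wait: float) -> None:
        self.max_size = max_size
        self.max_wait = max_wait
        self._items: List[Any] = []
        self._first_ts = 0.0  # arrival time of the oldest pending item

    def add(self, item: Any, now: float) -> Optional[List[Any]]:
        """Queue an item; return a batch if one is ready, else None."""
        if not self._items:
            self._first_ts = now
        self._items.append(item)
        return self._flush_if_due(now)

    def poll(self, now: float) -> Optional[List[Any]]:
        """Call periodically so a partial batch is not delayed forever."""
        return self._flush_if_due(now)

    def _flush_if_due(self, now: float) -> Optional[List[Any]]:
        if not self._items:
            return None
        full = len(self._items) >= self.max_size
        stale = now - self._first_ts >= self.max_wait
        if full or stale:
            batch, self._items = self._items, []
            return batch
        return None
```

With `max_size=3` and `max_wait=0.010`, three quick requests are released together as one batch, while a lone request is released by `poll` once it has waited at least 10 ms. The `max_wait` value caps the latency that batching can add.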

Memory management becomes particularly critical in streaming AI systems where data must be processed continuously without accumulating unbounded state. Efficient data structures, garbage collection optimization, and careful resource lifecycle management are essential for maintaining system stability over extended operation periods. These considerations become even more complex when dealing with stateful AI models that must maintain context across multiple interactions while avoiding memory leaks or excessive resource consumption.
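One simple way to keep per-session context from growing without bound is to cap the number of sessions and evict the least recently used one. The sketch below uses `collections.OrderedDict`. The class name and the choice of LRU eviction are illustrative assumptions, and time-based expiry is another common option.

```python
from collections import OrderedDict
from typing import Any, Optional


class BoundedSessionStore:
    """Keeps at most `max_sessions` contexts, evicting the least recently used."""

    def __init__(self, max_sessions: int) -> None:
        if max_sessions < 1:
            raise ValueError("max_sessions must be at least 1")
        self.max_sessions = max_sessions
        self._data: "OrderedDict[str, Any]" = OrderedDict()

    def get(self, session_id: str) -> Optional[Any]:
        if session_id not in self._data:
            return None
        self._data.move_to_end(session_id)  # mark as most recently used
        return self._data[session_id]

    def put(self, session_id: str, context: Any) -> None:
        self._data[session_id] = context
        self._data.move_to_end(session_id)
        while len(self._data) > self.max_sessions:
            self._data.popitem(last=False)  # drop the least recently used

    def __len__(self) -> int:
        return len(self._data)
```

With a cap of two sessions, storing `a` and `b`, reading `a`, and then storing `c` evicts `b`, because `a` was touched more recently.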

Compared with polling-based HTTP APIs, WebSocket-based real-time AI systems typically offer lower per-message latency, higher throughput, and better resource efficiency, because each message avoids a fresh request and its headers. The size of the gain depends on message size, message frequency, and infrastructure, so it should be measured for each workload rather than assumed.

Security Considerations for Real-Time AI Systems

Real-time AI systems present unique security challenges that extend beyond traditional web application security concerns. The persistent nature of WebSocket connections creates attack surfaces that require specialized security measures, including connection authentication, message validation, and protection against various types of denial-of-service attacks. These systems must implement robust security measures without significantly impacting the performance characteristics that make real-time processing valuable.
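Connection authentication and message validation can be sketched with the standard library alone. In the example below, a client presents an HMAC-based token when it connects, and every inbound message is size-checked and parsed before it reaches any model. The function names, the 64 KiB limit, and the required `type` field are assumptions for illustration. A real token would normally also carry an expiry time.

```python
import hashlib
import hmac
import json

# ASSUMPTION: an illustrative upper bound on inbound message size.
MAX_MESSAGE_BYTES = 64 * 1024


def sign_client(client_id: str, secret: bytes) -> str:
    """Issue a token binding a client id to a server-side secret."""
    return hmac.new(secret, client_id.encode("utf-8"), hashlib.sha256).hexdigest()


def verify_client(client_id: str, token: str, secret: bytes) -> bool:
    """Constant-time check of a token presented at connection time."""
    expected = sign_client(client_id, secret)
    return hmac.compare_digest(expected.encode("utf-8"), token.encode("utf-8"))


def parse_message(raw: bytes) -> dict:
    """Reject oversized, malformed, or untyped messages with ValueError."""
    if len(raw) > MAX_MESSAGE_BYTES:
        raise ValueError("message too large")
    try:
        msg = json.loads(raw.decode("utf-8"))
    except (UnicodeDecodeError, json.JSONDecodeError) as exc:
        raise ValueError("message is not valid UTF-8 JSON") from exc
    if not isinstance(msg, dict) or not isinstance(msg.get("type"), str):
        raise ValueError("message must be an object with a string 'type'")
    return msg
```

Rejecting bad input at the edge keeps malformed or oversized payloads away from the more expensive inference path, which also limits the impact of simple denial-of-service attempts.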

Data privacy and protection become particularly complex in streaming AI systems where sensitive information may be processed in real-time and potentially cached for performance optimization. Implementing proper data anonymization, encryption, and access control mechanisms while maintaining the low latency required for real-time processing presents significant technical challenges. Systems must be designed to handle data privacy requirements such as GDPR compliance while ensuring that AI processing capabilities are not compromised.

Because real-time AI systems run continuously, they require sophisticated monitoring and anomaly detection capabilities to identify potential security threats or system compromises quickly. This includes monitoring for unusual connection patterns, detecting potential data exfiltration attempts, and identifying attempts to manipulate AI processing through malicious inputs. Security measures must be integrated into the real-time processing pipeline with minimal added latency, since every check sits on the critical path.
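A basic form of connection-pattern monitoring is to count recent connection attempts per source and flag sources that exceed a limit. The sketch below keeps a short timestamp history per address. The class name, limits, and example address are assumptions for illustration.

```python
from collections import defaultdict, deque
from typing import DefaultDict, Deque


class ConnectionRateMonitor:
    """Flags a source that makes too many attempts within a time window."""

    def __init__(self, max_attempts: int, window_seconds: float) -> None:
        self.max_attempts = max_attempts
        self.window = window_seconds
        self._attempts: DefaultDict[str, Deque[float]] = defaultdict(deque)

    def record(self, source: str, now: float) -> bool:
        """Record one attempt; return True if the source looks suspicious."""
        history = self._attempts[source]
        # Forget attempts that have aged out of the window.
        while history and now - history[0] >= self.window:
            history.popleft()
        history.append(now)
        return len(history) > self.max_attempts
```

With `max_attempts=3` and a 10-second window, a fourth attempt from the same address within 10 seconds is flagged, while other addresses are unaffected. In a long-running service the per-source map itself needs an eviction policy, or it becomes a memory leak.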

Edge Computing and Distributed Processing

The deployment of real-time AI systems increasingly involves edge computing architectures that distribute processing capabilities closer to data sources and end users. This approach reduces latency by minimizing the distance data must travel while providing improved resilience through distributed processing capabilities. Edge-based real-time AI systems must handle intermittent connectivity, variable processing capabilities, and sophisticated data synchronization challenges while maintaining consistent performance and reliability.
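Edge clients on unreliable links usually reconnect with exponential backoff and random jitter, so that many devices do not retry in lockstep after an outage. The sketch below generates such a delay schedule. The function name and parameter values are illustrative assumptions.

```python
import random
from typing import Iterator


def reconnect_delays(base: float, cap: float, attempts: int,
                     rng: random.Random) -> Iterator[float]:
    """Yield 'full jitter' delays: uniform in [0, min(cap, base * 2**attempt)]."""
    for attempt in range(attempts):
        ceiling = min(cap, base * (2 ** attempt))
        yield rng.uniform(0.0, ceiling)
```

A client would sleep for each yielded delay before its next connection attempt and reset the schedule after a successful connect. With `base=0.5` and `cap=8.0`, the upper bounds grow as 0.5, 1, 2, 4, 8, 8 seconds.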

Distributed real-time AI processing requires careful coordination between multiple processing nodes to ensure that data streams are handled efficiently and that results are aggregated appropriately. This often involves implementing sophisticated consensus algorithms, data partitioning strategies, and conflict resolution mechanisms that can handle the dynamic nature of real-time data streams while maintaining system consistency and reliability.
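For data partitioning, one lightweight option is rendezvous (highest-random-weight) hashing, which assigns each stream to a node without a central lookup table. It is shown here as an example technique, not as the specific method this article has in mind. The function name and node labels are hypothetical.

```python
import hashlib
from typing import Sequence


def owner(stream_key: str, nodes: Sequence[str]) -> str:
    """Pick the node with the highest hash score for this stream key."""
    if not nodes:
        raise ValueError("at least one node is required")

    def score(node: str) -> bytes:
        return hashlib.sha256(f"{node}|{stream_key}".encode("utf-8")).digest()

    return max(nodes, key=score)
```

The useful property is stability: when a node leaves, only the streams that node owned are reassigned, and every other stream stays where it was. That limits how much in-flight state has to move during scaling or failure.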

The integration of edge computing with real-time AI processing creates opportunities for building highly responsive systems that can operate effectively even with limited connectivity to centralized processing resources. These systems must be designed to handle autonomous operation during connectivity disruptions while seamlessly integrating with centralized systems when connectivity is available. This hybrid approach enables new categories of applications that can provide real-time AI capabilities in environments where traditional cloud-based processing would be impractical.
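Autonomous operation during a connectivity gap is often handled with a store-and-forward buffer: results are held locally while offline and flushed in order once the link returns. The sketch below bounds the buffer so an extended outage cannot exhaust memory. The class name and the drop-oldest policy are assumptions for illustration.

```python
from collections import deque
from typing import Any, Callable, Deque


class StoreAndForward:
    """Buffers readings while offline; flushes them in order when online."""

    def __init__(self, capacity: int) -> None:
        self._buffer: Deque[Any] = deque(maxlen=capacity)
        self.dropped = 0  # readings lost because the buffer was full

    def submit(self, reading: Any, connected: bool,
               send: Callable[[Any], None]) -> None:
        if connected:
            self.flush(send)   # older readings go first to preserve order
            send(reading)
            return
        if len(self._buffer) == self._buffer.maxlen:
            self.dropped += 1  # deque(maxlen) discards the oldest entry
        self._buffer.append(reading)

    def flush(self, send: Callable[[Any], None]) -> None:
        while self._buffer:
            send(self._buffer.popleft())
```

With a capacity of two, three readings submitted while offline leave the two most recent in the buffer and record one drop. The next online submission sends the buffered readings first and then the new one.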

Industry Applications and Use Cases

Financial services represent one of the most demanding environments for real-time AI systems, where millisecond latencies can translate to significant competitive advantages or losses. Real-time fraud detection systems score large volumes of transactions as they occur, using streaming AI algorithms to identify suspicious patterns and trigger immediate protective measures. High-frequency trading platforms rely on real-time AI to analyze market conditions and execute trades far faster than human traders could, enabling new categories of financial services and investment strategies.

Healthcare applications of real-time AI include patient monitoring systems that can detect critical changes in vital signs and alert medical staff immediately, telemedicine platforms that provide real-time diagnostic assistance, and surgical robotics systems that use AI to enhance precision and safety during procedures. These applications require extremely high reliability and low latency while handling sensitive medical data that must be protected according to strict regulatory requirements.

Manufacturing and industrial applications leverage real-time AI for predictive maintenance, quality control, and process optimization. These systems process continuous streams of sensor data from industrial equipment to predict failures before they occur, optimize production parameters in real-time, and maintain quality standards through automated inspection and correction systems. The integration of real-time AI with industrial control systems creates opportunities for achieving new levels of efficiency and reliability in manufacturing processes.

Development Tools and Frameworks

The development ecosystem for real-time AI applications has evolved to include specialized frameworks and tools designed specifically for building streaming AI systems. These tools provide abstractions for handling WebSocket connections, managing streaming data processing pipelines, and integrating AI models into real-time processing workflows. Commonly used tools include Apache Kafka for event streaming, TensorFlow Serving for real-time model deployment, and various WebSocket libraries that simplify connection management and message handling.

Modern development environments for real-time AI include sophisticated debugging and monitoring tools that help developers understand system behavior under various load conditions and identify performance bottlenecks in complex processing pipelines. These tools provide real-time visibility into system metrics, connection statistics, and processing latencies, enabling developers to optimize their applications for maximum performance and reliability.

Testing real-time AI systems requires specialized approaches that can simulate realistic load conditions, validate system behavior under various failure scenarios, and ensure that AI processing maintains accuracy and consistency across different operating conditions. This includes load testing tools that can simulate thousands of concurrent WebSocket connections, chaos engineering approaches that test system resilience, and specialized testing frameworks for validating AI model performance in streaming environments.

Future Developments and Emerging Trends

The future of real-time AI systems points toward even greater integration between AI processing capabilities and real-time communication technologies. Emerging trends include the development of specialized hardware for real-time AI processing, advanced compression algorithms for reducing bandwidth requirements in streaming AI applications, and new protocol developments that optimize communication patterns for AI workloads. These developments promise to enable even more sophisticated real-time AI applications with lower latency and higher throughput capabilities.

Quantum computing is a more speculative direction for real-time AI processing. Quantum algorithms offer large theoretical speedups for certain classes of problems, but it is not yet established that these carry over to practical AI workloads. Practical quantum computing for real-time AI remains in early development stages, so any impact on production streaming systems is a long-term possibility rather than a near-term expectation.

The integration of 5G and future wireless communication technologies with real-time AI systems will enable new categories of mobile and IoT applications that can leverage sophisticated AI processing capabilities while maintaining the low latency required for real-time operation. This convergence of advanced communication technologies with AI processing capabilities promises to unlock entirely new categories of applications and services that were previously impossible due to technical limitations.

The continued evolution of real-time AI systems will likely focus on improving energy efficiency, reducing computational requirements, and developing more sophisticated algorithms that can maintain accuracy while operating under the constraints imposed by real-time processing requirements. These developments will make real-time AI capabilities accessible to a broader range of applications and deployment scenarios, democratizing access to advanced AI technologies across various industries and use cases.

Disclaimer

This article is for informational purposes only and does not constitute professional advice. The views expressed are based on current understanding of real-time AI technologies and their applications. Readers should conduct their own research and consider their specific requirements when implementing real-time AI systems. The effectiveness and suitability of real-time AI solutions may vary depending on specific use cases, technical requirements, and operational constraints.