The rapid growth of artificial intelligence applications has reshaped data processing and storage requirements, and with them the demands placed on the caching layers that serve data-intensive machine learning workloads. As AI systems mature, choosing between Redis and Memcached becomes less a question of raw speed and more an architectural decision that shapes how models, features, and inference results are stored and served at scale.

Caching sits where system performance, data accessibility, and computational efficiency meet, which makes the choice of caching strategy relevant to machine learning, natural language processing, and computer vision applications alike.

Understanding the AI Caching Challenge

The unique characteristics of AI applications present distinct challenges that traditional caching solutions were not originally designed to address. Unlike conventional web applications that primarily cache static content or simple database queries, AI systems must handle complex data structures including multi-dimensional arrays, trained model parameters, feature vectors, and real-time inference results that demand sophisticated storage and retrieval mechanisms.

Machine learning workflows often read large datasets repeatedly during training, while inference demands low-latency retrieval of model weights, features, and intermediate computations. The caching layer therefore needs high-throughput reads and writes, support for the data shapes these workloads produce, atomic operations for concurrent updates, and eviction policies matched to the workload’s access pattern, since neither Redis nor Memcached knows on its own which cached element matters most to a model.
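Inference caching usually follows the cache-aside pattern: look up a key derived from the model version and the input, and run the model only on a miss. The sketch below assumes a hypothetical `InMemoryTTLCache` standing in for a real client; with redis-py the TTL argument is `ex=` and with pymemcache it is `expire=`, so a thin adapter would be needed. The key scheme (`infer:<version>:<sha256>`) is likewise an illustrative assumption.

```python
import hashlib
import json
import time
from typing import Any, Callable, Optional


class InMemoryTTLCache:
    """Hypothetical stand-in for a Redis or Memcached client (get/set with TTL)."""

    def __init__(self) -> None:
        self._data: dict[str, tuple[float, bytes]] = {}

    def get(self, key: str) -> Optional[bytes]:
        entry = self._data.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if time.monotonic() >= expires_at:
            del self._data[key]  # expired: behave like a miss
            return None
        return value

    def set(self, key: str, value: bytes, ttl: int) -> None:
        self._data[key] = (time.monotonic() + ttl, value)


def inference_key(model_version: str, features: dict[str, Any]) -> str:
    # Canonical JSON so logically identical inputs produce the same key.
    canonical = json.dumps(features, sort_keys=True, separators=(",", ":"))
    digest = hashlib.sha256(canonical.encode("utf-8")).hexdigest()
    # The model version is part of the key, so a new model never reads old predictions.
    return f"infer:{model_version}:{digest}"


def cached_predict(cache: InMemoryTTLCache, model_version: str,
                   features: dict[str, Any],
                   predict: Callable[[dict[str, Any]], Any],
                   ttl: int = 300) -> Any:
    """Cache-aside: return a cached prediction, or compute and store it on a miss."""
    key = inference_key(model_version, features)
    cached = cache.get(key)
    if cached is not None:
        return json.loads(cached)
    result = predict(features)
    cache.set(key, json.dumps(result).encode("utf-8"), ttl)
    return result
```

Putting the model version in the key means a model rollout needs no explicit invalidation: old entries simply stop being read and expire.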

Redis Architecture and AI Advantages

Redis distinguishes itself in the AI landscape through its data structure support and atomic operations, which map naturally onto many machine learning access patterns. Native hashes, lists, sets, and sorted sets let an application read or update one field of a cached record (for example, a single feature or a version counter) without fetching and rewriting the whole object. Values are still stored as byte strings, so numeric arrays such as embeddings must be encoded, but the per-field granularity avoids the repeated whole-object serialization that adds up in high-frequency operations.
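Because Redis values are byte strings, an embedding stored in a hash field still needs an encoding. A minimal sketch, assuming little-endian float32 as the chosen format and a hypothetical record layout; the resulting mapping is the shape redis-py’s `client.hset(name, mapping=record)` accepts, after which a later `HSET` can update `model_version` alone without touching the embedding.

```python
import struct
from typing import Sequence


def pack_float32(vector: Sequence[float]) -> bytes:
    # '<' = little-endian, 'f' = 32-bit float: 4 bytes per component.
    return struct.pack(f"<{len(vector)}f", *vector)


def unpack_float32(blob: bytes) -> list[float]:
    count = len(blob) // 4
    return list(struct.unpack(f"<{count}f", blob))


# Hypothetical fields of one feature record, e.g. under key "features:user:42".
record = {
    "embedding": pack_float32([0.25, -1.5, 3.0]),
    "model_version": "v3",
    "updated_at": "1700000000",
}
```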

Redis Cluster and built-in replication let AI systems spread large parameter sets and feature data across multiple nodes, within the limit that everything must fit in the cluster’s combined memory. Redis Streams add an append-only log with consumer groups, which is particularly valuable for real-time pipelines such as the continuous data feeds of online learning systems and real-time inference applications.

Memcached Simplicity and Performance Focus

Memcached takes a fundamentally different approach, emphasizing simplicity and raw performance, which suits AI applications with straightforward caching requirements. It is a multithreaded server exposing a small set of key-value commands, and this minimal design keeps memory overhead low and latency predictable, both of which matter for AI systems operating under strict latency constraints.

Memcached scales horizontally in a simple way: servers are independent and do not communicate with each other, and the client library decides which server holds each key. The plain key-value model is less flexible than Redis but works well for AI workloads that mainly cache opaque binary objects, such as serialized model components, preprocessed datasets, or computed feature representations, that never need to be manipulated inside the cache. One practical constraint is item size: Memcached’s default maximum item size is 1 MB (adjustable with the `-I` option), so larger objects such as full model weights must be split across several keys or kept elsewhere.
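When an object exceeds the item size limit, one common workaround is to split it into chunks plus a small manifest. This is a sketch under assumptions: the `<name>:chunk:<i>` and `<name>:manifest` key layout and the 1,000,000-byte chunk size are illustrative choices. The returned dict has the shape pymemcache’s `set_many()` takes, and `get_many()` returns a dict of found keys that `reassemble` can consume.

```python
import hashlib

# Below Memcached's default 1 MB item limit, leaving headroom for key and metadata.
CHUNK_SIZE = 1000 * 1000


def chunk_items(name: str, blob: bytes, chunk_size: int = CHUNK_SIZE) -> dict[str, bytes]:
    """Split a blob into cache items plus a manifest holding count and checksum."""
    chunks = [blob[i:i + chunk_size] for i in range(0, len(blob), chunk_size)]
    items = {f"{name}:chunk:{i}": chunk for i, chunk in enumerate(chunks)}
    digest = hashlib.sha256(blob).hexdigest()
    items[f"{name}:manifest"] = f"{len(chunks)}:{digest}".encode()
    return items


def reassemble(name: str, items: dict[str, bytes]) -> bytes:
    """Rebuild the blob; a missing (evicted) chunk raises KeyError, treat it as a miss."""
    count, digest = items[f"{name}:manifest"].decode().split(":")
    blob = b"".join(items[f"{name}:chunk:{i}"] for i in range(int(count)))
    if hashlib.sha256(blob).hexdigest() != digest:
        raise ValueError("chunks are incomplete or from different versions")
    return blob
```

Chunks can be evicted independently, so the checksum guards against stitching together a partial or mixed set.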

Performance Characteristics in AI Workloads

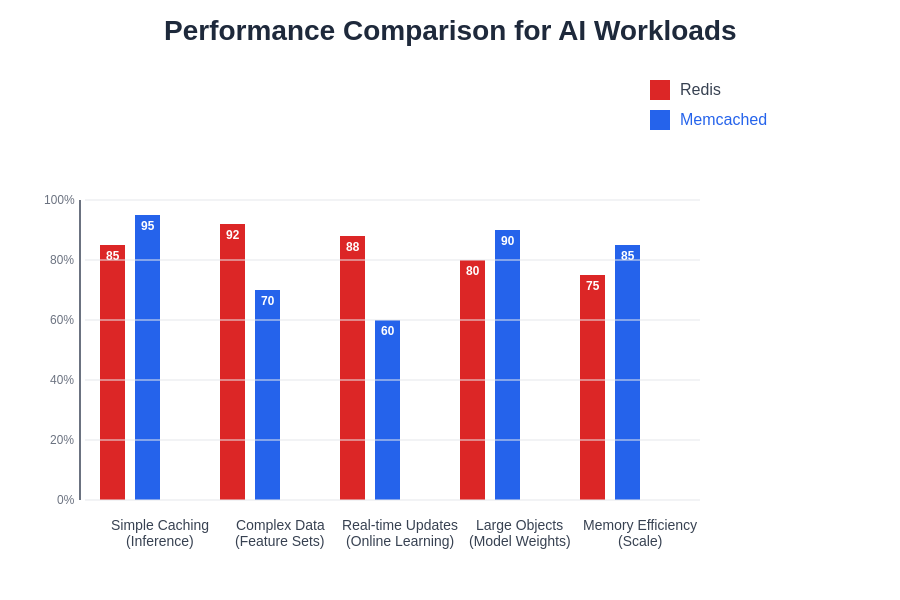

The performance differences between Redis and Memcached become pronounced in AI contexts, where data access patterns and computational requirements create distinct optimization opportunities. Redis excels where the cache must do more than store bytes: atomic updates to counters or model parameters, and server-side operations such as ranking and set algebra that are common in online learning systems and real-time recommendation engines.

Memcached tends to perform best in pure caching scenarios where AI applications need to retrieve objects quickly with minimal processing. Its slab allocator, simple protocol, and multithreaded request handling (Redis executes commands on a single main thread) can yield lower CPU use per request and higher per-node throughput for plain get-and-set traffic, which is characteristic of inference caching and of preprocessed data in batch processing workflows. Actual numbers depend on object size, client behavior, and hardware, so benchmarks should use a representative workload.

The performance characteristics reveal distinct optimization patterns that align with different AI application architectures. Redis demonstrates superior performance in complex data manipulation scenarios, while Memcached excels in high-throughput simple retrieval operations that are common in inference pipelines and batch processing systems.

Data Structure Support for Machine Learning

The difference in data structure support significantly affects which AI application patterns each system suits. Redis provides native structures that map naturally to machine learning concepts: a hash can hold a feature record or training metadata, a sorted set can hold scored candidates, and a list or stream can hold queued jobs. Tensors and model weights still have to be encoded as bytes, but related fields can be stored, read, and updated individually rather than as one serialized blob.

Machine learning applications frequently operate on collections of data, which benefits from Redis’s atomic operations on lists, sets, and sorted sets. Feature ranking systems can use sorted sets for efficient top-k retrieval, and recommendation engines can use set operations for fast intersections and unions over user preference data. Redis performs this work server-side; with Memcached’s plain key-value model, the application must fetch the data and compute the result itself.
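To make the contrast concrete, here is a sketch of the client-side work a Memcached-backed application would do, assuming (hypothetically) that scores and preference lists are cached as JSON blobs. The comments name the Redis commands that perform the same work on the server.

```python
import heapq
import json


def top_k_client_side(scores_blob: bytes, k: int) -> list[tuple[str, float]]:
    # The whole score map is fetched and decoded on every call.
    # Redis equivalent: ZREVRANGE feature_scores 0 <k-1> WITHSCORES on a sorted set.
    scores: dict[str, float] = json.loads(scores_blob)
    return heapq.nlargest(k, scores.items(), key=lambda item: item[1])


def common_items_client_side(a_blob: bytes, b_blob: bytes) -> set[str]:
    # Redis equivalent: SINTER <set-a> <set-b>, computed without transferring both sets.
    return set(json.loads(a_blob)) & set(json.loads(b_blob))
```

The client-side version transfers and decodes the full collection each time, which is the cost that server-side sorted sets and set operations avoid as collections grow.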

Scalability and Distribution Strategies

The scalability approaches of Redis and Memcached have different architectural implications for AI systems operating at various scales. Redis Cluster partitions the keyspace into 16,384 hash slots spread across primary nodes and replicates each primary to one or more replicas, giving AI applications automatic sharding and failover for model parameters and feature data. Replication is asynchronous, however, so a failover can lose recently acknowledged writes: Redis Cluster provides high availability rather than strong consistency, and mission-critical state needs a durable source of truth behind it.
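Redis Cluster maps each key to a slot with CRC16 (the XMODEM variant) modulo 16384, and multi-key commands such as `MGET` or `SINTER` only work when all keys share a slot. Hash tags, the substring inside the first non-empty `{...}`, let related keys, for example all shards of one model, land in the same slot. The following is a sketch of that documented rule; the `{model:v3}:layer:N` key names are hypothetical.

```python
def crc16_xmodem(data: bytes) -> int:
    """CRC-16/XMODEM: polynomial 0x1021, initial value 0, no reflection."""
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ 0x1021) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


def hash_slot(key: str) -> int:
    raw = key.encode("utf-8")
    start = raw.find(b"{")
    if start != -1:
        end = raw.find(b"}", start + 1)
        # Only a non-empty {...} section counts as a hash tag.
        if end != -1 and end != start + 1:
            raw = raw[start + 1:end]
    return crc16_xmodem(raw) % 16384
```

In practice `CLUSTER KEYSLOT <key>` returns the same value from a running cluster; the sketch just shows why tagged keys colocate.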

Memcached’s client-side distribution model is simpler: the client library, usually through consistent hashing, maps each key to a server. There is no server-side replication, so keys on a failed node are simply misses until the data is recomputed or reloaded, and the application must plan for that explicitly. This approach can suit AI systems with custom distribution requirements or those that want fine-grained control over data placement, particularly in heterogeneous computing environments where different AI workloads have distinct performance characteristics.
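Client-side distribution is typically consistent hashing (some clients use rendezvous hashing instead). A minimal ring sketch, assuming MD5-derived ring positions, 100 virtual nodes per server, and hypothetical server addresses. Its key property is that adding a server moves only the keys that now belong to the new server.

```python
import bisect
import hashlib


class HashRing:
    """Consistent-hash ring mapping keys to Memcached servers on the client side."""

    def __init__(self, servers: list[str], vnodes: int = 100) -> None:
        self._ring: list[tuple[int, str]] = []
        for server in servers:
            for i in range(vnodes):
                # Virtual nodes spread each server around the ring for better balance.
                self._ring.append((self._hash(f"{server}#{i}"), server))
        self._ring.sort()
        self._points = [point for point, _ in self._ring]

    @staticmethod
    def _hash(value: str) -> int:
        return int.from_bytes(hashlib.md5(value.encode()).digest()[:8], "big")

    def server_for(self, key: str) -> str:
        # First ring point clockwise from the key's hash, wrapping past the end.
        idx = bisect.bisect(self._points, self._hash(key)) % len(self._points)
        return self._ring[idx][1]
```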

Memory Management and Efficiency

Memory management is a critical consideration for AI applications, where the volume of cacheable data can easily exceed available memory. Redis lets operators choose an eviction policy through `maxmemory-policy`, including approximated LRU and LFU variants that apply either to all keys or only to keys with a TTL, and `volatile-ttl`, which evicts the keys closest to expiry first. LFU in particular keeps frequently used items, such as popular embeddings, resident even when they were not accessed most recently. Memcached evicts by LRU within each slab class and offers less policy control.

The memory overhead characteristics differ significantly between the platforms, with Memcached providing lower per-object overhead that can be crucial for AI applications caching millions of small data objects such as individual feature vectors or model parameters. Redis’s additional metadata for supporting complex data structures results in higher memory overhead but enables functionality that can eliminate the need for external data processing components.
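A quick calculation shows why per-item overhead matters mostly for small objects. In this sketch the overhead figure is an assumed input, not a measured Redis or Memcached number; it should be measured on a real deployment (for example with Redis `MEMORY USAGE <key>` or Memcached `stats slabs`).

```python
def cache_footprint_bytes(num_items: int, dims: int, overhead_per_item: int,
                          bytes_per_value: int = 4) -> int:
    """Rough footprint: vector payload plus an assumed per-item overhead."""
    payload = dims * bytes_per_value  # float32 -> 4 bytes per component
    return num_items * (payload + overhead_per_item)


def overhead_share(dims: int, overhead_per_item: int, bytes_per_value: int = 4) -> float:
    """Fraction of each item's memory spent on overhead rather than data."""
    payload = dims * bytes_per_value
    return overhead_per_item / (payload + overhead_per_item)
```

With an assumed 64 bytes of overhead, 10 million 128-dimensional float32 vectors need about 5.76 GB, of which roughly 11% is overhead; for 16-dimensional vectors the overhead equals the payload, while for 1,024 dimensions it falls below 2%.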

Persistence and Durability Considerations

The persistence capabilities of Redis provide significant advantages for AI applications that need durable storage of expensive-to-compute results such as trained model parameters, computed embeddings, or processed training datasets. Redis offers RDB snapshots and AOF (append-only file) logging, which let AI systems restart without recomputing cached results that may have taken hours or days to generate. Recovery is only as fresh as the persistence settings allow: an RDB restore returns the data as of the last snapshot, and AOF with `appendfsync everysec` can lose roughly the last second of writes.

Memcached keeps data purely in memory, so a process restart normally empties the cache, and AI applications must implement external persistence when durability is required. This is acceptable, even advantageous, for systems that already have robust backup and recovery mechanisms or that can tolerate recomputing lost entries in exchange for a simpler, faster cache.

Integration Patterns and Implementation Strategies

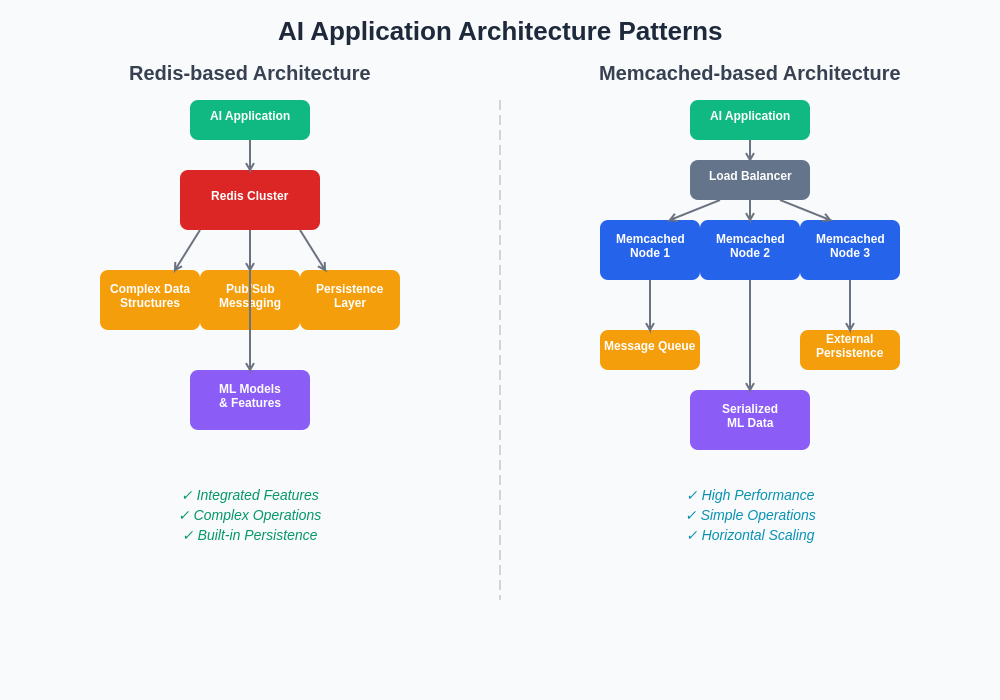

The integration patterns for Redis and Memcached in AI applications reflect different architectural philosophies that impact system design and operational complexity. Redis’s rich feature set enables it to serve multiple roles within AI architectures, functioning simultaneously as a cache, message queue, and coordination mechanism for distributed training operations, simplifying overall system architecture while providing powerful functionality.

Memcached’s focused approach requires AI applications to combine it with additional components for complex functionality, but this separation of concerns can result in more modular architectures that are easier to optimize and maintain. The choice between these approaches often depends on team expertise, operational preferences, and the specific requirements of AI workloads in terms of functionality versus performance optimization.

The architectural patterns demonstrate how Redis and Memcached integrate differently into AI application ecosystems, with Redis providing centralized functionality and Memcached offering distributed simplicity that can be combined with specialized components for complex AI operations.

Use Case Analysis for AI Applications

Different categories of AI applications exhibit distinct caching requirements that favor either Redis or Memcached depending on the specific operational patterns and performance requirements. Real-time recommendation systems typically benefit from Redis’s complex data structures and atomic operations that enable sophisticated ranking and filtering operations directly within the cache, reducing latency and computational overhead.

Batch processing AI workloads, including training pipelines and offline inference systems, often do well with Memcached’s simple storage model, which provides high throughput for object retrieval without the overhead of advanced features they do not use. Computer vision applications can use it for image preprocessing results and intermediate tensors, provided each item fits within the configured maximum item size; large model files generally have to be chunked or stored outside the cache.

Natural language processing applications present mixed requirements. Redis’s data structures, together with optional search capabilities that add full-text and vector indexing, help applications that query or rank text-derived data inside the cache, while simpler NLP pipelines may achieve better performance with Memcached’s straightforward binary storage of preprocessed language model outputs and embeddings.

Performance Optimization Techniques

Optimization strategies for Redis in AI applications focus on leveraging advanced features while minimizing overhead through careful configuration of data structures and operations. Pipeline operations can significantly improve performance for AI workloads that require multiple related operations, such as updating multiple model parameters or retrieving related feature sets, by reducing network round-trips and improving overall throughput.
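The benefit of pipelining is mostly round-trip arithmetic, and the redis-py call pattern is short. In this sketch `client` is assumed to be a `redis.Redis` instance and the feature keys are hypothetical; `transaction=False` requests plain pipelining without wrapping the batch in `MULTI`/`EXEC`. The cost function counts network wait only and ignores server processing time.

```python
import math


def round_trip_cost_ms(num_commands: int, rtt_ms: float, batch_size: int = 1) -> float:
    """Network wait only: each batch costs one round trip (server time ignored)."""
    return math.ceil(num_commands / batch_size) * rtt_ms


def fetch_feature_hashes(client, feature_keys: list[str]) -> list[dict]:
    """Queue one HGETALL per key and send them all in a single round trip.

    `client` is assumed to be a redis-py `redis.Redis` instance.
    """
    pipe = client.pipeline(transaction=False)  # plain pipelining, no MULTI/EXEC
    for key in feature_keys:
        pipe.hgetall(key)
    return pipe.execute()  # one result per queued command, in order
```

At an assumed 0.5 ms round trip, 1,000 sequential commands spend about 500 ms waiting on the network, while batches of 100 spend about 5 ms.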

Memcached optimization in AI contexts emphasizes efficient key design, sensible object sizing, and selective compression to maximize cache hit rates while minimizing memory usage. Because Memcached has no command to delete keys by prefix, key schemes usually embed a namespace version (for example, a model version) so that bumping the version effectively invalidates every old key, which then ages out through LRU eviction. Careful tuning of client connection pooling also matters for high-concurrency inference workloads.
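A key builder for versioned namespaces might look like the sketch below. Memcached keys are limited to 250 bytes and may not contain spaces or control characters, so anything that breaks those rules is hashed. The `namespace:v<version>:id` layout is an assumption, and the namespace itself is assumed to be safe ASCII. In a live system the current version number would typically sit in its own small key and be bumped with `incr` to invalidate a whole namespace at once.

```python
import hashlib

MAX_KEY_BYTES = 250  # Memcached's maximum key length


def memcached_key(namespace: str, version: int, raw_id: str) -> str:
    """Build a versioned key, hashing ids that are too long or contain unsafe bytes."""
    key = f"{namespace}:v{version}:{raw_id}"
    encoded = key.encode("utf-8")
    # Spaces (32), control characters (< 32) and DEL (127) are not allowed in keys.
    if len(encoded) > MAX_KEY_BYTES or any(b <= 32 or b == 127 for b in encoded):
        digest = hashlib.sha256(raw_id.encode("utf-8")).hexdigest()
        key = f"{namespace}:v{version}:h:{digest}"
    return key
```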

Monitoring and Operational Considerations

Monitoring Redis in AI applications means tracking memory usage patterns, slow commands, and cluster coordination overhead. Built-in tools such as `INFO`, `SLOWLOG`, `LATENCY`, and `MEMORY USAGE` provide detailed insight into operation performance that can guide optimization for AI-specific workloads.

Memcached monitoring focuses on fundamental metrics such as hit rates, memory utilization, and connection counts, all reported by the `stats` command, which directly reflect AI application performance. The simpler monitoring model suits AI teams that prefer a few focused metrics without the complexity of monitoring advanced features, and it keeps performance analysis and capacity planning straightforward.
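Hit ratio is the first number to watch on either system. This sketch parses the text-protocol reply to Memcached’s `stats` command (`STAT <name> <value>` lines ending in `END`) and computes the ratio. For Redis, the same `hit_ratio` can be fed from redis-py’s `client.info("stats")`, which returns integer `keyspace_hits` and `keyspace_misses` fields.

```python
def parse_memcached_stats(raw: str) -> dict[str, str]:
    """Parse a `stats` reply into {name: value}; the trailing END line is ignored."""
    stats: dict[str, str] = {}
    for line in raw.splitlines():
        parts = line.split(" ", 2)
        if len(parts) == 3 and parts[0] == "STAT":
            stats[parts[1]] = parts[2]
    return stats


def hit_ratio(hits: int, misses: int) -> float:
    """Fraction of lookups served from cache; 0.0 when there has been no traffic."""
    total = hits + misses
    return hits / total if total else 0.0
```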

Security and Compliance in AI Environments

Security considerations for caching solutions in AI applications cover both data protection and compliance, which matter increasingly in regulated industries and privacy-sensitive applications. Redis supports password authentication, access control lists (ACLs), and TLS encryption (ACLs and TLS since Redis 6.0), enabling secure deployment where caches hold sensitive training data or proprietary model parameters that require protection from unauthorized access.

Memcached’s security model is simpler: it offers optional SASL authentication and, in recent versions, TLS, but deployments typically rely on network-level protection such as private subnets and firewalls. That can be advantageous where security is enforced at the infrastructure level rather than within individual components. The right choice depends on regulatory requirements and the organizational security policies that govern AI application deployment and data handling.

Cost Optimization and Resource Management

Cost considerations for Redis versus Memcached in AI applications extend beyond simple licensing and hardware costs to include operational overhead, development complexity, and performance optimization requirements. Redis’s comprehensive feature set can reduce the need for additional infrastructure components while providing functionality that can improve overall AI application efficiency and reduce computational costs.

Memcached’s lower resource overhead can result in reduced infrastructure costs for AI applications with straightforward caching requirements, particularly in cloud environments where memory and CPU costs are directly correlated with usage. The optimal choice often depends on the total cost of ownership analysis that considers development time, operational complexity, and long-term maintenance requirements in addition to direct infrastructure costs.

Future Trends and Technology Evolution

Caching technologies continue to evolve with the changing requirements of AI applications. Redis has added vector similarity search through its search and query capabilities (originally the RediSearch module), which allow embeddings to be indexed and queried for nearest neighbors inside the cache tier, in line with the growing adoption of AI applications across industries.

Memcached’s development focuses on performance optimizations and efficiency improvements that benefit AI applications requiring maximum throughput and minimal overhead. The ongoing development of both platforms reflects the growing recognition of caching as a critical component in AI infrastructure that requires specialized optimization for machine learning workloads.

The emergence of specialized AI caching solutions and the integration of caching capabilities into AI frameworks suggests a future where caching technology becomes increasingly specialized for artificial intelligence applications, potentially offering hybrid approaches that combine the best characteristics of both Redis and Memcached while providing AI-specific optimizations and features.

Implementation Recommendations

The decision between Redis and Memcached for AI applications should be based on careful analysis of specific workload requirements, team expertise, and long-term architectural goals. Redis is typically the optimal choice for AI applications requiring complex data operations, atomic updates, persistence capabilities, or integration with message queuing and coordination functions that are common in sophisticated machine learning systems.

Memcached represents the better choice for AI applications prioritizing maximum performance for simple caching operations, minimal memory overhead, or integration into existing distributed caching infrastructures where simplicity and predictability are more valuable than advanced functionality. The decision should also consider the availability of expertise, operational preferences, and compatibility with existing AI development and deployment frameworks.

Hybrid approaches that utilize both Redis and Memcached for different aspects of AI applications can provide optimal performance by leveraging the strengths of each platform for appropriate use cases. This strategy requires additional operational complexity but can result in superior overall performance for complex AI systems with diverse caching requirements that span simple object storage and sophisticated data manipulation operations.

Disclaimer

This article is for informational purposes only and does not constitute professional advice. The views expressed are based on current understanding of caching technologies and their applications in AI systems. Readers should conduct their own research and consider their specific requirements when selecting caching solutions for artificial intelligence applications. Performance characteristics may vary depending on specific use cases, infrastructure configurations, and application architectures.