The artificial intelligence revolution has democratized access to sophisticated machine learning models, but deploying these models at scale remains a significant challenge for developers and organizations. Two platforms have emerged as leading solutions for AI model deployment: Replicate and Hugging Face. Both platforms offer unique approaches to making AI models accessible and scalable, yet they serve different needs and use cases within the AI ecosystem. Understanding the nuances between these platforms is crucial for making informed decisions about AI infrastructure and deployment strategies.

The choice between deployment platforms can significantly impact development velocity, operational costs, and the overall success of AI-powered applications, making this comparison essential for developers and technical decision-makers.

Understanding Replicate: Simplicity in AI Deployment

Replicate has positioned itself as the most straightforward platform for running machine learning models in the cloud. The platform’s philosophy centers around eliminating the complexity traditionally associated with model deployment, offering developers a seamless experience from model packaging to production scaling. Replicate’s approach focuses on containerized models that can be deployed with minimal configuration, making it particularly attractive for developers who want to integrate AI capabilities without extensive infrastructure management.

The platform’s strength lies in its Docker-based deployment system, which ensures consistent model execution across different environments. Developers package their models as standard containers (Replicate’s open-source Cog tool builds these images from a short configuration file and a prediction class), push them to Replicate’s infrastructure, and then run them through simple API calls. This approach has made Replicate particularly popular among indie developers, startups, and teams that need quick deployment capabilities without the overhead of managing complex machine learning infrastructure.

Replicate’s model library emphasizes curated, production-ready models that have been optimized for performance and reliability. Because every public model runs behind the same versioned prediction API, developers can pin a specific model version and expect consistent behavior in their applications. This curation approach contrasts with more open platforms and provides a level of reliability that is particularly valuable for production applications.

Hugging Face: The Open Source AI Ecosystem

Hugging Face has evolved from a natural language processing company into a comprehensive platform that is often described as the GitHub of machine learning. The platform’s open-source philosophy has created the largest repository of machine learning models, datasets, and Spaces (hosted demo applications) available to developers worldwide. Hugging Face’s ecosystem approach encompasses everything from model training and fine-tuning to deployment and collaboration, making it a one-stop destination for AI development workflows.

The platform’s transformers library has become the de facto standard for working with pre-trained language models, providing developers with easy access to well-known model families such as GPT-2, BERT, and T5. This extensive library support has created a vibrant community of researchers and developers who contribute models, share insights, and collaborate on advancing the field of artificial intelligence. The community-driven approach has resulted in rapid innovation and an unprecedented variety of available models.

Hugging Face’s integration capabilities extend beyond simple model hosting to include comprehensive development tools, collaborative features, and enterprise-grade deployment options that scale with organizational needs.

Deployment Models and Architecture

The architectural approaches of Replicate and Hugging Face reflect their different philosophies toward AI deployment. Replicate emphasizes serverless deployment with automatic scaling, allowing models to scale from zero to handle varying traffic loads without manual intervention. This serverless approach eliminates the need for capacity planning and ensures cost-effective operation by charging only for actual usage. The platform automatically handles model loading, memory management, and request routing, abstracting away the complexities of infrastructure management.

Hugging Face offers more diverse deployment options, ranging from simple inference endpoints to dedicated instances for high-throughput applications. The platform’s Inference Endpoints provide managed deployment with customizable compute resources, while Spaces offer a unique approach to model demonstration and sharing. This flexibility allows developers to choose deployment strategies that align with their specific performance requirements, budget constraints, and operational preferences.

The scaling characteristics of both platforms differ significantly in their implementation and cost structures. Replicate’s per-second billing model with automatic scaling makes it ideal for applications with unpredictable traffic patterns, while Hugging Face’s various pricing tiers accommodate everything from experimental projects to enterprise-scale deployments. Understanding these architectural differences is crucial for selecting the platform that best matches application requirements and usage patterns.

Model Library and Ecosystem

The model ecosystems of Replicate and Hugging Face represent fundamentally different approaches to AI model distribution and accessibility. Replicate maintains a carefully curated collection of high-quality models that have been optimized for production use. Each model in Replicate’s library undergoes performance testing and optimization to ensure reliable operation at scale. This curation process results in a smaller but more reliable selection of models that developers can trust for production applications.

Hugging Face operates the world’s largest repository of machine learning models, with hundreds of thousands of models contributed by researchers, organizations, and individual developers. This vast ecosystem includes everything from cutting-edge research models to production-ready implementations across multiple domains including natural language processing, computer vision, audio processing, and multimodal AI. The platform’s open approach has fostered innovation and collaboration but requires developers to evaluate model quality and suitability for their specific use cases.

The discovery and selection process differs significantly between platforms. Replicate’s focused catalog makes it easy to find proven models for common use cases, while Hugging Face’s extensive search and filtering capabilities help developers navigate the vast model landscape. The trade-off between curation and variety reflects different priorities: Replicate prioritizes reliability and ease of use, while Hugging Face emphasizes innovation and comprehensive coverage of AI research and applications.

Developer Experience and Integration

The developer experience on both platforms reflects their target audiences and design philosophies. Replicate prioritizes simplicity and speed of integration, offering straightforward REST APIs and client libraries that enable developers to integrate AI capabilities with minimal code. The platform’s prediction API follows standard web development patterns, making it accessible to developers regardless of their machine learning expertise. This approach has made Replicate particularly popular for rapid prototyping and applications where AI is a feature rather than the core focus.
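To make the "minimal code" claim concrete, here is a small sketch of what creating a prediction over Replicate’s REST API might look like using only the Python standard library. The endpoint URL, the `version`/`input` payload shape, and the bearer-token header reflect Replicate’s public HTTP API as understood at the time of writing and should be checked against the current API reference; the token, version ID, and prompt are placeholders, and the helper name `build_prediction_request` is purely illustrative. The request is built but deliberately not sent.

```python
import json
import urllib.request

# Assumed endpoint for creating predictions; verify against the current API reference.
REPLICATE_PREDICTIONS_URL = "https://api.replicate.com/v1/predictions"


def build_prediction_request(api_token: str, model_version: str, model_input: dict) -> urllib.request.Request:
    """Build (but do not send) a POST request that would create a prediction."""
    body = json.dumps({"version": model_version, "input": model_input}).encode("utf-8")
    return urllib.request.Request(
        REPLICATE_PREDICTIONS_URL,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {api_token}",
            "Content-Type": "application/json",
        },
    )


# Placeholder values for illustration only.
request = build_prediction_request(
    api_token="YOUR_API_TOKEN",
    model_version="HYPOTHETICAL_VERSION_ID",
    model_input={"prompt": "an astronaut riding a horse"},
)
print(request.get_method(), request.full_url)
# Sending it would be: urllib.request.urlopen(request)
```

In practice most teams would reach for the official client library rather than raw HTTP, but the raw request shows how little is involved: one authenticated POST with a model version and an input dictionary.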

Hugging Face provides a more comprehensive development environment with tools for model training, fine-tuning, and deployment. The platform’s transformers library and datasets library have become essential tools for AI researchers and developers, providing standardized interfaces for working with diverse models and data sources. The deeper integration capabilities come with increased complexity but offer more control over model behavior and performance optimization.

Documentation quality and community support also differ between the platforms: Replicate focuses on clear, concise guides for common use cases, while Hugging Face provides extensive documentation that covers both basic usage and advanced customization options.

Performance and Scalability Considerations

Performance characteristics vary significantly between Replicate and Hugging Face based on their underlying infrastructure and optimization strategies. Replicate’s containerized approach with automatic scaling provides consistent performance once a model is loaded. Because models can scale down to zero, the first request after an idle period pays a cold start cost while the container and model weights load, so latency-sensitive applications should measure cold start times for their specific model. The platform’s optimization for Docker-based deployments aims at efficient resource utilization and fast model loading, which keeps warm inference responsive.

Hugging Face offers multiple performance tiers through its Inference Endpoints, allowing developers to select compute resources that match their performance requirements. The platform’s dedicated instances provide superior performance for high-throughput applications, while shared infrastructure offers cost-effective solutions for lower-volume use cases. The ability to customize hardware configurations enables optimization for specific model requirements and performance targets.

Scalability approaches reflect the platforms’ different architectures and pricing models. Replicate’s serverless scaling automatically handles traffic spikes without configuration, making it ideal for applications with variable demand. Hugging Face’s dedicated instances provide predictable performance for steady workloads but require more planning for capacity management. Understanding these performance trade-offs is essential for selecting the platform that best matches application requirements and expected usage patterns.

Pricing Models and Cost Optimization

The pricing structures of Replicate and Hugging Face reflect their different approaches to AI model deployment and target markets. Replicate employs a pay-per-use model that charges based on actual compute time consumed by model inference. This granular billing approach makes it cost-effective for applications with sporadic usage patterns or unpredictable demand. The per-second billing ensures that developers only pay for actual model execution time, making it particularly attractive for experimental applications and low-volume production use cases.

Hugging Face offers multiple pricing tiers designed to accommodate different usage patterns and organizational needs. The platform’s free tier provides generous access to community models and basic inference capabilities, making it accessible for learning, experimentation, and small-scale applications. Professional and enterprise tiers offer enhanced performance, dedicated resources, and advanced features that support production deployments and organizational requirements.

Cost optimization strategies differ between platforms based on their pricing models and feature sets. Replicate’s automatic scaling and per-second billing naturally optimize costs for variable workloads, while Hugging Face’s multiple deployment options allow developers to select the most cost-effective configuration for their specific usage patterns. Understanding the total cost of ownership, including development time, operational overhead, and scaling requirements, is crucial for making economically sound platform decisions.
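The trade-off between per-second billing and an always-on dedicated instance comes down to simple arithmetic. The sketch below compares the two and computes the utilization at which a dedicated instance becomes cheaper. All prices and traffic figures are made-up numbers chosen for easy arithmetic, not quotes from either platform, and the function names are illustrative.

```python
SECONDS_PER_HOUR = 3600


def pay_per_use_cost(requests: int, seconds_per_request: float, price_per_second: float) -> float:
    """Cost when only actual inference time is billed."""
    return requests * seconds_per_request * price_per_second


def dedicated_cost(hours_provisioned: float, price_per_hour: float) -> float:
    """Cost of an instance that is billed whether or not it is busy."""
    return hours_provisioned * price_per_hour


def break_even_utilization(price_per_second: float, price_per_hour: float) -> float:
    """Fraction of each hour that must be busy before dedicated becomes cheaper."""
    return price_per_hour / (price_per_second * SECONDS_PER_HOUR)


# Hypothetical prices and workload, for illustration only.
PRICE_PER_SECOND = 0.001   # per-second billing rate
PRICE_PER_HOUR = 1.80      # dedicated instance rate
monthly_pay_per_use = pay_per_use_cost(20_000, 3.0, PRICE_PER_SECOND)
monthly_dedicated = dedicated_cost(730, PRICE_PER_HOUR)
utilization = break_even_utilization(PRICE_PER_SECOND, PRICE_PER_HOUR)

print(f"pay-per-use: ${monthly_pay_per_use:.2f}")
print(f"dedicated:   ${monthly_dedicated:.2f}")
print(f"break-even utilization: {utilization:.0%}")
```

With these made-up prices, 60,000 billed seconds cost about $60 per month under per-second billing versus about $1,314 for an instance left running all month; the dedicated instance only wins once it is busy more than roughly half of the time. Plugging in real prices and measured inference times gives a quick first estimate before any proof of concept.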

Security and Compliance Features

Security considerations play a crucial role in platform selection, particularly for applications handling sensitive data or operating in regulated industries. Replicate implements security best practices including encrypted data transmission, secure model execution environments, and access controls that protect both models and inference data. The platform’s containerized approach provides isolation between different model executions and users, reducing security risks associated with shared infrastructure.

Hugging Face offers comprehensive security features across its platform, including private model repositories, enterprise single sign-on integration, and compliance with major data protection regulations. The platform’s enterprise offerings include advanced security features such as private deployments, audit logging, and data residency controls that meet the requirements of security-conscious organizations. These features enable deployment of AI applications in environments with strict security and compliance requirements.

Both platforms continuously update their security practices to address emerging threats and evolving compliance requirements. The choice between platforms often depends on specific security requirements, regulatory constraints, and organizational policies regarding data handling and AI model deployment. Enterprise customers particularly benefit from understanding the security implications and compliance capabilities of each platform.

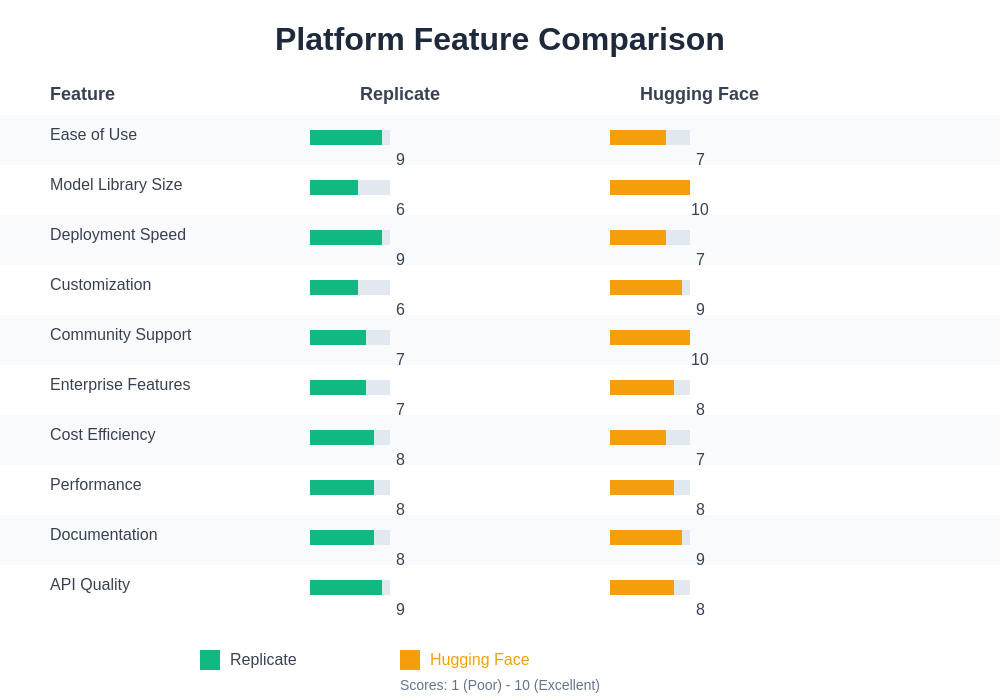

The comprehensive feature comparison reveals distinct strengths and positioning for each platform. While both serve the AI deployment market, their different approaches to scalability, pricing, model curation, and developer experience create clear differentiators that align with specific use cases and organizational needs.

Use Case Scenarios and Recommendations

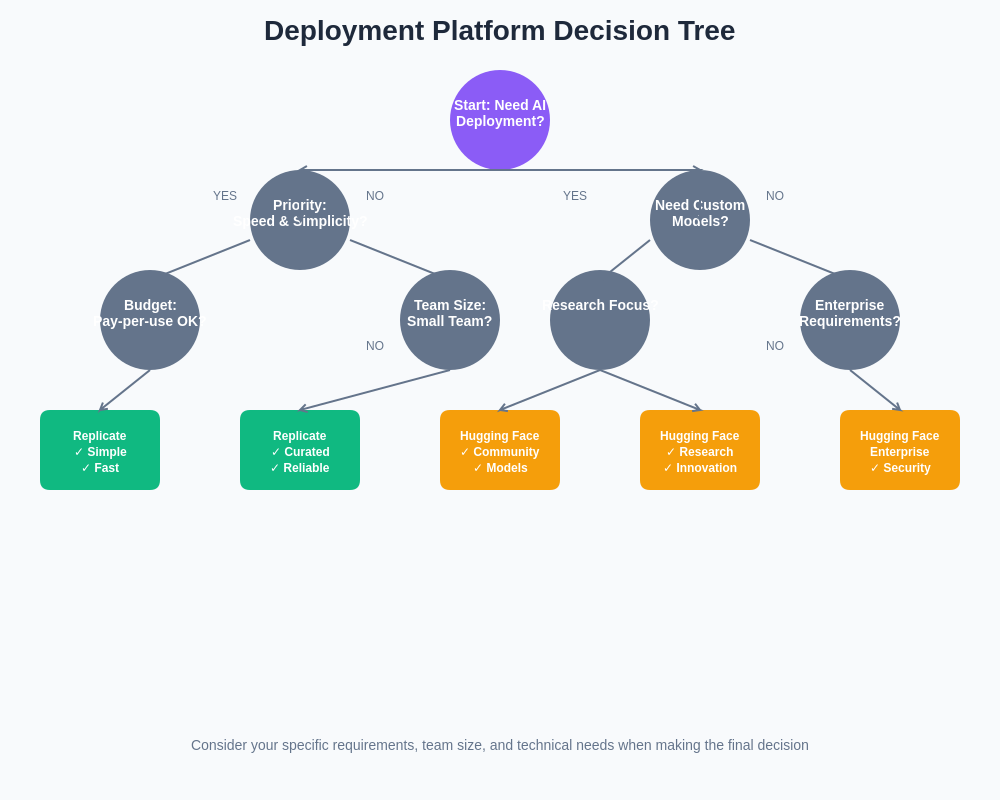

The optimal platform choice depends heavily on specific use cases, organizational requirements, and development priorities. Replicate excels in scenarios requiring rapid deployment of proven AI capabilities without extensive infrastructure management. The platform is particularly well-suited for content creation applications, creative tools, and consumer-facing products where ease of integration and reliable performance are paramount. Startups and small teams often find Replicate’s simplicity and pay-per-use pricing ideal for validating AI-powered product concepts.

Hugging Face shines in research environments, educational contexts, and organizations that require access to cutting-edge AI models and extensive customization capabilities. The platform’s comprehensive ecosystem makes it invaluable for teams that need to experiment with different models, fine-tune existing models, or contribute to the broader AI community. Large organizations and research institutions particularly benefit from Hugging Face’s collaborative features and enterprise-grade deployment options.

Hybrid approaches often provide optimal solutions by leveraging the strengths of both platforms. Many organizations use Hugging Face for model development, experimentation, and research while deploying production models through Replicate for optimal performance and cost management. This combination allows teams to benefit from Hugging Face’s extensive model library during development while leveraging Replicate’s production-optimized infrastructure for customer-facing applications.

Integration Strategies and Best Practices

Successful integration with either platform requires understanding best practices for API usage, error handling, and performance optimization. Replicate’s REST API design follows standard web service patterns, making integration straightforward for web developers. Key considerations include implementing proper retry logic for handling temporary failures, optimizing request batching for improved efficiency, and monitoring usage patterns to optimize costs and performance.
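The retry logic mentioned above can be sketched independently of either platform. The example below wraps any callable in exponential backoff with jitter; `TransientError` is a hypothetical exception standing in for whatever a real client raises on timeouts or rate limiting, and `flaky_inference` simulates a call that fails twice before succeeding. The `sleep` parameter is injectable so the demo runs instantly.

```python
import random
import time


class TransientError(Exception):
    """Hypothetical marker for failures worth retrying (timeouts, rate limits)."""


def call_with_retries(operation, max_attempts=4, base_delay=0.5, sleep=time.sleep):
    """Call operation(), retrying transient failures with exponential backoff and jitter."""
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except TransientError:
            if attempt == max_attempts:
                raise  # out of attempts: surface the last error to the caller
            # Delay doubles each attempt (base, 2*base, 4*base, ...) plus random jitter.
            delay = base_delay * 2 ** (attempt - 1)
            sleep(delay + random.uniform(0, delay / 2))


# Simulated inference call that fails twice, then succeeds.
attempts = {"count": 0}


def flaky_inference():
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise TransientError("simulated temporary failure")
    return {"status": "succeeded"}


result = call_with_retries(flaky_inference, sleep=lambda seconds: None)
print(result, "after", attempts["count"], "attempts")
```

Only errors that are plausibly temporary should be retried; validation errors or authentication failures will not fix themselves, and retrying them wastes both time and billed compute.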

Hugging Face integration strategies vary based on the specific services and deployment options selected. The platform’s transformers library provides the foundation for many integrations, while the Inference API offers hosted model access with minimal setup requirements. Advanced use cases may benefit from custom deployments using Hugging Face’s infrastructure while maintaining integration with the broader ecosystem of tools and models.
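For comparison, a hosted inference call on the Hugging Face side has a similar shape: an authenticated POST with an `inputs` field, addressed to a model by its repository ID. The base URL below is the one historically documented for the hosted Inference API and is an assumption here, since Hugging Face has revised its hosted inference routing over time; the token is a placeholder and the helper name is illustrative. As before, the request is built but not sent.

```python
import json
import urllib.request

# Assumed base URL for hosted inference; confirm against current Hugging Face docs.
HF_INFERENCE_BASE_URL = "https://api-inference.huggingface.co/models"


def build_hf_inference_request(api_token: str, model_id: str, text: str) -> urllib.request.Request:
    """Build (but do not send) a POST request for hosted inference on one model."""
    body = json.dumps({"inputs": text}).encode("utf-8")
    return urllib.request.Request(
        f"{HF_INFERENCE_BASE_URL}/{model_id}",
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {api_token}",
            "Content-Type": "application/json",
        },
    )


hf_request = build_hf_inference_request(
    api_token="YOUR_HF_TOKEN",  # placeholder
    model_id="distilbert-base-uncased-finetuned-sst-2-english",
    text="Deployment went smoothly.",
)
print(hf_request.get_method(), hf_request.full_url)
```

Teams that need more control typically move from this hosted call to the transformers library running on their own hardware or to a managed Inference Endpoint, trading simplicity for configurability.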

Performance monitoring and optimization represent critical aspects of successful AI model deployment regardless of platform choice. Both platforms provide monitoring capabilities and performance metrics that enable developers to optimize their implementations. Regular monitoring of inference times, error rates, and cost metrics helps ensure optimal performance and cost-effectiveness over time.
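Whatever dashboards a platform offers, it is often useful to track the same three signals on the client side. The following sketch records per-request latency, success, and billed seconds, then reports error rate and a nearest-rank latency percentile. The class name, its fields, and the sample numbers are all illustrative and not part of either platform’s tooling.

```python
import math
from dataclasses import dataclass, field


@dataclass
class InferenceMetrics:
    """Client-side tally of latency, errors, and billed compute."""
    latencies: list = field(default_factory=list)
    errors: int = 0
    billed_seconds: float = 0.0

    def record(self, latency_seconds: float, succeeded: bool, billed_seconds: float = 0.0) -> None:
        self.latencies.append(latency_seconds)
        self.billed_seconds += billed_seconds
        if not succeeded:
            self.errors += 1

    def error_rate(self) -> float:
        return self.errors / len(self.latencies) if self.latencies else 0.0

    def latency_percentile(self, pct: float) -> float:
        """Nearest-rank percentile over all recorded latencies."""
        if not self.latencies:
            raise ValueError("no requests recorded")
        ordered = sorted(self.latencies)
        rank = max(1, math.ceil(pct / 100 * len(ordered)))
        return ordered[rank - 1]


# Made-up sample: four fast successes and one slow failure.
metrics = InferenceMetrics()
for latency, ok in [(0.8, True), (1.1, True), (0.9, True), (4.2, False), (1.0, True)]:
    metrics.record(latency, ok, billed_seconds=latency)

print("error rate:", metrics.error_rate())
print("p50 latency:", metrics.latency_percentile(50))
print("p95 latency:", metrics.latency_percentile(95))
```

Tail percentiles matter more than averages here: a single cold start or timeout barely moves the mean but dominates what the slowest users experience.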

A systematic decision framework helps developers and organizations evaluate their requirements and select the most appropriate platform based on technical needs, organizational constraints, and long-term strategic objectives.

Future Developments and Platform Evolution

The AI deployment landscape continues to evolve rapidly, with both Replicate and Hugging Face investing heavily in platform improvements and new capabilities. Replicate’s roadmap focuses on expanding model support, improving performance optimization, and enhancing developer tools for easier integration and debugging. The platform’s commitment to simplicity and reliability drives continuous improvements in areas such as cold start times, scaling efficiency, and cost optimization.

Hugging Face continues expanding its ecosystem with new tools, models, and deployment options that serve the growing AI community. Recent developments include improved enterprise features, enhanced collaboration tools, and expanded support for different AI modalities beyond natural language processing. The platform’s open-source philosophy drives innovation through community contributions and partnerships with leading AI research organizations.

Industry trends toward more specialized AI applications, improved model efficiency, and enhanced deployment automation will likely influence both platforms’ development directions. The growing importance of edge deployment, privacy-preserving AI, and sustainable computing practices will create new requirements and opportunities for platform differentiation. Understanding these trends helps inform long-term platform selection decisions and technical strategy development.

The competitive landscape continues evolving with new entrants and existing cloud providers expanding their AI deployment capabilities. This competition drives innovation and improved offerings across all platforms, benefiting developers and organizations seeking AI deployment solutions. Staying informed about platform developments and industry trends ensures optimal platform selection and utilization over time.

Making the Right Choice for Your Organization

Selecting between Replicate and Hugging Face requires careful consideration of multiple factors including technical requirements, team expertise, budget constraints, and long-term strategic objectives. Organizations should evaluate their current AI maturity, development resources, and operational capabilities when making platform decisions. The choice impacts not only immediate development velocity but also long-term scalability, cost management, and technical flexibility.

Technical evaluation should include proof-of-concept implementations on both platforms to assess real-world performance, integration complexity, and operational requirements. This hands-on evaluation provides insights beyond theoretical comparisons and helps identify potential challenges or advantages that may not be apparent from documentation alone. Performance testing with representative workloads ensures that platform capabilities align with application requirements.

Organizational factors such as team size, technical expertise, and development methodologies also influence optimal platform selection. Teams with limited AI infrastructure experience may benefit from Replicate’s simplified approach, while organizations with dedicated AI/ML teams might leverage Hugging Face’s comprehensive ecosystem more effectively. Budget considerations should include not only platform costs but also development time, operational overhead, and scaling requirements.

The AI deployment landscape offers compelling options for organizations seeking to integrate artificial intelligence capabilities into their applications and services. Both Replicate and Hugging Face provide valuable solutions with distinct advantages that serve different segments of the AI development community. Success depends on matching platform capabilities with specific requirements, organizational constraints, and long-term objectives rather than selecting based solely on feature comparisons or pricing models.

Disclaimer

This article provides general information about AI model deployment platforms and should not be considered as specific technical or business advice. Platform features, pricing, and capabilities may change over time. Readers should conduct their own evaluation and testing to determine the most suitable platform for their specific requirements. The effectiveness and suitability of any platform depends on individual use cases, technical requirements, and organizational factors that may not be covered in this general comparison.