The rapid advancement of artificial intelligence has brought unprecedented opportunities alongside significant ethical challenges that demand careful consideration from every programmer working in this domain. As AI systems increasingly influence critical decisions affecting human lives, from healthcare diagnostics to financial lending and criminal justice, the responsibility to develop these technologies ethically has become paramount for the programming community. The principles of responsible AI development extend far beyond technical implementation, encompassing considerations of fairness, transparency, accountability, and societal impact that must be woven into the fabric of every AI project from conception to deployment.

The intersection of technology and ethics has never been more critical, as the decisions made by developers today will fundamentally shape how AI systems impact society for generations to come.

Foundational Principles of Ethical AI

The foundation of responsible AI development rests upon several core principles that must guide every aspect of the development process. Fairness represents perhaps the most fundamental requirement, demanding that AI systems treat all individuals and groups equitably without perpetuating or amplifying existing societal biases. This principle extends beyond simple non-discrimination to actively promoting inclusive outcomes that benefit diverse populations and address historical inequities in access to opportunities and resources.

Transparency and explainability form another crucial pillar of responsible AI development, requiring systems to provide clear insights into their decision-making processes. Users and stakeholders must be able to understand how AI systems reach their conclusions, particularly when those decisions have significant consequences for individuals or communities. This transparency extends to data usage, algorithmic logic, and the limitations and uncertainties inherent in AI predictions and recommendations.

Accountability mechanisms ensure that responsibility for AI system outcomes can be clearly attributed and addressed when problems arise. This involves establishing clear chains of responsibility from development teams through deployment organizations to end users, with appropriate governance structures to oversee AI system behavior and impact. The principle of accountability also encompasses ongoing monitoring and evaluation of AI system performance across different contexts and populations to identify and address emerging issues.

Understanding and Mitigating Algorithmic Bias

Algorithmic bias represents one of the most pervasive challenges in AI development, often arising from biased training data, flawed assumptions in model design, or inadequate consideration of diverse use cases and populations. Historical data used to train AI systems frequently reflects past discrimination and inequality, which can be perpetuated and amplified by machine learning algorithms if not carefully addressed through deliberate bias mitigation strategies.

The complexity of bias in AI systems requires sophisticated analytical capabilities and diverse perspectives to effectively address.

Bias can manifest in multiple forms throughout the AI development lifecycle, including representation bias where certain groups are underrepresented in training data, measurement bias where data collection methods systematically favor certain outcomes, and evaluation bias where performance metrics fail to capture important aspects of system behavior across different populations. Understanding these various forms of bias is essential for developing comprehensive mitigation strategies that address root causes rather than merely treating symptoms.

Effective bias mitigation requires a multi-faceted approach that begins with diverse and representative datasets, continues through careful algorithm design and validation, and extends to ongoing monitoring of system performance across different demographic groups and use cases. Technical approaches such as fairness-aware machine learning, adversarial debiasing, and multi-objective optimization can help reduce bias, but these must be combined with diverse development teams, inclusive design processes, and comprehensive testing across varied contexts and populations.
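As a concrete starting point for monitoring across demographic groups, the sketch below computes per-group selection rates and the gap between them, often called the demographic parity difference. The function names, the toy predictions, and the group labels are all hypothetical, and this is only one of several fairness metrics a team might choose.

```python
from collections import defaultdict

def selection_rates(predictions, groups):
    """Return the fraction of positive (1) predictions for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}

def demographic_parity_difference(predictions, groups):
    """Gap between the highest and lowest group selection rates (0 means parity)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())

# Hypothetical toy data: 1 = approved, 0 = denied.
preds = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = selection_rates(preds, groups)             # {"a": 0.75, "b": 0.25}
gap = demographic_parity_difference(preds, groups)  # 0.5
```

A gap of zero does not by itself show that a system is fair; it only shows that positive predictions are distributed evenly across the groups being compared.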

Data Privacy and Security Considerations

The responsible development of AI systems demands rigorous attention to data privacy and security throughout the entire development lifecycle. AI systems often require vast amounts of personal and sensitive data to achieve optimal performance, creating significant privacy risks that must be carefully managed through appropriate technical and organizational measures. The principles of data minimization, purpose limitation, and consent must guide data collection and usage practices to ensure that individuals’ privacy rights are protected while enabling beneficial AI applications.

Privacy-preserving techniques such as differential privacy, federated learning, and homomorphic encryption offer powerful tools for developing AI systems that can learn from sensitive data while limiting exposure of individual records. Differential privacy provides a mathematical bound on how much any one person’s data can influence a released result; federated learning keeps raw data on the devices or sites where it originates; and homomorphic encryption allows computation on data that stays encrypted. However, implementing these approaches requires careful consideration of the trade-offs between privacy protection and system performance, as well as a thorough understanding of the specific privacy requirements and threat models relevant to each application domain.
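To make the privacy and accuracy trade-off concrete, here is a minimal sketch of the Laplace mechanism applied to a counting query, one of the basic building blocks of differential privacy. The function name `private_count`, the sample ages, and the chosen epsilon are assumptions for illustration. The sketch uses Python's general-purpose random generator and ordinary floating-point arithmetic, so it is not hardened for production; a vetted differential privacy library would be the safer choice in practice.

```python
import random

def private_count(values, predicate, epsilon, rng=None):
    """Return a noisy count of items matching predicate (Laplace mechanism).

    Adding or removing one record changes a count by at most 1, so the
    sensitivity is 1 and the Laplace noise scale is 1 / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = rng or random.Random()
    true_count = sum(1 for v in values if predicate(v))
    scale = 1.0 / epsilon
    # The difference of two independent exponential draws with mean `scale`
    # follows a Laplace distribution centered at 0 with that scale.
    noise = rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)
    return true_count + noise

# Hypothetical data: how many people are aged 40 or over?
ages = [23, 35, 41, 52, 67, 29]
noisy = private_count(ages, lambda a: a >= 40, epsilon=0.5, rng=random.Random(0))
```

Smaller values of epsilon add more noise and give stronger privacy; larger values give more accurate answers with weaker protection.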

Data security measures must be implemented at every stage of the AI development process, from secure data collection and storage through protected model training environments to secure deployment and inference systems. This includes encryption of data at rest and in transit, access controls and authentication mechanisms, secure communication protocols, and comprehensive audit logging to track data access and usage. The distributed nature of many AI development workflows, involving multiple teams, cloud services, and third-party tools, requires particular attention to security boundaries and trust relationships.

Transparency and Explainability Requirements

The development of transparent and explainable AI systems has become increasingly critical as these technologies are deployed in high-stakes applications where understanding decision rationale is essential for trust, accountability, and regulatory compliance. Explainability encompasses both technical interpretability, referring to the ability to understand how models process inputs to generate outputs, and practical explainability, referring to the ability to communicate AI system behavior in terms that are meaningful to relevant stakeholders including end users, domain experts, and regulatory authorities.

Different levels of transparency may be appropriate for different stakeholders and use cases, ranging from global explanations that describe overall system behavior patterns to local explanations that clarify specific decisions or predictions. The choice of explanation type and depth must consider the technical sophistication of the audience, the criticality of the application, and the specific information needs of different stakeholders. This may involve providing multiple types of explanations for the same system, from high-level conceptual overviews to detailed technical documentation.

Technical approaches to explainability include model-agnostic methods such as LIME and SHAP that can provide insights into the behavior of complex black-box models, inherently interpretable models such as decision trees and linear models that provide natural explanations, and attention mechanisms that indicate which inputs a model weighted most heavily for a specific prediction, although attention weights are not always faithful explanations of model behavior. Technical explainability must also be complemented by careful attention to how explanations are communicated and used in practice, ensuring that they provide actionable insights rather than merely technical details.
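LIME and SHAP are full libraries, so the sketch below illustrates the model-agnostic idea with a simpler, different technique: permutation importance. It shuffles one feature at a time and measures how much accuracy drops, treating the model purely as a black-box `predict` function. The toy model, data, and function names are hypothetical.

```python
import random

def accuracy(predict, rows, labels):
    """Fraction of rows for which the model's prediction matches the label."""
    return sum(predict(r) == y for r, y in zip(rows, labels)) / len(labels)

def permutation_importance(predict, rows, labels, n_repeats=10, seed=0):
    """Mean accuracy drop when each feature column is shuffled in turn."""
    rng = random.Random(seed)
    baseline = accuracy(predict, rows, labels)
    importances = []
    for j in range(len(rows[0])):
        drops = []
        for _ in range(n_repeats):
            column = [r[j] for r in rows]
            rng.shuffle(column)
            # Rebuild each row with only feature j replaced by a shuffled value.
            shuffled = [
                tuple(v if k == j else x for k, x in enumerate(r))
                for r, v in zip(rows, column)
            ]
            drops.append(baseline - accuracy(predict, shuffled, labels))
        importances.append(sum(drops) / n_repeats)
    return importances

# Hypothetical black-box model that only looks at feature 0.
def toy_model(row):
    return int(row[0] > 0.5)

rows = [(i / 10, (i * 7 % 10) / 10) for i in range(10)]
labels = [toy_model(r) for r in rows]
importances = permutation_importance(toy_model, rows, labels)
```

Because the toy model ignores feature 1, shuffling that column never changes a prediction and its importance is exactly zero, while feature 0 shows a positive drop.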

Robust Testing and Validation Frameworks

Comprehensive testing and validation represent essential components of responsible AI development, requiring approaches that go far beyond traditional software testing to address the unique challenges posed by machine learning systems. AI systems exhibit probabilistic behavior that can vary across different inputs, populations, and contexts, necessitating testing frameworks that can adequately assess performance across diverse scenarios and identify potential failure modes that might not be apparent during initial development and validation.

Testing frameworks for AI systems must address multiple dimensions of system behavior including accuracy and performance across different demographic groups and use cases, robustness to adversarial inputs and distribution shifts, fairness across protected characteristics, and behavior under edge cases and unusual inputs. This requires developing comprehensive test datasets that represent the full diversity of real-world usage scenarios, including both typical use cases and challenging edge cases that might reveal system limitations or vulnerabilities.
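One way to turn the per-group requirement into an automated check is to compute accuracy separately for each slice and fail the test when any slice falls below a floor. The following is a minimal sketch under assumed names and toy data; the 0.8 floor is an arbitrary illustration, not a recommended value.

```python
from collections import defaultdict

def accuracy_by_group(y_true, y_pred, groups):
    """Return accuracy computed separately for each group."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for t, p, g in zip(y_true, y_pred, groups):
        total[g] += 1
        correct[g] += int(t == p)
    return {g: correct[g] / total[g] for g in total}

def groups_below_threshold(y_true, y_pred, groups, min_accuracy):
    """Return the groups (and their accuracy) that fall below min_accuracy."""
    per_group = accuracy_by_group(y_true, y_pred, groups)
    return {g: acc for g, acc in per_group.items() if acc < min_accuracy}

# Hypothetical evaluation data for two groups.
y_true = [1, 0, 1, 0, 1, 0, 1, 0]
y_pred = [1, 0, 1, 0, 1, 1, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

failing = groups_below_threshold(y_true, y_pred, groups, min_accuracy=0.8)
# failing == {"b": 0.5}, even though overall accuracy is 0.75
```

The aggregate number hides the gap: group "a" is classified perfectly while group "b" is at chance level, which is the kind of failure that only sliced evaluation reveals.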

The field of AI testing is rapidly evolving with new techniques and best practices emerging regularly.

Continuous validation throughout the system lifecycle is particularly important for AI systems due to their potential for performance degradation over time as real-world conditions change and drift away from training data distributions. This requires implementing monitoring systems that can detect when model performance is declining, when new types of bias are emerging, or when system behavior is changing in unexpected ways. Such monitoring must be coupled with processes for retraining, updating, or retiring AI systems when validation indicates that performance has become inadequate.
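A simple way to detect drift away from the training distribution is the Population Stability Index (PSI), which compares how a feature is distributed across bins in a reference sample versus a recent sample. The sketch below is one possible implementation with assumed parameter names; the bin count and the small `eps` floor for empty bins are illustrative choices. A commonly cited rule of thumb treats values above roughly 0.2 to 0.25 as a significant shift, but an appropriate threshold depends on the application.

```python
import math

def population_stability_index(expected, actual, n_bins=10, eps=1e-6):
    """PSI between a reference sample (expected) and a recent sample (actual)."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / n_bins or 1.0  # avoid zero width for constant data

    def bin_fractions(values):
        counts = [0] * n_bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), n_bins - 1)  # clamp out-of-range values
            counts[idx] += 1
        # Floor empty bins at eps so the logarithm stays defined.
        return [max(c / len(values), eps) for c in counts]

    e = bin_fractions(expected)
    a = bin_fractions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

# Hypothetical feature values: training data versus shifted production data.
reference = [i / 100 for i in range(100)]
shifted = [x + 0.5 for x in reference]

psi_same = population_stability_index(reference, reference)
psi_shifted = population_stability_index(reference, shifted)
```

Here `psi_same` is zero because the two samples are identical, while `psi_shifted` is large because half of the reference bins receive no recent data.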

Human Oversight and Control Mechanisms

The implementation of appropriate human oversight and control mechanisms represents a critical aspect of responsible AI development, ensuring that human judgment remains central to AI system operation and that meaningful human control is maintained over important decisions. The level and nature of human oversight required varies significantly depending on the stakes and consequences of AI system decisions, with high-risk applications requiring more extensive human involvement and lower-risk applications potentially operating with greater autonomy.

Human-in-the-loop approaches integrate human expertise and judgment directly into AI system operation, with humans providing input, validation, or decision-making at critical points in the process. This might involve humans reviewing and approving AI recommendations before implementation, providing feedback to improve system performance, or making final decisions based on AI-generated insights and analysis. The design of effective human-in-the-loop systems requires careful consideration of human cognitive capabilities and limitations, ensuring that the interface between human and AI decision-making enhances rather than degrades overall system performance.
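A common human-in-the-loop pattern is to let the model decide only when it is confident and to route everything else to a reviewer. The sketch below assumes a hypothetical `ask_human` callback and an illustrative threshold; real systems would also need queueing, reviewer interfaces, and audit trails.

```python
from dataclasses import dataclass

@dataclass
class Decision:
    label: str
    confidence: float
    decided_by: str  # "model" or "human"

def route(label, confidence, threshold, ask_human):
    """Accept the model's label if confident enough, otherwise defer to a human.

    ask_human is a callable that receives the model's suggestion and its
    confidence and returns the final label.
    """
    if confidence >= threshold:
        return Decision(label, confidence, "model")
    return Decision(ask_human(label, confidence), confidence, "human")

# Hypothetical reviewer stub that overrides the model's suggestion.
def reviewer(suggested_label, confidence):
    return "deny"

auto = route("approve", 0.95, threshold=0.8, ask_human=reviewer)
manual = route("approve", 0.55, threshold=0.8, ask_human=reviewer)
```

Recording `decided_by` alongside each decision keeps it clear afterward whether a person or the model was responsible for a given outcome.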

Human-on-the-loop approaches maintain human oversight and control while allowing AI systems to operate more autonomously, with humans monitoring system behavior and intervening when necessary. This requires developing effective monitoring interfaces that can alert human operators to potentially problematic situations while avoiding alert fatigue that might reduce the effectiveness of human oversight. The design of such systems must consider how to present information to human operators in ways that enable effective decision-making under time pressure and uncertainty.
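One small ingredient in reducing alert fatigue is suppressing repeated notifications for the same condition within a cooldown window. The class below is a minimal sketch with hypothetical names; timestamps are passed in explicitly so the behavior is easy to test, and the 60-second cooldown is only an example.

```python
class AlertThrottle:
    """Suppress repeat alerts for the same key within a cooldown window."""

    def __init__(self, cooldown_seconds):
        self.cooldown = cooldown_seconds
        self._last_sent = {}  # alert key -> timestamp of last notification

    def should_notify(self, key, now):
        """Return True if an alert for key should reach the operator at time now."""
        last = self._last_sent.get(key)
        if last is not None and now - last < self.cooldown:
            return False  # still inside the cooldown window
        self._last_sent[key] = now
        return True

throttle = AlertThrottle(cooldown_seconds=60)
first = throttle.should_notify("accuracy_drop", now=0)    # True
repeat = throttle.should_notify("accuracy_drop", now=30)  # False, within cooldown
other = throttle.should_notify("bias_gap", now=30)        # True, different key
later = throttle.should_notify("accuracy_drop", now=61)   # True, cooldown elapsed
```

Throttling trades completeness for attention, so suppressed alerts should normally still be logged for later review.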

Documentation and Governance Standards

Comprehensive documentation and robust governance frameworks are essential for ensuring accountability and enabling effective oversight of AI system development and deployment. Documentation must cover all aspects of AI system development including data sources and collection methods, preprocessing and feature engineering decisions, model architecture and training procedures, validation and testing results, deployment configurations, and ongoing monitoring and maintenance processes. This documentation serves multiple purposes including enabling reproducibility, facilitating audit and compliance activities, supporting maintenance and updates, and providing transparency to stakeholders.

Model cards and dataset datasheets represent standardized approaches to documenting AI systems and their components, providing structured formats for communicating key information about system capabilities, limitations, intended use cases, and potential risks. These documentation formats help ensure that critical information is captured and communicated consistently, enabling better decision-making about AI system deployment and use. However, effective documentation goes beyond standardized formats to include comprehensive technical documentation, user guides, and impact assessments tailored to the needs of different stakeholders.
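A model card can be kept as structured data next to the model so that it is versioned and reviewed like code. The sketch below uses a plain dataclass; the field names and example values are illustrative assumptions, not an official schema.

```python
import json
from dataclasses import asdict, dataclass, field

@dataclass
class ModelCard:
    name: str
    version: str
    intended_use: str
    out_of_scope_uses: list = field(default_factory=list)
    training_data: str = ""
    evaluation_metrics: dict = field(default_factory=dict)
    known_limitations: list = field(default_factory=list)

    def to_json(self):
        """Serialize the card so it can be stored alongside the model artifact."""
        return json.dumps(asdict(self), indent=2, sort_keys=True)

# Hypothetical card for an illustrative model.
card = ModelCard(
    name="loan-risk-classifier",
    version="1.2.0",
    intended_use="Rank applications for manual review",
    out_of_scope_uses=["Fully automated denial of applications"],
    training_data="Internal applications dataset (details documented separately)",
    evaluation_metrics={"accuracy": 0.91, "demographic_parity_gap": 0.04},
    known_limitations=["Not evaluated on applicants under 21"],
)
card_json = card.to_json()
```

Keeping limitations and out-of-scope uses as required review items makes it harder for them to be omitted when the model changes.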

Governance frameworks establish the organizational structures, processes, and policies needed to ensure responsible AI development and deployment. This includes establishing clear roles and responsibilities for AI system oversight, defining approval processes for AI system development and deployment, implementing review mechanisms for ongoing AI system operation, and establishing incident response procedures for addressing problems that arise. Effective governance must be tailored to the specific organizational context and risk profile while incorporating industry best practices and regulatory requirements.

Continuous Monitoring and Improvement

Responsible AI development extends far beyond initial system deployment to encompass ongoing monitoring, evaluation, and improvement throughout the system lifecycle. AI systems can exhibit changing behavior over time due to data drift, concept drift, adversarial attacks, or changes in the real-world environment, necessitating continuous monitoring to detect when system performance is degrading or when new risks are emerging. This monitoring must address multiple dimensions of system behavior including accuracy, fairness, safety, and user satisfaction across different populations and use cases.

Effective monitoring systems require establishing appropriate metrics and thresholds for different aspects of AI system performance, implementing automated monitoring infrastructure that can detect anomalies and performance degradation, and developing processes for investigating and responding to monitoring alerts. The choice of monitoring metrics must be carefully considered to ensure that they capture meaningful aspects of system behavior rather than merely technical performance measures that may not reflect real-world impact.
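The metrics-and-thresholds idea can be expressed as a small declarative table checked on every monitoring run. In the sketch below, the metric names and limits are hypothetical placeholders; choosing meaningful metrics and limits remains the hard part.

```python
# Each entry maps a metric name to ("min" or "max", limit). Values are illustrative.
THRESHOLDS = {
    "accuracy": ("min", 0.90),
    "demographic_parity_gap": ("max", 0.10),
}

def find_breaches(metrics, thresholds):
    """Return {metric: reason} for every metric that is missing or out of range."""
    breaches = {}
    for name, (kind, limit) in thresholds.items():
        value = metrics.get(name)
        if value is None:
            breaches[name] = "metric missing"
        elif kind == "min" and value < limit:
            breaches[name] = f"{value} is below minimum {limit}"
        elif kind == "max" and value > limit:
            breaches[name] = f"{value} is above maximum {limit}"
    return breaches

# Hypothetical metrics from the latest monitoring window.
latest = {"accuracy": 0.93, "demographic_parity_gap": 0.18}
breaches = find_breaches(latest, THRESHOLDS)
# Only the fairness gap is flagged; accuracy alone would have looked healthy.
```

Treating a missing metric as a breach avoids silently passing a check when the monitoring pipeline itself has failed.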

The framework for responsible AI development encompasses multiple interconnected components that must work together to ensure ethical and beneficial AI systems. This systematic approach provides a comprehensive foundation for addressing the complex challenges of responsible AI development while maintaining focus on both technical excellence and ethical considerations.

Continuous improvement processes must be established to act on monitoring insights and evolving understanding of AI system impact and effectiveness. This includes processes for updating models when performance degrades, retraining systems on new data when appropriate, and retiring systems when they no longer meet performance or safety requirements. The continuous improvement process must also incorporate feedback from users, stakeholders, and affected communities to ensure that AI systems continue to serve their intended purposes effectively and ethically.

Legal and Regulatory Compliance

The evolving legal and regulatory landscape surrounding AI development presents both challenges and opportunities for responsible AI practitioners. Regulatory frameworks such as the European Union’s AI Act, emerging legislation in various jurisdictions, and industry-specific regulations create compliance requirements that must be integrated into AI development processes from the earliest stages. Understanding and anticipating regulatory requirements is essential for avoiding costly retrofitting of systems and ensuring that AI development aligns with societal expectations and legal obligations.

Compliance strategies must address both existing regulations that apply to AI systems, such as data protection laws and anti-discrimination statutes, and emerging AI-specific regulations that establish requirements for high-risk AI systems. This includes implementing technical measures such as privacy-preserving techniques and bias mitigation approaches, establishing organizational processes such as impact assessments and audit procedures, and maintaining documentation that demonstrates compliance with relevant requirements.

The global nature of AI development and deployment creates additional complexity as systems may be subject to multiple regulatory jurisdictions with potentially conflicting requirements. Navigating this complexity requires understanding the regulatory landscape across relevant jurisdictions, implementing systems that can meet the most stringent applicable requirements, and maintaining flexibility to adapt to evolving regulatory requirements as they emerge.

Stakeholder Engagement and Community Impact

Responsible AI development requires meaningful engagement with diverse stakeholders including end users, affected communities, domain experts, civil society organizations, and regulatory authorities. This engagement should begin early in the development process and continue throughout the system lifecycle to ensure that AI systems are designed and operated in ways that serve the needs and interests of all affected parties. Effective stakeholder engagement requires creating accessible channels for input and feedback, providing clear and understandable information about AI system capabilities and limitations, and demonstrating how stakeholder input is incorporated into system development and operation.

Community impact assessments represent a valuable tool for understanding and addressing the potential effects of AI systems on different communities and populations. These assessments should consider both positive and negative impacts, direct and indirect effects, and short-term and long-term consequences. The process of conducting impact assessments should involve meaningful participation from affected communities and should result in concrete measures to maximize benefits and minimize harms.

Building trust with stakeholders requires transparency about AI system capabilities and limitations, honesty about potential risks and uncertainties, and demonstrated commitment to addressing problems when they arise. This trust-building process is ongoing and requires consistent communication, responsive problem resolution, and genuine engagement with stakeholder concerns and feedback.

Technical Implementation Best Practices

The translation of responsible AI principles into concrete technical implementation requires a comprehensive understanding of available tools, techniques, and methodologies. Data preprocessing and feature engineering must incorporate bias detection and mitigation techniques, ensuring that training data is representative and that feature selection does not inadvertently introduce or amplify bias. This includes techniques such as data augmentation to improve representation of underrepresented groups, statistical parity constraints to ensure fair treatment across protected characteristics, and careful validation of data quality and representativeness.
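As one illustration of a preprocessing step aimed at statistical parity, the sketch below computes reweighing weights, a technique from the fairness literature that assigns each training example the weight P(group) × P(label) / P(group, label). Under these weights, the label becomes statistically independent of group membership in the weighted training set. The function names and toy data are assumptions, and reweighing is only one of several possible preprocessing approaches.

```python
from collections import Counter

def reweighing_weights(groups, labels):
    """Per-example weights that make labels independent of group when applied."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        group_counts[g] * label_counts[y] / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]

def weighted_positive_rate(weights, groups, labels, group):
    """Weighted share of positive labels within one group."""
    positive = sum(w for w, g, y in zip(weights, groups, labels) if g == group and y == 1)
    total = sum(w for w, g in zip(weights, groups) if g == group)
    return positive / total

# Hypothetical training labels: group "a" has many more positives than "b".
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
labels = [1, 1, 1, 0, 1, 0, 0, 0]

weights = reweighing_weights(groups, labels)
rate_a = weighted_positive_rate(weights, groups, labels, "a")
rate_b = weighted_positive_rate(weights, groups, labels, "b")
```

With the weights applied, both groups have a weighted positive rate of 0.5, compared with unweighted rates of 0.75 and 0.25. The weights would then be passed to a learner that supports per-sample weighting.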

Model development must incorporate fairness constraints and evaluation metrics that assess performance across different demographic groups and use cases. This includes implementing fairness-aware learning algorithms that optimize for both accuracy and fairness objectives, using cross-validation strategies that ensure robust performance across different populations, and employing ensemble methods that can improve both accuracy and fairness. The choice of algorithms and architectures must consider not only performance characteristics but also interpretability requirements and computational efficiency constraints.
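Evaluation across groups can look at error rates rather than only selection rates. The sketch below computes the true positive rate per group and the gap between groups, sometimes called the equal opportunity difference. Names and data are hypothetical, and which fairness metric is appropriate depends on the application.

```python
def true_positive_rate(y_true, y_pred):
    """Share of actual positives that the model predicted as positive."""
    preds_for_positives = [p for t, p in zip(y_true, y_pred) if t == 1]
    if not preds_for_positives:
        raise ValueError("no positive examples to evaluate")
    return sum(preds_for_positives) / len(preds_for_positives)

def equal_opportunity_difference(y_true, y_pred, groups):
    """Gap between the highest and lowest per-group true positive rates."""
    tprs = {}
    for g in set(groups):
        t = [yt for yt, gi in zip(y_true, groups) if gi == g]
        p = [yp for yp, gi in zip(y_pred, groups) if gi == g]
        tprs[g] = true_positive_rate(t, p)
    return max(tprs.values()) - min(tprs.values()), tprs

# Hypothetical evaluation data for two groups.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 0, 1, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

tpr_gap, tprs = equal_opportunity_difference(y_true, y_pred, groups)
# tprs == {"a": 1.0, "b": 0.5}, so tpr_gap == 0.5
```

A gap like this means qualified members of group "b" are missed twice as often as those in group "a", something an overall accuracy figure would not show.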

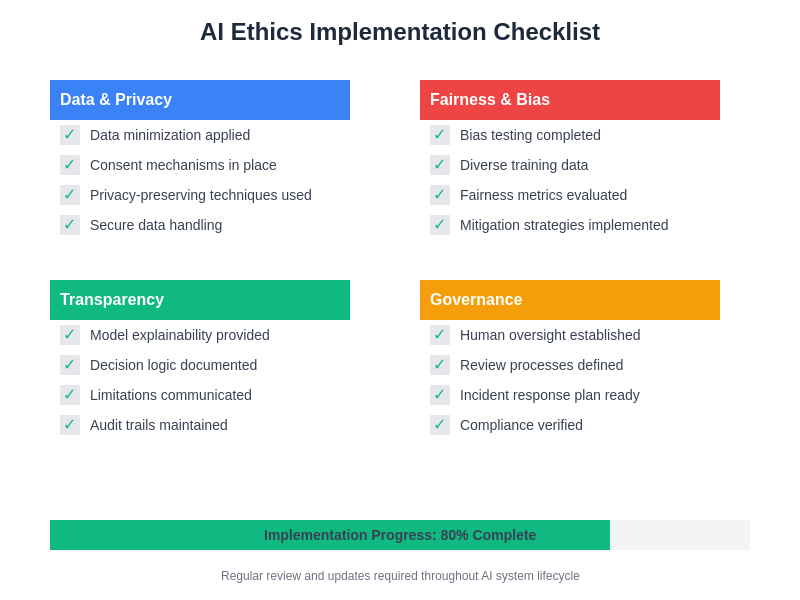

A systematic approach to implementing AI ethics requires careful attention to each phase of the development lifecycle, from initial problem formulation through deployment and ongoing monitoring. This checklist provides a practical framework for ensuring that ethical considerations are properly addressed throughout the development process.

Deployment and monitoring infrastructure must be designed to support ongoing evaluation and improvement of AI system performance across multiple dimensions including accuracy, fairness, robustness, and user satisfaction. This includes implementing logging and monitoring systems that can track system behavior across different populations and use cases, establishing alert mechanisms that can detect performance degradation or emerging bias, and maintaining the capability to update or roll back system changes when problems are identified.

Future Considerations and Emerging Challenges

The field of responsible AI development continues to evolve rapidly as new technologies emerge, regulatory frameworks develop, and our understanding of AI system impact deepens. Emerging technologies such as large language models, multimodal AI systems, and autonomous agents present new challenges for responsible development that require innovative approaches to testing, validation, and governance. The increasing capability and autonomy of AI systems raise questions about appropriate levels of human oversight and control that will require ongoing research and development.

The democratization of AI development tools and platforms creates both opportunities and challenges for responsible AI development. While these tools make AI development more accessible to a broader range of developers and organizations, they also create risks that responsible development practices may not be adequately implemented by developers who lack specialized expertise in AI ethics and safety. Addressing this challenge requires developing better tools and frameworks that make responsible AI practices easier to implement and providing education and training resources that help developers understand and implement these practices effectively.

The global nature of AI development and deployment creates challenges for ensuring consistent application of responsible AI practices across different cultural, legal, and regulatory contexts. Building international cooperation and coordination around responsible AI development will be essential for addressing challenges that transcend national boundaries and ensuring that AI technologies benefit all of humanity rather than exacerbating existing inequalities and divisions.

Building Organizational Capability

Implementing responsible AI development practices requires building organizational capabilities that span technical expertise, ethical reasoning, stakeholder engagement, and governance processes. This begins with recruiting and developing diverse teams that bring different perspectives, experiences, and expertise to AI development challenges. Diverse teams are widely argued to improve both technical performance and ethical outcomes because they bring different viewpoints to problem identification and solution development.

Training and education programs must be established to ensure that all team members understand responsible AI principles and possess the skills needed to implement them effectively. This includes technical training on bias detection and mitigation techniques, privacy-preserving methods, and explainability approaches, as well as broader education on AI ethics, stakeholder engagement, and regulatory compliance. Regular training updates are essential as the field continues to evolve rapidly.

Organizational processes and incentives must be aligned to support responsible AI development rather than merely technical performance optimization. This includes incorporating responsible AI considerations into project planning and evaluation processes, establishing performance metrics that reward ethical behavior alongside technical achievement, and creating career advancement paths that recognize expertise in responsible AI development. Leadership commitment and support are essential for creating an organizational culture that prioritizes responsible AI development.

The journey toward responsible AI development represents both a technical challenge and a moral imperative that requires sustained commitment from individual developers, organizations, and the broader technology community. The principles and practices outlined in this guide provide a foundation for developing AI systems that not only achieve technical excellence but also contribute to human flourishing and social progress. As AI technologies continue to evolve and proliferate, the responsibility for ensuring their beneficial impact rests with every programmer who contributes to their development and deployment.

The future of AI development lies not in choosing between innovation and responsibility, but in recognizing that truly beneficial AI systems must be both technically sophisticated and ethically grounded. By embracing the principles and practices of responsible AI development, programmers can help ensure that artificial intelligence serves as a force for positive change that benefits all members of society while respecting human rights, dignity, and autonomy.

Disclaimer

This article provides general guidance on responsible AI development practices and should not be considered as legal or professional advice. The regulatory landscape for AI is rapidly evolving, and specific compliance requirements may vary by jurisdiction and application domain. Organizations developing AI systems should consult with legal experts and domain specialists to ensure compliance with applicable laws and regulations. The views expressed are based on current understanding of best practices and may evolve as the field continues to develop.