The convergence of open-source hardware and artificial intelligence has reached a pivotal moment, with RISC-V emerging as a significant force in edge machine learning. As an open instruction set architecture (ISA), RISC-V gives designers broad access to customizable processors that can be tailored to AI workloads. This is changing how machine learning is deployed in resource-constrained environments such as Internet of Things devices, embedded systems, and edge computing platforms.

The open nature of RISC-V is more than an alternative to proprietary architectures. It reflects a shift toward collaborative innovation that lets developers, researchers, and organizations build specialized computing solutions without paying ISA licensing fees or accepting the architectural limits set by traditional processor vendors. (Individual RISC-V core designs may still be sold as licensed IP; it is the ISA itself that is free to use.)

The Foundation of Open Hardware Innovation

RISC-V’s significance in the artificial intelligence ecosystem comes from its position as an open, royalty-free instruction set architecture. Anyone can implement it, and anyone can extend it with custom instructions without asking permission. This openness gives hardware designers new room to build processors optimized for machine learning workloads. That matters most at the edge, where power efficiency, cost constraints, and performance requirements demand innovative architectural approaches.

The modular nature of RISC-V lets designers pair a small mandatory base integer ISA with only the standard extensions an application needs. The base can be RV32I or RV64I, or the reduced-register RV32E for the smallest cores. Typical extensions include M (integer multiply/divide), F and D (floating point), C (compressed instructions), and V (vectors). The result is a lean processor that executes AI algorithms with minimal overhead. This matters in edge machine learning, where every milliwatt of power and every square millimeter of silicon directly affects whether a deployment is feasible and cost-effective.
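The modular naming can be made concrete by splitting an ISA string such as `rv32imc` or `rv64gcv_zba_zbb` into its base width and extension list. The sketch below assumes the conventional naming scheme: an `rv32`/`rv64`/`rv128` prefix, a base of `i`, `e`, or the `g` shorthand, further single-letter extensions, then underscore-separated multi-letter ones. It deliberately ignores version suffixes such as `2p1`. The function name `parse_isa` is hypothetical and not part of any toolchain.

```python
"""Split a RISC-V ISA string into its base width and extensions (sketch)."""

# 'g' is shorthand for the general-purpose set IMAFD plus Zicsr and Zifencei.
G_EXPANSION = ["i", "m", "a", "f", "d", "zicsr", "zifencei"]


def parse_isa(isa: str) -> tuple[int, list[str]]:
    """Return (XLEN, extensions) for strings like 'rv32imc' or 'rv64gc_zba'.

    Assumption: the string contains no version numbers such as '2p1'.
    """
    isa = isa.lower()
    for xlen in (32, 64, 128):
        prefix = f"rv{xlen}"
        if isa.startswith(prefix):
            rest = isa[len(prefix):]
            break
    else:
        raise ValueError(f"unknown base in {isa!r}")

    # Single-letter extensions come first; multi-letter ones (z*, s*, x*)
    # follow, separated by underscores.
    single, *multi = rest.split("_")
    if not single or single[0] not in "ieg":
        raise ValueError("base must start with 'i', 'e', or 'g'")

    extensions: list[str] = []
    for letter in single:
        for ext in (G_EXPANSION if letter == "g" else [letter]):
            if ext not in extensions:
                extensions.append(ext)
    for ext in multi:
        if ext and ext not in extensions:
            extensions.append(ext)
    return xlen, extensions


if __name__ == "__main__":
    print(parse_isa("rv32imc"))          # (32, ['i', 'm', 'c'])
    print(parse_isa("rv64gcv_zba_zbb"))  # G expanded, then c, v, zba, zbb
```

A microcontroller for a keyword-spotting sensor might stop at `rv32imc`. An application-class core running vectorized inference might advertise `rv64gcv` plus bit-manipulation extensions.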

Traditional proprietary architectures often carry extensive instruction sets designed for general-purpose computing. In specialized AI applications, this can add unnecessary complexity and power consumption. RISC-V instead reserves encoding space for non-standard extensions, including the custom-0 and custom-1 major opcodes. Designers can therefore add domain-specific instructions for neural network operations, tensor manipulation, and other AI-specific computational patterns without colliding with standard instructions.
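The reserved opcode space can be illustrated by packing a custom instruction by hand. This minimal sketch encodes the standard 32-bit R-type layout (funct7, rs2, rs1, funct3, rd, opcode). It then places a hypothetical `mac8` instruction in the custom-0 major opcode; the mnemonic and its field values are invented for illustration. In practice an assembler emits these words for you, so the sketch only shows the bit layout.

```python
"""Encode R-type instructions, including one in the custom-0 space (sketch)."""

CUSTOM_0 = 0b0001011  # major opcode reserved for non-standard extensions
OP = 0b0110011        # standard register-register ALU opcode, for comparison


def encode_r_type(opcode: int, funct3: int, funct7: int,
                  rd: int, rs1: int, rs2: int) -> int:
    """Pack the six R-type fields into one 32-bit instruction word."""
    for name, value, width in (("opcode", opcode, 7), ("funct3", funct3, 3),
                               ("funct7", funct7, 7), ("rd", rd, 5),
                               ("rs1", rs1, 5), ("rs2", rs2, 5)):
        if not 0 <= value < (1 << width):
            raise ValueError(f"{name}={value} does not fit in {width} bits")
    return ((funct7 << 25) | (rs2 << 20) | (rs1 << 15)
            | (funct3 << 12) | (rd << 7) | opcode)


# Standard 'add x1, x2, x3' as a sanity check.
print(hex(encode_r_type(OP, 0, 0, rd=1, rs1=2, rs2=3)))         # 0x3100b3

# Hypothetical 'mac8 a0, a1, a2' (a0=x10, a1=x11, a2=x12) in custom-0.
print(hex(encode_r_type(CUSTOM_0, 0, 0, rd=10, rs1=11, rs2=12)))  # 0xc5850b
```

The first line reproduces the well-known encoding of `add x1, x2, x3`. Inside custom-0, the funct3 and funct7 values are free for the designer to assign to different operations.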

Architectural Advantages for Edge AI Computing

The characteristics of the RISC-V architecture align well with the requirements of edge machine learning applications. The reduced instruction set computing (RISC) philosophy emphasizes simple, regular instructions, which can help keep decode logic small and power consumption low. The microarchitecture and process technology usually matter more than the ISA alone, however. These attributes are valuable for edge devices that must deliver reliable AI inference within strict power budgets.

Edge machine learning applications typically revolve around repetitive mathematical operations such as matrix multiplications, convolutions, and activation function evaluations. All of these can benefit from specialized instructions. Custom instructions live in encoding space that the standard reserves for them. As a result, a core that adds them still runs unmodified standard RISC-V software and operating systems. Only the code that uses the new instructions needs toolchain support, for example through the GNU assembler’s `.insn` directive or compiler intrinsics.
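Multiply-accumulate instructions matter because of how convolutions are computed. Below is a direct 2-D convolution written as plain nested loops, assuming stride 1, no padding, and nested Python lists for tensors. Every output value is a long chain of multiply-accumulates (MACs). The closed-form count C_out × H_out × W_out × C_in × K × K shows how quickly they add up. The function names are illustrative.

```python
"""A direct 2-D convolution written as nested multiply-accumulates (sketch)."""


def conv2d(x, w):
    """x: [C_in][H][W], w: [C_out][C_in][K][K]; stride 1, no padding.

    Returns (output [C_out][H_out][W_out], number of MACs performed).
    """
    c_in, h, wd = len(x), len(x[0]), len(x[0][0])
    c_out, k = len(w), len(w[0][0])
    h_out, w_out = h - k + 1, wd - k + 1
    y = [[[0] * w_out for _ in range(h_out)] for _ in range(c_out)]
    macs = 0
    for co in range(c_out):
        for i in range(h_out):
            for j in range(w_out):
                acc = 0
                for ci in range(c_in):
                    for ki in range(k):
                        for kj in range(k):
                            # This innermost multiply-accumulate is what
                            # custom or vector instructions aim to speed up.
                            acc += x[ci][i + ki][j + kj] * w[co][ci][ki][kj]
                            macs += 1
                y[co][i][j] = acc
    return y, macs


def conv2d_macs(c_in, c_out, h_out, w_out, k):
    """Closed-form MAC count: C_out * H_out * W_out * C_in * K * K."""
    return c_out * h_out * w_out * c_in * k * k
```

For example, `conv2d_macs(16, 32, 32, 32, 3)` returns 4,718,592. A single 3×3 layer with 16 input channels, 32 output channels, and a 32×32 output therefore needs roughly 4.7 million MACs per inference. All of them sit in the innermost loop that custom or vector instructions target.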

RISC-V implementations scale from ultra-low-power microcontrollers suitable for sensor fusion to high-performance multicore processors capable of running complex neural networks. Every tier shares the same base ISA and toolchains. Teams can therefore use one software development approach across performance tiers and reuse code between platforms, provided the code targets only the extensions each platform implements.

Customization Capabilities for ML Workloads

One of the most compelling aspects of RISC-V for edge machine learning is the depth of customization it permits. Hardware designers can implement application-specific instruction set extensions that directly accelerate common neural network operations. Compared with general-purpose processors running the same workloads, this can improve both performance and energy efficiency.

Custom instruction extensions for RISC-V can include specialized operations for quantized neural network inference. These matter at the edge, where memory bandwidth and storage capacity are limited. Such extensions can implement efficient 8-bit or even 4-bit integer arithmetic. An 8-bit weight takes a quarter of the storage of a 32-bit float, and a 4-bit weight takes an eighth. Accuracy often remains acceptable, depending on the model and the quantization method.
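The following is a minimal sketch of symmetric per-tensor quantization. It assumes a single scale per tensor and Python's built-in `round`, which rounds halves to even; real frameworks often use per-channel scales and zero points. Values are mapped to signed integers, and the dot product runs entirely in integer arithmetic with a wide accumulator. A single floating-point rescale at the end recovers the result. Passing `bits=4` shows how the coarser grid increases error. The function names are illustrative.

```python
"""Symmetric per-tensor integer quantization of a dot product (sketch)."""


def quantize(values, bits=8):
    """Map floats to signed integers sharing one scale (symmetric, no zero point)."""
    qmax = (1 << (bits - 1)) - 1            # 127 for int8, 7 for int4
    max_abs = max(abs(v) for v in values) or 1.0
    scale = max_abs / qmax
    q = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return q, scale


def quantized_dot(a, b, bits=8):
    """Integer multiply-accumulate, then one float rescale at the end."""
    qa, sa = quantize(a, bits)
    qb, sb = quantize(b, bits)
    acc = sum(x * y for x, y in zip(qa, qb))  # wide (int32-style) accumulator
    return acc * sa * sb


a = [0.5, -1.25, 2.0, 0.75]
b = [1.0, 0.5, -0.25, 2.0]
exact = sum(x * y for x, y in zip(a, b))
print(exact, quantized_dot(a, b), quantized_dot(a, b, bits=4))
```

Only the integer products and the accumulation sit in the hot loop. Hardware support for narrow integer multiply-accumulate therefore carries most of the benefit, while the single rescale per output stays cheap.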

The ratified RISC-V vector extension (RVV 1.0) enables efficient parallel execution of the matrix operations that form the backbone of most machine learning algorithms. RVV is vector-length agnostic: the same binary runs on cores whose vector registers are 128 bits or 1024 bits wide. Software asks the hardware at run time how many elements to process per instruction. Designers can complement RVV with custom vector instructions tailored to specific neural network architectures. Examples include convolutional neural networks for computer vision and recurrent neural networks for time-series analysis.
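The vector-length-agnostic style can be emulated in a few lines. This sketch mimics the strip-mining pattern. Each pass asks for `vl = min(remaining, VLMAX)`, where VLMAX = VLEN / SEW × LMUL, processes that many elements, and advances. The real `vsetvli` instruction has some latitude in choosing `vl` near the end of a loop, and `min(AVL, VLMAX)` is one legal choice. The functions below are Python stand-ins, not RVV intrinsics.

```python
"""Emulate RVV-style strip-mining: one loop, any hardware vector length (sketch)."""


def vsetvl(avl: int, vlen_bits: int, sew_bits: int, lmul: int = 1) -> int:
    """Mimic vsetvli: grant min(remaining elements, VLMAX) lanes."""
    vlmax = (vlen_bits // sew_bits) * lmul
    return min(avl, vlmax)


def vec_axpy(alpha, x, y, vlen_bits, sew_bits=32):
    """Compute alpha * x + y in hardware-sized strips; return (result, strips)."""
    out = list(y)
    i, strips = 0, 0
    while i < len(x):
        vl = vsetvl(len(x) - i, vlen_bits, sew_bits)
        # One "vector instruction" covers elements i .. i + vl - 1.
        for lane in range(i, i + vl):
            out[lane] = alpha * x[lane] + out[lane]
        i += vl
        strips += 1
    return out, strips


x = list(range(100))
y = [1] * 100
r128, s128 = vec_axpy(2, x, y, vlen_bits=128)
r512, s512 = vec_axpy(2, x, y, vlen_bits=512)
print(r128 == r512, s128, s512)
```

The same `vec_axpy` call produces identical results on a 128-bit and a 512-bit vector unit. Only the number of strips changes: 25 versus 7 for 100 32-bit elements.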

The ability to customize RISC-V implementations extends beyond the instruction set to memory hierarchy optimizations, specialized interconnects, and integrated accelerator units. This holistic approach enables system-on-chip designs closely matched to specific edge AI applications. Such designs can reach levels of efficiency that are difficult to obtain with off-the-shelf general-purpose processors.

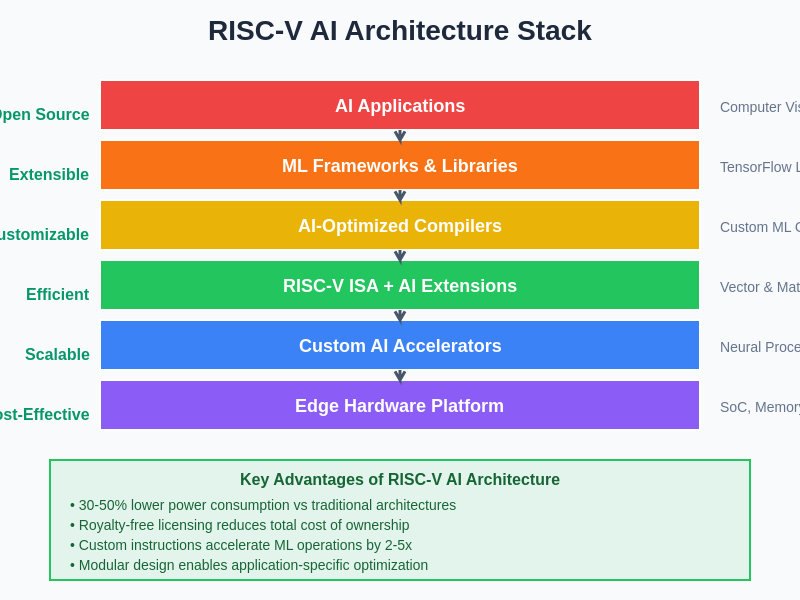

The layered architecture of RISC-V AI systems demonstrates the comprehensive approach required for successful edge machine learning deployment. From application-level frameworks down to specialized hardware accelerators, each layer contributes to the overall system efficiency while maintaining the flexibility and openness that makes RISC-V particularly attractive for custom AI solutions.

Power Efficiency and Performance Optimization

Power efficiency is a critical consideration for edge machine learning, where devices must often run on batteries for extended periods or harvest energy from their environment. RISC-V’s architectural simplicity and customization capabilities enable processors with high performance per watt on AI workloads.

The ability to eliminate unnecessary computational overhead through custom instruction set design directly translates into reduced power consumption. By implementing AI-specific operations as single instructions rather than sequences of general-purpose operations, RISC-V based processors can complete neural network inference tasks with fewer clock cycles and lower energy expenditure.
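Collapsing an instruction sequence into one instruction can be illustrated with a hypothetical `dot4` custom instruction. It multiplies four pairs of signed 8-bit values packed into 32-bit registers and adds the products to an accumulator. Neither the mnemonic nor its semantics comes from a ratified extension; the code below is an emulation for illustration, and the helper names are invented.

```python
"""Emulate a hypothetical 'dot4' custom instruction on packed int8 lanes (sketch)."""


def pack4(vals):
    """Pack four signed 8-bit values into one 32-bit word (lane 0 lowest)."""
    word = 0
    for i, v in enumerate(vals):
        if not -128 <= v <= 127:
            raise ValueError("value out of int8 range")
        word |= (v & 0xFF) << (8 * i)
    return word


def lane(word, i):
    """Extract lane i of a packed word as a signed int8."""
    b = (word >> (8 * i)) & 0xFF
    return b - 256 if b >= 128 else b


def dot4(acc, rs1, rs2):
    """Hypothetical op: acc + sum of four int8 x int8 products."""
    return acc + sum(lane(rs1, i) * lane(rs2, i) for i in range(4))


def dot_product(a, b):
    """Dot product of int8 vectors using dot4; returns (result, dot4 count)."""
    if len(a) != len(b) or len(a) % 4:
        raise ValueError("lengths must match and be a multiple of 4")
    acc = 0
    for k in range(0, len(a), 4):
        acc = dot4(acc, pack4(a[k:k + 4]), pack4(b[k:k + 4]))
    return acc, len(a) // 4
```

For 64-element vectors, the loop issues 16 `dot4` operations. A base RV32IM loop needs at least one `mul` and one `add` per element, which is 128 arithmetic instructions before counting loads and loop overhead.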

Dynamic voltage and frequency scaling (DVFS) is an implementation technique rather than an ISA feature, so it is available on any architecture. What RISC-V adds is that a team designing its own SoC controls the power-management hardware. That team can define voltage and frequency domains around its AI datapaths. Edge AI devices can then adapt their power consumption in real time to computational demand, extending battery life while maintaining acceptable performance.
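The energy side of DVFS follows from the standard CMOS dynamic-power model P = αCV²f. An inference of N cycles takes N/f seconds, so its dynamic energy is αCV²N. That energy is independent of frequency and quadratic in voltage. The sketch below plugs in hypothetical numbers: the activity factor, capacitance, cycle count, and operating points are illustrative, not measurements. It also ignores leakage.

```python
"""Dynamic-energy model for two DVFS operating points (sketch, illustrative numbers)."""


def dynamic_power(alpha, c_farads, v_volts, f_hz):
    """Classic CMOS switching power: P = alpha * C * V^2 * f."""
    return alpha * c_farads * v_volts ** 2 * f_hz


def inference_cost(cycles, alpha, c_farads, v_volts, f_hz):
    """Return (latency in s, dynamic energy in J) for one inference."""
    latency = cycles / f_hz
    energy = dynamic_power(alpha, c_farads, v_volts, f_hz) * latency
    return latency, energy


# Hypothetical workload and operating points, not measured data.
CYCLES = 2_000_000
ALPHA, CAP = 0.2, 1e-9
fast = inference_cost(CYCLES, ALPHA, CAP, v_volts=1.0, f_hz=200e6)
slow = inference_cost(CYCLES, ALPHA, CAP, v_volts=0.8, f_hz=100e6)
print(f"fast: {fast[0] * 1e3:.1f} ms, {fast[1] * 1e6:.1f} uJ")
print(f"slow: {slow[0] * 1e3:.1f} ms, {slow[1] * 1e6:.1f} uJ")
```

The lower operating point takes twice as long but uses 0.8² = 64% of the dynamic energy. Leakage energy grows with run time, so real power managers weigh this against finishing quickly at a higher frequency and then idling.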

The integration of specialized memory architectures optimized for AI workloads becomes possible with RISC-V’s flexible design approach. Near-data computing techniques, where processing elements are placed close to memory arrays, can dramatically reduce the energy costs associated with data movement, which often dominates the power consumption profile of machine learning applications.

Development Ecosystem and Toolchain Support

The success of RISC-V in edge machine learning applications depends not only on the architectural advantages but also on the availability of comprehensive development tools and software ecosystems. The open nature of RISC-V has fostered the development of robust toolchains that support both traditional software development and AI-specific optimization workflows.

Compiler support for RISC-V has matured rapidly. Both GCC and LLVM support the base instruction set and the widely used ratified extensions, including the vector extension. This infrastructure lets machine learning frameworks and libraries be compiled efficiently for RISC-V. Custom extensions are different, because a stock compiler knows nothing about them. Exploiting them takes added compiler support, intrinsics, or hand-written assembly.

Machine learning framework support for RISC-V continues to expand. Deployment paths to RISC-V targets are available or emerging in the TensorFlow Lite, ONNX Runtime, and PyTorch Mobile ecosystems. The maturity of RISC-V-optimized kernels varies by framework and by which ISA extensions the target implements. These frameworks let developers reuse existing AI models and training pipelines while targeting RISC-V based edge computing platforms.

The development of AI-specific development tools for RISC-V includes neural network compilers that can automatically generate optimized code for custom instruction set extensions, profiling tools that help identify optimization opportunities, and simulation environments that enable early-stage performance evaluation before hardware becomes available.

The rapid evolution of development tools continues to lower barriers to adoption and improve the productivity of RISC-V based AI development projects.

Real-World Applications and Use Cases

The practical applications of RISC-V in edge machine learning span a diverse range of industries and use cases, each benefiting from the unique advantages that open architecture customization provides. Smart sensor applications represent one of the most promising areas, where RISC-V based processors can implement specialized signal processing and pattern recognition algorithms directly within sensor nodes.

Industrial Internet of Things applications use RISC-V’s customization capabilities to implement predictive maintenance algorithms that detect early signs of equipment degradation before failures occur. These applications require real-time processing of sensor data using machine learning models that must operate within strict latency and power constraints. This makes RISC-V’s efficiency advantages particularly valuable.

Autonomous vehicle systems can use RISC-V based processors for sensor fusion and perception tasks. These tasks must process multiple data streams simultaneously while meeting stringent safety and reliability requirements. Customizing RISC-V implementations for specific automotive applications allows processors to be built around the computational patterns of real-time perception and decision-making.

Healthcare monitoring devices benefit from RISC-V’s low power consumption and customization capabilities to implement sophisticated biometric analysis algorithms that can operate continuously while maintaining patient privacy through local processing. These applications often require specialized signal processing capabilities that can be efficiently implemented through custom RISC-V instruction set extensions.

Smart home and building automation systems use RISC-V based processors to implement distributed intelligence that can adapt to user preferences and optimize energy consumption through machine learning algorithms. The cost-effectiveness of RISC-V implementations makes it feasible to deploy AI capabilities throughout building infrastructure without prohibitive hardware costs.

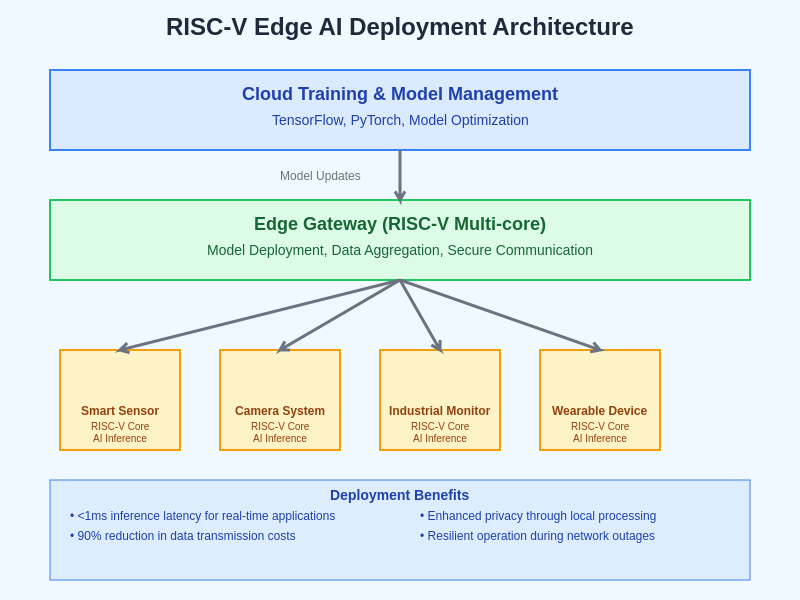

The distributed deployment architecture shown above illustrates how open hardware enables scalable machine learning across diverse environments. The figure traces the pipeline from cloud-based model training to inference on edge devices. At each level of the system hierarchy, RISC-V processors are integrated to balance performance against cost.

Integration with AI Accelerators and Coprocessors

The modular nature of RISC-V architecture facilitates seamless integration with specialized AI accelerators and coprocessors, creating heterogeneous computing systems that can efficiently handle diverse machine learning workloads. This integration capability enables the development of edge computing platforms that combine the flexibility of programmable processors with the efficiency of dedicated AI hardware.

Neural processing units designed specifically for deep learning inference can be tightly coupled with RISC-V cores to create systems that excel at both traditional computing tasks and AI workloads. The open nature of RISC-V enables custom interface designs that minimize latency and maximize bandwidth between the main processor and AI accelerators.

Vector processing units optimized for matrix operations can be integrated as coprocessors that handle the computationally intensive portions of machine learning algorithms while the main RISC-V core manages control flow and data orchestration. This division of labor optimizes overall system efficiency while maintaining programming simplicity.
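This division of labor can be illustrated with a memory-mapped coprocessor. In this sketch, the RISC-V side only writes operand addresses and a length into registers, sets a start bit, and polls a status bit. The accelerator does the arithmetic. The register map, its offsets, and the `FakeAccelerator` class are all hypothetical. On real hardware, these would be volatile loads and stores to a physical address range, and the driver might sleep until a completion interrupt instead of polling.

```python
"""A core drives a hypothetical memory-mapped accelerator (emulated sketch)."""

# Hypothetical register map (byte offsets) for an illustrative vector-add unit.
REG_SRC_A, REG_SRC_B, REG_DST, REG_LEN, REG_CTRL, REG_STATUS = range(0, 24, 4)
CTRL_START = 0x1
STATUS_DONE = 0x1


class FakeAccelerator:
    """Software stand-in for a coprocessor sharing memory with the core."""

    def __init__(self, memory):
        self.memory = memory  # shared word-addressed memory (a Python list)
        self.regs = {}

    def write(self, offset, value):
        self.regs[offset] = value
        if offset == REG_CTRL and value & CTRL_START:
            self.regs[REG_STATUS] = 0  # busy
            self._run()

    def read(self, offset):
        return self.regs.get(offset, 0)

    def _run(self):
        # The accelerator performs the data-parallel arithmetic.
        a, b, d, n = (self.regs[r] for r in (REG_SRC_A, REG_SRC_B, REG_DST, REG_LEN))
        for i in range(n):
            self.memory[d + i] = self.memory[a + i] + self.memory[b + i]
        self.regs[REG_STATUS] = STATUS_DONE


def offload_add(accel, src_a, src_b, dst, n):
    """Driver on the RISC-V core: configure, start, then wait for completion."""
    accel.write(REG_SRC_A, src_a)
    accel.write(REG_SRC_B, src_b)
    accel.write(REG_DST, dst)
    accel.write(REG_LEN, n)
    accel.write(REG_CTRL, CTRL_START)
    while not accel.read(REG_STATUS) & STATUS_DONE:
        pass  # real hardware: keep polling, or sleep until an interrupt


mem = [0] * 32
mem[0:4] = [1, 2, 3, 4]
mem[8:12] = [10, 20, 30, 40]
offload_add(FakeAccelerator(mem), src_a=0, src_b=8, dst=16, n=4)
print(mem[16:20])  # [11, 22, 33, 44]
```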

Dedicated memory controllers and data movement engines can be co-designed with RISC-V processors to address the memory bandwidth challenges that often limit the performance of edge AI applications. These specialized components can implement compression, prefetching, and caching strategies optimized for neural network access patterns.
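The payoff of keeping data close to the compute units can be estimated by counting off-chip loads for an N×N matrix multiply. Without reuse, every multiply fetches two operands, for 2N³ loads in total. Copying T×T tiles into a local buffer once and reusing them cuts that to 2N³/T. This sketch assumes N is a multiple of T and counts only operand loads; output writes are ignored. The function names are illustrative.

```python
"""Count off-chip operand loads for matrix multiply with and without tiling (sketch)."""


def matmul_naive(a, b):
    """Every operand is fetched from off-chip memory each time it is used."""
    n = len(a)
    c = [[0] * n for _ in range(n)]
    loads = 0
    for i in range(n):
        for j in range(n):
            for k in range(n):
                c[i][j] += a[i][k] * b[k][j]
                loads += 2
    return c, loads


def matmul_tiled(a, b, t):
    """Stage t x t tiles in a local buffer once, then reuse them (n % t == 0)."""
    n = len(a)
    if n % t:
        raise ValueError("matrix size must be a multiple of the tile size")
    c = [[0] * n for _ in range(n)]
    loads = 0
    for i0 in range(0, n, t):
        for j0 in range(0, n, t):
            for k0 in range(0, n, t):
                # Copy one tile of A and one of B into on-chip scratchpad.
                ta = [row[k0:k0 + t] for row in a[i0:i0 + t]]
                tb = [row[j0:j0 + t] for row in b[k0:k0 + t]]
                loads += 2 * t * t
                for i in range(t):
                    for j in range(t):
                        c[i0 + i][j0 + j] += sum(ta[i][k] * tb[k][j] for k in range(t))
    return c, loads
```

With N = 8 and T = 4, the tiled version issues 256 loads instead of 1,024 while producing the same result. Larger tiles reduce traffic further, up to the capacity of the on-chip buffer.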

The integration of cryptographic accelerators with RISC-V based AI systems enables the implementation of secure edge computing platforms that can protect sensitive data and models while providing AI capabilities. This security integration is crucial for applications in healthcare, finance, and other domains where data privacy is paramount.

Software Stack and Framework Compatibility

The software ecosystem surrounding RISC-V based edge AI systems has evolved to provide comprehensive support for machine learning development workflows. Operating system support includes lightweight real-time operating systems optimized for edge applications as well as full-featured Linux distributions that can support complex AI development environments.

Container and virtualization technologies have been adapted for RISC-V platforms, enabling the deployment of machine learning applications using familiar DevOps practices and tools. This compatibility reduces the learning curve for developers transitioning from traditional edge computing platforms to RISC-V based systems.

Machine learning inference engines optimized for RISC-V execute trained neural networks efficiently while supporting popular model formats such as ONNX and TensorFlow Lite. Engines that exploit custom instruction set extensions can outperform generic inference frameworks, which cannot use instructions they do not know about.

Development and debugging tools specifically designed for RISC-V based AI applications include neural network profilers that can identify performance bottlenecks, memory analyzers that optimize data layout for custom memory hierarchies, and hardware-software co-simulation environments that enable optimization across the entire system stack.

The availability of pre-trained models optimized for RISC-V edge computing platforms accelerates application development by providing ready-to-deploy solutions for common AI tasks such as object detection, speech recognition, and natural language processing. These optimized models demonstrate the performance advantages achievable through RISC-V customization while serving as starting points for application-specific optimization.

Security and Trust Considerations

Security represents a fundamental concern for edge AI applications, particularly those processing sensitive data or operating in critical infrastructure environments. RISC-V’s open architecture provides unique advantages for implementing robust security features that can be tailored to specific application requirements and threat models.

Hardware-based security features can be integrated directly into RISC-V implementations, including trusted execution environments that protect AI models and sensitive data from unauthorized access. These security features can be designed to meet specific industry standards and certification requirements while maintaining the performance advantages of custom optimization.

Secure boot and attestation mechanisms ensure that only authorized software can execute on RISC-V based edge AI devices, preventing the deployment of malicious code that could compromise system integrity or steal sensitive information. The open nature of RISC-V enables transparent implementation of these security features, facilitating security audits and certification processes.
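Attestation commonly rests on a chain of measurements. Each boot stage is hashed into a running register before control passes to it. This simplified sketch uses the TPM-style extend operation new = SHA-256(old || SHA-256(stage)) and illustrates the measurement chain only. A real secure boot flow also verifies a signature on each stage and keeps the register in hardware-protected storage. The stage contents and function names here are placeholders.

```python
"""Measured-boot style hash chain over boot stages (simplified sketch)."""
import hashlib


def extend(register: bytes, component: bytes) -> bytes:
    """TPM-style extend: new = SHA-256(old || SHA-256(component))."""
    return hashlib.sha256(register + hashlib.sha256(component).digest()).digest()


def measure(stages: list[bytes]) -> bytes:
    """Fold every boot stage into a single 32-byte measurement, in order."""
    register = bytes(32)  # starts at all zeros
    for stage in stages:
        register = extend(register, stage)
    return register


# Placeholder stage images standing in for bootloader, OS, and model blobs.
stages = [b"bootloader image", b"rtos image", b"model weights"]
golden = measure(stages)
print(measure(stages) == golden)
print(measure(stages[:2] + [b"tampered weights"]) == golden)
```

Changing any stage, or the order in which stages load, produces a different final value. A verifier that knows the expected measurement can therefore detect tampering.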

Cryptographic acceleration integrated with RISC-V processors enables efficient implementation of encryption and authentication protocols that protect AI model parameters and training data. This protection is crucial for applications where intellectual property protection or regulatory compliance requires strong data security measures.
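As one concrete form of this protection, model parameters can be authenticated with HMAC-SHA256 before the inference engine loads them. The sketch uses Python's standard `hmac` and `hashlib` modules. On a RISC-V device, the SHA-256 work could be accelerated by the scalar cryptography extension's hash instructions (Zknh). The sketch covers integrity and authenticity only; confidentiality would additionally require encryption. The function names are illustrative.

```python
"""Authenticate model parameters before loading them (sketch)."""
import hashlib
import hmac


def tag_model(key: bytes, blob: bytes) -> bytes:
    """Compute an HMAC-SHA256 tag over the serialized model."""
    return hmac.new(key, blob, hashlib.sha256).digest()


def load_model(key: bytes, blob: bytes, tag: bytes) -> bytes:
    """Return the model only if its tag verifies; otherwise raise."""
    expected = tag_model(key, blob)
    # compare_digest avoids content-based early exit, so the comparison time
    # does not reveal how many leading bytes of the tag were correct.
    if not hmac.compare_digest(expected, tag):
        raise ValueError("model authentication failed")
    return blob
```

In deployment, the tag would be produced when the model is built. The device would hold the key in protected storage and call `load_model` before handing the parameters to the inference engine.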

Side-channel attack resistance can be incorporated into RISC-V implementations through architectural features designed to prevent the leakage of sensitive information through timing, power consumption, or electromagnetic emissions. These protections are particularly important for edge AI applications that process biometric data or other highly sensitive information.

Economic Impact and Market Dynamics

The economic implications of RISC-V adoption in edge machine learning extend far beyond the immediate cost savings from royalty-free licensing. The ability to customize processors for specific applications enables the development of highly optimized solutions that can achieve better price-performance ratios than general-purpose alternatives while addressing market needs that cannot be efficiently served by existing processor architectures.

Reduced time-to-market becomes possible through RISC-V’s mature development ecosystem and the availability of proven IP blocks that can be quickly assembled into custom processor designs. This acceleration of development cycles enables companies to respond more rapidly to market opportunities and customer requirements.

Supply chain independence represents a significant advantage for companies adopting RISC-V based solutions, reducing dependence on specific semiconductor vendors and enabling more flexible sourcing strategies. This independence is particularly valuable in markets where geopolitical considerations or supply chain disruptions could impact product availability.

The democratization of processor design through RISC-V enables smaller companies and startups to develop specialized AI processors without the massive upfront investments traditionally required for custom silicon development. This lowered barrier to entry fosters innovation and competition in the AI hardware market.

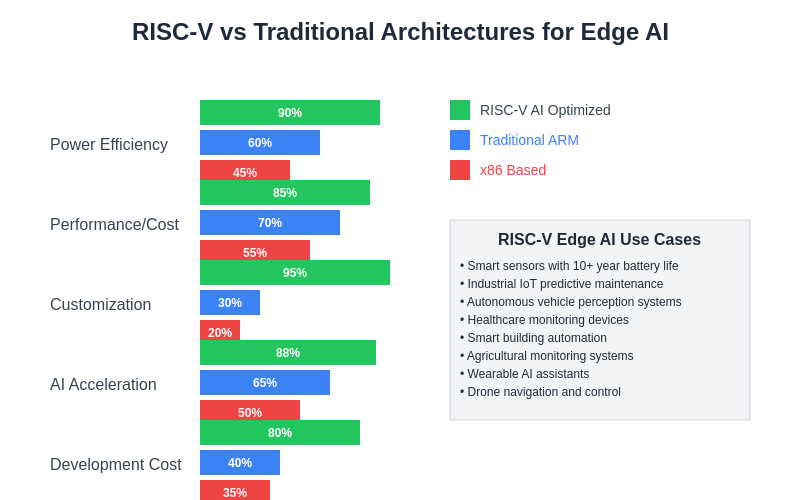

The figure above compares key metrics for RISC-V implementations in edge AI against traditional architectures. It highlights where RISC-V’s openness pays off: power efficiency, customization, and cost-effectiveness, while performance on machine learning workloads remains competitive. Actual results depend heavily on the specific implementation and workload.

Educational and research institutions benefit from RISC-V’s open nature by gaining access to processor architectures that can be studied, modified, and enhanced without licensing restrictions. This openness accelerates academic research in computer architecture and AI hardware design while training the next generation of engineers in cutting-edge technologies.

Future Developments and Research Directions

The trajectory of RISC-V development in edge machine learning continues to evolve rapidly, driven by ongoing research in both computer architecture and artificial intelligence. Emerging instruction set extensions specifically designed for neural network operations promise to further improve the efficiency of AI workloads on RISC-V platforms.

Quantum-inspired algorithms, which run on classical hardware, may find a place in RISC-V based systems as they become practical for specific machine learning applications. So may hybrid classical-quantum algorithms, which pair a classical processor with quantum hardware. The flexibility of the RISC-V architecture positions it well to incorporate these emerging computational paradigms as they mature.

Neuromorphic computing approaches that mimic biological neural networks may benefit from RISC-V’s customization capabilities, enabling the development of processors that can efficiently simulate spiking neural networks and other brain-inspired computing models. These approaches could dramatically improve the energy efficiency of certain AI applications.
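The basic unit of a spiking network is easy to state in code. This sketch simulates a discrete-time leaky integrate-and-fire (LIF) neuron. At each step, the membrane potential decays by a leak factor and integrates the input. When it crosses a threshold, the neuron emits a spike and resets. The parameter values are illustrative defaults, not taken from any particular neuromorphic chip.

```python
"""Discrete-time leaky integrate-and-fire (LIF) neuron (sketch)."""


def lif(inputs, leak=0.9, threshold=1.0, reset=0.0):
    """Return the spike train (0/1 per step) for a sequence of input currents.

    Each step the membrane potential decays by `leak`, integrates the input,
    and emits a spike (then resets) when it reaches `threshold`.
    """
    v, spikes = reset, []
    for current in inputs:
        v = leak * v + current
        if v >= threshold:
            spikes.append(1)
            v = reset
        else:
            spikes.append(0)
    return spikes


print(lif([0.5] * 6))  # [0, 0, 1, 0, 0, 1]
```

A constant input of 0.5 produces a spike every third step, and zero input produces none. Event-driven neuromorphic hardware aims to exploit this sparsity by doing work only when spikes occur.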

Advanced memory technologies such as processing-in-memory and near-data computing will likely see integration with RISC-V based systems, addressing the memory wall challenges that limit the performance of current AI systems. The open nature of RISC-V facilitates the co-design of processors and memory systems optimized for specific AI workloads.

Federated learning implementations on RISC-V based edge devices will enable the development of distributed AI systems that can learn collaboratively while preserving data privacy. The customization capabilities of RISC-V enable the implementation of efficient protocols for secure aggregation and model updates in resource-constrained environments.
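The core aggregation step of federated learning is a sample-weighted average of client models, in the style of the FedAvg algorithm. This sketch assumes each client sends a flat list of weights and its local sample count. The secure aggregation mentioned above is not shown; it would mask individual updates so the server sees only their sum.

```python
"""Federated averaging (FedAvg-style) of client model updates (sketch)."""


def federated_average(client_weights, client_samples):
    """Average weight vectors, weighting each client by its sample count.

    Only model parameters leave the devices; raw training data stays local.
    """
    if not client_weights or len(client_weights) != len(client_samples):
        raise ValueError("need one sample count per client")
    total = sum(client_samples)
    dim = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_samples)) / total
        for i in range(dim)
    ]


# Two hypothetical edge devices: one trained on 1 sample, one on 3.
print(federated_average([[1.0, 2.0], [3.0, 6.0]], [1, 3]))  # [2.5, 5.0]
```

Weighting by sample count keeps a device with little data from pulling the global model as strongly as one that has seen many examples.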

The continued evolution of RISC-V in edge machine learning represents more than just technological advancement; it embodies a fundamental shift toward open, collaborative innovation that democratizes access to cutting-edge AI capabilities. As the ecosystem continues to mature, RISC-V based solutions will likely play an increasingly important role in enabling ubiquitous AI deployment across diverse applications and industries, fundamentally transforming how we interact with intelligent systems in our daily lives.

Disclaimer

This article is for informational purposes only and does not constitute professional advice. The views expressed are based on current understanding of RISC-V architecture and its applications in edge machine learning. Readers should conduct their own research and consider their specific requirements when evaluating RISC-V based solutions. The effectiveness and suitability of RISC-V implementations may vary depending on specific use cases, performance requirements, and development constraints. Hardware design and AI implementation decisions should be made in consultation with qualified engineers and domain experts.