The artificial intelligence and machine learning landscape has witnessed an unprecedented surge in computational demands, pushing developers to seek programming languages that can deliver exceptional performance while maintaining safety and reliability. Rust has emerged as a compelling choice for AI applications, offering a unique combination of zero-cost abstractions, memory safety guarantees, and bare-metal performance that addresses the critical requirements of modern machine learning systems.

The intersection of Rust’s systems-level capabilities with AI’s computational intensity creates opportunities to build more efficient, reliable, and scalable machine learning infrastructure, capable of handling the massive datasets and complex algorithms that define contemporary artificial intelligence applications.

The Performance Imperative in Machine Learning

Machine learning applications operate under intense computational constraints that demand optimal resource utilization, minimal memory overhead, and predictable performance characteristics. Traditional high-level languages often introduce runtime overhead through garbage collection, dynamic typing, and interpreted execution models that can significantly impact the performance of computationally intensive AI workloads. Rust addresses these limitations by providing low-level control over system resources while maintaining high-level abstractions that enable productive development.

The language’s emphasis on zero-cost abstractions ensures that convenient programming constructs do not introduce runtime penalties, allowing developers to write expressive, maintainable code without sacrificing the performance critical for machine learning applications. This characteristic becomes particularly valuable when implementing custom algorithms, optimizing data processing pipelines, or developing specialized neural network architectures that require fine-grained control over memory layout and computational execution patterns.
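
As a minimal sketch of what a zero-cost abstraction looks like in practice, the two functions below compute the same dot product, once with iterator adapters and once with a hand-written indexed loop. The function names are illustrative assumptions, not part of any library. In optimized builds the compiler will typically generate comparable machine code for both, though this should be verified for a given target rather than assumed.

```rust
/// Dot product written with iterator adapters (zip, map, sum).
pub fn dot_iter(a: &[f32], b: &[f32]) -> f32 {
    // `zip` stops at the shorter slice, so no explicit length handling is needed.
    a.iter().zip(b).map(|(x, y)| x * y).sum()
}

/// The same computation as an explicit indexed loop.
pub fn dot_loop(a: &[f32], b: &[f32]) -> f32 {
    let n = a.len().min(b.len());
    let mut acc = 0.0f32;
    for i in 0..n {
        acc += a[i] * b[i];
    }
    acc
}
```

Both `dot_iter(&[1.0, 2.0, 3.0], &[4.0, 5.0, 6.0])` and the equivalent `dot_loop` call return `32.0`; the iterator version simply expresses the intent more directly.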

Memory Safety Without Performance Penalties

One of Rust’s most significant contributions to AI development lies in its ability to prevent memory-related bugs without introducing the performance overhead typically associated with garbage-collected languages. Memory safety issues, including buffer overflows, use-after-free errors, and data races, represent serious concerns in machine learning applications where large datasets and concurrent processing are commonplace.

Rust’s ownership system and borrow checker rule out use-after-free errors and data races at compile time, so these guarantees carry no runtime cost. Out-of-bounds accesses are still caught by runtime bounds checks, which the compiler can often eliminate, for example when code iterates over slices rather than indexing them. This combination of safety and performance is particularly valuable in production machine learning systems, where reliability and predictable performance are essential for meeting service level agreements and keeping model inference times consistent.
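
The following sketch illustrates ownership and borrowing on a small preprocessing step. The functions `normalize` and `into_normalized` are hypothetical names chosen for this example: the first borrows a buffer mutably for the duration of the call, the second takes ownership of a batch and hands it back.

```rust
/// Scales `v` in place to unit L2 norm; a zero vector is left unchanged.
/// The `&mut` borrow guarantees nobody else reads or writes `v` meanwhile.
pub fn normalize(v: &mut [f32]) {
    let norm = v.iter().map(|x| x * x).sum::<f32>().sqrt();
    if norm > 0.0 {
        for x in v.iter_mut() {
            *x /= norm;
        }
    }
}

/// Takes ownership of a batch, normalizes it, and returns it to the caller.
pub fn into_normalized(mut batch: Vec<f32>) -> Vec<f32> {
    normalize(&mut batch);
    batch
}

// The compiler rejects use of a value after it has been moved:
//
//     let data = vec![3.0, 4.0];
//     let out = into_normalized(data);
//     println!("{:?}", data); // compile error: `data` was moved above
```

Because the misuse in the trailing comment is rejected at compile time, no runtime bookkeeping is needed to detect it.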

The elimination of garbage collection pauses represents another critical advantage for real-time AI applications, such as autonomous vehicle control systems, high-frequency trading algorithms, and interactive AI assistants that require consistent, low-latency responses. Rust’s deterministic memory management ensures that performance remains predictable and stable even under heavy computational loads.
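
One common way to keep latency predictable is to allocate buffers once and reuse them across inference calls. The sketch below assumes a hypothetical `Scratch` type that owns a reusable output buffer; it is an illustration of the pattern, not an API from any particular framework.

```rust
/// Owns a buffer that is reused across calls to avoid repeated allocation.
pub struct Scratch {
    buf: Vec<f32>,
}

impl Scratch {
    /// Allocates the buffer once, up front.
    pub fn with_capacity(n: usize) -> Self {
        Scratch { buf: Vec::with_capacity(n) }
    }

    /// Writes ReLU(input) into the reused buffer and returns a view of it.
    pub fn relu(&mut self, input: &[f32]) -> &[f32] {
        // `clear` drops the old contents but keeps the allocation.
        self.buf.clear();
        self.buf.extend(input.iter().map(|&x| x.max(0.0)));
        &self.buf
    }

    /// Current capacity of the underlying allocation.
    pub fn capacity(&self) -> usize {
        self.buf.capacity()
    }
}
```

As long as inputs fit within the initial capacity, calls to `relu` perform no heap allocation, and the buffer is freed at a known point: when the `Scratch` value goes out of scope.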

Parallel Processing and Concurrency Advantages

Modern machine learning algorithms heavily rely on parallel processing to achieve acceptable performance on large datasets and complex models. Rust’s approach to concurrency provides significant advantages for AI applications through its fearless concurrency model that prevents data races at compile time while enabling efficient utilization of multi-core processors and distributed computing resources.

The language’s built-in support for various concurrency patterns, including message passing, shared-state concurrency, and async/await programming models, allows AI developers to choose the most appropriate approach for their specific use cases. Whether implementing data-parallel training algorithms, model-parallel inference systems, or asynchronous data preprocessing pipelines, Rust provides the tools and guarantees necessary for safe, efficient concurrent execution.
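
A data-parallel reduction can be sketched with the standard library's scoped threads alone. The function name `parallel_sum_squares` and the chunking strategy are assumptions made for this example; production code would more likely use a thread pool, but the borrowing rules shown here are the same.

```rust
use std::thread;

/// Computes the sum of squares of `data`, splitting the work across
/// up to `workers` threads. Each thread only reads its own chunk.
pub fn parallel_sum_squares(data: &[f64], workers: usize) -> f64 {
    let workers = workers.max(1);
    // Ceiling division; at least 1 because `chunks(0)` would panic.
    let chunk_len = ((data.len() + workers - 1) / workers).max(1);

    thread::scope(|s| {
        // Spawn one scoped thread per chunk. Scoped threads may borrow
        // `data` because the scope joins them before returning.
        let handles: Vec<_> = data
            .chunks(chunk_len)
            .map(|chunk| s.spawn(move || chunk.iter().map(|x| x * x).sum::<f64>()))
            .collect();

        handles
            .into_iter()
            .map(|h| h.join().expect("worker thread panicked"))
            .sum::<f64>()
    })
}
```

The compiler verifies that each thread receives a shared, read-only borrow of its chunk, so a data race on `data` cannot be written by accident.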

GPU Computing and Heterogeneous Acceleration

The integration of GPU computing and specialized accelerators has become fundamental to modern machine learning workflows, and Rust provides excellent support for heterogeneous computing through various libraries and frameworks. The language’s low-level capabilities and zero-cost abstractions make it particularly well-suited for implementing custom GPU kernels, managing device memory, and coordinating computation across different types of processing units.

Community-maintained CUDA and OpenCL bindings let developers use GPU acceleration for training and inference from Rust while keeping Rust’s safety guarantees in host code. Some projects also aim to let device code be written in Rust, although this tooling is less mature than the host-side bindings, and calls into GPU driver APIs still cross an unsafe boundary. Where host and device code can share one language, heterogeneous AI applications become simpler, and there is less room for the bugs that arise from mixing programming languages and execution environments.

The growing ecosystem of Rust-based GPU computing libraries provides high-level abstractions over low-level GPU programming models, enabling AI developers to leverage accelerated computing resources without sacrificing the productivity and safety benefits that Rust provides for CPU-based computations. This unified approach to heterogeneous computing simplifies the development of AI applications that need to coordinate work across multiple types of processing units.

Ecosystem Development and Machine Learning Libraries

The Rust ecosystem has witnessed significant growth in machine learning libraries and frameworks specifically designed to leverage the language’s performance and safety characteristics. Projects like Candle, Burn, and SmartCore provide comprehensive machine learning capabilities built from the ground up in Rust, offering alternatives to traditional Python-based frameworks while maintaining the performance advantages that make Rust attractive for AI applications.

When the application code is also written in Rust, these libraries avoid the cross-language call and data-conversion overhead that arises when a higher-level language calls into C or C++ libraries, which can make execution more efficient and deployment simpler, although optional hardware backends may still rely on native libraries. The libraries are designed to take advantage of Rust’s ownership system and type safety features, providing APIs that prevent common machine learning programming errors while enabling efficient training and inference workloads.

The development of specialized libraries for different aspects of machine learning, including tensor operations, automatic differentiation, neural network architectures, and optimization algorithms, demonstrates the maturity of Rust’s AI ecosystem and its readiness for production machine learning applications. These libraries benefit from Rust’s package management system and build tools, enabling easy integration and dependency management in complex AI projects.

Real-World Performance Comparisons

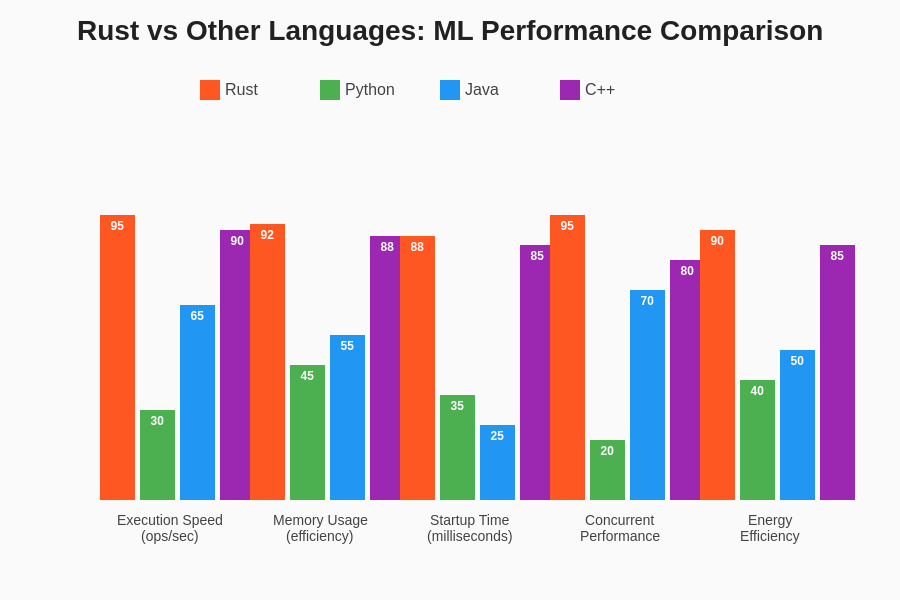

Benchmark results depend heavily on the workload and on implementation quality, but Rust tends to perform well on computationally intensive tasks such as matrix operations, numerical optimization, and data preprocessing. Rust implementations typically run in the same range as equivalent C++ code, can substantially outperform pure-Python code, and avoid the garbage collection pauses of Java, especially in large-scale data processing and the iterative algorithms common in machine learning workflows.

The performance advantages become particularly pronounced in deployment scenarios where resource efficiency directly impacts operational costs. Rust applications typically require less memory and CPU resources than equivalent implementations in garbage-collected languages, resulting in lower infrastructure costs and improved scalability for production machine learning services. These efficiency gains become especially valuable when deploying models at scale or in resource-constrained environments such as edge computing platforms.

The performance characteristics of Rust in machine learning applications demonstrate clear advantages across multiple dimensions, including execution speed, memory utilization, and energy efficiency. These improvements translate directly into reduced operational costs and improved user experience in production AI systems.

Memory Efficiency and Resource Optimization

Machine learning applications often work with massive datasets that require careful memory management to achieve acceptable performance and avoid resource exhaustion. Rust’s control over memory layout and allocation patterns enables developers to optimize data structures for specific machine learning workloads, minimizing cache misses and maximizing memory bandwidth utilization.

The ability to use custom memory allocators and control memory layout becomes particularly valuable when implementing specialized data structures for machine learning, such as sparse matrices, compressed tensors, and custom neural network architectures. Rust’s zero-cost abstractions ensure that these optimizations do not introduce abstraction overhead, allowing developers to achieve near-optimal performance while maintaining code readability and maintainability.
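
As an illustration of a specialized data structure, here is a minimal compressed sparse row (CSR) matrix with a matrix-vector product. The type `CsrMatrix` and its methods are written for this example and are not taken from an existing crate.

```rust
/// Sparse matrix in compressed sparse row (CSR) form.
pub struct CsrMatrix {
    pub rows: usize,
    pub cols: usize,
    row_ptr: Vec<usize>, // row r occupies values[row_ptr[r]..row_ptr[r + 1]]
    col_idx: Vec<usize>, // column index of each stored value
    values: Vec<f32>,    // non-zero values, row by row
}

impl CsrMatrix {
    /// Builds a CSR matrix from a dense row-major slice, dropping zeros.
    pub fn from_dense(rows: usize, cols: usize, dense: &[f32]) -> Self {
        assert_eq!(dense.len(), rows * cols, "dense buffer has wrong length");
        let mut row_ptr = Vec::with_capacity(rows + 1);
        let mut col_idx = Vec::new();
        let mut values = Vec::new();
        row_ptr.push(0);
        for r in 0..rows {
            for c in 0..cols {
                let v = dense[r * cols + c];
                if v != 0.0 {
                    col_idx.push(c);
                    values.push(v);
                }
            }
            row_ptr.push(values.len());
        }
        CsrMatrix { rows, cols, row_ptr, col_idx, values }
    }

    /// Number of stored non-zero entries.
    pub fn nnz(&self) -> usize {
        self.values.len()
    }

    /// Computes `self * x`, touching only the stored non-zero entries.
    pub fn matvec(&self, x: &[f32]) -> Vec<f32> {
        assert_eq!(x.len(), self.cols, "vector length must equal column count");
        (0..self.rows)
            .map(|r| {
                let (start, end) = (self.row_ptr[r], self.row_ptr[r + 1]);
                self.col_idx[start..end]
                    .iter()
                    .zip(&self.values[start..end])
                    .map(|(&c, &v)| v * x[c])
                    .sum::<f32>()
            })
            .collect()
    }
}
```

The three contiguous arrays keep each row's non-zeros adjacent in memory, which is the property that makes CSR friendly to caches during row-wise products.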

Resource optimization extends beyond memory management to include efficient utilization of CPU caches, SIMD instructions, and other architectural features that can significantly impact machine learning performance. Rust’s low-level capabilities enable developers to leverage these hardware features directly when necessary, while higher-level abstractions provide convenient interfaces for common optimization patterns.
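
One portable way to give the compiler room to vectorize is to process data in fixed-width chunks with independent accumulators. The sketch below assumes a lane width of 8, which is an arbitrary choice for illustration; whether SIMD instructions are actually emitted depends on the target and optimization level.

```rust
/// Sums a slice using several independent accumulators, a shape that
/// optimizing compilers can often map onto SIMD registers.
pub fn sum_lanes(data: &[f32]) -> f32 {
    const LANES: usize = 8; // illustrative width, not tuned for any CPU
    let mut acc = [0.0f32; LANES];

    let chunks = data.chunks_exact(LANES);
    let tail = chunks.remainder(); // elements left over after full chunks

    for chunk in chunks {
        for i in 0..LANES {
            acc[i] += chunk[i];
        }
    }

    // Combine the lane accumulators, then add the leftover elements.
    acc.iter().sum::<f32>() + tail.iter().sum::<f32>()
}
```

Note that the summation order differs from a plain sequential loop, so results for general floating-point inputs can differ slightly in the last bits.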

Integration with Existing AI Infrastructure

The adoption of Rust in AI development is facilitated by its excellent interoperability with existing machine learning infrastructure and tools. Rust can seamlessly interface with C and C++ libraries commonly used in AI applications, enabling gradual migration strategies that allow organizations to leverage Rust’s benefits without requiring complete system rewrites.

The language’s foreign function interface capabilities enable integration with established machine learning frameworks, BLAS libraries, and specialized computing libraries while maintaining Rust’s safety guarantees in the interface layers. This interoperability proves crucial for organizations with significant investments in existing AI infrastructure who want to incorporate Rust’s performance advantages selectively in performance-critical components.
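
The usual pattern at such an interface layer is to confine raw pointers to one small function and expose a safe wrapper to the rest of the program. In the sketch below, `c_style_dot` is a hypothetical stand-in written in Rust with a C ABI so that the example is self-contained; in a real project the routine would be declared in an `extern` block and supplied by the linked C library.

```rust
/// Stand-in for a C routine (hypothetical): dot product over raw pointers.
///
/// # Safety
/// `a` and `b` must each be valid for reading `n` consecutive `f32` values.
pub unsafe extern "C" fn c_style_dot(a: *const f32, b: *const f32, n: usize) -> f32 {
    let mut acc = 0.0f32;
    for i in 0..n {
        // SAFETY: the caller guarantees both pointers are valid for `n` reads.
        acc += unsafe { *a.add(i) * *b.add(i) };
    }
    acc
}

/// Safe wrapper: checks the invariant the C-style routine relies on,
/// so callers never handle raw pointers themselves.
pub fn dot(a: &[f32], b: &[f32]) -> Option<f32> {
    if a.len() != b.len() {
        return None;
    }
    // SAFETY: both pointers come from live slices of exactly `a.len()` elements.
    Some(unsafe { c_style_dot(a.as_ptr(), b.as_ptr(), a.len()) })
}
```

With this shape, the only code that needs auditing for memory safety is the wrapper and the routine behind it; everything that calls `dot` stays in safe Rust.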

Container-based deployment strategies work excellently with Rust applications, as the language’s static compilation model produces self-contained binaries that minimize deployment complexity and reduce container image sizes. This characteristic proves particularly valuable for microservice architectures and cloud-native AI applications where deployment efficiency and resource utilization directly impact operational costs and scalability.

Development Productivity and Maintainability

Despite its systems programming heritage, Rust provides excellent developer productivity through its expressive type system, pattern matching capabilities, and comprehensive error handling mechanisms. These language features prove particularly valuable in machine learning development, where complex algorithms and data transformations can benefit from Rust’s ability to express invariants and constraints at the type level.
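
A small example of expressing an invariant at the type level is encoding matrix dimensions with const generics, so that a shape mismatch in a multiplication fails to compile. The `Matrix` type below is a sketch written for this article, not a type from an existing library.

```rust
/// Dense matrix whose dimensions are part of its type.
#[derive(Debug, Clone, Copy, PartialEq)]
pub struct Matrix<const R: usize, const C: usize> {
    pub data: [[f32; C]; R],
}

impl<const R: usize, const C: usize> Matrix<R, C> {
    /// Multiplies an R x C matrix by a C x K matrix, giving R x K.
    /// The shared dimension `C` is checked by the compiler.
    pub fn matmul<const K: usize>(&self, rhs: &Matrix<C, K>) -> Matrix<R, K> {
        let mut out = [[0.0f32; K]; R];
        for i in 0..R {
            for j in 0..K {
                for k in 0..C {
                    out[i][j] += self.data[i][k] * rhs.data[k][j];
                }
            }
        }
        Matrix { data: out }
    }
}
```

Multiplying a `Matrix<2, 3>` by a `Matrix<3, 2>` type-checks and yields a `Matrix<2, 2>`, whereas passing a `Matrix<2, 2>` as the right-hand side is rejected at compile time.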

The language’s emphasis on explicit error handling reduces the likelihood of runtime failures in production machine learning systems, while its powerful macro system enables the creation of domain-specific languages and abstractions tailored to specific machine learning tasks. These productivity features, combined with Rust’s performance characteristics, create an attractive proposition for teams developing sophisticated AI applications.
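
Explicit error handling might look like the following sketch, in which a prediction function returns a `Result` instead of panicking on bad input. The error type `InferenceError` and the function `linear_predict` are hypothetical names used only for illustration.

```rust
use std::fmt;

/// Errors a caller must handle before using a prediction.
#[derive(Debug, PartialEq)]
pub enum InferenceError {
    EmptyInput,
    ShapeMismatch { expected: usize, found: usize },
}

impl fmt::Display for InferenceError {
    fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {
        match self {
            InferenceError::EmptyInput => write!(f, "input has no features"),
            InferenceError::ShapeMismatch { expected, found } => {
                write!(f, "expected {} features, found {}", expected, found)
            }
        }
    }
}

impl std::error::Error for InferenceError {}

/// Evaluates a linear model, reporting malformed input as an error value.
pub fn linear_predict(
    weights: &[f32],
    bias: f32,
    features: &[f32],
) -> Result<f32, InferenceError> {
    if features.is_empty() {
        return Err(InferenceError::EmptyInput);
    }
    if features.len() != weights.len() {
        return Err(InferenceError::ShapeMismatch {
            expected: weights.len(),
            found: features.len(),
        });
    }
    Ok(weights.iter().zip(features).map(|(w, x)| w * x).sum::<f32>() + bias)
}
```

Because the failure cases are part of the return type, a caller cannot use the prediction without deciding what to do when the input is malformed.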

Code maintainability in large-scale machine learning projects benefits significantly from Rust’s ownership system and type safety features, which prevent entire classes of bugs that commonly occur in systems dealing with complex data flows and concurrent processing. The compiler’s helpful error messages and suggestions contribute to faster development cycles and reduced debugging time, particularly valuable in the iterative nature of machine learning development.

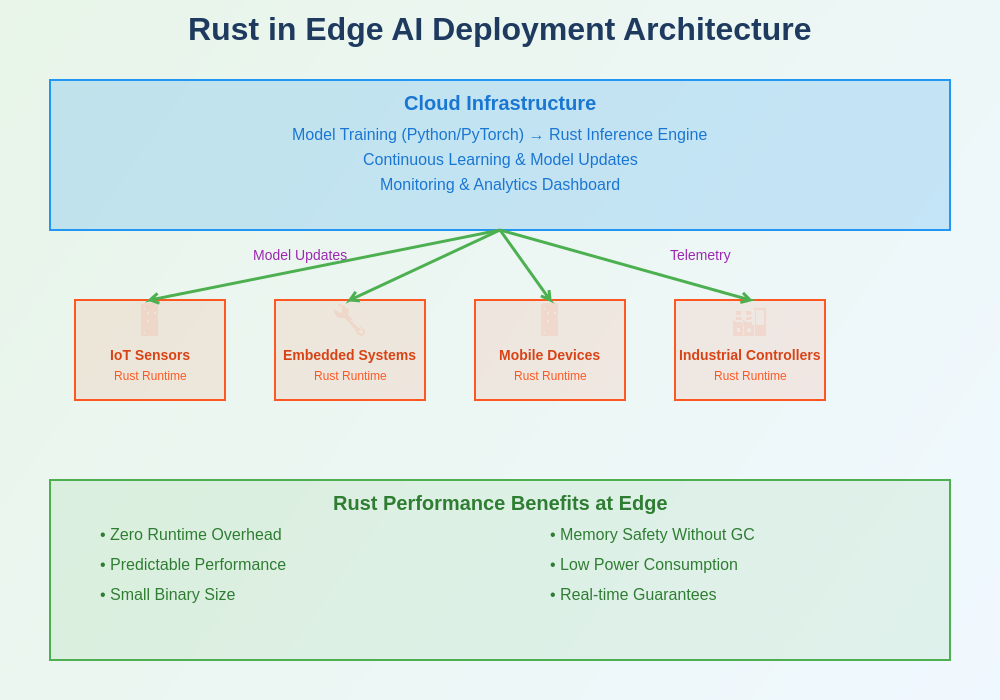

Edge Computing and Embedded AI Applications

The growing importance of edge computing and embedded AI applications creates new requirements for machine learning deployments that favor Rust’s characteristics. Edge devices typically have constrained resources, limited power budgets, and reliability requirements that align well with Rust’s efficiency and safety guarantees.

Rust’s ability to produce small, efficient binaries without runtime dependencies makes it particularly suitable for deploying machine learning models on embedded devices, IoT platforms, and edge computing infrastructure. The language’s deterministic performance characteristics prove crucial for real-time AI applications that must meet strict latency and power consumption requirements.
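
To make the embedded case concrete, the sketch below evaluates a small quantized (int8) dense layer using only fixed-size arrays, so it performs no heap allocation. The function names and the ReLU activation are assumptions for this example; an actual `no_std` firmware build would additionally need the `#![no_std]` attribute and target-specific setup.

```rust
/// One int8 neuron: dot product accumulated in i32, plus bias, then ReLU.
/// Sizes are compile-time constants, so no allocation is needed.
pub fn quantized_neuron<const N: usize>(weights: &[i8; N], input: &[i8; N], bias: i32) -> i32 {
    let mut acc = bias;
    for i in 0..N {
        // Widen to i32 before multiplying so the product cannot overflow i8.
        acc += (weights[i] as i32) * (input[i] as i32);
    }
    acc.max(0)
}

/// A dense layer of M neurons over N inputs, returning a stack-allocated array.
pub fn quantized_layer<const N: usize, const M: usize>(
    weights: &[[i8; N]; M],
    biases: &[i32; M],
    input: &[i8; N],
) -> [i32; M] {
    let mut out = [0i32; M];
    for j in 0..M {
        out[j] = quantized_neuron(&weights[j], input, biases[j]);
    }
    out
}
```

Since every buffer size is known at compile time, the memory footprint of this layer can be determined before the code ever runs on the device.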

The architecture diagram above shows how Rust’s efficiency and safety characteristics enable sophisticated AI capabilities in resource-constrained edge environments, providing reliable inference while maintaining low power consumption and predictable performance.

The elimination of garbage collection overhead and the ability to fine-tune memory usage patterns enable Rust-based AI applications to operate effectively on devices with limited RAM and processing power, expanding the range of platforms suitable for AI deployment. This capability proves increasingly valuable as AI moves closer to data sources and end users through edge computing initiatives.

Future Directions and Ecosystem Growth

The Rust AI ecosystem continues to evolve rapidly, with ongoing developments in areas such as automatic differentiation, distributed training frameworks, and specialized hardware acceleration libraries. The language’s growing adoption in AI research institutions and technology companies indicates strong momentum toward broader acceptance in the machine learning community.

Emerging projects focus on providing high-level abstractions that make Rust more accessible to data scientists and machine learning researchers who may not have extensive systems programming backgrounds. These developments aim to combine Rust’s performance advantages with the productivity and ease of use that have made Python popular in the AI community.

The integration of Rust with cloud-native AI platforms and serverless computing environments represents another area of significant development, as organizations seek to leverage Rust’s efficiency advantages in cost-sensitive cloud deployments. The language’s fast startup times and low resource requirements make it particularly well-suited for serverless AI applications where cold start performance and resource efficiency directly impact user experience and operational costs.

Performance Optimization Strategies

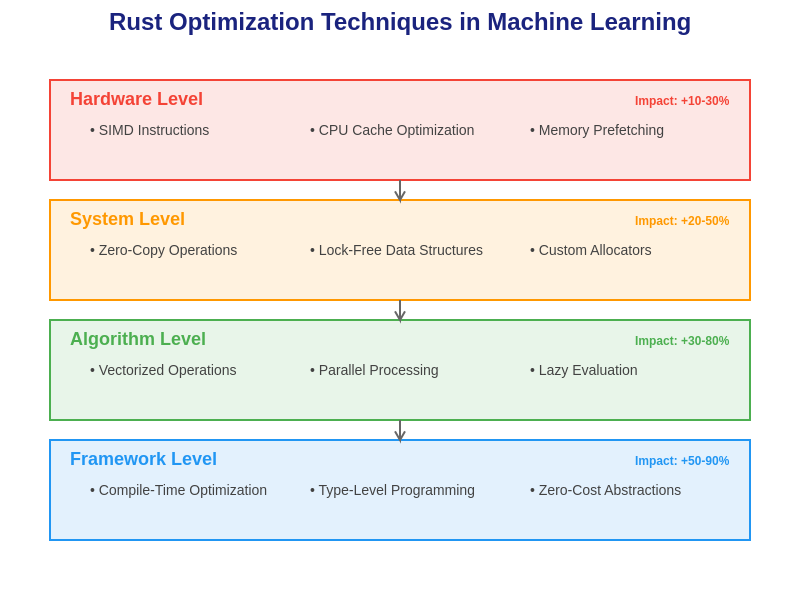

Successful deployment of Rust in machine learning applications requires understanding and applying appropriate performance optimization strategies that leverage the language’s unique characteristics. Profile-guided optimization, careful data structure design, and strategic use of unsafe code for performance-critical sections represent important techniques for achieving optimal performance in Rust-based AI systems.

The language’s ownership system enables sophisticated optimization patterns that would be difficult or dangerous to implement in other languages, such as zero-copy data processing pipelines and lock-free concurrent data structures. These optimization opportunities become particularly valuable in high-throughput machine learning applications where every microsecond of latency reduction translates to improved user experience and operational efficiency.
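
A zero-copy pipeline stage can be sketched with borrowed slices: the function below hands out per-sample views into one flat buffer rather than copying each row. The names `rows` and `row_means` are illustrative assumptions.

```rust
/// Yields one borrowed slice per sample from a flat row-major buffer.
/// No sample data is copied; each item points into `buffer`.
pub fn rows<'a>(buffer: &'a [f32], features: usize) -> impl Iterator<Item = &'a [f32]> + 'a {
    assert!(features > 0, "features must be non-zero");
    buffer.chunks_exact(features)
}

/// Example consumer: computes the mean of every sample using the views.
pub fn row_means(buffer: &[f32], features: usize) -> Vec<f32> {
    rows(buffer, features)
        .map(|row| row.iter().sum::<f32>() / features as f32)
        .collect()
}
```

The lifetime `'a` ties every view to the underlying buffer, so the compiler prevents the buffer from being freed or mutated while any view is still in use.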

The comprehensive approach to optimization in Rust encompasses multiple levels, from low-level memory layout optimizations to high-level algorithmic improvements, creating opportunities for significant performance gains in machine learning workloads.

Understanding the interaction between Rust’s abstractions and the underlying hardware proves crucial for achieving optimal performance in machine learning applications. The language provides tools and techniques for measuring and optimizing performance at multiple levels, from individual function execution to entire system throughput.
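
For quick measurements at the function level, the standard library is enough for a rough timing helper. The function `time_it` below is a hypothetical sketch; it uses `std::hint::black_box` to discourage the optimizer from discarding the measured work. For rigorous results, a dedicated benchmarking harness with statistical analysis is the better choice.

```rust
use std::hint::black_box;
use std::time::{Duration, Instant};

/// Runs `f` `iterations` times and returns the mean wall-clock time per call.
pub fn time_it<T>(iterations: u32, mut f: impl FnMut() -> T) -> Duration {
    assert!(iterations > 0, "need at least one iteration");
    let start = Instant::now();
    for _ in 0..iterations {
        // `black_box` keeps the result "observed" so the call is not optimized away.
        black_box(f());
    }
    start.elapsed() / iterations
}
```

Measurements like this are only meaningful in release builds, since debug builds disable most of the optimizations that determine real-world performance.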

Production Deployment Considerations

The deployment of Rust-based machine learning systems in production environments requires careful consideration of operational aspects such as monitoring, debugging, and maintenance. Rust’s static compilation model simplifies deployment by eliminating runtime dependencies, while its performance predictability reduces the complexity of capacity planning and resource allocation.

Observability and monitoring strategies for Rust AI applications benefit from the ecosystem’s mature libraries for structured logging, metrics collection, and distributed tracing. These capabilities are essential for maintaining visibility into complex machine learning systems that may process millions of requests and make thousands of model predictions per second.
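
At its simplest, metrics collection on a hot inference path can be done with atomic counters, which also serves as a small example of lock-free shared state. The `InferenceMetrics` type below is a sketch invented for this article; a production service would normally export such counters through a metrics library.

```rust
use std::sync::atomic::{AtomicU64, Ordering};

/// Lock-free counters that many request threads can update concurrently.
#[derive(Default)]
pub struct InferenceMetrics {
    requests: AtomicU64,
    total_latency_micros: AtomicU64,
}

impl InferenceMetrics {
    /// Records one completed request and its latency in microseconds.
    pub fn record(&self, latency_micros: u64) {
        // Relaxed ordering suffices: the counters are independent statistics.
        self.requests.fetch_add(1, Ordering::Relaxed);
        self.total_latency_micros.fetch_add(latency_micros, Ordering::Relaxed);
    }

    /// Total number of requests recorded so far.
    pub fn requests(&self) -> u64 {
        self.requests.load(Ordering::Relaxed)
    }

    /// Mean latency in microseconds, or `None` if nothing was recorded.
    pub fn mean_latency_micros(&self) -> Option<f64> {
        let n = self.requests();
        if n == 0 {
            return None;
        }
        Some(self.total_latency_micros.load(Ordering::Relaxed) as f64 / n as f64)
    }
}
```

Because `record` takes `&self`, a single `InferenceMetrics` value can be shared by reference across worker threads without a mutex.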

The reliability characteristics of Rust applications, including their resistance to memory-related crashes and predictable performance under load, contribute to improved operational stability for production AI systems. This reliability translates directly into better service level agreement compliance and reduced operational overhead for teams responsible for maintaining machine learning infrastructure.

Conclusion and Industry Impact

The adoption of Rust in machine learning applications represents a significant evolution in how the industry approaches the performance and reliability challenges inherent in AI systems. The language’s unique combination of safety, performance, and productivity creates new possibilities for building more efficient and reliable machine learning infrastructure that can meet the demanding requirements of modern AI applications.

As the AI industry continues to grow and mature, the importance of efficient, reliable system implementations becomes increasingly critical for organizations seeking to deploy AI solutions at scale. Rust’s characteristics position it as a valuable tool for addressing these challenges while providing developers with the safety and productivity features necessary for maintaining complex machine learning systems.

The continued growth of the Rust AI ecosystem, combined with increasing industry recognition of the language’s advantages, suggests that Rust will play an increasingly important role in the future of machine learning and artificial intelligence development. Organizations that invest in Rust-based AI capabilities today position themselves to take advantage of the performance and efficiency benefits that will become increasingly important as AI applications continue to grow in scale and complexity.

Disclaimer

This article is for informational purposes only and does not constitute professional advice. The performance characteristics and capabilities described may vary depending on specific use cases, hardware configurations, and implementation details. Readers should conduct their own evaluations and testing when considering Rust for machine learning applications. The effectiveness of Rust-based solutions depends on factors including team expertise, project requirements, and integration with existing systems.