The rapidly expanding landscape of artificial intelligence and machine learning has introduced unprecedented security challenges that demand sophisticated defensive solutions. As organizations increasingly deploy distributed AI systems, federated learning networks, and collaborative machine learning platforms, the need for robust security mechanisms to protect model updates, training data, and communication channels has become paramount. The intersection of cryptography and artificial intelligence represents a critical frontier in cybersecurity, where advanced encryption techniques serve as the cornerstone of maintaining system integrity and preventing malicious exploitation.

The evolution of AI security requires continuous adaptation to new attack vectors while maintaining the performance and functionality that make these systems valuable for legitimate applications.

The Critical Importance of ML Model Security

Machine learning models represent valuable intellectual property and operational assets that require comprehensive protection against various threat vectors. Traditional security approaches often fall short when applied to AI systems due to the unique characteristics of machine learning workflows, including distributed training processes, model parameter exchanges, and the inherent complexity of neural network architectures. The security challenges extend beyond conventional data protection to encompass model integrity, training process validation, and defense against sophisticated attacks such as model poisoning, adversarial examples, and gradient-based inference attacks.

The financial and operational implications of compromised machine learning systems extend far beyond simple data breaches. Malicious actors can manipulate model behavior to produce incorrect predictions, extract sensitive training data through inference attacks, or steal proprietary algorithms through model extraction techniques. These threats necessitate a comprehensive security framework that addresses both the technical vulnerabilities inherent in machine learning systems and the operational challenges of maintaining security across distributed AI infrastructures.

Cryptographic Foundations for AI Security

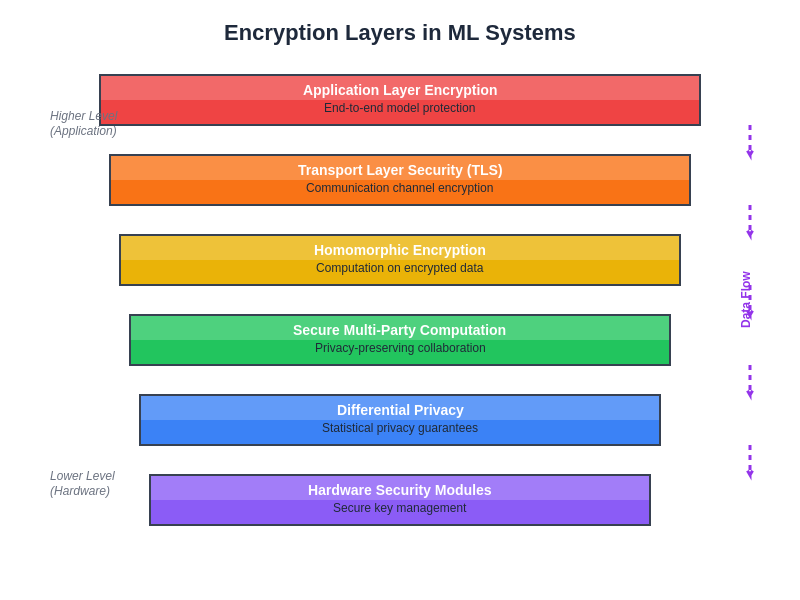

Applying cryptographic principles to machine learning security requires understanding both domains and weighing the performance cost of each technique. Homomorphic encryption and secure multi-party computation are cryptographic primitives that enable computation on protected data, so machine learning algorithms can operate on sensitive information without exposing the underlying data or model parameters. Differential privacy is a complementary statistical technique rather than an encryption scheme: it bounds how much a model or its updates can reveal about any individual training record.

The implementation of cryptographic protections in machine learning systems involves complex tradeoffs between security strength, computational overhead, and system usability. Homomorphic encryption schemes allow mathematical operations to be performed on encrypted data, enabling secure model training and inference without decrypting sensitive information. Secure multi-party computation protocols facilitate collaborative machine learning scenarios where multiple parties can jointly train models without revealing their private datasets, maintaining both data confidentiality and model utility.
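To make the idea of computing on encrypted data concrete, here is a minimal sketch of an additively homomorphic scheme in the style of Paillier. It is a toy under stated assumptions: the primes `P` and `Q` are tiny values picked for readability, the function names are illustrative, and nothing here is suitable for protecting real data. It needs Python 3.8 or later for the modular inverse via `pow`.

```python
import math
import secrets

# Toy primes chosen for readability (assumption); real keys use far larger primes.
P, Q = 293, 433


def keygen(p=P, q=Q):
    """Return the public modulus n and the private pair (lam, mu)."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p - 1, q - 1)
    mu = pow(lam, -1, n)  # this shortcut is valid because the generator is n + 1
    return n, (lam, mu)


def encrypt(n, m):
    """Encrypt an integer 0 <= m < n with fresh randomness."""
    n_sq = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1  # r in [1, n - 1]
        if math.gcd(r, n) == 1:
            break
    # With generator n + 1, (n + 1)^m mod n^2 equals 1 + m*n.
    return ((1 + m * n) * pow(r, n, n_sq)) % n_sq


def decrypt(n, priv, c):
    lam, mu = priv
    u = pow(c, lam, n * n)
    return ((u - 1) // n * mu) % n


def add_encrypted(n, c1, c2):
    """Multiplying ciphertexts adds the underlying plaintexts modulo n."""
    return (c1 * c2) % (n * n)


n, priv = keygen()
total = add_encrypted(n, encrypt(n, 1200), encrypt(n, 34))
print(decrypt(n, priv, total))
```

The party holding only `n` can combine ciphertexts without ever seeing the plaintexts, which is the property the paragraph above describes. The cost is visible even in this toy: every addition is a modular multiplication over numbers twice the key size.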

The integration of AI-powered security tools with traditional cryptographic approaches creates layered defense mechanisms that adapt to evolving threat landscapes.

Federated Learning Security Architecture

Federated learning represents a paradigm shift in machine learning that distributes training processes across multiple devices or organizations while keeping raw data localized. This approach introduces unique security challenges that require specialized protection mechanisms to ensure model integrity and prevent various attacks targeting the aggregation process. The decentralized nature of federated learning creates multiple attack surfaces, including client-side vulnerabilities, communication channel compromises, and aggregation server attacks that malicious actors can exploit to influence model behavior or extract sensitive information.

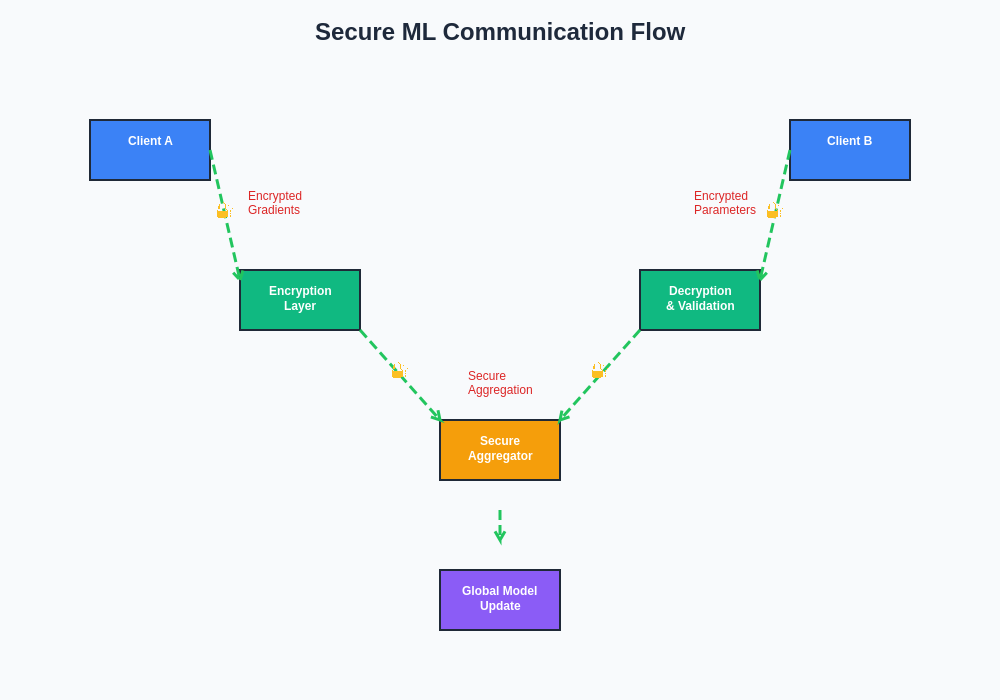

The security architecture for federated learning systems must address four concerns: authentication of participating clients, integrity verification of local model updates, confidentiality of parameters in transit, and robust aggregation that can detect and mitigate malicious contributions. Techniques such as secure aggregation protocols, Byzantine-fault-tolerant aggregation rules, and privacy-preserving client selection algorithms address these concerns while maintaining the collaborative benefits of federated learning.
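As one illustration of robust aggregation, the sketch below compares a plain coordinate-wise mean with a coordinate-wise median when one client submits an extreme update. The coordinate-wise median is only one of several Byzantine-robust rules and is chosen here for brevity; the function names and sample values are assumptions made for the example.

```python
from statistics import fmean, median


def mean_aggregate(updates):
    """Plain averaging: a single extreme update can shift every coordinate."""
    return [fmean(column) for column in zip(*updates)]


def median_aggregate(updates):
    """Coordinate-wise median: tolerates a minority of arbitrary updates."""
    return [median(column) for column in zip(*updates)]


honest = [[1.0, 2.0], [1.1, 1.9], [0.9, 2.1], [1.0, 2.0]]
malicious = [[100.0, -100.0]]  # hypothetical poisoned contribution
updates = honest + malicious

print(mean_aggregate(updates))    # pulled far away from the honest cluster
print(median_aggregate(updates))  # stays inside the honest cluster
```

Note that a rule like this needs to see individual updates, which is in tension with secure aggregation, where the server only learns the sum. Deployments typically have to choose or carefully combine the two.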

Encrypted Model Parameter Exchange

The transmission of model parameters between distributed system components is a critical exposure point that requires encryption to maintain security and privacy. Transport layer security protocols provide baseline protection for communication channels, but they only protect data in transit between endpoints. Machine learning parameter exchanges need additional measures against attacks that target the content itself: gradient information, model architecture details, and optimization state data.

Advanced encryption schemes for model parameter exchange incorporate techniques such as gradient compression with encryption, secure parameter aggregation protocols, and privacy-preserving model update mechanisms that maintain mathematical properties necessary for machine learning algorithms while providing strong cryptographic guarantees. These approaches enable secure collaboration between multiple parties without exposing sensitive model information or training data characteristics that could be exploited by adversaries.

The secure communication architecture for machine learning systems requires careful orchestration of encryption, authentication, and integrity verification mechanisms. This systematic approach ensures that model updates remain protected throughout the entire communication pipeline while maintaining the computational efficiency necessary for practical deployment in production environments.
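The integrity and authentication steps of such a pipeline can be sketched with a keyed message authentication code. The example below uses HMAC-SHA256 from the Python standard library and binds each update to a client identifier and a round number so that replayed messages are rejected. The message layout, field names, and the assumption of a pre-shared per-client key are all illustrative. This sketch provides integrity and authenticity only; confidentiality would still have to come from TLS or an authenticated encryption scheme.

```python
import hashlib
import hmac
import json
import secrets


def new_shared_key():
    """Generate a 256-bit key; distributing it securely is out of scope here."""
    return secrets.token_bytes(32)


def sign_update(key, client_id, round_no, params):
    """Serialize the update canonically and attach an HMAC-SHA256 tag."""
    payload = json.dumps(
        {"client": client_id, "round": round_no, "params": params},
        sort_keys=True,
        separators=(",", ":"),
    )
    tag = hmac.new(key, payload.encode(), hashlib.sha256).hexdigest()
    return {"payload": payload, "tag": tag}


def verify_update(key, message, expected_round):
    """Return the decoded update, or raise ValueError if it cannot be trusted."""
    expected = hmac.new(key, message["payload"].encode(), hashlib.sha256).hexdigest()
    # compare_digest avoids leaking the mismatch position through timing.
    if not hmac.compare_digest(expected, message["tag"]):
        raise ValueError("authentication tag mismatch")
    body = json.loads(message["payload"])
    if body["round"] != expected_round:
        raise ValueError("stale or replayed round")
    return body


key = new_shared_key()
message = sign_update(key, "client-7", 3, [0.5, -1.25])
print(verify_update(key, message, expected_round=3)["params"])
```

The tag is checked before the payload is parsed, so a tampered message is rejected without its contents being interpreted.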

Differential Privacy in Model Updates

Differential privacy provides mathematical guarantees for privacy protection in machine learning systems by introducing carefully calibrated noise into model training processes and parameter updates. This approach enables organizations to share aggregate model improvements while bounding how much an inference attack can learn about any individual training example. Implementing differential privacy requires understanding the privacy-utility tradeoff: more noise gives a stronger guarantee but lowers model accuracy, so parameters must be tuned to keep the guarantee meaningful and the model useful.
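A common way to apply calibrated noise to a model update is to clip the update to a maximum L2 norm and then add Gaussian noise scaled to that norm. The sketch below shows that pattern using only the standard library. The parameter names `clip_norm` and `noise_multiplier` are assumptions for the example, and the resulting privacy level depends on an accounting step that is not shown here.

```python
import math
import random


def clip_l2(update, clip_norm):
    """Scale the update down so its L2 norm is at most clip_norm."""
    norm = math.sqrt(sum(x * x for x in update))
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [x * scale for x in update]


def privatize(update, clip_norm, noise_multiplier, rng):
    """Clip, then add Gaussian noise with std = noise_multiplier * clip_norm."""
    clipped = clip_l2(update, clip_norm)
    sigma = noise_multiplier * clip_norm
    return [x + rng.gauss(0.0, sigma) for x in clipped]


# random.Random is used so the demo is reproducible; a production system
# would need a cryptographically secure noise source.
rng = random.Random(0)
print(privatize([3.0, 4.0], clip_norm=1.0, noise_multiplier=1.1, rng=rng))
```

Clipping bounds the influence of any single contribution, which is what lets the noise scale be chosen independently of the data.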

Integrating differential privacy into model update processes involves several mathematical considerations: selecting a noise distribution, allocating a privacy budget, and accounting for cumulative privacy expenditure across training iterations. Relaxed definitions such as concentrated differential privacy and Rényi differential privacy allow tighter accounting of that cumulative loss than basic composition, while training frameworks such as private aggregation of teacher ensembles (PATE) restructure the learning process to reduce the impact of noise on model accuracy.
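The simplest form of privacy accounting is basic sequential composition, under which running k mechanisms that are each (ε, δ)-differentially private yields a total guarantee of (kε, kδ). The sketch below tracks a budget under that rule. The class name and interface are hypothetical, and this bound is deliberately loose; Rényi-style accountants give tighter totals but are more involved.

```python
class BasicCompositionAccountant:
    """Tracks cumulative (epsilon, delta) under basic sequential composition."""

    def __init__(self, epsilon_budget, delta_budget):
        self.epsilon_budget = epsilon_budget
        self.delta_budget = delta_budget
        self.epsilon_spent = 0.0
        self.delta_spent = 0.0

    def spend(self, epsilon, delta=0.0):
        """Record one private release, or refuse if it would exceed the budget."""
        new_epsilon = self.epsilon_spent + epsilon
        new_delta = self.delta_spent + delta
        if new_epsilon > self.epsilon_budget or new_delta > self.delta_budget:
            raise RuntimeError("privacy budget exhausted")
        self.epsilon_spent, self.delta_spent = new_epsilon, new_delta
        return self.epsilon_budget - new_epsilon  # remaining epsilon


accountant = BasicCompositionAccountant(epsilon_budget=1.0, delta_budget=1e-5)
for training_round in range(4):
    remaining = accountant.spend(epsilon=0.25, delta=1e-6)
print(remaining)
```

Refusing the release, rather than silently continuing, keeps the stated guarantee honest once the budget is used up.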

Threat Landscape and Attack Vectors

The threat landscape for machine learning systems encompasses a diverse range of attack vectors that exploit vulnerabilities in training processes, model architectures, and deployment environments. Model poisoning attacks attempt to corrupt training data or manipulate gradient updates to introduce backdoors or reduce model performance, while adversarial example attacks craft malicious inputs designed to cause misclassification in deployed models. Membership inference attacks attempt to determine whether specific data points were included in training datasets, potentially exposing sensitive information about individuals or organizations.

More sophisticated attacks such as model extraction attempts seek to steal proprietary algorithms through query-based approaches, while gradient leakage attacks exploit information contained in parameter updates to reconstruct training data. The distributed nature of modern machine learning systems introduces additional vulnerabilities including communication channel attacks, Byzantine adversaries in federated learning scenarios, and supply chain attacks targeting model components or training infrastructure.

The rapidly evolving threat landscape requires continuous monitoring and adaptive security measures that can respond to novel attack techniques.

Secure Aggregation Protocols

Secure aggregation protocols provide cryptographic mechanisms for combining model updates from multiple sources without exposing individual contributions to potential adversaries. These protocols enable collaborative machine learning scenarios where participants can jointly train models while maintaining the confidentiality of their local data and model parameters. The mathematical foundations of secure aggregation involve sophisticated cryptographic constructions such as secret sharing schemes, threshold encryption, and secure multi-party computation that enable privacy-preserving computation across distributed environments.

The implementation of secure aggregation protocols requires careful consideration of various practical constraints including communication overhead, computational complexity, and fault tolerance requirements. Advanced protocols incorporate techniques such as efficient secret sharing, batch verification mechanisms, and adaptive threshold selection that optimize performance while maintaining security guarantees. These approaches enable large-scale federated learning deployments with hundreds or thousands of participating clients while providing strong privacy and security protections.
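One widely described construction for secure aggregation is pairwise masking: every pair of clients agrees on a shared seed, one adds the derived mask and the other subtracts it, and the masks cancel when the server sums all contributions. The sketch below illustrates only that cancellation. It assumes updates are already encoded as non-negative integers, assumes seeds are exchanged out of band, uses `random.Random` as a stand-in for a cryptographic pseudorandom generator, and does not handle client dropouts, which real protocols address with secret sharing of the seeds.

```python
import itertools
import random
import secrets

MODULUS = 2**32  # illustrative ring size for fixed-point encoded updates


def make_pair_seeds(client_ids):
    """One shared seed per unordered client pair (key agreement is assumed)."""
    pairs = itertools.combinations(sorted(client_ids), 2)
    return {pair: secrets.randbits(64) for pair in pairs}


def expand_mask(seed, length):
    # Stand-in PRG for the demo only; it is NOT cryptographically secure.
    prg = random.Random(seed)
    return [prg.randrange(MODULUS) for _ in range(length)]


def mask_update(client_id, update, pair_seeds):
    """Add the mask for pairs where this client is 'low', subtract where 'high'."""
    masked = [x % MODULUS for x in update]
    for (low, high), seed in pair_seeds.items():
        if client_id == low:
            sign = 1
        elif client_id == high:
            sign = -1
        else:
            continue
        mask = expand_mask(seed, len(update))
        masked = [(m + sign * k) % MODULUS for m, k in zip(masked, mask)]
    return masked


def aggregate(masked_updates):
    """The server sums masked vectors; the pairwise masks cancel out."""
    return [sum(column) % MODULUS for column in zip(*masked_updates)]


updates = {"a": [1, 2, 3], "b": [10, 20, 30], "c": [100, 200, 300]}
seeds = make_pair_seeds(updates)
masked = [mask_update(cid, vec, seeds) for cid, vec in updates.items()]
print(aggregate(masked))
```

Each masked vector on its own looks uniformly random to the server, while the sum equals the sum of the original updates modulo `MODULUS`.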

Privacy-Preserving Model Training

Privacy-preserving model training techniques enable organizations to develop machine learning models using sensitive data while maintaining strict confidentiality and privacy guarantees. These approaches combine advanced cryptographic techniques with machine learning algorithms to enable secure computation on protected data without exposing underlying information to potential adversaries. The integration of privacy preservation into the training process requires sophisticated understanding of both cryptographic protocols and machine learning optimization techniques.

Privacy-preserving training approaches incorporate techniques such as homomorphic encryption for secure computation, secure multi-party computation for collaborative training, and trusted execution environments for protected model development. These approaches enable organizations to leverage sensitive datasets for model training while complying with privacy regulations and keeping proprietary algorithms confidential. With careful tuning, the resulting models can approach the utility of models trained on unprotected data, although some loss of accuracy or increase in training cost is typical.
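Additive secret sharing is one of the basic building blocks behind secure multi-party computation. The sketch below shows several parties computing the sum of their private integers without any party seeing another's input. The field modulus and function names are assumptions chosen for the example, and the parties are simulated inside a single process rather than communicating over a network.

```python
import secrets

PRIME = 2**61 - 1  # illustrative field modulus (a Mersenne prime)


def share(value, n_parties):
    """Split an integer into n additive shares that sum to value mod PRIME."""
    shares = [secrets.randbelow(PRIME) for _ in range(n_parties - 1)]
    last = (value - sum(shares)) % PRIME
    return shares + [last]


def reconstruct(shares):
    return sum(shares) % PRIME


def secure_sum(private_values):
    """Simulate n parties jointly computing the sum of their inputs."""
    n = len(private_values)
    # Party i splits its value; share j would be sent to party j.
    all_shares = [share(value, n) for value in private_values]
    # Party j adds up the shares it received and publishes only that total.
    partial_sums = [
        sum(all_shares[i][j] for i in range(n)) % PRIME for j in range(n)
    ]
    return reconstruct(partial_sums)


print(secure_sum([10, 20, 12]))
```

Any subset of fewer than all n shares of a value is uniformly random, so a party learns nothing about another's input from the shares it receives.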

The multi-layered encryption architecture for machine learning systems provides comprehensive protection across all system components and communication channels. This defense-in-depth approach ensures that sensitive information remains protected even if individual security measures are compromised, creating resilient systems that can maintain security in hostile environments.

Implementation Challenges and Solutions

The practical implementation of secure AI communications faces numerous technical and operational challenges that require innovative solutions and careful system design. Performance overhead from cryptographic operations can significantly impact training and inference times, requiring optimization techniques such as hardware acceleration, algorithm approximation, and protocol efficiency improvements. The complexity of integrating security measures into existing machine learning pipelines often necessitates significant architectural changes and specialized expertise that may not be readily available within development teams.

Scalability considerations become particularly challenging in large-scale distributed systems where cryptographic operations must be performed across thousands of nodes while maintaining acceptable performance characteristics. Solutions include hierarchical aggregation schemes, efficient batch processing techniques, and adaptive security parameter selection that balances protection levels with computational requirements. The integration of specialized hardware such as secure enclaves, cryptographic accelerators, and privacy-preserving hardware architectures provides additional capabilities for implementing secure AI communications in production environments.

Regulatory Compliance and Standards

The regulatory landscape surrounding AI security and privacy continues to evolve as governments and industry organizations develop comprehensive frameworks for managing risks associated with machine learning systems. Compliance requirements such as the General Data Protection Regulation, California Consumer Privacy Act, and emerging AI-specific regulations create legal obligations for organizations deploying machine learning systems to implement appropriate security and privacy protections.

Industry standards and frameworks such as the NIST AI Risk Management Framework, ISO/IEC 27001 information security standards, and emerging machine learning security guidelines provide structured approaches for implementing comprehensive security programs. These standards emphasize the importance of risk assessment, security control implementation, and continuous monitoring that addresses the unique characteristics of AI systems while maintaining alignment with broader cybersecurity best practices.

Performance Optimization Strategies

The computational overhead associated with cryptographic protections in machine learning systems requires sophisticated optimization strategies to maintain practical performance levels. Techniques such as gradient compression, sparse parameter updates, and efficient cryptographic implementations help minimize the impact of security measures on system performance. The development of specialized algorithms that maintain security properties while reducing computational complexity represents an active area of research and development.
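Top-k sparsification is one simple form of gradient compression: only the k entries with the largest magnitude are transmitted, which reduces both the bandwidth and the number of values that must pass through any cryptographic processing. The sketch below is a minimal illustration; the function names are assumptions, and practical schemes usually also accumulate the dropped residual locally for later rounds.

```python
def top_k_sparsify(gradient, k):
    """Keep the k largest-magnitude entries as an {index: value} mapping."""
    ranked = sorted(range(len(gradient)), key=lambda i: abs(gradient[i]), reverse=True)
    return {i: gradient[i] for i in sorted(ranked[:k])}


def densify(sparse, length):
    """Rebuild a dense vector, filling the dropped entries with zeros."""
    dense = [0.0] * length
    for index, value in sparse.items():
        dense[index] = value
    return dense


gradient = [0.1, -3.0, 0.5, 2.0]
sparse = top_k_sparsify(gradient, k=2)
print(sparse)
print(densify(sparse, len(gradient)))
```

The transmitted indices themselves can reveal information about the data, so sparsification should be treated as a performance measure and not as a privacy mechanism.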

Hardware acceleration through specialized cryptographic processors, GPU-based implementations, and custom silicon designed for privacy-preserving computation provides significant performance improvements for secure machine learning operations. Software optimizations, including algorithm approximation, batch processing, and parallel computation, further improve performance while maintaining security guarantees. Careful selection and tuning of cryptographic parameters against specific security requirements and performance constraints allows a system to be configured appropriately for each deployment scenario.

The relationship between security strength and system performance requires careful optimization to achieve practical deployments. Advanced techniques enable organizations to maximize security protections while maintaining acceptable performance characteristics through intelligent parameter selection and system architecture optimization.

Emerging Technologies and Future Directions

The future of secure AI communications continues to evolve with advances in quantum-resistant cryptography, novel privacy-preserving techniques, and specialized hardware architectures designed for secure computation. Quantum computing developments necessitate the migration to post-quantum cryptographic algorithms that can withstand attacks from quantum computers while maintaining practical performance characteristics. The integration of quantum key distribution and quantum-secure communication channels provides additional security capabilities for highly sensitive machine learning applications.

Emerging techniques such as fully homomorphic encryption with improved efficiency, advanced secure multi-party computation protocols, and novel differential privacy mechanisms promise to enhance the security and privacy capabilities of machine learning systems. The development of specialized hardware including privacy-preserving AI chips, secure computation accelerators, and trusted execution environments specifically designed for machine learning workloads will further advance the practical deployment of secure AI communications.

Industry Applications and Case Studies

Real-world implementations of secure AI communications demonstrate the practical viability and business value of advanced security measures in machine learning systems. Financial services organizations utilize federated learning with secure aggregation to develop fraud detection models while maintaining customer privacy and regulatory compliance. Healthcare institutions implement privacy-preserving machine learning techniques to enable collaborative research on sensitive medical data without exposing patient information.

Technology companies deploy secure model update mechanisms to protect proprietary algorithms while enabling distributed training across global infrastructure. Government agencies utilize encrypted machine learning systems for national security applications that require the highest levels of confidentiality and integrity protection. These implementations provide valuable insights into the practical challenges and solutions associated with deploying secure AI communications in production environments.

Best Practices and Implementation Guidelines

Successful implementation of secure AI communications requires adherence to established best practices and systematic approaches to security design and deployment. Organizations should conduct comprehensive threat modeling exercises to identify potential attack vectors and security requirements specific to their machine learning applications. The selection of appropriate cryptographic techniques should be based on detailed analysis of security requirements, performance constraints, and regulatory compliance obligations.

Security implementation should follow defense-in-depth principles that incorporate multiple layers of protection including network security, application-level encryption, secure key management, and comprehensive monitoring and incident response capabilities. Regular security assessments, penetration testing, and compliance audits help ensure that security measures remain effective against evolving threats. The development of specialized expertise through training and collaboration with security professionals ensures that organizations can effectively manage the complex security challenges associated with machine learning systems.

Conclusion and Strategic Implications

The security of AI communications represents a fundamental requirement for the continued advancement and deployment of machine learning systems across diverse industries and applications. The integration of advanced cryptographic techniques with machine learning architectures creates powerful capabilities for maintaining confidentiality, integrity, and availability while enabling innovative applications that leverage sensitive data and proprietary algorithms.

Organizations that proactively implement comprehensive security measures for their AI systems will gain competitive advantages through enhanced trust, regulatory compliance, and protection of valuable intellectual property. The continued evolution of secure AI communications technologies will enable new applications and business models that were previously impossible due to security and privacy constraints, driving further innovation and economic growth in the artificial intelligence sector.

Disclaimer

This article is for informational and educational purposes only and does not constitute professional security advice. The implementation of security measures in machine learning systems requires specialized expertise and should be undertaken with appropriate professional guidance. Organizations should conduct thorough risk assessments and consult with qualified security professionals before implementing cryptographic protections in production systems. The effectiveness of security measures may vary depending on specific threat models, implementation quality, and operational practices.