The deployment of artificial intelligence models in production environments represents a critical juncture where cutting-edge technology meets real-world security challenges. As organizations increasingly rely on AI systems to power mission-critical applications, the protection of these models during inference becomes paramount to maintaining operational integrity, safeguarding intellectual property, and ensuring compliance with regulatory frameworks. The landscape of AI security encompasses a complex web of potential vulnerabilities that span from model extraction attempts to adversarial attacks designed to manipulate inference outcomes.

The intersection of AI advancement and cybersecurity creates unique challenges that require specialized knowledge and proactive defensive measures to address effectively.

Understanding the AI Inference Threat Landscape

The production deployment of AI models introduces a multifaceted attack surface that differs significantly from traditional software security concerns. Unlike conventional applications where the primary focus centers on code vulnerabilities and data breaches, AI systems face unique threats that target the models themselves, their training data, and the inference process. These threats range from sophisticated model extraction techniques that attempt to reverse-engineer proprietary algorithms to subtle adversarial attacks designed to cause misclassification without detection.

Model extraction attacks represent one of the most significant concerns in production AI environments. Attackers can systematically query deployed models to reconstruct their decision boundaries, effectively stealing the intellectual property embedded within the model architecture and learned parameters. This process, also known as model stealing, can be executed through carefully crafted query sequences that gradually reveal the model’s internal logic. A related but distinct attack, model inversion, uses a model’s outputs to infer characteristics of its training data.

Adversarial attacks pose another critical threat vector, where maliciously crafted inputs are designed to fool AI models into producing incorrect outputs while appearing benign to human observers. These attacks can be particularly dangerous in high-stakes applications such as autonomous vehicle navigation, medical diagnosis systems, or financial fraud detection, where incorrect classifications can have severe real-world consequences.

Implementing Robust Authentication and Authorization

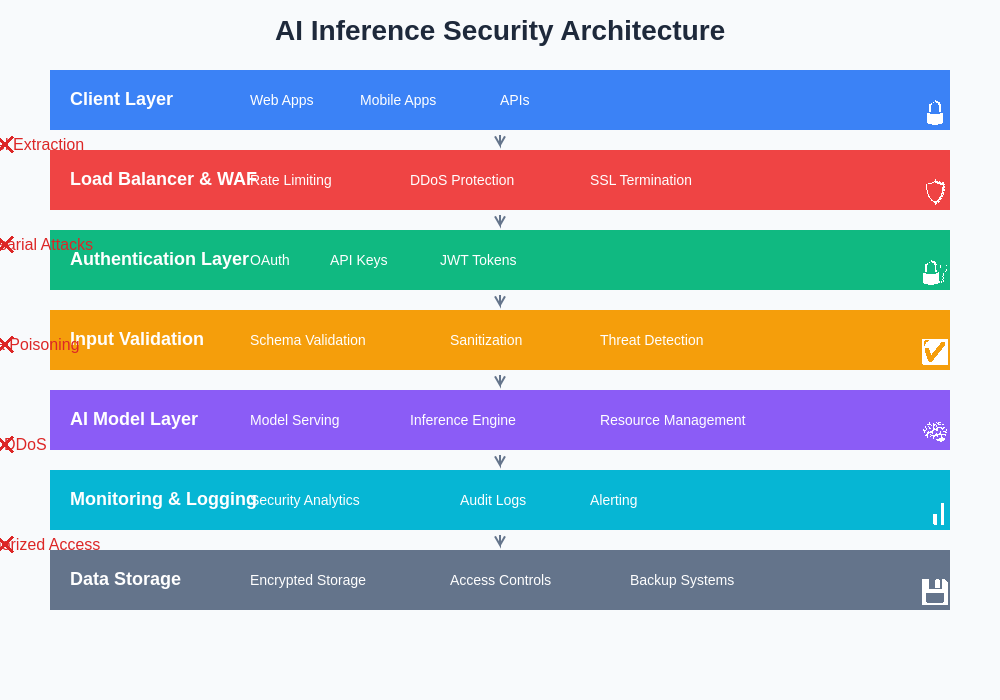

The foundation of secure AI inference is a set of authentication and authorization mechanisms that control access to model endpoints and ensure that only legitimate users and applications can interact with deployed models. Traditional role-based access control systems must be extended to account for the unique characteristics of AI workloads, including varying computational requirements, differing sensitivity levels across models, and the need to track and audit model usage patterns.

Layered authentication becomes particularly important in AI deployment scenarios where models may be accessed by both human users and automated systems. Human users can be protected with multi-factor authentication, while automated clients typically rely on API keys, OAuth tokens, and certificate-based authentication. Combining these mechanisms creates multiple layers of verification that significantly reduce the risk of unauthorized access. Additionally, the use of service mesh architectures can provide fine-grained control over inter-service communication, ensuring that model inference requests are properly authenticated and authorized at every step of the processing pipeline.
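As one illustration of key-based verification for automated clients, the sketch below signs each request with an HMAC over a timestamp and the request body, then checks it on the server with a constant-time comparison. The key store `API_KEYS`, the `MAX_SKEW_SECONDS` tolerance, and both function names are assumptions made for this example, not part of any particular framework.

```python
import hashlib
import hmac
import time

# Hypothetical in-memory key store; a real deployment would use a secrets manager.
API_KEYS = {"client-a": b"demo-secret-do-not-use"}
MAX_SKEW_SECONDS = 300  # assumed tolerance for clock drift and replayed requests


def sign_request(secret: bytes, body: bytes, timestamp: int) -> str:
    """Return a hex HMAC-SHA256 over the timestamp and the request body."""
    message = str(timestamp).encode() + b"." + body
    return hmac.new(secret, message, hashlib.sha256).hexdigest()


def verify_request(client_id, body, timestamp, signature, now=None):
    """Accept only known clients with a fresh timestamp and a matching signature."""
    secret = API_KEYS.get(client_id)
    if secret is None:
        return False
    now = int(time.time()) if now is None else now
    if abs(now - timestamp) > MAX_SKEW_SECONDS:
        return False
    expected = sign_request(secret, body, timestamp)
    # compare_digest avoids leaking how many leading characters matched.
    return hmac.compare_digest(expected, signature)
```

Binding the timestamp into the signature limits how long a captured request can be replayed; it does not replace transport encryption or per-model authorization checks.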

The integration of zero-trust security principles with AI inference pipelines creates robust defensive postures that assume no implicit trust and verify every request.

Securing Model Endpoints and Network Communications

Network security forms a critical component of AI inference protection, requiring the implementation of encrypted communications, secure API design, and comprehensive monitoring of network traffic patterns. Transport Layer Security (TLS) encryption should be mandatory for all model inference communications, protecting both the input data sent to models and the predictions returned to clients. The selection of appropriate cipher suites and regular certificate rotation helps maintain the integrity of encrypted communications over time.
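A minimal sketch of a server-side TLS configuration using Python's standard `ssl` module is shown here. The function name and its parameters are assumptions for illustration; the certificate paths are left to the caller, and the optional client CA enables mutual TLS for service-to-service calls.

```python
import ssl


def build_server_tls_context(certfile=None, keyfile=None, client_ca=None):
    """Build a server-side TLS context that refuses protocol versions below TLS 1.2."""
    ctx = ssl.create_default_context(ssl.Purpose.CLIENT_AUTH)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    if certfile:
        # Server certificate and private key presented to clients.
        ctx.load_cert_chain(certfile=certfile, keyfile=keyfile)
    if client_ca:
        # Optional mutual TLS: require clients to present a certificate
        # signed by the given certificate authority.
        ctx.load_verify_locations(cafile=client_ca)
        ctx.verify_mode = ssl.CERT_REQUIRED
    return ctx
```

The default context already selects a reasonable cipher list for the installed OpenSSL version, so this sketch only pins the minimum protocol version. Certificate rotation would be handled outside this function by rebuilding the context when new files are issued.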

API design considerations become particularly important when exposing AI models through web services or microservice architectures. Rate limiting mechanisms help prevent both denial-of-service attacks and systematic model extraction attempts by limiting the number of queries that individual users or IP addresses can make within specified time windows. The implementation of request validation and input sanitization helps prevent malicious inputs from reaching the model inference engine.
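Rate limiting per client can be sketched with a sliding window, as below. The class name and the choice of an in-memory store are assumptions for this example; a multi-instance deployment would typically need a shared store instead.

```python
import time
from collections import defaultdict, deque


class SlidingWindowRateLimiter:
    """Allow at most max_requests per client within any window_seconds span."""

    def __init__(self, max_requests, window_seconds):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._events = defaultdict(deque)  # client_id -> timestamps of accepted requests

    def allow(self, client_id, now=None):
        now = time.monotonic() if now is None else now
        events = self._events[client_id]
        # Drop timestamps that have aged out of the window.
        while events and now - events[0] >= self.window_seconds:
            events.popleft()
        if len(events) >= self.max_requests:
            return False
        events.append(now)
        return True
```

For example, `SlidingWindowRateLimiter(100, 60.0).allow("client-a")` returns `True` until that client has made 100 accepted requests within the last 60 seconds.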

Network segmentation strategies should isolate AI inference workloads from other system components, creating controlled environments where model serving occurs within protected network zones. The use of virtual private clouds, software-defined networking, and micro-segmentation technologies enables organizations to create secure enclaves for AI operations while maintaining necessary connectivity for legitimate use cases.

Defending Against Model Extraction Attacks

Model extraction attacks represent a sophisticated threat that requires multi-layered defensive strategies combining technical controls, monitoring systems, and legal protections. The implementation of query budget limitations helps prevent systematic extraction attempts by restricting the total number of queries that individual users can make over extended periods. These limitations must be carefully balanced to allow legitimate use cases while preventing abusive extraction attempts.
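Where a rate limit caps short bursts, a query budget caps cumulative usage over a longer period. The sketch below tracks a per-client total and charges batch requests by their size; the `QueryBudget` class and its fields are hypothetical, and resetting the counters at the end of a billing or review period is left out.

```python
from dataclasses import dataclass, field


@dataclass
class QueryBudget:
    """Track cumulative queries per client against a fixed limit."""

    limit: int
    used: dict = field(default_factory=dict)

    def charge(self, client_id, cost=1):
        """Record cost queries for the client, or refuse if it would exceed the limit."""
        spent = self.used.get(client_id, 0)
        if spent + cost > self.limit:
            return False
        self.used[client_id] = spent + cost
        return True

    def remaining(self, client_id):
        return self.limit - self.used.get(client_id, 0)
```

Choosing the limit is the balancing act described above: it should sit comfortably above the usage of legitimate clients and well below the query volume an extraction attempt would be expected to need.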

Output perturbation techniques, some of them inspired by differential privacy, can introduce controlled noise into model outputs, preserving the utility of predictions while making systematic extraction significantly more difficult. Options include adding small amounts of noise to confidence scores, rounding them, or returning only the top-ranked label. Careful calibration of the noise parameters aims to keep individual predictions accurate for legitimate users while degrading the quality of information available to attackers attempting to reconstruct the model.
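A simple form of controlled output noise is sketched below: Gaussian noise is added to each class score, the scores are renormalized, and the result is rounded. The function name, the `scale` value, and the rounding precision are assumptions chosen for illustration. This is plain perturbation and does not by itself provide a formal differential privacy guarantee.

```python
import random


def perturb_scores(probabilities, scale=0.02, decimals=2, rng=None):
    """Add small Gaussian noise to class scores, renormalize, and round."""
    rng = rng or random.Random()
    # Clamp at zero so noise cannot produce negative scores.
    noisy = {
        label: max(0.0, score + rng.gauss(0.0, scale))
        for label, score in probabilities.items()
    }
    total = sum(noisy.values()) or 1.0  # guard against an all-zero result
    return {label: round(score / total, decimals) for label, score in noisy.items()}
```

With a small `scale`, the top-ranked label of a confident prediction is very unlikely to change, while the low-order digits an attacker could use to fit a surrogate model become unreliable. Predictions near a decision boundary can flip, which is the accuracy cost to weigh.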

Query pattern analysis and anomaly detection systems provide crucial capabilities for identifying potential extraction attempts in real time. Machine learning algorithms can be trained to recognize suspicious patterns such as systematic exploration of input spaces, unusual query frequencies, or attempts to probe model decision boundaries. These detection systems enable rapid response to potential threats and can trigger automated defensive measures such as rate limiting or temporary access restrictions.
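Unusual query frequency is the easiest of these signals to sketch. The example below flags clients whose query count is far above the population median, using a robust z-score based on the median absolute deviation. The function name and the 3.5 threshold are assumptions; detecting systematic input-space exploration would need features beyond raw counts.

```python
import statistics


def flag_anomalous_clients(query_counts, threshold=3.5):
    """Return clients whose query volume is unusually high relative to their peers."""
    counts = list(query_counts.values())
    median = statistics.median(counts)
    mad = statistics.median([abs(c - median) for c in counts])
    if mad == 0:
        return []  # no spread in the data, so nothing stands out
    flagged = []
    for client, count in query_counts.items():
        # 0.6745 rescales the MAD so the score is comparable to a standard z-score.
        score = 0.6745 * (count - median) / mad
        if score > threshold:
            flagged.append(client)
    return flagged
```

The median-based score is used here instead of mean and standard deviation because a single heavy client would otherwise inflate the baseline it is being compared against.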

Implementing Input Validation and Sanitization

The protection of AI models during inference requires comprehensive input validation and sanitization mechanisms that prevent malicious data from reaching the model while preserving the integrity of legitimate inputs. Input validation must account for the specific characteristics of different data types, including image formats, text encodings, numerical ranges, and structured data schemas. The implementation of schema validation, format verification, and content filtering helps ensure that only properly formatted and safe inputs are processed by AI models.
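For a model that takes a fixed-length numeric vector, schema and range validation might look like the sketch below. `EXPECTED_FEATURES`, `FEATURE_RANGE`, and the payload shape are assumptions for a hypothetical model; image or text inputs would need their own format checks.

```python
EXPECTED_FEATURES = 4          # assumed input width of a hypothetical model
FEATURE_RANGE = (-10.0, 10.0)  # assumed valid range after normalization


class ValidationError(ValueError):
    """Raised when a request payload fails validation."""


def validate_features(payload):
    """Return the features as floats, or raise ValidationError."""
    if not isinstance(payload, dict) or "features" not in payload:
        raise ValidationError("payload must be an object with a 'features' field")
    features = payload["features"]
    if not isinstance(features, list) or len(features) != EXPECTED_FEATURES:
        raise ValidationError(f"expected a list of {EXPECTED_FEATURES} features")
    low, high = FEATURE_RANGE
    cleaned = []
    for value in features:
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(value, bool) or not isinstance(value, (int, float)):
            raise ValidationError("features must be numeric")
        # NaN and infinities fail this comparison and are rejected here too.
        if not (low <= value <= high):
            raise ValidationError("feature value out of range")
        cleaned.append(float(value))
    return cleaned
```

Rejecting malformed requests before they reach the inference engine also keeps error handling out of the model-serving code path.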

Adversarial input detection represents a specialized form of input validation that attempts to identify potentially malicious inputs designed to cause model misclassification. Statistical analysis techniques, ensemble methods, and specialized detection models can be employed to identify inputs that exhibit characteristics consistent with adversarial examples. The integration of these detection capabilities into inference pipelines enables real-time filtering of suspicious inputs before they reach production models.

Data preprocessing and normalization steps provide additional opportunities to neutralize potential threats while maintaining model performance. Techniques such as input smoothing, feature scaling, and outlier detection can help reduce the effectiveness of adversarial attacks while ensuring that legitimate inputs are properly processed. The careful design of preprocessing pipelines must balance security considerations with model accuracy requirements.
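One common form of input smoothing is precision reduction, sometimes called feature squeezing: values are clipped to a valid range and quantized so that very small perturbations collapse onto the same level. The sketch below assumes pixel intensities scaled to the range 0 to 1; the function name and default bit depth are illustrative.

```python
def squeeze_bit_depth(pixels, bits=4):
    """Clip values to [0, 1] and quantize them to 2**bits evenly spaced levels."""
    levels = (1 << bits) - 1
    return [round(min(1.0, max(0.0, p)) * levels) / levels for p in pixels]
```

For instance, with `bits=3` the inputs `0.300` and `0.305` both map to the same quantized value, so a perturbation of that size has no effect on what the model sees. Lower bit depths remove more perturbation but also more legitimate detail, which is the accuracy trade-off noted above.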

The comprehensive security architecture for AI inference systems integrates multiple defensive layers, from network security and authentication through input validation and output monitoring. This multi-layered approach ensures that potential threats are addressed at every stage of the inference process, creating robust protection against a wide range of attack vectors.

Monitoring and Logging for Security Analytics

Comprehensive monitoring and logging capabilities form the backbone of effective AI security operations, providing the visibility necessary to detect threats, investigate incidents, and maintain compliance with regulatory requirements. Model inference logging must capture sufficient detail to enable security analysis while respecting privacy requirements and avoiding the storage of sensitive information. The implementation of structured logging formats and centralized log management systems enables efficient analysis of model usage patterns and potential security incidents.
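A structured audit record that avoids storing the raw input could be sketched as follows. The field names and the logger name are assumptions for this example. The input is represented only by its SHA-256 digest and size, which supports correlation of repeated queries without retaining the content.

```python
import hashlib
import json
import logging
import time

logger = logging.getLogger("inference.audit")  # hypothetical logger name


def build_audit_record(client_id, model_version, raw_input, predicted_label, latency_ms):
    """Build a JSON-serializable record that excludes the raw input bytes."""
    return {
        "ts": time.time(),
        "client_id": client_id,
        "model_version": model_version,
        "input_sha256": hashlib.sha256(raw_input).hexdigest(),
        "input_bytes": len(raw_input),
        "predicted_label": predicted_label,
        "latency_ms": round(latency_ms, 2),
    }


def log_inference(**fields):
    """Emit one audit record as a single JSON line and return it."""
    record = build_audit_record(**fields)
    logger.info(json.dumps(record, sort_keys=True))
    return record
```

A plain hash of a short or predictable input can be reversed by guessing, so a keyed hash may be a better fit where inputs have low entropy.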

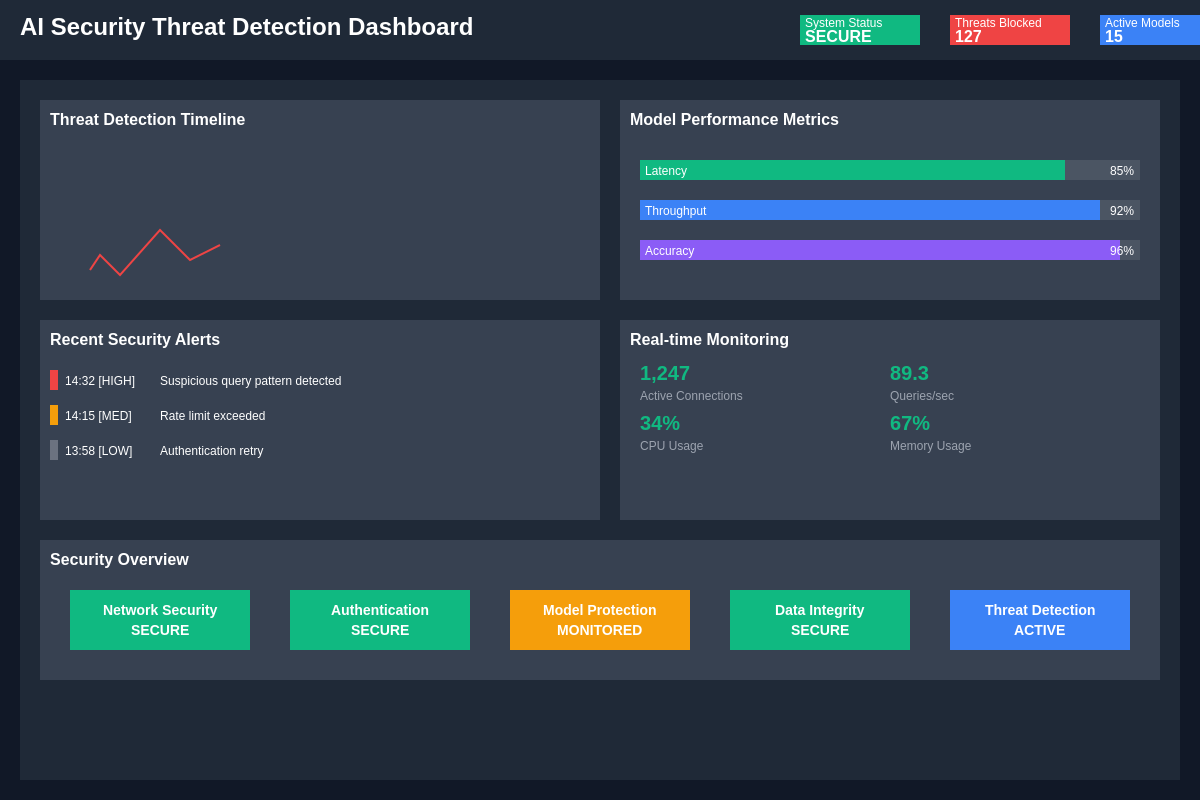

Security analytics platforms specifically designed for AI workloads can provide specialized capabilities for detecting anomalous behavior, identifying potential attacks, and correlating events across multiple system components. These platforms must be capable of processing high-volume inference logs while providing real-time alerting for critical security events. The integration of machine learning techniques into security analytics enables the detection of subtle patterns that might indicate sophisticated attack attempts.

Performance monitoring provides an additional dimension of security visibility, as many attacks against AI systems can be detected through changes in model behavior, response times, or resource utilization patterns. The establishment of baseline performance metrics enables the detection of deviations that might indicate security incidents or system compromise. Automated alerting mechanisms can notify security teams of significant performance anomalies that warrant investigation.
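Baseline deviation on a single metric can be sketched with a rolling window, as below. The class name, window size, and z-score threshold are assumptions; the same pattern could be applied to resource utilization or output-distribution statistics.

```python
import statistics
from collections import deque


class LatencyBaseline:
    """Flag latencies that deviate strongly from a rolling baseline."""

    def __init__(self, window=100, min_samples=10, z_threshold=3.0):
        self.samples = deque(maxlen=window)
        self.min_samples = min_samples
        self.z_threshold = z_threshold

    def observe(self, latency_ms):
        """Return True if the observation is anomalous relative to the baseline."""
        anomalous = False
        if len(self.samples) >= self.min_samples:
            mean = statistics.fmean(self.samples)
            stdev = statistics.pstdev(self.samples)
            if stdev > 0 and abs(latency_ms - mean) / stdev > self.z_threshold:
                anomalous = True
        # Anomalous values are kept out of the baseline so they cannot shift it.
        if not anomalous:
            self.samples.append(latency_ms)
        return anomalous
```

A `True` result would feed the alerting mechanism; whether it pages someone or only raises a ticket is a policy decision outside this sketch.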

The combination of specialized AI security tools with traditional cybersecurity platforms creates comprehensive visibility into potential threats.

Model Versioning and Secure Updates

The management of AI model versions in production environments requires careful consideration of security implications throughout the update lifecycle. Secure model deployment pipelines must include code signing, integrity verification, and controlled rollout mechanisms that ensure only authorized model updates are deployed to production systems. The implementation of cryptographic signatures for model files enables verification that models have not been tampered with during storage or transmission.

Blue-green deployment strategies and canary releases provide mechanisms for testing model updates in controlled environments before full production deployment. These approaches enable the detection of potential security issues or performance degradations in updated models while maintaining the ability to quickly roll back to previous versions if problems are discovered. The automation of security testing within deployment pipelines helps ensure that security validation occurs consistently for every model update.
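Canary routing can be made deterministic by hashing a stable request key, so a given client always sees the same model version during a rollout. The sketch below is one way to do this; the function name and the version labels `v1` and `v2` are placeholders.

```python
import hashlib


def route_model_version(request_key, canary_percent, stable="v1", canary="v2"):
    """Send roughly canary_percent of keys to the canary version, deterministically."""
    digest = hashlib.sha256(request_key.encode()).digest()
    # Map the first four bytes of the hash to a bucket from 0 to 99.
    bucket = int.from_bytes(digest[:4], "big") % 100
    return canary if bucket < canary_percent else stable
```

Setting `canary_percent` to 0 acts as an immediate rollback, and raising it in steps widens exposure as confidence in the new model grows.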

Model provenance tracking provides crucial capabilities for maintaining accountability and enabling forensic analysis in the event of security incidents. Comprehensive records of model training data, algorithms used, training environments, and deployment history enable security teams to understand the complete lifecycle of deployed models and identify potential points of compromise or vulnerability introduction.

Protecting Training Data and Model Artifacts

The security of AI inference systems extends beyond the runtime environment to include the protection of training data, model artifacts, and development infrastructure. Training data often contains sensitive information that could be exploited if accessed by unauthorized parties, making secure storage and access controls essential components of comprehensive AI security programs. Encryption at rest and in transit protects training datasets from unauthorized access while maintaining the ability to use this data for legitimate model development and validation purposes.

Model artifact security encompasses the protection of trained model files, configuration parameters, and associated metadata throughout their lifecycle. Secure storage systems with appropriate access controls ensure that model files cannot be accessed or modified by unauthorized parties. The implementation of integrity monitoring capabilities enables the detection of unauthorized changes to model artifacts that might indicate compromise or tampering.
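Integrity monitoring for stored artifacts can be approximated by recording a manifest of file hashes and comparing it against the current state, as sketched below. The function names are assumptions for this example, and the manifest itself would need to be stored somewhere an attacker with write access to the artifacts cannot also modify.

```python
import hashlib
from pathlib import Path


def hash_file(path, chunk_size=1 << 20):
    """Return the SHA-256 hex digest of a file, read in chunks to bound memory use."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(directory):
    """Map each file's path (relative to directory) to its SHA-256 digest."""
    root = Path(directory)
    return {
        str(p.relative_to(root)): hash_file(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def diff_manifest(baseline, current):
    """Report files added, removed, or modified since the baseline manifest."""
    return {
        "added": sorted(set(current) - set(baseline)),
        "removed": sorted(set(baseline) - set(current)),
        "modified": sorted(
            name for name in baseline.keys() & current.keys()
            if baseline[name] != current[name]
        ),
    }
```

Any non-empty entry in the diff for a production model directory would be a candidate for an alert, since model files are not expected to change outside a deployment.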

Development environment security plays a crucial role in preventing the introduction of vulnerabilities or malicious modifications during the model development process. Secure development practices, including code review, vulnerability scanning, and access controls, help ensure that models are developed in controlled environments that minimize the risk of security issues. The isolation of development and production environments prevents potential security incidents in development systems from affecting production AI services.

Compliance and Regulatory Considerations

The deployment of AI systems in production environments must account for an increasingly complex landscape of regulatory requirements and compliance frameworks that address data protection, algorithmic accountability, and security standards. Privacy regulations such as the General Data Protection Regulation (GDPR) and California Consumer Privacy Act (CCPA) impose specific requirements on how personal data is processed by AI systems, including requirements for data minimization, purpose limitation, and user rights management.

Industry-specific compliance requirements add further layers of complexity to AI security implementations. Organizations that process payment card data must meet the Payment Card Industry Data Security Standard (PCI DSS), which is an industry standard rather than a law, and financial services organizations are additionally subject to various banking regulations. Healthcare organizations in the United States must adhere to the Health Insurance Portability and Accountability Act (HIPAA) and similar privacy requirements. Integrating compliance controls into AI inference pipelines helps ensure that these requirements are met without compromising system performance or functionality.

Audit and reporting capabilities provide essential functions for demonstrating compliance with regulatory requirements and internal security policies. Comprehensive audit trails must capture sufficient detail to enable compliance verification while protecting sensitive information and maintaining system performance. The implementation of automated reporting mechanisms helps ensure that compliance documentation is generated consistently and accurately.

Real-time threat detection and monitoring capabilities provide security teams with comprehensive visibility into AI system behavior and potential security incidents. The integration of multiple detection mechanisms creates layered defenses that can identify various types of attacks and anomalous behavior patterns.

Incident Response for AI Security Events

The development of specialized incident response capabilities for AI security events requires consideration of unique characteristics that distinguish AI-related incidents from traditional cybersecurity events. AI security incidents may involve model performance degradation, adversarial attacks, data poisoning attempts, or model extraction activities that require specialized investigation techniques and response procedures. The establishment of clear incident classification frameworks helps security teams properly categorize and prioritize AI-related security events.

Forensic analysis capabilities for AI systems must account for the complex interactions between training data, model algorithms, and inference processes that can make root cause analysis challenging. Specialized tools and techniques for analyzing AI system behavior, model decision processes, and inference patterns provide crucial capabilities for understanding security incidents and implementing appropriate remediation measures. The preservation of evidence in AI security incidents requires careful consideration of large-scale data sets, model states, and system configurations.

Recovery and remediation procedures for AI security incidents may involve model retraining, data sanitization, system reconfiguration, and enhanced monitoring implementation. The development of predefined response playbooks helps ensure that security teams can respond effectively to common types of AI security incidents while maintaining system availability and minimizing business impact. Regular testing and simulation exercises help validate incident response procedures and identify areas for improvement.

Future-Proofing AI Security Architectures

The rapidly evolving landscape of AI technology and associated security threats requires the development of flexible security architectures that can adapt to emerging challenges and incorporate new defensive capabilities. The design of modular security frameworks enables organizations to update specific components of their AI security infrastructure without requiring complete system overhauls. The adoption of standardized security interfaces and protocols facilitates the integration of new security tools and technologies as they become available.

Research and development in AI security continues to produce new defensive techniques, threat detection methods, and security tools that can enhance the protection of production AI systems. Organizations must maintain awareness of emerging security research and evaluate new technologies for potential integration into their security architectures. The establishment of relationships with security research communities and vendors helps ensure access to cutting-edge security capabilities and threat intelligence.

The evolution of regulatory frameworks and industry standards for AI security will continue to shape the requirements for production AI deployments. Organizations must monitor regulatory developments and adjust their security architectures to meet new compliance requirements while maintaining operational efficiency. The proactive implementation of security capabilities that exceed current requirements helps ensure readiness for future regulatory changes.

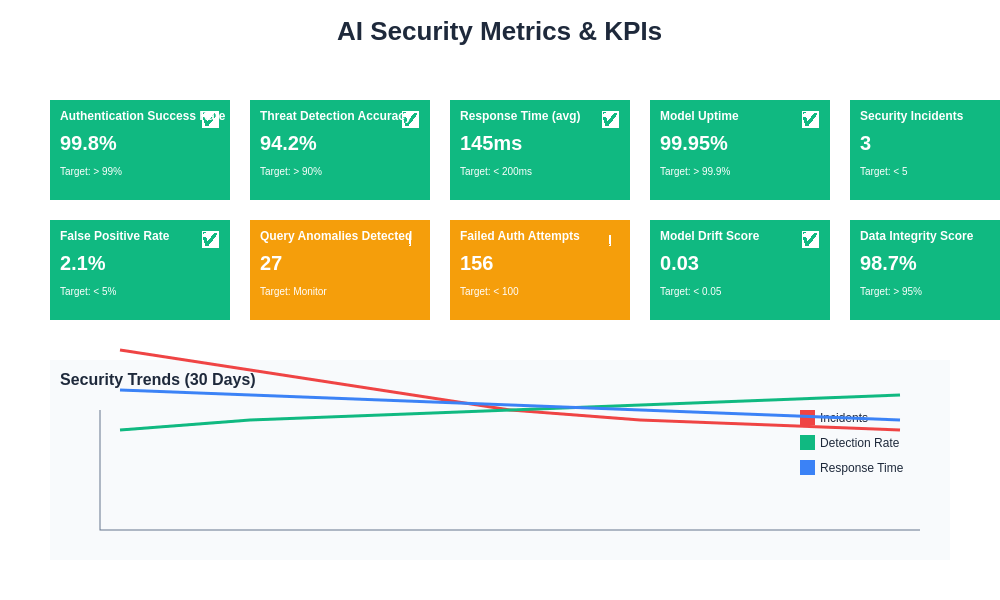

Comprehensive security metrics and key performance indicators provide essential visibility into the effectiveness of AI security measures and help organizations make informed decisions about security investments and improvements. The tracking of multiple security dimensions enables holistic assessment of AI security posture and identification of areas requiring attention.

Building a Comprehensive AI Security Program

The establishment of effective AI security requires the integration of technical controls, organizational processes, and governance frameworks that address the full spectrum of risks associated with production AI deployments. Security program development must account for the unique characteristics of AI workloads while leveraging established cybersecurity best practices and frameworks. The alignment of AI security initiatives with broader organizational security strategies ensures consistent risk management approaches and efficient resource utilization.

Training and awareness programs for development teams, operations personnel, and security staff help ensure that AI-specific security considerations are properly understood and implemented throughout the organization. Specialized training on AI threats, defensive techniques, and incident response procedures provides teams with the knowledge necessary to effectively protect AI systems. Regular security assessments and penetration testing exercises help validate the effectiveness of implemented security controls and identify areas for improvement.

The measurement and continuous improvement of AI security programs requires the establishment of appropriate metrics, monitoring capabilities, and feedback mechanisms that enable organizations to assess their security posture and make informed decisions about security investments. Regular review and updating of security policies, procedures, and technical controls helps ensure that AI security programs remain effective against evolving threats and changing business requirements.

The future of AI security lies in the development of adaptive, intelligent security systems that can automatically detect and respond to emerging threats while learning from each security event to improve future defensive capabilities. The integration of AI techniques into security operations creates opportunities for enhanced threat detection, automated incident response, and predictive security analytics that can anticipate and prevent security incidents before they occur.

Disclaimer

This article is for informational and educational purposes only and does not constitute professional security advice. The information provided reflects current understanding of AI security practices and should not be considered as definitive guidance for all situations. Organizations should conduct thorough risk assessments and consult with qualified security professionals when implementing AI security measures. The effectiveness of security controls may vary depending on specific deployment environments, threat models, and organizational requirements. Readers should stay informed about emerging threats and evolving best practices in AI security.