Combining Service Workers with machine learning lets web applications run AI features in the background of a page. Model loading, inference, and data processing can happen off the main thread, so the user interface stays responsive, and cached models keep working without a network connection. This approach makes it possible to build web applications that remain useful offline while still offering AI-driven insights and automated assistance.

The integration of Service Workers with machine learning frameworks creates a foundation for web applications that combine the accessibility of web technologies with advanced AI capabilities.

Understanding Service Workers in the AI Context

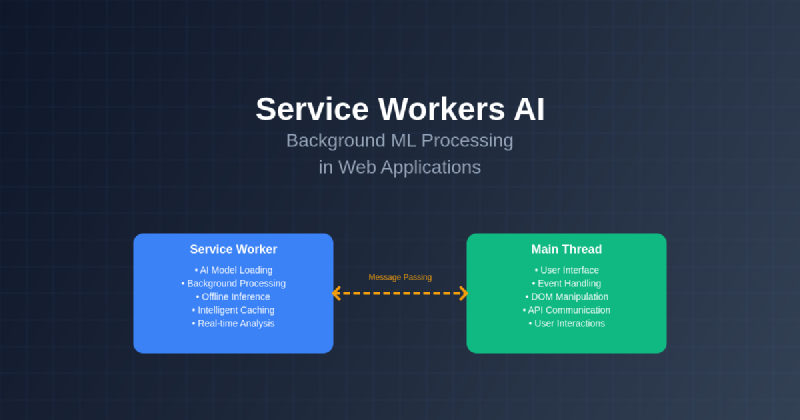

Service Workers function as programmable network proxies that sit between web applications and the network, giving developers fine-grained control over resource caching, background synchronization, and push notifications. Combined with AI capabilities, a Service Worker can act as an orchestrator that runs machine learning models, analyzes user behavior patterns, and makes predictive decisions in response to events such as fetches, messages, and pushes, without a persistent connection to external services.

The architectural advantages of implementing AI through Service Workers extend beyond simple background processing. A Service Worker runs on its own thread, separate from the page, so computationally intensive machine learning operations do not block rendering or input handling. This separation lets developers implement features such as image recognition, natural language processing, and predictive analytics while keeping the interface responsive. One caveat: browsers limit how long a Service Worker may run for a single event and can stop it when it is idle, so sustained heavy computation is often better delegated to a dedicated Web Worker.

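Service Workers are well suited to hosting AI models and processing logic that must be available across user sessions, with one important qualification. Unlike dedicated Web Workers, which are tied to the lifetime of the page that created them, a Service Worker is registered once for an origin and woken on demand, even when no tab is open (for example, on a push event). What persists, however, is the registration and whatever the worker has written to Cache Storage or IndexedDB. In-memory state such as a loaded model instance is lost whenever the browser stops the idle worker, so model files and cached inference results should be stored durably and reloaded when needed.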

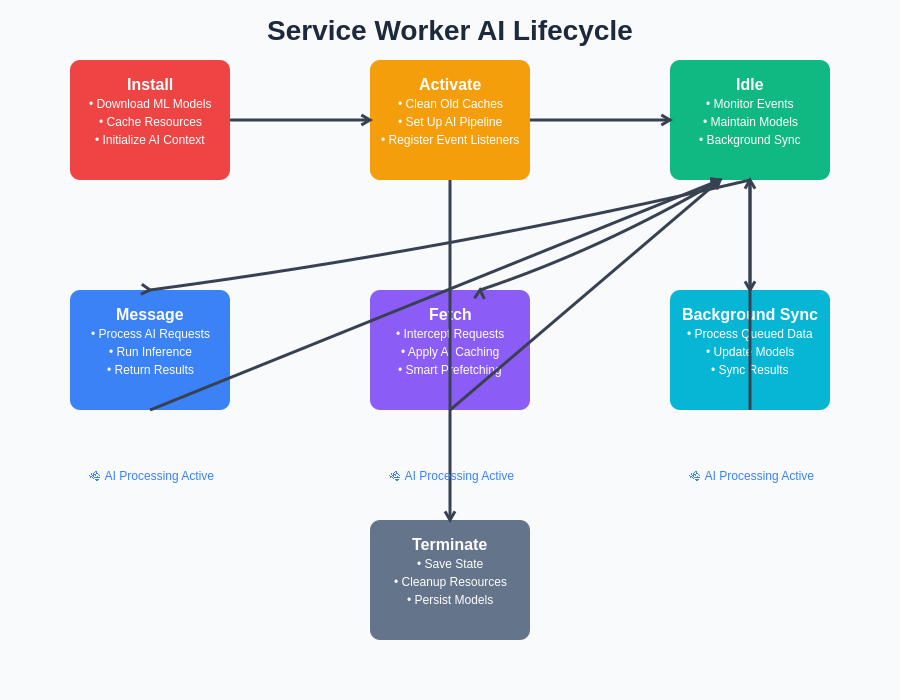

The Service Worker lifecycle provides several points for AI integration, from fetching and caching model files during installation, to cleaning up old versions during activation, to event-driven processing once the worker is active. Each phase suits a different part of the AI workflow and helps keep resource usage predictable.
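Browsers may stop an idle Service Worker and start a fresh instance for the next event, so in-memory state created in one lifecycle phase is not guaranteed to survive to the next. One way to cope, sketched below, is to load the model lazily on first use and memoize the promise for the lifetime of the current worker instance. `createLazyLoader` is a hypothetical helper, not part of any library, and the commented wiring assumes TensorFlow.js and a model path that this article does not specify.

```typescript
// Hypothetical helper: memoize an async load for the lifetime of one worker instance.
function createLazyLoader<T>(load: () => Promise<T>): () => Promise<T> {
  let pending: Promise<T> | null = null;
  return () => {
    if (pending === null) {
      pending = load().catch((err) => {
        pending = null; // forget the failure so the next event can retry
        throw err;
      });
    }
    return pending;
  };
}

// Possible wiring inside a worker (assumptions: TensorFlow.js is loaded and
// "/models/model.json" is where the model lives):
//
// const getModel = createLazyLoader(() => tf.loadGraphModel("/models/model.json"));
// self.addEventListener("message", (event) => {
//   event.waitUntil(getModel().then((model) => { /* run inference here */ }));
// });
```

Because the loader is rebuilt whenever the worker restarts, the first event after a restart pays the load cost again; caching the model files keeps that cost to a local read rather than a download.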

Implementing Machine Learning Models in Service Workers

Implementing machine learning models within Service Workers requires careful consideration of model size, processing requirements, and data flow patterns. Web ML frameworks such as TensorFlow.js and ONNX Runtime Web (the successor to the now-deprecated ONNX.js) can run in worker contexts, although the available backends vary by browser, so it is worth confirming that the chosen backend works inside a Service Worker. With them, developers can load pre-trained models or implement custom neural networks that run entirely in the browser, without external API calls or cloud-based processing services.

Loading machine learning models in Service Workers typically involves fetching model files during the installation phase and caching them for immediate availability during subsequent operations. This approach ensures that AI capabilities remain functional even in offline scenarios while minimizing the latency associated with model initialization and warm-up procedures. The caching strategy becomes particularly important when dealing with larger models that might take significant time to download and initialize.
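The install-time caching described above can be sketched as follows. The cache naming scheme, the version string, and the `CacheLike` interfaces are assumptions made for illustration; in a real worker the global `caches` object would be passed in as the storage argument.

```typescript
// Minimal structural types so the sketch is self-contained; the browser's
// CacheStorage and Cache objects satisfy them.
interface CacheLike {
  addAll(urls: string[]): Promise<void>;
}
interface CacheStorageLike {
  open(name: string): Promise<CacheLike>;
}

const CACHE_PREFIX = "ml-model-"; // hypothetical naming scheme

function cacheNameFor(version: string): string {
  return CACHE_PREFIX + version;
}

// Model caches left over from earlier versions, safe to delete on activation.
function staleCacheNames(existing: string[], currentVersion: string): string[] {
  const current = cacheNameFor(currentVersion);
  return existing.filter((name) => name.indexOf(CACHE_PREFIX) === 0 && name !== current);
}

// Download every model file into a versioned cache.
async function precacheModel(
  storage: CacheStorageLike,
  version: string,
  urls: string[]
): Promise<void> {
  const cache = await storage.open(cacheNameFor(version));
  await cache.addAll(urls);
}

// Possible wiring (MODEL_VERSION and MODEL_URLS are placeholders):
//
// self.addEventListener("install", (event) => {
//   event.waitUntil(precacheModel(caches, MODEL_VERSION, MODEL_URLS));
// });
// self.addEventListener("activate", (event) => {
//   event.waitUntil(caches.keys().then((names) =>
//     Promise.all(staleCacheNames(names, MODEL_VERSION).map((n) => caches.delete(n)))));
// });
```

Passing the install promise to `waitUntil` means the worker only becomes installed once every model file has been cached, so a partially downloaded model is never served.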

Model optimization becomes crucial when implementing AI in Service Workers due to the resource constraints and performance expectations of web environments. Techniques such as model quantization, pruning, and knowledge distillation help reduce model size and computational requirements while maintaining acceptable accuracy levels. These optimizations ensure that AI processing can occur smoothly on a wide range of devices, from high-end desktop computers to resource-constrained mobile devices.
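To make the size saving concrete, here is a minimal sketch of linear 8-bit quantization, which stores each 32-bit weight as a single byte plus a shared scale and offset. It illustrates the idea only; it is not the converter used by TensorFlow.js or any other framework, and the function names are made up for this example.

```typescript
interface QuantizedTensor {
  data: Uint8Array; // one byte per weight instead of four
  scale: number;    // width of one quantization step
  min: number;      // value represented by byte 0
}

function quantize(weights: Float32Array): QuantizedTensor {
  let min = 0;
  let max = 0;
  if (weights.length > 0) {
    min = Infinity;
    max = -Infinity;
    for (let i = 0; i < weights.length; i++) {
      if (weights[i] < min) min = weights[i];
      if (weights[i] > max) max = weights[i];
    }
  }
  // Guard against a zero range (all weights equal) to avoid dividing by zero.
  const scale = max > min ? (max - min) / 255 : 1;
  const data = new Uint8Array(weights.length);
  for (let i = 0; i < weights.length; i++) {
    const q = Math.round((weights[i] - min) / scale);
    data[i] = Math.min(255, Math.max(0, q));
  }
  return { data, scale, min };
}

function dequantize(tensor: QuantizedTensor): Float32Array {
  const out = new Float32Array(tensor.data.length);
  for (let i = 0; i < tensor.data.length; i++) {
    out[i] = tensor.data[i] * tensor.scale + tensor.min;
  }
  return out;
}
```

The reconstruction error for each weight is at most half a quantization step, which is the accuracy cost paid for a roughly fourfold reduction in download and cache size.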

Background Data Processing and Analysis

Service Workers are a good fit for data processing pipelines that analyze user interactions, process incoming data, and generate insights without interrupting the primary application flow. Because a Service Worker runs only in response to events, such pipelines work best as short bursts of processing triggered by messages, fetches, or sync events rather than as a continuously running loop. This still enables features such as behavioral pattern recognition, anomaly detection, and predictive content recommendation that operate transparently while users interact with the application.

The event-driven architecture of Service Workers aligns perfectly with AI processing workflows that need to respond to various triggers such as new data arrival, scheduled analysis intervals, or specific user actions. Developers can implement intelligent background processes that monitor application state changes, analyze accumulated data, and proactively prepare AI-generated content or recommendations that enhance user experience through predictive assistance.
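A minimal sketch of this event-driven style is a message router that reacts to typed messages from the page. The message shapes and the `createRouter` helper are invented for illustration, and the in-memory counts would need to be persisted (for example in IndexedDB) to survive a worker restart.

```typescript
// Hypothetical message protocol between the page and the worker.
type WorkerMessage =
  | { type: "interaction"; itemId: string }
  | { type: "summarize" };

interface Summary {
  total: number;
  topItem: string | null;
}

function createRouter(): (msg: WorkerMessage) => Summary | null {
  const counts = new Map<string, number>();
  return (msg) => {
    switch (msg.type) {
      case "interaction":
        // Cheap bookkeeping on every user action.
        counts.set(msg.itemId, (counts.get(msg.itemId) ?? 0) + 1);
        return null;
      case "summarize": {
        // Heavier analysis only when explicitly triggered.
        let total = 0;
        let best = 0;
        let topItem: string | null = null;
        counts.forEach((count, itemId) => {
          total += count;
          if (count > best) {
            best = count;
            topItem = itemId;
          }
        });
        return { total, topItem };
      }
    }
  };
}

// Possible wiring inside a worker:
//
// const route = createRouter();
// self.addEventListener("message", (event) => {
//   const result = route(event.data as WorkerMessage);
//   if (result) event.source?.postMessage(result);
// });
```

Keeping the routing logic in a plain function, separate from the event listener, also makes it easy to unit test outside the browser.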

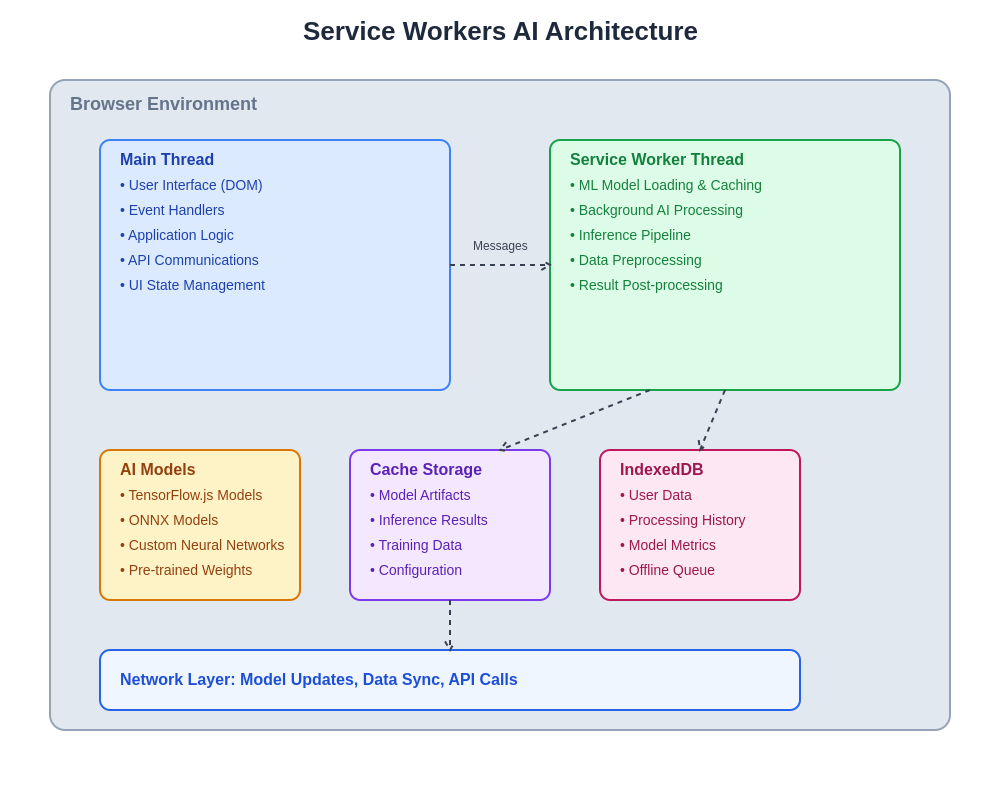

Data persistence and management within Service Workers require thoughtful design to balance storage efficiency with processing performance. IndexedDB integration enables Service Workers to maintain large datasets for training or inference while implementing intelligent caching strategies that optimize memory usage and processing speed. This local data management capability is essential for AI applications that need to maintain user privacy by processing sensitive information entirely within the browser environment.

The comprehensive architecture of Service Workers AI demonstrates the intricate relationships between different system components, from the main thread user interface to the background AI processing pipeline. This separation of concerns ensures optimal performance while maintaining the flexibility to implement sophisticated machine learning workflows entirely within the browser environment.

Real-Time Inference and Decision Making

The ability to perform real-time inference within Service Workers transforms how web applications can respond to user inputs and environmental changes. Machine learning models operating in the background can continuously analyze incoming data streams, user behavior patterns, and contextual information to make intelligent decisions that enhance application functionality without requiring explicit user commands or external processing delays.

Implementation of real-time inference systems requires careful optimization of model architecture and inference pipelines to ensure that processing occurs within acceptable time bounds while maintaining accuracy standards. Techniques such as model sharding, progressive inference, and result caching help achieve the performance characteristics necessary for responsive AI-powered web applications that feel natural and immediate to users.
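The article does not define progressive inference, so the sketch below takes one common reading: run a small, fast model first and escalate to a larger one only when the result is not confident enough. The `Stage` type and the threshold value are assumptions for illustration, and the stages are plain functions standing in for real models.

```typescript
interface Prediction {
  label: string;
  confidence: number; // between 0 and 1
}

// A stage stands in for one model, ordered from cheapest to most expensive.
type Stage<I> = (input: I) => Prediction;

function progressiveInfer<I>(
  stages: Stage<I>[],
  input: I,
  threshold: number
): { prediction: Prediction; stagesRun: number } {
  if (stages.length === 0) {
    throw new Error("at least one stage is required");
  }
  let prediction = stages[0](input);
  let stagesRun = 1;
  // Escalate only while the answer is uncertain and a bigger model remains.
  while (prediction.confidence < threshold && stagesRun < stages.length) {
    prediction = stages[stagesRun](input);
    stagesRun += 1;
  }
  return { prediction, stagesRun };
}
```

With a threshold of 0.8, for example, inputs the small model handles confidently never touch the large model, which keeps typical latency close to that of the small model alone.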

The decision-making capabilities enabled by Service Workers AI extend beyond simple classification or prediction tasks to encompass complex workflow automation and intelligent resource management. AI models can make autonomous decisions about content prefetching, resource allocation, and user interface adaptations based on learned patterns and real-time analysis of application state and user context.
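As a small example of a learned prefetching decision, the sketch below counts observed page-to-page transitions and suggests a prefetch only when one destination is sufficiently likely. A first-order transition table is a deliberately simple stand-in for a trained model, and `NextPagePredictor` is a hypothetical class, not an existing API.

```typescript
class NextPagePredictor {
  // transitions.get(from).get(to) = number of times "to" followed "from"
  private transitions = new Map<string, Map<string, number>>();

  record(from: string, to: string): void {
    let row = this.transitions.get(from);
    if (row === undefined) {
      row = new Map<string, number>();
      this.transitions.set(from, row);
    }
    row.set(to, (row.get(to) ?? 0) + 1);
  }

  // Returns the most likely next page, or null if nothing is likely enough.
  predict(from: string, minProbability: number): string | null {
    const row = this.transitions.get(from);
    if (row === undefined) return null;
    let total = 0;
    let bestCount = 0;
    let best: string | null = null;
    row.forEach((count, page) => {
      total += count;
      if (count > bestCount) {
        bestCount = count;
        best = page;
      }
    });
    return total > 0 && bestCount / total >= minProbability ? best : null;
  }
}
```

A worker could call `predict` after each navigation and, when it returns a URL, fetch that URL into the cache ahead of time; the probability threshold limits wasted bandwidth on unlikely pages.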

Offline AI Capabilities and Model Synchronization

One of the most compelling advantages of Service Workers AI is that AI features can keep working offline: once the model files are cached locally, inference no longer depends on network connectivity. This offline capability requires a model synchronization strategy that balances local processing with the need to pick up updated models and data when connectivity is restored.

Model versioning and synchronization present unique challenges in Service Workers environments where multiple model versions might need to coexist during transition periods. Intelligent synchronization strategies can implement gradual model updates, A/B testing frameworks, and fallback mechanisms that ensure continuous service availability while incorporating improvements and updates from centralized model repositories.
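A gradual rollout with a fallback can be sketched with deterministic bucketing: each client is hashed into one of 100 buckets, and only clients below the rollout percentage receive the candidate model, provided it is already cached. The configuration shape and function names are assumptions, and the hash is an FNV-1a variant applied to UTF-16 code units rather than bytes.

```typescript
// FNV-1a style 32-bit hash over the string's UTF-16 code units.
function fnv1a(text: string): number {
  let hash = 0x811c9dc5;
  for (let i = 0; i < text.length; i++) {
    hash ^= text.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193);
  }
  return hash >>> 0; // force an unsigned 32-bit result
}

interface RolloutConfig {
  stable: string;         // version every client can fall back to
  candidate: string;      // version being rolled out
  rolloutPercent: number; // 0 to 100
}

function pickModelVersion(
  clientId: string,
  config: RolloutConfig,
  cachedVersions: string[]
): string {
  const bucket = fnv1a(clientId) % 100; // stable bucket in 0..99
  const inRollout = bucket < config.rolloutPercent;
  const candidateReady = cachedVersions.indexOf(config.candidate) !== -1;
  // Fall back to the stable model until the candidate is fully cached.
  return inRollout && candidateReady ? config.candidate : config.stable;
}
```

Because the bucket depends only on the client identifier, a given client sees a consistent model version across sessions, which is what makes A/B comparisons meaningful.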

The caching strategies for AI models and inference results require sophisticated algorithms that balance storage efficiency with performance optimization. Intelligent caching can predict which models and data will be needed based on usage patterns, preload relevant resources during idle periods, and implement efficient eviction policies that maintain optimal performance under storage constraints.
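One simple eviction policy is least-recently-used under a byte budget: when the stored models and results exceed the budget, drop the entries that have gone unused the longest until the total fits. The sketch below only plans the eviction; the entry shape and the budget are assumptions, and in a browser the budget could be derived from `navigator.storage.estimate()`.

```typescript
interface CacheEntry {
  key: string;      // for example, a cache name or model URL
  bytes: number;    // stored size
  lastUsed: number; // timestamp of the most recent use
}

// Returns the keys to delete, oldest first, so the rest fit in the budget.
function planEviction(entries: CacheEntry[], budgetBytes: number): string[] {
  let total = 0;
  for (const entry of entries) {
    total += entry.bytes;
  }
  const oldestFirst = entries.slice().sort((a, b) => a.lastUsed - b.lastUsed);
  const evict: string[] = [];
  for (let i = 0; i < oldestFirst.length && total > budgetBytes; i++) {
    evict.push(oldestFirst[i].key);
    total -= oldestFirst[i].bytes;
  }
  return evict;
}
```

Separating the plan from the deletion keeps the policy easy to test and lets the worker apply the result with whichever storage API holds the entries.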

Performance Optimization and Resource Management

Optimizing AI processing performance within Service Workers demands a comprehensive understanding of browser resource allocation, threading models, and memory management patterns. The isolated execution environment of Service Workers provides both opportunities and constraints that require specific optimization strategies to achieve optimal performance while maintaining system stability and responsiveness.

Memory management is particularly critical when implementing AI in Service Workers, because model weights, intermediate results, and cached data can consume a large share of the memory available to the worker. Releasing tensors explicitly (in TensorFlow.js, for example, with tf.tidy and tf.dispose), reusing buffers, and unloading models that are no longer needed help keep AI processing sustainable over long sessions without degrading system performance.

Balancing work between the Service Worker, the main application thread, and any dedicated Web Workers requires careful orchestration. Strategies such as dynamic workload distribution, priority-based task scheduling, and adaptive processing (for example, choosing a smaller model on slower devices) help optimize resource utilization while keeping the interface responsive and the AI pipeline efficient.

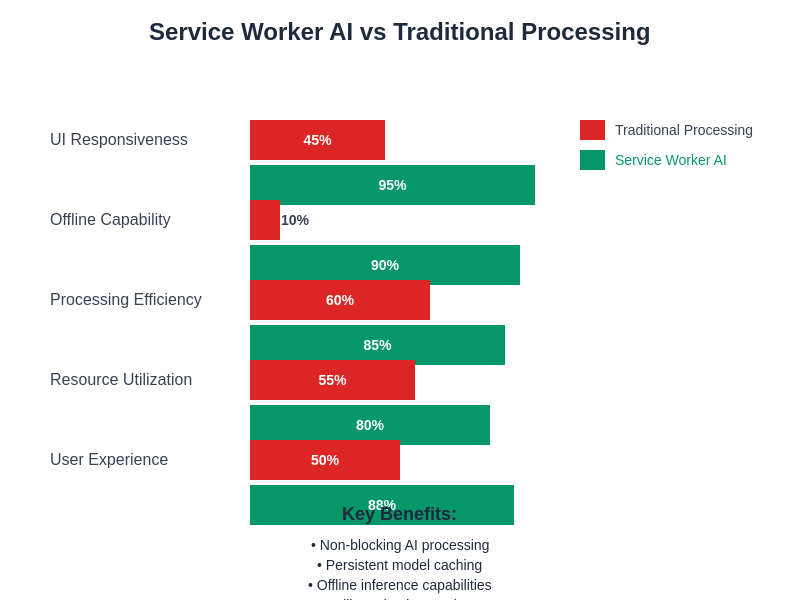

The performance advantages of Service Workers AI are clearest when key metrics are compared against traditional main-thread or server-side processing. Non-blocking background processing, local caching, and offline capability can improve responsiveness and perceived latency, although the size of the gain depends on the model, the device, and the browser.

Security and Privacy Considerations

Implementing AI within Service Workers introduces important security and privacy considerations that require careful attention to ensure user data protection and system integrity. The persistent nature and broad capabilities of Service Workers create potential security vectors that must be addressed through comprehensive security design and implementation practices.

Data privacy becomes particularly important when processing sensitive user information within AI models operating in Service Workers. Techniques such as differential privacy, federated learning, and on-device processing help maintain user privacy while enabling sophisticated AI capabilities that learn from user behavior and preferences without compromising sensitive information.
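To illustrate differential privacy in this setting, the sketch below adds Laplace noise to a count before it leaves the device, using the standard mechanism with noise scale equal to sensitivity divided by epsilon. The function names and default values are assumptions, and `Math.random` is used only for brevity: it is not a cryptographically secure source, so a production system would need a vetted implementation.

```typescript
// Draw Laplace(0, scale) noise by inverting the CDF of a uniform sample.
function laplaceNoise(scale: number, random: () => number): number {
  const u = random() - 0.5; // uniform in [-0.5, 0.5)
  // Clamp away from 0.5 so the logarithm below stays finite.
  const magnitude = Math.min(Math.abs(u), 0.5 - 1e-12);
  const sign = u < 0 ? -1 : 1;
  return -scale * sign * Math.log(1 - 2 * magnitude);
}

// Smaller epsilon means more noise and stronger privacy.
function privatizeCount(
  trueCount: number,
  epsilon: number,
  random: () => number = Math.random,
  sensitivity: number = 1
): number {
  if (epsilon <= 0) {
    throw new Error("epsilon must be positive");
  }
  return trueCount + laplaceNoise(sensitivity / epsilon, random);
}
```

A worker could apply `privatizeCount` to aggregated interaction counts before syncing them to a server, so that any single user action has only a bounded influence on what is reported.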

Model security and integrity verification ensure that AI models operating within Service Workers haven’t been tampered with or compromised during download, storage, or execution phases. Cryptographic verification, secure model distribution, and runtime integrity checking help maintain the security and reliability of AI-powered web applications.
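One way to check integrity after download is to compare a SHA-256 digest of the model bytes against a known-good value published through a trusted channel. In the sketch below the digest function is injected so the code stays self-contained; in a browser or worker it would typically wrap `crypto.subtle.digest`. The function names and the source of the expected digest are assumptions.

```typescript
// A function that returns the SHA-256 digest of the given bytes.
type Sha256 = (data: Uint8Array) => Promise<ArrayBuffer>;

// Lowercase hexadecimal encoding, two characters per byte.
function toHex(bytes: Uint8Array): string {
  let hex = "";
  for (let i = 0; i < bytes.length; i++) {
    hex += (bytes[i] < 16 ? "0" : "") + bytes[i].toString(16);
  }
  return hex;
}

// Resolves to true only if the model bytes hash to the expected digest.
async function verifyModel(
  modelBytes: Uint8Array,
  expectedHex: string,
  sha256: Sha256
): Promise<boolean> {
  const digest = new Uint8Array(await sha256(modelBytes));
  return toHex(digest) === expectedHex.toLowerCase();
}

// Possible wiring in a worker (EXPECTED_DIGEST is a placeholder):
//
// const ok = await verifyModel(bytes, EXPECTED_DIGEST,
//   (data) => crypto.subtle.digest("SHA-256", data));
// if (!ok) throw new Error("model failed integrity check");
```

Running the check before the model is written to the cache means a corrupted or tampered download is rejected once, rather than being re-verified on every load.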

Integration with Modern Web APIs

Service Workers AI can draw on a range of modern web APIs. The Notifications API (through the worker's registration) supports intelligent alerts, the Push API can wake the worker when new data arrives, and Background Sync, where the browser supports it, can defer data processing until connectivity returns. Some APIs, such as WebRTC peer connections, are not exposed inside Service Workers, so real-time communication has to stay in the page, which can pass data to the worker with postMessage.

The WebAssembly integration capabilities of Service Workers enable deployment of high-performance AI libraries and models that achieve near-native execution speeds while maintaining the security and portability advantages of web technologies. This integration opens possibilities for implementing sophisticated AI algorithms that were previously impractical in web environments due to performance constraints.

Progressive Web App integration transforms Service Workers AI into comprehensive application platforms that combine the accessibility of web technologies with the capabilities of native applications. This integration enables AI-powered web applications that provide consistent, intelligent functionality across diverse devices and platforms while maintaining the advantages of web-based distribution and updates.

Development Tools and Debugging Strategies

Developing and debugging AI-powered Service Workers requires specialized tools and techniques that account for the unique challenges of background processing, model debugging, and performance optimization in isolated execution environments. Browser developer tools provide essential capabilities for monitoring Service Worker lifecycle, analyzing performance characteristics, and debugging AI processing pipelines.

Testing strategies for Service Workers AI must account for the complexity of background processing, offline scenarios, and long-running AI operations. Comprehensive testing approaches include unit testing for individual AI components, integration testing for Service Worker interactions, and end-to-end testing that validates complete AI workflows under various network and device conditions.

Performance profiling and optimization tools help developers identify bottlenecks, optimize resource usage, and ensure that AI processing meets performance requirements across diverse deployment environments. These tools provide insights into memory usage patterns, processing time distributions, and resource allocation efficiency that guide optimization efforts and architectural decisions.
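Processing time distributions are usually summarized with percentiles rather than averages, because a few slow inferences can dominate the user experience. The sketch below computes nearest-rank percentiles over recorded latencies; the function names are made up for this example, and in a worker the samples could be collected with `performance.now()` around each inference call.

```typescript
// Nearest-rank percentile: the smallest sample with at least p% of samples at or below it.
function percentile(samples: number[], p: number): number {
  if (samples.length === 0) {
    throw new Error("no samples recorded");
  }
  if (p <= 0 || p > 100) {
    throw new Error("p must be in the range (0, 100]");
  }
  const sorted = samples.slice().sort((a, b) => a - b);
  const rank = Math.ceil((p / 100) * sorted.length);
  return sorted[rank - 1];
}

interface LatencySummary {
  p50: number;
  p95: number;
  max: number;
}

function summarizeLatencies(samplesMs: number[]): LatencySummary {
  return {
    p50: percentile(samplesMs, 50),
    p95: percentile(samplesMs, 95),
    max: percentile(samplesMs, 100),
  };
}
```

Tracking the 95th percentile alongside the median makes regressions on slower devices visible even when typical latency looks unchanged.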

Future Directions and Emerging Technologies

The future of Service Workers AI promises exciting developments in areas such as federated learning, edge computing integration, and advanced model architectures that will further enhance the capabilities and performance of web-based AI applications. Emerging technologies such as WebGPU and advanced WebAssembly features will unlock new levels of performance and capability for AI processing in web environments.

The evolution toward more sophisticated AI architectures, including transformer models and advanced neural networks, will require continued innovation in optimization techniques and resource management strategies. These developments will enable web applications to implement AI capabilities that rival native applications while maintaining the accessibility and cross-platform advantages of web technologies.

Integration with emerging web standards and APIs will continue to expand the possibilities for Service Workers AI, enabling new categories of applications and use cases that leverage the unique advantages of web-based AI processing. The convergence of AI, web technologies, and edge computing represents a fundamental shift toward more intelligent, responsive, and capable web applications that provide unprecedented value to users and developers alike.

The democratization of AI through web technologies, enabled by Service Workers and related technologies, promises to accelerate innovation and enable broader access to sophisticated AI capabilities. This democratization will empower developers of all skill levels to create intelligent web applications that were previously accessible only to organizations with significant AI expertise and infrastructure resources.

Disclaimer

This article is for informational purposes only and does not constitute professional advice. The technologies and techniques described are based on current web standards and AI development practices, which continue to evolve rapidly. Readers should conduct their own research and testing to ensure compatibility with their specific requirements and deployment environments. Performance and capabilities may vary depending on browser support, device specifications, and implementation details.