Audio design is changing as artificial intelligence systems become able to generate, manipulate, and synthesize sound effects. The shift affects how sound designers, game developers, filmmakers, and content creators build auditory experiences: models trained on recorded audio learn how physical phenomena relate to the sounds they produce, which makes it possible to create complex soundscapes and realistic audio effects algorithmically.

The integration of machine learning models into audio production workflows represents more than just technological advancement; it embodies a paradigm shift that democratizes high-quality sound design while expanding the creative possibilities available to artists and developers across all media formats.

The Evolution of Digital Audio Creation

Traditional sound effect creation has long been a labor-intensive process requiring specialized equipment, extensive recording sessions, and meticulous post-production work to achieve the desired auditory results. Sound designers would spend countless hours in recording studios capturing real-world sounds, manipulating existing audio libraries, or using complex synthesis techniques to create the perfect audio elements for their projects. This conventional approach, while producing excellent results, often presented significant barriers to entry for independent creators and smaller production teams due to the substantial time investment and technical expertise required.

The emergence of AI-powered sound generation has revolutionized this landscape by providing intelligent systems capable of understanding audio characteristics, analyzing sound patterns, and generating entirely new audio content based on textual descriptions, visual cues, or specific parameters. These advanced algorithms can synthesize realistic environmental sounds, create complex atmospheric textures, and produce dynamic audio effects that respond intelligently to changing conditions within interactive media applications.

Machine Learning Models in Audio Synthesis

The foundation of AI-powered sound effect generation rests upon sophisticated machine learning architectures that have been trained on vast datasets of audio samples, enabling them to understand the intricate relationships between different acoustic properties and their perceptual characteristics. These models utilize advanced techniques such as generative adversarial networks, variational autoencoders, and transformer architectures to learn the underlying patterns and structures that define different categories of sound effects.

Contemporary AI audio models can analyze the spectral characteristics of existing sounds, identify the features that define a particular audio category, and generate new variations that keep those essential qualities while introducing novel elements. In many cases the generated effects can be difficult to distinguish from traditionally recorded and processed sounds, and they offer fine-grained control over timing, intensity, and tonal characteristics.
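As a small illustration of what "spectral characteristics" means in practice, the sketch below computes one common descriptor, the spectral centroid, which roughly tracks how bright a sound is. It is a minimal example under stated assumptions, not how any particular model extracts features: it uses a naive discrete Fourier transform from the Python standard library, and the helper names (`magnitude_spectrum`, `spectral_centroid`, `sine`) are hypothetical.

```python
import cmath
import math


def magnitude_spectrum(samples):
    """Naive DFT magnitudes for bins 0..N/2 (O(N^2), fine for short frames)."""
    n = len(samples)
    mags = []
    for k in range(n // 2 + 1):
        acc = sum(
            samples[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)
        )
        mags.append(abs(acc))
    return mags


def spectral_centroid(samples, sample_rate):
    """Magnitude-weighted mean frequency in Hz; 0.0 for a silent frame."""
    mags = magnitude_spectrum(samples)
    total = sum(mags)
    if total == 0:
        return 0.0
    n = len(samples)
    return sum(k * sample_rate / n * m for k, m in enumerate(mags)) / total


def sine(freq, sample_rate, n):
    """Test tone: n samples of a unit-amplitude sine at freq Hz."""
    return [math.sin(2 * math.pi * freq * t / sample_rate) for t in range(n)]


if __name__ == "__main__":
    # A darker (500 Hz) and a brighter (2000 Hz) tone should report
    # centroids near their own frequencies.
    for freq in (500, 2000):
        frame = sine(freq, 8000, 256)
        print(freq, round(spectral_centroid(frame, 8000), 1))
```

A production system would use an FFT library and many more descriptors, but the idea is the same: reduce a frame of audio to numbers that a model can compare across sound categories.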

The synergy between human artistic vision and AI-powered generation capabilities creates an environment where innovative soundscapes emerge through collaborative creativity that transcends traditional production limitations.

Procedural Audio Generation for Interactive Media

The gaming industry has particularly benefited from AI-powered sound effect generation because interactive entertainment is dynamic: audio must respond to player actions, environmental changes, and narrative developments in real time. Traditional pre-recorded sound effects often struggle to provide the responsiveness and variation that immersive games require, leading to repetitive audio patterns that can diminish player engagement over extended play sessions.

AI-driven procedural audio systems address these limitations by generating contextually appropriate sound effects on demand, adapting their characteristics to game state variables, environmental conditions, and player behavior patterns. These systems can create realistic footstep variations based on surface materials, generate dynamic weather sounds that respond to changing atmospheric conditions, and produce combat audio that scales with action intensity while maintaining acoustic realism and emotional impact.
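To make the footstep example concrete, here is a minimal sketch of parameter-driven variation. It deliberately swaps the learned model for classical signal processing (a filtered noise burst with an exponential decay) so that it stays self-contained; the surface table, its values, and the `footstep` function are all hypothetical and chosen only for illustration.

```python
import math
import random

# Hypothetical per-surface settings: decay time in seconds and a one-pole
# low-pass smoothing factor (higher = duller, softer sound).
SURFACES = {
    "gravel": {"decay_s": 0.12, "smoothing": 0.2},
    "wood": {"decay_s": 0.06, "smoothing": 0.7},
    "grass": {"decay_s": 0.09, "smoothing": 0.9},
}


def footstep(surface, intensity=1.0, sample_rate=22050, seed=None):
    """Return one footstep as a list of float samples in [-1, 1]."""
    rng = random.Random(seed)
    cfg = SURFACES[surface]
    # Small random changes per call so repeated steps do not sound identical.
    decay_s = cfg["decay_s"] * rng.uniform(0.8, 1.2)
    gain = max(0.0, min(1.0, intensity)) * rng.uniform(0.85, 1.0)
    n = int(sample_rate * decay_s * 5)  # render about five time constants
    out, prev = [], 0.0
    for i in range(n):
        noise = rng.uniform(-1.0, 1.0)
        # One-pole low-pass filter shapes the noise colour for the surface.
        prev = cfg["smoothing"] * prev + (1.0 - cfg["smoothing"]) * noise
        envelope = math.exp(-i / (sample_rate * decay_s))
        out.append(gain * envelope * prev)
    return out


if __name__ == "__main__":
    for surface in SURFACES:
        step = footstep(surface, intensity=0.8, seed=42)
        print(surface, len(step), "samples")
```

The game engine would supply `surface` and `intensity` from its own state (ground material, movement speed). A learned generator could replace the noise-and-filter core while keeping the same interface.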

The implementation of machine learning models in game audio lets developers build large soundscapes without shipping a pre-recorded file for every variation, while offering far more variety than a fixed library can. This approach can reduce the memory footprint of audio assets, although the model itself must be stored and run during play, and it helps each player's experience feel unique and responsive to their individual gameplay patterns and preferences.

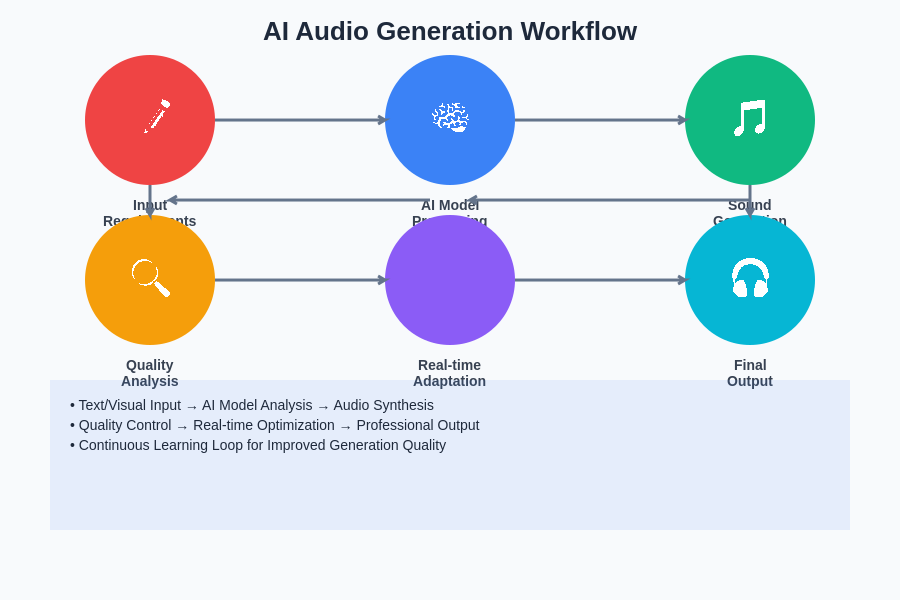

The sophisticated workflow of AI audio generation demonstrates the seamless integration of human creativity with machine intelligence, creating a comprehensive system that transforms initial requirements into polished audio content through multiple stages of processing, analysis, and refinement.

Automated Foley and Realistic Sound Recreation

Foley artistry, the traditional craft of creating sound effects through live performance and recording, has been significantly enhanced through AI-powered automation that can generate realistic recreations of common sound effects without requiring extensive recording sessions or specialized acoustic environments. Modern AI systems can analyze the visual characteristics of actions and automatically generate appropriate sound effects that match the timing, intensity, and acoustic properties expected for those specific movements or interactions.

These automated foley systems utilize computer vision algorithms to interpret visual content and generate corresponding audio effects that align with the perceived action, creating seamless synchronization between visual and auditory elements. The sophistication of these systems has advanced to the point where they can distinguish between different materials, surfaces, and object types, generating contextually appropriate sounds that enhance the realism and immersion of the overall media experience.

The integration of AI-powered foley generation into post-production workflows has streamlined the audio production process while maintaining the high quality standards expected in professional media production. Sound designers can now focus more on creative decision-making and artistic refinement rather than spending extensive time on routine sound matching and synchronization tasks.

Environmental Soundscape Creation

Creating convincing environmental soundscapes has traditionally required extensive field recording sessions, careful layering of multiple audio elements, and sophisticated mixing techniques to achieve realistic and immersive auditory environments. AI-powered soundscape generation has transformed this process by enabling the creation of complex environmental audio that adapts dynamically to changing conditions and provides rich, layered auditory experiences without requiring massive audio libraries or extensive manual composition.

Modern environmental audio AI can generate realistic nature sounds, urban atmospheres, and fantastical soundscapes that respond intelligently to time-of-day variations, weather conditions, and seasonal changes. These systems understand the relationship between different environmental factors and their acoustic manifestations, creating soundscapes that feel authentic and immersive while providing the flexibility needed for interactive and dynamic media applications.
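One simple way to picture how a soundscape "responds" to time of day and weather is a function that maps those conditions to mix levels for a set of ambience layers. The sketch below is an assumption-laden illustration, not a description of any specific product: the layer names, the daylight curve, and the weighting constants are all made up for the example.

```python
import math


def layer_gains(hour, rain):
    """Return a gain in [0, 1] for each ambience layer.

    hour: local time in the range 0 to 24.
    rain: 0.0 (dry) to 1.0 (downpour); values outside are clamped.
    """
    rain = max(0.0, min(1.0, rain))
    # Daylight rises from 06:00, peaks at noon, and is zero from 18:00 to 06:00.
    daylight = max(0.0, math.sin(math.pi * (hour - 6.0) / 12.0))
    return {
        "birds": daylight * (1.0 - 0.8 * rain),      # birds quieten in rain
        "crickets": (1.0 - daylight) * (1.0 - rain),  # night-time, dry only
        "rain": rain,
        "wind": 0.2 + 0.6 * rain,                     # light breeze baseline
    }


if __name__ == "__main__":
    for hour, rain in ((12, 0.0), (12, 1.0), (0, 0.0)):
        print(hour, rain, layer_gains(hour, rain))
```

A generative system would go further and synthesize the layers themselves, but a condition-to-parameter mapping like this is still a useful control surface on top of it.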

The ability to generate convincing environmental audio programmatically has opened new possibilities for creating vast, explorable worlds in games and virtual reality applications where traditional audio production methods would be prohibitively expensive or time-consuming.

Real-Time Audio Processing and Adaptation

The development of real-time AI audio processing has enabled responsive sound systems that can modify and adapt audio characteristics on the fly based on changing conditions, user preferences, or narrative requirements. These systems can analyze incoming audio streams, identify key characteristics, and apply appropriate modifications to enhance clarity, adjust emotional impact, or ensure compatibility with different playback environments and devices.

Real-time audio adaptation extends beyond simple equalization or compression, incorporating intelligent analysis of content characteristics, listener preferences, and environmental conditions to optimize the auditory experience for each specific situation. This technology has proven particularly valuable in applications such as podcast production, live streaming, and interactive entertainment where audio quality and appropriateness must be maintained across diverse listening conditions and audience preferences.

The implementation of machine learning models in real-time audio processing has also enabled the development of intelligent audio enhancement systems that can automatically remove background noise, enhance speech clarity, and optimize dynamic range for different listening environments. These capabilities have democratized access to professional-quality audio processing while reducing the technical expertise required to achieve excellent auditory results.
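For readers unfamiliar with the underlying operations, the sketch below shows the conventional, non-learned versions of two of them: a noise gate that mutes very quiet input and a compressor that narrows dynamic range above a threshold. It is a simplified static curve applied per sample, assuming hypothetical default thresholds; real processors smooth the level with attack and release times, and ML-based enhancers replace these fixed rules with learned ones.

```python
import math


def to_db(amplitude, floor_db=-120.0):
    """Convert a linear amplitude to decibels relative to full scale."""
    return 20.0 * math.log10(amplitude) if amplitude > 0 else floor_db


def process_sample(x, gate_db=-60.0, threshold_db=-20.0, ratio=4.0):
    """Apply a static noise gate and downward compressor to one sample."""
    level = to_db(abs(x))
    if level < gate_db:
        return 0.0  # below the gate: treat as background noise
    if level <= threshold_db:
        return x  # between gate and threshold: pass through unchanged
    # Above the threshold: every `ratio` dB of input yields 1 dB of output.
    out_db = threshold_db + (level - threshold_db) / ratio
    return math.copysign(10.0 ** (out_db / 20.0), x)


if __name__ == "__main__":
    for sample in (0.0005, 0.05, 0.5, -1.0):
        print(sample, "->", round(process_sample(sample), 4))
```

With the defaults shown, a full-scale input (0 dB) comes out at -15 dB, because the 20 dB above the threshold is reduced to 5 dB.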

Emotional and Contextual Audio Intelligence

Advanced AI audio systems can model the emotional and contextual implications of different sound characteristics, which lets them generate audio effects that support narrative impact and emotional resonance within media content. Such systems can analyze script content, visual cues, and contextual information to generate sound effects that fit the intended emotional journey and strengthen the storytelling.

The ability to understand emotional context has enabled AI audio systems to make intelligent decisions about timing, intensity, and tonal characteristics that support the overall artistic vision while maintaining technical excellence and acoustic realism. This level of contextual intelligence has proven particularly valuable in applications such as film scoring, game audio design, and interactive storytelling where sound effects must serve both practical and artistic functions.

Contemporary AI audio models can recognize emotional patterns in existing audio content and generate complementary effects that enhance the overall emotional impact without overwhelming or conflicting with other audio elements. This sophisticated understanding of audio psychology and perception has elevated the role of AI from simple sound generation to intelligent creative collaboration.

Integration with Digital Audio Workstations

The seamless integration of AI-powered sound generation tools into existing digital audio workstation workflows has been crucial for widespread adoption within professional audio production environments. Modern AI audio plugins and extensions provide intuitive interfaces that allow sound designers and music producers to leverage advanced machine learning capabilities without requiring extensive technical knowledge or disrupting established creative workflows.

These integrated solutions offer real-time generation capabilities, intelligent parameter suggestions, and contextual audio recommendations that enhance creativity while maintaining the familiar interface paradigms that audio professionals expect from their production tools. The ability to generate, modify, and refine AI-created audio effects within the same environment used for traditional audio production has eliminated many of the workflow disruptions that historically hindered the adoption of new audio technologies.

The development of standardized plugin formats and API interfaces for AI audio tools has also facilitated the creation of comprehensive audio production ecosystems where multiple AI-powered tools can work together seamlessly, sharing context and parameters to provide cohesive and intelligent audio generation capabilities across the entire production pipeline.

Quality Control and Audio Refinement

Ensuring consistent quality and artistic coherence in AI-generated audio effects requires sophisticated quality control mechanisms that can evaluate generated content against established standards while maintaining the creative flexibility that makes AI audio generation valuable. Modern AI audio systems incorporate intelligent quality assessment algorithms that can identify potential issues such as artifacts, inconsistencies, or inappropriate content characteristics before finalizing generated audio effects.

These quality control systems utilize multiple evaluation criteria including technical audio metrics, perceptual quality assessments, and contextual appropriateness analysis to ensure that generated content meets professional standards while supporting the intended creative vision. The implementation of intelligent feedback mechanisms also enables these systems to learn from user preferences and refine their generation capabilities over time.
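The "technical audio metrics" part of such a check is the easiest to show in code. The sketch below measures peak level, RMS level, DC offset, and the fraction of clipped samples, then flags a clip that falls outside some limits. The function names and every threshold are assumptions picked for illustration; perceptual and contextual checks are out of scope here.

```python
import math


def audio_metrics(samples, clip_level=0.999):
    """Basic technical metrics for samples normalised to [-1, 1]."""
    n = len(samples)
    if n == 0:
        raise ValueError("no samples to analyse")
    return {
        "peak": max(abs(s) for s in samples),
        "rms": math.sqrt(sum(s * s for s in samples) / n),
        "dc_offset": sum(samples) / n,
        "clipped_ratio": sum(1 for s in samples if abs(s) >= clip_level) / n,
    }


def qc_issues(samples, max_clipped=0.001, max_dc=0.01, min_rms=0.001):
    """Return a list of issue labels; an empty list means the clip passed."""
    m = audio_metrics(samples)
    issues = []
    if m["clipped_ratio"] > max_clipped:
        issues.append("clipping")
    if abs(m["dc_offset"]) > max_dc:
        issues.append("dc_offset")
    if m["rms"] < min_rms:
        issues.append("too_quiet")
    return issues


if __name__ == "__main__":
    # 55 whole cycles of a 440 Hz tone at 8 kHz, half amplitude.
    tone = [0.5 * math.sin(2 * math.pi * 440 * t / 8000) for t in range(1000)]
    print(audio_metrics(tone))
    print(qc_issues(tone))
```

A generation pipeline could run a check like this on every candidate and regenerate or discard clips that return any issues before a human ever listens to them.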

The development of automated quality refinement processes has enabled AI audio systems to iteratively improve generated content through multiple passes, applying intelligent filtering, enhancement, and optimization techniques that elevate the final audio quality while maintaining the unique characteristics that make AI-generated content valuable for creative applications.

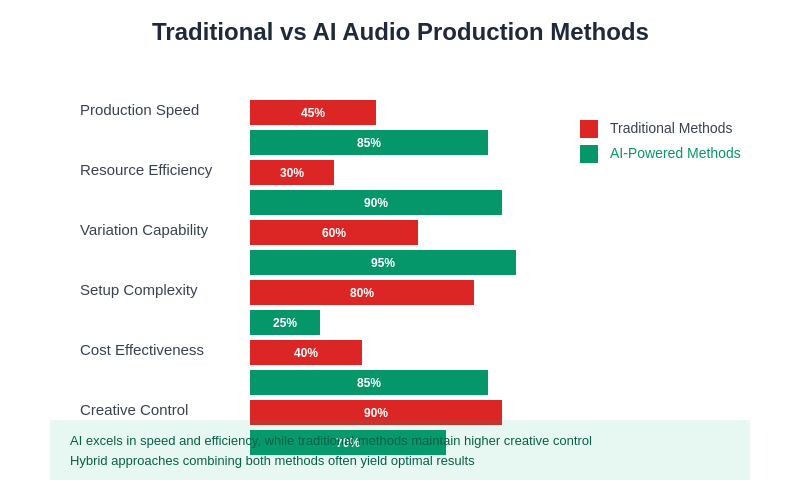

The comparative analysis reveals significant advantages of AI-powered audio production methods across multiple performance metrics, while highlighting areas where traditional approaches maintain their strengths in creative control and artistic expression.

Collaborative AI-Human Workflows

The most effective implementations of AI-powered sound effect generation recognize that artificial intelligence serves as a powerful creative tool rather than a replacement for human artistic judgment and expertise. Successful audio production workflows integrate AI capabilities in ways that enhance human creativity while preserving the essential role of artistic vision, emotional intelligence, and cultural understanding that human creators bring to the audio design process.

These collaborative workflows typically involve AI systems handling routine generation tasks, providing creative suggestions, and automating technical processes while human artists focus on high-level creative decisions, quality evaluation, and artistic refinement. This division of responsibilities allows for more efficient production processes while ensuring that final audio content maintains the emotional depth and cultural relevance that characterizes exceptional sound design.

The development of intelligent collaboration interfaces has made it easier for audio professionals to communicate their creative intentions to AI systems while receiving meaningful feedback and suggestions that enhance the overall creative process. These interfaces often incorporate natural language processing capabilities that allow creators to describe desired audio characteristics in intuitive terms rather than requiring technical parameter specifications.

Accessibility and Democratization of Sound Design

AI-powered audio generation has significantly lowered the barriers to entry for high-quality sound design, enabling independent creators, small development teams, and educational institutions to access professional-level audio creation capabilities without requiring substantial investments in equipment, software, or specialized training. This democratization of sound design technology has fostered innovation and creativity across diverse creative communities that previously lacked access to advanced audio production resources.

The availability of intuitive AI audio tools has also enabled creators from other disciplines to incorporate sophisticated sound design into their projects without requiring extensive audio engineering expertise. This cross-disciplinary accessibility has led to innovative applications of audio technology in fields such as interactive art, educational content, and experimental media where traditional audio production approaches might be impractical or prohibitively expensive.

Educational applications of AI audio generation have proven particularly valuable for teaching sound design principles, audio engineering concepts, and media production techniques. Students can experiment with complex audio creation processes and immediately hear the results of their creative decisions without requiring access to expensive recording equipment or professional studio environments.

Technical Challenges and Future Developments

Despite significant advances in AI-powered audio generation, several technical challenges remain open and continue to drive research and development. These include maintaining consistent quality across diverse audio categories, integrating cleanly with existing production workflows, and giving creators sufficient control while still benefiting from AI automation.

The computational requirements for real-time AI audio generation also present ongoing challenges, particularly for applications that require low-latency processing or operation on resource-constrained devices. Ongoing research into model optimization, efficient architectures, and specialized hardware acceleration continues to address these limitations while expanding the practical applications for AI audio technology.
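The latency constraint can be stated with simple arithmetic: audio is processed in buffers, and each buffer must be produced before the previous one finishes playing. The sketch below works through that budget; the function names are hypothetical and the inference time used in the example is an assumed figure, not a measurement.

```python
def buffer_latency_ms(buffer_size, sample_rate):
    """Duration of one audio buffer in milliseconds."""
    return 1000.0 * buffer_size / sample_rate


def meets_realtime(inference_ms, buffer_size, sample_rate):
    """True if the model finishes a buffer before the next one is due."""
    return inference_ms < buffer_latency_ms(buffer_size, sample_rate)


if __name__ == "__main__":
    assumed_inference_ms = 8.0  # assumption for illustration only
    for size in (256, 512, 1024):
        budget = buffer_latency_ms(size, 48000)
        ok = meets_realtime(assumed_inference_ms, size, 48000)
        print(f"{size} samples: budget {budget:.2f} ms, real-time: {ok}")
```

At 48 kHz a 512-sample buffer lasts about 10.7 ms, so a model that needs 8 ms per buffer fits, while the same model misses the roughly 5.3 ms budget of a 256-sample buffer. Larger buffers relax the compute budget but add delay, which is the trade-off that model optimization and hardware acceleration aim to ease.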

Future developments in AI audio generation are likely to focus on enhanced contextual understanding, improved emotional intelligence, and more sophisticated integration with other AI systems such as computer vision and natural language processing. These advances will enable the creation of more comprehensive and intelligent media production systems that can understand and respond to complex creative requirements across multiple modalities.

Industry Applications and Market Impact

The commercial applications of AI-powered sound effect generation span numerous industries including gaming, film production, advertising, podcast creation, and interactive media development. Each industry presents unique requirements and challenges that have driven specialized developments in AI audio technology while contributing to the overall advancement of the field through diverse use cases and feedback from professional users.

The gaming industry has emerged as a particularly strong driver of AI audio innovation due to the demanding requirements for dynamic, responsive, and varied audio content that must adapt to unpredictable player behavior and changing game states. Film and television production have also embraced AI audio tools for streamlining post-production workflows and reducing the costs associated with traditional foley and sound effect creation.

The advertising and marketing industries have found AI audio generation particularly valuable for creating custom soundtracks and audio branding elements that can be quickly adapted for different campaigns, markets, and media formats. This flexibility and responsiveness have made AI audio tools essential components of modern advertising production workflows where speed and customization are critical competitive advantages.

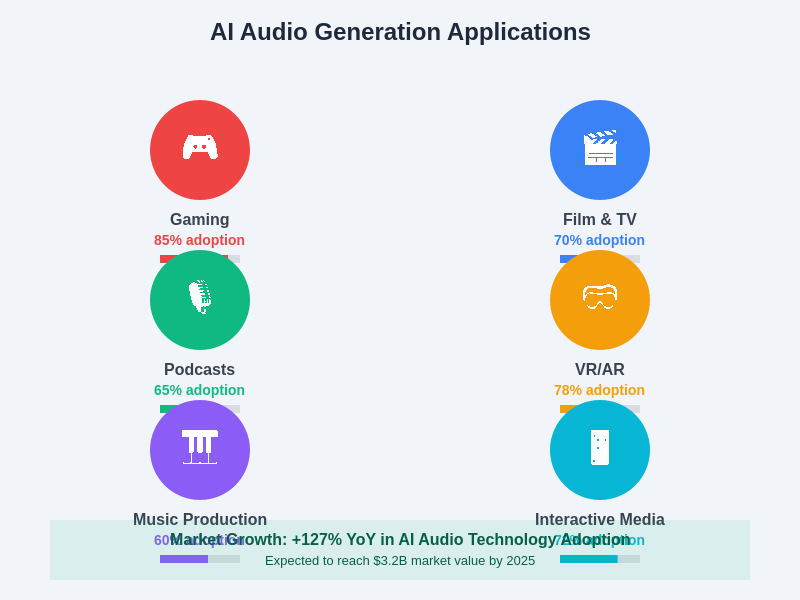

The widespread adoption of AI audio generation technology across diverse industries demonstrates its versatility and effectiveness in addressing the unique audio production challenges faced by different sectors, with gaming and virtual reality leading in implementation rates.

Adoption across these industries has created a substantial and growing market for AI audio technology and is driving continued investment in research and development. This growth has attracted both established audio technology companies and startups, fostering a competitive environment that accelerates technological advancement and drives down costs for end users.

AI-powered sound effect generation represents a transformative force in modern audio production, offering unprecedented capabilities for creating, modifying, and optimizing audio content while maintaining the essential human elements of creativity, artistic vision, and cultural understanding that define exceptional sound design. As these technologies continue to evolve and mature, we can expect even more sophisticated applications that further blur the boundaries between artificial and human creativity while opening new possibilities for immersive and emotionally resonant auditory experiences across all forms of media and interactive content.

Disclaimer

This article is for informational purposes only and does not constitute professional advice. The views expressed are based on current understanding of AI audio technologies and their applications in sound design and audio production. Readers should conduct their own research and consider their specific requirements when implementing AI-powered audio generation tools. The effectiveness and suitability of AI audio solutions may vary depending on specific use cases, technical requirements, and creative objectives.