The convergence of programming language design and machine learning has produced numerous innovations, but few initiatives have been as ambitious as Swift for TensorFlow. The project, announced in 2018 and led by a team at Google that included Chris Lattner, the original author of Swift, set out to rethink how machine learning research and production systems could be developed in a single language that bridges research flexibility and production efficiency.

The initiative promised to address longstanding challenges in the machine learning development pipeline by integrating algorithmic research and systems programming within a single language.

The Vision Behind Swift for TensorFlow

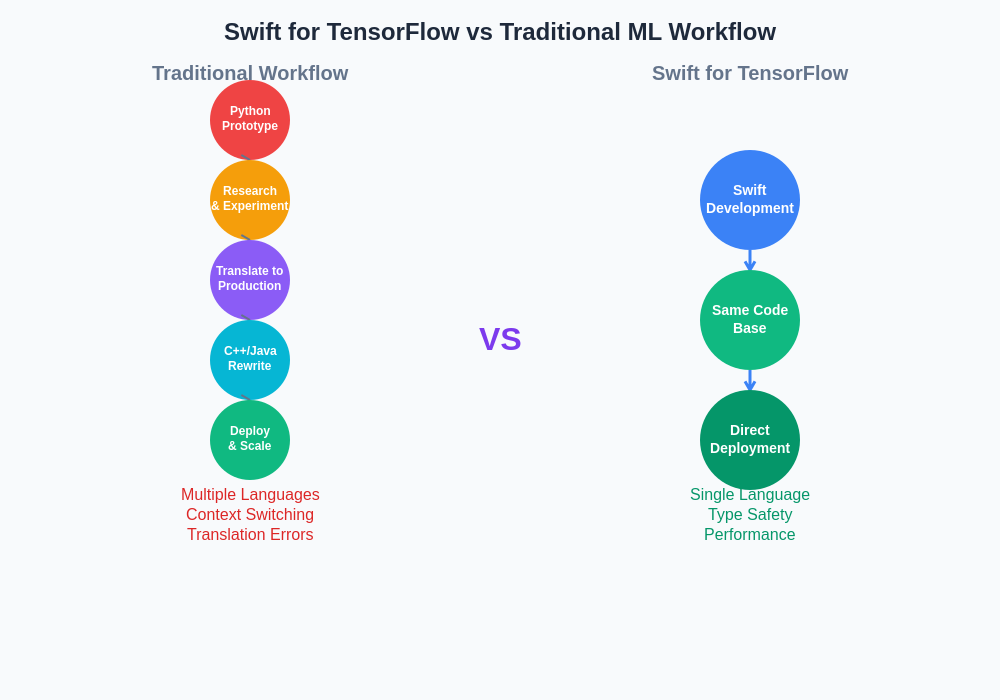

Swift for TensorFlow emerged from a recognition that existing machine learning workflows suffered from fundamental fragmentation between research and production phases. Traditional approaches required researchers to prototype in Python-based frameworks before handing off implementations to engineering teams who would rewrite systems in more performance-oriented languages like C++ or specialized domain-specific languages. This handoff process introduced inefficiencies, translation errors, and significant delays in moving from research discoveries to production deployment.

The Swift for TensorFlow initiative aimed to eliminate this friction by creating a programming environment that could seamlessly handle both research exploration and production deployment without requiring language transitions or architectural rewrites. The project leveraged Swift’s unique combination of high-level expressiveness and low-level performance capabilities, enhanced with first-class automatic differentiation support and deep integration with TensorFlow’s computation graph model.

The theoretical foundation of Swift for TensorFlow rested on the principle that machine learning development should not require developers to choose between research flexibility and production performance. Instead, the same codebase that enabled rapid experimentation and algorithm development should also serve as the foundation for scalable, efficient production systems without modification or translation.

Language Design Innovations

The most significant contribution of Swift for TensorFlow was its approach to automatic differentiation as a first-class language feature. Unlike existing frameworks where differentiation capabilities were implemented as library functions or external tools, Swift for TensorFlow integrated differentiation directly into the language’s type system and compiler infrastructure. This integration enabled compile-time verification of differentiable programs: a function marked `@differentiable` was checked by the compiler, which reported a diagnostic when an operation in its body had no known derivative. The same integration gave the optimizer direct visibility into gradient code for gradient-based machine learning algorithms.

The language’s approach to tensor operations was another notable design choice. Rather than treating tensors as opaque library objects, Swift for TensorFlow exposed them as a strongly typed `Tensor` value type, generic over its scalar element type, with broadcasting semantics and memory management that followed Swift’s value model. This let the compiler reject element-type mismatches before a program ran. Tensor shapes, however, were generally still checked at runtime; static shape checking was explored as a research direction rather than delivered as a general guarantee.
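The broadcasting and shape rules mentioned above can be made concrete with a small sketch. It is written in plain Python rather than Swift, and it assumes the NumPy-style broadcasting rule that TensorFlow follows; `broadcast_shape` is a hypothetical helper name, not part of any Swift for TensorFlow API.

```python
def broadcast_shape(a, b):
    """Return the broadcast result shape of two shapes, or raise ValueError.

    Assumed rule (NumPy-style): align dimensions from the right; each pair
    of sizes must be equal, or one of them must be 1.
    """
    result = []
    for i in range(1, max(len(a), len(b)) + 1):
        da = a[-i] if i <= len(a) else 1  # missing leading dims act like 1
        db = b[-i] if i <= len(b) else 1
        if da != db and da != 1 and db != 1:
            raise ValueError(f"cannot broadcast shapes {a} and {b}")
        result.append(db if da == 1 else da)
    return tuple(reversed(result))


ok = broadcast_shape((8, 1, 3), (4, 3))  # compatible shapes
```

A check like this runs before any arithmetic happens, which is the kind of error a shape-aware type system would aim to move from run time to compile time.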

The integration of automatic differentiation with Swift’s existing language features made it possible to express complex machine learning algorithms with greater clarity and stronger safety guarantees.

Automatic Differentiation Architecture

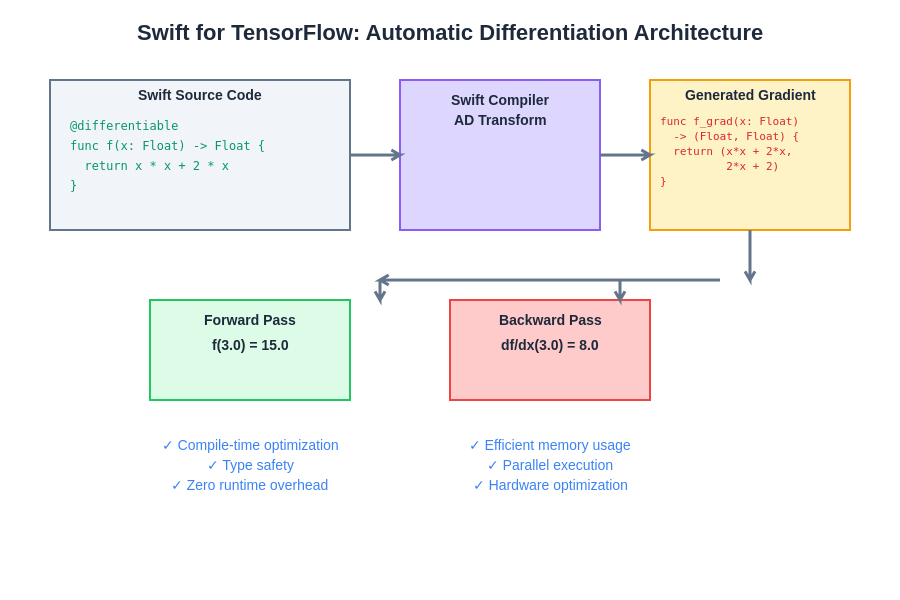

The automatic differentiation system in Swift for TensorFlow represented a fundamental departure from existing approaches to gradient computation in machine learning frameworks. Traditional frameworks implement differentiation through operator overloading, dynamic graph construction, or source code transformation, each approach carrying significant limitations in terms of performance, debuggability, or expressiveness.
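For contrast, the operator-overloading style used by many traditional frameworks can be sketched in a few lines of plain Python. This is an illustrative toy under stated assumptions, not any framework’s actual implementation: `Var` and `backward` are hypothetical names, and only scalar addition and multiplication are supported.

```python
class Var:
    """Scalar that records how it was computed so gradients can flow back."""

    def __init__(self, value, parents=()):
        self.value = value
        self.parents = parents  # pairs of (parent Var, local derivative)
        self.grad = 0.0

    def __add__(self, other):
        return Var(self.value + other.value, ((self, 1.0), (other, 1.0)))

    def __mul__(self, other):
        return Var(self.value * other.value,
                   ((self, other.value), (other, self.value)))


def backward(output):
    """Reverse sweep over the recorded graph, applying the chain rule."""
    order, seen = [], set()

    def visit(node):
        if id(node) not in seen:
            seen.add(id(node))
            for parent, _ in node.parents:
                visit(parent)
            order.append(node)  # parents are appended before children

    visit(output)
    output.grad = 1.0
    for node in reversed(order):  # children are processed before parents
        for parent, local in node.parents:
            parent.grad += local * node.grad


x, y = Var(3.0), Var(4.0)
z = x * y + x  # the graph is built at run time, as a side effect of arithmetic
backward(z)
```

After `backward(z)`, `x.grad` holds the partial derivative `y + 1` and `y.grad` holds `x`. Because the graph only exists once the program runs, an unsupported operation cannot be reported until then.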

Swift for TensorFlow implemented differentiation through compiler-integrated transformation passes that analyzed program semantics and generated efficient gradient computation code at compile time. This approach enabled the compiler to perform sophisticated optimizations, eliminate redundant computations, and provide precise error messages when differentiation rules could not be applied to specific code patterns.
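The idea of producing derivative code by transforming a program representation before it runs can be illustrated with a deliberately small sketch. It is a toy symbolic differentiator over nested tuples in plain Python, not the transformation the Swift compiler performed; `diff` and `evaluate` are hypothetical names, and only `+` and `*` have rules.

```python
def diff(expr, var):
    """Return a new expression: the derivative of `expr` with respect to `var`."""
    if isinstance(expr, (int, float)):
        return 0
    if isinstance(expr, str):  # a variable name
        return 1 if expr == var else 0
    op, a, b = expr
    if op == "+":
        return ("+", diff(a, var), diff(b, var))
    if op == "*":  # product rule
        return ("+", ("*", diff(a, var), b), ("*", a, diff(b, var)))
    raise TypeError(f"no differentiation rule for operator {op!r}")


def evaluate(expr, env):
    """Evaluate an expression tree given variable bindings in `env`."""
    if isinstance(expr, (int, float)):
        return expr
    if isinstance(expr, str):
        return env[expr]
    op, left, right = expr
    a, b = evaluate(left, env), evaluate(right, env)
    if op == "+":
        return a + b
    if op == "*":
        return a * b
    raise TypeError(f"unknown operator {op!r}")


f = ("+", ("*", "x", "x"), ("*", 3, "x"))  # x*x + 3*x
df = diff(f, "x")                          # derivative built before any input is known
slope = evaluate(df, {"x": 2.0})
```

Here `diff` fails on an unsupported operator before the derivative is ever evaluated, which loosely mirrors how a compiler-integrated approach can reject non-differentiable code ahead of execution.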

The design covered both reverse-mode differentiation, the workhorse for training neural networks, and forward-mode differentiation, with the longer-term goal of composing the two for higher-order derivatives. Reverse mode was the more mature of the two in practice, and the mode followed from the differential operator a developer called rather than from automatic selection by the compiler. The choice matters because forward mode costs roughly one pass per input while reverse mode costs roughly one pass per output, so the better option depends on the shape of the computation.
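Forward mode is the easier of the two modes to show in a few lines. The following plain-Python sketch uses dual numbers; `Dual` and `derivative` are hypothetical names chosen for illustration, and only addition and multiplication are implemented.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Dual:
    """A value paired with its derivative along one input direction."""
    value: float
    tangent: float

    def __add__(self, other):
        return Dual(self.value + other.value, self.tangent + other.tangent)

    def __mul__(self, other):  # product rule carried alongside the value
        return Dual(self.value * other.value,
                    self.tangent * other.value + self.value * other.tangent)


def derivative(f, x):
    """Differentiate a one-argument function by seeding its tangent with 1."""
    return f(Dual(x, 1.0)).tangent


def cubic(x):
    return x * x * x + x


def g(x, y):
    return x * y + x


d_cubic = derivative(cubic, 2.0)
# Two inputs need two forward passes, one per seeded input.
dg_dx = g(Dual(3.0, 1.0), Dual(4.0, 0.0)).tangent
dg_dy = g(Dual(3.0, 0.0), Dual(4.0, 1.0)).tangent
```

The two passes needed for `g` show why forward mode scales with the number of inputs, whereas a reverse sweep would recover both partial derivatives from a single pass over the recorded computation.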

By building differentiation into the compiler rather than a library, Swift for TensorFlow gave the optimizer visibility into gradient code and let differentiability errors surface as ordinary compiler diagnostics.

Integration with TensorFlow Ecosystem

Despite its innovative language design, Swift for TensorFlow maintained compatibility with the broader TensorFlow ecosystem, enabling developers to draw on existing models, pre-trained weights, and specialized operations. This compatibility relied on interoperability layers: Swift’s C interoperability gave access to the TensorFlow runtime, and a Python interoperability module let Swift code call existing Python libraries directly.

The integration extended beyond simple function calling to include automatic conversion between Swift tensor types and TensorFlow’s internal representation formats. This conversion process was optimized to minimize data copying and memory allocation, ensuring that the performance benefits of Swift’s compiled nature were not negated by expensive marshaling operations when interacting with existing TensorFlow components.

The architectural case for Swift for TensorFlow rested on comparing the traditional two-language workflow with this unified approach: removing the switch between languages, and the translation step between them, was meant to improve development efficiency and code maintainability.

The project also provided tools for importing existing TensorFlow models and converting them to native Swift implementations, enabling gradual migration of existing machine learning infrastructure without requiring complete rewrites. This migration capability was essential for adoption in production environments where existing investments in TensorFlow-based systems represented significant value.

Performance Characteristics and Optimization

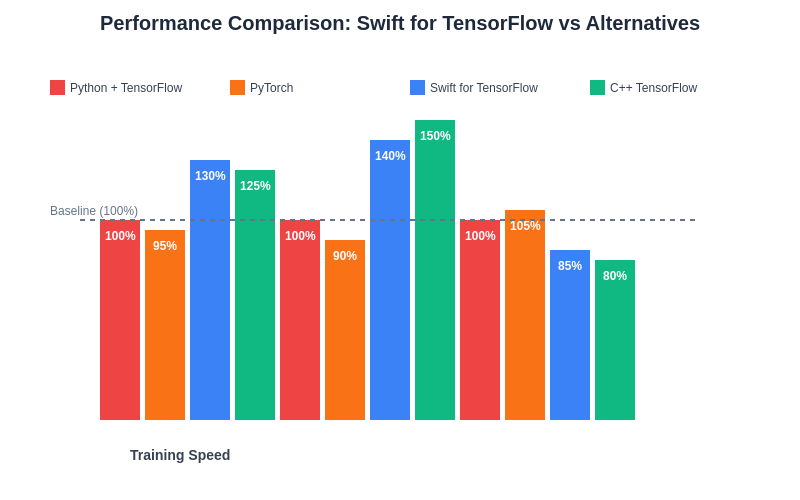

Performance represented a core design priority for Swift for TensorFlow, with the language’s compiled nature providing significant advantages over interpreted alternatives commonly used in machine learning research. The compiler’s ability to perform whole-program optimization, combined with Swift’s zero-cost abstractions philosophy, enabled generation of machine code that approached the efficiency of hand-optimized C++ implementations.

The automatic differentiation system was designed to generate gradient computation code that matched or exceeded the performance of manually implemented gradients in existing frameworks. Compile-time analysis allowed the system to eliminate redundant computations, optimize memory access patterns, and generate specialized code paths for common differentiation patterns without runtime overhead.

Memory management represented another area where Swift for TensorFlow provided significant advantages over garbage-collected alternatives. The language’s automatic reference counting system, enhanced with specialized optimizations for tensor operations, provided predictable memory usage patterns essential for training large machine learning models where memory constraints often determine feasibility.

To the extent these design choices delivered faster training, faster inference, and lower memory use, they were a meaningful draw for machine learning teams working on resource-intensive applications.

The combination of compile-time optimization and runtime efficiency opened up options for machine learning development that were hard to obtain in existing language ecosystems.

Research Applications and Use Cases

The research community’s adoption of Swift for TensorFlow provided valuable insights into the language’s capabilities and limitations for cutting-edge machine learning research. Early adopters reported significant productivity improvements in areas requiring extensive algorithm experimentation, particularly in reinforcement learning, differentiable programming, and neural architecture search where the language’s expressiveness and safety guarantees reduced development cycle times.

The language proved particularly well-suited for research involving custom gradient computations, complex loss function designs, and novel optimization algorithms where the first-class differentiation capabilities eliminated much of the boilerplate code required in traditional frameworks. Researchers could focus on algorithmic innovation rather than implementation details, leading to more rapid exploration of theoretical possibilities.

Educational applications also demonstrated the language’s potential for teaching machine learning concepts. The compile-time error checking and integrated differentiation capabilities helped students understand the mathematical foundations of machine learning algorithms while providing immediate feedback about implementation correctness. This pedagogical value suggested broader applications in machine learning education and training programs.

Production Deployment Considerations

While Swift for TensorFlow excelled in research and development contexts, production deployment presented unique challenges that highlighted both the language’s strengths and limitations. The compiled nature of Swift code provided excellent runtime performance and memory efficiency, making it well-suited for resource-constrained deployment environments where Python-based alternatives might be prohibitive.

However, the relative immaturity of Swift’s ecosystem compared to Python meant that production deployments often required additional engineering effort to integrate with existing monitoring, logging, and infrastructure management systems. The language’s strong typing and compile-time checking provided valuable safety guarantees for production systems, but the learning curve for teams accustomed to Python-based machine learning workflows represented a significant adoption barrier.

Containerization and cloud deployment of Swift for TensorFlow applications required specialized knowledge and tooling that was less mature than equivalent Python-based solutions. This infrastructure gap limited adoption in production environments where operational simplicity and established deployment patterns were prioritized over potential performance benefits.

Ecosystem Development and Community

The Swift for TensorFlow community developed around a core team at Google working with contributors from the wider Swift open-source community, creating a collaborative environment that bridged traditional boundaries between programming language development and machine learning research. This collaboration produced approaches to language design problems that had implications beyond machine learning applications, and the differentiation work was upstreamed into the main Swift compiler.

Community contributions focused heavily on expanding the language’s capabilities for specialized machine learning domains, including computer vision, natural language processing, and reinforcement learning. These domain-specific extensions demonstrated the language’s flexibility while highlighting areas where additional language features or library support could enhance developer productivity.

The educational community’s engagement with Swift for TensorFlow produced valuable resources for learning both machine learning concepts and advanced programming language features. University courses and research programs that adopted the language provided feedback that influenced the project’s development priorities and helped identify areas where documentation and tooling could be improved.

Technical Challenges and Limitations

Despite its innovative design, Swift for TensorFlow faced significant technical challenges that ultimately limited its adoption and long-term viability. The complexity of integrating automatic differentiation with Swift’s existing type system and compiler infrastructure created ongoing maintenance burdens that required specialized expertise to address effectively.

Debugging capabilities, while superior to many existing machine learning frameworks in some respects, still lagged behind the mature tooling ecosystems available for Python-based alternatives. The compile-time error messages, though more precise than runtime errors in interpreted languages, could be difficult to interpret for developers without deep understanding of the underlying type system and differentiation mechanisms.

Interoperability with existing machine learning infrastructure remained challenging despite the project’s compatibility efforts. Many production machine learning pipelines relied on Python-specific tools, data processing libraries, and deployment frameworks that had no direct Swift equivalents, creating integration friction that limited practical adoption in existing organizations.

Project Evolution and Current Status

The Swift for TensorFlow project underwent significant evolution throughout its development lifecycle, with changing priorities and resource allocation reflecting broader shifts in the machine learning and programming language communities. Initial enthusiasm from research communities gradually gave way to recognition of the substantial engineering challenges required to create a production-ready alternative to established machine learning frameworks.

Priorities at Google, the project’s sponsor, shifted during its development, while the company’s investment in Python-based tooling such as TensorFlow and JAX continued. This shift affected resource allocation for Swift for TensorFlow, contributing to reduced active development and community engagement, and the project was archived in February 2021.

The project’s outcome reflects the broader challenges of adapting a general-purpose language to a specialized domain. While the technical innovations developed during the project’s active phase continue to influence machine learning framework design and programming language research, active development has ended and the community has largely migrated to other platforms and frameworks. The differentiable programming work survives as an experimental feature in the upstream Swift compiler.

Legacy and Impact on Machine Learning Development

Despite its limited commercial adoption, Swift for TensorFlow’s impact on machine learning development extends far beyond its direct usage. The project’s innovations in automatic differentiation, compile-time optimization, and type-safe tensor operations have influenced the design of subsequent machine learning frameworks and programming languages.

Treating automatic differentiation as a language-level concern has parallels elsewhere, including Julia’s source-to-source differentiation tools and compiler-level differentiation efforts for languages such as Rust and C++. These parallel efforts suggest that the idea has merit beyond any single project, and that research-oriented projects can contribute to broader innovation even when they do not achieve widespread commercial adoption.

The project’s documentation and educational resources continue to serve as valuable references for understanding advanced automatic differentiation techniques and the challenges of integrating machine learning capabilities into general-purpose programming languages. This educational legacy provides lasting value to the broader machine learning and programming language communities.

Future Directions and Alternative Approaches

The lessons learned from Swift for TensorFlow continue to inform ongoing research into programming language design for machine learning applications. Current approaches focus on incremental enhancements to existing languages rather than comprehensive new language development, reflecting recognition of the substantial ecosystem development required for successful language adoption.

Library-based approaches to machine learning continue to evolve, with projects like JAX demonstrating how composable function transformations can provide many of the benefits originally envisioned for Swift for TensorFlow while building on the existing Python ecosystem. These approaches suggest that future innovations may focus on library design and compiler technologies rather than comprehensive language redesign.

The increasing importance of on-device machine learning and edge computing may create new opportunities for compiled language approaches to machine learning development. As deployment constraints become more stringent and performance requirements increase, the advantages demonstrated by Swift for TensorFlow may become more relevant to practical machine learning applications.

The experience of Swift for TensorFlow demonstrates both the potential and the challenges of ambitious programming language projects in specialized domains. While the project did not achieve its original goals of widespread adoption, its technical innovations continue to influence machine learning framework design and serve as a valuable case study in the intersection of programming language research and practical machine learning development.

Disclaimer

This article is for informational purposes only and does not constitute professional advice. The views expressed are based on publicly available information about Swift for TensorFlow and general understanding of machine learning development practices. Readers should conduct their own research and consider their specific requirements when evaluating programming languages and frameworks for machine learning applications. The current status and future development of Swift for TensorFlow may differ from the information presented in this article.