The convergence of WebAssembly and artificial intelligence is changing how machine learning models are deployed, executed, and scaled across diverse computing environments. WebAssembly AI modules have emerged as a leading approach to portability in machine learning applications. Developers compile code once and run it in browsers, on servers, and on edge devices at speeds that can approach native performance, which earlier web technologies struggled to deliver.

WebAssembly is shaping portable machine learning execution across web browsers, mobile devices, and edge computing platforms. It lowers the traditional barriers between these environments while preserving much of the performance that demanding AI workloads require.

The Foundation of WebAssembly in AI Computing

WebAssembly has become a foundation for portable AI applications. Instead of compiling and tuning a separate binary for each platform, developers target a single bytecode format whose behavior is defined by an open standard, so the same module runs in browsers, on mobile devices, on servers, and on edge computing platforms. This matters to AI developers who previously had to maintain separate codebases for different deployment targets.

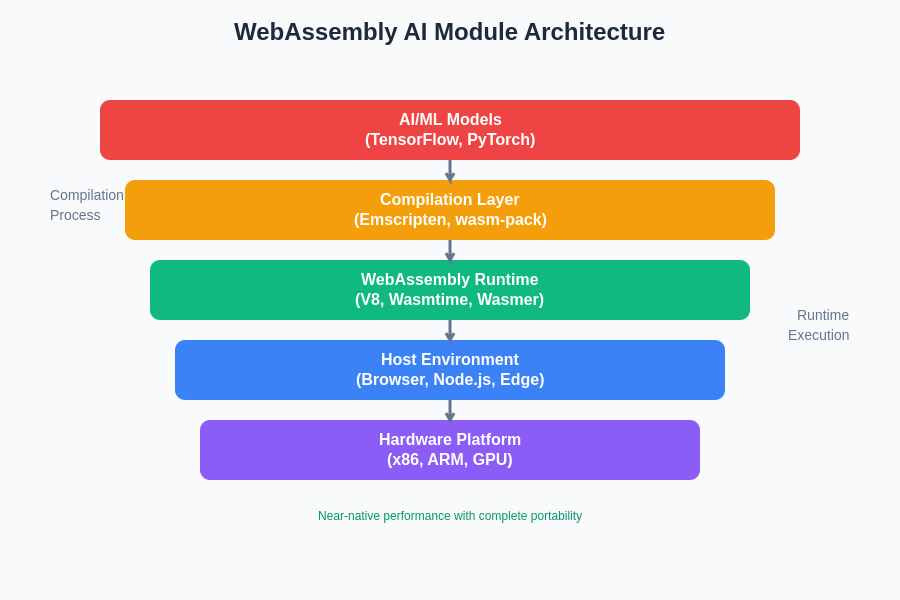

WebAssembly is designed so that engines can translate its compact, statically typed bytecode into machine code before or during execution, avoiding much of the overhead associated with interpreting dynamically typed code. By compiling languages such as C++, Rust, and AssemblyScript to WebAssembly, AI frameworks can approach native execution speed for computationally intensive machine learning operations while remaining portable across hardware architectures and operating systems.
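The compile-then-run flow can be sketched in TypeScript with the standard WebAssembly JavaScript API, which browsers and Node.js both provide. A real project would load a `.wasm` file produced by a toolchain such as Emscripten or wasm-pack; so that this sketch runs without one, it inlines a tiny hand-assembled module whose only export, `mul` (a hypothetical name), multiplies two 32-bit floats.

```typescript
// Hand-assembled binary for the text-format module:
//   (module
//     (func (export "mul") (param f32 f32) (result f32)
//       local.get 0
//       local.get 1
//       f32.mul))
const wasmBytes = new Uint8Array([
  0x00, 0x61, 0x73, 0x6d, // magic number "\0asm"
  0x01, 0x00, 0x00, 0x00, // binary format version 1
  // Type section: one signature, (f32, f32) -> f32
  0x01, 0x07, 0x01, 0x60, 0x02, 0x7d, 0x7d, 0x01, 0x7d,
  // Function section: function 0 uses type 0
  0x03, 0x02, 0x01, 0x00,
  // Export section: function 0 is exported as "mul"
  0x07, 0x07, 0x01, 0x03, 0x6d, 0x75, 0x6c, 0x00, 0x00,
  // Code section: no locals; local.get 0, local.get 1, f32.mul, end
  0x0a, 0x09, 0x01, 0x07, 0x00, 0x20, 0x00, 0x20, 0x01, 0x94, 0x0b,
]);

// Validate and compile the bytecode; the engine produces machine code for it.
const wasmModule = new WebAssembly.Module(wasmBytes);
// Instantiate with an empty import object: the module needs no host functions.
const instance = new WebAssembly.Instance(wasmModule, {});
const mul = instance.exports.mul as (a: number, b: number) => number;

console.log(mul(1.5, 4)); // 6
```

Browsers restrict synchronous compilation of large modules on the main thread, so production code would typically use the asynchronous `WebAssembly.instantiateStreaming` with a fetched `.wasm` file instead.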

Revolutionizing Machine Learning Deployment Models

Traditional machine learning deployment has been plagued by compatibility issues, dependency management challenges, and platform-specific optimization requirements that complicate the development and maintenance of AI applications. WebAssembly AI modules address many of these problems: a module carries its compiled dependencies with it and interacts with the host only through declared imports and exports, which avoids many dependency conflicts and keeps behavior consistent across deployment targets.

WebAssembly’s impact on ML deployment extends beyond portability. It lets sophisticated AI models run directly in web browsers without server-side processing, which changes the economics of AI application deployment: server load and network round trips drop, responses arrive faster, and user data can stay on the device for better privacy. This shift toward client-side AI execution broadens access to advanced machine learning capabilities.

These architectural advantages matter most where modern AI applications must balance performance requirements, security constraints, and deployment flexibility at once. WebAssembly’s sandbox isolates a module from its host at relatively low runtime cost, so strong isolation does not have to come at the expense of the speed that real-time machine learning inference requires.

Performance Optimization in WebAssembly AI Modules

WebAssembly AI modules can run considerably faster than equivalent JavaScript for compute-heavy machine learning code, approaching native speed in favorable cases while remaining portable. The advantage comes from WebAssembly’s low-level, statically typed bytecode, which the host engine compiles directly to machine code without the type speculation and deoptimization that dynamically typed JavaScript requires.

Optimization techniques relevant to WebAssembly AI modules include 128-bit SIMD (Single Instruction, Multiple Data) instructions that process several tensor elements per instruction; careful use of linear memory, a flat byte array the module manages itself, so that tensor buffers are reused instead of creating garbage-collection pressure on the host; and engine-level compilation strategies that pair a fast baseline compiler with an optimizing just-in-time tier. Together these allow complex neural networks, computer vision algorithms, and natural language processing models to execute efficiently in WebAssembly environments.
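To illustrate the linear-memory point, here is a minimal TypeScript sketch of a bump allocator that places f32 tensors inside one `WebAssembly.Memory`. The `TensorArena` class and its methods are hypothetical, not part of any library, and the sketch assumes tensors are plain f32 arrays. Tensors live in a flat buffer rather than as separate JavaScript objects, so per-inference allocations never reach the host’s garbage collector.

```typescript
const PAGE_BYTES = 65536; // one WebAssembly memory page is 64 KiB

interface TensorRef {
  offset: number; // byte offset into linear memory
  length: number; // number of f32 elements
}

// Hypothetical bump allocator over a single linear memory.
class TensorArena {
  readonly memory: WebAssembly.Memory;
  private next = 0; // next free byte offset

  constructor(memory: WebAssembly.Memory) {
    this.memory = memory;
  }

  alloc(length: number): TensorRef {
    // 16-byte alignment matches the width of a v128 SIMD value.
    const offset = Math.ceil(this.next / 16) * 16;
    const end = offset + length * 4;
    const have = this.memory.buffer.byteLength;
    if (end > have) {
      // grow() takes a delta in pages and detaches the previous ArrayBuffer.
      this.memory.grow(Math.ceil((end - have) / PAGE_BYTES));
    }
    this.next = end;
    return { offset, length };
  }

  // Always build views from the current buffer, because growth invalidates old ones.
  view(t: TensorRef): Float32Array {
    return new Float32Array(this.memory.buffer, t.offset, t.length);
  }

  // Release every tensor at once, for example after each inference request.
  reset(): void {
    this.next = 0;
  }
}

const arena = new TensorArena(new WebAssembly.Memory({ initial: 1 }));
const input = arena.alloc(10_000);  // 40,000 bytes: fits in the first page
const output = arena.alloc(10_000); // ends at byte 80,000, so memory grows by one page
arena.view(input).fill(1);
console.log(arena.memory.buffer.byteLength); // 131072
console.log(arena.view(output)[0]);          // 0 (new pages are zero-filled)
```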

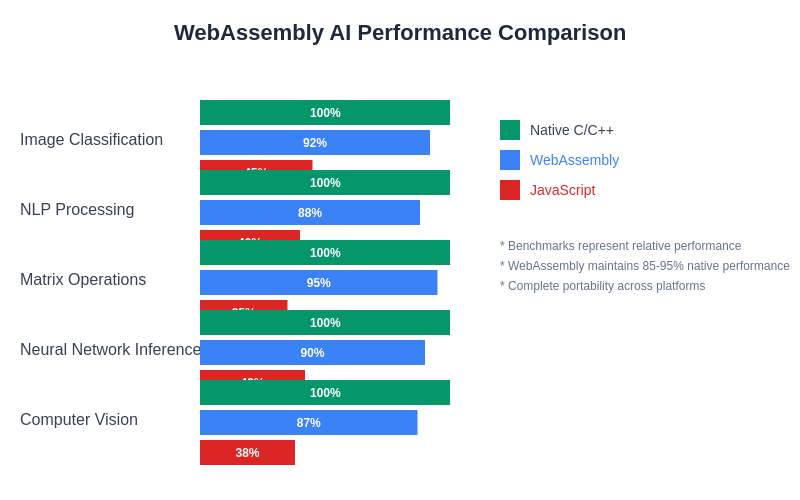

The performance benefits of WebAssembly are most pronounced in computationally intensive AI workloads such as large-scale matrix operations and convolutional neural networks. How close a module gets to native speed depends on the workload, the engine, and whether SIMD and multithreading are available. Figures of around 80-95% of native performance are often cited for well-optimized modules, but they should be confirmed by measuring each workload on each target platform.

Edge Computing and Distributed AI with WebAssembly

Edge computing has become a critical deployment strategy for AI applications, and WebAssembly’s portability fits it well. Edge environments often involve resource-constrained devices with diverse hardware architectures, which makes building and maintaining a native binary for every device impractical or uneconomical. A single WebAssembly AI module can instead run on any edge platform that provides a compatible runtime.

Distributed AI applications benefit from the fact that WebAssembly modules are compact, self-contained artifacts that can be loaded dynamically, cached, and executed on different nodes of a distributed system. Components of a machine learning pipeline can then run on the most appropriate hardware, for example lightweight preprocessing on edge devices and complex inference on more powerful cloud resources.

WebAssembly’s security model also helps distributed deployments: a host can execute third-party or untrusted AI modules without giving them access to memory or system resources it has not explicitly provided. The protection runs in one direction, however. The sandbox protects the host from the module; it does not by itself protect a module’s weights or data on a device that an attacker can physically access.

Cross-Platform Development and Deployment Strategies

The cross-platform capabilities of WebAssembly AI modules extend beyond code portability to deployment strategies that adapt to each execution environment. An application can, for example, ship several builds of a module, such as one with SIMD and one without, and select one at runtime based on detected engine features, available memory, and processing power.
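One common way to adapt to engine capabilities is to ship two builds of a kernel and probe before choosing. The sketch below follows the approach used by feature-detection libraries such as wasm-feature-detect: `WebAssembly.validate` is asked whether a tiny module containing a SIMD instruction is valid. The build names and URLs are hypothetical.

```typescript
// Hand-assembled probe for the text-format module:
//   (module (func (result v128) i32.const 0 i8x16.splat))
// It validates only on engines that implement fixed-width SIMD.
const SIMD_PROBE = new Uint8Array([
  0x00, 0x61, 0x73, 0x6d, 0x01, 0x00, 0x00, 0x00, // header
  0x01, 0x05, 0x01, 0x60, 0x00, 0x01, 0x7b,       // type section: () -> v128
  0x03, 0x02, 0x01, 0x00,                         // function 0 uses type 0
  0x0a, 0x08, 0x01, 0x06, 0x00, 0x41, 0x00, 0xfd, 0x0f, 0x0b, // i32.const 0; i8x16.splat; end
]);

type Build = "simd" | "scalar";

// validate() decodes and type-checks the bytes without instantiating them,
// returning false (rather than throwing) for a module the engine rejects.
function selectBuild(): Build {
  return WebAssembly.validate(SIMD_PROBE) ? "simd" : "scalar";
}

// Hypothetical locations of the two builds of the same inference kernel.
const buildUrls: Record<Build, string> = {
  simd: "/models/kernel.simd.wasm",
  scalar: "/models/kernel.scalar.wasm",
};

console.log(`loading ${buildUrls[selectBuild()]}`);
```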

Advanced deployment strategies leverage WebAssembly’s module system to create composable AI applications where different components can be loaded dynamically based on runtime requirements. This modular approach enables sophisticated AI architectures where different machine learning models can be combined, replaced, or updated without requiring complete application redeployment, significantly improving the maintainability and scalability of complex AI systems.
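The dynamic-loading pattern can be sketched as a small registry that compiles each module on first use, caches the compiled `WebAssembly.Module`, and lets a new version replace an old one at runtime. `ModuleRegistry`, its loaders, and the task names are hypothetical; in a browser the loader would typically fetch bytes asynchronously, which is simplified here to a synchronous function.

```typescript
// A loader returns the raw bytes of one module version.
type Loader = () => BufferSource;

// Hypothetical registry: task name -> loader, with lazily compiled, cached modules.
class ModuleRegistry {
  private loaders = new Map<string, Loader>();
  private compiled = new Map<string, WebAssembly.Module>();

  register(name: string, loader: Loader): void {
    this.loaders.set(name, loader);
  }

  // Swap in a new version; the stale compiled module is dropped from the cache.
  replace(name: string, loader: Loader): void {
    this.loaders.set(name, loader);
    this.compiled.delete(name);
  }

  get(name: string): WebAssembly.Module {
    let mod = this.compiled.get(name);
    if (!mod) {
      const loader = this.loaders.get(name);
      if (!loader) throw new Error(`no module registered for "${name}"`);
      mod = new WebAssembly.Module(loader()); // compile only on first use
      this.compiled.set(name, mod);
    }
    return mod;
  }
}

// The smallest valid module (header only), standing in for a real model kernel.
const EMPTY = () => new Uint8Array([0x00, 0x61, 0x73, 0x6d, 0x01, 0x00, 0x00, 0x00]);

const registry = new ModuleRegistry();
registry.register("tokenizer", EMPTY);
const first = registry.get("tokenizer");
console.log(first === registry.get("tokenizer")); // true: compiled once, then cached
```

A compiled `WebAssembly.Module` can also be sent to Web Workers with `postMessage`, so one cached compilation can back several worker instances.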

The development workflow for cross-platform WebAssembly AI modules typically involves defining and training models with popular machine learning frameworks, then either running them with an inference engine that has itself been compiled to WebAssembly or compiling the models into WebAssembly with specialized toolchains, and finally deploying the resulting modules on standard WebAssembly runtimes. This workflow removes much of the traditional complexity of cross-platform AI deployment, although platform-specific optimizations are available only to the extent that the runtime exposes them, for example through SIMD support.

For compute-bound models, this combination of near-native performance and portability makes it practical to deploy categories of AI applications that were previously difficult to ship across platforms. Very large models face a practical limit, however: standard 32-bit WebAssembly memory can address at most 4 GiB.

Integration with Modern AI Frameworks and Tools

Contemporary AI development relies heavily on frameworks and tools that streamline the development, training, and deployment of machine learning models. WebAssembly now integrates with several of these workflows, letting developers reuse existing skills, tools, and trained models while gaining portable execution. For example, TensorFlow.js offers a WebAssembly backend, and ONNX Runtime Web executes ONNX models, including models exported from PyTorch, through WebAssembly.

The integration process typically involves training models with conventional AI frameworks, converting them to a standardized intermediate representation such as ONNX, and then either executing that representation with a runtime compiled to WebAssembly or compiling it into an optimized WebAssembly module with a specialized toolchain. This workflow preserves the familiar developer experience of popular AI frameworks while enabling deployment scenarios that were impractical with traditional approaches.

Integration strategies can also take advantage of WebAssembly’s support for hybrid applications, in which different parts of an AI pipeline use different technologies and interoperate through imports and exports. A browser application might, for instance, handle the user interface and data loading in JavaScript while running compute-heavy inference kernels in WebAssembly, using the most appropriate tool for each part without sacrificing overall coherence or performance.

Security and Sandboxing in AI Module Execution

Security is a critical aspect of AI module deployment, and it grows more important as machine learning applications handle sensitive data and operate in potentially hostile environments. WebAssembly’s sandbox confines a module to its own linear memory and to the functions the host explicitly imports into it, so the module cannot reach other system resources or data on its own. This makes it possible to execute AI code from untrusted sources, or in shared computing environments, with much lower risk.

Beyond isolation, hosts can bound the resources a module consumes. A module’s memory can be given a maximum size, and runtimes can add controls over CPU time and over which external APIs a module may call. These controls are particularly valuable for cloud-based AI services in which multiple tenants share computing resources.
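A concrete example of such a control is the memory maximum. The sketch below caps a `WebAssembly.Memory` at four 64 KiB pages; any attempt to grow past the cap throws a `RangeError` instead of consuming more host memory. Runtimes outside the browser add further limits on top of this, for example Wasmtime’s fuel metering and epoch-based interruption for bounding CPU time. The `tryGrow` helper is hypothetical.

```typescript
// Host-imposed ceiling: this memory may grow to 4 pages (256 KiB) and no further.
const capped = new WebAssembly.Memory({ initial: 1, maximum: 4 });

// Hypothetical helper: attempt to grow and report whether the host allowed it.
function tryGrow(memory: WebAssembly.Memory, pages: number): boolean {
  try {
    memory.grow(pages); // returns the previous size in pages on success
    return true;
  } catch (e) {
    if (e instanceof RangeError) return false; // the request exceeded the maximum
    throw e;
  }
}

console.log(tryGrow(capped, 3));       // true: 1 + 3 = 4 pages, exactly the cap
console.log(tryGrow(capped, 1));       // false: a fifth page would exceed the cap
console.log(capped.buffer.byteLength); // 262144
```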

Outside the browser, the WebAssembly System Interface (WASI) follows a capability-based model: a module receives access to a resource, such as a specific pre-opened directory, only when the host grants it. AI modules can therefore be given exactly the permissions they need to reach external services and data sources while remaining isolated from other system components, which helps prevent unauthorized access and data exfiltration.
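Capability-style isolation is visible even in the core JavaScript API: a module can call only the host functions supplied at instantiation. The sketch below inlines a hand-assembled module that imports a single function, `env.report` (a hypothetical name), and exports `run`, which calls it with 0.5. Withholding the import makes linking fail before any module code executes.

```typescript
// Hand-assembled binary for the text-format module:
//   (module
//     (import "env" "report" (func $report (param f32)))
//     (func (export "run") f32.const 0.5 call $report))
const bytes = new Uint8Array([
  0x00, 0x61, 0x73, 0x6d, 0x01, 0x00, 0x00, 0x00, // header
  // Type section: type 0 = (f32) -> (), type 1 = () -> ()
  0x01, 0x08, 0x02, 0x60, 0x01, 0x7d, 0x00, 0x60, 0x00, 0x00,
  // Import section: "env" "report", a function of type 0
  0x02, 0x0e, 0x01, 0x03, 0x65, 0x6e, 0x76,
  0x06, 0x72, 0x65, 0x70, 0x6f, 0x72, 0x74, 0x00, 0x00,
  // Function section: function 1 (after the import) has type 1
  0x03, 0x02, 0x01, 0x01,
  // Export section: function 1 is exported as "run"
  0x07, 0x07, 0x01, 0x03, 0x72, 0x75, 0x6e, 0x00, 0x01,
  // Code section: f32.const 0.5, call 0, end
  0x0a, 0x0b, 0x01, 0x09, 0x00, 0x43, 0x00, 0x00, 0x00, 0x3f, 0x10, 0x00, 0x0b,
]);

const sandboxed = new WebAssembly.Module(bytes);

// Instantiate with an explicit set of granted host functions under "env".
function instantiateWith(grants: WebAssembly.ModuleImports): WebAssembly.Instance {
  return new WebAssembly.Instance(sandboxed, { env: grants });
}

// Granted: the module can call report(), and nothing else on the host.
const received: number[] = [];
const granted = instantiateWith({ report: (x: number) => { received.push(x); } });
(granted.exports.run as () => void)();
console.log(received.length, received[0]); // 1 0.5

// Withheld: instantiation fails at link time.
try {
  instantiateWith({});
} catch (e) {
  console.log(e instanceof WebAssembly.LinkError); // true
}
```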

Real-World Applications and Use Cases

The practical applications of WebAssembly AI modules span numerous industries and use cases. In the automotive industry, WebAssembly can help deploy computer vision and sensor fusion algorithms across different vehicle platforms with consistent behavior. Portability is particularly valuable here because the same AI logic may need to run on hardware from various manufacturers.

Healthcare applications can benefit from WebAssembly’s security and portability, running medical AI algorithms locally on patient devices in line with strict privacy and security requirements. Executing models directly on the device can avoid transmitting sensitive health data to remote servers, and a single module helps ensure that the same diagnostic algorithm produces consistent results across devices and platforms.

Financial services applications can use WebAssembly AI modules for fraud detection, algorithmic trading, and risk assessment systems that require real-time performance under strict security and compliance requirements. Deploying the same AI logic across different computing environments is particularly valuable in finance, where consistency and auditability are critical.

Development Tools and Ecosystem Maturity

The WebAssembly AI development ecosystem has matured considerably. Toolchains such as Emscripten (for C and C++) and wasm-pack (for Rust) compile code to WebAssembly, optimizers such as Binaryen’s wasm-opt shrink and speed up the output, and browser developer tools can profile WebAssembly execution and, given DWARF debug information, step through the original source code.

The ecosystem includes sophisticated build tools that automate the compilation of AI models from various frameworks to optimized WebAssembly modules, package management systems that facilitate the distribution and versioning of AI modules, and runtime environments that provide optimized execution capabilities across different platforms. These tools significantly reduce the complexity associated with WebAssembly AI development while ensuring that the resulting applications maintain high performance and reliability standards.

Advanced development workflows incorporate continuous integration and deployment pipelines specifically designed for WebAssembly AI modules, enabling automated testing across different target platforms and optimization for various deployment scenarios. These workflows ensure that AI applications maintain consistent behavior and performance characteristics across different deployment environments while providing mechanisms for rapid iteration and improvement.

The maturity of the WebAssembly AI ecosystem is reflected in the availability of comprehensive documentation, learning resources, and community support that enable developers to quickly acquire the skills necessary for creating sophisticated portable AI applications. The combination of mature tooling and strong community support has significantly lowered the barriers to entry for WebAssembly AI development.

Performance Benchmarking and Optimization Techniques

Benchmarks of WebAssembly AI modules generally show clear gains over JavaScript-only web approaches, while results relative to native implementations vary by workload and platform. For tasks such as image classification, natural language processing, and time series analysis, well-optimized WebAssembly modules are reported to reach roughly 80-95% of native performance in favorable cases while remaining portable, though the figure depends heavily on SIMD and threading support.

Advanced optimization techniques specific to WebAssembly AI modules include memory layout optimization that minimizes cache misses during tensor operations, vectorization strategies that leverage SIMD instructions for parallel processing, and compilation optimizations that eliminate unnecessary memory allocations and function call overhead. These optimizations are particularly effective for neural network inference operations that involve repetitive mathematical operations on large data structures.
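Memory layout optimization can be illustrated with loop tiling (cache blocking) for matrix multiplication, the core operation of dense layers and a form that convolutions are often lowered to. The sketch is written in TypeScript for consistency with the other examples; in practice the same loop structure would be written in C, C++, or Rust and compiled to WebAssembly with SIMD enabled. The tile size of 32 is an illustrative, untuned assumption.

```typescript
// Illustrative tile size; real code would tune this per target.
const TILE = 32;

// C (m x n) = A (m x k) * B (k x n), all row-major Float32Arrays.
// Blocking the loops keeps a TILE x TILE block of B and C in cache while it is reused.
function matmulTiled(
  a: Float32Array, b: Float32Array, m: number, k: number, n: number,
): Float32Array {
  const c = new Float32Array(m * n); // zero-initialized accumulator
  for (let i0 = 0; i0 < m; i0 += TILE) {
    for (let p0 = 0; p0 < k; p0 += TILE) {
      for (let j0 = 0; j0 < n; j0 += TILE) {
        const iMax = Math.min(i0 + TILE, m);
        const pMax = Math.min(p0 + TILE, k);
        const jMax = Math.min(j0 + TILE, n);
        for (let i = i0; i < iMax; i++) {
          for (let p = p0; p < pMax; p++) {
            const aip = a[i * k + p];
            // The innermost loop walks B and C with unit stride, the access
            // pattern a C or Rust compiler can map onto f32x4 SIMD lanes.
            for (let j = j0; j < jMax; j++) {
              c[i * n + j] += aip * b[p * n + j];
            }
          }
        }
      }
    }
  }
  return c;
}
```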

The performance optimization process typically involves profiling AI modules to identify computational bottlenecks, applying targeted optimizations to critical code paths, and validating performance improvements across different target platforms. Modern profiling tools provide detailed insights into WebAssembly execution characteristics, enabling developers to identify and address performance limitations that may not be apparent from high-level performance metrics.
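Validating an optimization depends on measuring carefully. Below is a hypothetical helper that warms a function up, so the engine’s tiered compilers have had a chance to optimize it, and then reports the median of several timed runs, which is less sensitive than a mean to outliers from garbage collection or scheduling. It complements, rather than replaces, profilers such as those in browser developer tools.

```typescript
// Hypothetical micro-benchmark helper: warm up, then take the median of timed runs.
function medianTimeMs(fn: () => void, runs = 15, warmup = 3): number {
  if (runs < 1) throw new RangeError("runs must be at least 1");
  for (let i = 0; i < warmup; i++) fn();
  const samples: number[] = [];
  for (let i = 0; i < runs; i++) {
    const t0 = performance.now();
    fn();
    samples.push(performance.now() - t0);
  }
  samples.sort((x, y) => x - y);
  const mid = Math.floor(samples.length / 2);
  return samples.length % 2 === 1 ? samples[mid] : (samples[mid - 1] + samples[mid]) / 2;
}

// Accumulate into an outer variable so the engine cannot discard the work as dead code.
let sink = 0;
const work = () => {
  for (let i = 0; i < 100_000; i++) sink += Math.sqrt(i);
};
console.log(`median: ${medianTimeMs(work).toFixed(3)} ms`);
```

Comparing the medians of a baseline and an optimized kernel on each target platform gives a simple check that an optimization actually helped there.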

Future Directions and Technological Evolution

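The evolution of WebAssembly AI modules is being shaped by new hardware capabilities, evolving AI algorithms, and deployment requirements that demand greater performance and flexibility. Fixed-width 128-bit SIMD is already standardized and widely shipped, and newer features, some recently standardized and some still moving through the proposal process, extend what modules can do: relaxed SIMD for faster hardware-specific vector operations, threads (shared memory and atomics) for parallel execution across processor cores, Memory64 for address spaces larger than 4 GiB, and WASI-NN, an interface that lets a module delegate inference to machine learning backends provided by the host.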

Integrating WebAssembly with hardware acceleration offers a significant opportunity for further performance gains without giving up portability. In browsers, WebGPU gives applications access to GPU compute alongside WebAssembly; outside the browser, runtimes can expose host-side inference interfaces that route work to GPUs, TPUs, or other AI accelerators. Applications can use acceleration when it is available and fall back to optimized WebAssembly implementations on platforms where it is not.
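The fall-back behavior can be sketched as an ordered list of capability probes, where the first backend whose probe succeeds is used. The backend names are hypothetical. The WebGPU probe only checks that `navigator.gpu` exists; real code would also await `navigator.gpu.requestAdapter()`, which can return `null` even when the API is present.

```typescript
// Hypothetical backend identifiers, in order of preference.
type Backend = "webgpu" | "wasm-simd" | "wasm-scalar";

interface Candidate {
  backend: Backend;
  available: () => boolean; // cheap, synchronous capability probe
}

// Return the first backend whose probe reports success.
function chooseBackend(candidates: Candidate[]): Backend {
  for (const c of candidates) {
    try {
      if (c.available()) return c.backend;
    } catch {
      // Treat a probe that throws as "not available" and keep falling back.
    }
  }
  throw new Error("no usable inference backend");
}

// Tiny module using one SIMD instruction: (func (result v128) i32.const 0 i8x16.splat)
const SIMD_PROBE = new Uint8Array([
  0x00, 0x61, 0x73, 0x6d, 0x01, 0x00, 0x00, 0x00,
  0x01, 0x05, 0x01, 0x60, 0x00, 0x01, 0x7b,
  0x03, 0x02, 0x01, 0x00,
  0x0a, 0x08, 0x01, 0x06, 0x00, 0x41, 0x00, 0xfd, 0x0f, 0x0b,
]);

const candidates: Candidate[] = [
  // navigator.gpu is WebGPU's entry point; it is absent where WebGPU is unsupported.
  { backend: "webgpu", available: () => "gpu" in ((globalThis as any).navigator ?? {}) },
  { backend: "wasm-simd", available: () => WebAssembly.validate(SIMD_PROBE) },
  { backend: "wasm-scalar", available: () => typeof WebAssembly === "object" },
];

console.log(`using ${chooseBackend(candidates)}`);
```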

Advanced research directions include the development of adaptive AI modules that can dynamically optimize their behavior based on available computing resources, federated learning implementations that leverage WebAssembly’s security model for privacy-preserving distributed training, and edge-cloud hybrid architectures that seamlessly distribute AI workloads across different computing tiers based on performance and cost considerations.

As WebAssembly continues to evolve, it is likely to support new categories of AI applications while preserving the portability and security properties that make its modules valuable in distributed computing environments, opening further room for new application architectures and deployment strategies.

Conclusion

WebAssembly AI modules address fundamental challenges in machine learning deployment and make new categories of AI applications practical. The combination of performance that can approach native speed, broad portability, strong sandboxing, and a maturing ecosystem makes WebAssembly a strong foundation for portable AI execution across diverse computing environments.

The ongoing evolution of WebAssembly, together with advances in AI algorithms and hardware, is expected to expand what portable AI applications can do while keeping their behavior consistent across deployment scenarios. As the technology matures, WebAssembly AI modules are likely to play an increasingly important role in distributed artificial intelligence and edge computing.

Disclaimer

This article is for informational purposes only and does not constitute professional advice. The views expressed are based on current understanding of WebAssembly and AI technologies. Readers should conduct their own research and consider their specific requirements when implementing WebAssembly AI modules. Performance characteristics and compatibility may vary depending on specific use cases, target platforms, and implementation details. Technology capabilities and specifications are subject to change as both WebAssembly and AI technologies continue to evolve.