The evolution of machine learning operations has brought forth an essential requirement for sophisticated experiment tracking and model management systems. Among the leading solutions in this rapidly expanding field, Weights & Biases and MLflow have emerged as two prominent platforms that address the complex challenges of managing machine learning workflows at scale. These tools have fundamentally transformed how data scientists and machine learning engineers approach experiment reproducibility, model versioning, and collaborative development in the pursuit of building robust AI systems.

The choice between different experiment tracking platforms can significantly impact team productivity, project scalability, and the overall success of machine learning initiatives across organizations of varying sizes and complexity levels.

Understanding the Experiment Tracking Landscape

The modern machine learning development cycle involves countless experiments, hyperparameter adjustments, model iterations, and performance evaluations that generate vast amounts of metadata requiring systematic organization and analysis. Traditional approaches to tracking these experiments often relied on manual documentation, spreadsheets, or custom-built solutions that proved inadequate for handling the complexity and scale of contemporary machine learning projects. The emergence of specialized experiment tracking platforms has addressed these limitations by providing comprehensive solutions for capturing, organizing, and analyzing experimental data throughout the machine learning lifecycle.

Both Weights & Biases and MLflow represent sophisticated approaches to solving these fundamental challenges, yet they embody different philosophies and architectural decisions that influence their suitability for various use cases, team structures, and organizational requirements. Understanding these distinctions requires examining their core capabilities, integration patterns, scalability characteristics, and the specific workflows they enable for machine learning practitioners across different domains and industries.

Weights & Biases: Cloud-First Experiment Management

Weights & Biases has established itself as a comprehensive cloud-native platform designed to streamline machine learning experiment tracking, visualization, and collaboration through an intuitive web-based interface and robust API ecosystem. The platform emphasizes ease of use and rapid deployment, allowing teams to begin tracking experiments with minimal setup requirements while providing advanced features for complex machine learning workflows. This approach has made Weights & Biases particularly popular among research teams, startups, and organizations that prioritize quick iteration and collaborative development.

The platform’s strength lies in its sophisticated visualization capabilities, automatic hyperparameter optimization through Sweeps, comprehensive model registry functionality, and seamless integration with popular machine learning frameworks including PyTorch, TensorFlow, Keras, and Scikit-learn. Weights & Biases provides real-time experiment monitoring, allowing teams to observe training progress, compare multiple experiments simultaneously, and identify optimal configurations through interactive dashboards that present complex experimental data in accessible formats.
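
As a rough illustration of the logging workflow described above, the following sketch records a configuration and a per-step metric with the `wandb` Python client. The project name, the `simulated_loss` helper, and the hyperparameter values are placeholders invented for this example, and offline mode is used so that no account is needed; treat it as a minimal sketch rather than a recommended setup.

```python
import math


def simulated_loss(step: int) -> float:
    """Stand-in for a real training loss: decays smoothly toward zero."""
    return math.exp(-0.1 * step)


def train_with_wandb(project: str = "demo-project", steps: int = 20) -> None:
    """Log a config and one metric per step to a W&B run."""
    # Imported lazily so this file still loads where wandb is not installed.
    import wandb

    # mode="offline" writes the run to local disk only; remove it to sync
    # to the hosted service (which requires `wandb login` first).
    run = wandb.init(
        project=project,  # hypothetical project name
        config={"learning_rate": 1e-3, "steps": steps},
        mode="offline",
    )
    for step in range(steps):
        wandb.log({"loss": simulated_loss(step)}, step=step)
    run.finish()
```

Calling `train_with_wandb()` should produce a local run directory that can later be uploaded with the `wandb sync` command.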

MLflow: Open-Source Flexibility and Control

MLflow takes a fundamentally different approach to machine learning operations, positioning itself as an open-source platform that provides maximum flexibility and control over experiment tracking infrastructure. Originally developed by Databricks, released under the Apache 2.0 license, and now hosted as a Linux Foundation project, MLflow emphasizes modularity, vendor neutrality, and the ability to deploy and customize the platform according to specific organizational requirements and constraints.

The platform has traditionally been described in terms of four primary components that work together to provide machine learning lifecycle management: MLflow Tracking for experiment logging and comparison, MLflow Projects for packaging and reproducing machine learning workflows, MLflow Models for standardized model deployment across diverse platforms, and the MLflow Model Registry for centralized model versioning and stage transitions. This modular architecture enables organizations to adopt MLflow incrementally, implementing only the components that align with their immediate needs while maintaining the flexibility to expand functionality as requirements evolve.
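
To make the Tracking component more concrete, here is a minimal sketch of logging one run with the `mlflow` Python client. The experiment name and the `flatten_params` helper are assumptions made for this example; the helper exists only because MLflow stores parameters as flat key/value pairs, so nested configuration has to be flattened somehow before logging.

```python
from __future__ import annotations


def flatten_params(config: dict, prefix: str = "") -> dict:
    """Flatten a nested config into dot-separated keys (illustrative helper)."""
    flat = {}
    for key, value in config.items():
        name = f"{prefix}{key}"
        if isinstance(value, dict):
            flat.update(flatten_params(value, prefix=f"{name}."))
        else:
            flat[name] = value
    return flat


def log_run(config: dict, losses: list[float],
            experiment: str = "demo-experiment") -> str:
    """Log parameters and a loss curve to MLflow; return the new run id."""
    # Imported lazily so this file still loads where mlflow is not installed.
    import mlflow

    mlflow.set_experiment(experiment)  # hypothetical experiment name
    with mlflow.start_run() as run:
        mlflow.log_params(flatten_params(config))
        for step, loss in enumerate(losses):
            mlflow.log_metric("loss", loss, step=step)
        return run.info.run_id
```

With no tracking URI configured, MLflow writes to a local store, and the results can be browsed by running `mlflow ui` in the same directory.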

MLflow’s open-source nature provides significant advantages for organizations requiring complete control over their machine learning infrastructure, including the ability to modify source code, implement custom integrations, and maintain experimental data within private computing environments. This flexibility has made MLflow particularly attractive to large enterprises, organizations with strict data governance requirements, and teams that require extensive customization capabilities.

Architecture and Deployment Models

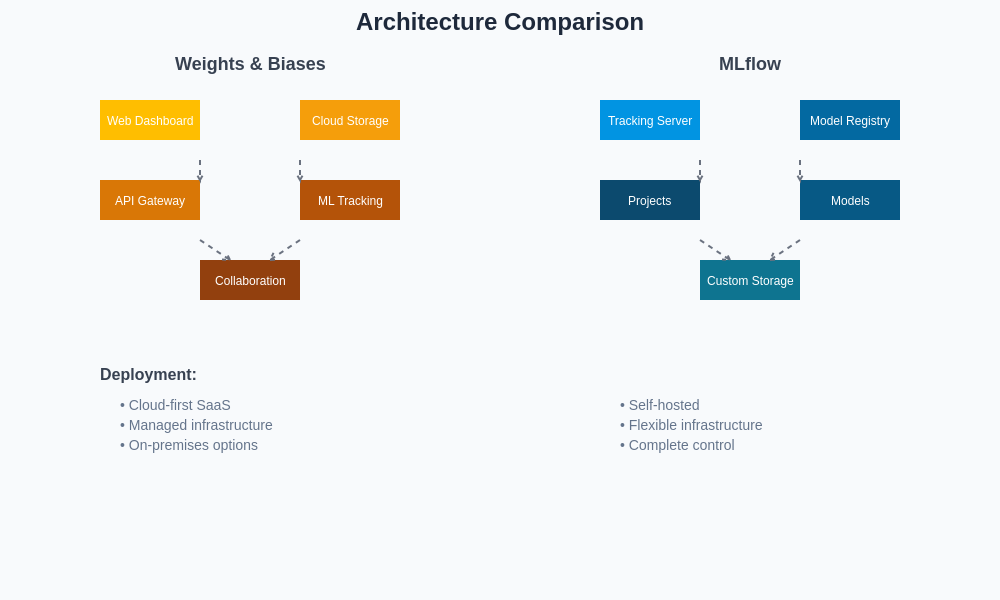

The architectural differences between Weights & Biases and MLflow reflect their distinct approaches to serving machine learning teams and organizations. Weights & Biases operates primarily as a Software-as-a-Service platform where experimental data and metadata are stored in cloud infrastructure managed by the company, though the company also offers on-premises deployment options for organizations with specific security or compliance requirements. This cloud-first approach enables rapid deployment and reduces the operational overhead associated with maintaining experiment tracking infrastructure.

The architectural comparison illustrates the fundamental differences in system design and deployment philosophy between the two platforms. Weights & Biases emphasizes managed cloud services and integrated components, while MLflow provides modular, self-hosted flexibility that enables extensive customization and control over system architecture.

MLflow’s architecture provides greater flexibility in deployment options, supporting everything from local development environments to large-scale distributed systems. Organizations can deploy MLflow on their own infrastructure, utilize cloud-based managed services, or implement hybrid configurations that balance convenience with control. This flexibility extends to data storage options, allowing teams to choose between various backend systems including local filesystems, cloud storage services, and enterprise databases depending on their scalability and governance requirements.
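
One way to picture the backend flexibility described above is a small helper that picks a tracking URI per environment. The environment names and the server hostname below are hypothetical; `MLFLOW_TRACKING_URI` is the environment variable the MLflow client itself reads, and `sqlite:///mlflow.db` is one example of a database-backed local store. This is a sketch under those assumptions, not a prescribed layout.

```python
from __future__ import annotations

import os
from typing import Mapping, Optional

# Hypothetical defaults: a SQLite file for solo work, a shared server for teams.
DEFAULT_URIS = {
    "local": "sqlite:///mlflow.db",
    "team": "http://mlflow.internal.example:5000",
}


def resolve_tracking_uri(environment: str,
                         env: Optional[Mapping[str, str]] = None) -> str:
    """Return the tracking URI to use, preferring an explicit override."""
    env = os.environ if env is None else env
    override = env.get("MLFLOW_TRACKING_URI")
    if override:
        return override
    if environment not in DEFAULT_URIS:
        raise ValueError(f"unknown environment: {environment!r}")
    return DEFAULT_URIS[environment]


def configure_mlflow(environment: str) -> str:
    """Point the MLflow client at the resolved backend and return the URI."""
    import mlflow  # lazy import keeps the helper above usable without mlflow

    uri = resolve_tracking_uri(environment)
    mlflow.set_tracking_uri(uri)
    return uri
```

Keeping the resolution logic separate from the `mlflow` call makes it easy to unit-test and to swap backends without touching training code.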

The deployment model choice significantly impacts factors such as data privacy, compliance adherence, operational complexity, and long-term costs. Organizations must carefully evaluate their specific requirements regarding data sovereignty, security policies, integration needs, and resource availability when selecting between these architectural approaches.

Feature Comparison and Capabilities

Both platforms provide comprehensive experiment tracking capabilities, yet they differ in their implementation approaches and feature prioritization. Weights & Biases excels in providing sophisticated visualization tools, collaborative features, and automated hyperparameter optimization through its Sweeps functionality. The platform offers advanced dashboard customization, real-time collaboration features, and integration with popular development tools that enhance team productivity and experimental insight generation.
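
The Sweeps functionality mentioned above is driven by a configuration dictionary. The sketch below shows one plausible configuration and launcher; the parameter ranges, the project name, and the `fake_val_loss` objective are invented for illustration, and running the launcher is assumed to require a logged-in W&B account because sweeps are coordinated by the W&B server.

```python
import math

# Search strategy, target metric, and search space for the sweep.
SWEEP_CONFIG = {
    "method": "bayes",
    "metric": {"name": "val_loss", "goal": "minimize"},
    "parameters": {
        "learning_rate": {
            "distribution": "log_uniform_values",
            "min": 1e-5,
            "max": 1e-1,
        },
        "batch_size": {"values": [16, 32, 64]},
    },
}


def fake_val_loss(learning_rate: float, batch_size: int) -> float:
    """Toy objective with its minimum near learning_rate=1e-3 (illustrative)."""
    return (math.log10(learning_rate) + 3) ** 2 + 0.01 * batch_size


def train() -> None:
    """One sweep trial: read the sampled config and report the metric."""
    import wandb  # lazy import so the config above is usable without wandb

    run = wandb.init()  # the sweep agent injects the sampled hyperparameters
    cfg = run.config
    run.log({"val_loss": fake_val_loss(cfg.learning_rate, cfg.batch_size)})
    run.finish()


def launch_sweep(project: str = "demo-project", count: int = 5) -> None:
    """Register the sweep and run `count` trials in this process."""
    import wandb

    sweep_id = wandb.sweep(sweep=SWEEP_CONFIG, project=project)
    wandb.agent(sweep_id, function=train, project=project, count=count)
```

In a real project, `train` would build and fit a model, and the metric name in `SWEEP_CONFIG` would need to match whatever key the training code logs.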

The comprehensive feature analysis reveals distinct strengths and focus areas for each platform. Weights & Biases demonstrates particular excellence in user experience design, collaborative workflows, and automated optimization capabilities, while MLflow provides superior flexibility, customization options, and integration possibilities for complex enterprise environments.

MLflow provides robust experiment tracking capabilities with emphasis on reproducibility, model packaging, and deployment flexibility. The platform’s strength lies in its comprehensive model management features, extensive API coverage, and the ability to integrate with virtually any machine learning framework or deployment target. MLflow’s model registry provides sophisticated versioning capabilities, stage transitions, and metadata management that support complex model governance requirements.
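
The registry workflow described above might look like the following sketch. It assumes a model was logged under the artifact path `model` in an earlier run, and it uses version aliases (available in recent MLflow 2.x releases) rather than the older stage transitions, which newer MLflow versions discourage. The registered model name and the `champion` alias are hypothetical.

```python
from __future__ import annotations

from typing import Optional


def model_uri(name: str, *, version: Optional[int] = None,
              alias: Optional[str] = None) -> str:
    """Build a registry URI that pins either a version or an alias."""
    if (version is None) == (alias is None):
        raise ValueError("pass exactly one of version or alias")
    if version is not None:
        return f"models:/{name}/{version}"
    return f"models:/{name}@{alias}"


def register_and_promote(run_id: str, name: str,
                         alias: str = "champion") -> str:
    """Register the model logged by `run_id` and point `alias` at it."""
    # Lazy imports so model_uri stays usable without mlflow installed.
    import mlflow
    from mlflow.tracking import MlflowClient

    # Assumption: the run logged its model under the artifact path "model".
    registered = mlflow.register_model(f"runs:/{run_id}/model", name)
    MlflowClient().set_registered_model_alias(name, alias, registered.version)
    return model_uri(name, alias=alias)
```

Serving code can then load `models:/<name>@champion` without knowing which numeric version is currently promoted, which keeps promotion and deployment decoupled.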

Integration Ecosystem and Framework Support

The integration capabilities of experiment tracking platforms significantly influence their adoption and effectiveness within existing machine learning workflows. Weights & Biases has invested heavily in creating seamless integrations with popular machine learning frameworks, cloud platforms, and development tools. These integrations often require minimal configuration and provide automatic capture of relevant experimental metadata, making it easy for teams to incorporate experiment tracking into their existing workflows without significant modifications.

MLflow’s integration approach emphasizes flexibility and extensibility rather than pre-built convenience integrations. While the platform provides comprehensive APIs and plugin architectures that enable integration with virtually any system, implementing these integrations often requires more technical expertise and development effort. This trade-off between convenience and flexibility reflects the platforms’ different target audiences and use case priorities.

The choice between these integration philosophies depends largely on team technical capabilities, existing infrastructure complexity, and requirements for customization. Teams that prioritize rapid implementation and standardized workflows may prefer Weights & Biases’ approach, while organizations requiring extensive customization or integration with proprietary systems may benefit from MLflow’s flexibility.
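
Teams that want to postpone this choice sometimes put a thin interface between training code and the tracker. The sketch below is one possible shape for such a layer; the `Tracker` protocol and the adapter class names are invented here, and both adapters assume a run has already been started with `mlflow.start_run()` or `wandb.init()`.

```python
from __future__ import annotations

from typing import Protocol


class Tracker(Protocol):
    """Minimal logging surface that training code depends on."""

    def log_params(self, params: dict) -> None: ...
    def log_metrics(self, metrics: dict, step: int) -> None: ...


class InMemoryTracker:
    """Keeps everything in Python objects; handy for tests and dry runs."""

    def __init__(self) -> None:
        self.params: dict = {}
        self.metrics: list[tuple[int, dict]] = []

    def log_params(self, params: dict) -> None:
        self.params.update(params)

    def log_metrics(self, metrics: dict, step: int) -> None:
        self.metrics.append((step, dict(metrics)))


class MLflowTracker:
    """Adapter over the mlflow client (assumes an active MLflow run)."""

    def log_params(self, params: dict) -> None:
        import mlflow
        mlflow.log_params(params)

    def log_metrics(self, metrics: dict, step: int) -> None:
        import mlflow
        mlflow.log_metrics(metrics, step=step)


class WandbTracker:
    """Adapter over the wandb client (assumes wandb.init() was called)."""

    def log_params(self, params: dict) -> None:
        import wandb
        wandb.config.update(params)

    def log_metrics(self, metrics: dict, step: int) -> None:
        import wandb
        wandb.log(metrics, step=step)


def train(tracker: Tracker, epochs: int = 3) -> None:
    """Toy training loop that only talks to the Tracker interface."""
    tracker.log_params({"epochs": epochs})
    for epoch in range(epochs):
        tracker.log_metrics({"loss": 1.0 / (epoch + 1)}, step=epoch)
```

The trade-off is that such a layer exposes only the features both platforms share, so platform-specific capabilities such as Sweeps or the model registry still need direct calls.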

Scalability and Performance Considerations

Scalability requirements vary significantly across machine learning projects, from individual research experiments to large-scale production systems processing massive datasets and supporting hundreds of concurrent users. Weights & Biases addresses scalability through cloud infrastructure that automatically handles resource allocation, data storage, and performance optimization without requiring direct user management. This approach simplifies scaling but may limit control over performance characteristics and cost optimization strategies.

MLflow’s scalability depends largely on the underlying infrastructure and deployment configuration chosen by the implementing organization. While this provides maximum control over performance optimization and resource allocation, it also requires expertise in system administration and infrastructure management. Organizations must carefully design their MLflow deployments to handle anticipated load patterns, data volumes, and concurrent user requirements.

The scalability consideration extends beyond technical performance to encompass factors such as team collaboration, data organization, and workflow management as projects grow in complexity and team size. Both platforms provide mechanisms for organizing experiments, managing access permissions, and facilitating collaboration, but their approaches and limitations differ significantly.

Cost Analysis and Economic Considerations

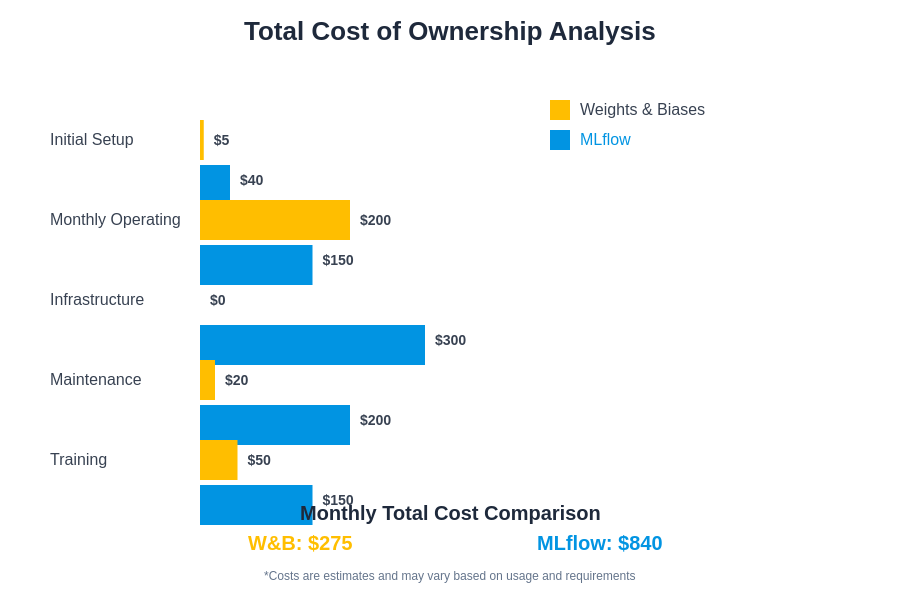

The economic implications of choosing between Weights & Biases and MLflow extend beyond simple licensing costs to encompass infrastructure requirements, operational overhead, and long-term maintenance considerations. Weights & Biases operates on a subscription-based pricing model that scales with usage and feature requirements, providing predictable costs that include infrastructure, maintenance, and support services.

The total cost of ownership analysis reveals significant differences in cost structure and economic implications between the platforms. While MLflow appears cost-effective initially due to its open-source nature, organizations must account for infrastructure, operational, and development costs when conducting comprehensive economic evaluations.

MLflow’s open-source nature eliminates licensing costs but requires investment in infrastructure, operational expertise, and ongoing maintenance activities. Organizations must evaluate the total cost of ownership including hardware or cloud infrastructure costs, personnel requirements for system administration and maintenance, and potential development costs for customization and integration projects.
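
The cost components listed above can be combined into a simple total-cost-of-ownership calculation. Every figure in the sketch below is an invented placeholder, not a vendor price or a measured cost; the point is only to show how the comparison can flip as team size changes.

```python
from dataclasses import dataclass


@dataclass
class CostInputs:
    """Monthly and one-time cost drivers (all values are placeholders)."""

    users: int
    subscription_per_user_month: float
    infra_per_month: float
    admin_hours_per_month: float
    hourly_rate: float
    one_time_setup: float = 0.0


def total_cost(c: CostInputs, months: int) -> float:
    """One-time setup plus recurring subscription, infrastructure, and labor."""
    recurring = (
        c.users * c.subscription_per_user_month
        + c.infra_per_month
        + c.admin_hours_per_month * c.hourly_rate
    )
    return c.one_time_setup + months * recurring


def managed(users: int) -> CostInputs:
    """Hypothetical managed-service profile: per-seat fee, little admin work."""
    return CostInputs(users, 50.0, 0.0, 2.0, 100.0)


def self_hosted(users: int) -> CostInputs:
    """Hypothetical self-hosted profile: no license fee, more operations work."""
    return CostInputs(users, 0.0, 300.0, 10.0, 100.0, one_time_setup=8000.0)
```

With these placeholder inputs over 36 months, the managed profile comes out cheaper for a 10-person team (25,200 versus 54,800), while the self-hosted profile comes out cheaper for a 50-person team (54,800 versus 97,200). Real evaluations should substitute quoted prices and measured staffing costs.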

The cost equation becomes more complex when considering factors such as team productivity, time-to-value, and the opportunity costs associated with managing infrastructure versus focusing on machine learning innovation. Organizations must carefully analyze their specific circumstances, resource availability, and strategic priorities when making economic comparisons between these platforms.

Security and Compliance Framework

Security and compliance considerations play crucial roles in experiment tracking platform selection, particularly for organizations operating in regulated industries or handling sensitive data. Weights & Biases provides enterprise-grade security features including data encryption, access controls, audit logging, and compliance certifications that address common regulatory requirements. The platform’s cloud-based architecture includes security measures managed by the service provider, reducing the security implementation burden on customer organizations.

MLflow’s security model depends entirely on the implementation and configuration choices made by the deploying organization. While this provides maximum control over security policies and implementations, it also requires significant expertise in security best practices, ongoing monitoring, and compliance management. Organizations must implement their own security measures including access controls, encryption, network security, and audit logging capabilities.
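
Because these controls are the deploying organization's responsibility, some teams add a preflight check before any client connects to a self-hosted tracking server. The sketch below inspects environment variables that the MLflow client reads (`MLFLOW_TRACKING_URI`, `MLFLOW_TRACKING_USERNAME`, `MLFLOW_TRACKING_PASSWORD`, `MLFLOW_TRACKING_TOKEN`, `MLFLOW_TRACKING_INSECURE_TLS`). The specific rules it enforces are an example policy assumed for illustration, not an MLflow requirement.

```python
from __future__ import annotations

from typing import Mapping
from urllib.parse import urlparse


def preflight_issues(env: Mapping[str, str]) -> list[str]:
    """Return human-readable problems with the client-side security setup."""
    issues = []

    # Example policy: remote tracking traffic must be encrypted in transit.
    uri = env.get("MLFLOW_TRACKING_URI", "")
    if urlparse(uri).scheme != "https":
        issues.append("tracking URI is not HTTPS")

    # Example policy: either basic-auth credentials or a bearer token is set.
    has_basic = bool(env.get("MLFLOW_TRACKING_USERNAME")
                     and env.get("MLFLOW_TRACKING_PASSWORD"))
    has_token = bool(env.get("MLFLOW_TRACKING_TOKEN"))
    if not (has_basic or has_token):
        issues.append("no credentials configured")

    # Example policy: certificate verification must not be switched off.
    if env.get("MLFLOW_TRACKING_INSECURE_TLS", "").lower() == "true":
        issues.append("TLS verification disabled")

    return issues
```

A check like this covers only the client side; server-side access control, encryption at rest, and audit logging still have to be configured in the deployment itself.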

The compliance landscape varies significantly across industries and geographic regions, influencing the suitability of different deployment models and security approaches. Organizations must carefully evaluate their specific compliance requirements against the capabilities and limitations of each platform’s security framework.

User Experience and Learning Curves

The user experience and learning curve associated with different experiment tracking platforms significantly impact adoption success and team productivity. Weights & Biases emphasizes user experience design with intuitive interfaces, comprehensive documentation, and guided onboarding processes that enable rapid adoption by team members with varying technical backgrounds. The platform’s web-based interface provides accessible visualization and analysis tools that require minimal training for effective utilization.

MLflow’s user experience reflects its focus on flexibility and technical capability rather than ease of use. While the platform provides comprehensive functionality, effectively utilizing its full capabilities often requires deeper technical understanding and more extensive configuration. This learning curve may be justified for teams requiring extensive customization but could represent a barrier for organizations prioritizing rapid adoption and minimal training requirements.

The impact of user experience extends beyond individual productivity to encompass team collaboration, knowledge sharing, and the overall effectiveness of experiment tracking initiatives within organizations. Teams must consider their technical expertise levels, training capacity, and user experience priorities when evaluating these platforms.

Advanced Features and Specialized Capabilities

Both platforms offer advanced features that extend beyond basic experiment tracking to support sophisticated machine learning workflows and specialized use cases. Weights & Biases provides advanced capabilities including automated hyperparameter optimization through Sweeps, collaborative report generation, advanced visualization options, and integration with popular machine learning frameworks that enable sophisticated workflow automation.

MLflow’s advanced features emphasize flexibility and extensibility through comprehensive APIs, plugin architectures, and integration capabilities that enable customization for specialized requirements. The platform’s model serving capabilities, multi-model deployment support, and extensive metadata management features provide sophisticated functionality for complex machine learning operations.

The evaluation of advanced features requires careful consideration of specific use case requirements, team technical capabilities, and long-term strategic objectives. Organizations should prioritize features that align with their immediate needs while considering the platform’s ability to support evolving requirements and growing complexity.

Community and Ecosystem Development

The community and ecosystem surrounding experiment tracking platforms significantly influence their long-term viability, feature development, and support availability. Weights & Biases has cultivated a strong community of users and contributors while maintaining primary control over platform development and strategic direction. This approach provides consistent development momentum and professional support while potentially limiting community-driven innovation and customization opportunities.

MLflow benefits from its open-source nature and Linux Foundation governance, which encourages broad community participation and contribution. The platform’s ecosystem includes numerous plugins, integrations, and extensions developed by community members, providing diverse solutions for specialized requirements. However, the distributed nature of open-source development can sometimes result in inconsistent quality or maintenance levels across different components.

The choice between these ecosystem approaches depends on organizational preferences regarding community involvement, contribution opportunities, and the importance of having multiple development and support channels available for critical infrastructure components.

Migration and Interoperability Considerations

Organizations evaluating experiment tracking platforms must consider migration scenarios and interoperability requirements that may arise as needs evolve or strategic directions change. Weights & Biases provides export capabilities and APIs that facilitate data extraction, though migrating to alternative platforms may require significant effort depending on the complexity of existing workflows and integrations.

MLflow’s open-source architecture and standardized APIs generally provide greater flexibility for migration and interoperability scenarios. The platform’s modular design enables selective migration of components and supports hybrid deployments that may ease transition processes. However, organizations must still carefully plan migration strategies to ensure data integrity and workflow continuity.

The interoperability consideration extends beyond migration scenarios to encompass integration with existing tools, workflows, and systems that may require ongoing data exchange or coordinated functionality. Teams should evaluate platform APIs, data formats, and integration capabilities when assessing long-term flexibility requirements.
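
As one example of assessing data formats, the sketch below exports MLflow runs into a neutral JSON Lines file. It relies on `mlflow.search_runs`, which returns a pandas DataFrame whose parameter and metric columns are prefixed with `params.` and `metrics.`; the record layout and function names are assumptions chosen for this example. Weights & Biases exposes run data through its own public API (`wandb.Api`), which would need a separate adapter.

```python
import json
import math


def split_mlflow_row(row: dict) -> dict:
    """Convert one flat search_runs row into a nested, tool-neutral record."""
    record = {"run_id": row["run_id"], "params": {}, "metrics": {}}
    for column, value in row.items():
        # Runs that never logged a given key show up as None or NaN; skip those.
        if value is None or (isinstance(value, float) and math.isnan(value)):
            continue
        if column.startswith("params."):
            record["params"][column[len("params."):]] = value
        elif column.startswith("metrics."):
            record["metrics"][column[len("metrics."):]] = value
    return record


def export_experiment(experiment_name: str, path: str) -> int:
    """Write every run of an experiment to `path` as JSON Lines; return count."""
    import mlflow  # lazy import so split_mlflow_row works without mlflow

    runs = mlflow.search_runs(experiment_names=[experiment_name])
    rows = runs.to_dict("records")
    with open(path, "w", encoding="utf-8") as handle:
        for row in rows:
            handle.write(json.dumps(split_mlflow_row(row)) + "\n")
    return len(rows)
```

This captures only parameters and the latest metric values; full metric histories, artifacts, and registered models would need additional export steps.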

Performance Optimization and Best Practices

Effective utilization of experiment tracking platforms requires understanding performance optimization strategies and implementing best practices that maximize value while minimizing resource consumption. Weights & Biases provides guidance and automated optimization features that help teams efficiently utilize platform capabilities, though organizations with specialized requirements may need to implement custom optimization strategies.

MLflow’s performance characteristics depend heavily on deployment configuration, infrastructure choices, and usage patterns implemented by organizations. While this provides maximum control over performance optimization, it also requires expertise in system tuning and ongoing monitoring to maintain optimal performance as usage patterns evolve.

Both platforms benefit from careful consideration of data organization strategies, experiment naming conventions, metadata capture practices, and resource allocation policies that support efficient operation and effective collaboration across team members and projects.
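
A naming convention is easiest to enforce when it lives in code. The following sketch generates run names and a standard tag set; the name layout and the tag keys are one hypothetical convention, and the output can be passed to either platform (for example as `name=` in `wandb.init` or `run_name=` in `mlflow.start_run`).

```python
from __future__ import annotations

import re
from datetime import datetime, timezone
from typing import Optional


def slug(text: str) -> str:
    """Lowercase the text and collapse anything non-alphanumeric to hyphens."""
    return re.sub(r"[^a-z0-9]+", "-", text.lower()).strip("-")


def run_name(project: str, model: str, purpose: str,
             when: Optional[datetime] = None) -> str:
    """Build a sortable run name: project_model_purpose_UTC-timestamp."""
    when = when or datetime.now(timezone.utc)
    stamp = when.strftime("%Y%m%d-%H%M%S")
    return "_".join([slug(project), slug(model), slug(purpose), stamp])


def standard_tags(git_sha: str, dataset_version: str, owner: str) -> dict:
    """Metadata every run should carry so results can be traced later."""
    return {
        "git_sha": git_sha,
        "dataset_version": dataset_version,
        "owner": owner,
    }
```

Recording the code revision and dataset version on every run is what makes later comparisons meaningful, whichever platform stores them.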

Future Roadmap and Strategic Considerations

The future development trajectories of experiment tracking platforms influence their long-term suitability for organizations making strategic technology investments. Weights & Biases continues to invest in advanced features, integration capabilities, and user experience improvements while maintaining its cloud-first approach and focus on collaborative machine learning workflows.

MLflow’s roadmap emphasizes expanding functionality, improving performance, and enhancing integration capabilities while maintaining its open-source philosophy and commitment to vendor neutrality. The platform’s development priorities reflect community input and enterprise requirements for sophisticated machine learning operations.

Organizations must consider how platform evolution aligns with their strategic objectives, technical roadmaps, and long-term requirements when making selection decisions. The rapidly evolving machine learning landscape requires experiment tracking platforms that can adapt to emerging techniques, frameworks, and operational requirements.

Implementation Strategies and Recommendations

Successful implementation of experiment tracking platforms requires careful planning, phased deployment approaches, and ongoing optimization efforts that align with organizational capabilities and objectives. Teams should begin with pilot projects that demonstrate value while building expertise and refining implementation strategies before expanding to broader organizational adoption.

The implementation strategy should address training requirements, workflow integration, data migration needs, and success metrics that enable objective evaluation of platform effectiveness. Organizations benefit from establishing clear governance policies, usage guidelines, and optimization practices that support consistent and effective platform utilization across teams and projects.

Change management considerations play crucial roles in successful platform adoption, particularly for organizations transitioning from existing experiment tracking approaches or implementing formal experiment management practices for the first time. Teams should prioritize user experience, provide comprehensive training resources, and establish support mechanisms that facilitate smooth adoption processes.

Conclusion and Strategic Guidance

The choice between Weights & Biases and MLflow represents a fundamental decision about organizational priorities, technical approaches, and strategic objectives for machine learning operations. Weights & Biases excels in providing sophisticated user experiences, collaborative features, and managed infrastructure that enables rapid deployment and effective team collaboration. The platform’s cloud-first approach and comprehensive feature set make it particularly suitable for teams prioritizing ease of use, rapid time-to-value, and collaborative workflows.

MLflow provides unparalleled flexibility, customization capabilities, and control over machine learning infrastructure through its open-source architecture and modular design. The platform’s emphasis on vendor neutrality, extensibility, and comprehensive lifecycle management makes it ideal for organizations requiring extensive customization, specific compliance requirements, or integration with complex existing systems.

Organizations should carefully evaluate their specific requirements regarding team technical capabilities, scalability needs, security requirements, budget constraints, and long-term strategic objectives when choosing between these platforms. The decision should align with broader technology strategies and organizational priorities while considering the total cost of ownership and long-term maintenance requirements associated with each approach.

The rapidly evolving machine learning landscape continues to drive innovation in experiment tracking and model management platforms. Organizations that establish robust experiment tracking practices and choose platforms aligned with their strategic objectives will be better positioned to capitalize on emerging opportunities and navigate the complexities of modern machine learning operations.

Disclaimer

This article is for informational purposes only and does not constitute professional advice. The comparisons and analysis presented are based on publicly available information and general industry understanding as of the publication date. Organizations should conduct their own thorough evaluation including technical testing, cost analysis, and requirements assessment before making platform selection decisions. Features, pricing, and capabilities of both platforms may change over time, and readers should verify current information directly with the respective vendors.