The convergence of artificial intelligence and cybersecurity has created a landscape in which traditional security models prove inadequate for protecting machine learning systems and their associated data pipelines. Zero Trust AI is a security paradigm that rethinks how organizations protect artificial intelligence infrastructure, moving beyond perimeter-based defenses to a model in which no entity, whether human or machine, is inherently trusted within the AI ecosystem.

The implementation of Zero Trust principles in AI environments has become not merely an option but a necessity for organizations seeking to harness the transformative power of artificial intelligence while maintaining robust security postures against increasingly sophisticated threat actors.

The Imperative for Zero Trust in AI Systems

Traditional security models operate on the assumption that entities within the network perimeter are trustworthy, a premise that becomes dangerously inadequate when applied to machine learning systems that process vast amounts of sensitive data, make autonomous decisions, and operate across distributed computing environments. The unique characteristics of AI systems, including their reliance on continuous data ingestion, model training processes, and inference operations, create attack surfaces that extend far beyond conventional application security concerns.

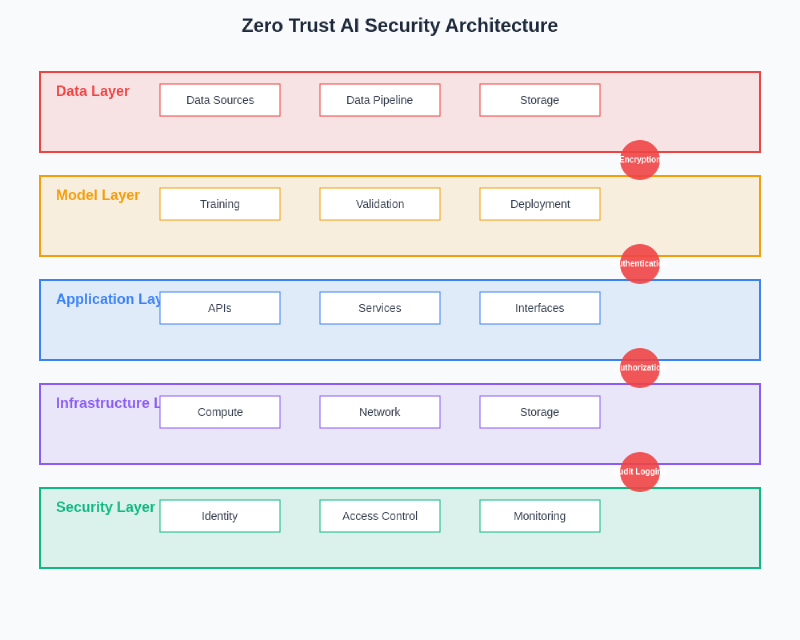

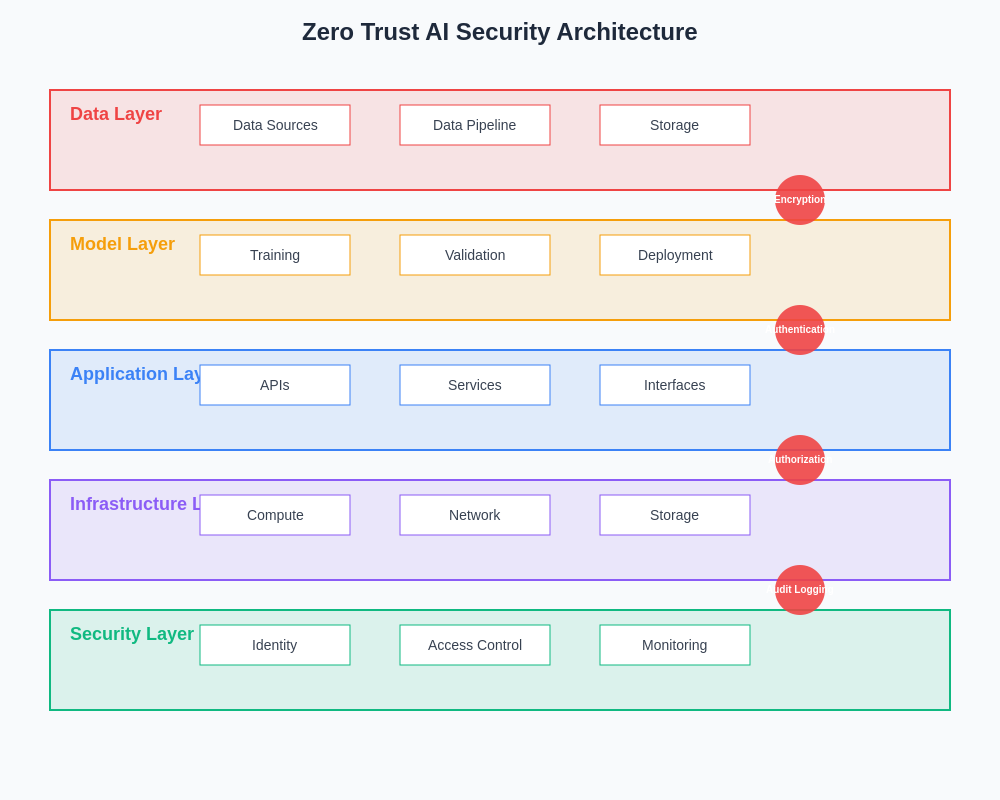

The proliferation of AI systems across enterprise environments has introduced complex interdependencies between data sources, training infrastructure, model repositories, and deployment platforms, creating a web of potential vulnerabilities that traditional security approaches cannot adequately address. Zero Trust AI architecture acknowledges these realities by implementing granular security controls, continuous verification mechanisms, and comprehensive monitoring systems that treat every interaction within the AI ecosystem as potentially hostile until proven otherwise.

Machine learning systems face unique security challenges that include data poisoning attacks, model extraction attempts, adversarial input manipulation, and privacy breaches through inference attacks, all of which require specialized defensive strategies that integrate seamlessly with broader organizational security frameworks. The implementation of Zero Trust principles provides a structured approach to addressing these challenges while maintaining the operational efficiency and performance characteristics that make AI systems valuable to organizations.

Foundational Principles of Zero Trust AI Architecture

The core tenets of Zero Trust AI extend traditional Zero Trust networking principles to encompass the unique requirements of machine learning systems, establishing a comprehensive framework that governs every aspect of AI system operation from data collection through model deployment and ongoing maintenance. These principles form the foundation upon which all security controls, monitoring systems, and governance processes are built, ensuring consistency and effectiveness across diverse AI implementations.

The principle of explicit verification requires that every entity seeking access to AI resources must be authenticated and authorized through multiple verification mechanisms, regardless of their location within the network topology or their previous access history.

Least privilege access represents another fundamental principle, ensuring that users, applications, and systems receive only the minimum permissions necessary to perform their designated functions within the AI ecosystem. This approach significantly reduces the potential impact of security breaches by limiting the scope of access that compromised entities can exploit, while also providing clear audit trails that facilitate incident response and forensic analysis.
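Explicit verification and least privilege can be combined in a default-deny check. The following is a minimal sketch, not a reference implementation: the role names, action strings, and the `AccessRequest` type are hypothetical, and a real deployment would delegate authentication to an identity provider rather than trust a boolean flag.

```python
from dataclasses import dataclass

# Hypothetical role-to-permission map; names are illustrative only.
ROLE_PERMISSIONS = {
    "data-engineer": {"dataset:read", "dataset:write"},
    "ml-engineer": {"dataset:read", "model:train"},
    "inference-service": {"model:predict"},
}


@dataclass(frozen=True)
class AccessRequest:
    principal: str       # human user or service account
    role: str
    action: str
    authenticated: bool  # assumed to come from an upstream identity check


def is_allowed(request: AccessRequest) -> bool:
    """Default deny: permit only authenticated requests whose role
    explicitly grants the requested action."""
    if not request.authenticated:
        return False
    return request.action in ROLE_PERMISSIONS.get(request.role, set())
```

Because unknown roles map to an empty permission set, anything not explicitly granted is refused, which is the behavior least privilege calls for.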

Continuous monitoring and assessment form the third pillar of Zero Trust AI, requiring real-time evaluation of system behavior, data flows, and model performance to detect anomalies that might indicate security incidents, system failures, or malicious activities. This ongoing vigilance extends beyond traditional log analysis to include specialized AI security metrics such as model drift detection, data quality assessments, and inference pattern analysis.
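As one illustration of a drift metric, the sketch below computes a population stability index (PSI) between a reference sample of a feature and a recent sample. PSI is one common choice among many and is not prescribed by this article; the bin count and the `eps` floor are assumptions, and both inputs are assumed to be non-empty lists of numbers.

```python
import math


def population_stability_index(expected, actual, bins=10, eps=1e-6):
    """Compare two numeric samples using equal-width bins over their joint range."""
    lo = min(min(expected), min(actual))
    hi = max(max(expected), max(actual))
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all values are identical

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = min(int((v - lo) / width), bins - 1)
            counts[idx] += 1
        # Floor each proportion at eps so the logarithm below is always defined.
        return [max(c / len(values), eps) for c in counts]

    p = proportions(expected)
    q = proportions(actual)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))
```

A value near zero means the two distributions look alike. A frequently quoted rule of thumb treats values above roughly 0.25 as a significant shift, but the alerting threshold should be tuned per feature.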

Data Security and Privacy Protection

The protection of training data, inference inputs, and model outputs represents one of the most critical aspects of Zero Trust AI implementation, requiring sophisticated approaches to data classification, access control, and privacy preservation that account for the dynamic nature of machine learning workflows. Data security in AI environments must address not only traditional concerns such as unauthorized access and data exfiltration but also AI-specific threats including data poisoning, membership inference attacks, and privacy violations through model inversion techniques.

Comprehensive data governance frameworks within Zero Trust AI architectures establish clear ownership models, usage policies, and retention schedules that govern how sensitive information flows through machine learning pipelines while maintaining compliance with regulatory requirements such as GDPR, CCPA, and industry-specific data protection standards. These frameworks must be sufficiently flexible to accommodate the iterative nature of AI development while providing robust controls that prevent unauthorized data usage or disclosure.

Privacy-preserving techniques such as differential privacy, federated learning, and homomorphic encryption play crucial roles in Zero Trust AI implementations, enabling organizations to extract value from sensitive data while maintaining strong privacy guarantees for individuals and protecting proprietary information from potential adversaries. The integration of these techniques requires careful consideration of trade-offs between privacy protection and model performance, necessitating ongoing evaluation and optimization to ensure that security measures do not unduly compromise AI system effectiveness.
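The privacy and accuracy trade-off is easiest to see in the Laplace mechanism for a counting query, sketched below. The function names are hypothetical, and the floating-point sampling here is for illustration only; production systems should rely on a vetted differential privacy library.

```python
import random


def laplace_noise(scale, rng):
    # The difference of two i.i.d. exponentials with mean `scale`
    # follows a Laplace(0, scale) distribution.
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(records, predicate, epsilon, rng=None):
    """Return a noisy count of the records that match `predicate`."""
    if rng is None:
        rng = random.Random()
    true_count = sum(1 for r in records if predicate(r))
    # A counting query has sensitivity 1, so the noise scale is 1 / epsilon.
    return true_count + laplace_noise(1 / epsilon, rng)
```

A smaller `epsilon` gives a stronger privacy guarantee but adds more noise, which is the trade-off against model and query accuracy described above.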

Data lineage tracking and provenance management provide essential capabilities for maintaining data integrity and enabling rapid incident response when security breaches or data quality issues are detected. These systems must capture comprehensive metadata about data transformations, model training processes, and inference operations while providing efficient querying capabilities that support both routine auditing and emergency response scenarios.
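One way to make lineage metadata tamper-evident is to chain records by hash, so that altering any earlier entry invalidates everything after it. The sketch below assumes a simple in-memory list and hypothetical field names; a real system would persist records in an append-only store and would likely sign them as well.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def _digest(body):
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


def append_record(chain, step, details):
    """Append a lineage record that commits to the previous record's hash."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS
    body = {"step": step, "details": details, "prev_hash": prev_hash}
    chain.append({**body, "hash": _digest(body)})
    return chain


def verify_chain(chain):
    """Recompute every hash and check that each record links to its predecessor."""
    prev_hash = GENESIS
    for record in chain:
        body = {k: record[k] for k in ("step", "details", "prev_hash")}
        if record["prev_hash"] != prev_hash or record["hash"] != _digest(body):
            return False
        prev_hash = record["hash"]
    return True
```

During incident response, a failed `verify_chain` narrows the investigation to the first record whose hash no longer matches.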

Model Security and Integrity Protection

The protection of machine learning models themselves represents a unique challenge within Zero Trust AI architectures, requiring specialized approaches to secure model storage, version control, deployment, and runtime protection that go beyond traditional application security measures. Model security encompasses protection against theft, tampering, unauthorized modification, and various forms of adversarial attacks that can compromise model accuracy, fairness, or safety characteristics.

Secure model development lifecycles integrate security controls directly into the machine learning workflow, implementing automated security testing, vulnerability scanning, and compliance checking at every stage from initial development through production deployment. These processes must account for the iterative nature of machine learning development while ensuring that security requirements are consistently met across multiple model versions and deployment environments.

Model signing and verification mechanisms provide cryptographic assurance of model integrity, enabling organizations to detect unauthorized modifications and ensure that only approved models are deployed in production environments. These systems must be integrated with automated deployment pipelines while providing sufficient flexibility to support rapid iteration and experimentation during model development phases.
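The sign-then-verify flow can be sketched with the standard library. Note the swap: this example uses HMAC-SHA256, a symmetric construction, as a stand-in. Production pipelines more commonly use asymmetric signatures so that deployment hosts hold only a public verification key. The function names are hypothetical.

```python
import hashlib
import hmac


def sign_model(model_bytes: bytes, key: bytes) -> str:
    """Return a hex tag over the serialized model artifact."""
    return hmac.new(key, model_bytes, hashlib.sha256).hexdigest()


def verify_model(model_bytes: bytes, key: bytes, signature: str) -> bool:
    """Check the artifact against its tag using a constant-time comparison."""
    expected = sign_model(model_bytes, key)
    return hmac.compare_digest(expected, signature)
```

A deployment pipeline would call `verify_model` before loading an artifact and refuse to deploy on failure.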

Runtime model protection involves implementing monitoring systems that can detect adversarial inputs, model extraction attempts, and other forms of attacks against deployed models while maintaining the performance characteristics necessary for real-time inference operations. These protection mechanisms must be carefully tuned to minimize false positives while providing robust defense against sophisticated adversaries who may attempt to exploit model vulnerabilities through carefully crafted inputs or systematic probing.
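Systematic probing often shows up as an unusually high query volume from a single client. The sketch below is a deliberately simple sliding-window counter, assuming a hypothetical `QueryRateMonitor` class and caller-supplied timestamps. It is one weak signal, not a complete extraction defense, and its thresholds need tuning to keep false positives low.

```python
from collections import defaultdict, deque


class QueryRateMonitor:
    """Flag clients that exceed a query budget within a sliding time window."""

    def __init__(self, max_queries, window_seconds):
        self.max_queries = max_queries
        self.window_seconds = window_seconds
        self._history = defaultdict(deque)  # client_id -> recent timestamps

    def record(self, client_id, timestamp):
        """Record one query; return True if the client is over budget."""
        window = self._history[client_id]
        window.append(timestamp)
        # Drop timestamps that have aged out of the window.
        while window and timestamp - window[0] > self.window_seconds:
            window.popleft()
        return len(window) > self.max_queries
```

Flagged clients could be throttled, challenged for re-authentication, or routed to closer inspection rather than blocked outright.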

The integration of threat intelligence feeds and automated response capabilities enables Zero Trust AI systems to adapt dynamically to new attack patterns and emerging vulnerabilities.

Infrastructure Security and Access Control

The distributed nature of modern AI infrastructure creates complex security challenges that require comprehensive approaches to network segmentation, access control, and system hardening across diverse computing environments including on-premises data centers, cloud platforms, and edge computing devices. Zero Trust AI architectures must provide consistent security controls across these heterogeneous environments while maintaining the performance and scalability characteristics necessary for effective machine learning operations.

Microsegmentation strategies within AI infrastructure create isolated network zones for different components of the machine learning pipeline, limiting the potential for lateral movement by malicious actors while providing granular control over data flows and system communications. These segmentation policies must be dynamically maintained to accommodate the changing requirements of machine learning workloads while ensuring that security boundaries remain effective against evolving threat patterns.
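A segmentation policy can be modeled as an allow-list of zone-to-zone flows with everything else denied. The zone names below are hypothetical, and real enforcement would live in network policy or a service mesh. The value of the sketch is that it lets you compute how far a compromised zone could move laterally.

```python
# Hypothetical zones and permitted one-way flows; anything not listed is denied.
ALLOWED_FLOWS = {
    ("ingestion", "feature-store"),
    ("feature-store", "training"),
    ("training", "model-registry"),
    ("model-registry", "inference"),
}


def flow_permitted(source_zone, dest_zone):
    """Default deny: only explicitly listed flows are allowed."""
    return (source_zone, dest_zone) in ALLOWED_FLOWS


def reachable_zones(start_zone):
    """Zones an attacker could reach from `start_zone` by following allowed flows."""
    seen, frontier = set(), [start_zone]
    while frontier:
        zone = frontier.pop()
        for src, dst in ALLOWED_FLOWS:
            if src == zone and dst not in seen:
                seen.add(dst)
                frontier.append(dst)
    return seen
```

With this policy, a compromised inference zone cannot reach anything upstream, while a compromised ingestion zone can eventually influence every later stage, which indicates where additional verification is most valuable.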

Identity and access management systems for AI environments must support both human users and automated systems, providing sophisticated authentication and authorization mechanisms that can handle the complex permission structures required for machine learning operations. These systems must integrate with existing organizational identity providers while providing specialized capabilities for managing service accounts, API keys, and other credentials used by automated AI systems.

Container and orchestration security plays a crucial role in modern AI deployments, requiring specialized approaches to image scanning, runtime protection, and configuration management that account for the unique characteristics of machine learning workloads. Security controls must be integrated into container deployment pipelines while providing comprehensive monitoring and response capabilities for containerized AI applications.

Monitoring, Logging, and Incident Response

Comprehensive monitoring and logging capabilities within Zero Trust AI architectures must capture not only traditional security events but also AI-specific metrics and behaviors that can indicate potential security incidents, system failures, or performance degradation. These monitoring systems must process vast amounts of data generated by machine learning operations while providing actionable insights that enable rapid detection and response to security incidents.

Behavioral analytics and anomaly detection systems leverage machine learning techniques to identify unusual patterns in system behavior, user activities, and data access patterns that might indicate security threats or system compromises. These systems must be carefully tuned to account for the legitimate variability in AI system behavior while maintaining sensitivity to genuine security threats and performance issues.
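Before reaching for learned models, a statistical baseline shows the shape of the problem. The sketch below uses a plain z-score against a history of observations, for example hourly dataset reads by one service account. It is a simple stand-in for the machine learning detectors described above, and the default threshold of 3.0 is an assumption to tune.

```python
import statistics


def is_anomalous(history, observed, threshold=3.0):
    """Flag `observed` if it lies more than `threshold` standard deviations
    from the mean of `history` (a non-empty list of numbers)."""
    mean = statistics.fmean(history)
    stdev = statistics.pstdev(history)
    if stdev == 0:
        # With no historical variation, any change at all is notable.
        return observed != mean
    return abs(observed - mean) / stdev > threshold
```

Raising the threshold tolerates more of the legitimate variability in AI workloads at the cost of missing subtler deviations.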

Incident response procedures for AI systems must account for the unique characteristics of machine learning environments, including the potential for model corruption, data poisoning, and other AI-specific attack scenarios that require specialized response procedures and forensic analysis techniques. Response teams must be trained to handle AI-specific security incidents while maintaining coordination with broader organizational security operations centers.

Forensic analysis capabilities for AI systems require specialized tools and techniques that can examine model behavior, training data integrity, and inference patterns to determine the scope and impact of security incidents. These capabilities must be integrated with existing forensic workflows while providing the specialized expertise necessary for investigating AI-specific security events.

The Zero Trust AI security architecture diagram illustrates the integrated approach required to protect machine learning systems across all operational phases, from data ingestion through model deployment and ongoing monitoring. It shows how multiple security layers work together to provide robust protection while maintaining system performance and operational efficiency.

Compliance and Regulatory Considerations

The regulatory landscape surrounding AI systems continues to evolve rapidly, with new requirements emerging at national, regional, and industry levels that impose specific obligations for AI system security, privacy protection, and algorithmic transparency. Zero Trust AI architectures must be designed with sufficient flexibility to accommodate these evolving requirements while providing robust evidence collection and reporting capabilities that support compliance auditing and regulatory inquiries.

Data protection regulations such as GDPR and CCPA impose specific requirements for AI systems that process personal data, including obligations for data minimization, purpose limitation, and individual rights that must be integrated into Zero Trust AI architectures from the design phase. These requirements often conflict with traditional machine learning approaches that rely on comprehensive data collection and analysis, necessitating innovative approaches that balance compliance obligations with system effectiveness.
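Data minimization and purpose limitation can be enforced mechanically at the point where records enter a pipeline. The sketch below assumes a hypothetical per-purpose field allow-list; the purposes and field names are invented for illustration and do not represent legal guidance on what any regulation permits.

```python
# Hypothetical purposes and approved fields, for illustration only.
PURPOSE_FIELDS = {
    "fraud-model-training": {"transaction_amount", "merchant_category", "timestamp"},
    "support-analytics": {"ticket_id", "timestamp"},
}


def minimize(record, purpose):
    """Return only the fields approved for `purpose`; reject unknown purposes."""
    allowed = PURPOSE_FIELDS.get(purpose)
    if allowed is None:
        raise ValueError(f"no approved field list for purpose {purpose!r}")
    return {k: v for k, v in record.items() if k in allowed}
```

Rejecting unregistered purposes outright, rather than passing the record through, keeps the control consistent with the default-deny stance of the rest of the architecture.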

Industry-specific regulations in sectors such as healthcare, finance, and transportation impose additional requirements for AI system validation, testing, and ongoing monitoring that must be integrated with Zero Trust security controls. These requirements often mandate specific documentation, testing procedures, and audit trails that require careful coordination between security, compliance, and engineering teams.

Emerging AI governance frameworks and ethical guidelines are increasingly influencing regulatory requirements, creating new obligations for algorithmic transparency, bias detection, and fairness assessment that must be incorporated into Zero Trust AI implementations. These requirements often necessitate specialized monitoring and reporting capabilities that extend beyond traditional security metrics to include fairness, explainability, and social impact assessments.

Implementation Strategies and Best Practices

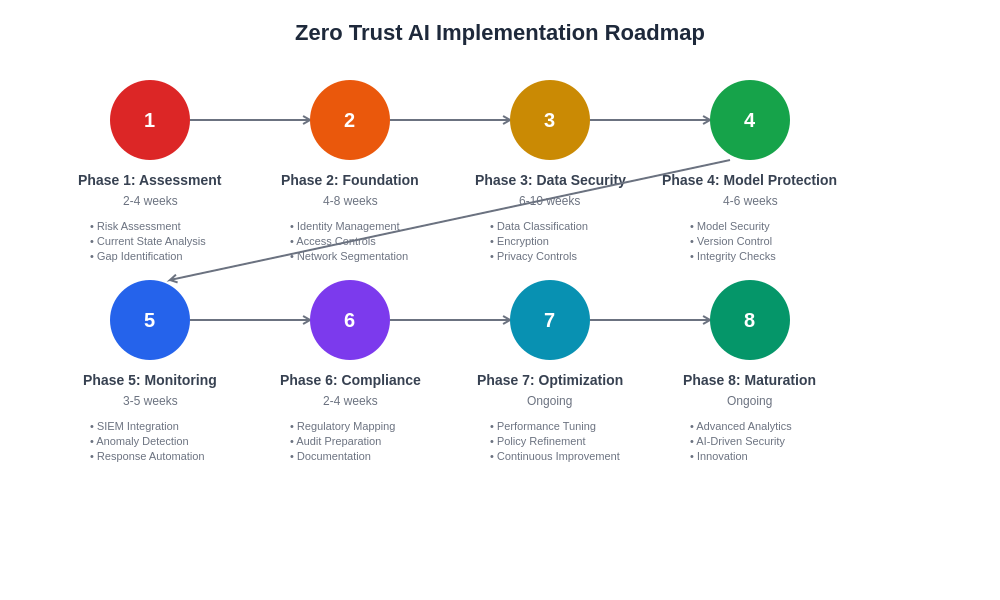

Successful implementation of Zero Trust AI architectures requires careful planning, phased deployment approaches, and ongoing optimization that accounts for the unique characteristics of organizational AI systems and operational requirements. Implementation strategies must balance security objectives with performance requirements while minimizing disruption to existing AI development and deployment processes.

Risk assessment and threat modeling exercises provide essential inputs for Zero Trust AI implementation, helping organizations identify the most critical assets, threats, and vulnerabilities that should be addressed through security controls and monitoring systems. These assessments must account for AI-specific threats and attack vectors while considering the broader organizational risk context and regulatory requirements.

Technology selection and integration strategies must carefully evaluate the compatibility of security tools and platforms with existing AI infrastructure while ensuring that new security controls do not introduce unacceptable performance overhead or operational complexity. Organizations must balance the benefits of specialized AI security tools against the advantages of integrated security platforms that provide consistent controls across diverse technology environments.

Change management and training programs play crucial roles in successful Zero Trust AI implementation, ensuring that development teams, operations staff, and security personnel understand their roles and responsibilities within the new security framework. These programs must address both technical aspects of the new architecture and procedural changes required to maintain security controls throughout AI system lifecycles.

The structured implementation roadmap provides organizations with a practical framework for deploying Zero Trust AI architectures in phases, allowing for gradual adaptation and learning while building security capabilities progressively. This approach minimizes disruption to existing operations while ensuring that critical security controls are prioritized appropriately.

Performance and Scalability Considerations

The implementation of comprehensive security controls within AI systems inevitably introduces performance overhead that must be carefully managed to ensure that security measures do not compromise the effectiveness or efficiency of machine learning operations. Performance optimization strategies must balance security requirements with operational needs while providing sufficient monitoring capabilities to detect when security controls are negatively impacting system performance.

Scalability challenges in Zero Trust AI environments require innovative approaches to security control implementation that can accommodate the massive data volumes, computational requirements, and distributed processing characteristics of modern machine learning systems. Security architectures must be designed to scale horizontally across multiple computing nodes while maintaining consistent policy enforcement and monitoring capabilities.

Caching and optimization strategies can significantly reduce the performance impact of security controls by minimizing redundant authentication operations, optimizing encryption and decryption processes, and implementing efficient policy evaluation mechanisms. These optimizations must be carefully implemented to ensure that performance improvements do not compromise security effectiveness or introduce new vulnerabilities.
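A short-lived cache for authorization decisions illustrates the trade-off. The sketch below assumes a hypothetical `DecisionCache` class wrapping any `evaluate(key)` policy function. The time-to-live bounds how long a revoked permission can linger, so it should stay short for sensitive resources.

```python
import time


class DecisionCache:
    """Cache policy decisions for a bounded time to avoid redundant evaluation."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl_seconds = ttl_seconds
        self._clock = clock    # injectable so tests can control time
        self._entries = {}     # key -> (decision, stored_at)

    def get_or_evaluate(self, key, evaluate):
        now = self._clock()
        cached = self._entries.get(key)
        if cached is not None and now - cached[1] < self.ttl_seconds:
            return cached[0]
        # Cache miss or expired entry: re-run the policy evaluation.
        decision = evaluate(key)
        self._entries[key] = (decision, now)
        return decision
```

Expired entries are re-evaluated rather than trusted, so the cache never extends a decision beyond its time-to-live. A fuller implementation would also evict stale entries and support explicit invalidation when policies change.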

Load balancing and resource management systems must account for the computational overhead introduced by security controls while ensuring that critical AI workloads receive sufficient resources to maintain acceptable performance levels. These systems must be dynamically configurable to accommodate varying workload patterns and security requirements across different AI applications and environments.

Future Evolution and Emerging Technologies

The rapid evolution of AI technologies continues to introduce new security challenges and opportunities that will shape the future development of Zero Trust AI architectures. Emerging technologies such as quantum computing, neuromorphic processors, and advanced AI accelerators require new approaches to security control implementation that account for their unique characteristics and operational requirements.

Integration with emerging security technologies including zero-knowledge proofs, secure multi-party computation, and advanced cryptographic techniques promises to enhance the privacy and security capabilities of Zero Trust AI systems while enabling new applications that were previously impossible due to security or privacy constraints. These technologies must be carefully evaluated and integrated to ensure that they provide genuine security benefits without introducing new vulnerabilities or operational complexities.

The evolution toward more autonomous AI systems that can make independent decisions about resource allocation, security policy enforcement, and incident response will require new approaches to trust management and control validation that ensure human oversight remains effective while enabling the benefits of automated security operations. These systems must be designed with appropriate safeguards and override mechanisms that prevent automated security systems from causing unintended disruptions or security weaknesses.

Standardization efforts within the AI security community are working to establish common frameworks, protocols, and best practices that will facilitate interoperability between different Zero Trust AI implementations while providing consistent security assurances across diverse organizational environments. Organizations should actively participate in these standardization efforts while preparing for the adoption of emerging standards and practices.

The comprehensive threat landscape visualization demonstrates the diverse range of security challenges that Zero Trust AI architectures must address, from traditional cybersecurity threats to AI-specific attack vectors that target machine learning systems directly. Understanding this threat environment is essential for designing effective defensive strategies and security controls.

Conclusion and Strategic Recommendations

The implementation of Zero Trust AI architectures represents a critical evolution in organizational security strategies that acknowledges the unique challenges and opportunities presented by artificial intelligence systems. Organizations that proactively adopt these comprehensive security frameworks will be better positioned to realize the benefits of AI technologies while maintaining robust protection against evolving threats and meeting regulatory compliance requirements.

Strategic planning for Zero Trust AI implementation should prioritize risk assessment, stakeholder engagement, and phased deployment approaches that allow organizations to build security capabilities gradually while learning from early implementations and adapting to changing requirements. Success requires coordination between security, AI development, and business stakeholders to ensure that security measures support rather than impede organizational AI objectives.

Investment in specialized security tools, training programs, and organizational capabilities will be essential for effective Zero Trust AI implementation, requiring careful resource allocation and long-term commitment to security excellence in AI environments. Organizations must balance immediate security needs against long-term strategic objectives while maintaining flexibility to adapt to emerging threats and evolving regulatory requirements.

The future of AI security lies in the continued evolution of Zero Trust principles and their integration with emerging technologies, regulatory frameworks, and organizational practices that enable secure, responsible, and effective deployment of artificial intelligence systems across diverse operational environments and use cases.

Disclaimer

This article provides general information about AI security principles and practices and does not constitute professional security advice. Organizations should conduct thorough risk assessments and engage qualified security professionals when implementing Zero Trust AI architectures. The effectiveness of security measures may vary depending on specific implementation details, organizational contexts, and evolving threat landscapes. Readers should validate all security recommendations against their specific requirements and regulatory obligations.