Artificial intelligence and machine learning development has been dominated by high-level languages like Python and by frameworks that prioritize developer convenience over raw computational efficiency. As AI systems grow more complex and demanding, low-level performance optimization matters more than ever. Enter Zig, a modern systems programming language that is starting to change how developers approach performance-critical AI applications. It offers explicit control over memory management, compile-time code execution, and direct access to hardware features, along with optional runtime safety checks and a more expressive design than C.

The emergence of Zig in the AI ecosystem represents a fundamental shift toward performance-first development philosophies that prioritize computational efficiency without sacrificing code maintainability or developer productivity.

The Performance Imperative in Modern AI Systems

Contemporary machine learning applications face computational demands that strain the limits of traditional high-level programming approaches. Large language models with billions of parameters, real-time computer vision systems processing high-resolution imagery, and distributed training pipelines handling massive datasets all require every ounce of computational efficiency available. The traditional approach of using Python with C/C++ extensions for performance-critical sections still dominates, but it comes under strain as AI workloads become more complex and performance requirements more stringent.

Zig addresses these challenges by combining the performance characteristics of C and C++ with modern language design. It does not make undefined behavior impossible, but its Debug and ReleaseSafe build modes turn many classic C mistakes, such as out-of-bounds indexing and integer overflow, into checked errors that stop the program instead of silently corrupting memory. Together with explicit memory management, compile-time computation, and the absence of hidden allocations or hidden control flow, this makes the language a good fit for AI systems where microseconds of latency can decide between real-time responsiveness and unacceptable delays.

Interest in Zig for AI applications reflects a broader industry recognition that the traditional trade-off between development velocity and runtime performance is harder to sustain when AI systems must process ever-increasing volumes of data under ever-tighter latency requirements. Organizations building production AI systems are finding that the long-term benefits of performance-optimized implementations can outweigh the initial development overhead of lower-level programming.

Zig’s Unique Advantages for Machine Learning

Zig’s design philosophy centers on giving programmers explicit control over program behavior while catching, in safety-checked builds, many of the undefined-behavior and memory errors that plague traditional systems programming languages. This is valuable in machine learning, where numerical stability, predictable memory allocation patterns, and deterministic performance are essential for reproducible results and reliable operation.

The language’s compile-time execution (the `comptime` keyword) lets ordinary Zig code run during compilation. Tensor shape calculations, lookup tables, and fixed algorithm parameters can all be computed at compile time, and generic code can be specialized for the shapes and data types of a particular model, so the runtime code contains only the work that depends on runtime data. This specialization can bring Zig-based AI systems close to hand-tuned code while keeping the readability and maintainability of a higher-level implementation.
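As a rough illustration of compile-time shape calculation, here is a minimal sketch, assuming Zig 0.11 or later. The names `elementCount` and `Tensor` are hypothetical and not part of any library. The point is that an ordinary function computes the element count while the program is being compiled, and the result becomes part of the type.

```zig
const std = @import("std");

// Hypothetical helper: total number of elements for a given shape.
// It is an ordinary function; the compiler runs it when called in a
// compile-time context.
fn elementCount(shape: []const usize) usize {
    var n: usize = 1;
    for (shape) |dim| n *= dim;
    return n;
}

// Hypothetical tensor type whose shape is a compile-time parameter.
fn Tensor(comptime T: type, comptime shape: []const usize) type {
    return struct {
        // Evaluated once by the compiler, not at runtime.
        pub const len = elementCount(shape);

        data: [len]T,
    };
}

pub fn main() void {
    const Image = Tensor(f32, &.{ 3, 32, 32 });
    // A compile-time check: a wrong element count would fail the build.
    comptime std.debug.assert(Image.len == 3 * 32 * 32);
    std.debug.print("{d} elements, {d} bytes\n", .{ Image.len, @sizeOf(Image) });
}
```

Because the storage size is fixed by the type, a tensor like this needs no heap allocation and no runtime shape bookkeeping. The trade-off is that every distinct shape produces a distinct type.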

Zig’s approach to error handling through explicit error unions provides machine learning systems with robust mechanisms for handling the various failure modes that can occur during model training, inference, and data processing operations. Rather than relying on exception handling mechanisms that can introduce unpredictable performance overhead, Zig encourages developers to explicitly account for potential failure scenarios, resulting in more reliable and predictable AI system behavior.
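A small sketch of what this looks like in practice, assuming Zig 0.11 or later. The error set `LoadError` and the function `loadWeights` are made up for illustration. The possible failures are part of the function’s return type, and the caller has to handle or propagate them explicitly.

```zig
const std = @import("std");

// Hypothetical failure modes for loading model weights.
const LoadError = error{ ShapeMismatch, NonFiniteWeight };

// Validates `src` and copies it into `dst`.
// The `LoadError!void` return type is an error union.
fn loadWeights(dst: []f32, src: []const f32) LoadError!void {
    if (dst.len != src.len) return error.ShapeMismatch;
    for (src) |w| {
        if (!std.math.isFinite(w)) return error.NonFiniteWeight;
    }
    @memcpy(dst, src);
}

pub fn main() void {
    var weights: [4]f32 = undefined;
    const good = [_]f32{ 0.1, -0.2, 0.3, 0.4 };
    const bad = [_]f32{ 0.1, std.math.nan(f32), 0.3, 0.4 };

    loadWeights(&weights, &good) catch |err| {
        std.debug.print("unexpected failure: {s}\n", .{@errorName(err)});
        return;
    };

    // Ignoring the error is a compile error; it must be handled or returned.
    loadWeights(&weights, &bad) catch |err| {
        std.debug.print("rejected weights: {s}\n", .{@errorName(err)});
    };
}
```

Unlike exceptions, the error path here is an ordinary return value, so its cost is visible in the code and there is no stack unwinding.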

Memory Management Excellence in AI Workloads

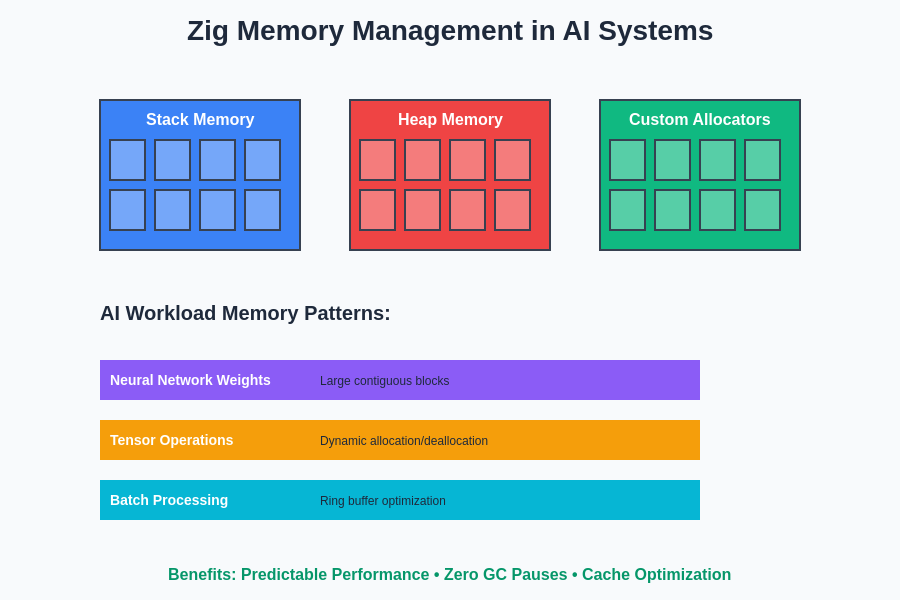

Memory management is one of the most critical aspects of high-performance AI system development. Machine learning workloads typically process large datasets, maintain complex model states, and perform intensive numerical computations that generate large amounts of intermediate data. Zig has no garbage collector, so developers decide exactly when and where memory is allocated and how it is laid out. In safety-checked builds the language also catches out-of-bounds accesses, and the standard library’s debug-oriented general-purpose allocator can report leaks and double frees, which reduces (though does not eliminate) the memory corruption issues that commonly plague C and C++ implementations.

By convention, Zig code that needs heap memory takes an allocator as an explicit parameter (`std.mem.Allocator`), so AI developers can plug in memory management strategies tuned to machine learning workloads. Neural network training, which typically involves repeated allocation and deallocation of tensor data structures, can benefit from memory pools that minimize allocation overhead and improve cache locality. Similarly, inference workloads that process continuous streams of input data can use ring buffer or fixed-buffer allocators that eliminate dynamic memory allocation entirely during steady-state operation.
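The following sketch shows the simplest variant of this idea, assuming Zig 0.11 or later. It uses the standard library’s `std.heap.FixedBufferAllocator` rather than a custom pool or ring buffer, and the function `scaledSum` is a hypothetical stand-in for an inference step. All scratch memory comes from one preallocated buffer that is reset between steps, so the loop never touches the system allocator.

```zig
const std = @import("std");

// Hypothetical inference step: scratch memory comes from `scratch`.
fn scaledSum(scratch: std.mem.Allocator, input: []const f32, scale: f32) !f32 {
    const tmp = try scratch.alloc(f32, input.len);
    // No individual free: the caller releases the whole buffer at once.
    var total: f32 = 0;
    for (input, tmp) |x, *t| {
        t.* = x * scale;
        total += t.*;
    }
    return total;
}

pub fn main() !void {
    // One fixed region, reserved up front.
    var backing: [4096]u8 = undefined;
    var fba = std.heap.FixedBufferAllocator.init(&backing);
    const input = [_]f32{ 1, 2, 3, 4 };

    var step: usize = 0;
    while (step < 3) : (step += 1) {
        fba.reset(); // reuse the same bytes on every step
        const y = try scaledSum(fba.allocator(), &input, 0.5);
        std.debug.print("step {d}: {d}\n", .{ step, y });
    }
}
```

Because `scaledSum` only sees the `std.mem.Allocator` interface, the same function works unchanged with an arena, a pool, or a tracking allocator in tests.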

Zig also gives developers direct control over memory layout, which helps maximize cache efficiency and minimize memory bandwidth requirements. Alignment can be specified explicitly, struct layouts can be fixed with `extern` or `packed` structs, and compile-time code can generate struct-of-arrays containers such as the standard library’s `std.MultiArrayList`. Tensor data structures can therefore be laid out to match specific hardware architectures, vectorized instruction sets, and the buffer formats expected by GPUs, which can yield significant performance improvements for computationally intensive machine learning operations.

Taken together, these capabilities let AI developers reach performance comparable to hand-optimized C while benefiting from safety checks and tooling that go beyond what C offers. This combination makes Zig attractive for production AI systems where both computational efficiency and system reliability are essential.

GPU Computing and Hardware Acceleration Integration

Modern machine learning systems increasingly rely on specialized hardware accelerators, particularly GPUs and tensor processing units, to achieve the computational throughput required for training large models and processing high-volume inference workloads. Zig can import C headers and call C functions directly, which makes it a practical host language for the C APIs of CUDA, OpenCL, and other hardware acceleration frameworks.

Efficient host code helps keep accelerators busy. Transfers between CPU and GPU memory often become a bottleneck in AI applications, and Zig’s explicit memory management lets developers allocate transfer buffers once, reuse them across batches, and avoid the repeated allocation and copying that can significantly impact training and inference performance.

Zig’s compile-time computation can also help on the GPU side. Tensor dimensions, tile sizes, and launch parameters can be computed at compile time and passed to kernels as constants, so each kernel is specialized for a specific model architecture and hardware configuration. In practice, kernels are usually still written in CUDA C++ or OpenCL C and launched from Zig host code; compiling Zig directly to GPU targets is possible but remains experimental. This kind of specialization can improve GPU utilization while keeping the host code readable and maintainable.

Because most accelerator SDKs expose a C interface, the same approach extends to newer hardware such as specialized AI chips and, more speculatively, neuromorphic processors and quantum computing interfaces. This makes Zig a candidate for organizations developing next-generation AI systems that depend on cutting-edge hardware for competitive performance.

High-Performance Linear Algebra and Numerical Computing

Linear algebra operations form the computational foundation of virtually all machine learning algorithms, from basic regression models to complex neural network architectures. The performance characteristics of matrix multiplication, vector operations, and tensor manipulations directly determine the overall efficiency of AI systems, making the optimization of these fundamental operations a critical concern for performance-oriented AI development.

Zig’s approach to numerical computing emphasizes explicit control over floating-point behavior, vectorization, and memory access patterns. Floating-point optimization can be relaxed per scope with `@setFloatMode`, and the built-in `@Vector` types compile to SIMD instructions such as SSE, AVX, and ARM NEON where the target supports them, which allows vectorized operations that make good use of modern processors. For large general-purpose matrix operations, tuned BLAS and LAPACK libraries remain hard to beat, and Zig can call them through their C interfaces; hand-written Zig routines pay off mainly for small, fixed-size, or unusual operations that those libraries handle less well.
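As a minimal sketch of explicit vectorization, assuming Zig 0.11 or later: the function `dot` below is illustrative, and the lane count of 8 is an assumption chosen to match one 256-bit AVX register of `f32` values. On targets without such registers the compiler lowers the vector operations to whatever the hardware offers.

```zig
const std = @import("std");

const lanes = 8; // assumed width: 8 x f32 = 256 bits
const Vec = @Vector(lanes, f32);

// Dot product of two equally long slices.
fn dot(a: []const f32, b: []const f32) f32 {
    std.debug.assert(a.len == b.len);
    var acc: Vec = @splat(0.0);
    var i: usize = 0;
    // Main loop: multiply and accumulate `lanes` elements at a time.
    while (i + lanes <= a.len) : (i += lanes) {
        const va: Vec = a[i..][0..lanes].*;
        const vb: Vec = b[i..][0..lanes].*;
        acc += va * vb;
    }
    // Horizontal sum of the vector accumulator.
    var sum: f32 = @reduce(.Add, acc);
    // Scalar tail for the remaining elements.
    while (i < a.len) : (i += 1) sum += a[i] * b[i];
    return sum;
}

pub fn main() void {
    const a = [_]f32{ 1, 2, 3, 4, 5, 6, 7, 8, 9, 10 };
    const b = [_]f32{2} ** 10;
    std.debug.print("dot = {d}\n", .{dot(&a, &b)});
}
```

Note that accumulating in lanes changes the order of floating-point additions compared with a plain scalar loop, so results can differ in the last bits for general inputs.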

The compile-time optimization capabilities of Zig enable the generation of specialized linear algebra routines that are optimized for specific matrix dimensions, data types, and algorithm requirements commonly encountered in machine learning applications. Rather than relying on generic library implementations that must handle arbitrary input configurations, Zig-based AI systems can utilize compile-time specialization to generate code that is optimized for the specific computational patterns of individual model architectures.
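To make the idea of size-specialized routines concrete, here is a small sketch, assuming Zig 0.11 or later. The function `matVec` is hypothetical. Its dimensions are compile-time parameters, so the compiler generates a separate version for each size used, with constant loop bounds it is free to unroll and vectorize, and a dimension mismatch between matrix and vector is a compile error.

```zig
const std = @import("std");

// Hypothetical fixed-size matrix-vector product y = M * x.
// `rows` and `cols` are known at compile time for every call site.
fn matVec(
    comptime rows: usize,
    comptime cols: usize,
    m: *const [rows][cols]f32,
    x: *const [cols]f32,
) [rows]f32 {
    var y: [rows]f32 = undefined;
    for (m, &y) |*row, *out| {
        var acc: f32 = 0;
        for (row, x) |a, b| acc += a * b;
        out.* = acc;
    }
    return y;
}

pub fn main() void {
    const m = [2][3]f32{
        .{ 1, 2, 3 },
        .{ 4, 5, 6 },
    };
    const x = [3]f32{ 1, 0, -1 };
    const y = matVec(2, 3, &m, &x);
    std.debug.print("{d} {d}\n", .{ y[0], y[1] });
}
```

This style suits the small fixed shapes found inside a specific model. For large or runtime-sized matrices, a slice-based routine or an external BLAS call is the more realistic choice.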

Real-Time Inference and Edge Computing Applications

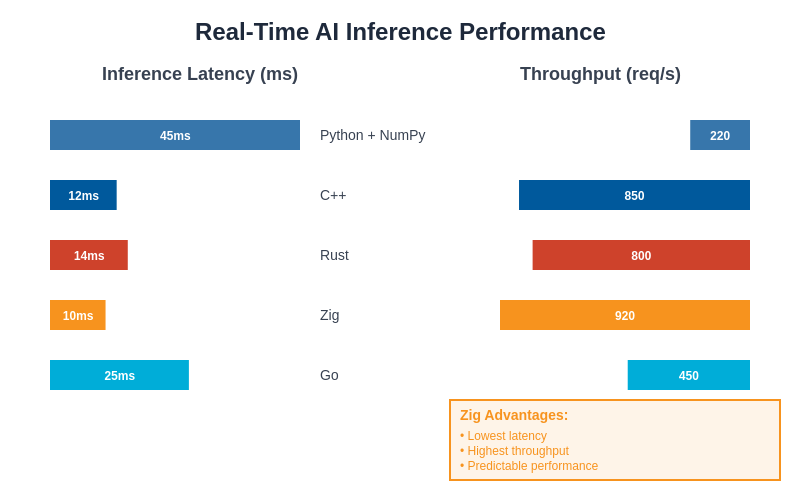

The deployment of AI systems in real-time and edge computing environments presents unique challenges that require careful optimization of computational efficiency, memory utilization, and power consumption. Applications such as autonomous vehicle control systems, real-time video processing, and IoT sensor data analysis demand inference capabilities that can process input data within strict latency constraints while operating within the resource limitations of embedded hardware platforms.

Zig’s performance characteristics and minimal runtime overhead make it particularly well-suited for edge AI applications where every millisecond of latency and every byte of memory utilization directly impacts system capabilities and user experience. The language’s ability to generate compact, efficient machine code enables the deployment of sophisticated AI models on resource-constrained hardware platforms that would be unable to support the overhead associated with traditional high-level language implementations.

Predictable performance is essential for real-time applications where timing guarantees are critical for system safety and reliability. Garbage-collected languages can introduce latency spikes during collection cycles, and languages with complex runtime systems can show variable performance. Zig has no garbage collector and no hidden allocations, so with preallocated buffers an inference loop can run without any runtime memory management, which makes its timing much easier to bound.

The combination of high performance, predictable behavior, and minimal resource utilization makes Zig an ideal choice for organizations developing AI systems that must operate in challenging deployment environments where computational resources are limited and performance requirements are stringent.

Neural Network Optimization and Custom Operators

The implementation of neural network architectures often requires custom operators and optimization strategies that are specifically tailored to particular model designs or computational requirements. While high-level frameworks like PyTorch and TensorFlow provide extensive operator libraries, they may not always offer the specialized functionality or performance characteristics required for cutting-edge research or production optimization scenarios.

Zig’s system-level programming capabilities enable the implementation of custom neural network operators that are optimized for specific mathematical operations, data layouts, and hardware architectures. The language’s compile-time computation features allow for the generation of specialized operator implementations that are tailored to particular tensor dimensions, activation functions, and numerical precision requirements commonly encountered in production neural network deployments.

The ability to implement custom backpropagation algorithms, gradient optimization strategies, and numerical stability enhancements gives AI researchers and engineers fine-grained control over the training process. This level of customization enables the exploration of novel optimization techniques and specialized training strategies that may not be practical or efficient within the constraints of existing high-level frameworks.

Zig also integrates with existing machine learning ecosystems, so custom operators can be incorporated into broader AI development workflows. Zig functions can be exported with the C ABI and built as shared libraries, which means high-performance Zig implementations can be called from Python, integrated with existing model serving infrastructure, and deployed alongside traditional framework-based components. This gives organizations the flexibility to optimize critical performance bottlenecks without requiring complete system rewrites.
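A minimal sketch of such an operator, assuming Zig 0.11 or later. The function name `relu_inplace` and the file name `relu.zig` are made up for illustration. The `export` keyword gives the function the C calling convention and an unmangled symbol name, and the included `test` block shows how it would be exercised from Zig itself.

```zig
const std = @import("std");

// Hypothetical custom operator: in-place ReLU over a caller-owned buffer.
// The caller guarantees that `ptr` points to at least `len` floats.
export fn relu_inplace(ptr: [*]f32, len: usize) void {
    for (ptr[0..len]) |*v| {
        if (v.* < 0) v.* = 0;
    }
}

test "relu_inplace clamps negatives to zero" {
    var data = [_]f32{ -1.5, 0.0, 2.0 };
    relu_inplace(&data, data.len);
    try std.testing.expectEqual(@as(f32, 0.0), data[0]);
    try std.testing.expectEqual(@as(f32, 2.0), data[2]);
}
```

Built as a shared library (for example with `zig build-lib -dynamic -O ReleaseFast relu.zig`), the function can be loaded from Python with `ctypes.CDLL` and called on the memory of an existing array, so no data is copied across the language boundary.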

Distributed Training and High-Performance Computing

Large-scale machine learning model training increasingly requires distributed computing approaches that coordinate training operations across multiple processors, machines, and potentially geographic locations. The implementation of efficient distributed training systems requires careful attention to communication overhead, synchronization strategies, and fault tolerance mechanisms that can significantly impact training performance and resource utilization.

Zig’s performance characteristics and system-level programming capabilities make it well-suited for implementing the communication and coordination infrastructure required for distributed training systems. The language’s explicit memory management and low-overhead abstractions support efficient message passing, gradient aggregation, and distributed synchronization mechanisms that minimize the communication overhead that often becomes a bottleneck in large-scale training.

The predictable performance characteristics of Zig-based distributed training implementations provide significant advantages for resource planning and cost optimization in cloud computing environments where training costs are directly related to computational resource utilization and training completion times. The ability to achieve higher utilization rates of expensive GPU resources through more efficient software implementations can result in substantial cost savings for organizations training large models.

Integration with Existing AI Ecosystems

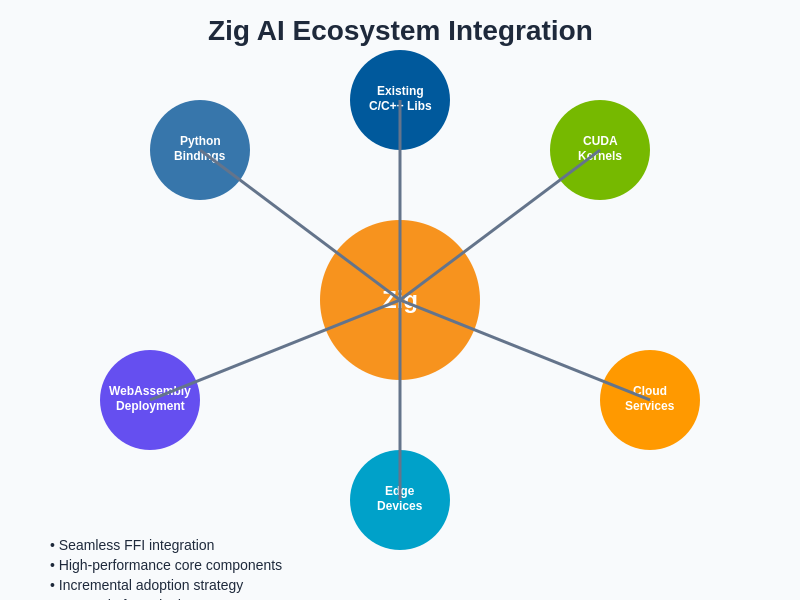

The practical adoption of any programming language for AI development requires integration with the development tools, frameworks, and deployment infrastructure that organizations have invested in over years of AI system development. Zig can import C headers, link against C libraries, and export functions with the C ABI. This enables integration with existing C libraries, with C++ libraries that expose a C interface, with Python extensions, and with established machine learning frameworks without requiring complete rewrites of existing systems.

The language’s ability to generate libraries that can be consumed by higher-level languages provides a pathway for incremental adoption where performance-critical components can be reimplemented in Zig while maintaining compatibility with existing Python-based development workflows. This approach allows organizations to realize the performance benefits of Zig implementation without disrupting established development processes or requiring extensive retraining of development teams.

Zig’s ecosystem of AI-focused libraries and tools is still young but growing, which reflects increasing recognition of the language’s potential for high-performance machine learning applications. Community-developed linear algebra libraries, neural network frameworks, and GPU computing abstractions give developers foundational components that accelerate the development of Zig-based AI systems while preserving the performance characteristics that make the language attractive for demanding computational workloads.

The strategic integration capabilities of Zig position it as a complementary technology that enhances rather than replaces existing AI development infrastructure, providing organizations with a practical pathway for adopting performance-optimized implementations where they provide the greatest value.

Future Prospects and Industry Adoption

The trajectory of Zig adoption in the AI community reflects a broader industry trend toward performance-conscious development, driven by the recognition that computational efficiency becomes more critical as AI systems grow in complexity and scale.

The language itself is still evolving, and several directions of that work are relevant to AI and scientific computing: vectorization support, compile-time optimization, and integration with the specialized hardware accelerators that are becoming increasingly important for cutting-edge AI research and deployment.

The growing community of AI researchers and engineers exploring Zig reflects the increasing sophistication of AI development practices and the recognition that achieving competitive advantages in AI applications increasingly requires attention to fundamental computational efficiency considerations. As AI systems continue to push the boundaries of what is computationally feasible, languages like Zig that provide direct access to hardware capabilities while maintaining modern development productivity will likely play increasingly important roles in the AI development ecosystem.

Conclusion

The emergence of Zig as a viable option for high-performance AI development marks a significant evolution in how the industry approaches the trade-off between development productivity and computational efficiency. By providing system-level control over program behavior while adding runtime safety checks and modern development ergonomics, Zig offers a compelling alternative for organizations that need maximum performance from their AI systems without sacrificing code quality or maintainability.

The language’s unique combination of performance characteristics, safety guarantees, and integration capabilities positions it as a valuable tool for addressing the increasingly demanding computational requirements of modern AI applications. As machine learning systems continue to grow in complexity and scale, the ability to optimize critical performance bottlenecks through direct hardware control and specialized algorithm implementations will become increasingly important for maintaining competitive advantages and meeting user expectations for system responsiveness and efficiency.

The continued development of Zig’s AI ecosystem, combined with growing industry recognition of the importance of computational efficiency, suggests that the language will play an increasingly significant role in next-generation AI applications. These are systems that push the boundaries of what is computationally achievable while still needing the reliability and maintainability required for production deployment.

Disclaimer

This article is for informational purposes only and does not constitute professional advice. The views expressed are based on current understanding of the Zig programming language and its applications in AI development. Readers should conduct their own research and consider their specific requirements when evaluating programming language choices for AI projects. The effectiveness of Zig for particular AI applications may vary depending on specific use cases, performance requirements, and development team expertise levels.